PentestGPT

A GPT-empowered penetration testing tool

Stars: 7977

PentestGPT is a penetration testing tool empowered by ChatGPT, designed to automate the penetration testing process. It operates interactively to guide penetration testers in overall progress and specific operations. The tool supports solving easy to medium HackTheBox machines and other CTF challenges. Users can use PentestGPT to perform tasks like testing connections, using different reasoning models, discussing with the tool, searching on Google, and generating reports. It also supports local LLMs with custom parsers for advanced users.

README:

Explore the docs »

Design Details · View Demo · Report Bug or Request Feature

- [Update on 25/10/2024] We're completing the refactoring of PentestGPT and will release v1.0 soon!

- [Update on 12/08/2024] The research paper on PentestGPT was published at USENIX Security 2024.

- [Update on 25/03/2024] We're working on the next version of PentestGPT, with online searching, RAGs and more powerful prompting. Stay tuned!

- [Update on 17/11/2023] GPTs for PentestGPT is out! Check this: https://chat.openai.com/g/g-4MHbTepWO-pentestgpt

- [Update on 07/11/2023] GPT-4-turbo is out! Update the default API usage to GPT-4-turbo.

- Available videos:
  - The latest installation video is here.
  - PentestGPT for OSCP-like machine: HTB-Jarvis. This is the first part only, and I'll complete the rest when I have time.
  - PentestGPT on HTB-Lame. This is an easy machine, but it shows you how PentestGPT skipped the rabbit hole and worked on other potential vulnerabilities.

- We're testing PentestGPT on HackTheBox. You may follow this link. More details will be released soon.

- Feel free to join the Discord Channel for more updates and share your ideas!

- Create a virtual environment if necessary (`virtualenv -p python3 venv`, `source venv/bin/activate`).

- Install the project with `pip3 install git+https://github.com/GreyDGL/PentestGPT`

- Ensure that you have linked a payment method to your OpenAI account. Export your API key with `export OPENAI_API_KEY='<your key here>'`, and export the API base with `export OPENAI_BASEURL='https://api.xxxx.xxx/v1'` if you need to.

- Test the connection with `pentestgpt-connection`

- For Kali users: use `tmux` as the terminal environment. You can do so by simply running `tmux` in the native terminal.

- To start: `pentestgpt --logging`

- PentestGPT is a penetration testing tool empowered by ChatGPT.

- It is designed to automate the penetration testing process. It is built on top of ChatGPT and operates in an interactive mode to guide penetration testers in both overall progress and specific operations.

- PentestGPT is able to solve easy to medium HackTheBox machines and other CTF challenges. You can check this example in `resources`, where we use it to solve the HackTheBox challenge TEMPLATED (web challenge).

- A sample testing process of PentestGPT on a target VulnHub machine (Hackable II) is available here.

- A sample usage video is below: (or available here: Demo)

- Q: What is PentestGPT?
  - A: PentestGPT is a penetration testing tool empowered by Large Language Models (LLMs). It is designed to automate the penetration testing process. It is built on top of the ChatGPT API and operates in an interactive mode to guide penetration testers in both overall progress and specific operations.

- Q: Do I need to pay to use PentestGPT?
  - A: Yes, in order to achieve the best performance. In general, you can use any LLM you want, but the GPT-4 API is recommended, for which you have to link a payment method to your OpenAI account.

- Q: Why GPT-4?
  - A: After empirical evaluation, we found that GPT-4 performs better than GPT-3.5 and other LLMs in terms of penetration testing reasoning. In fact, GPT-3.5 leads to failed tests on simple tasks.

- Q: Why not just use GPT-4 directly?
  - A: We found that GPT-4 loses context as the test goes deeper. It is essential to maintain a "test status awareness" in this process. You may check the PentestGPT Arxiv Paper for details.

- Q: Can I use local GPT models?
  - A: Yes. We support local LLMs with a custom parser. Look at examples here.

PentestGPT is tested under Python 3.10. Other Python3 versions should work but are not tested.

PentestGPT relies on the OpenAI API to achieve high-quality reasoning. You may refer to the installation video here.

- Install the latest version with `pip3 install git+https://github.com/GreyDGL/PentestGPT`
  - You may also clone the project to your local environment and install it for better customization and development:
    - `git clone https://github.com/GreyDGL/PentestGPT`
    - `cd PentestGPT`
    - `pip3 install -e .`

- To use the OpenAI API
  - Ensure that you have linked a payment method to your OpenAI account.
  - Export your API key with `export OPENAI_API_KEY='<your key here>'`
  - Export the API base with `export OPENAI_BASEURL='https://api.xxxx.xxx/v1'` if you need to.
  - Test the connection with `pentestgpt-connection`

- To verify that the connection is configured properly, you may run `pentestgpt-connection`. After a while, you should see some sample conversation with ChatGPT.
  - A sample output is below

        You're testing the connection for PentestGPT v 0.11.0
        #### Test connection for OpenAI api (GPT-4)
        1. You're connected with OpenAI API. You have GPT-4 access. To start PentestGPT, please use <pentestgpt --reasoning_model=gpt-4>
        #### Test connection for OpenAI api (GPT-3.5)
        2. You're connected with OpenAI API. You have GPT-3.5 access. To start PentestGPT, please use <pentestgpt --reasoning_model=gpt-3.5-turbo-16k>

  - Notice: if you have not linked a payment method to your OpenAI account, you will see error messages.

- The ChatGPT cookie solution is deprecated and not recommended. You may still use it by running `pentestgpt --reasoning_model=gpt-4 --useAPI=False`.
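Before running `pentestgpt-connection`, it can help to confirm that the environment variables from the setup steps are actually set in the current shell. The following is a minimal sketch, not part of PentestGPT: the helper name `check_openai_env` is hypothetical, and it only inspects `OPENAI_API_KEY` and `OPENAI_BASEURL` as named in this README, without contacting the API.

```python
import os

# Variable names are taken from the setup steps in this README.
REQUIRED_VARS = ("OPENAI_API_KEY",)
OPTIONAL_VARS = ("OPENAI_BASEURL",)


def check_openai_env(environ=None):
    """Return (missing_required, present_optional) for an environment mapping.

    Hypothetical helper for illustration; it does not validate the key itself.
    """
    env = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    present = [name for name in OPTIONAL_VARS if env.get(name)]
    return missing, present


if __name__ == "__main__":
    missing, present = check_openai_env()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("API key is set; run pentestgpt-connection next.")
    for name in present:
        print(f"{name} is set (custom API base).")
```

An empty or unset variable is reported as missing, which matches the most common mistake of exporting the key in a different shell session.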

- Clone the repository to your local environment.

- Ensure that `poetry` is installed. If not, please refer to the poetry installation guide.

- You are recommended to run:
  - (recommended) `pentestgpt --reasoning_model=gpt-4-turbo` to use the latest GPT-4-turbo API.
  - `pentestgpt --reasoning_model=gpt-4` if you have access to the GPT-4 API.
  - `pentestgpt --reasoning_model=gpt-3.5-turbo-16k` if you only have access to the GPT-3.5 API.

- To start, run `pentestgpt --args`.
  - `--help` shows the help message.
  - `--reasoning_model` is the reasoning model you want to use.
  - `--parsing_model` is the parsing model you want to use.
  - `--useAPI` is whether you want to use the OpenAI API. By default it is set to `True`.
  - `--log_dir` is the customized log output directory. The location is a relative directory.
  - `--logging` defines whether you would like to share the logs with us. By default it is set to `False`.
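The flags above can be combined in a small launcher script. The sketch below only assembles an argument list from the options documented in this README; the function name `build_pentestgpt_command` is hypothetical, and passing `--log_dir` in `--log_dir=<path>` form is an assumption (the README shows the `=` form only for `--reasoning_model`, `--parsing_model` and `--useAPI`).

```python
import shlex


def build_pentestgpt_command(reasoning_model="gpt-4-turbo", parsing_model=None,
                             use_api=True, log_dir=None, logging=False):
    """Assemble an argv list for the pentestgpt CLI from the documented flags.

    Hypothetical helper for illustration; it does not run anything.
    """
    argv = ["pentestgpt", f"--reasoning_model={reasoning_model}"]
    if parsing_model is not None:
        argv.append(f"--parsing_model={parsing_model}")
    if not use_api:
        # Only emitted for the deprecated cookie-based mode; the default is True.
        argv.append("--useAPI=False")
    if log_dir is not None:
        # Assumption: --log_dir accepts the same --flag=value form as the other options.
        argv.append(f"--log_dir={log_dir}")
    if logging:
        argv.append("--logging")
    return argv


if __name__ == "__main__":
    # Print the command line instead of executing it.
    print(shlex.join(build_pentestgpt_command(reasoning_model="gpt-4")))
```

To actually start the tool, the returned list could be handed to `subprocess.run`, which avoids shell quoting issues in the log directory path.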

- The tool works similarly to msfconsole. Follow the guidance to perform penetration testing.

- In general, PentestGPT takes commands in a way similar to ChatGPT. There are several basic commands.
  - The commands are:
    - `help`: show the help message.
    - `next`: key in the test execution result and get the next step.
    - `more`: let PentestGPT explain the current step in more detail. Also, a new sub-task solver will be created to guide the tester.
    - `todo`: show the todo list.
    - `discuss`: discuss with PentestGPT.
    - `google`: search on Google. This function is still under development.
    - `quit`: exit the tool and save the output as a log file (see the reporting section below).
  - You can use <SHIFT + right arrow> to end your input (and `ENTER` is for the next line).
  - You may always use `TAB` to autocomplete the commands.
  - When you're given a drop-down selection list, you can use the cursor or arrow keys to navigate the list. Press `ENTER` to select the item. Similarly, use <SHIFT + right arrow> to confirm the selection.
  - The user can submit info about:
    - tool: output of the security test tool used
    - web: relevant content of a web page
    - default: whatever you want, the tool will handle it
    - user-comments: user comments about PentestGPT operations

- In the sub-task handler initiated by `more`, users can execute more commands to investigate a specific problem:
  - The commands are:
    - `help`: show the help message.
    - `brainstorm`: let PentestGPT brainstorm all the possible solutions for the local task.
    - `discuss`: discuss this local task with PentestGPT.
    - `google`: search on Google. This function is still under development.
    - `continue`: exit the subtask and continue the main testing session.

- [Update] If you would like us to collect the logs to improve the tool, please run `pentestgpt --logging`. We will only collect the LLM usage, without any information related to your OpenAI key.

- After finishing the penetration testing, a report will be automatically generated in the `logs` folder (if you quit with the `quit` command).

- The report can be printed in a human-readable format by running `python3 utils/report_generator.py <log file>`. A sample report `sample_pentestGPT_log.txt` is also uploaded.
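When several sessions have been logged, picking the right file for the report generator by hand gets tedious. The sketch below finds the most recently modified file in a log directory and builds the report command described above. It is an illustration only: `latest_log` and `report_command` are hypothetical names, the default `logs` location comes from this README, and nothing is assumed about the log file name or format.

```python
from pathlib import Path


def latest_log(log_dir="logs"):
    """Return the most recently modified file in log_dir, or None if there is none."""
    directory = Path(log_dir)
    if not directory.is_dir():
        return None
    files = [p for p in directory.iterdir() if p.is_file()]
    return max(files, key=lambda p: p.stat().st_mtime, default=None)


def report_command(log_file):
    """Build the documented report command for one log file (run from the repository root)."""
    return ["python3", "utils/report_generator.py", str(log_file)]


if __name__ == "__main__":
    newest = latest_log()
    if newest is None:
        print("No log files found; quit a session with the quit command first.")
    else:
        print(" ".join(report_command(newest)))
```

If a custom `--log_dir` was used, pass the same relative directory to `latest_log`.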

PentestGPT now supports local LLMs, but the prompts are only optimized for GPT-4.

- To use a local GPT4ALL model, you may run `pentestgpt --reasoning_model=gpt4all --parsing_model=gpt4all`.

- To select the particular model you want to use with GPT4ALL, you may update the `module_mapping` class in `pentestgpt/utils/APIs/module_import.py`.

- You can also follow the examples of `module_import.py`, `gpt4all.py` and `chatgpt_api.py` to create API support for your own model.

Please cite our paper at:

@inproceedings {299699,

author = {Gelei Deng and Yi Liu and V{\'\i}ctor Mayoral-Vilches and Peng Liu and Yuekang Li and Yuan Xu and Tianwei Zhang and Yang Liu and Martin Pinzger and Stefan Rass},

title = {{PentestGPT}: Evaluating and Harnessing Large Language Models for Automated Penetration Testing},

booktitle = {33rd USENIX Security Symposium (USENIX Security 24)},

year = {2024},

isbn = {978-1-939133-44-1},

address = {Philadelphia, PA},

pages = {847--864},

url = {https://www.usenix.org/conference/usenixsecurity24/presentation/deng},

publisher = {USENIX Association},

month = aug

}

Distributed under the MIT License. See LICENSE.txt for more information.

The tool is for educational purposes only and the author does not condone any illegal use. Use at your own risk.

- Gelei Deng - [email protected]

- Víctor Mayoral Vilches - [email protected]

- Yi Liu - [email protected]

- Peng Liu - [email protected]

- Yuekang Li - [email protected]

Alternative AI tools for PentestGPT

Similar Open Source Tools

portia-sdk-python

Portia AI is an open source developer framework for predictable, stateful, authenticated agentic workflows. It allows developers to have oversight over their multi-agent deployments and focuses on production readiness. The framework supports iterating on agents' reasoning, extensive tool support including MCP support, authentication for API and web agents, and is production-ready with features like attribute multi-agent runs, large inputs and outputs storage, and connecting any LLM. Portia AI aims to provide a flexible and reliable platform for developing AI agents with tools, authentication, and smart control.

node_characterai

Node.js client for the unofficial Character AI API, an awesome website which brings characters to life with AI! This repository is inspired by RichardDorian's unofficial node API. Though, I found it hard to use and it was not really stable and archived. So I remade it in javascript. This project is not affiliated with Character AI in any way! It is a community project. The purpose of this project is to bring and build projects powered by Character AI. If you like this project, please check their website.

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, offers quick setup for conversational service robots, integrates diverse knowledge bases, provides flexible configuration options, and features an attractive user interface.

AutoAgent

AutoAgent is a fully-automated and zero-code framework that enables users to create and deploy LLM agents through natural language alone. It is a top performer on the GAIA Benchmark, equipped with a native self-managing vector database, and allows for easy creation of tools, agents, and workflows without any coding. AutoAgent seamlessly integrates with a wide range of LLMs and supports both function-calling and ReAct interaction modes. It is designed to be dynamic, extensible, customized, and lightweight, serving as a personal AI assistant.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

horde-worker-reGen

This repository provides the latest implementation for the AI Horde Worker, allowing users to utilize their graphics card(s) to generate, post-process, or analyze images for others. It offers a platform where users can create images and earn 'kudos' in return, granting priority for their own image generations. The repository includes important details for setup, recommendations for system configurations, instructions for installation on Windows and Linux, basic usage guidelines, and information on updating the AI Horde Worker. Users can also run the worker with multiple GPUs and receive notifications for updates through Discord. Additionally, the repository contains models that are licensed under the CreativeML OpenRAIL License.

OpenAdapt

OpenAdapt is an open-source software adapter between Large Multimodal Models (LMMs) and traditional desktop and web Graphical User Interfaces (GUIs). It aims to automate repetitive GUI workflows by leveraging the power of LMMs. OpenAdapt records user input and screenshots, converts them into tokenized format, and generates synthetic input via transformer model completions. It also analyzes recordings to generate task trees and replay synthetic input to complete tasks. OpenAdapt is model agnostic and generates prompts automatically by learning from human demonstration, ensuring that agents are grounded in existing processes and mitigating hallucinations. It works with all types of desktop GUIs, including virtualized and web, and is open source under the MIT license.

bigcodebench

BigCodeBench is an easy-to-use benchmark for code generation with practical and challenging programming tasks. It aims to evaluate the true programming capabilities of large language models (LLMs) in a more realistic setting. The benchmark is designed for HumanEval-like function-level code generation tasks, but with much more complex instructions and diverse function calls. BigCodeBench focuses on the evaluation of LLM4Code with diverse function calls and complex instructions, providing precise evaluation & ranking and pre-generated samples to accelerate code intelligence research. It inherits the design of the EvalPlus framework but differs in terms of execution environment and test evaluation.

Easy-Translate

Easy-Translate is a script designed for translating large text files with a single command. It supports various models like M2M100, NLLB200, SeamlessM4T, LLaMA, and Bloom. The tool is beginner-friendly and offers seamless and customizable features for advanced users. It allows acceleration on CPU, multi-CPU, GPU, multi-GPU, and TPU, with support for different precisions and decoding strategies. Easy-Translate also provides an evaluation script for translations. Built on HuggingFace's Transformers and Accelerate library, it supports prompt usage and loading huge models efficiently.

Auto_Jobs_Applier_AIHawk

Auto_Jobs_Applier_AIHawk is an AI-powered job search assistant that revolutionizes the job search and application process. It automates application submissions, provides personalized recommendations, and enhances the chances of landing a dream job. The tool offers features like intelligent job search automation, rapid application submission, AI-powered personalization, volume management with quality, intelligent filtering, dynamic resume generation, and secure data handling. It aims to address the challenges of modern job hunting by saving time, increasing efficiency, and improving application quality.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

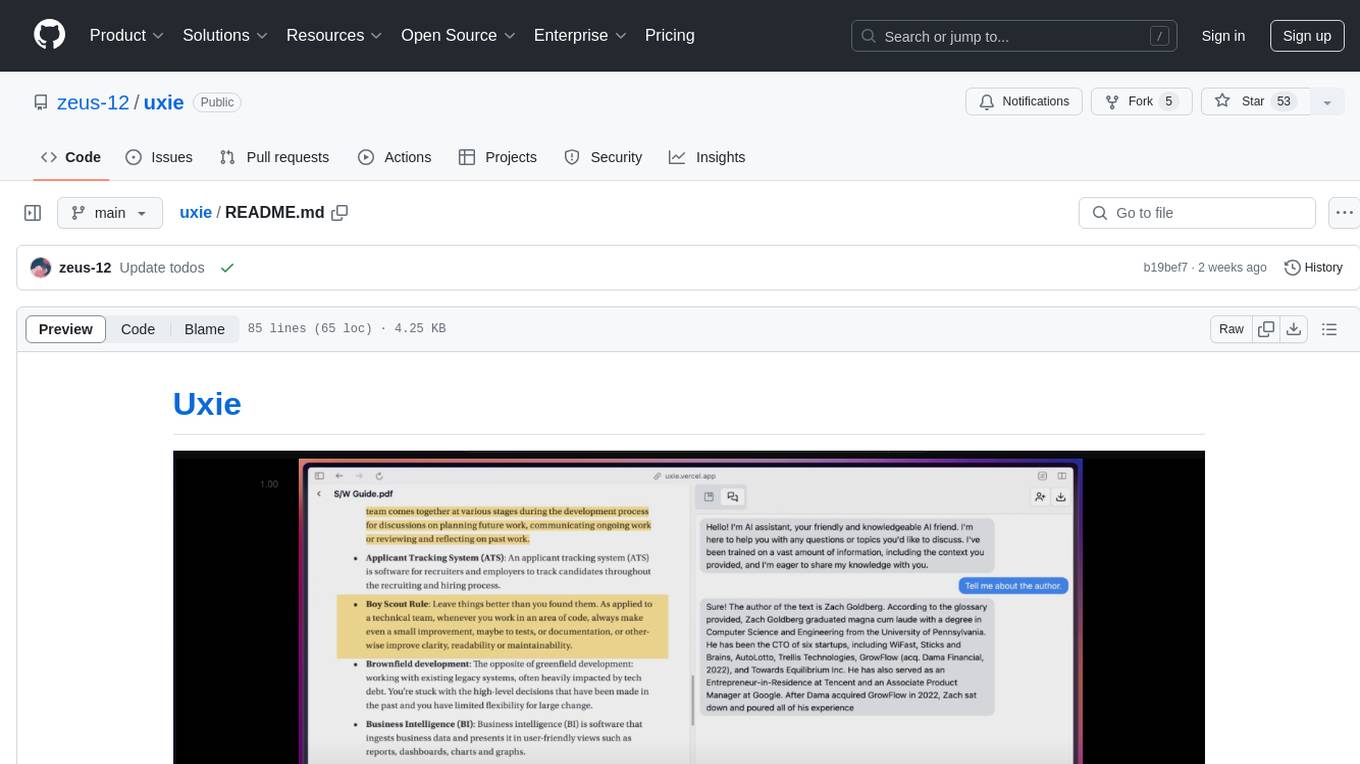

uxie

Uxie is a PDF reader app designed to revolutionize the learning experience. It offers features such as annotation, note-taking, collaboration tools, integration with LLM for enhanced learning, and flashcard generation with LLM feedback. Built using Nextjs, tRPC, Zod, TypeScript, Tailwind CSS, React Query, React Hook Form, Supabase, Prisma, and various other tools. Users can take notes, summarize PDFs, chat and collaborate with others, create custom blocks in the editor, and use AI-powered text autocompletion. The tool allows users to craft simple flashcards, test knowledge, answer questions, and receive instant feedback through AI evaluation.

restai

RestAI is an AIaaS (AI as a Service) platform that allows users to create and consume AI agents (projects) using a simple REST API. It supports various types of agents, including RAG (Retrieval-Augmented Generation), RAGSQL (RAG for SQL), inference, vision, and router. RestAI features automatic VRAM management, support for any public LLM supported by LlamaIndex or any local LLM supported by Ollama, a user-friendly API with Swagger documentation, and a frontend for easy access. It also provides evaluation capabilities for RAG agents using deepeval.

quivr

Quivr is a personal assistant powered by Generative AI, designed to be a second brain for users. It offers fast and efficient access to data, ensuring security and compatibility with various file formats. Quivr is open source and free to use, allowing users to share their brains publicly or keep them private. The marketplace feature enables users to share and utilize brains created by others, boosting productivity. Quivr's offline mode provides anytime, anywhere access to data. Key features include speed, security, OS compatibility, file compatibility, open source nature, public/private sharing options, a marketplace, and offline mode.

For similar tasks

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration: * Chat BI's Text2SQL gets augmented with context-retrieval from semantic models. * Headless BI's query interface gets extended with natural language API. SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions: 1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination. 2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity. With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

DB-GPT

DB-GPT is an open source AI native data app development framework with AWEL(Agentic Workflow Expression Language) and agents. It aims to build infrastructure in the field of large models, through the development of multiple technical capabilities such as multi-model management (SMMF), Text2SQL effect optimization, RAG framework and optimization, Multi-Agents framework collaboration, AWEL (agent workflow orchestration), etc. Which makes large model applications with data simpler and more convenient.

Chat2DB

Chat2DB is an AI-driven data development and analysis platform that enables users to communicate with databases using natural language. It supports a wide range of databases, including MySQL, PostgreSQL, Oracle, SQLServer, SQLite, MariaDB, ClickHouse, DM, Presto, DB2, OceanBase, Hive, KingBase, MongoDB, Redis, and Snowflake. Chat2DB provides a user-friendly interface that allows users to query databases, generate reports, and explore data using natural language commands. It also offers a variety of features to help users improve their productivity, such as auto-completion, syntax highlighting, and error checking.

aide

AIDE (Advanced Intrusion Detection Environment) is a tool for monitoring file system changes. It can be used to detect unauthorized changes to monitored files and directories. AIDE was written to be a simple and free alternative to Tripwire. Features currently included in AIDE are as follows: o File attributes monitored: permissions, inode, user, group file size, mtime, atime, ctime, links and growing size. o Checksums and hashes supported: SHA1, MD5, RMD160, and TIGER. CRC32, HAVAL and GOST if Mhash support is compiled in. o Plain text configuration files and database for simplicity. o Rules, variables and macros that can be customized to local site or system policies. o Powerful regular expression support to selectively include or exclude files and directories to be monitored. o gzip database compression if zlib support is compiled in. o Free software licensed under the GNU General Public License v2.

OpsPilot

OpsPilot is an AI-powered operations navigator developed by the WeOps team. It leverages deep learning and LLM technologies to make operations plans interactive and generalize and reason about local operations knowledge. OpsPilot can be integrated with web applications in the form of a chatbot and primarily provides the following capabilities: 1. Operations capability precipitation: By depositing operations knowledge, operations skills, and troubleshooting actions, when solving problems, it acts as a navigator and guides users to solve operations problems through dialogue. 2. Local knowledge Q&A: By indexing local knowledge and Internet knowledge and combining the capabilities of LLM, it answers users' various operations questions. 3. LLM chat: When the problem is beyond the scope of OpsPilot's ability to handle, it uses LLM's capabilities to solve various long-tail problems.

aimeos-typo3

Aimeos is a professional, full-featured, and high-performance e-commerce extension for TYPO3. It can be installed in an existing TYPO3 website within 5 minutes and can be adapted, extended, overwritten, and customized to meet specific needs.

For similar jobs

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

PurpleLlama

Purple Llama is an umbrella project that aims to provide tools and evaluations to support responsible development and usage of generative AI models. It encompasses components for cybersecurity and input/output safeguards, with plans to expand in the future. The project emphasizes a collaborative approach, borrowing the concept of purple teaming from cybersecurity, to address potential risks and challenges posed by generative AI. Components within Purple Llama are licensed permissively to foster community collaboration and standardize the development of trust and safety tools for generative AI.

vpnfast.github.io

VPNFast is a lightweight and fast VPN service provider that offers secure and private internet access. With VPNFast, users can protect their online privacy, bypass geo-restrictions, and secure their internet connection from hackers and snoopers. The service provides high-speed servers in multiple locations worldwide, ensuring a reliable and seamless VPN experience for users. VPNFast is easy to use, with a user-friendly interface and simple setup process. Whether you're browsing the web, streaming content, or accessing sensitive information, VPNFast helps you stay safe and anonymous online.

taranis-ai

Taranis AI is an advanced Open-Source Intelligence (OSINT) tool that leverages Artificial Intelligence to revolutionize information gathering and situational analysis. It navigates through diverse data sources like websites to collect unstructured news articles, utilizing Natural Language Processing and Artificial Intelligence to enhance content quality. Analysts then refine these AI-augmented articles into structured reports that serve as the foundation for deliverables such as PDF files, which are ultimately published.

NightshadeAntidote

Nightshade Antidote is an image forensics tool used to analyze digital images for signs of manipulation or forgery. It implements several common techniques used in image forensics including metadata analysis, copy-move forgery detection, frequency domain analysis, and JPEG compression artifacts analysis. The tool takes an input image, performs analysis using the above techniques, and outputs a report summarizing the findings.

h4cker

This repository is a comprehensive collection of cybersecurity-related references, scripts, tools, code, and other resources. It is carefully curated and maintained by Omar Santos. The repository serves as a supplemental material provider to several books, video courses, and live training created by Omar Santos. It encompasses over 10,000 references that are instrumental for both offensive and defensive security professionals in honing their skills.

AIMr

AIMr is an AI aimbot tool written in Python that leverages modern technologies to achieve an undetected system with a pleasing appearance. It works on any game that uses human-shaped models. To optimize its performance, users should build OpenCV with CUDA. For Valorant, additional perks in the Discord and an Arduino Leonardo R3 are required.

admyral

Admyral is an open-source Cybersecurity Automation & Investigation Assistant that provides a unified console for investigations and incident handling, workflow automation creation, automatic alert investigation, and next step suggestions for analysts. It aims to tackle alert fatigue and automate security workflows effectively by offering features like workflow actions, AI actions, case management, alert handling, and more. Admyral combines security automation and case management to streamline incident response processes and improve overall security posture. The tool is open-source, transparent, and community-driven, allowing users to self-host, contribute, and collaborate on integrations and features.