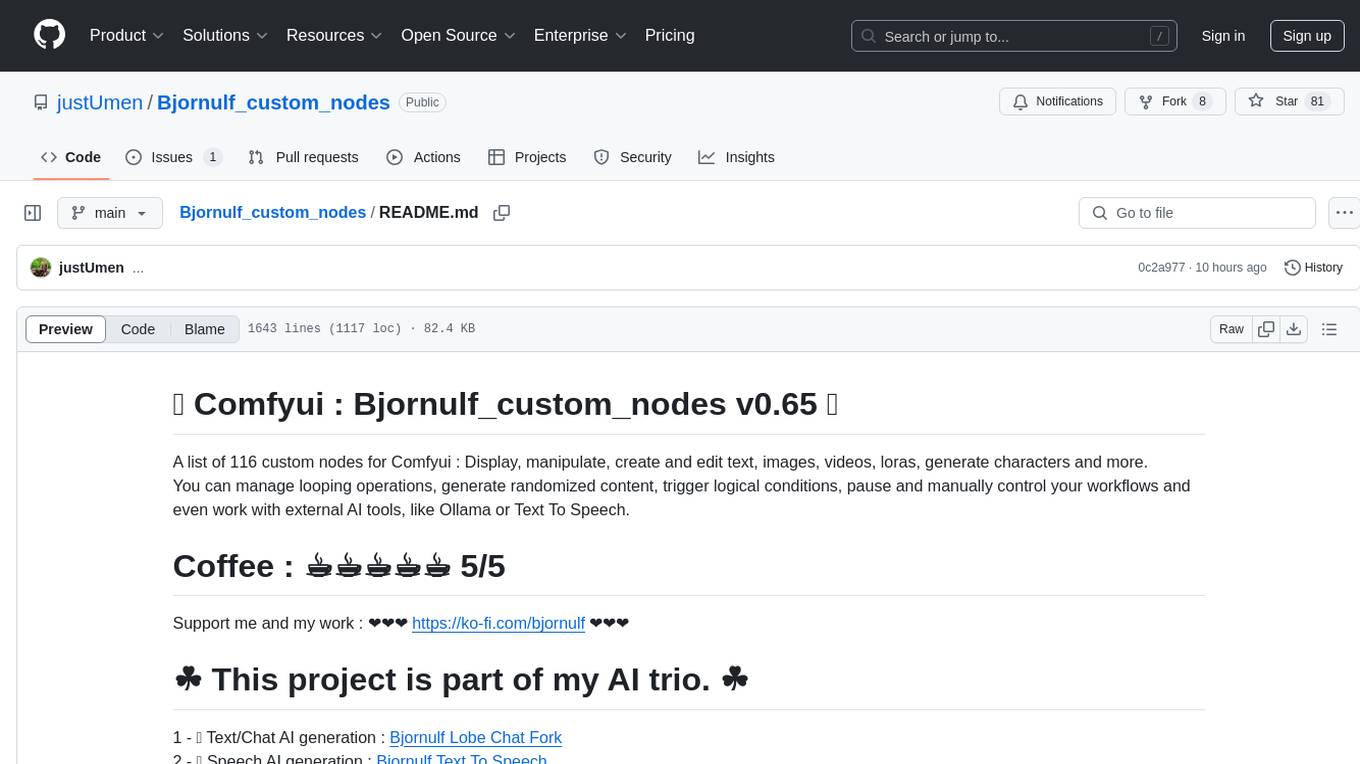

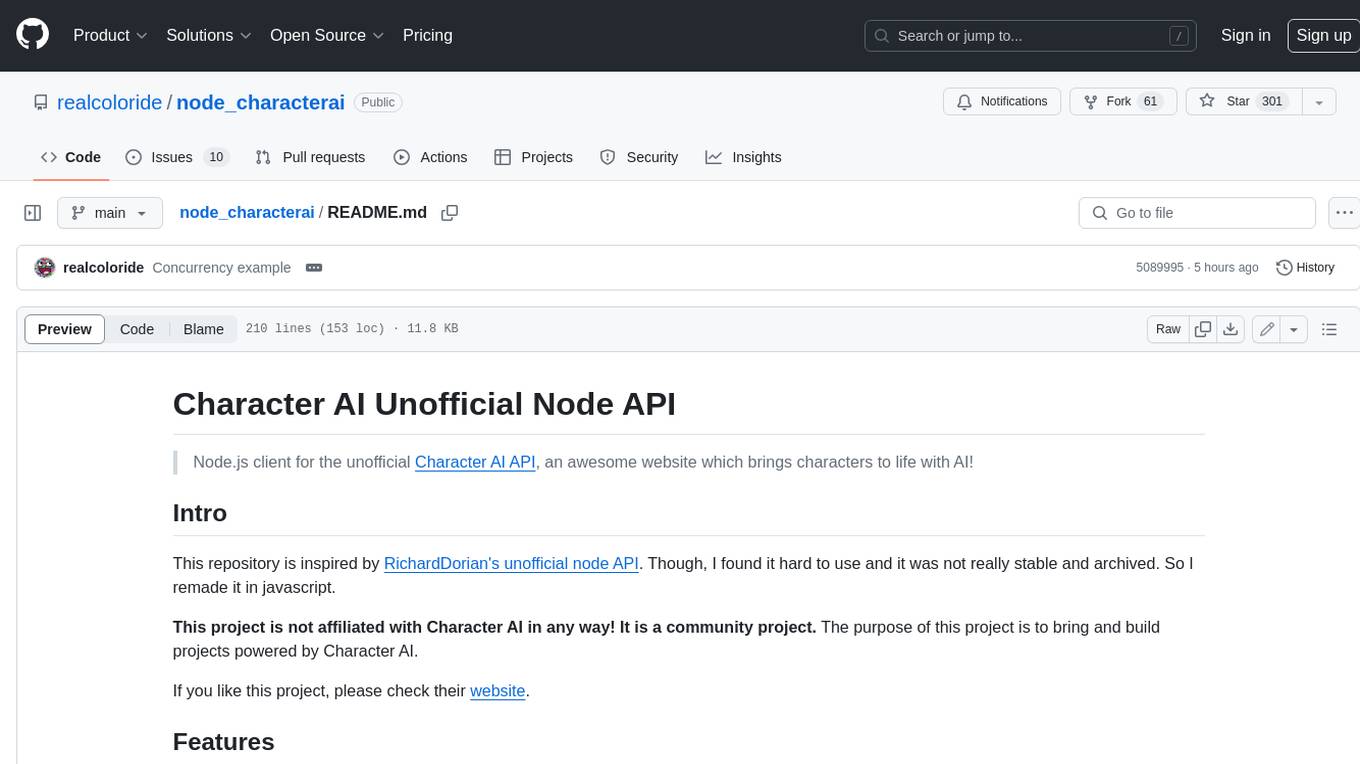

node_characterai

Unofficial Character AI wrapper for node.

Stars: 301

An unofficial Node.js client for Character AI, an awesome website which brings characters to life with AI! This repository is inspired by RichardDorian's unofficial node API. I found that project hard to use and not really stable, and it is now archived, so I remade it in JavaScript. This project is not affiliated with Character AI in any way! It is a community project whose purpose is to help people build projects powered by Character AI. If you like this project, please check out their website.

README:


- 👍 Fully written in JavaScript with CommonJS modules (for maximum compatibility and ease of use)

- ⌚ Asynchronous requests

- 🗣️ Use conversations or use the API to fetch information

- 🧸 Easy to use

- 🔁 Active development

- 👤 Guest & token login support

```shell
npm install node_characterai
```

Basic guest authentication and message:

```javascript
const CharacterAI = require("node_characterai");
const characterAI = new CharacterAI();

(async () => {
  // Authenticating as a guest (use `.authenticateWithToken()` to use an account)
  await characterAI.authenticateAsGuest();

  // Place your character's id here
  const characterId = "8_1NyR8w1dOXmI1uWaieQcd147hecbdIK7CeEAIrdJw";

  // Create a chat object to interact with the conversation
  const chat = await characterAI.createOrContinueChat(characterId);

  // Send a message
  const response = await chat.sendAndAwaitResponse("Hello discord mod!", true);

  console.log(response);
  // Use `response.text` to use it as a string
})();
```

Some parts of the API (like managing a conversation) require you to be logged in using a `sessionToken`.

To get it, open the Character.AI website in your browser and read the token from localStorage.

[!IMPORTANT]

If you are using an old version of the package and are getting an `Authentication token is invalid` error, you now again need a `sessionToken` to authenticate (as of update `1.2.5` and higher). See below.

If you are using something that depends on the package and has not been updated to the latest version in a while, make sure to update the package by running `npm update node_characterai`, by manually copying the files, or by opening an issue on that project (if it has an issue tracker).

⚠️ WARNING: DO NOT share your session token with anyone you do not trust, or if you do not know what you're doing.

Anyone with your session token could have access to your account without your consent. Do this at your own risk.

- Open the Character.AI website in your browser (https://beta.character.ai)

- Open the developer tools (F12, Ctrl+Shift+I, or Cmd+J)

- Go to the `Application` tab

- Go to the `Storage` section and click on `Local Storage`

- Look for the `char_token` key

- Open the object, right click on value and copy your session token.

Alternatively:

- Open the Character.AI website in your browser on the OLD interface (https://old.character.ai/)

- Open the URL bar, write `javascript:` (case sensitive) and paste the following:

```javascript
(function(){let e=window.localStorage["char_token"];if(!e){alert("You need to log in first!");return;}let t=JSON.parse(e).value;document.documentElement.innerHTML=`<div><i><p>provided by node_characterai - <a href="https://github.com/realcoloride/node_characterai?tab=readme-ov-file#using-an-access-token">click here for more information</a></p></i><p>Here is your session token:</p><input value="${t}" readonly><p><strong>Do not share this with anyone unless you know what you are doing! This is your personal session token. If stolen or requested by someone you don't trust, they could access your account without your consent; if so, please close the page immediately.</strong></p><button id="copy" onclick="navigator.clipboard.writeText('${t}'); alert('Copied to clipboard!')">Copy session token to clipboard</button><button onclick="window.location.reload();">Refresh the page</button></div>`;localStorageKey=null;storageInformation=null;t=null;})();
```

- Click the respective buttons to copy your access token or id token to your clipboard.

When using the package, you can:

- Log in as a guest using `authenticateAsGuest()` - for mass usage or testing purposes

- Log in with your account or a token using `authenticateWithToken()` - for full features and unlimited messaging
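A minimal sketch of choosing between the two login methods. It assumes `authenticateWithToken()` takes the session token string as its only argument, which this README does not spell out. The `authenticate` helper and the `CHARACTERAI_TOKEN` environment variable name are hypothetical.

```javascript
// Hypothetical helper: logs in with a session token when one is provided,
// otherwise falls back to guest access. `client` is a CharacterAI instance.
async function authenticate(client, sessionToken) {
  if (typeof sessionToken === "string" && sessionToken.length > 0) {
    // Assumption: the session token string is passed as the only argument.
    await client.authenticateWithToken(sessionToken);
    return "token";
  }
  await client.authenticateAsGuest();
  return "guest";
}

// Usage (inside an async function):
//   const CharacterAI = require("node_characterai");
//   const characterAI = new CharacterAI();
//   await authenticate(characterAI, process.env.CHARACTERAI_TOKEN);
```

Reading the token from an environment variable keeps it out of your source files, in line with the warning about never sharing it.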

You can find your character ID in the URL of a Character's chat page.

For example, if you go to the chat page of the character Discord Moderator you will see the URL https://beta.character.ai/chat?char=8_1NyR8w1dOXmI1uWaieQcd147hecbdIK7CeEAIrdJw.

The last part of the URL (the value after `char=`) is the character ID: `8_1NyR8w1dOXmI1uWaieQcd147hecbdIK7CeEAIrdJw`
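If you would rather not copy the ID by hand, it can be read from the `char` query parameter of the chat URL. This is a small sketch using Node's built-in `URL` class; `extractCharacterId` is a hypothetical helper and not part of the package.

```javascript
// Hypothetical helper: pulls the character ID out of a chat page URL
// by reading the `char` query parameter.
function extractCharacterId(chatUrl) {
  const id = new URL(chatUrl).searchParams.get("char");
  if (!id) {
    throw new Error("No `char` parameter found in URL: " + chatUrl);
  }
  return id;
}

const characterId = extractCharacterId(
  "https://beta.character.ai/chat?char=8_1NyR8w1dOXmI1uWaieQcd147hecbdIK7CeEAIrdJw"
);
console.log(characterId); // 8_1NyR8w1dOXmI1uWaieQcd147hecbdIK7CeEAIrdJw
```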

WARNING: This part is currently experimental. If you encounter any problem, open an issue.

🖼️ Character AI has the ability to generate and interpret images in a conversation. Some characters are built around this feature, others use it to recognize images, and you can also use it to give a character more details on something: the possibilities are endless.

💁 Most of the Character AI image features can be used like so:

```javascript
// Most of these functions will return a URL to the image
await chat.generateImage("dolphins swimming in green water");
await chat.uploadImage("https://www.example.com/image.jpg");
await chat.uploadImage("./photos/image.jpg");

// Other supported types are Buffers, Readable Streams, File Paths, and URLs
await chat.uploadImage(imageBuffer);

// Including the image relative path is necessary to upload an image
await chat.sendAndAwaitResponse({
  text: "What is in this image?",
  image_rel_path: "https://www.example.com/coffee.jpg",
  image_description: "This is coffee.",
  image_description_type: "HUMAN" // Set this if you are manually saying what the AI is looking at
}, true);
```

Props to @creepycats for implementing most of this.
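The message object passed to `sendAndAwaitResponse()` in the image example can be assembled with a small helper. This is only a sketch: the field names are taken from that example, `buildImageMessage` is a hypothetical helper, and treating the description fields as optional is an assumption.

```javascript
// Hypothetical helper: builds the message object used when sending an image.
// Field names follow the image example in this README.
function buildImageMessage(text, imageUrl, humanDescription) {
  const message = { text, image_rel_path: imageUrl };
  if (humanDescription) {
    // Only set when you are describing the image yourself.
    message.image_description = humanDescription;
    message.image_description_type = "HUMAN";
  }
  return message;
}

// Usage (inside an async function, with `chat` from createOrContinueChat()):
//   const message = buildImageMessage(
//     "What is in this image?",
//     "https://www.example.com/coffee.jpg",
//     "This is coffee."
//   );
//   await chat.sendAndAwaitResponse(message, true);
```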

| Problem | Answer |
|---|---|
| ❌ Token was invalid | Make sure your token is actually valid and that you copied the entire token (it's pretty long). Otherwise, you may not have updated the package. |
|  | On most systems, puppeteer will automatically locate Chromium. But on certain distributions, the path has to be specified manually. This warning occurs if node_characterai could not locate Chromium on linux (/usr/bin/chromium-browser), and will error if puppeteer cannot locate it automatically. See this for a fix. |
| 😮 Why are chromium processes opening? | This is because, as of now, simple fetching is broken and I use puppeteer (a chromium browser control library) to get around Cloudflare's restrictions. |
| 👥 authenticateAsGuest() doesn't work | See issue #14. |
| 🦒 Hit the max amount of messages? | Sadly, guest accounts only have a limited amount of messages before they get limited and forced to log in. See below for more info 👇 |
| 🪐 How to use an account to mass use the library? | You can use conversations, a feature introduced in 1.0.0, to assign to users and channels. To reproduce a conversation, use OOC (out of character) to make the AI think you're with multiple people. See an example here: (Disclaimer: on some characters, their personality will make them ignore any OOC request). |
| 🏃 How do I avoid concurrency and crashes when using more than one request at a time? | Check the solution found by @SeoulSKY here using async-mutex. |
| 📣 Is this official? | No, this project is made by a fan of the website and is unofficial. To support the developers, please check out their website. |
| 😲 Did something awesome with node_characterai? | Please let me know! |
| ✉️ Want to contact me? | See my profile |
| ☕ Want to support me? | You can send me a coffee on ko.fi: https://ko-fi.com/coloride. Many thanks! |
| 💡 Have an idea? | Open an issue in the Issues tab |
| ➕ Other issue? | Open an issue in the Issues tab |
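The concurrency question in the table points to a solution built on async-mutex. As a dependency-free illustration of the same idea (this is not @SeoulSKY's code and does not use async-mutex), the sketch below runs requests one at a time through a promise chain. `createSerialQueue` is a hypothetical helper.

```javascript
// Hypothetical helper: returns a function that runs async tasks strictly
// one after another, in the order they were submitted.
function createSerialQueue() {
  let tail = Promise.resolve();
  return function enqueue(task) {
    // Start this task only after the previous one has settled.
    const result = tail.then(() => task());
    // Keep the chain usable even if this task rejects.
    tail = result.catch(() => {});
    return result;
  };
}

// Usage (inside an async function, with `chat` from createOrContinueChat()):
//   const enqueue = createSerialQueue();
//   const reply = await enqueue(() => chat.sendAndAwaitResponse("Hello!", true));
```

Each caller still gets its own result or error, but only one request is in flight at any moment.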

- In the `Client` class, you can access the `Requester` class and define puppeteer or other variables related to how CharacterAI will work using `characterAI.requester.(property)`. Also, anything here is subject to change, so make sure to update the package frequently.

Change the property `.usePlus` on the requester and, if needed, change `.forceWaitingRoom`.

For example:

```javascript
// Default is `false`
characterAI.requester.usePlus = true;
```

A few months ago, the package only required the node-fetch module to run. The package was made using simple API requests.

However, over time, Cloudflare started fighting against scraping and bots, which made it almost impossible for anyone to use this package.

This is why, in versions 1.1 and higher, puppeteer (which drives a Chromium browser) is used to make requests to the API.

👉 IMPORTANT: do the changes before you initialize your client!

In the CharacterAI class, you can access the requester and set `.puppeteerPath` or other options. The properties include (and are subject to change in future versions):

```javascript
// Chromium executable path (in some linux distributions, /usr/bin/chromium-browser)
puppeteerPath;

// Default arguments for when the browser launches
puppeteerLaunchArgs;

// Boolean controlling whether the default timeout is disabled (the default timeout is 30000ms)
puppeteerNoDefaultTimeout;

// Number representing the default protocol timeout
puppeteerProtocolTimeout;
```

🐧 For linux users, if your puppeteer doesn't automatically detect the path to Chromium, you will need to specify it manually.

To do this, you just need to set `puppeteerPath` on the requester to your Chromium path:

```javascript
characterAI.requester.puppeteerPath = "/path/to/chromium-browser";
```

On Linux, you can use the `which` command to find where Chromium is installed:

```shell
$ which chromium-browser # or whatever command you use to launch chrome
```

💡 I recommend that you frequently update this package for bug fixes and new additions.
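For the Chromium path lookup described above, the search can also be done from Node itself. The sketch below checks a few common locations and returns the first one that exists. The candidate list and the `findChromiumPath` helper are assumptions, and setting the result on `characterAI.requester.puppeteerPath` follows the `characterAI.requester.(property)` pattern mentioned earlier.

```javascript
const fs = require("fs");

// Hypothetical helper: returns the first candidate path that exists on disk,
// or undefined when none do. `exists` can be swapped out for testing.
function findChromiumPath(candidates, exists = fs.existsSync) {
  return candidates.find((candidate) => exists(candidate));
}

// Candidate locations are assumptions; adjust them for your distribution.
const chromiumPath = findChromiumPath([
  "/usr/bin/chromium-browser",
  "/usr/bin/chromium",
  "/usr/bin/google-chrome",
]);
console.log(chromiumPath ?? "Chromium not found in the candidate list");

// Usage, before authenticating:
//   if (chromiumPath) characterAI.requester.puppeteerPath = chromiumPath;
```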

❤️ This project is updated frequently, always check for the latest version for new features or bug fixes.

🚀 If you have an issue or idea, let me know in the Issues section.

📜 If you use this API, you are also bound by the terms of use of their website.

(real)coloride - 2023-2024, Licensed MIT.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for node_characterai

Similar Open Source Tools

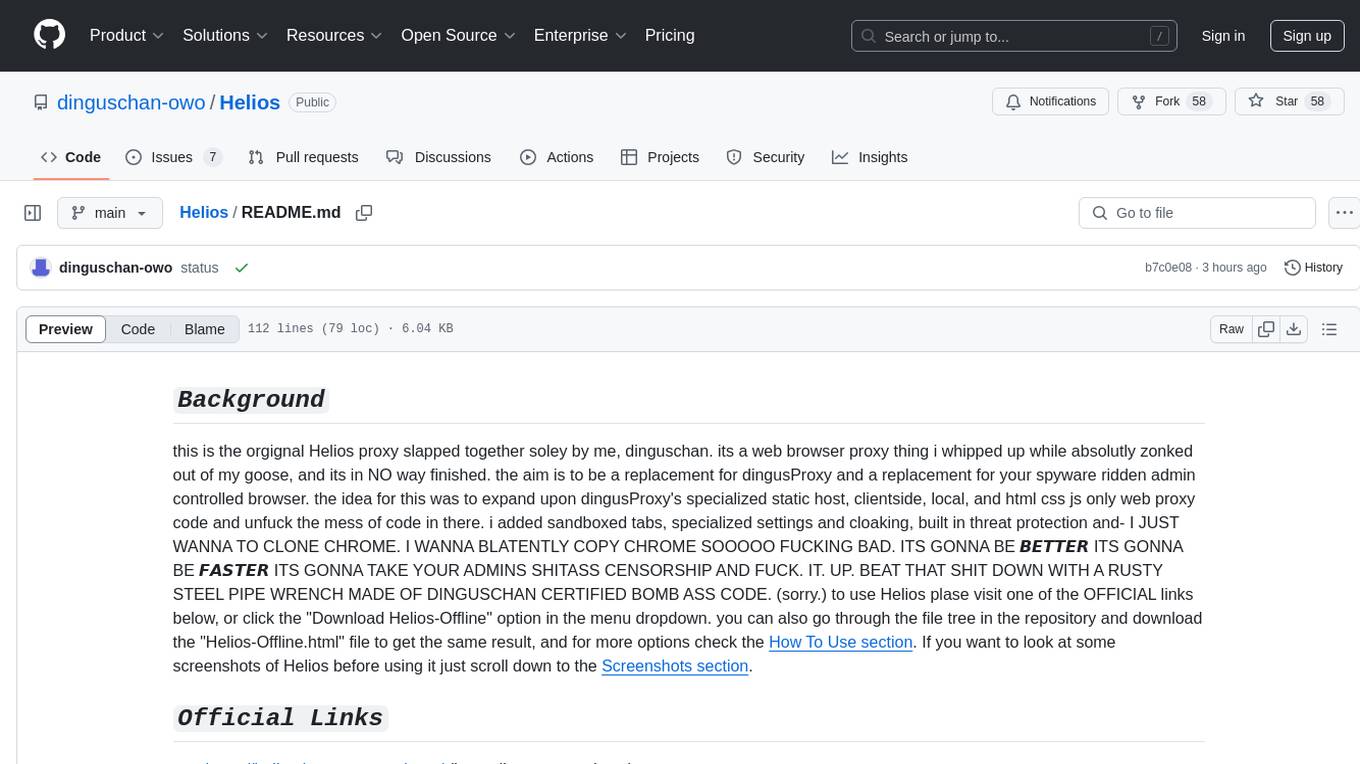

node_characterai

Node.js client for the unofficial Character AI API, an awesome website which brings characters to life with AI! This repository is inspired by RichardDorian's unofficial node API. Though, I found it hard to use and it was not really stable and archived. So I remade it in javascript. This project is not affiliated with Character AI in any way! It is a community project. The purpose of this project is to bring and build projects powered by Character AI. If you like this project, please check their website.

Helios

Helios is a powerful open-source tool for managing and monitoring your Kubernetes clusters. It provides a user-friendly interface to easily visualize and control your cluster resources, including pods, deployments, services, and more. With Helios, you can efficiently manage your containerized applications and ensure high availability and performance of your Kubernetes infrastructure.

Discord-AI-Selfbot

Discord-AI-Selfbot is a Python-based Discord selfbot that uses the `discord.py-self` library to automatically respond to messages mentioning its trigger word using Groq API's Llama-3 model. It functions as a normal Discord bot on a real Discord account, enabling interactions in DMs, servers, and group chats without needing to invite a bot. The selfbot comes with features like custom AI instructions, free LLM model usage, mention and reply recognition, message handling, channel-specific responses, and a psychoanalysis command to analyze user messages for insights on personality.

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

OpenAI-sublime-text

The OpenAI Completion plugin for Sublime Text provides first-class code assistant support within the editor. It utilizes LLM models to manipulate code, engage in chat mode, and perform various tasks. The plugin supports OpenAI, llama.cpp, and ollama models, allowing users to customize their AI assistant experience. It offers separated chat histories and assistant settings for different projects, enabling context-specific interactions. Additionally, the plugin supports Markdown syntax with code language syntax highlighting, server-side streaming for faster response times, and proxy support for secure connections. Users can configure the plugin's settings to set their OpenAI API key, adjust assistant modes, and manage chat history. Overall, the OpenAI Completion plugin enhances the Sublime Text editor with powerful AI capabilities, streamlining coding workflows and fostering collaboration with AI assistants.

PentestGPT

PentestGPT is a penetration testing tool empowered by ChatGPT, designed to automate the penetration testing process. It operates interactively to guide penetration testers in overall progress and specific operations. The tool supports solving easy to medium HackTheBox machines and other CTF challenges. Users can use PentestGPT to perform tasks like testing connections, using different reasoning models, discussing with the tool, searching on Google, and generating reports. It also supports local LLMs with custom parsers for advanced users.

reader

Reader is a tool that converts any URL to an LLM-friendly input with a simple prefix `https://r.jina.ai/`. It improves the output for your agent and RAG systems at no cost. Reader supports image reading, captioning all images at the specified URL and adding `Image [idx]: [caption]` as an alt tag. This enables downstream LLMs to interact with the images in reasoning, summarizing, etc. Reader offers a streaming mode, useful when the standard mode provides an incomplete result. In streaming mode, Reader waits a bit longer until the page is fully rendered, providing more complete information. Reader also supports a JSON mode, which contains three fields: `url`, `title`, and `content`. Reader is backed by Jina AI and licensed under Apache-2.0.

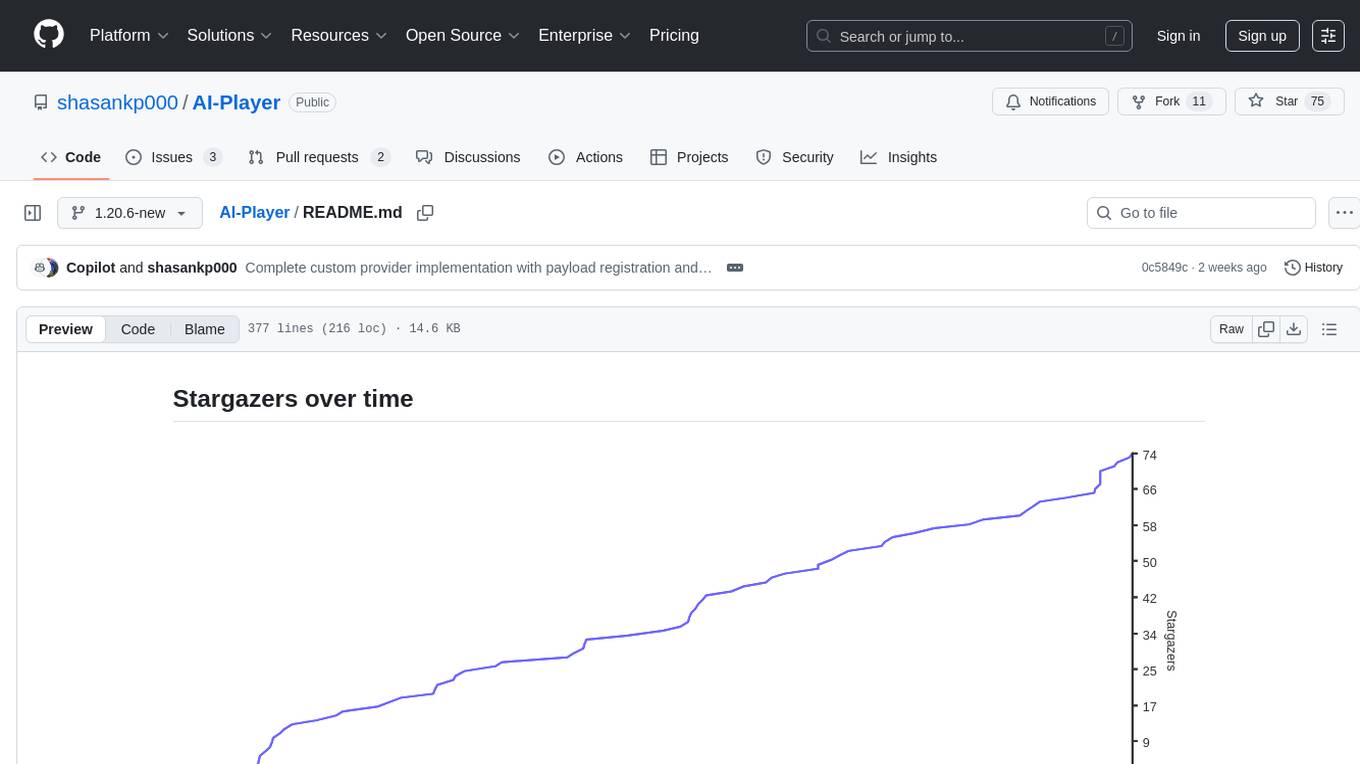

AI-Player

AI-Player is a Minecraft mod that adds an 'intelligent' second player to the game to combat loneliness while playing solo. It aims to enhance gameplay by providing companionship and interactive features. The mod leverages advanced AI algorithms and integrates with external tools to enhance the player experience. Developed with a focus on addressing the social aspect of gaming, AI-Player is a community-driven project that continues to evolve with user feedback and contributions.

actions

Sema4.ai Action Server is a tool that allows users to build semantic actions in Python to connect AI agents with real-world applications. It enables users to create custom actions, skills, loaders, and plugins that securely connect any AI Assistant platform to data and applications. The tool automatically creates and exposes an API based on function declaration, type hints, and docstrings by adding '@action' to Python scripts. It provides an end-to-end stack supporting various connections between AI and user's apps and data, offering ease of use, security, and scalability.

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using SearxNG metasearch engine. It also features image and video search capabilities and upcoming features include finalizing Copilot Mode and adding Discover and History Saving features.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

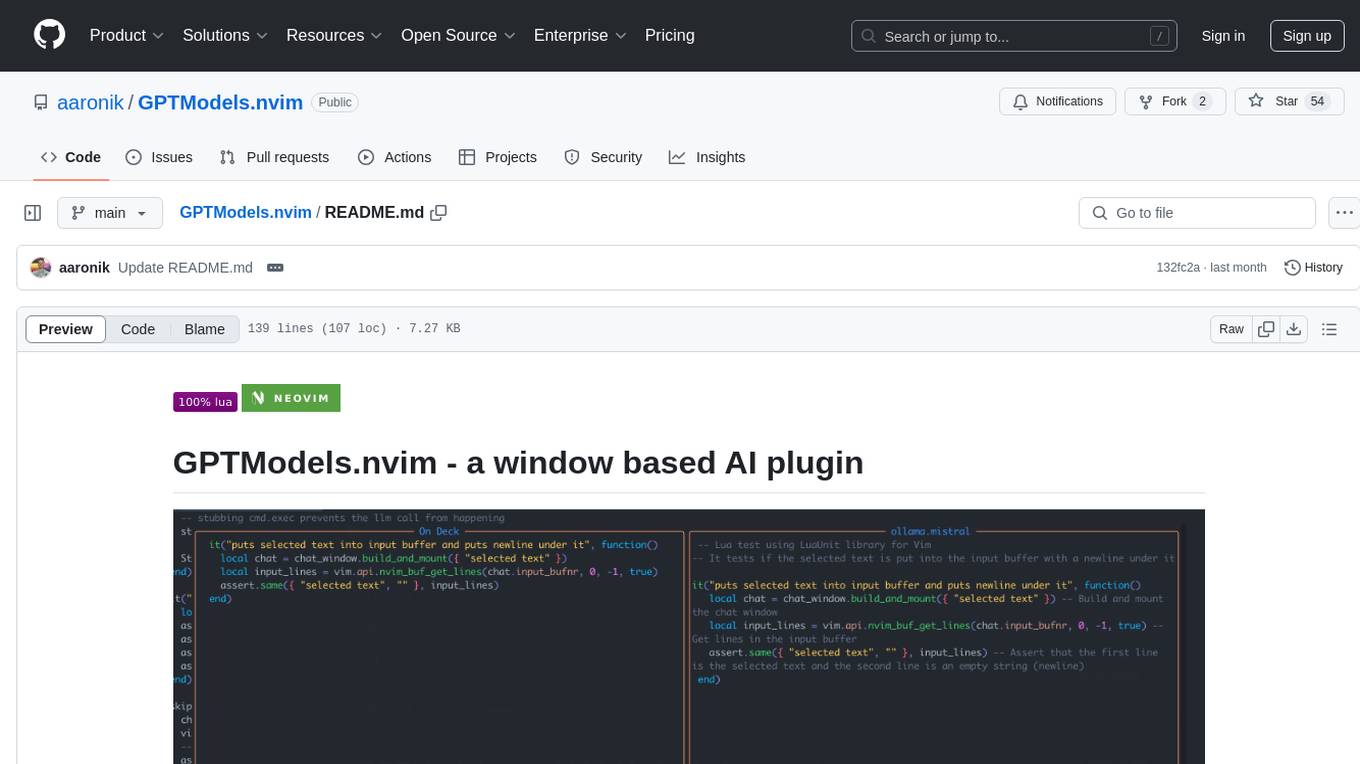

GPTModels.nvim

GPTModels.nvim is a window-based AI plugin for Neovim that enhances workflow with AI LLMs. It provides two popup windows for chat and code editing, focusing on stability and user experience. The plugin supports OpenAI and Ollama, includes LSP diagnostics, file inclusion, background processing, request cancellation, selection inclusion, and filetype inclusion. Developed with stability in mind, the plugin offers a seamless user experience with various features to streamline AI integration in Neovim.

allms

allms is a versatile and powerful library designed to streamline the process of querying Large Language Models (LLMs). Developed by Allegro engineers, it simplifies working with LLM applications by providing a user-friendly interface, asynchronous querying, automatic retrying mechanism, error handling, and output parsing. It supports various LLM families hosted on different platforms like OpenAI, Google, Azure, and GCP. The library offers features for configuring endpoint credentials, batch querying with symbolic variables, and forcing structured output format. It also provides documentation, quickstart guides, and instructions for local development, testing, updating documentation, and making new releases.

For similar tasks

99AI

99AI is a commercializable AI web application based on NineAI 2.4.2 (no authorization, no backdoors, no piracy, integrated front-end and back-end integration packages, supports Docker rapid deployment). The uncompiled source code is temporarily closed. Compared with the stable version, the development version is faster.

node_characterai

Node.js client for the unofficial Character AI API, an awesome website which brings characters to life with AI! This repository is inspired by RichardDorian's unofficial node API. Though, I found it hard to use and it was not really stable and archived. So I remade it in javascript. This project is not affiliated with Character AI in any way! It is a community project. The purpose of this project is to bring and build projects powered by Character AI. If you like this project, please check their website.

bidirectional_streaming_ai_voice

This repository contains Python scripts that enable two-way voice conversations with Anthropic Claude, utilizing ElevenLabs for text-to-speech, Faster-Whisper for speech-to-text, and Pygame for audio playback. The tool operates by transcribing human audio using Faster-Whisper, sending the transcription to Anthropic Claude for response generation, and converting the LLM's response into audio using ElevenLabs. The audio is then played back through Pygame, allowing for a seamless and interactive conversation between the user and the AI. The repository includes variations of the main script to support different operating systems and configurations, such as using CPU transcription on Linux or employing the AssemblyAI API instead of Faster-Whisper.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

ChatterUI

ChatterUI is a mobile app that allows users to manage chat files and character cards, and to interact with Large Language Models (LLMs). It supports multiple backends, including local, koboldcpp, text-generation-webui, Generic Text Completions, AI Horde, Mancer, Open Router, and OpenAI. ChatterUI provides a mobile-friendly interface for interacting with LLMs, making it easy to use them for a variety of tasks, such as generating text, translating languages, writing code, and answering questions.

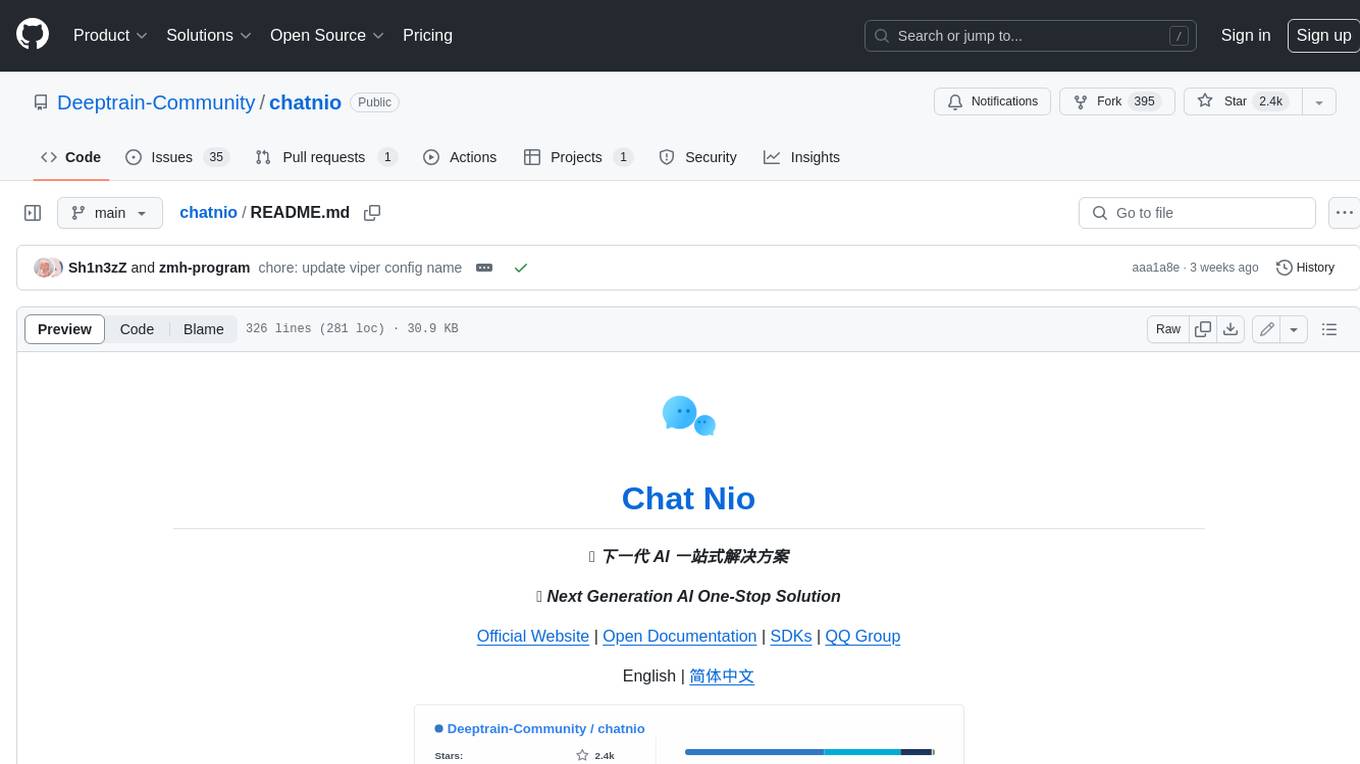

chatnio

Chat Nio is a next-generation AI one-stop solution that provides a rich and user-friendly interface for interacting with various AI models. It offers features such as AI chat conversation, rich format compatibility, markdown support, message menu support, multi-platform adaptation, dialogue memory, full-model file parsing, full-model DuckDuckGo online search, full-screen large text editing, model marketplace, preset support, site announcements, preference settings, internationalization support, and a rich admin system. Chat Nio also boasts a powerful channel management system that utilizes a self-developed channel distribution algorithm, supports multi-channel management, is compatible with multiple formats, allows for custom models, supports channel retries, enables balanced load within the same channel, and provides channel model mapping and user grouping. Additionally, Chat Nio offers forwarding API services that are compatible with multiple formats in the OpenAI universal format and support multiple model compatible layers. It also provides a custom build and install option for highly customizable deployments. Chat Nio is an open-source project licensed under the Apache License 2.0 and welcomes contributions from the community.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.