Open_Data_QnA

The Open Data QnA python library enables you to chat with your databases by leveraging LLM Agents on Google Cloud. Open Data QnA enables a conversational approach to interacting with your data by implementing state-of-the-art NL2SQL / Text2SQL methods.

Stars: 127

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

README:

The Open Data QnA python library enables you to chat with your databases by leveraging LLM Agents on Google Cloud.

Open Data QnA enables a conversational approach to interacting with your data. Ask questions about your PostgreSQL or BigQuery databases in natural language and receive informative responses, without needing to write SQL. Open Data QnA leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, streamlining data analysis and decision-making.

Key Features:

- Conversational Querying with Multiturn Support: Ask questions naturally, without requiring SQL knowledge, and ask follow-up questions.

- Table Grouping: Group tables under one use case/user grouping name, which narrows a large set of tables down to the ones the LLM needs to understand.

- Multi Schema/Dataset Support: You can group tables from different schemas/datasets for embedding and asking questions against.

- Prompt Customization and Additional Context: The prompts are loaded from a YAML file, which also lets you add extra context.

- SQL Generation: Automatically generates SQL queries based on your questions.

- Query Refinement: Validates and debugs queries to ensure accuracy.

- Natural Language Responses: Run queries and present results in clear, easy-to-understand language.

- Visualizations (Optional): Explore data visually with generated charts.

- Extensible: Customize and integrate with your existing workflows (API, UI, Notebooks).

It is built on a modular design and currently supports the following components:

- Google Cloud SQL for PostgreSQL

- Google BigQuery

- Google Firestore (for storing session logs)

- PGVector on Google Cloud SQL for PostgreSQL

- BigQuery Vector Store

- BuildSQLAgent: An agent specialized in generating SQL queries for BigQuery or PostgreSQL databases. It analyzes user questions, available table schemas, and column descriptions to construct syntactically and semantically correct SQL queries, adapting its process based on the target database type.

- ValidateSQLAgent: An agent that validates the syntax and semantic correctness of SQL queries. It uses a language model to analyze queries against a database schema and returns a JSON response indicating validity and potential errors.

- DebugSQLAgent: An agent designed to debug and refine SQL queries for BigQuery or PostgreSQL databases. It interacts with a chat-based language model to iteratively troubleshoot queries, using error messages to generate alternative, correct queries.

- DescriptionAgent: An agent specialized in generating descriptions for database tables and columns. It leverages a large language model to create concise and informative descriptions that aid in understanding data structures and facilitate SQL query generation.

- EmbedderAgent: An agent specialized in generating text embeddings using Large Language Models (LLMs). It supports direct interaction with Vertex AI's TextEmbeddingModel or uses LangChain's VertexAIEmbeddings for a simplified interface.

- ResponseAgent: An agent that generates natural language responses to user questions based on SQL query results. It acts as a data assistant, interpreting SQL results and transforming them into user-friendly answers using a language model.

- VisualizeAgent: An agent that generates JavaScript code for Google Charts based on user questions and SQL results. It suggests suitable chart types and constructs the JavaScript code to create visualizations of the data.
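The build, validate, and debug agents described above form a loop: generate a query, check it, and feed any error back for another attempt. The sketch below illustrates that control flow only. The function names, signatures, and the `PipelineResult` type are hypothetical and are not the library's actual API; the three agents are passed in as plain callables so that the loop can be exercised with stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PipelineResult:
    """Outcome of one pass through the hypothetical pipeline."""
    sql: str
    valid: bool
    rounds_used: int


def run_pipeline(
    question: str,
    build_sql: Callable[[str], str],
    validate_sql: Callable[[str], Optional[str]],
    debug_sql: Callable[[str, str], str],
    max_rounds: int = 2,
) -> PipelineResult:
    """Generate SQL, then validate and repair it for at most max_rounds rounds.

    validate_sql returns None when the query is acceptable, otherwise an
    error message that is handed to debug_sql together with the query.
    """
    sql = build_sql(question)
    error = validate_sql(sql)
    rounds = 0
    while error is not None and rounds < max_rounds:
        sql = debug_sql(sql, error)
        error = validate_sql(sql)
        rounds += 1
    return PipelineResult(sql=sql, valid=error is None, rounds_used=rounds)


# Stand-in agents (assumptions for illustration; real agents would call an LLM).
def fake_build(question: str) -> str:
    return "SELEC genre FROM movies"  # deliberately broken first draft


def fake_validate(sql: str) -> Optional[str]:
    return None if sql.startswith("SELECT") else "syntax error near SELEC"


def fake_debug(sql: str, error: str) -> str:
    return sql.replace("SELEC ", "SELECT ", 1)


result = run_pipeline("Which genres exist?", fake_build, fake_validate, fake_debug)
print(result)
```

Passing the agents as callables keeps the loop independent of any particular model or database, which mirrors the modular design described above without claiming to reproduce it.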

Note: the library was formerly named Talk2Data. You may still find artifacts with the old naming in this repository.

A detailed description of the Architecture can be found here in the docs.

Details on the Repository Structure can be found here in the docs.

git clone git@github.com:GoogleCloudPlatform/Open_Data_QnA.git

cd Open_Data_QnA

Make sure that the Google Cloud CLI and Python >= 3.10 are installed before moving ahead. You can refer to the links below for guidance.

Installation Guide: https://cloud.google.com/sdk/docs/install

Download Python: https://www.python.org/downloads/

ℹ️ You can set up this solution using one of three approaches. Choose one based on your requirements:

- A) Using Jupyter Notebooks (for a better view of what is happening at each stage of the solution)

- B) Using the CLI (for ease of use and running with simple python commands, without the need to understand every step of the solution)

- C) Using Terraform deployment, including your backend APIs with UI

Run all commands in this cell in the terminal (typically Ctrl+Shift+`) where your notebooks are running.

Install the dependencies by running the poetry commands below

# Install poetry

pip uninstall poetry -y

pip install poetry --quiet

#Run the poetry commands below to set up the environment

poetry lock #resolve dependencies (also auto-creates the poetry venv if it does not exist)

poetry install --quiet #installs dependencies

poetry env info #Displays the env just created and the path to it

poetry shell #this command should activate your venv; your prompt should show that you are inside it

##inside the activated venv shell []

#If you are running on a Workbench instance where the service account has the permissions required to run this solution, you can skip the gcloud auth commands below and go to the kernel creation section

gcloud auth login # Use this or below command to authenticate

gcloud auth application-default login

gcloud services enable \
serviceusage.googleapis.com \
cloudresourcemanager.googleapis.com --project <<Enter Project Id>>

Choose the relevant instructions based on where you are running the notebook

For IDEs like Cloud Shell Editor, VS Code

For IDEs, adding the Jupyter extensions will automatically give you the option to change the kernel. If not, manually select the Python interpreter in your IDE (the exact path is shown in the output of the cell above; it would look like e.g. /home/admin_/opendata/.venv/bin/python or ~cache/user/opendataqna/.venv/bin/python)

Proceed to the Step 1 below

For Jupyter Lab or Jupyter Environments on Workbench etc

Create a kernel with the environment created

pip install jupyter

ipython kernel install --name "openqna-venv" --user

Restart your kernel or close the existing notebook and open it again; you should now see "openqna-venv" in the kernel drop-down.

What did we do here?

- Created Application Default Credentials to use for the code

- Added venv to kernel to select for running the notebooks (For standalone Jupyter setups like Workbench etc)

1. Run the 1_Setup_OpenDataQnA (Run Once for Initial Setup)

This notebook guides you through the setup and execution of the Open Data QnA application. It provides comprehensive instructions for setting up the solution.

2. Run the 2_Run_OpenDataQnA

This notebook reads the configuration you set up with 1_Setup_OpenDataQnA and runs the pipeline to answer questions about your data.

If you want to load Known Good SQLs separately, run this notebook once the config variables are set up in the config.ini file. It can be run multiple times to load the known good SQL queries and create embeddings for them.

1. Add Configuration values for the solution in config.ini

For setup we require details for the vector store, source database, etc. Edit the config.ini file and add values for the parameters based on the information below.

ℹ️ Follow the guidelines from the config guide document to populate your config.ini file.
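Before running the setup, it can help to check which config.ini parameters are still empty. The sketch below uses Python's standard `configparser` and makes no assumption about the section or key names in the real file; it simply reports keys whose value is blank. The `summarize_config` helper is a hypothetical name introduced here, not part of the library.

```python
import configparser


def summarize_config(path: str) -> dict:
    """Return {section: [keys with empty values]} for an INI file."""
    # interpolation=None so that '%' characters in values are read literally.
    parser = configparser.ConfigParser(interpolation=None)
    if not parser.read(path):
        raise FileNotFoundError(f"Could not read config file: {path}")
    summary = {}
    for section in parser.sections():
        summary[section] = [
            key for key, value in parser.items(section) if not value.strip()
        ]
    return summary


if __name__ == "__main__":
    # Assumes config.ini is in the current working directory.
    for section, empty_keys in summarize_config("config.ini").items():
        status = ", ".join(empty_keys) if empty_keys else "all values set"
        print(f"[{section}] {status}")
```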

Sources to connect

- This solution lets you set up multiple data sources at the same time.

- You can group multiple tables from different datasets or schemas into a grouping and provide the details.

- If your dataset/schema has many tables and you want to run the solution against only a few of them, create a group that lists just those tables.

Format for data_source_list.csv

source | user_grouping | schema | table

source - Supported data sources. Options: bigquery, cloudsql-pg

user_grouping - Logical grouping or use case name for tables from the same or different schemas/datasets. When left blank, it defaults to the schema value in the next column

schema - schema name for postgres or dataset name in bigquery

table - name of the tables to run the solutions against. Leave this column blank after filling schema/dataset if you want to run solution for whole dataset/schema
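The column rules above can be sketched in a few lines of Python: a blank user_grouping falls back to the schema, and a blank table means the whole schema/dataset. This is an illustration only. It assumes the file is comma-separated with a header row using exactly the four column names listed above; the sample rows (including the `imdb` and `sales` schema names) are made up, and `load_groupings` is a hypothetical helper rather than a library function.

```python
import csv
import io
from collections import defaultdict


def load_groupings(csv_text: str) -> dict:
    """Map each user_grouping to a list of (source, schema, table) tuples.

    table is None when the column is blank, meaning "every table in the
    schema/dataset".
    """
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        source = (row.get("source") or "").strip()
        schema = (row.get("schema") or "").strip()
        # Blank user_grouping defaults to the schema/dataset name.
        grouping = (row.get("user_grouping") or "").strip() or schema
        table = (row.get("table") or "").strip() or None
        groups[grouping].append((source, schema, table))
    return dict(groups)


# Hypothetical sample content for illustration.
SAMPLE = """source,user_grouping,schema,table
bigquery,MovieExplorer-bigquery,imdb,title
bigquery,MovieExplorer-bigquery,imdb,ratings
cloudsql-pg,,sales,
"""

groupings = load_groupings(SAMPLE)
for name, entries in groupings.items():
    print(name, entries)
```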

Update data_source_list.csv according to your requirements.

Note that the sources listed in the CSV must already exist. If they do not, use the Copy notebooks to set up the demo sources.

Enabled Data Sources:

- PostgreSQL on Google Cloud SQL (Copy Sample Data: 0_CopyDataToCloudSqlPG.ipynb)

- BigQuery (Copy Sample Data: 0_CopyDataToBigQuery.ipynb)

pip install poetry --quiet

poetry lock

poetry install --quiet

poetry env info

poetry shell

Authenticate your credentials

gcloud auth login

or

gcloud auth application-default login

gcloud services enable \
serviceusage.googleapis.com \
cloudresourcemanager.googleapis.com --project <<Enter Project Id>>

gcloud auth application-default set-quota-project <<Enter Project Id for using resources>>

Enable APIs for the solution setup

gcloud services enable \
cloudapis.googleapis.com \
compute.googleapis.com \
iam.googleapis.com \
run.googleapis.com \
sqladmin.googleapis.com \
aiplatform.googleapis.com \
bigquery.googleapis.com \
firestore.googleapis.com --project <<Enter Project Id>>

3. Run env_setup.py to create the vector store based on the configuration from Step 1

python env_setup.py

4. Run opendataqna.py to run the pipeline you just setup

The Open Data QnA SQL Generation tool can be conveniently used from your terminal or command prompt using a simple CLI interface. Here's how:

python opendataqna.py --session_id "122133131f--ade-eweq" --user_question "What is most 5 common genres we have?" --user_grouping "MovieExplorer-bigquery"

Where

session_id : Keep this unique, and reuse the same value for follow-up questions.

user_question : Enter your question as a string

user_grouping : Enter the BQ_DATASET_NAME for BigQuery sources or PG_SCHEMA for PostgreSQL sources (refer to your data_source_list.csv file)

Optional Parameters

You can customize the pipeline's behavior using optional parameters. Here are some common examples:

# Enable the SQL debugger:

python opendataqna.py --session_id="..." --user_question "..." --user_grouping "..." --run_debugger

# Execute the final generated SQL:

python opendataqna.py --session_id="..." --user_question "..." --user_grouping "..." --execute_final_sql

# Change the number of debugging rounds:

python opendataqna.py --session_id="..." --user_question "..." --user_grouping "..." --debugging_rounds 5

# Adjust similarity thresholds:

python opendataqna.py --session_id="..." --user_question "..." --user_grouping "..." --table_similarity_threshold 0.25 --column_similarity_threshold 0.4

You can find a full list of available options and their descriptions by running:

python opendataqna.py --help
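If you call the CLI from another Python program, building the argument list in code avoids shell-quoting mistakes and makes it easy to reuse one session_id for follow-up questions. The sketch below only assembles the command; it uses the flags shown above exactly as written and assumes nothing else about how opendataqna.py parses them (check `--help` for the authoritative list). `build_command` is a hypothetical helper name.

```python
import shlex
import sys
import uuid
from typing import List, Optional


def build_command(
    user_question: str,
    user_grouping: str,
    session_id: Optional[str] = None,
    run_debugger: bool = False,
    execute_final_sql: bool = False,
    debugging_rounds: Optional[int] = None,
    table_similarity_threshold: Optional[float] = None,
    column_similarity_threshold: Optional[float] = None,
) -> List[str]:
    """Assemble an argv list for opendataqna.py from the documented flags."""
    cmd = [
        sys.executable, "opendataqna.py",
        # Generate a fresh session id unless the caller supplies one to
        # continue an existing conversation.
        "--session_id", session_id or str(uuid.uuid4()),
        "--user_question", user_question,
        "--user_grouping", user_grouping,
    ]
    if run_debugger:
        cmd.append("--run_debugger")
    if execute_final_sql:
        cmd.append("--execute_final_sql")
    if debugging_rounds is not None:
        cmd += ["--debugging_rounds", str(debugging_rounds)]
    if table_similarity_threshold is not None:
        cmd += ["--table_similarity_threshold", str(table_similarity_threshold)]
    if column_similarity_threshold is not None:
        cmd += ["--column_similarity_threshold", str(column_similarity_threshold)]
    return cmd


cmd = build_command(
    "What are the top genres?",
    "MovieExplorer-bigquery",
    session_id="s1",
    run_debugger=True,
    debugging_rounds=5,
)
print(shlex.join(cmd))
```

To actually run it, pass the list to `subprocess.run(cmd, check=True)` from the repository root, inside the activated environment.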

The provided terraform streamlines the setup of this solution and serves as a blueprint for deployment. The script provides a one-click, one-time deployment option. However, it doesn't include CI/CD capabilities and is intended solely for initial setup.

[!NOTE] The current version of the Terraform Google Cloud provider does not support deploying a few resources; this solution uses null_resource to create those resources with the Google Cloud SDK.

Prior to executing terraform, ensure that the below mentioned steps have been completed.

- Source data should already be available. If you do not have readily available source data, use the notebooks 0_CopyDataToBigQuery.ipynb or 0_CopyDataToCloudSqlPG.ipynb based on the preferred source to populate sample data.

- Ensure that the data_source_list.csv is populated with the list of datasources to be used in this solution. Terraform will take care of creating the embeddings in the destination. Use data_source_list_sample.csv to fill the data_source_list.csv

- If you want to use known good sqls for few shot prompting, ensure that the known_good_sql.csv is populated with the required data. Terraform will take care of creating the embeddings in the destination.

Firebase will be used to host the frontend of the application.

- Go to https://console.firebase.google.com/

- Select add project and load your Google Cloud Platform project

- Add Firebase to one of your existing Google Cloud projects

- Confirm Firebase billing plan

- Continue and complete

[!NOTE]

Terraform apply command for this application uses gcloud config to fetch & pass the set project id to the scripts. Please ensure that gcloud config has been set to your intended project id before proceeding.

[!IMPORTANT]

The Terraform scripts require specific IAM permissions to function correctly. The user needs either the broad roles/resourcemanager.projectIamAdmin role or a custom role with tailored permissions to manage IAM policies and roles. Additionally, one script TEMPORARILY disables Domain Restricted Sharing Org Policies to enable the creation of a public endpoint. This requires the user to also have the roles/orgpolicy.policyAdmin role.

- Install terraform 1.7 or higher.

- [OPTIONAL] Update default values of variables in variables.tf according to your preferences. You can find the description for each variable inside the file. This file will be used by terraform to get information about the resources it needs to deploy. If you do not update these, terraform will use the already specified default values in the file.

- Move to the terraform directory in the terminal

cd Open_Data_QnA/terraform

#If you are running this outside Cloud Shell you need to set up your Google Cloud SDK Credentials

gcloud config set project <your_project_id>

gcloud auth application-default set-quota-project <your_project_id>

gcloud services enable \
serviceusage.googleapis.com \
cloudresourcemanager.googleapis.com --project <<Enter Project Id>>

sh ./scripts/deploy-all.sh

This script will perform the following steps:

- Run terraform scripts - These terraform scripts will generate all the GCP resources and configuration files required for the frontend & backend. It will also generate embeddings and store it in the destination vector db.

- Deploy cloud run backend service with latest backend code - The terraform in the previous step uses a dummy container image to deploy the initial version of cloud run service. This is the step where the actual backend code gets deployed.

- Deploy frontend app - All the config files, web app etc required to create the frontend are deployed via terraform. However, the actual UI deployment takes place in this step.

Auth Provider

You need to enable at least one authentication provider in Firebase, you can enable it using the following steps:

- Go to https://console.firebase.google.com/project/your_project_id/authentication/providers (change the your_project_id value)

- Click on Get Started (if needed)

- Select Google and enable it

- Set the name for the project and support email for project

- Save

This should deploy your end-to-end solution in the project, with a Firebase web URL.

For detailed steps and known issues refer to README.md under /terraform

To deploy the backend APIs for the solution, refer to the README.md under /backend-apis. These APIs are designed to work with the frontend and provide access to run the solution.

Once the backend APIs are deployed successfully, deploy the frontend for the solution; refer to the README.md under /frontend.

If you successfully set up the solution accelerator and want to start optimizing to your needs, you can follow the tips in the Best Practice doc.

Additionally, if you stumble across any problems, take a look into the FAQ.

If neither of these resources helps, feel free to reach out to us directly by raising an Issue.

To clean up the resources provisioned in this solution, use commands below to remove them using gcloud/bq:

For cloudsql-pgvector as vector store : Delete SQL Instance

gcloud sql instances delete <CloudSQL Instance Name> -q

Delete BigQuery Dataset Created for Logs and Vector Store : Remove BQ Dataset

bq rm -r -f -d <BigQuery Dataset Name for OpenDataQnA>

(For Backend APIs)Remove the Cloud Run service : Delete Service

gcloud run services delete <Cloud Run Service Name>

For frontend, based on firebase: Remove the firebase app

BigQuery quotas including hardware, software, and network components.

Open Data QnA is distributed with the Apache-2.0 license.

It also contains code derived from the following third-party packages:

This repository provides an open-source solution accelerator designed to streamline your development process. Please be aware that all resources associated with this accelerator will be deployed within your own Google Cloud Platform (GCP) instances.

It is imperative that you thoroughly test all components and configurations in a non-production environment before integrating any part of this accelerator with your production data or systems.

While we strive to provide a secure and reliable solution, we cannot be held responsible for any data loss, service disruptions, or other issues that may arise from the use of this accelerator.

By utilizing this repository, you acknowledge that you are solely responsible for the deployment, management, and security of the resources deployed within your GCP environment.

If you encounter any issues or have concerns about potential risks, please refrain from using this accelerator in a production setting.

We encourage responsible and informed use of this open-source solution.

If you have any questions or if you found any problems with this repository, please report through GitHub issues.


Alternative AI tools for Open_Data_QnA

Similar Open Source Tools


airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that allows users to ask natural language questions of any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. The application architecture involves uploading a PDF document to an S3 bucket, extracting metadata, converting text to vectors, and using a LangChain to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.

raggenie

RAGGENIE is a low-code RAG builder tool designed to simplify the creation of conversational AI applications. It offers out-of-the-box plugins for connecting to various data sources and building conversational AI on top of them, including integration with pre-built agents for actions. The tool is open-source under the MIT license, with a current focus on making it easy to build RAG applications and future plans for maintenance, monitoring, and transitioning applications from pilots to production.

open-repo-wiki

OpenRepoWiki is a tool designed to automatically generate a comprehensive wiki page for any GitHub repository. It simplifies the process of understanding the purpose, functionality, and core components of a repository by analyzing its code structure, identifying key files and functions, and providing explanations. The tool aims to assist individuals who want to learn how to build various projects by providing a summarized overview of the repository's contents. OpenRepoWiki requires certain dependencies such as Google AI Studio or Deepseek API Key, PostgreSQL for storing repository information, Github API Key for accessing repository data, and Amazon S3 for optional usage. Users can configure the tool by setting up environment variables, installing dependencies, building the server, and running the application. It is recommended to consider the token usage and opt for cost-effective options when utilizing the tool.

vector-vein

VectorVein is a no-code AI workflow software inspired by LangChain and langflow, aiming to combine the powerful capabilities of large language models and enable users to achieve intelligent and automated daily workflows through simple drag-and-drop actions. Users can create powerful workflows without the need for programming, automating all tasks with ease. The software allows users to define inputs, outputs, and processing methods to create customized workflow processes for various tasks such as translation, mind mapping, summarizing web articles, and automatic categorization of customer reviews.

leettools

LeetTools is an AI search assistant that can perform highly customizable search workflows and generate customized format results based on both web and local knowledge bases. It provides an automated document pipeline for data ingestion, indexing, and storage, allowing users to focus on implementing workflows without worrying about infrastructure. LeetTools can run with minimal resource requirements on the command line with configurable LLM settings and supports different databases for various functions. Users can configure different functions in the same workflow to use different LLM providers and models.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

conversational-agent-langchain

This repository contains a Rest-Backend for a Conversational Agent that allows embedding documents, semantic search, QA based on documents, and document processing with Large Language Models. It uses Aleph Alpha and OpenAI Large Language Models to generate responses to user queries, includes a vector database, and provides a REST API built with FastAPI. The project also features semantic search, secret management for API keys, installation instructions, and development guidelines for both backend and frontend components.

webwhiz

WebWhiz is an open-source tool that allows users to train ChatGPT on website data to build AI chatbots for customer queries. It offers easy integration, data-specific responses, regular data updates, no-code builder, chatbot customization, fine-tuning, and offline messaging. Users can create and train chatbots in a few simple steps by entering their website URL, automatically fetching and preparing training data, training ChatGPT, and embedding the chatbot on their website. WebWhiz can crawl websites monthly, collect text data and metadata, and process text data using tokens. Users can train custom data, but bringing custom open AI keys is not yet supported. The tool has no limitations on context size but may limit the number of pages based on the chosen plan. WebWhiz SDK is available on NPM, CDNs, and GitHub, and users can self-host it using Docker or manual setup involving MongoDB, Redis, Node, Python, and environment variables setup. For any issues, users can contact [email protected].

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

holohub

Holohub is a central repository for the NVIDIA Holoscan AI sensor processing community to share reference applications, operators, tutorials, and benchmarks. It includes example applications, community components, package configurations, and tutorials. Users and developers of the Holoscan platform are invited to reuse and contribute to this repository. The repository provides detailed instructions on prerequisites, building, running applications, contributing, and glossary terms. It also offers a searchable catalog of available components on the Holoscan SDK User Guide website.

bedrock-claude-chatbot

Bedrock Claude ChatBot is a Streamlit application that provides a conversational interface for users to interact with various Large Language Models (LLMs) on Amazon Bedrock. Users can ask questions, upload documents, and receive responses from the AI assistant. The app features conversational UI, document upload, caching, chat history storage, session management, model selection, cost tracking, logging, and advanced data analytics tool integration. It can be customized using a config file and is extensible for implementing specialized tools using Docker containers and AWS Lambda. The app requires access to Amazon Bedrock Anthropic Claude Model, S3 bucket, Amazon DynamoDB, Amazon Textract, and optionally Amazon Elastic Container Registry and Amazon Athena for advanced analytics features.

azure-search-openai-javascript

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval.

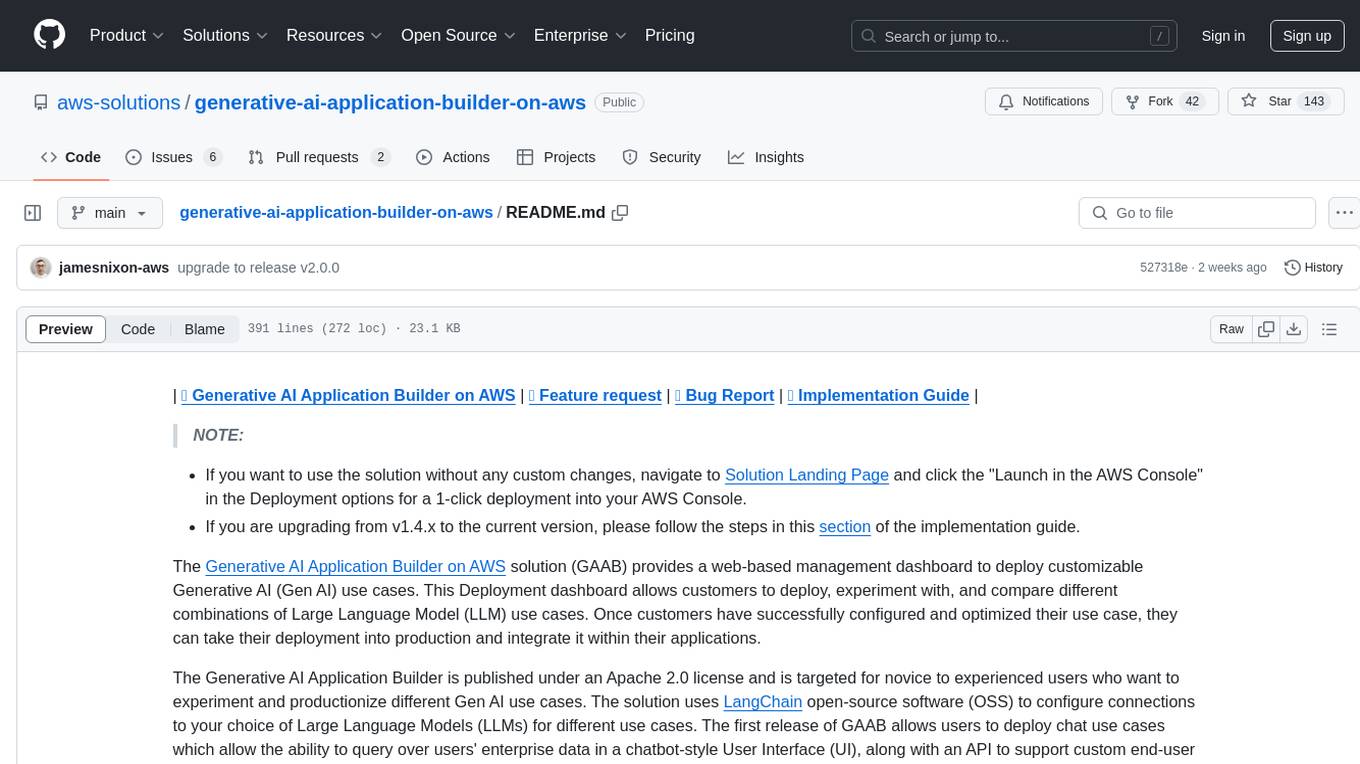

generative-ai-application-builder-on-aws

The Generative AI Application Builder on AWS (GAAB) is a solution that provides a web-based management dashboard for deploying customizable Generative AI (Gen AI) use cases. Users can experiment with and compare different combinations of Large Language Model (LLM) use cases, configure and optimize their use cases, and integrate them into their applications for production. The solution is targeted at novice to experienced users who want to experiment and productionize different Gen AI use cases. It uses LangChain open-source software to configure connections to Large Language Models (LLMs) for various use cases, with the ability to deploy chat use cases that allow querying over users' enterprise data in a chatbot-style User Interface (UI) and support custom end-user implementations through an API.

genai-for-marketing

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions about your data in natural language. It helps you explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform tasks on a schedule or act in response to events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.