chatnio

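🚀 Next Generation AI One-Stop Internationalization Solution. 🚀 Next-generation AI one-stop B2B/B2C solution, supporting OpenAI, Midjourney, Claude, iFlytek Spark, Stable Diffusion, DALL·E, ChatGLM, Tongyi Qianwen, Tencent Hunyuan, 360 Zhinao, Baichuan AI, Volcano Engine Ark, New Bing, Gemini, Moonshot, and other models. Supports conversation sharing, custom presets, cloud sync, a model marketplace, flexible billing and subscription plans, image parsing, web search, and model caching, plus a rich, attractive admin panel with dashboard statistics.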

Stars: 2762

Chat Nio is a next-generation AI one-stop solution that provides a rich, user-friendly interface for interacting with various AI models. It offers AI chat, rich format compatibility, Markdown support, a message menu, multi-platform adaptation, dialogue memory, file parsing and DuckDuckGo online search for all models, full-screen large text editing, a model marketplace, presets, site announcements, preference settings, internationalization, and a rich admin system. Chat Nio also includes a powerful channel management system built on a self-developed channel distribution algorithm: it supports multi-channel management, multiple formats, custom models, channel retries, load balancing within a channel, channel model mapping, and user grouping. In addition, Chat Nio provides a forwarding (relay) API in the OpenAI-compatible format, backed by multiple model compatibility layers, as well as a custom build and install option for highly customized deployments. Chat Nio is open source under the Apache License 2.0 and welcomes community contributions.

README:


🚀 Next Generation AI One-Stop Solution


Official Website | Docs | SDKs | QQ Group

- ✨ AI Chat Conversation

- Rich Format Compatibility

- Markdown Support / Theme Switching: light and dark modes, code highlighting, Mermaid, LaTeX formulas, tables, progress bars, Virtual Message, etc.

- Message Menu: re-answer, copy, use, edit, and delete messages, save as a file, and more.

- Multi-platform Adaptation: supports PWA apps and desktop platforms (the desktop client is based on Tauri).

- Dialogue Memory: cloud synchronization, native direct-link sharing of site conversations, using shared conversations, saving shared conversations as images, and share management (viewing, deleting shares, etc.).

- Native File Parsing for All Models: parses pdf, docx, pptx, xlsx, images, and other formats (for details, see the chatnio-blob-service project).

- DuckDuckGo Online Search for All Models (see the duckduckgo-api project for details; you must deploy it yourself and configure it in the Internet settings under System Settings. Thanks to the author @binjie09). To enable web search through the relay API, prefix the model name with web-.

- Full-screen Large Text Editing, with three switchable modes: plain text editing, edit-preview, and pure preview.

- Model Marketplace: model search, drag-and-drop reordering, model name, description, tags, and avatar, automatic binding of model pricing, and automatic binding of subscription quotas (models included in a subscription are tagged with a plus label).

- Presets: custom presets with cloud synchronization, preset cloning, and preset avatar and introduction settings.

- Site Announcements: supports site announcements and notifications.

- Preference Settings: i18n multi-language support, a custom maximum number of carried-over messages, maximum reply tokens, model parameter customization, settings reset, etc.

- Internationalization Support: multi-language switching.

- Additional Features (user-group permissions for these features can be enabled or disabled in the backend System Settings)

- [Discontinued] 🍎 AI project generator: shows the generation process and supports TAR / ZIP downloads (based on presets; may be unstable).

- [Discontinued] 📂 Bulk article generation: progress bar and one-click generation of DOCX documents downloadable as TAR / ZIP (requires a generation quantity higher than the upstream model's maximum concurrency).

- [Deprecated] 🥪 AI Card: AI questions and answers are presented as cards that can be embedded directly via an image URL (based on dynamic SVG generation).

- 🔔 Rich Admin System

- Rich, attractive dashboard: today's and this month's credit information, subscribers, model usage line and pie charts, revenue statistics, user type statistics, request counts, and model error counts.

- User Management: user list, user details, and administrative operations (change password, change email, block/unblock user, set as administrator, adjust points, set points, subscription management, set subscription level, release subscription, etc.).

- Gift Code and Redemption Code Management: management operations, batch generation, and saving to a file.

- Price Settings: set model prices (per-request billing, flexible token billing, no billing, etc.), synchronize price settings from an upstream Chat Nio site (optionally overriding the price rules of models already on this site), and detect models without a price (for non-administrators, such models are automatically blocked to prevent losing money).

- Subscription Settings: unlike flexible billing, a subscription is billed at a fixed price per period, and platform users subscribe to fixed-price packages. Supports enabling or disabling subscriptions (off by default), subscription tiers, subscription quota settings, the models covered by each quota, icon settings, importing quotas from other packages, and more.

- Customize Model Marketplace: edit the front-end marketplace's model names, descriptions, tags, avatars (built-in model images or custom images), whether to add the model, and other information.

- System Settings: custom site name, site logo, documentation link, whether to suspend registration, initial points for new users, custom purchase link (card issuance address), contact information, footer information, etc.

- SMTP support, including an optional email-suffix whitelist with customizable suffixes.

- Model Caching: if an identical request has been made before, the cached result is returned directly (cache hits are not billed), reducing the number of upstream requests. You can customize the maximum number of cached results per entry (default 1), the models that may be cached (default empty), and the cache time (default 1 hour), and use one-click presets: cache no models, cache only free models, or cache all models.


- ⚡ Channel Management System

- Chat Nio uses a self-developed channel distribution algorithm (independent of the HTTP context) with an abstracted Adapter compatibility layer, giving low coupling and high scalability.

- Multi-channel Management: priority allocation, weighted load balancing, channel status management, etc. Priority controls the order in which channels are used for a model request: higher-priority channels are used first, and if an error occurs the system automatically falls back to a lower-priority channel. Weight applies among channels of the same priority: only one of them is used at a time, and a higher weight gives a higher probability of being selected.

- Multiple Formats: multiple model compatibility layers are supported; see the model support section below for details.

- Custom Models: add known models or custom models, fill in the current format's template models with one click (e.g. OpenAI-format template models such as gpt-3.5-turbo-0613), and clear all models with one click.

- Channel Retries: a retry mechanism with a configurable retry count; once the retry count is exceeded, the system automatically falls back to a lower-priority channel.

- Load Balancing Within a Channel: a single channel can be configured with multiple keys (one per line) instead of creating channels in bulk. Requests are assigned to keys randomly with equal weight, and retries also pick a random key within the same channel.

- Channel Model Mapping: maps models onto models this channel already supports, in the format target model>existing model. Adding the prefix ! keeps the existing model out of the channel's hit models. For specific usage, see the instructions and tips in the channel settings within the program.

- User Grouping: choose which user groups can use this model (such as anonymous users, regular users, and basic, standard, or professional subscribers); selecting no groups has the same effect as selecting all groups.

- Built-in Upstream Hiding: when an error occurs, the upstream URL configured in the channel is automatically hidden (e.g. shown as channel://2/v1/chat/completions). Keys are hidden too (yes, Gemini, we mean you), which prevents upstream channels from being misused when keys are not set or when upstream error messages expose the full key (as with reverse-engineered channels), and also makes troubleshooting easier when several channels serve the same endpoint.

- ✨ Forwarding API Services

- Compatible with multiple formats through the OpenAI universal format and multiple model compatibility layers, so one request format works with many AI models.

- Replace https://api.openai.com with https://api.chatnio.net (example) and use the API key from API Settings in the console; resetting the key is supported.

- Supported Formats

- [x] Chat Completions (/v1/chat/completions)

- [x] Image Generation (/v1/images)

- [x] Model List (/v1/models)

- [x] Dashboard Billing (/v1/billing)

- 🎃 More features waiting for your discovery...

- [x] OpenAI

- [x] Chat Completions (support vision, tools_calling and function_calling)

- [x] Image Generation

- [x] Azure OpenAI

- [x] Anthropic Claude (support vision)

- [x] Slack Claude (deprecated)

- [x] Sparkdesk (support function_calling)

- [x] Google Gemini (PaLM2)

- [x] New Bing (creative, balanced, precise)

- [x] ChatGLM (turbo, pro, std, lite)

- [x] DashScope Tongyi (plus, turbo)

- [x] Midjourney

- [x] Mode Toggling (relax, fast, turbo)

- [x] Support U/V/R Actions

- [x] Tencent Hunyuan

- [x] Baichuan AI

- [x] Moonshot AI

- [x] Groq Cloud AI

- [x] ByteDance Skylark (support function_calling)

- [x] 360 GPT

- [x] LocalAI (Stable Diffusion, RWKV, LLaMa 2, Baichuan 7b, Mixtral, ...) *requires local deployment

After successful deployment, the administrator account is root and the default password is chatnio123456.

- ⚡ Install with Docker Compose (Recommended)

After startup, the service is available on the host at http://localhost:8000.

    git clone --depth=1 --branch=main --single-branch https://github.com/Deeptrain-Community/chatnio.git
    cd chatnio
    docker-compose up -d # run the service
    # To use the stable version, replace with `docker-compose -f docker-compose.stable.yaml up -d`.
    # To enable automatic updates with Watchtower, replace with `docker-compose -f docker-compose.watch.yaml up -d`.

Version update (not needed when Watchtower auto-updating is enabled):

    docker-compose down
    docker-compose pull
    docker-compose up -d

- Mount directory for MySQL database: ~/db

- Mount directory for Redis database: ~/redis

- Mount directory for configuration files: ~/config

- ⚡ Docker Installation (lightweight; commonly used with an external MySQL/RDS service)

After startup, the service is available on the host at http://localhost:8094. To use the stable version, replace programzmh/chatnio:latest with programzmh/chatnio:stable.

    docker run -d --name chatnio \
      --network host \
      -v ~/config:/config \
      -v ~/logs:/logs \
      -v ~/storage:/storage \
      -e MYSQL_HOST=localhost \
      -e MYSQL_PORT=3306 \
      -e MYSQL_DB=chatnio \
      -e MYSQL_USER=root \
      -e MYSQL_PASSWORD=chatnio123456 \
      -e REDIS_HOST=localhost \
      -e REDIS_PORT=6379 \
      -e SECRET=secret \
      -e SERVE_STATIC=true \
      programzmh/chatnio:latest

- --network host: makes the container use the host network so it can reach services on the host. Modify this as needed.

- SECRET: the JWT secret key; set it to a randomly generated string.

- SERVE_STATIC: whether to serve the static frontend files (normally there is no need to change this; see the FAQ below).

- -v ~/config:/config mounts the host directory for configuration files, -v ~/logs:/logs mounts the host directory for log files, and -v ~/storage:/storage mounts the directory for files generated by additional features.

- You need to provide MySQL and Redis services yourself; adjust the environment variables above accordingly.

Version update (not needed when Watchtower auto-updating is enabled; after running these commands, run the docker run command above again):

    docker stop chatnio
    docker rm chatnio
    docker pull programzmh/chatnio:latest

- ⚒ Custom Build and Install (Highly Customizable)

After deployment, the default port is 8094 and the access address is http://localhost:8094. Options in the configuration file (~/config/config.yaml) can be overridden by environment variables; for example, the MYSQL_HOST environment variable overrides the mysql.host configuration item.

    git clone https://github.com/Deeptrain-Community/chatnio.git
    cd chatnio
    cd app
    npm install -g pnpm
    pnpm install
    pnpm build
    cd ..
    go build -o chatnio
    nohup ./chatnio > output.log & # run in the background with nohup

- Why does my deployed site load pages and allow sign-in, but chat does not work (keeps spinning)?

- Chat and similar features communicate over WebSocket, so make sure your server supports WebSocket. (Tip: transmission over HTTP is also possible, without WebSocket support.)

- If you use a reverse proxy such as Nginx or Apache, make sure WebSocket support is configured.

- If you use port mapping, port forwarding, a CDN, an API gateway, or similar services, make sure they support WebSocket and have it enabled.

- My Midjourney Proxy-format channel keeps spinning or shows the error "please provide available notify url"

- If it keeps spinning, make sure your Midjourney Proxy service is up and running and that you have configured the correct upstream address.

- For the channel type, choose Midjourney, not OpenAI (for some reason many people choose the OpenAI format and then report an empty response; mj-chat-style models are the exception).

- After resolving these issues, check that the backend domain is properly configured in System Settings. If it is not set, the Midjourney Proxy service may not work correctly.

- What external dependencies does this project have?

- MySQL: stores persistent data such as user information, chat records, and administrator details.

- Redis: stores user quick-authentication information, IP rate limits, subscription quotas, email verification codes, etc.

- If these are not set up correctly, the service may fail. Make sure your MySQL and Redis services are running properly (for the plain Docker deployment, you need to set up these external services manually).

- My server uses the ARM architecture. Does this project support ARM?

- Yes. Chat Nio uses Docker Buildx to build multi-arch images, so you can run it directly with docker-compose or docker without any additional configuration.

- For a build installation, you can compile directly on the ARM machine without additional configuration. To compile on an x86 machine, cross-compile with GOARCH=arm64 go build -o chatnio, then upload the binary to the ARM machine and run it.

- How do I change the default root password?

- Click the avatar in the top-right corner or the user box at the bottom of the sidebar to open backend management, then click Modify Root Password in the operation bar of General Settings under System Settings. Alternatively, change the root user's password in User Management.

- What is the backend domain in System Settings?

- The backend domain is the address of the backend API service, usually the site address followed by /api, such as https://example.com/api.

- If you use non-SERVE_STATIC mode (frontend and backend deployed separately), set the backend domain to the address of your backend API service, such as https://api.example.com.

- The backend domain is used as the callback address for the Midjourney Proxy service; if you do not use the Midjourney Proxy service, you can ignore this setting.

- How do I configure the payment method?

- The open-source version of Chat Nio supports the card issuance model: set the Purchase Link in System Settings to your card issuance address. Card codes can be generated in bulk through Gift Code Management in User Management.

- What is the difference between gift codes and redemption codes?

- A gift code can be bound only once by each user; it is not an aff code or a way to distribute benefits. Redeem it via the Gift Code option in the avatar dropdown menu.

- A redemption code can be used by multiple users and is suitable for normal purchases and card issuance. Generate codes in bulk through Redemption Code Management in User Management, and redeem them by entering the code in the Points option (the first item in the menu) under the avatar dropdown menu.

- For example, to hand out a benefit of the type Happy New Year, gift codes are recommended. Suppose there are 100 codes worth 66 points each: as redemption codes, a single quick user could redeem all of them (6,600 points), whereas gift codes ensure each user can redeem only one (66 points).

- For card issuance, the once-per-type limit of gift codes can cause redemption failures when a buyer purchases several codes; redemption codes work in this scenario.
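The difference can be modeled as follows, assuming each code is single-use and that a gift code additionally limits every user to one code per gift type (as in the Happy New Year example). The `Store`, `RedeemGift`, and `RedeemRedemption` names are hypothetical; this is a sketch of the rules, not Chat Nio's code.

```go
package main

import (
	"errors"
	"fmt"
)

// Code is a hypothetical gift or redemption code.
type Code struct {
	Value  string
	Type   string // e.g. "Happy New Year"
	Points float64
	Used   bool
}

type Store struct {
	codes       map[string]*Code
	giftClaimed map[string]bool // key: user + "\x00" + gift type
	balance     map[string]float64
}

func NewStore() *Store {
	return &Store{codes: map[string]*Code{}, giftClaimed: map[string]bool{}, balance: map[string]float64{}}
}

func (s *Store) Add(c Code) { s.codes[c.Value] = &c }

// RedeemRedemption: each code works once, but one user may redeem any number of codes.
func (s *Store) RedeemRedemption(user, value string) error {
	c, ok := s.codes[value]
	if !ok || c.Used {
		return errors.New("invalid or used code")
	}
	c.Used = true
	s.balance[user] += c.Points
	return nil
}

// RedeemGift: additionally limits each user to one code per gift type.
func (s *Store) RedeemGift(user, value string) error {
	c, ok := s.codes[value]
	if !ok || c.Used {
		return errors.New("invalid or used code")
	}
	k := user + "\x00" + c.Type
	if s.giftClaimed[k] {
		return errors.New("gift of this type already claimed")
	}
	s.giftClaimed[k] = true
	c.Used = true
	s.balance[user] += c.Points
	return nil
}

func main() {
	gifts, redeem := NewStore(), NewStore()
	for _, v := range []string{"A", "B"} {
		gifts.Add(Code{Value: v, Type: "Happy New Year", Points: 66})
		redeem.Add(Code{Value: v, Type: "Happy New Year", Points: 66})
	}
	fmt.Println(gifts.RedeemGift("alice", "A"), gifts.RedeemGift("alice", "B"))
	fmt.Println(redeem.RedeemRedemption("alice", "A"), redeem.RedeemRedemption("alice", "B"))
	fmt.Println(gifts.balance["alice"], redeem.balance["alice"]) // 66 132
}
```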

- Does the project support deployment on Vercel?

- Chat Nio itself cannot be deployed directly on Vercel, but you can use client-server separation mode: deploy the frontend on Vercel and the backend with Docker or a compiled binary.

- What is the client-server separation deployment mode?

- Normally, the frontend and backend run in the same service, with the backend available at /api. In client-server separation mode, they are deployed as separate services: the frontend as a static file service and the backend as an API service.

- For example, the frontend is deployed with Nginx (or Vercel) at a domain such as https://www.chatnio.net.

- The backend is deployed with Docker at a domain such as https://api.chatnio.net.

- You need to build the frontend yourself with the environment variable VITE_BACKEND_ENDPOINT set to your backend URL, such as https://api.chatnio.net.

- On the backend, set SERVE_STATIC=false so that it does not serve static files.

- Flexible Billing and Subscriptions Explained

- Billing on demand (points), shown with a cloud icon, is the universal pricing model, with a fixed rate of 10 points = 1 yuan (CNY). Custom exchange rates can be set in the billing rules' embedded templates.

- Subscriptions are fixed-price plans with per-item quotas. Users need enough points to subscribe (e.g. a plan costing 32 yuan requires at least 320 points). A subscription is a combination of Items, each defining covered models, a quota (-1 for unlimited), a name, an ID, an icon, and more. In subscription management you can enable subscriptions, set prices, edit the Items of each subscription level, and import Items from other levels.

- Besides regular users, there are three predefined subscription tiers: Regular Users (0), Basic Subscription (1), Standard Subscription (2), and Professional Subscription (3). Subscription levels also act as user groups: in channel management you can choose which user groups may access a channel's models.

- Subscription quotas can be managed, including whether API forwarding is allowed (off by default).
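The subscription model described above (10 points per yuan, Items with covered models and a quota where -1 means unlimited) can be sketched as plain data plus two checks. The `Plan`/`Item` types, their fields, and the `CanSubscribe`/`Remaining` helpers are hypothetical; how Chat Nio resets or tracks usage per billing period is not described here, so usage is simply passed in.

```go
package main

import "fmt"

const pointsPerYuan = 10 // fixed rate stated in the README

// Item is one quota line of a subscription plan (hypothetical shape).
type Item struct {
	ID     string
	Models []string
	Quota  int // -1 = unlimited
}

// Plan is a subscription level with a price and its Items.
type Plan struct {
	Level     int // 1 = Basic, 2 = Standard, 3 = Professional
	PriceYuan float64
	Items     []Item
}

// CanSubscribe reports whether the user's points cover the plan price.
func CanSubscribe(points float64, p Plan) bool {
	return points >= p.PriceYuan*pointsPerYuan
}

// Remaining returns the quota left for model (-1 = unlimited) and whether
// any Item in the plan covers the model. used is keyed by Item ID.
func Remaining(p Plan, model string, used map[string]int) (remaining int, ok bool) {
	for _, it := range p.Items {
		for _, m := range it.Models {
			if m == model {
				if it.Quota == -1 {
					return -1, true
				}
				return it.Quota - used[it.ID], true
			}
		}
	}
	return 0, false
}

func main() {
	basic := Plan{Level: 1, PriceYuan: 32, Items: []Item{
		{ID: "gpt-4", Models: []string{"gpt-4", "gpt-4-turbo"}, Quota: 50},
		{ID: "gpt-3.5", Models: []string{"gpt-3.5-turbo"}, Quota: -1},
	}}
	fmt.Println(CanSubscribe(319, basic), CanSubscribe(320, basic))            // false true
	fmt.Println(Remaining(basic, "gpt-4-turbo", map[string]int{"gpt-4": 20})) // 30 true
}
```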

- Minimum Points Check ("User Quota Insufficient")

- To prevent abuse, if a user's points fall below a model's minimum request threshold, an insufficient-points error is returned. Requests at or above the minimum are processed normally.

- Minimum request points per model:

- Free models: no limit.

- Per-use models: the price of one request (e.g. if a model costs 0.1 points per use, the minimum is 0.1 points).

- Token-based flexible billing models: the price of 1K input tokens plus 1K output tokens (e.g. if 1K input tokens cost 0.05 points and 1K output tokens cost 0.1 points, the minimum is 0.15 points).
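The three minimum-points rules can be written as one small function. This is an illustrative sketch; `Price`, `BillingType`, and the helpers are hypothetical names. It compares with a small tolerance because 0.05 + 0.1 need not be exactly 0.15 in floating point; a real billing system might store points as integers instead.

```go
package main

import "fmt"

// BillingType is a hypothetical enum for the three pricing modes in the README.
type BillingType int

const (
	Free BillingType = iota
	PerUse
	PerToken
)

// Price holds a model's pricing in points.
type Price struct {
	Type     BillingType
	UsePrice float64 // points per request (PerUse)
	Input1K  float64 // points per 1K input tokens (PerToken)
	Output1K float64 // points per 1K output tokens (PerToken)
}

// MinimumPoints returns the balance a user must hold before a request is accepted.
func MinimumPoints(p Price) float64 {
	switch p.Type {
	case PerUse:
		return p.UsePrice
	case PerToken:
		return p.Input1K + p.Output1K
	default: // Free
		return 0
	}
}

// epsilon absorbs floating-point rounding in the sums above.
const epsilon = 1e-9

// Allowed reports whether the balance is at or above the minimum.
func Allowed(balance float64, p Price) bool {
	return p.Type == Free || balance+epsilon >= MinimumPoints(p)
}

func main() {
	tok := Price{Type: PerToken, Input1K: 0.05, Output1K: 0.1}
	fmt.Printf("%.2f\n", MinimumPoints(tok))                      // 0.15
	fmt.Println(Allowed(0.15, tok), Allowed(0.14, tok))           // true false
	fmt.Println(Allowed(0.09, Price{Type: PerUse, UsePrice: 0.1})) // false
}
```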

- Notes on setting up the DuckDuckGo API

- First, thanks to the author Binjie for the duckduckgo-api project, which provides Chat Nio's web search function (implemented via prompts).

- You need to host the DDG API service yourself; the author Binjie's default site often runs out of quota. Deploy your own instance and configure it in the network settings under System Settings.

- DuckDuckGo is not accessible from mainland China's network; use a proxy or an overseas server to host the DDG API endpoint.

- After deploying, open https://your.domain/search?q=hi to check that the setup works. If it cannot be accessed, check whether your DDG API service is running properly or ask the original project for help.

- Then go to the network settings in System Settings and set your DDG API endpoint address (without the /search suffix). The maximum number of results defaults to 5 (0 or negative values also fall back to 5).

- You can then enable web search in the chat. If it still does not work, the problem usually lies with the model (e.g. GPT-3.5 sometimes fails to understand the prompt).

- This web search is implemented through a preset, so it works with every model and does not rely on model Function Calling; it favors compatibility over sensitivity. Models that natively support web access can simply turn this feature off.
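The two configuration rules above (enter the endpoint without /search; 0 or negative result counts fall back to 5) can be captured in two helpers. This is a hypothetical sketch: `searchURL` and `maxResults` are illustrative names, and only the q query parameter comes from the README; any other DDG API parameters are not covered here.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// searchURL builds the DDG API test/query URL from the configured endpoint.
// A trailing slash or an accidentally included /search suffix is tolerated.
func searchURL(endpoint, query string) string {
	base := strings.TrimRight(endpoint, "/")
	base = strings.TrimSuffix(base, "/search")
	return base + "/search?q=" + url.QueryEscape(query)
}

// maxResults applies the documented default: 0 or negative means 5.
func maxResults(n int) int {
	if n <= 0 {
		return 5
	}
	return n
}

func main() {
	fmt.Println(searchURL("https://your.domain", "hi")) // https://your.domain/search?q=hi
	fmt.Println(maxResults(0), maxResults(8))           // 5 8
}
```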

- Why can't my GPT-4-All and other reverse-engineered models use the images in uploaded files?

- Uploaded images are sent in Base64 format. If the reverse-engineered engine does not support Base64, use a direct image URL instead of uploading the file.

- How do I enable strict cross-origin checking?

- By default, the backend accepts cross-origin requests from all domains; there is no need to enable strict checking unless you have a specific requirement.

- To enable it, set the backend environment variable ALLOW_ORIGINS, e.g. ALLOW_ORIGINS=chatnio.net,chatnio.app (no protocol prefix is needed, and www subdomains need not be added manually; the backend recognizes and allows them automatically). With this setting, http://www.chatnio.app and https://chatnio.net are allowed, for example, and other domains are rejected.

- Even with strict checking enabled, the /v1 endpoints still accept cross-origin requests from all domains so that the relay API keeps working.
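The cross-origin rules above can be expressed as a single predicate. This is a hypothetical restatement of the documented behavior, not Chat Nio's middleware: an empty list allows every origin, /v1 paths are always open, the scheme is ignored, and a leading www. is stripped before comparing against ALLOW_ORIGINS. The /api/chat path in the example is only illustrative.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// parseAllowOrigins splits a comma-separated ALLOW_ORIGINS value.
func parseAllowOrigins(v string) []string {
	var out []string
	for _, d := range strings.Split(v, ",") {
		if d = strings.TrimSpace(d); d != "" {
			out = append(out, d)
		}
	}
	return out
}

// isRelayPath reports whether the request targets the /v1 relay API.
func isRelayPath(path string) bool {
	return path == "/v1" || strings.HasPrefix(path, "/v1/")
}

// allowedOrigin applies the documented strict cross-origin rules.
func allowedOrigin(allowList []string, origin, path string) bool {
	if len(allowList) == 0 || isRelayPath(path) {
		return true // strict mode off, or relay API: every origin is allowed
	}
	u, err := url.Parse(origin)
	if err != nil || u.Host == "" {
		return false
	}
	host := strings.TrimPrefix(u.Hostname(), "www.") // www is handled automatically
	for _, d := range allowList {
		if host == d {
			return true
		}
	}
	return false
}

func main() {
	list := parseAllowOrigins("chatnio.net,chatnio.app")
	fmt.Println(allowedOrigin(list, "http://www.chatnio.app", "/api/chat")) // true
	fmt.Println(allowedOrigin(list, "https://chatnio.net", "/api/chat"))    // true
	fmt.Println(allowedOrigin(list, "https://evil.example", "/api/chat"))   // false
	fmt.Println(allowedOrigin(list, "https://evil.example", "/v1/models"))  // true
}
```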

- How do I use the model mapping feature?

- Model mappings within a channel use the format [from]>[to], one mapping per line. from is the model requested by the user; to is the model actually sent upstream, which the upstream must support.

- For example, for a reverse-engineered channel, entering gpt-4-all>gpt-4 means that when a user requests gpt-4-all from this channel, the backend maps it to gpt-4 and requests gpt-4 from the channel. The channel then supports 2 models, gpt-4 and gpt-4-all (both are essentially gpt-4).

- If you do not want this reverse-engineered channel to affect the gpt-4 channel group, add the prefix ! as in !gpt-4-all>gpt-4. The channel's gpt-4 is then ignored, and the channel supports only 1 model, gpt-4-all (which is still essentially gpt-4).

- Frontend: React + Radix UI + Tailwind CSS + Shadcn + Tremor + Redux

- Backend: Golang + Gin + Redis + MySQL

- Application Technology: PWA + WebSocket

The APIs are divided into the relay (gateway) API and Chat Nio's exclusive feature APIs.

The relay API uses the general OpenAI format and supports various models; for details, see the OpenAI API documentation and SDKs.

The following SDKs cover Chat Nio's exclusive feature APIs:

- JavaScript SDK

- Python SDK

- Golang SDK

- Java SDK (Thanks to @hujiayucc)

- PHP SDK (Thanks to @hujiayucc)

Here, front-end projects focus on the user chat interface, back-end projects focus on API relay and management, and one-stop projects include both the user chat interface and API relay and management.

- Next Chat @yidadaa (Front-end Oriented Projects)

- Lobe Chat @arvinxx (Front-end Oriented Projects)

- Chat Box @bin-huang (Front-end Oriented Projects)

- OpenAI Forward @kenyony (Back-end Oriented Projects)

- One API @justsong (Back-end Oriented Projects)

- New API @calon (Back-end Oriented Projects)

- FastGPT @labring (Knowledge Base)

- Quivr @quivrhq (Knowledge Base)

- Bingo @weaigc (Knowledge Base)

- Midjourney Proxy @novicezk (Model Library)

Apache License 2.0

- LightXi: provides font CDN support

- BootCDN & Static File: provide resource CDN support

Chat Nio leans towards a one-stop service, integrating user chat interface and API intermediary and management projects.

- Compared with front-end-oriented, lightweight projects such as NextChat, Chat Nio offers more convenient cloud synchronization, account management, richer sharing features, and a billing management system.

- Compared with back-end-oriented, lightweight projects such as OneAPI, Chat Nio offers a richer user interface, a more complete channel management system, richer user management, and a subscription management system aimed at end users.

The advantage of a one-stop service is that users can do everything on one site without switching between sites. For example, they can easily check their points, the points consumed by each message, and their subscription quotas. Alongside the chat interface, it also exposes the relay API and Chat Nio's exclusive feature APIs.

At the same time, we strive to make Chat Nio > Next Chat + One API, with richer features and a more polished experience.

Additionally, since the developers are still in school, development progress may be affected. If we consider an issue non-essential, we may postpone it or close it directly, and we do not accept any form of urging. PR contributions are very welcome, and we are very grateful for them.


Alternative AI tools for chatnio

Similar Open Source Tools

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

Stable-Diffusion-Android

Stable Diffusion AI is an easy-to-use app for generating images from text or other images. It allows communication with servers powered by various AI technologies like AI Horde, Hugging Face Inference API, OpenAI, StabilityAI, and LocalDiffusion. The app supports Txt2Img and Img2Img modes, positive and negative prompts, dynamic size and sampling methods, unique seed input, and batch image generation. Users can also inpaint images, select faces from gallery or camera, and export images. The app offers settings for server URL, SD Model selection, auto-saving images, and clearing cache.

restai

RestAI is an AIaaS (AI as a Service) platform that allows users to create and consume AI agents (projects) using a simple REST API. It supports various types of agents, including RAG (Retrieval-Augmented Generation), RAGSQL (RAG for SQL), inference, vision, and router. RestAI features automatic VRAM management, support for any public LLM supported by LlamaIndex or any local LLM supported by Ollama, a user-friendly API with Swagger documentation, and a frontend for easy access. It also provides evaluation capabilities for RAG agents using deepeval.

ai_automation_suggester

An integration for Home Assistant that leverages AI models to understand your unique home environment and propose intelligent automations. By analyzing your entities, devices, areas, and existing automations, the AI Automation Suggester helps you discover new, context-aware use cases you might not have considered, ultimately streamlining your home management and improving efficiency, comfort, and convenience. The tool acts as a personal automation consultant, providing actionable YAML-based automations that can save energy, improve security, enhance comfort, and reduce manual intervention. It turns the complexity of a large Home Assistant environment into actionable insights and tangible benefits.

KlicStudio

Klic Studio is a versatile audio and video localization and enhancement solution developed by Krillin AI. This minimalist yet powerful tool integrates video translation, dubbing, and voice cloning, supporting both landscape and portrait formats. With an end-to-end workflow, users can transform raw materials into beautiful, ready-to-use cross-platform content with just a few clicks. The tool offers features like video acquisition, accurate speech recognition, intelligent segmentation, terminology replacement, professional translation, voice cloning, video composition, and cross-platform support. It also supports various speech recognition services, large language models, and TTS text-to-speech services. Users can easily deploy the tool using Docker and configure it for different tasks like subtitle translation, large model translation, and optional voice services.

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.
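OllamaSharp wraps Ollama's HTTP API, including its streaming endpoints. As an illustration of what that streaming support handles (this is a Python sketch, not OllamaSharp code), the function below reads a streamed `/api/generate` response, which Ollama sends as one JSON object per line, each carrying a `response` text fragment and a `done` flag. It assumes the byte lines come from any HTTP client that can iterate over a response line by line.

```python
import json
from typing import Iterable, Iterator


def iter_generate_tokens(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from a streamed Ollama /api/generate response.

    Each non-blank line is a standalone JSON object; streaming stops at the
    first object whose "done" field is true.
    """
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # tolerate blank lines between objects
        chunk = json.loads(line)
        if chunk.get("response"):
            yield chunk["response"]
        if chunk.get("done"):
            break
```

Joining the yielded fragments reconstructs the full completion, while printing them as they arrive gives the real-time output that a streaming client exposes.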

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub bot for requesting changes that runs entirely in GitHub Actions. In-progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

KrillinAI

KrillinAI is a video subtitle translation and dubbing tool based on AI large models, featuring speech recognition, intelligent sentence segmentation, professional translation, and one-click deployment of the entire process. It provides a one-stop workflow from video downloading to the final product, empowering cross-language cultural communication with AI. The tool supports multiple languages for input and translation, integrates features like automatic dependency installation, video downloading from platforms like YouTube and Bilibili, high-speed subtitle recognition, intelligent subtitle segmentation and alignment, custom vocabulary replacement, professional-level translation engine, and diverse external service selection for speech and large model services.
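Subtitle tools in this space commonly emit SubRip (SRT) files. As a minimal sketch, assuming recognized and segmented speech arrives as `(start_seconds, end_seconds, text)` tuples (a hypothetical structure, not KrillinAI's internal format), the functions below render segments as numbered SRT cues with SRT's `HH:MM:SS,mmm` timestamps.

```python
def format_srt_timestamp(seconds: float) -> str:
    """Format a non-negative time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[tuple[float, float, str]]) -> str:
    """Render hypothetical (start, end, text) segments as SRT cues numbered from 1."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n"
            f"{format_srt_timestamp(start)} --> {format_srt_timestamp(end)}\n"
            f"{text}\n"
        )
    # SRT separates consecutive cues with a blank line.
    return "\n".join(blocks)
```

For example, `to_srt([(0.0, 1.25, "Hello")])` produces a single cue numbered `1` spanning `00:00:00,000 --> 00:00:01,250`. Translated subtitles can reuse the same timestamps with the text swapped out.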

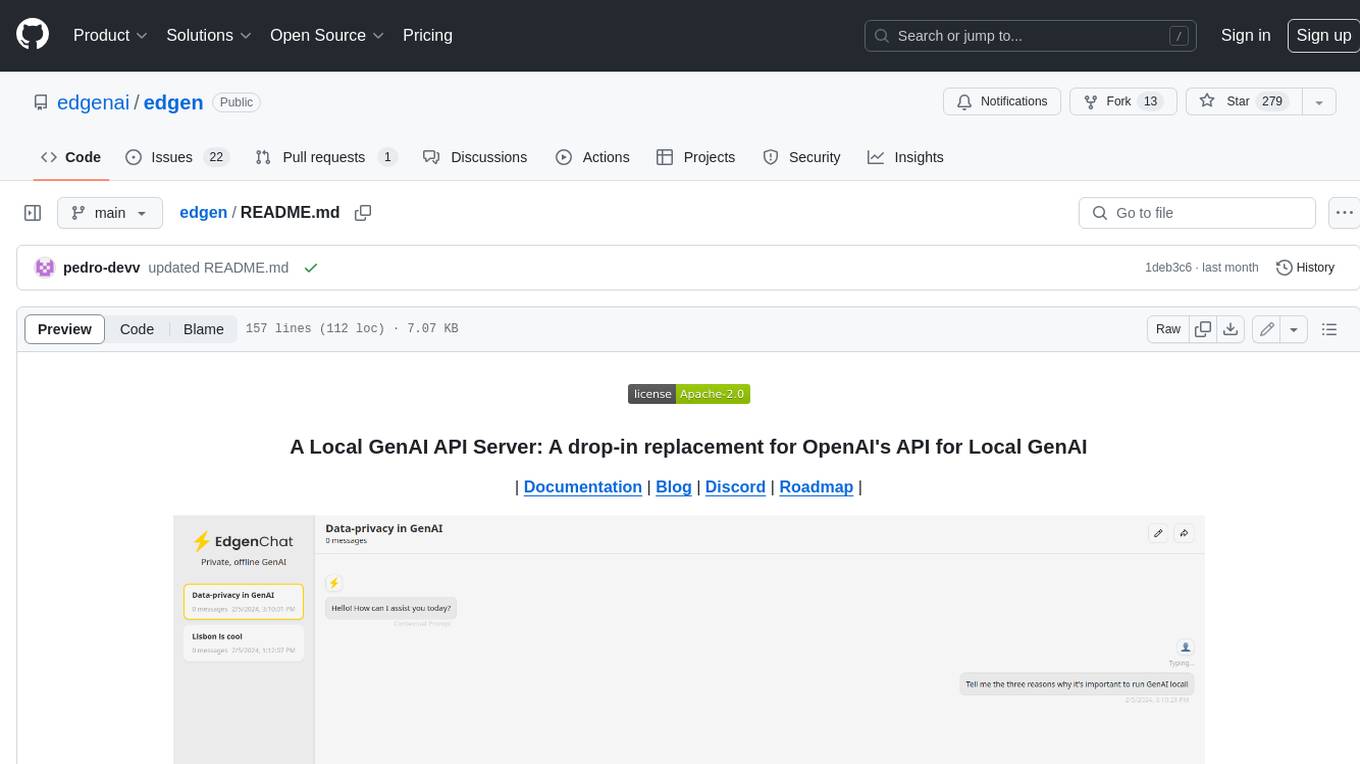

edgen

Edgen is a local GenAI API server that serves as a drop-in replacement for OpenAI's API. It provides multi-endpoint support for chat completions and speech-to-text, is model agnostic, offers optimized inference, and features model caching. Built in Rust, Edgen is natively compiled for Windows, macOS, and Linux, eliminating the need for Docker. It allows users to run GenAI locally on their own devices, for free and with data privacy. With features like session caching, GPU support, and support for various endpoints, Edgen offers a scalable, reliable, and cost-effective solution for running GenAI applications locally.
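Because Edgen is described as a drop-in replacement for OpenAI's API, an existing client should mainly need a different base URL. The sketch below builds (but does not send) an OpenAI-style `POST /chat/completions` request using only the Python standard library. The base URL `http://localhost:8080/v1`, the model name `"default"`, and the placeholder API key are assumptions for illustration, not Edgen's documented defaults.

```python
import json
import urllib.request

# Hypothetical local address; replace it with wherever your server actually listens.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(
    base_url: str, model: str, user_message: str, api_key: str = "unused"
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # OpenAI clients send a bearer token; a local server may ignore it.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(BASE_URL, "default", "Hello!")
    print(req.get_method(), req.full_url)
    # Sending it would be: urllib.request.urlopen(req)
```

Keeping the base URL as the only server-specific value is what makes switching between a hosted and a local backend a one-line configuration change.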

hal-9100

This repository is now archived and the code is privately maintained. If you are interested in this infrastructure, please contact the maintainer directly.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.
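Kollektiv's description mentions multi-query expansion followed by re-ranking. One common way to merge the ranked lists retrieved for several rewritten queries is reciprocal rank fusion (RRF). The sketch below shows that technique in isolation; it is not Kollektiv's implementation, and the constant `k = 60` is the value commonly used with RRF, assumed here.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists of document IDs with reciprocal rank fusion.

    Each document scores sum(1 / (k + rank)) over every list it appears in,
    with ranks starting at 1. Higher scores come first.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # sorted() is stable even with reverse=True, so ties keep first-seen order.
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)
```

Documents retrieved by several query variants accumulate score, so a passage that ranks moderately well for every rewrite can outrank one that appears at the top of a single list. A cross-encoder or LLM re-ranker could then be applied to the fused top results.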

Easy-Translate

Easy-Translate is a script designed for translating large text files with a single command. It supports various models like M2M100, NLLB200, SeamlessM4T, LLaMA, and Bloom. The tool is beginner-friendly and offers seamless and customizable features for advanced users. It allows acceleration on CPU, multi-CPU, GPU, multi-GPU, and TPU, with support for different precisions and decoding strategies. Easy-Translate also provides an evaluation script for translations. Built on HuggingFace's Transformers and Accelerate library, it supports prompt usage and loading huge models efficiently.

burpference

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.

portia-sdk-python

Portia AI is an open source developer framework for predictable, stateful, authenticated agentic workflows. It gives developers oversight over their multi-agent deployments and focuses on production readiness. The framework supports iterating on agents' reasoning, offers extensive tool support including MCP, provides authentication for API and web agents, and is production-ready, with features such as attributing multi-agent runs, storing large inputs and outputs, and connecting any LLM. Portia AI aims to provide a flexible and reliable platform for developing AI agents with tools, authentication, and smart control.

For similar tasks

blog

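This is a programmer's record of learning ChatGPT, covering usage tips, a collection of related tools and resources, and some personal insights and reflections.

**Usage tips**

* **Topping up the OpenAI API**: You can top up at https://beta.openai.com/account/api-keys; credit cards and PayPal are supported.
* **Using a dedicated proxy line**: A stable dedicated proxy line is recommended; it can noticeably improve ChatGPT's access speed and stability.
* **Using "magic" (a proxy)**: ChatGPT can be accessed through https://my.x-air.app:666/#/register?aff=32853 without needing a proxy.
* **Downloading APKs**: APK files for all kinds of Android apps can be downloaded from https://apkcombo.com.
* **ChatGPT official website**: The official ChatGPT website is https://ai.com.
* **Midjourney**: Midjourney is a generative AI image platform, available at https://midjourney.com.
* **Text to video**: https://www.d-id.com converts text into video.
* **Chinese large models**: China also has many large models, such as Alibaba's Tongyi Qianwen, Baidu's ERNIE Bot (Wenxin Yiyan), iFlytek Spark, and Alibaba's Tongyi Tingwu.
* **Checking OpenAI status**: OpenAI's service status is shown at https://status.openai.com/.
* **Drawing with Canva**: Canva is an online graphic design platform; you can draw at https://www.canva.cn.

**Related tools and resources**

* **Text to speech**: Text-to-speech models can be found at https://modelscope.cn/models?page=1&tasks=text-to-speech&type=audio.
* **Projects worth playing with**:
  * https://github.com/sunner/ChatALL
  * https://github.com/labring/FastGPT
  * https://github.com/songquanpeng/one-api
* **Personal blogs**:
  * https://baoyu.io/
  * https://gorden-sun.notion.site/527689cd2b294e60912f040095e803c5?v=4f6cc12006c94f47aee4dc909511aeb5
* **SRT to LRC lyrics**: https://gotranscript.com/subtitle-converter converts SRT subtitles into LRC lyrics.
* **Five rate limits**: The OpenAI API has five kinds of rate limits: RPM (requests per minute), RPD (requests per day), TPM (tokens per minute), TPD (tokens per day), and IPM (images per minute).
* **Coze platform**: coze.cn is the Coze platform, which provides a variety of Coze bots.
* **Free GPT-3.5 through cloud functions**: See https://juejin.cn/post/7353849549540589587 for using GPT-3.5 for free.
* **Busuanzi page-view counter**: https://busuanzi.ibruce.info/ counts visits to a web page.
* **Video summary and translation webpage**: https://glarity.app/zh-CN summarizes and translates videos.
* **Video translation and dubbing tool**: https://github.com/jianchang512/pyvideotrans translates and dubs videos.
* **Text to audio**: See https://www.cnblogs.com/jijunjian/p/18118366 for generating audio from text.
* **memo ai**: memo.ac is a multimodal AI platform that converts video links, podcast links, and local audio and video into text, supports translation after multilingual transcription, and can also turn text into new audio.
* **Video summary tool**: https://summarize.ing/ summarizes videos.
* **Free to try every day**: https://www.perplexity.ai/ can be used for free every day.
* **Suno.ai**: Suno.ai is an AI language model, accessible via https://bibigpt.co/.
* **CapCut**: CapCut is video editing software, downloadable from https://www.capcut.cn/.
* **Valla.ai**: Valla.ai is a multimodal AI model, available at https://www.valla.ai/.
* **Viggle.ai**: Viggle.ai is an AI video generation platform, available at https://viggle.ai.
* **Deploying text-to-image models on free GPUs**: See https://www.cnblogs.com/xuxiaona/p/18088404.
* **Speech to text**: https://speech.microsoft.com/portal converts speech into text.
* **AI for investing**: https://reportify.cc/ covers AI in the investment world.
* **Scraping short-video apps**: https://github.com/NanmiCoder/MediaCrawler scrapes all kinds of information from short-video apps.
* **Musk's Grok-1 open-sourced**: Musk's Grok-1 model has been open-sourced and is available at https://github.com/xai-org/grok-1.
* **ChatALL**: ChatALL is a cross-platform chatbot, available at https://github.com/sunner/ChatALL.
* **01.AI (Lingyi Wanwu)**: 01.AI is an AI platform, available at https://www.01.ai/cn.
* **Zhipu**: Zhipu is an AI language model, available at https://chatglm.cn/.
* **memo ai download**: memo ai can be downloaded from https://memo.ac/.
* **Learning ffmpeg**: Learn ffmpeg at https://www.ruanyifeng.com/blog/2020/01/ffmpeg.html.
* **Automatic article-generation tool**: https://www.cognition-labs.com/blog generates articles.
* **Simple online store**: See https://www.cnblogs.com/whuanle/p/18086537 for building a simple online store.
* **IoT**: Learn about the Internet of Things at https://www.cnblogs.com/xuxiaona/p/18088404.
* **Custom forms, lists, uploads and downloads, workflows, and reports**: See https://www.cnblogs.com/whuanle/p/18086537 for implementing custom forms, custom lists, custom uploads and downloads, custom workflows, and custom reports.

**Personal insights and reflections**

* ChatGPT is a powerful tool that can improve work efficiency and creativity.
* ChatGPT has a low barrier to entry; even non-technical people can pick it up easily.
* ChatGPT is developing very quickly and may have a profound impact on many industries in the future.
* We should view ChatGPT rationally, recognizing both its strengths and its limitations.
* We should actively explore ChatGPT's use cases and create value for society.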
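The OpenAI rate limits mentioned in this entry (RPM, RPD, TPM, TPD, IPM) are all counts over a time window. A minimal client-side sketch, assuming a 60-second sliding window and caller-supplied token estimates, checks whether a new request would exceed either the per-minute request limit (RPM) or the per-minute token limit (TPM) before it is sent. The limit values in the example are hypothetical placeholders; real limits depend on the account and model.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Client-side check for per-window request (RPM) and token (TPM) limits."""

    def __init__(self, rpm: int, tpm: int, window: float = 60.0, clock=time.monotonic):
        self.rpm = rpm
        self.tpm = tpm
        self.window = window
        self.clock = clock
        self._events = deque()  # (timestamp, tokens) for each admitted request
        self._tokens_in_window = 0

    def _evict(self, now: float) -> None:
        # Forget requests that have slid out of the window.
        while self._events and now - self._events[0][0] >= self.window:
            _, tokens = self._events.popleft()
            self._tokens_in_window -= tokens

    def try_acquire(self, tokens: int) -> bool:
        """Admit and record the request if it fits both limits; otherwise return False."""
        now = self.clock()
        self._evict(now)
        if len(self._events) + 1 > self.rpm:
            return False
        if self._tokens_in_window + tokens > self.tpm:
            return False
        self._events.append((now, tokens))
        self._tokens_in_window += tokens
        return True


limiter = SlidingWindowLimiter(rpm=3, tpm=1_000)  # hypothetical limits
if not limiter.try_acquire(tokens=250):
    print("would exceed RPM or TPM; wait before retrying")
```

The daily limits (RPD, TPD) follow the same pattern with a 24-hour window, and IPM would count images instead of tokens.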

chat-ui

A chat interface using open source models, e.g., OpenAssistant or Llama. It is a SvelteKit app, and it powers the HuggingChat app on hf.co/chat.

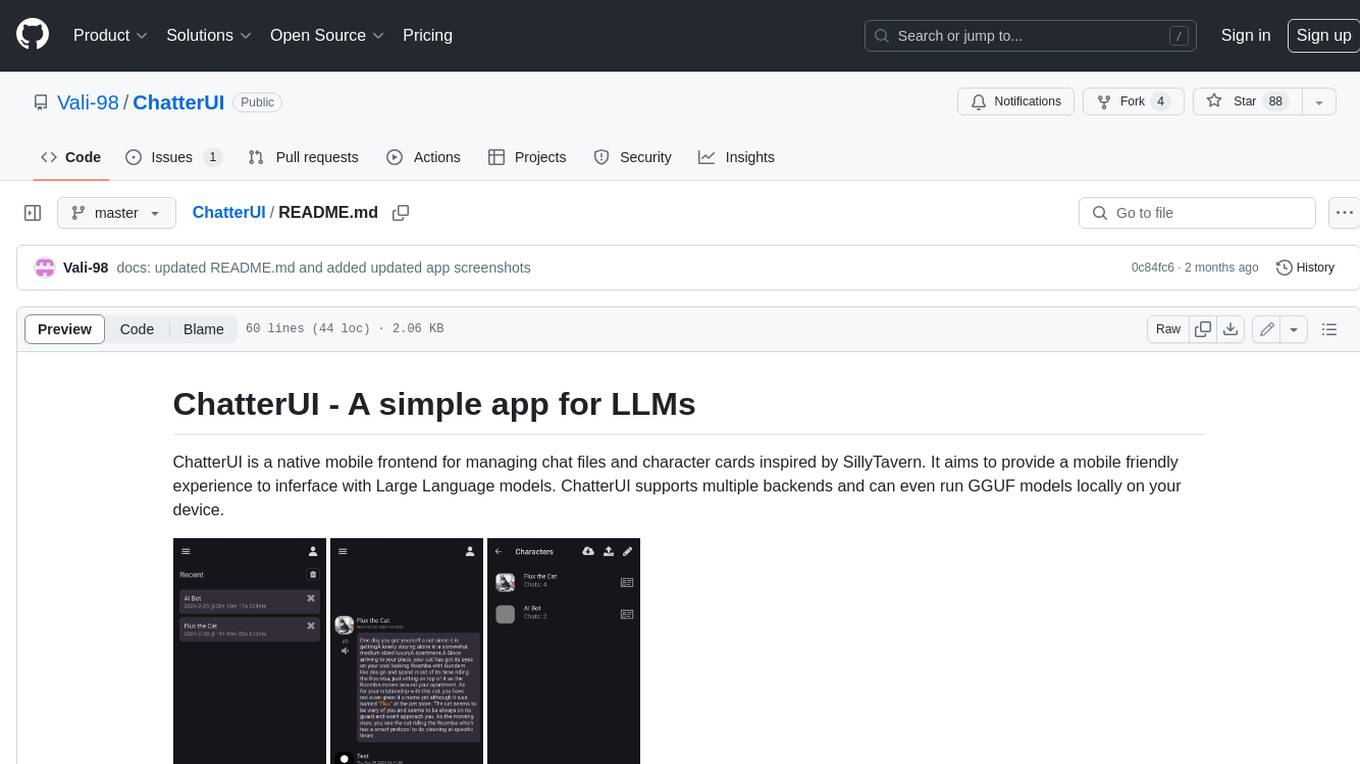

ChatterUI

ChatterUI is a mobile app that allows users to manage chat files and character cards, and to interact with Large Language Models (LLMs). It supports multiple backends, including local, koboldcpp, text-generation-webui, Generic Text Completions, AI Horde, Mancer, Open Router, and OpenAI. ChatterUI provides a mobile-friendly interface for interacting with LLMs, making it easy to use them for a variety of tasks, such as generating text, translating languages, writing code, and answering questions.

99AI

99AI is a commercially usable AI web application based on NineAI 2.4.2 (no authorization required, no backdoors, no piracy; ships integrated front-end and back-end packages; supports rapid Docker deployment). The uncompiled source code is temporarily not public. Compared with the stable version, the development version is faster.

chatnio

Chat Nio is a next-generation AI one-stop solution that provides a rich and user-friendly interface for interacting with various AI models. It offers features such as AI chat conversation, rich format compatibility, markdown support, message menu support, multi-platform adaptation, dialogue memory, full-model file parsing, full-model DuckDuckGo online search, full-screen large text editing, a model marketplace, preset support, site announcements, preference settings, internationalization support, and a rich admin system. Chat Nio also has a powerful channel management system built on a self-developed channel distribution algorithm: it supports multi-channel management, is compatible with multiple formats, allows custom models, supports channel retries, balances load within the same channel, and provides channel model mapping and user grouping. Additionally, Chat Nio offers an API forwarding service that uses the universal OpenAI format, is compatible with multiple formats, and supports multiple model compatibility layers. It also provides a custom build and install option for highly customizable deployments. Chat Nio is an open-source project licensed under the Apache License 2.0 and welcomes contributions from the community.
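Chat Nio's channel distribution algorithm is self-developed and not described here, so the following is only a generic sketch of the ideas the description lists: model mapping, priority ordering, weighted load balancing within a priority level, and retries on failure. The `Channel` fields (`priority`, `weight`, `models`, `model_mapping`), the "higher priority first" rule, and the `send` callback are hypothetical names and choices for illustration, not Chat Nio's schema or API.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Channel:
    """Hypothetical channel record; field names are illustrative only."""

    name: str
    priority: int  # assumption: higher levels are tried first
    weight: int  # relative traffic share within one priority level; must be > 0
    models: set[str]
    model_mapping: dict[str, str] = field(default_factory=dict)  # requested -> upstream name


def dispatch(
    channels: list[Channel],
    model: str,
    send: Callable[[Channel, str], str],
    rng: Optional[random.Random] = None,
) -> str:
    """Route one request for `model`, retrying on other channels when `send` raises."""
    if rng is None:
        rng = random.Random()
    candidates = [c for c in channels if model in c.models]
    errors: list[str] = []
    # Walk priority levels from highest to lowest.
    for level in sorted({c.priority for c in candidates}, reverse=True):
        pool = [c for c in candidates if c.priority == level]
        while pool:
            # A weighted random pick spreads load across channels of equal priority.
            chosen = rng.choices(pool, weights=[c.weight for c in pool], k=1)[0]
            pool.remove(chosen)
            upstream = chosen.model_mapping.get(model, model)
            try:
                return send(chosen, upstream)
            except Exception as exc:  # record the failure and retry on the next candidate
                errors.append(f"{chosen.name}: {exc}")
    raise RuntimeError(f"all channels failed for {model!r}: {errors}")
```

Removing a failed channel from its pool before retrying guarantees each channel is tried at most once per request, so the loop always terminates.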

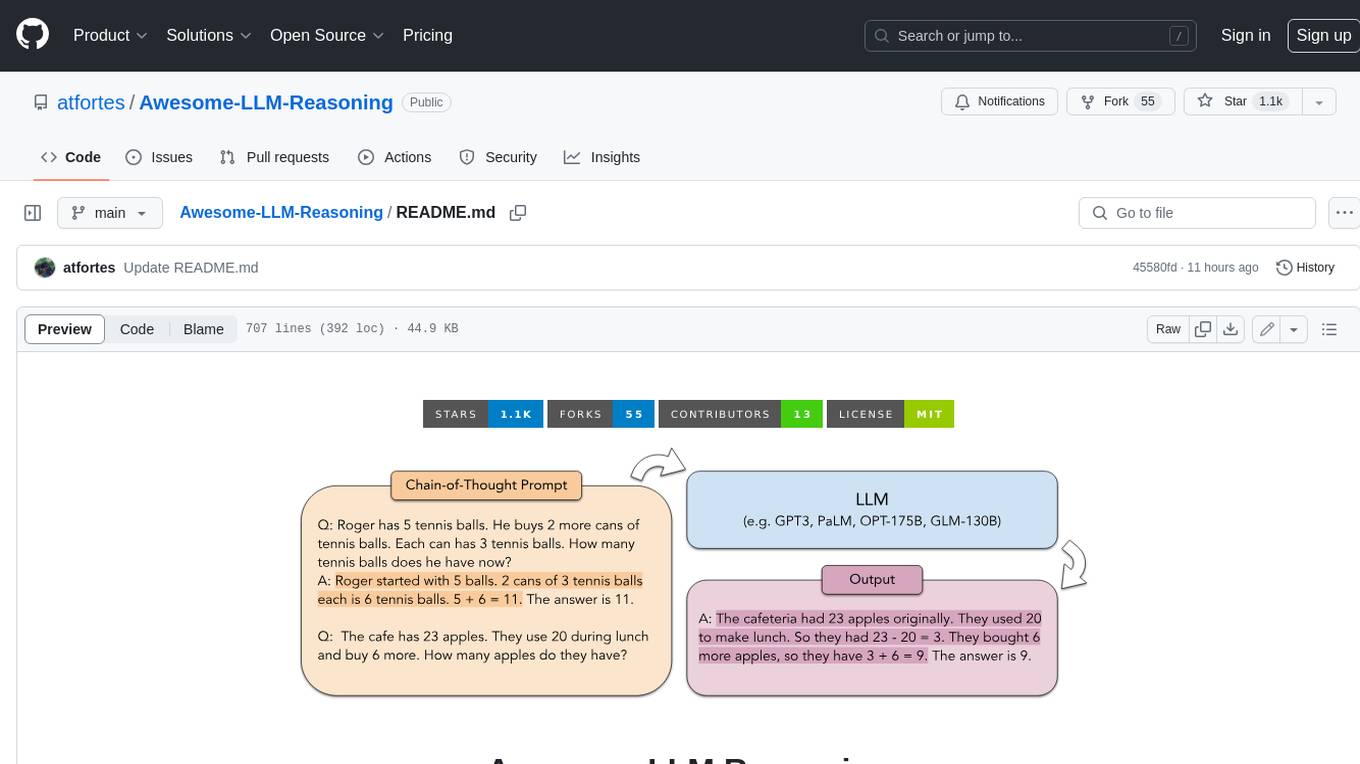

Awesome-LLM-Reasoning

**Curated collection of papers and resources on how to unlock the reasoning ability of LLMs and MLLMs.** Large Language Models (LLMs) have revolutionized the NLP landscape, showing improved performance and sample efficiency over smaller models. However, increasing model size alone has not proved sufficient for high performance on challenging reasoning tasks, such as solving arithmetic or commonsense problems. This curated collection of papers and resources presents the latest advancements in unlocking the reasoning abilities of LLMs and Multimodal LLMs (MLLMs). It covers various techniques, benchmarks, and applications, providing a comprehensive overview of the field. Keywords: llm, reasoning, multimodal, chain-of-thought, prompt engineering.

Chinese-LLaMA-Alpaca-2

Chinese-LLaMA-Alpaca-2 is a family of Chinese large language models built on Meta's Llama-2 and further trained on a large dataset of Chinese text. It can be used for a variety of natural language processing tasks, including text generation, question answering, and machine translation. Key features include:

* Model sizes include a 13-billion-parameter variant.
* It is trained on a large dataset of Chinese text, including books, news articles, and social media posts.
* It is open source and available for anyone to use.

Chinese-LLaMA-Alpaca-2 can improve performance across a wide range of natural language processing tasks and is a valuable resource for researchers and developers working in artificial intelligence.

Linly-Talker

Linly-Talker is an innovative digital human conversation system that integrates the latest artificial intelligence technologies, including Large Language Models (LLM) 🤖, Automatic Speech Recognition (ASR) 🎙️, Text-to-Speech (TTS) 🗣️, and voice cloning technology 🎤. This system offers an interactive web interface through the Gradio platform 🌐, allowing users to upload images 📷 and engage in personalized dialogues with AI 💬.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, provides preset configurations so that deployment parameters do not have to be tuned to fit the GPU hardware, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. With Kaito, the workflow for onboarding large AI inference models in Kubernetes is greatly simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI red teaming tasks so that operators can focus on more complicated and time-consuming work, and it can identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline perform against different harm categories, and to let them compare that baseline with future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any performance degradation introduced by future changes.
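To make the baseline idea concrete, here is a small sketch, independent of PyRIT's own API, that computes a per-harm-category attack success rate from scored red-teaming attempts and flags categories where a newer model iteration regressed. The `(category, harmful)` record format and the `tolerance` parameter are hypothetical choices for illustration.

```python
from collections import defaultdict


def success_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Map each harm category to the fraction of attempts judged harmful."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for category, harmful in results:
        totals[category] += 1
        hits[category] += int(harmful)
    return {category: hits[category] / totals[category] for category in totals}


def regressions(
    baseline: dict[str, float], current: dict[str, float], tolerance: float = 0.0
) -> list[str]:
    """Return categories whose attack success rate rose by more than `tolerance`."""
    return sorted(
        category
        for category in current
        if category in baseline and current[category] - baseline[category] > tolerance
    )
```

Storing the baseline rates from one run and calling `regressions` after each model update gives a simple, repeatable signal; a nonzero `tolerance` keeps small run-to-run noise from being reported as degradation.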

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.