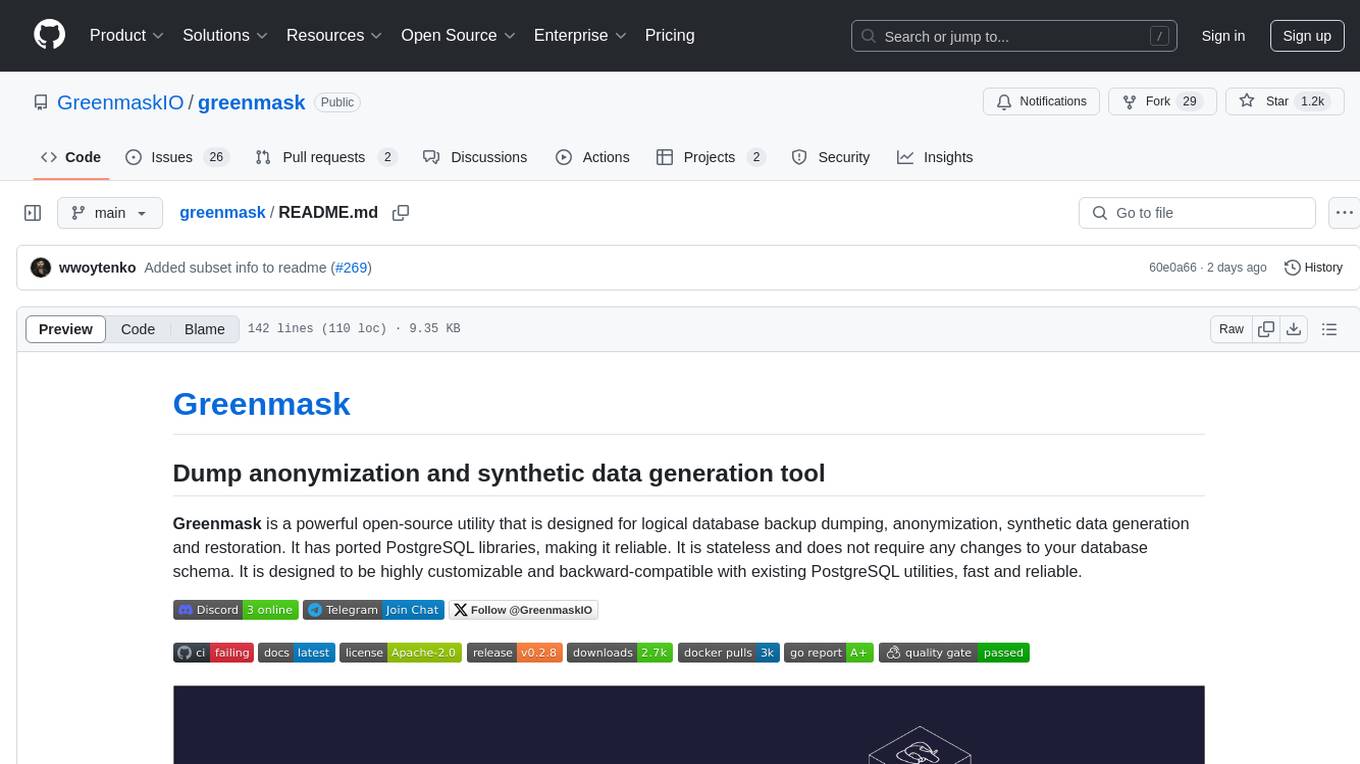

greenmask

PostgreSQL database anonymization and synthetic data generation tool

Stars: 1285

Greenmask is a powerful open-source utility designed for logical database backup dumping, anonymization, synthetic data generation, and restoration. It is highly customizable, stateless, and backward-compatible with existing PostgreSQL utilities. Greenmask supports advanced subset systems, deterministic transformers, dynamic parameters, transformation conditions, and more. It is cross-platform, database type safe, extensible, and supports parallel execution and various storage options. Ideal for backup and restoration tasks, anonymization, transformation, and data masking.

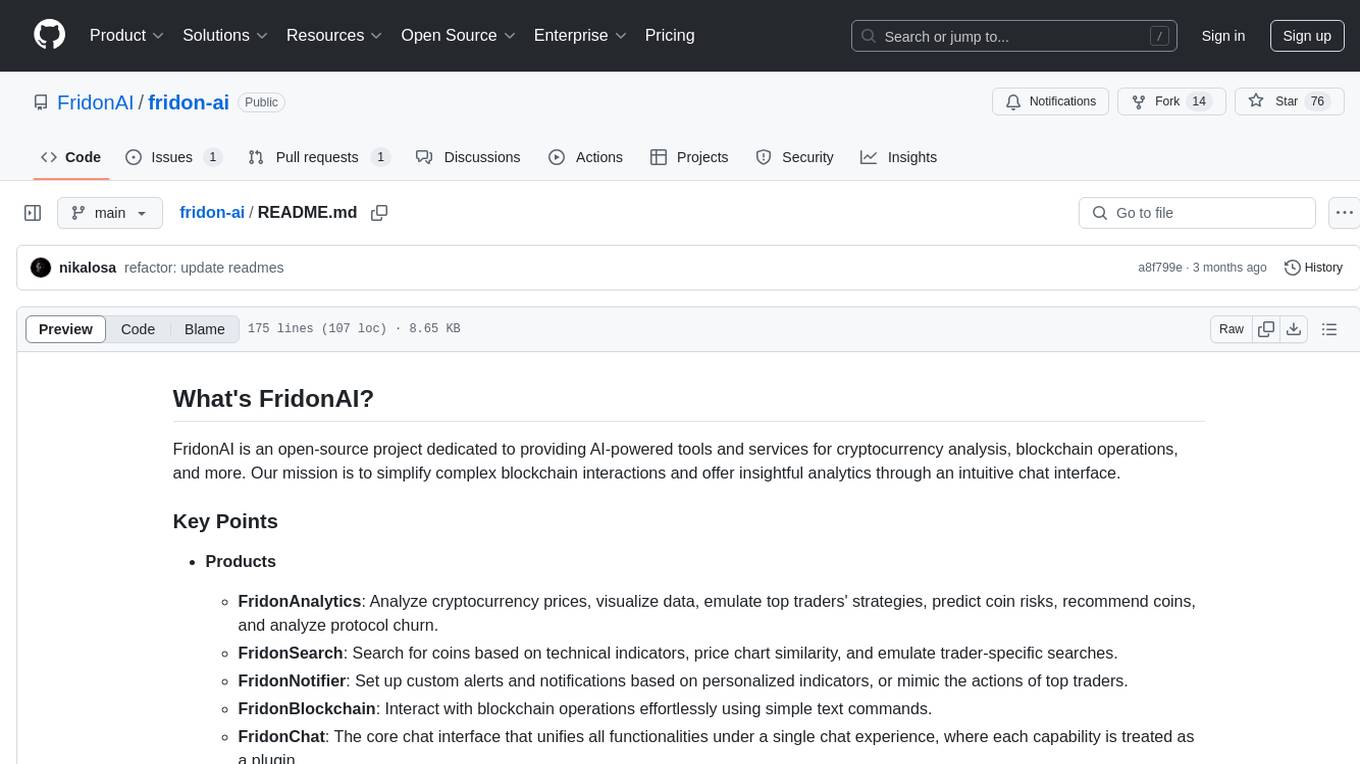

README:

Greenmask is a powerful open-source utility designed for logical database backup dumping, anonymization, synthetic data generation, and restoration. It uses ported PostgreSQL libraries, which makes it reliable. It is stateless and does not require any changes to your database schema. It is designed to be fast, highly customizable, and backward-compatible with existing PostgreSQL utilities.

Greenmask has a Playground: a sandbox environment in Docker with sample databases included, so you can try Greenmask without any additional setup. To start it:

- Clone the `greenmask` repository and navigate to its directory by running the following commands: `git clone [email protected]:GreenmaskIO/greenmask.git && cd greenmask`

- Once you have cloned the repository, start the environment by running Docker Compose: `docker-compose run greenmask`

- Database subset - One of the most advanced subset systems on the market. It supports virtual references, nullable columns, polymorphic references, and can subset even the most complex schemas with cyclic references.

- Deterministic transformers - Uses hash functions to ensure consistent output for the same input. Most transformers support both `random` and `hash` engines, offering flexibility for various use cases.

- Dynamic parameters - Most transformers support dynamic parameters, allowing them to adapt based on table column values. This feature helps manage dependencies between columns and meet constraints effectively.

- Transformation condition - Applies the transformation only when a specified condition is met, making it useful for targeting specific rows.

- Transformation validation and easy maintenance - Greenmask provides validation warnings, data transformation diffs, and schema diffs during configuration, enabling effective monitoring and maintenance of transformations. The schema diff feature helps prevent data leakage when the schema changes.

- Transformation inheritance - Transformation inheritance for partitioned tables and tables with foreign keys. Define once and apply to all.

- Stateless - Greenmask operates as a logical dump and does not impact your existing database schema.

- Cross-platform - Can be easily built and executed on any platform, thanks to its Go-based architecture, which eliminates platform dependencies.

- Database type safe - Ensures data integrity by validating data and using the database driver for encoding and decoding operations, preserving accurate data formats.

- Backward compatible - Fully supports the same features and protocols as standard PostgreSQL utilities. Dumps created by Greenmask can be seamlessly restored using the `pg_restore` utility.

- Extensible - Users have the flexibility to implement domain-based transformations in any programming language or use predefined templates.

- Parallel execution - Enables parallel dumping and restoration to significantly speed up results.

- Variety of storages - Supports both local and remote storage, including directories and S3-compatible solutions.

- Pgzip support for faster compression - Speeds up dump and restoration processes with parallel compression by setting `--pgzip`.
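To make the idea behind deterministic transformers concrete, here is a minimal shell sketch. It is not Greenmask's actual hash engine: it assumes `sha256sum` is available, and the `mask_value` helper and the salt are hypothetical names chosen for illustration. It only shows that hashing the same input always yields the same masked token.

```shell
#!/bin/sh
# Illustration only: NOT Greenmask's hash engine.
# mask_value and the salt value are hypothetical names for this sketch.
mask_value() {
    # $1 = original value, $2 = secret salt
    # Hash salt+value and keep the first 12 hex characters as the token.
    printf '%s%s' "$2" "$1" | sha256sum | cut -c1-12
}

a=$(mask_value "alice@example.com" "demo-salt")
b=$(mask_value "alice@example.com" "demo-salt")
c=$(mask_value "bob@example.com" "demo-salt")

# The same input maps to the same token on every run.
[ "$a" = "$b" ] && echo "same input -> same token"
# A different input maps to a different token.
[ "$a" != "$c" ] && echo "different input -> different token"
```

Because the output depends only on the input and the salt, the same source value receives the same replacement wherever it appears, which is the property the `hash` engine description relies on.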

Greenmask is ideal for various scenarios, including:

- Backup and Restoration. Use Greenmask for your daily routines involving logical backup dumping and restoration. It seamlessly handles tasks like table restoration after truncation. Its functionality closely mirrors that of pg_dump and pg_restore, making it a straightforward replacement.

- Anonymization, Transformation, and Data Masking. Employ Greenmask for anonymizing, transforming, and masking backups, especially when setting up a staging environment or for analytical purposes. It simplifies the deployment of a pre-production environment with consistently anonymized data, facilitating faster time-to-market in the development lifecycle.
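A typical dump-and-restore cycle might look like the sketch below. It assumes a prepared configuration file named `config.yml` (a hypothetical name) and uses the `dump`, `list-dumps`, and `restore` subcommands together with the `--pgzip` option mentioned in the feature list; treat the exact invocations as assumptions and check `greenmask --help` for your version. The `run` helper is illustrative: it only prints each command unless `RUN=1` is set.

```shell
#!/bin/sh
# Sketch only: config.yml is a hypothetical file name.
CONFIG="${CONFIG:-config.yml}"

# Print each command; execute it only when RUN=1, so the sketch is safe to run as-is.
run() {
    printf '+ %s\n' "$*"
    if [ "${RUN:-0}" = "1" ]; then "$@"; fi
}

# Create an anonymized logical dump, using parallel compression.
run greenmask --config="$CONFIG" dump --pgzip

# List the dumps available in the configured storage.
run greenmask --config="$CONFIG" list-dumps

# Restore the most recent dump into the target database.
run greenmask --config="$CONFIG" restore latest --pgzip
```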

The best approach for logical backup dumping and restoration is to use core PostgreSQL utilities, specifically pg_dump and pg_restore. Greenmask is designed to align with these native tools, ensuring full compatibility. It independently manages data dumping while delegating schema dumping and restoration to pg_dump and pg_restore, ensuring smooth integration with PostgreSQL’s standard workflow.

Greenmask utilizes the directory format of pg_dump and pg_restore, ideal for parallel execution and partial restoration. This format includes metadata files to guide backup and restoration steps.
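The directory format and its support for parallel and partial restoration can be sketched with the stock PostgreSQL tools. The database names, dump path, job count, and the `flights` table below are hypothetical placeholders. The `run` helper is illustrative: it only prints each command unless `RUN=1` is set.

```shell
#!/bin/sh
# Sketch only: every name and path below is a hypothetical placeholder.
SRC_DB="${SRC_DB:-source_db}"
DST_DB="${DST_DB:-target_db}"
DUMP_DIR="${DUMP_DIR:-/tmp/example_dump}"
JOBS="${JOBS:-4}"

# Print each command; execute it only when RUN=1, so the sketch is safe to run as-is.
run() {
    printf '+ %s\n' "$*"
    if [ "${RUN:-0}" = "1" ]; then "$@"; fi
}

# Directory-format dump written by several parallel workers.
run pg_dump --format=directory --jobs="$JOBS" --file="$DUMP_DIR" "$SRC_DB"

# Full restore from the same directory, again in parallel.
run pg_restore --jobs="$JOBS" --dbname="$DST_DB" "$DUMP_DIR"

# Partial restore: reload only the data of one table, e.g. after a truncation.
run pg_restore --dbname="$DST_DB" --data-only --table=flights "$DUMP_DIR"
```

The directory format is the only pg_dump format that supports parallel dumping, which is why it suits the parallel workflow described above.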

- s3 - Supports any S3-compatible storage system, including AWS S3, offering flexibility across different cloud storage solutions.

- directory - This is the default option, representing a standard filesystem directory for local storage.

Greenmask works with COPY lines, collects schema metadata using the Golang driver, and uses the same driver for encoding and decoding. The validate command lets you assess the impact of your configuration on both the schema (validation warnings) and the data (transformed rows and their differences from the originals), so you can confirm that the anonymization produces the desired outcome.
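A validation run might be invoked as in the sketch below. The `--schema`, `--data`, and `--diff` flags are given as recalled from the Greenmask documentation and should be treated as assumptions; confirm them with `greenmask validate --help`. The `config.yml` file name is hypothetical, and the illustrative `run` helper only prints each command unless `RUN=1` is set.

```shell
#!/bin/sh
# Sketch only: config.yml is a hypothetical file name, and the validate
# flags below are assumptions to be checked against `greenmask validate --help`.
CONFIG="${CONFIG:-config.yml}"

# Print each command; execute it only when RUN=1, so the sketch is safe to run as-is.
run() {
    printf '+ %s\n' "$*"
    if [ "${RUN:-0}" = "1" ]; then "$@"; fi
}

# Schema-level check: report warnings and schema changes.
run greenmask --config="$CONFIG" validate --schema

# Data-level check: transform sample rows and show the differences.
run greenmask --config="$CONFIG" validate --data --diff
```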

If your table schema relies on functional dependencies between columns, you can address this using dynamic parameters. For example, they let you handle cases such as created_at and updated_at, where updated_at must be greater than or equal to created_at.

If you need to implement custom logic imperatively, use the Cmd, TemplateRecord, or Template transformers.

Greenmask is compatible with PostgreSQL versions 11 and higher.

- Utilized the Demo database, provided by PostgresPro, for integration testing purposes.

- Employed the adventureworks database created by morenoh149/postgresDBSamples, in the Docker Compose playground.


Similar Open Source Tools


trustgraph

TrustGraph is a tool that deploys private GraphRAG pipelines to build an RDF-style knowledge graph from data, enabling accurate and secure `RAG` requests compatible with cloud LLMs and open-source SLMs. It showcases the reliability and efficiencies of GraphRAG algorithms, capturing contextual language flags missed in conventional RAG approaches. The tool offers features like PDF decoding, text chunking, inference of various LMs, RDF-aligned Knowledge Graph extraction, and more. TrustGraph is designed to be modular, supporting multiple Language Models and environments, with a plug'n'play architecture for easy customization.

comfyui_LLM_Polymath

LLM Polymath Chat Node is an advanced Chat Node for ComfyUI that integrates large language models to build text-driven applications and automate data processes, enhancing prompt responses by incorporating real-time web search, linked content extraction, and custom agent instructions. It supports both OpenAI’s GPT-like models and alternative models served via a local Ollama API. The core functionalities include Comfy Node Finder and Smart Assistant, along with additional agents like Flux Prompter, Custom Instructors, Python debugger, and scripter. The tool offers features for prompt processing, web search integration, model & API integration, custom instructions, image handling, logging & debugging, output compression, and more.

postgresml

PostgresML is a powerful Postgres extension that seamlessly combines data storage and machine learning inference within your database. It enables running machine learning and AI operations directly within PostgreSQL, leveraging GPU acceleration for faster computations, integrating state-of-the-art large language models, providing built-in functions for text processing, enabling efficient similarity search, offering diverse ML algorithms, ensuring high performance, scalability, and security, supporting a wide range of NLP tasks, and seamlessly integrating with existing PostgreSQL tools and client libraries.

deepflow

DeepFlow is an open-source project that provides deep observability for complex cloud-native and AI applications. It offers Zero Code data collection with eBPF for metrics, distributed tracing, request logs, and function profiling. DeepFlow is integrated with SmartEncoding to achieve Full Stack correlation and efficient access to all observability data. With DeepFlow, cloud-native and AI applications automatically gain deep observability, removing the burden of developers continually instrumenting code and providing monitoring and diagnostic capabilities covering everything from code to infrastructure for DevOps/SRE teams.

aistore

AIStore is a lightweight object storage system designed for AI applications. It is highly scalable, reliable, and easy to use. AIStore can be deployed on any commodity hardware, and it can be used to store and manage large datasets for deep learning and other AI applications.

cosdata

Cosdata is a cutting-edge AI data platform designed to power the next generation search pipelines. It features immutability, version control, and excels in semantic search, structured knowledge graphs, hybrid search capabilities, real-time search at scale, and ML pipeline integration. The platform is customizable, scalable, efficient, enterprise-grade, easy to use, and can manage multi-modal data. It offers high performance, indexing, low latency, and high requests per second. Cosdata is designed to meet the demands of modern search applications, empowering businesses to harness the full potential of their data.

vulcan-sql

VulcanSQL is an Analytical Data API Framework for AI agents and data apps. It aims to help data professionals deliver RESTful APIs from databases, data warehouses, or data lakes more easily and securely. It turns your SQL into APIs in no time!

Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

agent-zero

Agent Zero is a personal, organic agentic framework designed to be dynamic, transparent, customizable, and interactive. It uses the computer as a tool to accomplish tasks, with features like general-purpose assistant, computer as a tool, multi-agent cooperation, customizable and extensible framework, and communication skills. The tool is fully Dockerized, with Speech-to-Text and TTS capabilities, and offers real-world use cases like financial analysis, Excel automation, API integration, server monitoring, and project isolation. Agent Zero can be dangerous if not used properly and is prompt-based, guided by the prompts folder. The tool is extensively documented and has a changelog highlighting various updates and improvements.

vts

VTS (Vector Transport Service) is an open-source tool developed by Zilliz based on Apache Seatunnel for moving vectors and unstructured data. It addresses data migration needs, supports real-time data streaming and offline import, simplifies unstructured data transformation, and ensures end-to-end data quality. Core capabilities include rich connectors, stream and batch processing, distributed snapshot support, high performance, and real-time monitoring. Future developments include incremental synchronization, advanced data transformation, and enhanced monitoring. VTS supports various connectors for data migration and offers advanced features like Transformers, cluster mode deployment, RESTful API, Docker deployment, and more.

cline-based-code-generator

HAI Code Generator is a cutting-edge tool designed to simplify and automate task execution while enhancing code generation workflows. Leveraging Specif AI, it streamlines processes like task execution, file identification, and code documentation through intelligent automation and AI-driven capabilities. Built on Cline's powerful foundation for AI-assisted development, HAI Code Generator boosts productivity and precision by automating task execution and integrating file management capabilities. It combines intelligent file indexing, context generation, and LLM-driven automation to minimize manual effort and ensure task accuracy. Perfect for developers and teams aiming to enhance their workflows.

reductstore

ReductStore is a high-performance time series database designed for storing and managing large amounts of unstructured blob data. It offers features such as real-time querying, batching data, and HTTP(S) API for edge computing, computer vision, and IoT applications. The database ensures data integrity, implements retention policies, and provides efficient data access, making it a cost-effective solution for applications requiring unstructured data storage and access at specific time intervals.

nextpy

Nextpy is a cutting-edge software development framework optimized for AI-based code generation. It provides guardrails for defining AI system boundaries, structured outputs for prompt engineering, a powerful prompt engine for efficient processing, better AI generations with precise output control, modularity for multiplatform and extensible usage, developer-first approach for transferable knowledge, and containerized & scalable deployment options. It offers 4-10x faster performance compared to Streamlit apps, with a focus on cooperation within the open-source community and integration of key components from various projects.

fridon-ai

FridonAI is an open-source project offering AI-powered tools for cryptocurrency analysis and blockchain operations. It includes modules like FridonAnalytics for price analysis, FridonSearch for technical indicators, FridonNotifier for custom alerts, FridonBlockchain for blockchain operations, and FridonChat as a unified chat interface. The platform empowers users to create custom AI chatbots, access crypto tools, and interact effortlessly through chat. The core functionality is modular, with plugins, tools, and utilities for easy extension and development. FridonAI implements a scoring system to assess user interactions and incentivize engagement. The application uses Redis extensively for communication and includes a Nest.js backend for system operations.


For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.