clearml-server

ClearML - Auto-Magical CI/CD to streamline your AI workload. Experiment Management, Data Management, Pipeline, Orchestration, Scheduling & Serving in one MLOps/LLMOps solution

Stars: 364

ClearML Server is a backend service infrastructure for ClearML, facilitating collaboration and experiment management. It includes a web app, RESTful API, and file server for storing images and models. Users can deploy ClearML Server using Docker, AWS EC2 AMI, or Kubernetes. The system design supports single IP or sub-domain configurations with specific open ports. ClearML-Agent Services container allows launching long-lasting jobs and various use cases like auto-scaler service, controllers, optimizer, and applications. Advanced functionality includes web login authentication and non-responsive experiments watchdog. Upgrading ClearML Server involves stopping containers, backing up data, downloading the latest docker-compose.yml file, configuring ClearML-Agent Services, and spinning up docker containers. Community support is available through ClearML FAQ, Stack Overflow, GitHub issues, and email contact.

README:

ClearML - Auto-Magical Suite of tools to streamline your ML workflow

Experiment Manager, ML-Ops and Data-Management

Note regarding Apache Log4j2 Remote Code Execution (RCE) Vulnerability - CVE-2021-44228 - ESA-2021-31

According to ElasticSearch's latest report, supported versions of Elasticsearch (6.8.9+, 7.8+) used with recent versions of the JDK (JDK9+) are not susceptible to either remote code execution or information leakage due to Elasticsearch’s usage of the Java Security Manager.

As the latest version of ClearML Server uses Elasticsearch 7.10+ with JDK15, it is not affected by these vulnerabilities.

As a precaution, we've upgraded the ES version to 7.16.2 and added the mitigation recommended by ElasticSearch to our latest docker-compose.yml file.

While previous Elasticsearch versions (5.6.11+, 6.4.0+ and 7.0.0+) used by older ClearML Server versions are only susceptible to the information leakage vulnerability (which in any case does not permit access to data within the Elasticsearch cluster), we still recommend upgrading to the latest version of ClearML Server. Alternatively, you can apply the mitigation as implemented in our latest docker-compose.yml file.

Update 15 December: A further vulnerability (CVE-2021-45046) was disclosed on December 14th. ElasticSearch's guidance for Elasticsearch remains unchanged by this new vulnerability, thus not affecting ClearML Server.

Update 22 December: In keeping with ElasticSearch's recommendations, we've upgraded the ES version to the newly released 7.16.2.

The ClearML Server is the backend service infrastructure for ClearML. It allows multiple users to collaborate and manage their experiments. ClearML offers a free hosted service, which is maintained by ClearML and open to anyone. In order to host your own server, you will need to launch the ClearML Server and point ClearML to it.

The ClearML Server contains the following components:

- The ClearML Web-App, a single-page UI for experiment management and browsing

- RESTful API for:

  - Documenting and logging experiment information, statistics and results

  - Querying experiments history, logs and results

- Locally-hosted file server for storing images and models, making them easily accessible using the Web-App

You can quickly deploy your ClearML Server using Docker, AWS EC2 AMI, or Kubernetes.

The ClearML Server has two supported configurations:

- Single IP (domain) with the following open ports:

  - Web application on port 8080

  - API service on port 8008

  - File storage service on port 8081

- Sub-Domain configuration with default http/s ports (80 or 443):

  - Web application on sub-domain: app.*.*

  - API service on sub-domain: api.*.*

  - File storage service on sub-domain: files.*.*

The ports 8080/8081/8008 must be available for the ClearML Server services.

For example, to see if port 8080 is in use:

- Linux or macOS:

  sudo lsof -Pn -i4 | grep :8080 | grep LISTEN

- Windows:

  netstat -an |find /i "8080"
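The single-port check above extends naturally to all three ports. The following is a minimal bash sketch, not part of ClearML: the function names `port_is_listening` and `check_clearml_ports` are made up for illustration, and it assumes a bash build with `/dev/tcp` redirection support. It probes by connecting to 127.0.0.1, so it can miss a service bound only to another interface; the lsof command above remains the more thorough check.

```shell
#!/usr/bin/env bash
# Illustrative helper (not part of ClearML): report whether the three
# ClearML Server ports already have a listener on this machine.

# Succeeds if a TCP connection to 127.0.0.1:PORT is accepted.
# Relies on bash's /dev/tcp pseudo-device; the subshell closes fd 3 on exit.
port_is_listening() {
  (exec 3<>"/dev/tcp/127.0.0.1/$1") 2>/dev/null
}

# Prints one status line per port; returns 1 if any port is taken.
check_clearml_ports() {
  local port status=0
  for port in 8080 8081 8008; do
    if port_is_listening "$port"; then
      echo "port $port: in use"
      status=1
    else
      echo "port $port: free"
    fi
  done
  return "$status"
}
```

Calling `check_clearml_ports` before launching the server prints one line per port and returns a non-zero status if any of them is already taken.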

Launch the ClearML Server in any of the following formats:

- Pre-built AWS EC2 AMI

- Pre-built GCP Custom Image

- Pre-built Docker Image

- Kubernetes

In order to set up the ClearML client to work with your ClearML Server:

- Run the clearml-init command for an interactive setup.

- Or manually edit the ~/clearml.conf file, making sure the server settings (api_server, web_server, files_server) are configured correctly, for example:

  api {
      # API server on port 8008
      api_server: "http://localhost:8008"

      # web_server on port 8080
      web_server: "http://localhost:8080"

      # file server on port 8081
      files_server: "http://localhost:8081"
  }

Note: If you have set up your ClearML Server in a sub-domain configuration, there is no need to specify a port number; it is inferred from the http/s scheme.
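For a sub-domain deployment, the api section would then carry URLs without port numbers. The sketch below prints such a section for a given base domain. `clearml_api_block` is a made-up helper name and `example.com` is a placeholder domain, neither taken from ClearML; the `app.`, `api.` and `files.` prefixes follow the sub-domain layout described earlier.

```shell
#!/usr/bin/env bash
# Illustrative helper (not part of ClearML): print an `api` section for
# ~/clearml.conf when the server is reached through app./api./files. sub-domains.
# Usage: clearml_api_block DOMAIN [SCHEME]   (SCHEME defaults to https)
clearml_api_block() {
  local domain="$1" scheme="${2:-https}"
  cat <<EOF
api {
    # No port numbers: they follow from the $scheme scheme
    api_server: "$scheme://api.$domain"
    web_server: "$scheme://app.$domain"
    files_server: "$scheme://files.$domain"
}
EOF
}

# Example with a placeholder domain; review the output before pasting it
# into ~/clearml.conf.
clearml_api_block example.com
```

Generating the three URLs from one domain argument keeps them consistent with each other, which is the part that is easy to get wrong when editing the file by hand.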

After launching the ClearML Server and configuring the ClearML client to use it, you can use ClearML in your experiments and view them in the ClearML Server Web-App, for example at http://localhost:8080.

For more information about the ClearML client, see ClearML.

As of version 0.15 of ClearML Server, dockerized deployment includes a ClearML-Agent Services container running as part of the docker container collection.

ClearML-Agent Services is an extension of ClearML-Agent that provides the ability to launch long-lasting jobs that previously had to be executed on local / dedicated machines. It allows a single agent to launch multiple dockers (Tasks) for different use cases. A few examples: an auto-scaler service (spinning up instances when the need arises and the budget allows), Controllers (implementing pipelines and more sophisticated DevOps logic), an Optimizer (such as hyper-parameter optimization or sweeping), and Applications (such as interactive Bokeh apps for increased data transparency).

The ClearML-Agent Services container will spin up any task enqueued into the dedicated services queue. Every task launched by ClearML-Agent Services will be registered as a new node in the system, providing tracking and transparency capabilities.

You can also run the ClearML-Agent Services manually; see details in ClearML-agent services mode.

Note: It is the user's responsibility to make sure the proper tasks are pushed into the services queue. Do not enqueue training / inference tasks into the services queue, as it will put unnecessary load on the server.

The ClearML Server provides a few additional useful features, which can be manually enabled:

- Web login authentication

- Non-responsive experiments watchdog

To restart the ClearML Server, you must first stop the containers, and then restart them.

docker-compose down

docker-compose -f docker-compose.yml up

ClearML Server releases are also reflected in the docker compose configuration file.

We strongly encourage you to keep your ClearML Server up to date, by keeping up with the current release.

Note: The following upgrade instructions use the Linux OS as an example.

To upgrade your existing ClearML Server deployment:

- Shut down the docker containers:

  docker-compose down

- We highly recommend backing up your data directory before upgrading.

  Assuming your data directory is /opt/clearml, to archive all data into ~/clearml_backup.tgz execute:

  sudo tar czvf ~/clearml_backup.tgz /opt/clearml/data

  Restore instructions:

  To restore this example backup, execute:

  sudo rm -R /opt/clearml/data
  sudo tar -xzf ~/clearml_backup.tgz -C /

  tar strips the leading / when creating the archive, so its entries are stored as opt/clearml/data/... and must be extracted at the filesystem root.

- Download the latest docker-compose.yml file:

  curl https://raw.githubusercontent.com/allegroai/trains-server/master/docker/docker-compose.yml -o docker-compose.yml

- Configure the ClearML-Agent Services (not supported on Windows installation). If CLEARML_HOST_IP is not provided, ClearML-Agent Services will use the external public address of the ClearML Server. If CLEARML_AGENT_GIT_USER / CLEARML_AGENT_GIT_PASS are not provided, the ClearML-Agent Services will not be able to access any private repositories for running service tasks.

  export CLEARML_HOST_IP=server_host_ip_here
  export CLEARML_AGENT_GIT_USER=git_username_here
  export CLEARML_AGENT_GIT_PASS=git_password_here

- Spin up the docker containers; this automatically pulls the latest ClearML Server build:

  docker-compose -f docker-compose.yml pull
  docker-compose -f docker-compose.yml up
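Unset ClearML-Agent Services variables tend to surface only later, when a service task fails to reach a private repository, so it can help to check them before spinning up the containers. A small sketch, assuming bash; `check_agent_env` is a hypothetical name, and the messages paraphrase the behaviour described in the configuration step.

```shell
#!/usr/bin/env bash
# Illustrative pre-flight check (not part of ClearML): warn about unset
# ClearML-Agent Services variables before starting the containers.
# Returns 1 when the git credentials are incomplete, 0 otherwise.
check_agent_env() {
  local missing=0
  if [ -z "${CLEARML_HOST_IP:-}" ]; then
    echo "note: CLEARML_HOST_IP is unset; the server's external public address will be used" >&2
  fi
  if [ -z "${CLEARML_AGENT_GIT_USER:-}" ] || [ -z "${CLEARML_AGENT_GIT_PASS:-}" ]; then
    echo "warning: git credentials are unset; service tasks cannot access private repositories" >&2
    missing=1
  fi
  return "$missing"
}
```

Since the credentials are optional, the return value is advisory: running `check_agent_env; docker-compose -f docker-compose.yml up` prints any warnings and then starts the containers regardless.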

* If something went wrong along the way, check our FAQ: Common Docker Upgrade Errors.
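The backup in the upgrade steps can also be wrapped in a function that confirms the archive is readable before the upgrade continues. This is a sketch under stated assumptions: `backup_clearml_data` is a hypothetical name, its defaults mirror the example paths above, and it stores entries relative to the parent of the data directory (as data/...) instead of relative to /, which differs from the tar command shown in the steps.

```shell
#!/usr/bin/env bash
# Illustrative backup helper (not part of ClearML).
# Usage: backup_clearml_data [DATA_DIR] [ARCHIVE]
backup_clearml_data() {
  local data_dir="${1:-/opt/clearml/data}"
  local archive="${2:-$HOME/clearml_backup.tgz}"

  if [ ! -d "$data_dir" ]; then
    echo "error: $data_dir is not a directory" >&2
    return 1
  fi

  # Store paths relative to the parent directory, e.g. data/...
  tar -czf "$archive" -C "$(dirname "$data_dir")" "$(basename "$data_dir")" || return 1

  # Listing the archive confirms it can be read back.
  tar -tzf "$archive" >/dev/null || return 1
  echo "backup written to $archive"
}
```

With the default paths this needs the same privileges as the original command, for example by running the calling script under sudo. An archive produced this way would be restored with `tar -xzf ARCHIVE -C /opt/clearml`, because its entries start at data/.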

If you have any questions, see the ClearML FAQ, or tag your questions on Stack Overflow with the 'clearml' tag.

For feature requests or bug reports, please use GitHub issues.

Additionally, you can always find us at [email protected]

Server Side Public License v1.0

The ClearML Server relies on both MongoDB and ElasticSearch. With the recent changes in both MongoDB's and ElasticSearch's OSS license, we feel it is our responsibility as a member of the community to support the projects we love and cherish. We believe the cause for the license change in both cases is more than just, and chose SSPL because it is the more general and flexible of the two licenses.

This is our way to say - we support you guys!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for clearml-server

Similar Open Source Tools

clearml-server

ClearML Server is a backend service infrastructure for ClearML, facilitating collaboration and experiment management. It includes a web app, RESTful API, and file server for storing images and models. Users can deploy ClearML Server using Docker, AWS EC2 AMI, or Kubernetes. The system design supports single IP or sub-domain configurations with specific open ports. ClearML-Agent Services container allows launching long-lasting jobs and various use cases like auto-scaler service, controllers, optimizer, and applications. Advanced functionality includes web login authentication and non-responsive experiments watchdog. Upgrading ClearML Server involves stopping containers, backing up data, downloading the latest docker-compose.yml file, configuring ClearML-Agent Services, and spinning up docker containers. Community support is available through ClearML FAQ, Stack Overflow, GitHub issues, and email contact.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

open-webui

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs. For more information, be sure to check out our Open WebUI Documentation.

restai

RestAI is an AIaaS (AI as a Service) platform that allows users to create and consume AI agents (projects) using a simple REST API. It supports various types of agents, including RAG (Retrieval-Augmented Generation), RAGSQL (RAG for SQL), inference, vision, and router. RestAI features automatic VRAM management, support for any public LLM supported by LlamaIndex or any local LLM supported by Ollama, a user-friendly API with Swagger documentation, and a frontend for easy access. It also provides evaluation capabilities for RAG agents using deepeval.

chatnio

Chat Nio is a next-generation AIGC one-stop business solution that combines the advantages of frontend-oriented lightweight deployment projects with powerful API distribution systems. It offers rich model support, beautiful UI design, complete Markdown support, multi-theme support, internationalization support, text-to-image support, powerful conversation sync, model market & preset system, rich file parsing, full model internet search, Progressive Web App (PWA) support, comprehensive backend management, multiple billing methods, innovative model caching, and additional features. The project aims to address limitations in conversation synchronization, billing, file parsing, conversation URL sharing, channel management, and API call support found in existing AIGC commercial sites, while also providing a user-friendly interface design and C-end features.

higress

Higress is an open-source cloud-native API gateway built on the core of Istio and Envoy, based on Alibaba's internal practice of Envoy Gateway. It is designed for AI-native API gateway, serving AI businesses such as Tongyi Qianwen APP, Bailian Big Model API, and Machine Learning PAI platform. Higress provides capabilities to interface with LLM model vendors, AI observability, multi-model load balancing/fallback, AI token flow control, and AI caching. It offers features for AI gateway, Kubernetes Ingress gateway, microservices gateway, and security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.

DesktopCommanderMCP

Desktop Commander MCP is a server that allows the Claude desktop app to execute long-running terminal commands on your computer and manage processes through Model Context Protocol (MCP). It is built on top of MCP Filesystem Server to provide additional search and replace file editing capabilities. The tool enables users to execute terminal commands with output streaming, manage processes, perform full filesystem operations, and edit code with surgical text replacements or full file rewrites. It also supports vscode-ripgrep based recursive code or text search in folders.

middleware

Middleware is an open-source engineering management tool that helps engineering leaders measure and analyze team effectiveness using DORA metrics. It integrates with CI/CD tools, automates DORA metric collection and analysis, visualizes key performance indicators, provides customizable reports and dashboards, and integrates with project management platforms. Users can set up Middleware using Docker or manually, generate encryption keys, set up backend and web servers, and access the application to view DORA metrics. The tool calculates DORA metrics using GitHub data, including Deployment Frequency, Lead Time for Changes, Mean Time to Restore, and Change Failure Rate. Middleware aims to provide DORA metrics to users based on their Git data, simplifying the process of tracking software delivery performance and operational efficiency.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

inngest

Inngest is a platform that offers durable functions to replace queues, state management, and scheduling for developers. It allows writing reliable step functions faster without dealing with infrastructure. Developers can create durable functions using various language SDKs, run a local development server, deploy functions to their infrastructure, sync functions with the Inngest Platform, and securely trigger functions via HTTPS. Inngest Functions support retrying, scheduling, and coordinating operations through triggers, flow control, and steps, enabling developers to build reliable workflows with robust support for various operations.

AIOStreams

AIOStreams is a versatile tool that combines streams from various addons into one platform, offering extensive customization options. Users can change result formats, filter results by various criteria, remove duplicates, prioritize services, sort results, specify size limits, and more. The tool scrapes results from selected addons, applies user configurations, and presents the results in a unified manner. It simplifies the process of finding and accessing desired content from multiple sources, enhancing user experience and efficiency.

clearml

ClearML is a suite of tools designed to streamline the machine learning workflow. It includes an experiment manager, MLOps/LLMOps, data management, and model serving capabilities. ClearML is open-source and offers a free tier hosting option. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm. ClearML provides extensive logging capabilities, including source control info, execution environment, hyper-parameters, and experiment outputs. It also offers automation features, such as remote job execution and pipeline creation. ClearML is designed to be easy to integrate, requiring only two lines of code to add to existing scripts. It aims to improve collaboration, visibility, and data transparency within ML teams.

actionbook

Actionbook is a browser action engine designed for AI agents, providing up-to-date action manuals and DOM structure to enable instant website operations without guesswork. It offers faster execution, token savings, resilient automation, and universal compatibility, making it ideal for building reliable browser agents. Actionbook integrates seamlessly with AI coding assistants and offers three integration methods: CLI, MCP Server, and JavaScript SDK. The tool is well-documented and actively developed in a monorepo setup using pnpm workspaces and Turborepo.

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

trigger.dev

Trigger.dev is an open source platform and SDK for creating long-running background jobs. It provides features like JavaScript and TypeScript SDK, no timeouts, retries, queues, schedules, observability, React hooks, Realtime API, custom alerts, elastic scaling, and works with existing tech stack. Users can create tasks in their codebase, deploy tasks using the SDK, manage tasks in different environments, and have full visibility of job runs. The platform offers a trace view of every task run for detailed monitoring. Getting started is easy with account creation, project setup, and onboarding instructions. Self-hosting and development guides are available for users interested in contributing or hosting Trigger.dev.

mattermost-plugin-agents

The Mattermost Agents Plugin integrates AI capabilities directly into your Mattermost workspace, allowing users to run local LLMs on their infrastructure or connect to cloud providers. It offers multiple AI assistants with specialized personalities, thread and channel summarization, action item extraction, meeting transcription, semantic search, smart reactions, direct conversations with AI assistants, and flexible LLM support. The plugin comes with comprehensive documentation, installation instructions, system requirements, and development guidelines for users to interact with AI features and configure LLM providers.

For similar tasks

clearml-server

ClearML Server is a backend service infrastructure for ClearML, facilitating collaboration and experiment management. It includes a web app, RESTful API, and file server for storing images and models. Users can deploy ClearML Server using Docker, AWS EC2 AMI, or Kubernetes. The system design supports single IP or sub-domain configurations with specific open ports. ClearML-Agent Services container allows launching long-lasting jobs and various use cases like auto-scaler service, controllers, optimizer, and applications. Advanced functionality includes web login authentication and non-responsive experiments watchdog. Upgrading ClearML Server involves stopping containers, backing up data, downloading the latest docker-compose.yml file, configuring ClearML-Agent Services, and spinning up docker containers. Community support is available through ClearML FAQ, Stack Overflow, GitHub issues, and email contact.

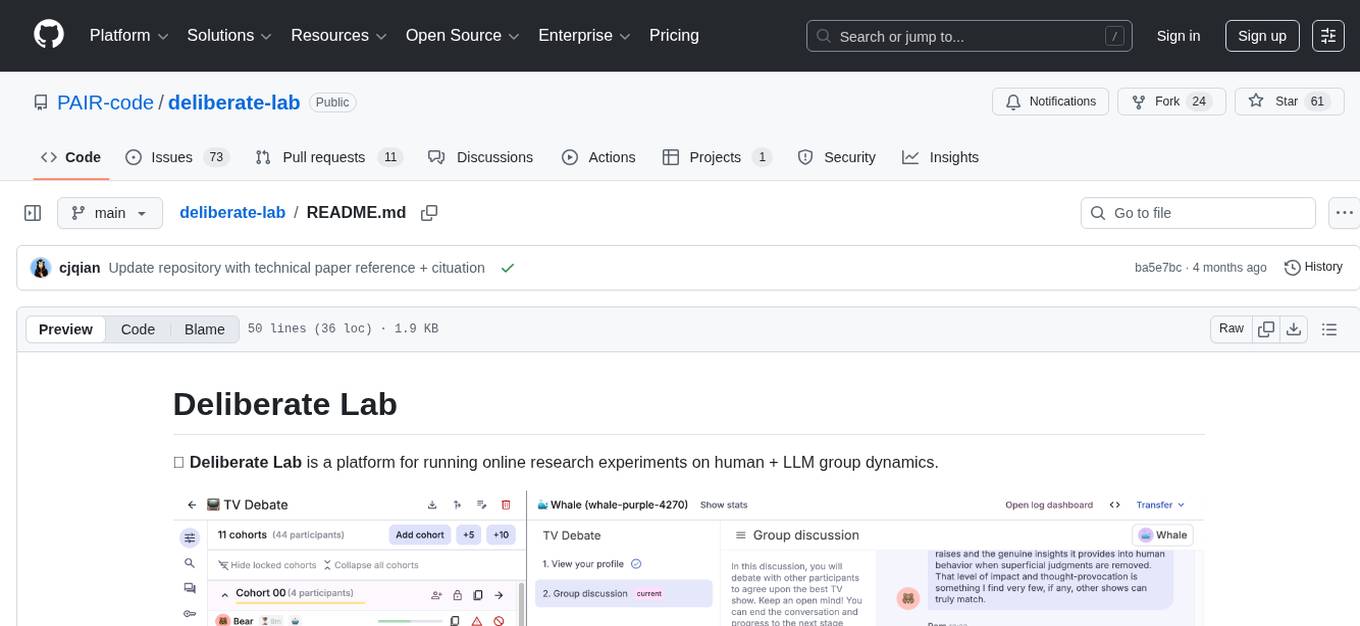

deliberate-lab

Deliberate Lab is a platform designed for conducting online research experiments focusing on human and LLM group dynamics. It provides documentation for researchers and developers, offers a quick start guide for developers, and includes a technical paper. The platform allows users to run real-time human-AI social experiments and provides tools for analyzing group interactions and dynamics.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.