nlux

The 𝗣𝗼𝘄𝗲𝗿𝗳𝘂𝗹 Conversational AI JavaScript Library 💬 — UI for any LLM, supporting LangChain / HuggingFace / Vercel AI, and more 🧡 React, Next.js, and plain JavaScript ⭐️

Stars: 963

NLUX is an open-source JavaScript and React JS library that simplifies the integration of powerful large language models (LLMs) like ChatGPT into web apps or websites. With just a few lines of code, users can add conversational AI capabilities and interact with their favorite LLM. The library offers features such as building AI chat interfaces in minutes, React components and hooks for easy integration, LLM adapters for various APIs, customizable assistant and user personas, streaming LLM output, custom renderers, high customizability, and zero dependencies. NLUX is designed with principles of intuitiveness, performance, accessibility, and developer experience in mind. The mission of NLUX is to enable developers to build outstanding LLM front-ends and applications with a focus on performance and usability.

README:

The Powerful Conversational AI

JavaScript Library ✨💬

Docs Website | Discord Community | X

Do you like this project ? Please star the repo to show your support 🌟 🧡

Building with NLUX ? Get in touch — We'd love to hear from you.

NLUX is React and JavaScript open-source library for building conversational AI interfaces. It makes it super simple

to build web applications powered by Large Language Models (LLMs). With just a few lines of code, you can add

conversational AI capabilities and interact with your favorite AI models.

Use nlux-cli to quickly spin up a new Next.js, React, or Vanilla TypeScript project with NLUX integrated.

Get started with NLUX and your favorite web framework under a minute:

# Next.js 🔼 with NLUX

npx nlux-cli create next my-next-app# React ⚛️ , Vite, with NLUX

npx nlux-cli create react my-react-app# Or, vanilla TypeScript 🟨 , Vite, with NLUX

npx nlux-cli create vanilla my-vanilla-app-

The docs website is available at:

docs.nlkit.com/nlux -

Several Get Started Guides are available, including for:

Next.js and Vercel AI — LangChain LangServe — React with Node.js Backend

- Build AI Chat Interfaces In Minutes ― High quality conversational AI interfaces with just a few lines of code.

-

React Components & Hooks ―

<AiChat />for UI anduseChatAdapterhook for easy integration. - Next.js & Vercel AI ― Out-of-the-box support, demos, and examples for Next.js and Vercel AI.

- React Server Components (RSC) and Generative UI 🔥 ― With Next.js or any RSC compatible framework.

-

LLM Adapters ― For

ChatGPT―LangChain🦜LangServeAPIs ―Hugging Face🤗 Inference. - A flexible interface to Create Your Own Adapter 🎯 for any LLM ― with support for stream or batch modes.

- Assistant and User Personas ― Customize participant personas with names, images, and descriptions.

- Highly Customizable ― Tune almost every UI aspect through theming, layout options, and more.

- Zero Dependency ― Lightweight codebase ― Core with zero dependency and no external UI libraries.

This GitHub repository contains the source code for the NLUX library.

It is a monorepo that contains code for following NPM packages:

⚛️ React JS Packages:

-

@nlux/react― React JS components forNLUX. -

@nlux/langchain-react― React hooks and adapter for APIs created using LangChain's LangServe library. -

@nlux/openai-react― React hooks for the OpenAI API, for testing and development. -

@nlux/hf-react― React hooks and pre-processors for the Hugging Face Inference API -

@nlux/nlbridge-react― Integration withnlbridge, the Express.js LLM middleware by the NLUX team.

🟨 Vanilla JS Packages:

-

@nlux/core― The core Vanilla JS library to use with any web framework. -

@nlux/langchain― Adapter for APIs created using LangChain's LangServe library. -

@nlux/openai― Adapter for the OpenAI API, for testing and development. -

@nlux/hf― Adapter and pre-processors for the Hugging Face Inference API. -

@nlux/nlbridge― Integration withnlbridge, the Express.js LLM middleware by the NLUX team.

🎁 Themes & Extensions:

-

@nlux/themes― The defaultLunatheme and CSS styles. -

@nlux/markdown― Markdown stream parser to render markdown as it's being generated. -

@nlux/highlighter― Syntax highlighter based on Highlight.js.

Please visit each package's NPM page for information on how to use it.

The following design principles guide the development of NLUX:

-

Intuitive ― Interactions enabled by

NLUXshould be intuitive. Usage should unfold naturally without obstacles or friction. No teaching or thinking should be required to use UI built withNLUX. -

Performant ―

NLUXshould be as fast as possible. Fast to load, fast to render and update, fast to respond to user input. To achieve that, we should avoid unnecessary work, optimize for performance, minimize bundle size, and not depend on external libraries. -

Accessible ― UI built with

NLUXshould be accessible to everyone. It should be usable by people with disabilities, on various devices, in various environments, and using various input methods (keyboard, touch, voice). -

DX ―

NLUXrecognizes developers as first-class citizens. The library should enable an optimal DX (developer experience). It should be effortless to use, easy to understand, and simple to extend. Stellar documentation should be provided. The feature roadmap should evolve aligning to developer needs voiced.

Our mission is to enable developers to build outstanding LLM front-ends and applications, cross platforms, with a focus on performance and usability.

-

Star The Repo 🌟 ― If you like

NLUX, please star the repo to show your support.

Your support is what keeps this open-source project going 🧡 - GitHub Discussions ― Ask questions, report issues, and share your ideas with the community.

- Discord Community ― Join our Discord server to chat with the community and get support.

- docs.nlkit.com/nlux Developer Website ― Examples, learning resources, and API reference.

NLUX is licensed under Mozilla Public License Version 2.0 with restriction to use as

part of a training dataset to develop or improve AI models, or as an input for code

translation tools.

Paragraphs (3.6) and (3.7) were added to the original MPL 2.0 license.

The full license text can be found in the LICENSE file.

In a nutshell:

- You can use

NLUXin your personal projects. - You can use

NLUXin your commercial projects. - You can modify

NLUXand publish your changes under the same license. - You cannot use

NLUX's source code as dataset to train AI models, nor with code translation tools.

Wondering what it means to use software licensed under MPL 2.0? Learn more

on MPL 2.0 FAQ.

Please read the full license text in the LICENSE file for details.

This open-source project fits under the umbrella of NLKit, a suite of tools and libraries for

building conversational AI applications. NLUX is the first project in the NLKit suite, with more to come.

The project is being led by Salmen Hichri, a senior software engineer with over a decade of experience building user interfaces and developer tools at companies like Amazon and Goldman Sachs, and contributions to open-source projects.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for nlux

Similar Open Source Tools

nlux

NLUX is an open-source JavaScript and React JS library that simplifies the integration of powerful large language models (LLMs) like ChatGPT into web apps or websites. With just a few lines of code, users can add conversational AI capabilities and interact with their favorite LLM. The library offers features such as building AI chat interfaces in minutes, React components and hooks for easy integration, LLM adapters for various APIs, customizable assistant and user personas, streaming LLM output, custom renderers, high customizability, and zero dependencies. NLUX is designed with principles of intuitiveness, performance, accessibility, and developer experience in mind. The mission of NLUX is to enable developers to build outstanding LLM front-ends and applications with a focus on performance and usability.

nlux

nlux is an open-source Javascript and React JS library that makes it super simple to integrate powerful large language models (LLMs) like ChatGPT into your web app or website. With just a few lines of code, you can add conversational AI capabilities and interact with your favourite LLM.

spacy-llm

This package integrates Large Language Models (LLMs) into spaCy, featuring a modular system for **fast prototyping** and **prompting** , and turning unstructured responses into **robust outputs** for various NLP tasks, **no training data** required. It supports open-source LLMs hosted on Hugging Face 🤗: Falcon, Dolly, Llama 2, OpenLLaMA, StableLM, Mistral. Integration with LangChain 🦜️🔗 - all `langchain` models and features can be used in `spacy-llm`. Tasks available out of the box: Named Entity Recognition, Text classification, Lemmatization, Relationship extraction, Sentiment analysis, Span categorization, Summarization, Entity linking, Translation, Raw prompt execution for maximum flexibility. Soon: Semantic role labeling. Easy implementation of **your own functions** via spaCy's registry for custom prompting, parsing and model integrations. For an example, see here. Map-reduce approach for splitting prompts too long for LLM's context window and fusing the results back together

testzeus-hercules

Hercules is the world’s first open-source testing agent designed to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, making testing simple, reliable, and efficient. Hercules adapts to various platforms like Salesforce and is suitable for CI/CD pipelines. It aims to democratize and disrupt test automation, making top-tier testing accessible to everyone. The tool is transparent, reliable, and community-driven, empowering teams to deliver better software. Hercules offers multiple ways to get started, including using PyPI package, Docker, or building and running from source code. It supports various AI models, provides detailed installation and usage instructions, and integrates with Nuclei for security testing and WCAG for accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.

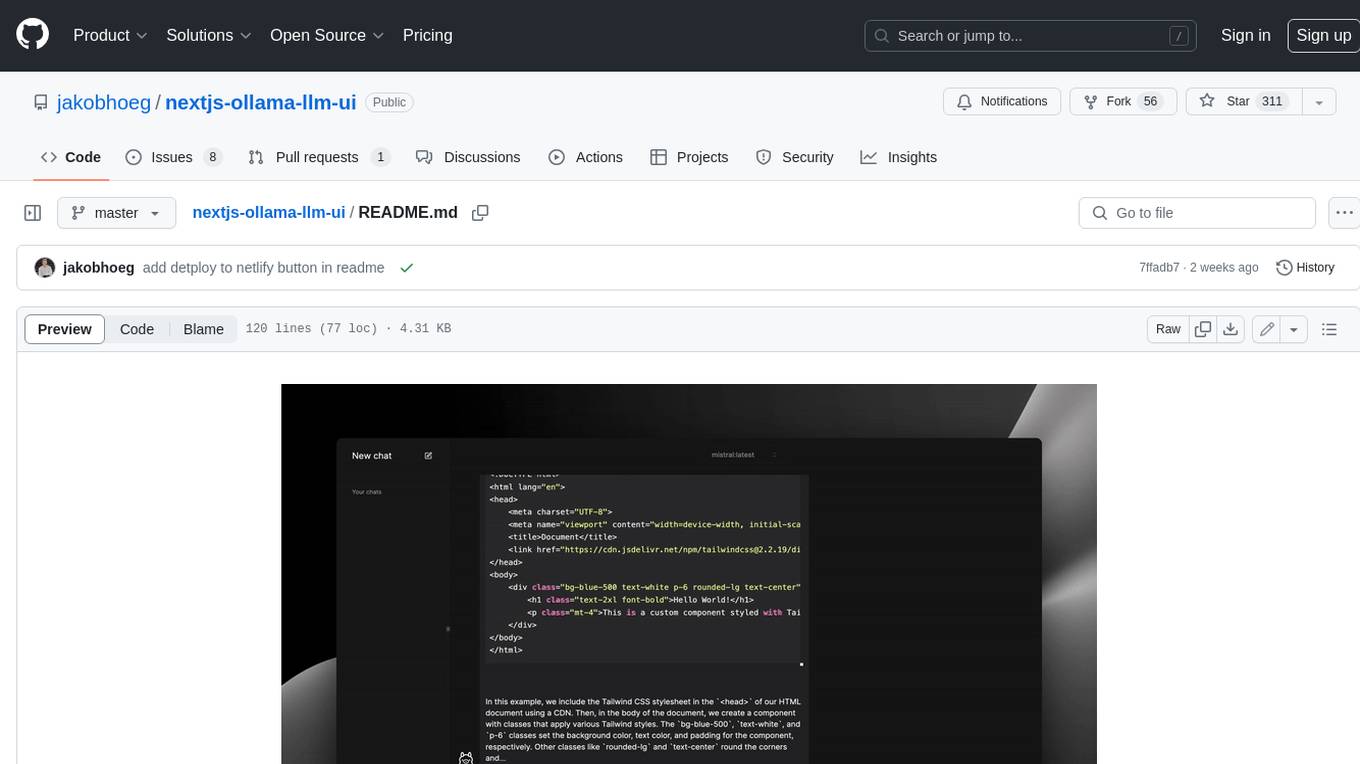

nextjs-ollama-llm-ui

This web interface provides a user-friendly and feature-rich platform for interacting with Ollama Large Language Models (LLMs). It offers a beautiful and intuitive UI inspired by ChatGPT, making it easy for users to get started with LLMs. The interface is fully local, storing chats in local storage for convenience, and fully responsive, allowing users to chat on their phones with the same ease as on a desktop. It features easy setup, code syntax highlighting, and the ability to easily copy codeblocks. Users can also download, pull, and delete models directly from the interface, and switch between models quickly. Chat history is saved and easily accessible, and users can choose between light and dark mode. To use the web interface, users must have Ollama downloaded and running, and Node.js (18+) and npm installed. Installation instructions are provided for running the interface locally. Upcoming features include the ability to send images in prompts, regenerate responses, import and export chats, and add voice input support.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

Loyal-Elephie

Embark on an exciting adventure with Loyal Elephie, your faithful AI sidekick! This project combines the power of a neat Next.js web UI and a mighty Python backend, leveraging the latest advancements in Large Language Models (LLMs) and Retrieval Augmented Generation (RAG) to deliver a seamless and meaningful chatting experience. Features include controllable memory, hybrid search, secure web access, streamlined LLM agent, and optional Markdown editor integration. Loyal Elephie supports both open and proprietary LLMs and embeddings serving as OpenAI compatible APIs.

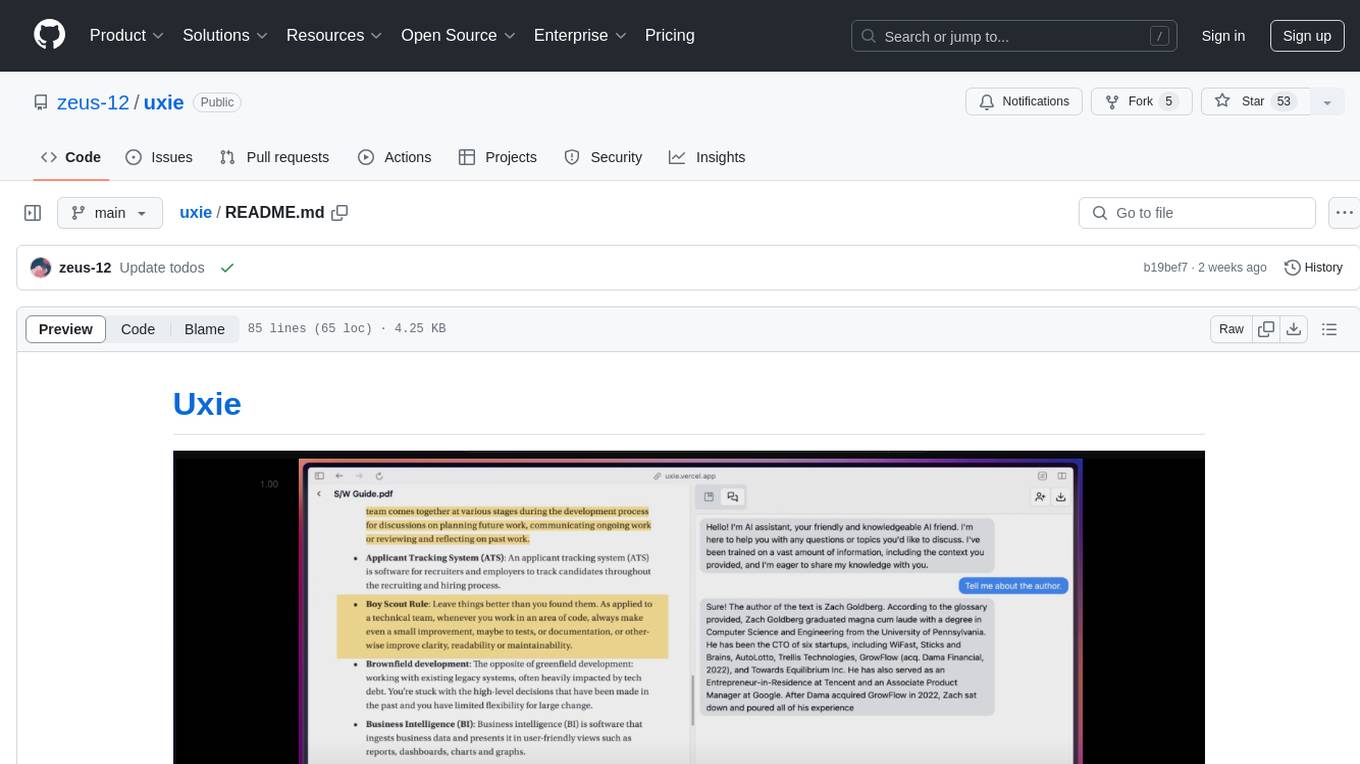

uxie

Uxie is a PDF reader app designed to revolutionize the learning experience. It offers features such as annotation, note-taking, collaboration tools, integration with LLM for enhanced learning, and flashcard generation with LLM feedback. Built using Nextjs, tRPC, Zod, TypeScript, Tailwind CSS, React Query, React Hook Form, Supabase, Prisma, and various other tools. Users can take notes, summarize PDFs, chat and collaborate with others, create custom blocks in the editor, and use AI-powered text autocompletion. The tool allows users to craft simple flashcards, test knowledge, answer questions, and receive instant feedback through AI evaluation.

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.

Sanmill

Sanmill is a free, powerful UCI-like N men's morris program with CUI, Flutter GUI and Qt GUI. Nine men's morris is a strategy board game for two players dating at least to the Roman Empire. The game is also known as nine-man morris , mill , mills , the mill game , merels , merrills , merelles , marelles , morelles , and ninepenny marl in English.

cognita

Cognita is an open-source framework to organize your RAG codebase along with a frontend to play around with different RAG customizations. It provides a simple way to organize your codebase so that it becomes easy to test it locally while also being able to deploy it in a production ready environment. The key issues that arise while productionizing RAG system from a Jupyter Notebook are: 1. **Chunking and Embedding Job** : The chunking and embedding code usually needs to be abstracted out and deployed as a job. Sometimes the job will need to run on a schedule or be trigerred via an event to keep the data updated. 2. **Query Service** : The code that generates the answer from the query needs to be wrapped up in a api server like FastAPI and should be deployed as a service. This service should be able to handle multiple queries at the same time and also autoscale with higher traffic. 3. **LLM / Embedding Model Deployment** : Often times, if we are using open-source models, we load the model in the Jupyter notebook. This will need to be hosted as a separate service in production and model will need to be called as an API. 4. **Vector DB deployment** : Most testing happens on vector DBs in memory or on disk. However, in production, the DBs need to be deployed in a more scalable and reliable way. Cognita makes it really easy to customize and experiment everything about a RAG system and still be able to deploy it in a good way. It also ships with a UI that makes it easier to try out different RAG configurations and see the results in real time. You can use it locally or with/without using any Truefoundry components. However, using Truefoundry components makes it easier to test different models and deploy the system in a scalable way. Cognita allows you to host multiple RAG systems using one app. ### Advantages of using Cognita are: 1. A central reusable repository of parsers, loaders, embedders and retrievers. 2. Ability for non-technical users to play with UI - Upload documents and perform QnA using modules built by the development team. 3. Fully API driven - which allows integration with other systems. > If you use Cognita with Truefoundry AI Gateway, you can get logging, metrics and feedback mechanism for your user queries. ### Features: 1. Support for multiple document retrievers that use `Similarity Search`, `Query Decompostion`, `Document Reranking`, etc 2. Support for SOTA OpenSource embeddings and reranking from `mixedbread-ai` 3. Support for using LLMs using `Ollama` 4. Support for incremental indexing that ingests entire documents in batches (reduces compute burden), keeps track of already indexed documents and prevents re-indexing of those docs.

deer-flow

DeerFlow is a community-driven Deep Research framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It supports FaaS deployment and one-click deployment based on Volcengine. The framework includes core capabilities like LLM integration, search and retrieval, RAG integration, MCP seamless integration, human collaboration, report post-editing, and content creation. The architecture is based on a modular multi-agent system with components like Coordinator, Planner, Research Team, and Text-to-Speech integration. DeerFlow also supports interactive mode, human-in-the-loop mechanism, and command-line arguments for customization.

craftium

Craftium is an open-source platform based on the Minetest voxel game engine and the Gymnasium and PettingZoo APIs, designed for creating fast, rich, and diverse single and multi-agent environments. It allows for connecting to Craftium's Python process, executing actions as keyboard and mouse controls, extending the Lua API for creating RL environments and tasks, and supporting client/server synchronization for slow agents. Craftium is fully extensible, extensively documented, modern RL API compatible, fully open source, and eliminates the need for Java. It offers a variety of environments for research and development in reinforcement learning.

hal-9100

This repository is now archived and the code is privately maintained. If you are interested in this infrastructure, please contact the maintainer directly.

lmql

LMQL is a programming language designed for large language models (LLMs) that offers a unique way of integrating traditional programming with LLM interaction. It allows users to write programs that combine algorithmic logic with LLM calls, enabling model reasoning capabilities within the context of the program. LMQL provides features such as Python syntax integration, rich control-flow options, advanced decoding techniques, powerful constraints via logit masking, runtime optimization, sync and async API support, multi-model compatibility, and extensive applications like JSON decoding and interactive chat interfaces. The tool also offers library integration, flexible tooling, and output streaming options for easy model output handling.

For similar tasks

pro-chat

ProChat is a components library focused on quickly building large language model chat interfaces. It empowers developers to create rich, dynamic, and intuitive chat interfaces with features like automatic chat caching, streamlined conversations, message editing tools, auto-rendered Markdown, and programmatic controls. The tool also includes design evolution plans such as customized dialogue rendering, enhanced request parameters, personalized error handling, expanded documentation, and atomic component design.

nlux

NLUX is an open-source JavaScript and React JS library that simplifies the integration of powerful large language models (LLMs) like ChatGPT into web apps or websites. With just a few lines of code, users can add conversational AI capabilities and interact with their favorite LLM. The library offers features such as building AI chat interfaces in minutes, React components and hooks for easy integration, LLM adapters for various APIs, customizable assistant and user personas, streaming LLM output, custom renderers, high customizability, and zero dependencies. NLUX is designed with principles of intuitiveness, performance, accessibility, and developer experience in mind. The mission of NLUX is to enable developers to build outstanding LLM front-ends and applications with a focus on performance and usability.

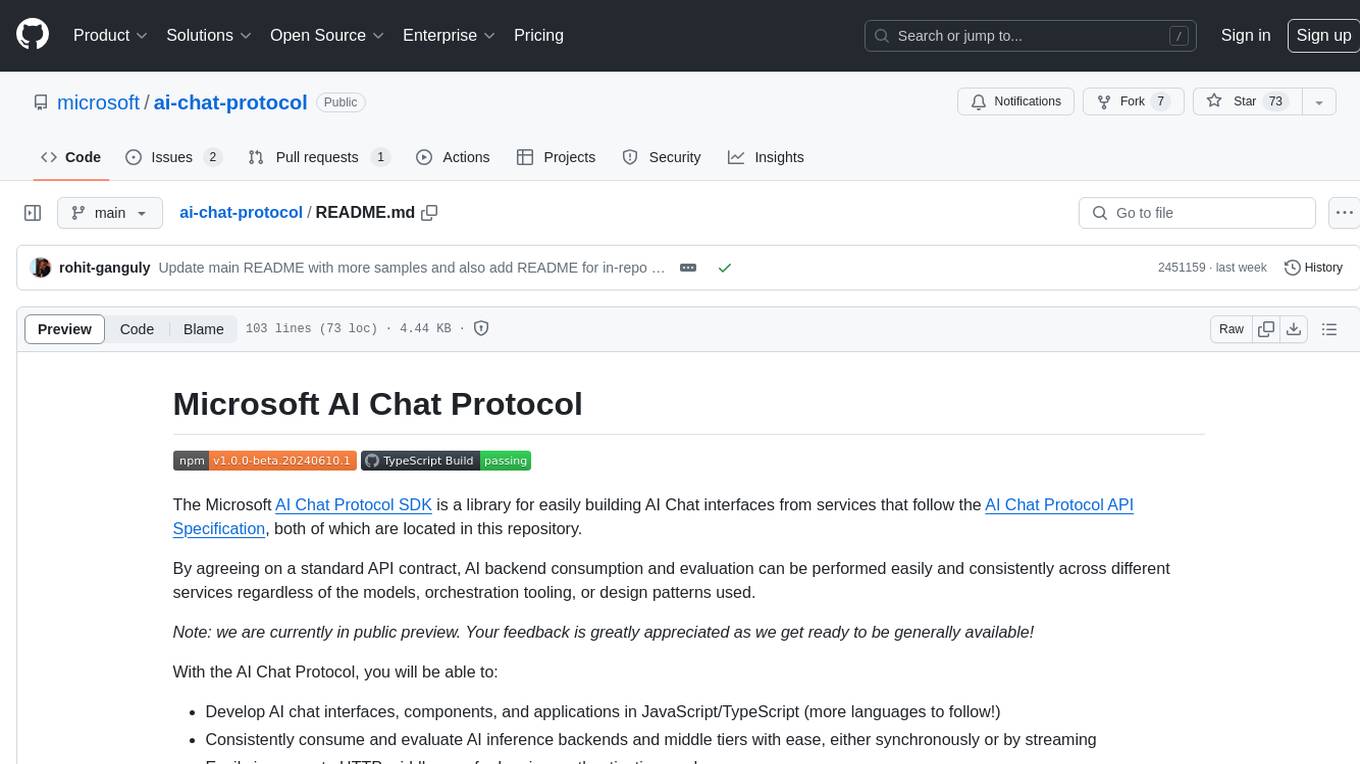

ai-chat-protocol

The Microsoft AI Chat Protocol SDK is a library for easily building AI Chat interfaces from services that follow the AI Chat Protocol API Specification. By agreeing on a standard API contract, AI backend consumption and evaluation can be performed easily and consistently across different services. It allows developers to develop AI chat interfaces, consume and evaluate AI inference backends, and incorporate HTTP middleware for logging and authentication.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.