inbox-zero

The world's best AI personal assistant for email. Open source app to help you reach inbox zero fast.

Stars: 10120

Inbox Zero is an open-source email app that helps you reach inbox zero fast with AI assistance. It offers various features such as a newsletter cleaner, AI assistant for auto-responding, archiving, labeling, and forwarding emails, a cold email blocker, email analytics, tracking of new senders and unreplied emails, and a large email finder to free up space. Inbox Zero is built with Next.js, Tailwind CSS, Prisma, Tinybird, Upstash, and Turbo.

README:

Organizes your inbox, pre-drafts replies, and tracks follow-ups, so you reach inbox zero faster. Open source alternative to Fyxer, but more customisable and secure.

Website · Discord · Issues

Inbox Zero aims to help you spend less time in your inbox, so you can focus on what matters most.

- AI Personal Assistant: Organizes your inbox and pre-drafts replies in your tone and style.

- Cursor Rules for email: Explain in plain English how your AI should handle your inbox.

- Reply Zero: Track emails to reply to and those awaiting responses.

- Bulk Unsubscriber: One-click unsubscribe and archive emails you never read.

- Bulk Archiver: Clean up your inbox by bulk archiving old emails.

- Cold Email Blocker: Auto‑block cold emails.

- Email Analytics: Track your activity and trends over time.

- Meeting Briefs: Get personalized briefings before every meeting, pulling context from your email and calendar.

- Smart Filing: Automatically save email attachments to Google Drive or OneDrive.

Learn more in our docs.

Screenshots (images not shown): AI Assistant, Reply Zero, Gmail client, Bulk Unsubscriber.

To request a feature, open a GitHub issue or join our Discord.

We offer a hosted version of Inbox Zero at getinboxzero.com.

The fastest way to self-host Inbox Zero is with the CLI:

npx @inbox-zero/cli setup # One-time setup wizard

npx @inbox-zero/cli start # Start containers

For complete self-hosting instructions, production deployment, OAuth setup, and configuration options, see our Self-Hosting Docs.

To set up a local development environment instead, clone the repository and run:

git clone https://github.com/elie222/inbox-zero.git

cd inbox-zero

docker compose -f docker-compose.dev.yml up -d # Postgres + Redis

pnpm install

npm run setup # Interactive env setup

cd apps/web && pnpm prisma migrate dev && cd ../..

pnpm dev

See the Contributing Guide for more details including devcontainer setup.

View open tasks in GitHub Issues and join our Discord to discuss what's being worked on.

Docker images are automatically built on every push to main and tagged with the commit SHA (e.g., elie222/inbox-zero:abc1234). The latest tag always points to the most recent main build. Formal releases use version tags (e.g., v2.26.0).
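As a minimal sketch of how the tagging scheme above maps to image references, the snippet below builds a reference for the moving `latest` tag and for a SHA-pinned build. The `image_ref` helper is hypothetical (it is not part of the project), and `abc1234` is the placeholder SHA from the example above, not a real commit.

```shell
#!/bin/sh
# Repository name taken from the example tag in the text above.
IMAGE_REPO="elie222/inbox-zero"

# image_ref is a hypothetical helper: it joins the repository name and a tag.
image_ref() {
  printf '%s:%s\n' "$IMAGE_REPO" "$1"
}

# Moving tag: points at the most recent main build and changes on every push.
image_ref latest

# Pinned tag: refers to one specific build (placeholder SHA, not a real commit).
image_ref abc1234

# To fetch an image, pass the reference to docker pull, for example:
#   docker pull "$(image_ref latest)"
```

Pinning to a commit SHA or a release tag keeps a deployment reproducible, while `latest` is convenient for trying out the newest build.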


Alternative AI tools for inbox-zero

Similar Open Source Tools


ChatGPT-Shortcut

ChatGPT Shortcut is an AI tool designed to maximize efficiency and productivity by providing a concise list of AI instructions. Users can easily find prompts suitable for various scenarios, boosting productivity and work efficiency. The tool offers one-click prompts, optimization for non-English languages, prompt saving and sharing, and a community voting system. It includes a browser extension compatible with Chrome, Edge, Firefox, and other Chromium-based browsers, as well as a Tampermonkey script for custom domain use. The tool is open-source, allowing users to modify the website's nomenclature, usage directives, and prompts for different languages.

auto-news

Auto-News is an automatic news aggregator that uses Large Language Models (LLMs) to pull information from various sources such as Tweets, RSS feeds, YouTube videos, web articles, Reddit, and journal notes. The tool aims to help users efficiently read and filter content based on personal interests, providing a unified reading experience and organizing information effectively. It features feed aggregation with summarization, transcript generation for videos and articles, noise reduction, task organization, and deep dive topic exploration. The tool supports multiple LLM backends, offers weekly top-k aggregations, and can be deployed on Linux/macOS using docker-compose or Kubernetes.

Everywhere

Everywhere is an interactive AI assistant with context-aware capabilities, featuring a sleek, modern UI and powerful integrated functionality. It instantly perceives and understands anything on your screen, providing seamless AI assistant support without the need for screenshots or app switching. The tool offers troubleshooting expertise, quick web summarization, instant translation, and email draft assistance. It supports LLMs from various providers, integrates with web browsers, file systems, terminals, and more, and provides an interactive experience with context-aware invocation, keyboard shortcuts, and markdown rendering. Everywhere is available on Windows and macOS, with Linux support coming soon. Language support includes Simplified Chinese, English, German, Spanish, French, Italian, Japanese, Korean, Russian, Turkish, Traditional Chinese, and Traditional Chinese (Hong Kong).

coreply

Coreply is an open-source Android app that provides texting suggestions while typing, enhancing the typing experience with intelligent, context-aware suggestions. It supports various texting apps and offers real-time AI suggestions, customizable LLM settings, and ensures no data collection. Users can install the app, configure it with an API key, and start receiving suggestions while typing in messaging apps. The tool supports different AI models from providers like OpenAI, Google AI Studio, Openrouter, Groq, and Codestral for chat completion and fill-in-the-middle tasks.

llm-x

LLM X is a ChatGPT-style UI for the niche group of folks who run Ollama (think of it as an offline ChatGPT server) locally. It supports sending and receiving images and text and works offline through PWA (Progressive Web App) standards. The project uses React, TypeScript, Lodash, MobX State Tree, Tailwind CSS, DaisyUI, NextUI, Highlight.js, React Markdown, kbar, Yet Another React Lightbox, Vite, and the Vite PWA plugin. It is inspired by the ollama-ui project and Perplexity.ai's UI advancements in the LLM UI space. The project is still under development, but it is already a great way to get started with building your own LLM UI.

postiz-app

Postiz is an AI social media scheduling tool that offers everything you need to manage your social media posts, build an audience, capture leads, and grow your business. It allows you to schedule posts with AI features, measure work with analytics, collaborate with team members, and invite others to comment and schedule posts. The tech stack includes NX (Monorepo), Next.js (React), NestJS, Prisma, Redis, and Resend for email notifications.

Open-Interface

Open Interface is self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the steps. It course-corrects by sending current screenshots to the language model. The tool supports macOS, Linux, and Windows, and requires an OpenAI API key for access to GPT-4V. It can automate tasks like creating meal plans, and it supports custom language model backends. Open Interface currently struggles with accurate spatial reasoning, keeping track of its position in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to enhance the tool's capabilities with better models trained on video walkthroughs. The tool is cost-effective, with user requests priced between $0.05 and $0.20, and offers features like interrupting the app and primary display visibility in multi-monitor setups.

mindpocket

MindPocket is a fully open-source, free, multi-platform, one-click deployable personal bookmark system with AI Agent integration. It organizes bookmarks with AI-powered RAG content summarization and automatic tag generation, making it easy to find and manage saved content. The project is built using VIBE CODING principles and offers features like zero cost deployment, one-click deploy setup, multi-platform support, AI enhancement for smart tagging and summarization, and full open-source accessibility for user data ownership. The tool is designed to provide a seamless bookmarking experience across web, mobile, and browser extension platforms.

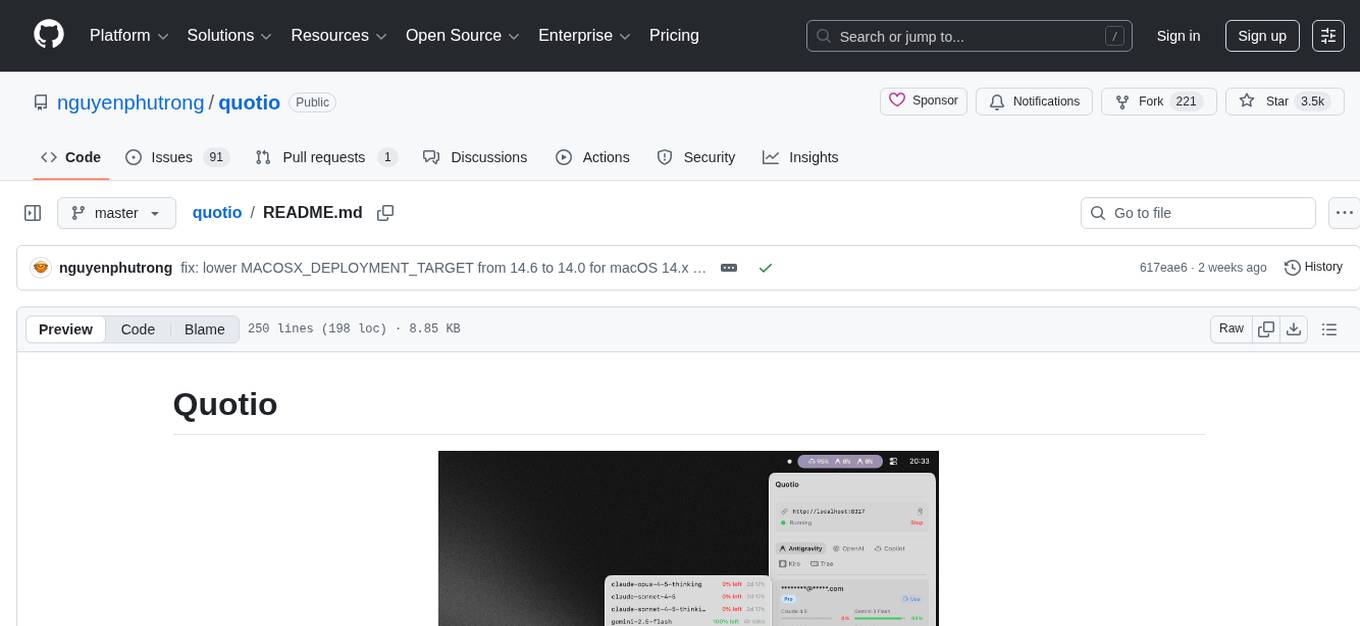

quotio

Quotio is a native macOS application designed as the ultimate command center for managing CLIProxyAPI, a local proxy server that powers AI coding agents. It allows users to connect multiple AI accounts, track quotas, configure CLI tools, and monitor request traffic in real-time. With features like multi-provider support, standalone quota mode, one-click agent configuration, real-time dashboard, smart quota management, API key management, menu bar integration, notifications, auto-update, and multilingual support, Quotio offers a comprehensive solution for AI coding assistants on macOS.

AgentGPT

AgentGPT is a platform that allows users to configure and deploy autonomous AI agents. Users can name their own custom AI and set it on any goal. The AI will think of tasks, execute them, and learn from the results to reach the goal. The platform provides a demo experience, automatic setup CLI, and a tech stack including Next.js, FastAPI, Prisma, TailwindCSS, Zod, and more. AgentGPT is designed to help users easily create and deploy AI agents for various tasks.

pipecat

Pipecat is an open-source framework designed for building generative AI voice bots and multimodal assistants. It provides code building blocks for interacting with AI services, creating low-latency data pipelines, and transporting audio, video, and events over the Internet. Pipecat supports various AI services like speech-to-text, text-to-speech, image generation, and vision models. Users can implement new services and contribute to the framework. Pipecat aims to simplify the development of applications like personal coaches, meeting assistants, customer support bots, and more by providing a complete framework for integrating AI services.

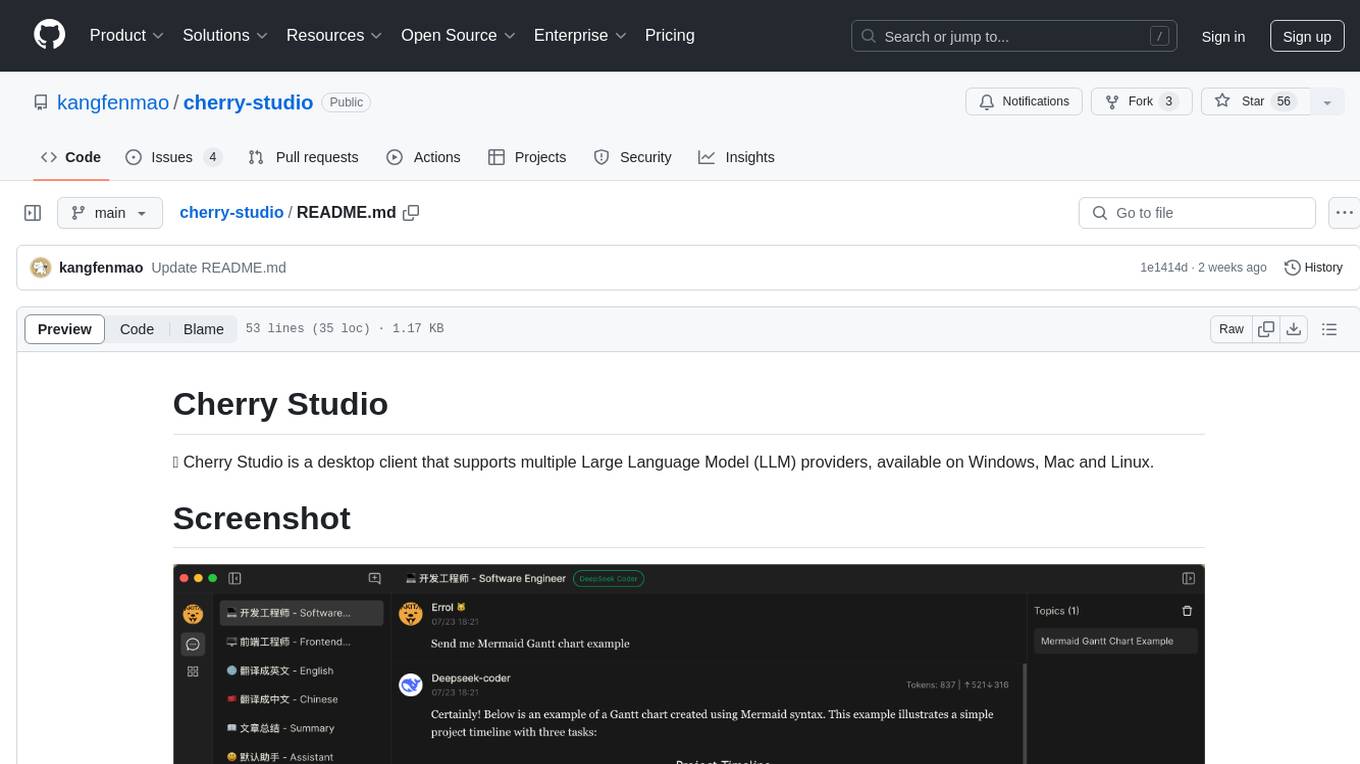

cherry-studio

Cherry Studio is a desktop client that supports multiple Large Language Model (LLM) providers, available on Windows, Mac, and Linux. It allows users to create multiple Assistants and topics, use multiple models to answer questions in the same conversation, and supports drag-and-drop sorting, code highlighting, and Mermaid charts. The tool is designed to enhance productivity and streamline the process of interacting with various language models.

growi

GROWI is a collaborative wiki platform that lets users create hierarchical pages with Markdown and edit them simultaneously with multiple people. It supports authentication with LDAP/Active Directory, OAuth, and SAML, integrates with Slack/Mattermost and IFTTT, and allows plugin customization. GROWI is Docker and Docker Compose ready, supports multiple sites and HTTPS with Let's Encrypt proxy integration, and offers migration guides for on-premise installations. The tool is built with Node.js, npm, pnpm, and Turborepo, and requires MongoDB, with optional dependencies on Redis and ElasticSearch for full-text search functionality.

aide

Aide is a Visual Studio Code extension that offers AI-powered features to help users master any code. It provides functionalities such as code conversion between languages, code annotation for readability, quick copying of files/folders as AI prompts, executing custom AI commands, defining prompt templates, multi-file support, setting keyboard shortcuts, and more. Users can enhance their productivity and coding experience by leveraging Aide's intelligent capabilities.

macai

Macai is a native macOS client for interacting with modern AI tools, such as ChatGPT and Ollama. It features organized chats with custom system messages, system-defined light/dark themes, backup and restore functionality, customizable context size, support for any model with a compatible API, formatted code blocks and tables, multiple chat tabs, CoreData data storage, streamed responses, and automatic chat name generation. Macai is in active development, with contributions welcome.
