gemini-pro-bot

A Python Telegram bot powered by Google's gemini-pro LLM API

Stars: 130

This Python Telegram bot utilizes Google's `gemini-pro` LLM API to generate creative text formats based on user input. It's designed to be an engaging and interactive way to explore the capabilities of large language models. Key features include generating various text formats like poems, code, scripts, and musical pieces. The bot supports real-time streaming of the generation process, allowing users to witness the text unfold. Additionally, it can respond to messages with Bard's creative output and handle image-based inputs for multimodal responses. User authentication is optional, and the bot can be easily integrated with Docker or installed via pipenv.

README:

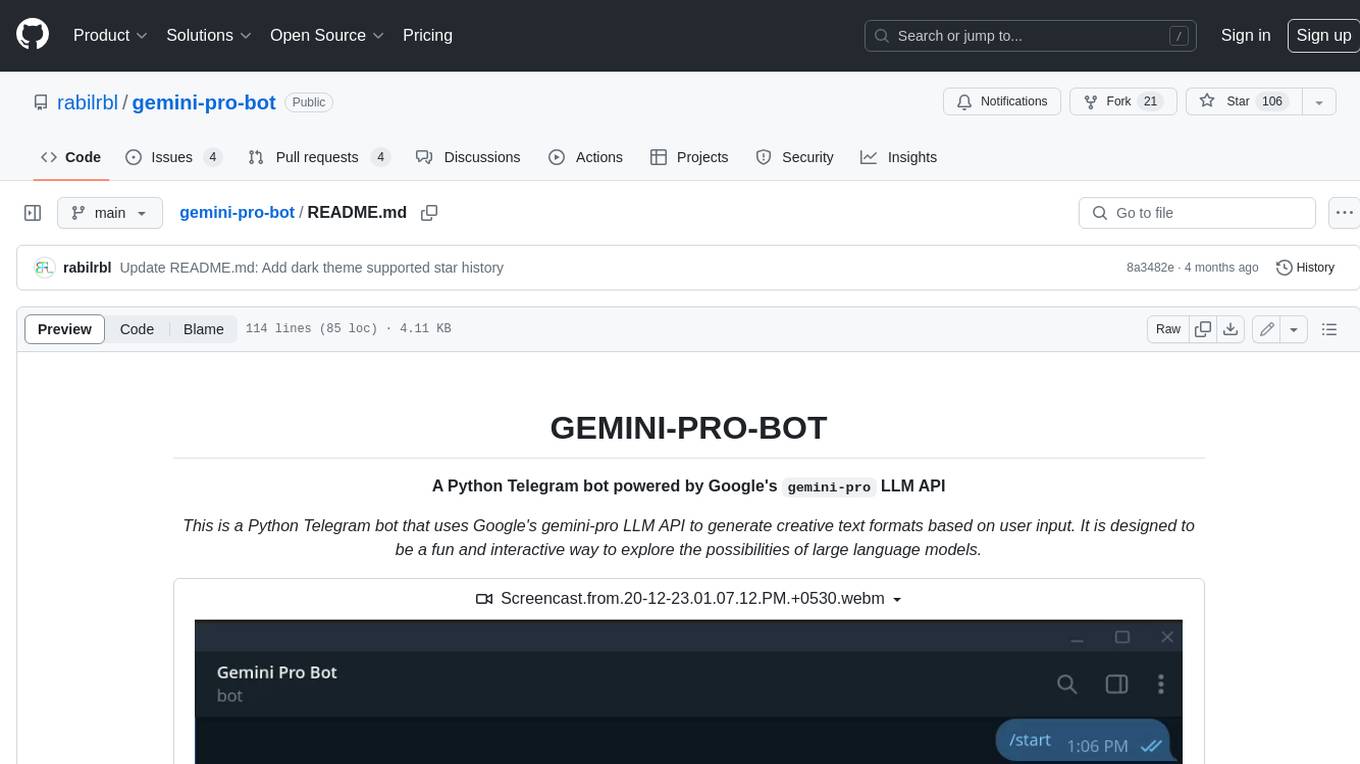

A Python Telegram bot powered by Google's gemini-pro LLM API

This is a Python Telegram bot that uses Google's gemini-pro LLM API to generate creative text formats based on user input. It is designed to be a fun and interactive way to explore the possibilities of large language models.

- Generate creative text formats like poems, code, scripts, musical pieces, etc.

- Stream the generation process, so you can see the text unfold in real-time.

- Reply to your messages with Bard's creative output.

- Easy to use with simple commands:

-

/start: Greet the bot and get started. -

/help: Get information about the bot's capabilities.

-

- Send any text message to trigger the generation process.

- Send any image with captions to generate responses based on the image. (Multi-modal support)

- User authentication to prevent unauthorized access by setting

AUTHORIZED_USERSin the.envfile (optional).

- Python 3.10+

- Telegram Bot API token

- Google

gemini-proAPI key - dotenv (for environment variables)

Simply run the following command to run the pre-built image from GitHub Container Registry:

docker run --env-file .env ghcr.io/rabilrbl/gemini-pro-bot:latestUpdate the image with:

docker pull ghcr.io/rabilrbl/gemini-pro-bot:latestBuild the image with:

docker build -t gemini-pro-bot .Once the image is built, you can run it with:

docker run --env-file .env gemini-pro-bot- Clone this repository.

- Install the required dependencies:

-

pipenv install(if using pipenv) -

pip install -r requirements.txt(if not using pipenv)

-

- Create a

.envfile and add the following environment variables:-

BOT_TOKEN: Your Telegram Bot API token. You can get one by talking to @BotFather. -

GOOGLE_API_KEY: Your Google Bard API key. You can get one from Google AI Studio. -

AUTHORIZED_USERS: A comma-separated list of Telegram usernames or user IDs that are authorized to access the bot. (optional) Example value:shonan23,1234567890

-

- Run the bot:

-

python main.py(if not using pipenv) -

pipenv run python main.py(if using pipenv)

-

- Start the bot by running the script.

python main.py

- Open the bot in your Telegram chat.

- Send any text message to the bot.

- The bot will generate creative text formats based on your input and stream the results back to you.

- If you want to restrict public access to the bot, you can set

AUTHORIZED_USERSin the.envfile to a comma-separated list of Telegram user IDs. Only these users will be able to access the bot. Example:AUTHORIZED_USERS=shonan23,1234567890

| Command | Description |

|---|---|

/start |

Greet the bot and get started. |

/help |

Get information about the bot's capabilities. |

/new |

Start a new chat session. |

We welcome contributions to this project. Please feel free to fork the repository and submit pull requests.

This bot is still under development and may sometimes provide nonsensical or inappropriate responses. Use it responsibly and have fun!

This is a free and open-source project released under the GNU Affero General Public License v3.0 license. See the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for gemini-pro-bot

Similar Open Source Tools

gemini-pro-bot

This Python Telegram bot utilizes Google's `gemini-pro` LLM API to generate creative text formats based on user input. It's designed to be an engaging and interactive way to explore the capabilities of large language models. Key features include generating various text formats like poems, code, scripts, and musical pieces. The bot supports real-time streaming of the generation process, allowing users to witness the text unfold. Additionally, it can respond to messages with Bard's creative output and handle image-based inputs for multimodal responses. User authentication is optional, and the bot can be easily integrated with Docker or installed via pipenv.

sandbox

Sandbox is an open-source cloud-based code editing environment with custom AI code autocompletion and real-time collaboration. It consists of a frontend built with Next.js, TailwindCSS, Shadcn UI, Clerk, Monaco, and Liveblocks, and a backend with Express, Socket.io, Cloudflare Workers, D1 database, R2 storage, Workers AI, and Drizzle ORM. The backend includes microservices for database, storage, and AI functionalities. Users can run the project locally by setting up environment variables and deploying the containers. Contributions are welcome following the commit convention and structure provided in the repository.

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

ComfyUI

ComfyUI is a powerful and modular visual AI engine and application that allows users to design and execute advanced stable diffusion pipelines using a graph/nodes/flowchart based interface. It provides a user-friendly environment for creating complex Stable Diffusion workflows without the need for coding. ComfyUI supports various models for image editing, video processing, audio manipulation, 3D modeling, and more. It offers features like smart memory management, support for different GPU types, loading and saving workflows as JSON files, and offline functionality. Users can also use API nodes to access paid models from external providers through the online Comfy API.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

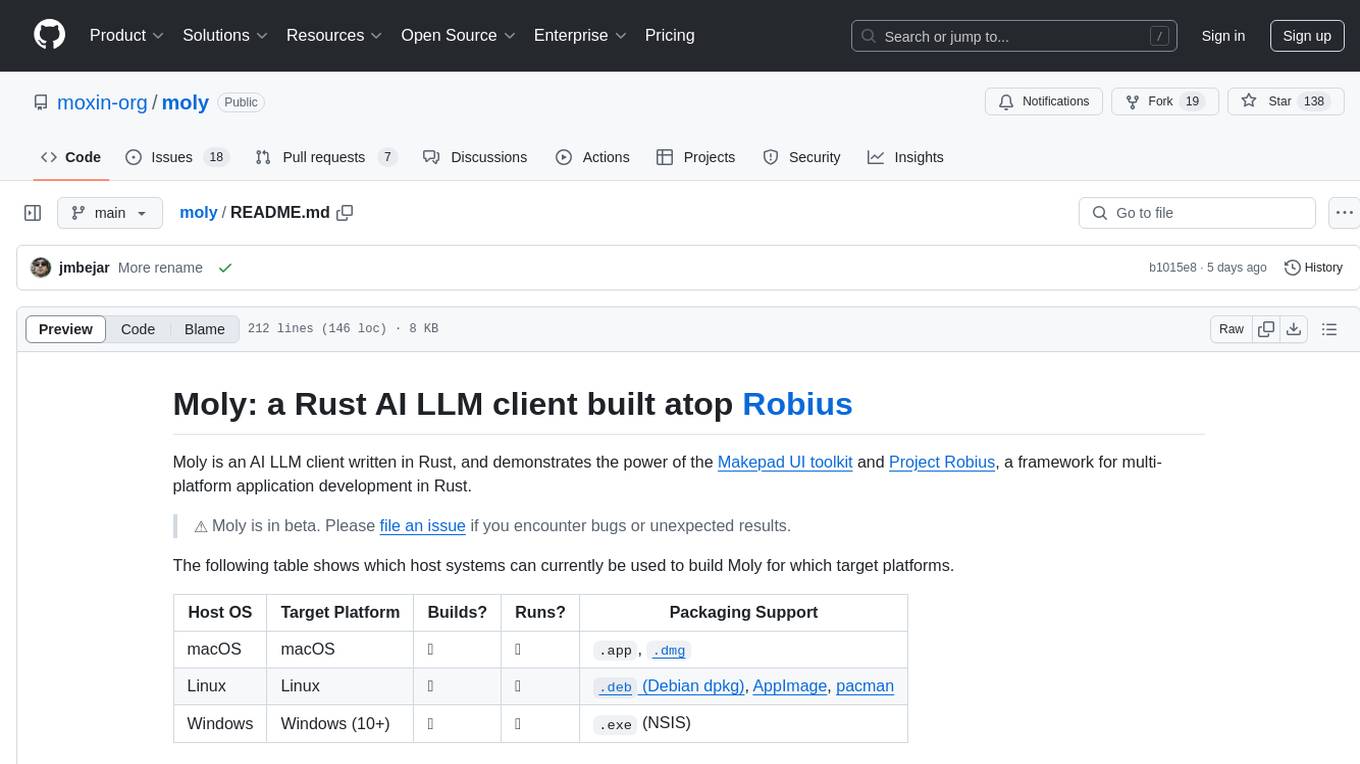

moly

Moly is an AI LLM client written in Rust, showcasing the capabilities of the Makepad UI toolkit and Project Robius, a framework for multi-platform application development in Rust. It is currently in beta, allowing users to build and run Moly on macOS, Linux, and Windows. The tool provides packaging support for different platforms, such as `.app`, `.dmg`, `.deb`, AppImage, pacman, and `.exe` (NSIS). Users can easily set up WasmEdge using `moly-runner` and leverage `cargo` commands to build and run Moly. Additionally, Moly offers pre-built releases for download and supports packaging for distribution on Linux, Windows, and macOS.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

cortex

Nitro is a high-efficiency C++ inference engine for edge computing, powering Jan. It is lightweight and embeddable, ideal for product integration. The binary of nitro after zipped is only ~3mb in size with none to minimal dependencies (if you use a GPU need CUDA for example) make it desirable for any edge/server deployment.

raycast_api_proxy

The Raycast AI Proxy is a tool that acts as a proxy for the Raycast AI application, allowing users to utilize the application without subscribing. It intercepts and forwards Raycast requests to various AI APIs, then reformats the responses for Raycast. The tool supports multiple AI providers and allows for custom model configurations. Users can generate self-signed certificates, add them to the system keychain, and modify DNS settings to redirect requests to the proxy. The tool is designed to work with providers like OpenAI, Azure OpenAI, Google, and more, enabling tasks such as AI chat completions, translations, and image generation.

deaddit

Deaddit is a project showcasing an AI-filled internet platform similar to Reddit. All content, including subdeaddits, posts, and comments, is generated by AI algorithms. Users can interact with AI-generated content and explore a simulated social media experience. The project provides a demonstration of how AI can be used to create online content and simulate user interactions in a virtual community.

mycoder

An open-source mono-repository containing the MyCoder agent and CLI. It leverages Anthropic's Claude API for intelligent decision making, has a modular architecture with various tool categories, supports parallel execution with sub-agents, can modify code by writing itself, features a smart logging system for clear output, and is human-compatible using README.md, project files, and shell commands to build its own context.

mark

Mark is a CLI tool that allows users to interact with large language models (LLMs) using Markdown format. It enables users to seamlessly integrate GPT responses into Markdown files, supports image recognition, scraping of local and remote links, and image generation. Mark focuses on using Markdown as both a prompt and response medium for LLMs, offering a unique and flexible way to interact with language models for various use cases in development and documentation processes.

pacha

Pacha is an AI tool designed for retrieving context for natural language queries using a SQL interface and Python programming environment. It is optimized for working with Hasura DDN for multi-source querying. Pacha is used in conjunction with language models to produce informed responses in AI applications, agents, and chatbots.

svelte-bench

SvelteBench is an LLM benchmark tool for evaluating Svelte components generated by large language models. It supports multiple LLM providers such as OpenAI, Anthropic, Google, and OpenRouter. Users can run predefined test suites to verify the functionality of the generated components. The tool allows configuration of API keys for different providers and offers debug mode for faster development. Users can provide a context file to improve component generation. Benchmark results are saved in JSON format for analysis and visualization.

For similar tasks

gemini-pro-bot

This Python Telegram bot utilizes Google's `gemini-pro` LLM API to generate creative text formats based on user input. It's designed to be an engaging and interactive way to explore the capabilities of large language models. Key features include generating various text formats like poems, code, scripts, and musical pieces. The bot supports real-time streaming of the generation process, allowing users to witness the text unfold. Additionally, it can respond to messages with Bard's creative output and handle image-based inputs for multimodal responses. User authentication is optional, and the bot can be easily integrated with Docker or installed via pipenv.

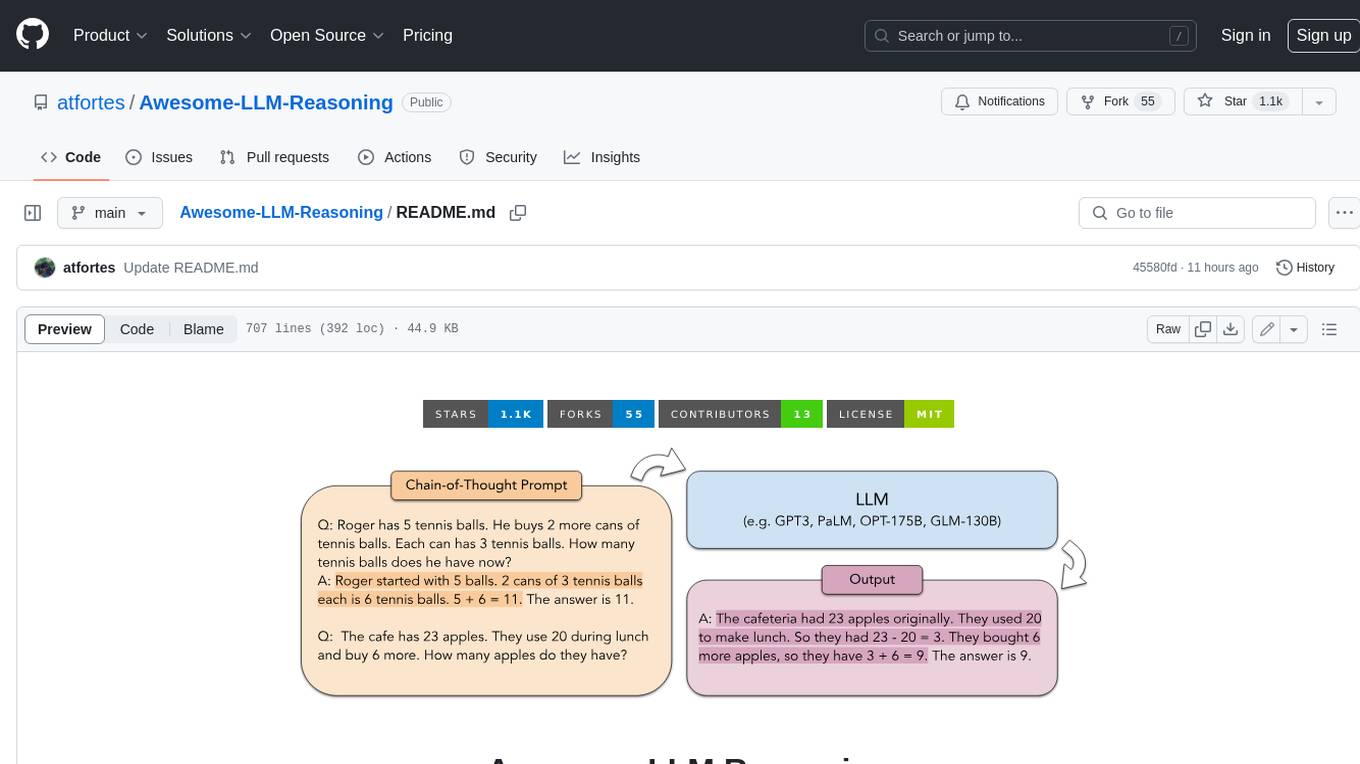

Awesome-LLM-Reasoning

**Curated collection of papers and resources on how to unlock the reasoning ability of LLMs and MLLMs.** **Description in less than 400 words, no line breaks and quotation marks.** Large Language Models (LLMs) have revolutionized the NLP landscape, showing improved performance and sample efficiency over smaller models. However, increasing model size alone has not proved sufficient for high performance on challenging reasoning tasks, such as solving arithmetic or commonsense problems. This curated collection of papers and resources presents the latest advancements in unlocking the reasoning abilities of LLMs and Multimodal LLMs (MLLMs). It covers various techniques, benchmarks, and applications, providing a comprehensive overview of the field. **5 jobs suitable for this tool, in lowercase letters.** - content writer - researcher - data analyst - software engineer - product manager **Keywords of the tool, in lowercase letters.** - llm - reasoning - multimodal - chain-of-thought - prompt engineering **5 specific tasks user can use this tool to do, in less than 3 words, Verb + noun form, in daily spoken language.** - write a story - answer a question - translate a language - generate code - summarize a document

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

Linly-Talker

Linly-Talker is an innovative digital human conversation system that integrates the latest artificial intelligence technologies, including Large Language Models (LLM) 🤖, Automatic Speech Recognition (ASR) 🎙️, Text-to-Speech (TTS) 🗣️, and voice cloning technology 🎤. This system offers an interactive web interface through the Gradio platform 🌐, allowing users to upload images 📷 and engage in personalized dialogues with AI 💬.

simplemind

Simplemind is an AI library designed to simplify the experience with AI APIs in Python. It provides easy-to-use AI tools with a human-centered design and minimal configuration. Users can tap into powerful AI capabilities through simple interfaces, without needing to be experts. The library supports various APIs from different providers/models and offers features like text completion, streaming text, structured data handling, conversational AI, tool calling, and logging. Simplemind aims to make AI models accessible to all by abstracting away complexity and prioritizing readability and usability.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.