chatgpt-cli

ChatGPT CLI is a versatile tool for interacting with LLMs through OpenAI, Azure, and other popular providers like Perplexity AI and Llama. It supports prompt files, history tracking, and live data injection via MCP (Model Context Protocol), making it ideal for both casual users and developers seeking a powerful, customizable GPT experience.

Stars: 804

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

README:

Tested and Compatible with OpenAI ChatGPT, Azure OpenAI Service, Perplexity AI, Llama and 302.AI!

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure, featuring streaming capabilities and extensive configuration options.

- Features

- Installation

- Getting Started

- Configuration

- Markdown Rendering

- Development

- Reporting Issues and Contributing

- Uninstallation

- Useful Links

- Additional Resources

- Streaming mode: Real-time interaction with the GPT model.

- Query mode: Single input-output interactions with the GPT model.

- Interactive mode: The interactive mode allows for a more conversational experience with the model. Prints the token usage when combined with query mode.

- Thread-based context management: Enjoy seamless conversations with the GPT model with individualized context for each thread, much like your experience on the OpenAI website. Each unique thread has its own history, ensuring relevant and coherent responses across different chat instances.

- Sliding window history: To stay within token limits, the chat history automatically trims while still preserving the necessary context. The size of this window can be adjusted through the context-window setting.

- Custom context from any source: You can provide the GPT model with a custom context during conversation. This context can be piped in from any source, such as local files, standard input, or even another program. This flexibility allows the model to adapt to a wide range of conversational scenarios.

- Support for images: Upload an image or provide an image URL using the --image flag. Note that image support may not be available for all models. You can also pipe an image directly:

  pngpaste - | chatgpt "What is this photo?"

- Generate images: Use the --draw and --output flags to generate an image from a prompt (requires image-capable models like gpt-image-1).

- Edit images: Use the --draw flag with --image and --output to modify an existing image using a prompt (e.g., "add sunglasses to the cat"). Supported formats: PNG, JPEG, and WebP.

- Audio support: You can upload audio files using the --audio flag to ask questions about spoken content. This feature is compatible only with audio-capable models like gpt-4o-audio-preview. Currently, only .mp3 and .wav formats are supported.

- Transcription support: You can also use the --transcribe flag to generate a transcript of the uploaded audio. This uses OpenAI’s transcription endpoint (compatible with models like gpt-4o-transcribe) and supports a wider range of formats, including .mp3, .mp4, .mpeg, .mpga, .m4a, .wav, and .webm.

- Text-to-speech support: Use the --speak and --output flags to convert text to speech (works with models like gpt-4o-mini-tts). If you have afplay installed (macOS), you can even chain playback like this:

  chatgpt --speak "convert this to audio" --output test.mp3 && afplay test.mp3

- Model listing: Access a list of available models using the -l or --list-models flag.

- Advanced configuration options: The CLI supports a layered configuration system where settings can be specified through default values, a config.yaml file, and environment variables. For quick adjustments, various --set-<value> flags are provided. To verify your current settings, use the --config or -c flag.

We’re excited to introduce support for prompt files with the --prompt flag in version 1.7.1! This feature allows you to provide a rich and detailed context for your conversations directly from a file.

The --prompt flag lets you specify a file containing the initial context or instructions for your ChatGPT conversation. This is especially useful when you have detailed instructions or context that you want to reuse across different conversations.

To use the --prompt flag, pass the path of your prompt file like this:

chatgpt --prompt path/to/your/prompt.md "Use a pipe or provide a query here"

The contents of prompt.md will be read and used as the initial context for the conversation, while the query you provide directly will serve as the specific question or task you want to address.

Here’s a fun example where you can use the output of a git diff command as a prompt:

git diff | chatgpt --prompt ../prompts/write_pull-request.md

In this example, the content from the write_pull-request.md prompt file is used to guide the model's response based on the diff data from git diff.

For a variety of ready-to-use prompts, check out this awesome prompts repository. These can serve as great starting points or inspiration for your own custom prompts!


We’re excited to introduce Model Context Protocol (MCP) support in version 1.8.3+, allowing you to enrich your chat sessions with structured, live data. For now, this feature is limited to Apify integrations.

MCP enables the CLI to call external plugins — like Apify actors — and inject their responses into the chat context before your actual query is sent. This is useful for fetching weather, scraping Google Maps, or summarizing PDFs.

You can use either --param (for individual key=value pairs) or --params (for raw JSON).

Using --param flags:

chatgpt --mcp apify/epctex~weather-scraper \
  --param locations='["Brooklyn"]' \
  --param language=en \
  --param forecasts=true \
  "what should I wear today"

Using a single --params flag:

chatgpt --mcp apify/epctex~weather-scraper \
  --params '{"locations": ["Brooklyn"], "language": "en", "forecasts": true}' \
  "what should I wear today"

If no version is specified, @latest is assumed:

chatgpt --mcp apify/user~weather

is equivalent to:

chatgpt --mcp apify/user~weather@latest

Responses from MCP plugins are automatically injected into the conversation thread as context. You can use MCP in two different modes:

- MCP-only mode (Context Injection Only)

  chatgpt --mcp apify/epctex~weather-scraper --param location=Brooklyn

  - Fetches live data

  - Injects it into the current thread

  - Does not trigger a GPT completion

  - CLI prints a confirmation

- MCP + Query mode (Context + Completion)

  chatgpt --mcp apify/epctex~weather-scraper --param location=Brooklyn "What should I wear today?"

  - Fetches and injects MCP data

  - Immediately sends your query to GPT

  - Returns the assistant’s response
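The @latest defaulting rule described above can be sketched as a small shell helper. This only illustrates the documented rule and is not the CLI's own parsing code; the function name normalize_mcp_ref is hypothetical.

```shell
# Hypothetical helper: append "@latest" when an MCP reference carries no version.
# Illustrates the documented rule only; the CLI's real parsing may differ.
normalize_mcp_ref() {
  case "$1" in
    *@*) printf '%s\n' "$1" ;;          # a version suffix is already present
    *)   printf '%s@latest\n' "$1" ;;   # no version given: assume @latest
  esac
}

normalize_mcp_ref "apify/user~weather"          # prints apify/user~weather@latest
normalize_mcp_ref "apify/user~weather@latest"   # prints apify/user~weather@latest
```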

You’ll need to set the APIFY_API_KEY as an environment variable or config value.

Example:

export APIFY_API_KEY=your-api-key

You can install chatgpt-cli using Homebrew:

brew tap kardolus/chatgpt-cli && brew install chatgpt-cli

For a quick and easy installation without compiling, you can directly download the pre-built binary for your operating system and architecture:

- macOS (arm64):

  curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-darwin-arm64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

- macOS (amd64):

  curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-darwin-amd64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

- Linux (amd64):

  curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-linux-amd64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

- Linux (arm64):

  curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-linux-arm64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

- Linux (386):

  curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-linux-386 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

- FreeBSD (amd64):

  curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-freebsd-amd64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

- FreeBSD (arm64):

  curl -L -o chatgpt https://github.com/kardolus/chatgpt-cli/releases/latest/download/chatgpt-freebsd-arm64 && chmod +x chatgpt && sudo mv chatgpt /usr/local/bin/

- Windows: Download the binary from this link and add it to your PATH.

Choose the appropriate command for your system, which will download the binary, make it executable, and move it to your /usr/local/bin directory (or %PATH% on Windows) for easy access.
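Picking the right download can be scripted. The sketch below maps an operating system and CPU architecture (as reported by uname) to one of the asset names that appear in the download URLs above. The function name release_asset and the architecture aliases it accepts are assumptions for illustration, not part of the project.

```shell
# Hypothetical helper: map OS and CPU architecture to a release asset name.
# The asset names come from the download URLs listed above.
release_asset() {
  local os="$1" arch="$2"
  os=$(printf '%s' "$os" | tr '[:upper:]' '[:lower:]')   # e.g. Darwin -> darwin
  case "$arch" in
    x86_64|amd64)  arch=amd64 ;;
    aarch64|arm64) arch=arm64 ;;
    i386|i686)     arch=386 ;;
    *) echo "unsupported architecture: $arch" >&2; return 1 ;;
  esac
  case "$os-$arch" in
    darwin-amd64|darwin-arm64|linux-amd64|linux-arm64|linux-386|freebsd-amd64|freebsd-arm64)
      printf 'chatgpt-%s-%s\n' "$os" "$arch" ;;
    *) echo "no pre-built binary listed for $os-$arch" >&2; return 1 ;;
  esac
}

# Print the download URL for the current machine, if a binary is listed for it.
asset=$(release_asset "$(uname -s)" "$(uname -m)") &&
  echo "https://github.com/kardolus/chatgpt-cli/releases/latest/download/$asset"
```

The printed URL can then be passed to the same curl, chmod, and mv steps shown above.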

- Set the OPENAI_API_KEY environment variable to your ChatGPT secret key. To set the environment variable, you can add the following line to your shell profile (e.g., ~/.bashrc, ~/.zshrc, or ~/.bash_profile), replacing your_api_key with your actual key:

  export OPENAI_API_KEY="your_api_key"

- To enable history tracking across CLI calls, create a ~/.chatgpt-cli directory using the command:

  mkdir -p ~/.chatgpt-cli

  Once this directory is in place, the CLI automatically manages the message history for each "thread" you converse with. The history operates like a sliding window, maintaining context up to a configurable token maximum. This ensures a balance between maintaining conversation context and achieving optimal performance.

  By default, if a specific thread is not provided by the user, the CLI uses the default thread and stores the history at ~/.chatgpt-cli/history/default.json. You can find more details about how to configure the thread parameter in the Configuration section of this document.

- Try it out:

  chatgpt what is the capital of the Netherlands

- To start interactive mode, use the -i or --interactive flag:

  chatgpt --interactive

  If you want the CLI to automatically create a new thread for each session, ensure that the auto_create_new_thread configuration variable is set to true. This will create a unique thread identifier for each interactive session.

- To use the pipe feature, create a text file containing some context. For example, create a file named context.txt with the following content:

  Kya is a playful dog who loves swimming and playing fetch.

  Then, use the pipe feature to provide this context to ChatGPT:

  cat context.txt | chatgpt "What kind of toy would Kya enjoy?"

- To list all available models, use the -l or --list-models flag:

  chatgpt --list-models

- For more options, see:

  chatgpt --help

The ChatGPT CLI adopts a four-tier configuration strategy, with different levels of precedence assigned to flags, environment variables, a config.yaml file, and default values, in that respective order:

- Flags: Command-line flags have the highest precedence. Any value provided through a flag will override other configurations.

- Environment Variables: If a setting is not specified by a flag, the corresponding environment variable (prefixed with the name field from the config) will be checked.

- Config file (config.yaml): If neither a flag nor an environment variable is set, the value from the config.yaml file will be used.

- Default Values: If no value is specified through flags, config.yaml, or environment variables, the CLI will fall back to its built-in default values.
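The precedence order above amounts to "take the first source that provides a value". The sketch below models that idea with a hypothetical resolve_setting function; it is not how the CLI is implemented, and it simplifies by treating an empty string as "not set".

```shell
# Illustrative only: return the first non-empty value, given the candidates in
# precedence order (flag, environment variable, config.yaml, built-in default).
resolve_setting() {
  local value
  for value in "$@"; do
    if [ -n "$value" ]; then
      printf '%s\n' "$value"
      return 0
    fi
  done
  return 1   # nothing was set at any tier
}

# Hypothetical inputs: no flag given, config.yaml sets a model, default is gpt-4o.
flag_model=""
file_model="gpt-3.5-turbo-16k"
default_model="gpt-4o"
resolve_setting "$flag_model" "${OPENAI_MODEL:-}" "$file_model" "$default_model"
```

With OPENAI_MODEL unset, the call falls through to the config.yaml value; exporting OPENAI_MODEL would take priority over it, and a non-empty flag value would override both.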

| Variable | Description | Default |
|---|---|---|
| name | The prefix for environment variable overrides. | 'openai' |
| thread | The name of the current chat thread. Each unique thread name has its own context. | 'default' |
| target | Load configuration from config.<target>.yaml. | '' |
| omit_history | If true, the chat history will not be used to provide context for the GPT model. | false |
| command_prompt | The command prompt in interactive mode. Should be single-quoted. | '[%datetime] [Q%counter]' |
| output_prompt | The output prompt in interactive mode. Should be single-quoted. | '' |
| command_prompt_color | The color of the command_prompt in interactive mode. Supported colors: "red", "green", "blue", "yellow", "magenta". | '' |
| output_prompt_color | The color of the output_prompt in interactive mode. Supported colors: "red", "green", "blue", "yellow", "magenta". | '' |
| auto_create_new_thread | If set to true, a new thread with a unique identifier (e.g., int_a1b2) will be created for each interactive session. If false, the CLI will use the thread specified by the thread parameter. | false |
| track_token_usage | If set to true, displays the total token usage after each query in --query mode, helping you monitor API usage. | false |
| debug | If set to true, prints the raw request and response data during API calls, useful for debugging. | false |
| skip_tls_verify | If set to true, skips TLS certificate verification, allowing insecure HTTPS requests. | false |
| multiline | If set to true, enables multiline input mode in interactive sessions. | false |
| role_file | Path to a file that overrides the system role (role). | '' |
| prompt | Path to a file that provides additional context before the query. | '' |
| image | Local path or URL to an image used in the query. | '' |
| audio | Path to an audio file (MP3/WAV) used as part of the query. | '' |
| output | Path where synthesized audio is saved when using --speak. | '' |
| transcribe | Enables transcription mode. This flag takes the path of an audio file. | false |
| speak | If true, enables text-to-speech synthesis for the input query. | false |
| draw | If true, generates an image from a prompt and saves it to the path specified by output. Requires image-capable models. | false |

| Variable | Description | Default |
|---|---|---|
| api_key | Your API key. | (none for security) |
| auth_header | The header used for authorization in API requests. | 'Authorization' |
| auth_token_prefix | The prefix to be added before the token in the auth_header. | 'Bearer ' |
| completions_path | The API endpoint for completions. | '/v1/chat/completions' |
| context_window | The memory limit for how much of the conversation can be remembered at one time. | 8192 |
| effort | Sets the reasoning effort. Used by o1-pro models. | 'low' |
| frequency_penalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on their existing frequency in the text so far. | 0.0 |
| image_edits_path | The API endpoint for image editing. | '/v1/images/edits' |
| image_generations_path | The API endpoint for image generation. | '/v1/images/generations' |
| max_tokens | The maximum number of tokens that can be used in a single API call. | 4096 |
| model | The GPT model used by the application. | 'gpt-4o' |
| models_path | The API endpoint for accessing model information. | '/v1/models' |
| presence_penalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on whether they appear in the text so far. | 0.0 |
| responses_path | The API endpoint for responses. Used by o1-pro models. | '/v1/responses' |
| role | The system role. | 'You are a helpful assistant.' |
| seed | Sets the seed for deterministic sampling (Beta). Repeated requests with the same seed and parameters aim to return the same result. | 0 |
| speech_path | The API endpoint for text-to-speech synthesis. | '/v1/audio/speech' |
| temperature | What sampling temperature to use, between 0 and 2. Higher values make the output more random; lower values make it more focused and deterministic. | 1.0 |
| top_p | An alternative to sampling with temperature, called nucleus sampling, where the model considers the results of the tokens with top_p probability mass. | 1.0 |
| transcriptions_path | The API endpoint for audio transcription requests. | '/v1/audio/transcriptions' |
| url | The base URL for the OpenAI API. | 'https://api.openai.com' |
| user_agent | The header used for the user agent in API requests. | 'chatgpt-cli' |
| voice | The voice to use when generating audio with TTS models like gpt-4o-mini-tts. | 'nova' |

By default, ChatGPT CLI stores configuration and history files in the ~/.chatgpt-cli directory. However, you can easily override these locations by setting environment variables, allowing you to store configuration and history in custom directories.

| Environment Variable | Description | Default Location |
|---|---|---|
| OPENAI_CONFIG_HOME | Overrides the default config directory path. | ~/.chatgpt-cli |
| OPENAI_DATA_HOME | Overrides the default data directory path. | ~/.chatgpt-cli/history |

To change the default configuration or data directories, set the appropriate environment variables:

export OPENAI_CONFIG_HOME="/custom/config/path"

export OPENAI_DATA_HOME="/custom/data/path"

If these environment variables are not set, the application defaults to ~/.chatgpt-cli for configuration files and ~/.chatgpt-cli/history for history.
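As a rough sketch of how the data directory and thread name combine, the helper below computes where a thread's history would be stored. It assumes every thread is saved as <thread>.json inside the data directory, which generalizes the documented default of ~/.chatgpt-cli/history/default.json; the function name history_file is hypothetical.

```shell
# Assumption: each thread's history lives in <data dir>/<thread>.json,
# generalizing the documented default path for the "default" thread.
history_file() {
  local thread="${1:-default}"
  local data_home="${OPENAI_DATA_HOME:-$HOME/.chatgpt-cli/history}"
  printf '%s/%s.json\n' "$data_home" "$thread"
}

history_file             # path for the default thread
history_file my-thread   # path for a hypothetical thread named my-thread
```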

You can maintain multiple configuration files side by side and switch between them using the --target flag. This is especially useful if you use multiple LLM providers (like OpenAI, Perplexity, Azure, etc.) or have different contexts or workflows that require distinct settings.

How it Works

When you use the --target flag, the CLI loads a config file named:

config.<target>.yaml

For example:

chatgpt --target perplexity --config

This will load:

~/.chatgpt-cli/config.perplexity.yaml

If the --target flag is not provided, the CLI falls back to:

~/.chatgpt-cli/config.yaml

Example Setup

You can maintain the following structure:

~/.chatgpt-cli/
├── config.yaml # Default (e.g., OpenAI)
├── config.perplexity.yaml # Perplexity setup
├── config.azure.yaml # Azure-specific config
└── config.llama.yaml # LLaMA setup

Then switch between them like so:

chatgpt --target azure "Explain Azure's GPT model differences"

chatgpt --target perplexity "What are some good restaurants in the Red Hook area"

Or just use the default:

chatgpt "What's the capital of Sweden?"

CLI and Environment Interaction

- The value of --target is never persisted; it must be explicitly passed for each run.

- The config file corresponding to the target is loaded before any environment variable overrides are applied.

- Environment variables still follow the name: field inside the loaded config, so name: perplexity enables PERPLEXITY_API_KEY.
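The file-selection rule described above can be written down as a short helper. This is a sketch under two assumptions: that target-specific files live in the same directory as config.yaml, and that OPENAI_CONFIG_HOME relocates both. The function name config_file_for_target is hypothetical.

```shell
# Illustrative only: compute which config file a given --target would load.
# Assumes target files sit next to config.yaml in the config directory.
config_file_for_target() {
  local target="${1:-}"
  local config_home="${OPENAI_CONFIG_HOME:-$HOME/.chatgpt-cli}"
  if [ -n "$target" ]; then
    printf '%s/config.%s.yaml\n' "$config_home" "$target"
  else
    printf '%s/config.yaml\n' "$config_home"   # no target: default file
  fi
}

config_file_for_target perplexity   # ends in config.perplexity.yaml
config_file_for_target              # ends in config.yaml
```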

- %date: The current date in the format YYYY-MM-DD.

- %time: The current time in the format HH:MM:SS.

- %datetime: The current date and time in the format YYYY-MM-DD HH:MM:SS.

- %counter: The total number of queries in the current session.

- %usage: The usage in total tokens used (only works in query mode).
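To make the placeholders concrete, here is a minimal sketch that expands them in a prompt template such as the default command_prompt. It is not the CLI's implementation: expand_prompt is a hypothetical name, the counter is passed in by the caller and assumed to be a plain number, and %usage is left untouched because it depends on API responses.

```shell
# Illustrative only: expand %datetime, %date, %time and %counter in a template.
# %datetime is replaced first because %date is a prefix of it.
expand_prompt() {
  local template="$1" counter="$2" now_date now_time
  now_date=$(date +%Y-%m-%d)
  now_time=$(date +%H:%M:%S)
  printf '%s\n' "$template" | sed \
    -e "s/%datetime/${now_date} ${now_time}/g" \
    -e "s/%date/${now_date}/g" \
    -e "s/%time/${now_time}/g" \
    -e "s/%counter/${counter}/g"
}

expand_prompt '[Q%counter]' 7               # prints [Q7]
expand_prompt '[%datetime] [Q%counter]' 1   # prints the current timestamp, then [Q1]
```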

The defaults can be overridden by providing your own values in the user configuration file. The structure of this file mirrors that of the default configuration. For instance, to override the model and max_tokens parameters, your file might look like this:

model: gpt-3.5-turbo-16k
max_tokens: 4096

This alters the model to gpt-3.5-turbo-16k and adjusts max_tokens to 4096. All other options, such as url, completions_path, and models_path, can similarly be modified. If the user configuration file cannot be accessed or is missing, the application will resort to the default configuration.

Another way to adjust values without manually editing the configuration file is by using environment variables. The name attribute forms the prefix for these variables. As an example, the model can be modified using the OPENAI_MODEL environment variable. Similarly, to disable history during the execution of a command, use:

OPENAI_OMIT_HISTORY=true chatgpt "what is the capital of Denmark?"

This approach is especially beneficial for temporary changes or for testing varying configurations.
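The naming rule can be sketched as follows: join the config's name field and the setting key with an underscore, then uppercase the result. The helper name env_var_name is hypothetical; the outputs match the variable names used elsewhere in this document.

```shell
# Illustrative only: derive the environment variable that overrides a setting
# from the config's name field and the setting's key.
env_var_name() {
  printf '%s_%s\n' "$1" "$2" | tr '[:lower:]' '[:upper:]'
}

env_var_name openai model          # prints OPENAI_MODEL
env_var_name openai omit_history   # prints OPENAI_OMIT_HISTORY
env_var_name perplexity api_key    # prints PERPLEXITY_API_KEY
```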

Moreover, you can use the --config or -c flag to view the present configuration. This handy feature allows users to swiftly verify their current settings without the need to manually inspect the configuration files.

chatgpt --config

Executing this command will display the active configuration, including any overrides instituted by environment variables or the user configuration file.

To facilitate convenient adjustments, the ChatGPT CLI provides flags for swiftly modifying the model, thread, context_window and max_tokens parameters in your user-configured config.yaml. These flags are --set-model, --set-thread, --set-context-window and --set-max-tokens.

For instance, to update the model, use the following command:

chatgpt --set-model gpt-3.5-turbo-16k

This feature allows for rapid changes to key configuration parameters, optimizing your experience with the ChatGPT CLI.

For Azure, you need to configure these, or similar, values:

name: azure
api_key: <your azure api key>
url: https://<your_resource>.openai.azure.com
completions_path: /openai/deployments/<your_deployment>/chat/completions?api-version=<your_api>
auth_header: api-key
auth_token_prefix: " "

You can set the API key either in the config.yaml file as shown above or export it as an environment variable:

export AZURE_API_KEY=<your_key>

For Perplexity, you will need something equivalent to the following values:

name: perplexity
api_key: <your perplexity api key>
model: sonar
url: https://api.perplexity.ai

You can set the API key either in the config.yaml file as shown above or export it as an environment variable:

export PERPLEXITY_API_KEY=<your_key>

I successfully tested 302.AI with the following values:

name: ai302 # environment variables cannot start with numbers
api_key: <your 302.AI api key>
url: https://api.302.ai

You can set the API key either in the config.yaml file as shown above or export it as an environment variable:

export AI302_API_KEY=<your_key>

Enhance your CLI experience with our new autocompletion feature for command flags!

Autocompletion is currently supported for the following shells: Bash, Zsh, Fish, and PowerShell. To activate flag completion in your current shell session, execute the appropriate command based on your shell:

- Bash

  . <(chatgpt --set-completions bash)

- Zsh

  . <(chatgpt --set-completions zsh)

- Fish

  chatgpt --set-completions fish | source

- PowerShell

  chatgpt --set-completions powershell | Out-String | Invoke-Expression

For added convenience, you can make autocompletion persist across all new shell sessions by adding the appropriate sourcing command to your shell's startup file. Here are the files typically used for each shell:

- Bash: Add to .bashrc or .bash_profile

- Zsh: Add to .zshrc

- Fish: Add to config.fish

- PowerShell: Add to your PowerShell profile script

For example, for Bash, you would add the following line to your .bashrc file:

. <(chatgpt --set-completions bash)

This ensures that command flag autocompletion is enabled automatically every time you open a new terminal window.

You can render markdown in real-time using the mdrender.sh script, located here. You'll first need to install glow.

Example:

chatgpt write a hello world program in Java | ./scripts/mdrender.sh

To start developing, set the OPENAI_API_KEY environment variable to your ChatGPT secret key.

The Makefile simplifies development tasks by providing several targets for testing, building, and deployment.

- all-tests: Run all tests, including linting, formatting, and go mod tidy.

  make all-tests

- binaries: Build binaries for multiple platforms.

  make binaries

- shipit: Run the release process, create binaries, and generate release notes.

  make shipit

- updatedeps: Update dependencies and commit any changes.

  make updatedeps

For more available commands, use:

make help

On Windows (PowerShell):

.\scripts\install.ps1

- After a successful build, test the application with the following command:

  ./bin/chatgpt "what type of dog is a Jack Russell?"

- As mentioned previously, the ChatGPT CLI supports tracking conversation history across CLI calls. This feature creates a seamless and conversational experience with the GPT model, as the history is utilized as context in subsequent interactions.

  To enable this feature, you need to create a ~/.chatgpt-cli directory using the command:

  mkdir -p ~/.chatgpt-cli

If you encounter any issues or have suggestions for improvements, please submit an issue on GitHub. We appreciate your feedback and contributions to help make this project better.

If for any reason you wish to uninstall the ChatGPT CLI application from your system, you can do so by following these steps:

If you installed the CLI using Homebrew, you can do:

brew uninstall chatgpt-cli

And to remove the tap:

brew untap kardolus/chatgpt-cli

If you installed the binary directly, follow these steps:

- Remove the binary:

  sudo rm /usr/local/bin/chatgpt

- Optionally, if you wish to remove the history tracking directory, you can also delete the ~/.chatgpt-cli directory:

  rm -rf ~/.chatgpt-cli

If the binary lives elsewhere on your PATH (for example, on Windows):

- Navigate to the location of the chatgpt binary in your system, which should be in your PATH.

- Delete the chatgpt binary.

- Optionally, if you wish to remove the history tracking, navigate to the ~/.chatgpt-cli directory (where ~ refers to your user's home directory) and delete it.

Please note that the history tracking directory ~/.chatgpt-cli only contains conversation history and no personal data. If you have any concerns about this, please feel free to delete this directory during uninstallation.

- Amazing Prompts

- OpenAI API Reference

- OpenAI Key Usage Dashboard

- OpenAI Pricing Page

- Perplexity API Reference

- Perplexity Key Usage Dashboard

- Perplexity Models

- 302.AI API Reference

- "Summarize any text instantly with a single shortcut" on Medium: Dive deep into the capabilities of this CLI tool with this detailed walkthrough.

- Join the conversation on Reddit: Discuss the tool, ask questions, and share your experiences with our growing community.

Thank you for using ChatGPT CLI!


Alternative AI tools for chatgpt-cli

Similar Open Source Tools


agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

runpod-worker-comfy

runpod-worker-comfy is a serverless API tool that allows users to run any ComfyUI workflow to generate an image. Users can provide input images as base64-encoded strings, and the generated image can be returned as a base64-encoded string or uploaded to AWS S3. The tool is built on Ubuntu + NVIDIA CUDA and provides features like built-in checkpoints and VAE models. Users can configure environment variables to upload images to AWS S3 and interact with the RunPod API to generate images. The tool also supports local testing and deployment to Docker hub using Github Actions.

llm2sh

llm2sh is a command-line utility that leverages Large Language Models (LLMs) to translate plain-language requests into shell commands. It provides a convenient way to interact with your system using natural language. The tool supports multiple LLMs for command generation, offers a customizable configuration file, YOLO mode for running commands without confirmation, and is easily extensible with new LLMs and system prompts. Users can set up API keys for OpenAI, Claude, Groq, and Cerebras to use the tool effectively. llm2sh does not store user data or command history, and it does not record or send telemetry by itself, but the LLM APIs may collect and store requests and responses for their purposes.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

gpt-cli

gpt-cli is a command-line interface tool for interacting with various chat language models like ChatGPT, Claude, and others. It supports model customization, usage tracking, keyboard shortcuts, multi-line input, markdown support, predefined messages, and multiple assistants. Users can easily switch between different assistants, define custom assistants, and configure model parameters and API keys in a YAML file for easy customization and management.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

paxml

Pax is a framework to configure and run machine learning experiments on top of Jax.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. It is featured by: - Personalized Experience: Optimize the command generation with RAG. - Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more. - Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`. - Cross Platform: Able to run on Windows, macOS, and Linux.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

shellChatGPT

ShellChatGPT is a shell wrapper for OpenAI's ChatGPT, DALL-E, Whisper, and TTS, featuring integration with LocalAI, Ollama, Gemini, Mistral, Groq, and GitHub Models. It provides text and chat completions; vision, reasoning, and audio models; a voice-in and voice-out chat mode; a text editor interface; markdown rendering support; session management; an instruction prompt manager; command line completion; file picker dialogs; color scheme personalization; and stdin and text file input support. It is compatible with Linux, FreeBSD, macOS, and Termux.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

binary_ninja_mcp

This repository contains a Binary Ninja plugin, MCP server, and bridge that enables seamless integration of Binary Ninja's capabilities with your favorite LLM client. It provides real-time integration, AI assistance for reverse engineering, multi-binary support, and various MCP tools for tasks like decompiling functions, getting IL code, managing comments, renaming variables, and more.

For similar tasks

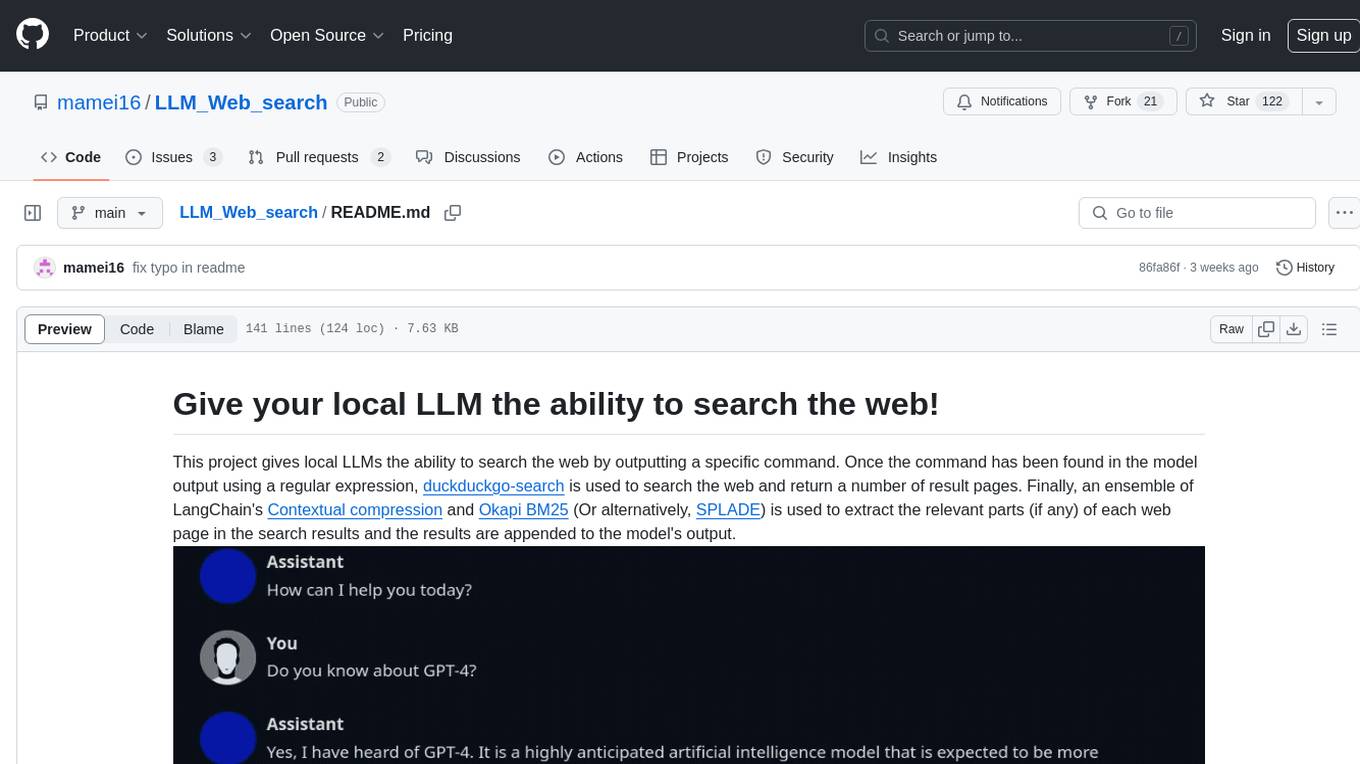

LLM_Web_search

The LLM_Web_search project gives local LLMs the ability to search the web by outputting a specific command. It uses regular expressions to extract search queries from the model output and then uses duckduckgo-search to search the web. LangChain's contextual compression and either Okapi BM25 or SPLADE are used to extract the relevant parts of the web pages in the search results. The extracted results are appended to the model's output.
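
As a rough illustration of the query-extraction step described above, the sketch below pulls search queries out of model output with a regular expression. The `Search_web("...")` command format and the `extract_search_queries` helper are assumptions made for this example; the project's actual pattern and function names may differ.

```python
import re

# Hypothetical command format: the model is assumed to emit Search_web("some query").
# The real project may use a different (and configurable) pattern.
SEARCH_PATTERN = re.compile(r'Search_web\("(?P<query>[^"]*)"\)')


def extract_search_queries(model_output: str) -> list[str]:
    """Return every non-empty search query found in the model output, in order."""
    queries = []
    for match in SEARCH_PATTERN.finditer(model_output):
        query = match.group("query").strip()
        if query:  # skip empty commands such as Search_web("")
            queries.append(query)
    return queries


if __name__ == "__main__":
    output = 'I need current data. Search_web("latest llama.cpp release")'
    print(extract_search_queries(output))
```

Each extracted query would then be passed to a search backend, with the retrieved snippets appended to the model's output as the description states.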

node-llama-cpp

node-llama-cpp is a tool that allows users to run AI models locally on their machines. It provides pre-built bindings with the option to build from source using cmake. Users can interact with text generation models, chat with models using a chat wrapper, and force models to generate output in a parseable format like JSON. The tool supports Metal and CUDA, offers CLI functionality for chatting with models without coding, and ensures up-to-date compatibility with the latest version of llama.cpp. Installation includes pre-built binaries for macOS, Linux, and Windows, with the option to build from source if binaries are not available for the platform.

Jlama

Jlama is a modern Java inference engine designed for large language models. It supports various model types such as Gemma, Llama, Mistral, GPT-2, BERT, and more. The tool implements features like Flash Attention and Mixture of Experts, and supports different model quantization formats. Built with Java 21 and utilizing the new Vector API for faster inference, Jlama allows users to add LLM inference directly to their Java applications. The tool includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and operating systems, including Linux, macOS, Android, and iOS, with various data types and execution modes available.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

elmer

Elmer is a user-friendly wrapper over common APIs for calling LLMs, with support for streaming and easy registration and calling of R functions. Users can interact with Elmer in various ways, such as an interactive chat console, interactive method calls, programmatic chat, and streaming results. Elmer also supports async usage for running multiple chat sessions concurrently, which is useful for Shiny applications. The tool calling feature allows users to define external tools that Elmer can request to execute, enhancing the capabilities of the chat model.

mlx-lm

MLX LM is a Python package designed for generating text and fine-tuning large language models on Apple silicon using MLX. It offers integration with the Hugging Face Hub for easy access to thousands of LLMs, support for quantizing and uploading models to the Hub, low-rank and full model fine-tuning capabilities, and distributed inference and fine-tuning with `mx.distributed`. Users can interact with the package through command line options or the Python API, enabling tasks such as text generation, chatting with language models, model conversion, streaming generation, and sampling. MLX LM supports various Hugging Face models and provides tools for efficient scaling to long prompts and generations, including a rotating key-value cache and prompt caching. It requires macOS 15.0 or higher for optimal performance.

mini-sglang

Mini-SGLang is a lightweight yet high-performance inference framework for Large Language Models. With a compact codebase of ~5,000 lines of Python, it serves as both a capable inference engine and a transparent reference for researchers and developers. It achieves state-of-the-art throughput and latency with advanced optimizations such as Radix Cache, Chunked Prefill, Overlap Scheduling, Tensor Parallelism, and Optimized Kernels integrating FlashAttention and FlashInfer for maximum efficiency. Mini-SGLang is designed to demystify the complexities of modern LLM serving systems, providing a clean, modular, and fully type-annotated codebase that is easy to understand and modify.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.