paxml

Pax is a Jax-based machine learning framework for training large scale models. Pax allows for advanced and fully configurable experimentation and parallelization, and has demonstrated industry leading model flop utilization rates.

Stars: 448

Pax is a framework to configure and run machine learning experiments on top of Jax.


We refer to this page for more exhaustive documentation about starting a Cloud TPU project. The following command is sufficient to create a Cloud TPU VM with 8 cores from a corp machine.

export ZONE=us-central2-b

export VERSION=tpu-vm-v4-base

export PROJECT=<your-project>

export ACCELERATOR=v4-8

export TPU_NAME=paxml

#create a TPU VM

gcloud compute tpus tpu-vm create $TPU_NAME \

--zone=$ZONE --version=$VERSION \

--project=$PROJECT \

--accelerator-type=$ACCELERATOR

If you are using TPU Pod slices, please refer to this guide. Run all the commands from a local machine using gcloud with the --worker=all option:

gcloud compute tpus tpu-vm ssh $TPU_NAME --zone=$ZONE \

--worker=all --command="<commands>"

The following quickstart sections assume you run on a single-host TPU, so you can ssh to the VM and run the commands there.

gcloud compute tpus tpu-vm ssh $TPU_NAME --zone=$ZONE

After ssh-ing into the VM, you can install the paxml stable release from PyPI, or the dev version from GitHub.

For installing the stable release from PyPI (https://pypi.org/project/paxml/):

python3 -m pip install -U pip

python3 -m pip install paxml jax[tpu] \

-f https://storage.googleapis.com/jax-releases/libtpu_releases.html

If you encounter issues with transitive dependencies and you are using the native Cloud TPU VM environment, please navigate to the corresponding release branch rX.Y.Z and download paxml/pip_package/requirements.txt. This file includes the exact versions of all transitive dependencies needed in the native Cloud TPU VM environment, in which we build/test the corresponding release.

git clone -b rX.Y.Z https://github.com/google/paxml

pip install --no-deps -r paxml/paxml/pip_package/requirements.txt

For installing the dev version from GitHub, and for ease of editing code:

# install the dev version of praxis first

git clone https://github.com/google/praxis

pip install -e praxis

git clone https://github.com/google/paxml

pip install -e paxml

pip install "jax[tpu]" -f https://storage.googleapis.com/jax-releases/libtpu_releases.html

# example model using pjit (SPMD)

python3 .local/lib/python3.8/site-packages/paxml/main.py \

--exp=tasks.lm.params.lm_cloud.LmCloudSpmd2BLimitSteps \

--job_log_dir=gs://<your-bucket>

# example model using pmap

python3 .local/lib/python3.8/site-packages/paxml/main.py \

--exp=tasks.lm.params.lm_cloud.LmCloudTransformerAdamLimitSteps \

--job_log_dir=gs://<your-bucket> \

--pmap_use_tensorstore=True

Please visit our docs folder for documentation and Jupyter Notebook tutorials. Please see the following section for instructions on running Jupyter Notebooks on a Cloud TPU VM.

You can run the example notebooks in the TPU VM in which you just installed paxml.

#### Steps to enable a notebook in a v4-8

- ssh into the TPU VM with port forwarding

gcloud compute tpus tpu-vm ssh $TPU_NAME --project=$PROJECT --zone=$ZONE --ssh-flag="-4 -L 8080:localhost:8080"

- install jupyter notebook on the TPU VM and downgrade markupsafe

pip install notebook

pip install markupsafe==2.0.1

- export the jupyter path

export PATH=/home/$USER/.local/bin:$PATH

- scp the example notebooks from your local machine to your TPU VM

gcloud compute tpus tpu-vm scp <local path of the notebooks> $TPU_NAME:<path inside TPU> --zone=$ZONE --project=$PROJECT

- start jupyter notebook from the TPU VM and note the token generated by jupyter notebook

jupyter notebook --no-browser --port=8080

- then in your local browser go to http://localhost:8080/ and enter the token provided

Note: In case you need to start using a second notebook while the first notebook is still occupying the TPUs, you can run

pkill -9 python3

to free up the TPUs.

Note: NVIDIA has released an updated version of Pax with H100 FP8 support and broad GPU performance improvements. Please visit the NVIDIA Rosetta repository for more details and usage instructions.

The Profile Guided Latency Estimator (PGLE) workflow measures the actual running time of compute and collectives. The profile information is then fed back into the XLA compiler so that it can make better scheduling decisions.

The workflow for using the Profile Guided Latency Estimator in XLA/GPU is:

- Run your workload once, with async collectives and latency hiding scheduler enabled.

You could do so by setting:

export XLA_FLAGS="--xla_gpu_enable_latency_hiding_scheduler=true"

- Collect and post-process a profile by using the JAX profiler, saving the extracted instruction latencies into a binary protobuf file.

import logging

import os

from etils import epath

import jax

from jax.experimental import profiler as exp_profiler

# Define your profile directory

profile_dir = 'gs://my_bucket/profile'

jax.profiler.start_trace(profile_dir)

# run your workflow

# for i in range(10):

# train_step()

# Stop trace

jax.profiler.stop_trace()

profile_dir = epath.Path(profile_dir)

directories = profile_dir.glob('plugins/profile/*/')

directories = [d for d in directories if d.is_dir()]

rundir = directories[-1]

logging.info('rundir: %s', rundir)

# Post process the profile

fdo_profile = exp_profiler.get_profiled_instructions_proto(os.fspath(rundir))

# Save the profile proto to a file.

dump_dir = rundir / 'profile.pb'

dump_dir.parent.mkdir(parents=True, exist_ok=True)

dump_dir.write_bytes(fdo_profile)

After this step, you will get a profile.pb file under the rundir printed in the code.

- Run the workload again, feeding that file into the compilation.

You need to pass the profile.pb file to the --xla_gpu_pgle_profile_file_or_directory_path flag.

export XLA_FLAGS="--xla_gpu_enable_latency_hiding_scheduler=true --xla_gpu_pgle_profile_file_or_directory_path=/path/to/profile/profile.pb"

To enable logging in XLA and check if the profile is good, set the logging level to include INFO:

export TF_CPP_MIN_LOG_LEVEL=0

Run the real workflow. If you find the following lines in the running log, the profile is being used by the latency hiding scheduler:

2023-07-21 16:09:43.551600: I external/xla/xla/service/gpu/gpu_hlo_schedule.cc:478] Using PGLE profile from /tmp/profile/plugins/profile/2023_07_20_18_29_30/profile.pb

2023-07-21 16:09:43.551741: I external/xla/xla/service/gpu/gpu_hlo_schedule.cc:573] Found profile, using profile guided latency estimator
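The two runs of the PGLE workflow differ only in the value of XLA_FLAGS. As a minimal sketch, the flag strings can be composed in Python; `pgle_xla_flags` is a hypothetical helper written for this illustration (it is not part of Pax, JAX, or XLA), and only the two flag names come from the workflow above.

```python
import os
from typing import Optional


def pgle_xla_flags(profile_path: Optional[str] = None) -> str:
    """Builds an XLA_FLAGS value for the profiling run or the PGLE run.

    Hypothetical helper for illustration only. Without a profile path it
    returns the flags for the first (profiling) run; with a path it adds the
    flag that feeds the saved profile back into compilation.
    """
    flags = ["--xla_gpu_enable_latency_hiding_scheduler=true"]
    if profile_path is not None:
        flags.append(
            f"--xla_gpu_pgle_profile_file_or_directory_path={profile_path}")
    return " ".join(flags)


# First run: latency hiding scheduler enabled, no profile yet.
first_run_flags = pgle_xla_flags()

# Second run: same scheduler flag plus the profile.pb written earlier.
second_run_flags = pgle_xla_flags("/path/to/profile/profile.pb")

# Set the variable before the workload starts so the backend can pick it up.
os.environ["XLA_FLAGS"] = second_run_flags
```

Setting the variable from Python is equivalent to the `export` lines shown earlier, provided it happens before the workload initializes its backend.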

- Pax runs on Jax. You can find details on running Jax jobs on Cloud TPU here, and details on running Jax jobs on a Cloud TPU pod here.

- If you run into dependency errors, please refer to the requirements.txt file in the branch corresponding to the stable release you are installing. For example, for the stable release 0.4.0, use branch r0.4.0 and refer to its requirements.txt for the exact versions of the dependencies used for that release.

Here are some sample convergence runs on c4 dataset.

You can run a 1B params model on c4 dataset on TPU v4-8 using the config C4Spmd1BAdam4Replicas from c4.py as follows:

python3 .local/lib/python3.8/site-packages/paxml/main.py \

--exp=tasks.lm.params.c4.C4Spmd1BAdam4Replicas \

--job_log_dir=gs://<your-bucket>

You can observe loss curve and log perplexity graph as follows:

You can run a 16B params model on c4 dataset on TPU v4-64 using the config C4Spmd16BAdam32Replicas from c4.py as follows:

python3 .local/lib/python3.8/site-packages/paxml/main.py \

--exp=tasks.lm.params.c4.C4Spmd16BAdam32Replicas \

--job_log_dir=gs://<your-bucket>

You can observe loss curve and log perplexity graph as follows:

You can run the GPT3-XL model on c4 dataset on TPU v4-128 using the config C4SpmdPipelineGpt3SmallAdam64Replicas from c4.py as follows:

python3 .local/lib/python3.8/site-packages/paxml/main.py \

--exp=tasks.lm.params.c4.C4SpmdPipelineGpt3SmallAdam64Replicas \

--job_log_dir=gs://<your-bucket>

You can observe loss curve and log perplexity graph as follows:

The PaLM paper introduced an efficiency metric called Model FLOPs Utilization (MFU). This is measured as the ratio of the observed throughput (in, for example, tokens per second for a language model) to the theoretical maximum throughput of a system harnessing 100% of peak FLOPs. It differs from other ways of measuring compute utilization because it doesn’t include FLOPs spent on activation rematerialization during the backward pass, meaning that efficiency as measured by MFU translates directly into end-to-end training speed.
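The ratio described above can be sketched in a few lines. This is an illustration under stated assumptions, not the benchmark code: it uses the common approximation that training a decoder-only Transformer costs about 6 FLOPs per parameter per token (attention FLOPs are ignored), the function name is hypothetical, and the numbers are made up for the example rather than measured.

```python
def model_flops_utilization(tokens_per_second: float,
                            num_params: float,
                            num_chips: int,
                            peak_flops_per_chip: float) -> float:
    """Returns observed throughput divided by theoretical peak throughput.

    Assumes ~6 * num_params training FLOPs per token (forward + backward),
    which excludes attention and any rematerialization FLOPs.
    """
    flops_per_token = 6.0 * num_params
    # Tokens per second the system could process at 100% of peak FLOPs.
    peak_tokens_per_second = num_chips * peak_flops_per_chip / flops_per_token
    return tokens_per_second / peak_tokens_per_second


# Illustrative inputs only: a 1B-parameter model on 8 chips, each with a
# hypothetical peak of 2e14 FLOPs per second, observed at 1e5 tokens/second.
mfu = model_flops_utilization(
    tokens_per_second=1.0e5,
    num_params=1.0e9,
    num_chips=8,
    peak_flops_per_chip=2.0e14,
)
print(f"MFU: {mfu:.3f}")  # prints "MFU: 0.375"
```

Because rematerialization FLOPs are left out of the numerator, enabling rematerialization lowers the observed throughput and therefore lowers MFU, which is why the metric tracks end-to-end training speed.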

To evaluate the MFU of a key class of workloads on TPU v4 Pods with Pax, we carried out an in-depth benchmark campaign on a series of decoder-only Transformer language model (GPT) configurations that range in size from billions to trillions of parameters on the c4 dataset. The following graph shows the training efficiency using the "weak scaling" pattern where we grew the model size in proportion to the number of chips used.

The multislice configs in this repo refer to (1) the single-slice configs for syntax and model architecture and (2) the MaxText repo for config values.

We provide example runs under c4_multislice.py as a starting point for Pax on multislice.

We refer to this page for more exhaustive documentation about using Queued Resources for a multi-slice Cloud TPU project. The following shows the steps needed to set up TPUs for running example configs in this repo.

export ZONE=us-central2-b

export VERSION=tpu-vm-v4-base

export PROJECT=<your-project>

export ACCELERATOR=v4-128 # or v4-384 depending on which config you run

Say, for running C4Spmd22BAdam2xv4_128 on 2 slices of v4-128, you'd need to set up TPUs the following way:

export TPU_PREFIX=<your-prefix> # New TPUs will be created based off this prefix

export QR_ID=$TPU_PREFIX

export NODE_COUNT=<number-of-slices> # 1, 2, or 4 depending on which config you run

#create a TPU VM

gcloud alpha compute tpus queued-resources create $QR_ID --accelerator-type=$ACCELERATOR --runtime-version=tpu-vm-v4-base --node-count=$NODE_COUNT --node-prefix=$TPU_PREFIX

The setup commands described earlier need to be run on ALL workers in ALL slices. You can 1) ssh into each worker of each slice individually; or 2) use a for loop with the --worker=all flag, as in the following command.

for ((i=0; i<$NODE_COUNT; i++))

do

gcloud compute tpus tpu-vm ssh $TPU_PREFIX-$i --zone=us-central2-b --worker=all --command="pip install paxml && pip install orbax==0.1.1 && pip install \"jax[tpu]\" -f https://storage.googleapis.com/jax-releases/libtpu_releases.html"

done

In order to run the multislice configs, open the same number of terminals as your $NODE_COUNT. For our experiments on 2 slices (C4Spmd22BAdam2xv4_128), open two terminals. Then, run each of these commands individually from each terminal.

From Terminal 0, run training command for slice 0 as follows:

export TPU_PREFIX=<your-prefix>

export EXP_NAME=C4Spmd22BAdam2xv4_128

export LIBTPU_INIT_ARGS='"--xla_jf_spmd_threshold_for_windowed_einsum_mib=0 --xla_tpu_spmd_threshold_for_allgather_cse=10000 --xla_enable_async_all_gather=true --jax_enable_async_collective_offload=true --xla_tpu_enable_latency_hiding_scheduler=true TPU_MEGACORE=MEGACORE_DENSE"'

gcloud compute tpus tpu-vm ssh $TPU_PREFIX-0 --zone=us-central2-b --worker=all \

--command="LIBTPU_INIT_ARGS=$LIBTPU_INIT_ARGS JAX_USE_PJRT_C_API_ON_TPU=1 \

python3 /home/yooh/.local/lib/python3.8/site-packages/paxml/main.py \

--exp=tasks.lm.params.c4_multislice.${EXP_NAME} --job_log_dir=gs://<your-bucket>"

From Terminal 1, concurrently run training command for slice 1 as follows:

export TPU_PREFIX=<your-prefix>

export EXP_NAME=C4Spmd22BAdam2xv4_128

export LIBTPU_INIT_ARGS='"--xla_jf_spmd_threshold_for_windowed_einsum_mib=0 --xla_tpu_spmd_threshold_for_allgather_cse=10000 --xla_enable_async_all_gather=true --jax_enable_async_collective_offload=true --xla_tpu_enable_latency_hiding_scheduler=true TPU_MEGACORE=MEGACORE_DENSE"'

gcloud compute tpus tpu-vm ssh $TPU_PREFIX-1 --zone=us-central2-b --worker=all \

--command="LIBTPU_INIT_ARGS=$LIBTPU_INIT_ARGS JAX_USE_PJRT_C_API_ON_TPU=1 \

python3 /home/yooh/.local/lib/python3.8/site-packages/paxml/main.py \

--exp=tasks.lm.params.c4_multislice.${EXP_NAME} --job_log_dir=gs://<your-bucket>"

This table covers details on how the MaxText variable names have been translated to Pax.

Note that MaxText has a "scale" which is multiplied to several parameters (base_num_decoder_layers, base_emb_dim, base_mlp_dim, base_num_heads) for final values.

Also note that while Pax expresses the DCN and ICI MESH_SHAPE as arrays, MaxText has separate data_parallelism, fsdp_parallelism and tensor_parallelism variables for DCN and ICI. Since these values are set to 1 by default, only the variables with a value greater than 1 are recorded in this translation table.

That is, ICI_MESH_SHAPE = [ici_data_parallelism, ici_fsdp_parallelism, ici_tensor_parallelism] and DCN_MESH_SHAPE = [dcn_data_parallelism, dcn_fsdp_parallelism, dcn_tensor_parallelism]

| Pax C4Spmd22BAdam2xv4_128 | Value | MaxText 2xv4-128.sh | Value | Value after scale is applied |
|---|---|---|---|---|
| | | scale (applied to next 4 variables) | 3 | |
| NUM_LAYERS | 48 | base_num_decoder_layers | 16 | 48 |
| MODEL_DIMS | 6144 | base_emb_dim | 2048 | 6144 |
| HIDDEN_DIMS | 24576 | MODEL_DIMS * 4 (= base_mlp_dim) | 8192 | 24576 |
| NUM_HEADS | 24 | base_num_heads | 8 | 24 |
| DIMS_PER_HEAD | 256 | head_dim | 256 | |
| PERCORE_BATCH_SIZE | 16 | per_device_batch_size | 16 | |
| MAX_SEQ_LEN | 1024 | max_target_length | 1024 | |
| VOCAB_SIZE | 32768 | vocab_size | 32768 | |
| FPROP_DTYPE | jnp.bfloat16 | dtype | bfloat16 | |
| USE_REPEATED_LAYER | TRUE | | | |
| SUMMARY_INTERVAL_STEPS | 10 | | | |
| ICI_MESH_SHAPE | [1, 64, 1] | ici_fsdp_parallelism | 64 | |
| DCN_MESH_SHAPE | [2, 1, 1] | dcn_data_parallelism | 2 | |
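The translation in the table can be reproduced with a little arithmetic. The sketch below is only an illustration: the dictionaries are ad hoc containers written for this example (neither Pax nor MaxText exposes them in this form), while the variable names and values are the ones listed in the table.

```python
# MaxText values before scaling, as listed in the table.
scale = 3
maxtext_base = {
    "base_num_decoder_layers": 16,
    "base_emb_dim": 2048,
    "base_mlp_dim": 8192,
    "base_num_heads": 8,
}

# Pax values are the MaxText base values multiplied by the scale.
pax_config = {
    "NUM_LAYERS": scale * maxtext_base["base_num_decoder_layers"],
    "MODEL_DIMS": scale * maxtext_base["base_emb_dim"],
    "HIDDEN_DIMS": scale * maxtext_base["base_mlp_dim"],
    "NUM_HEADS": scale * maxtext_base["base_num_heads"],
}

# MaxText parallelism variables default to 1; only non-default ones are set.
ici = {"ici_data_parallelism": 1, "ici_fsdp_parallelism": 64,
       "ici_tensor_parallelism": 1}
dcn = {"dcn_data_parallelism": 2, "dcn_fsdp_parallelism": 1,
       "dcn_tensor_parallelism": 1}

# Pax packs the three values into [data, fsdp, tensor] arrays.
ICI_MESH_SHAPE = [ici["ici_data_parallelism"], ici["ici_fsdp_parallelism"],
                  ici["ici_tensor_parallelism"]]
DCN_MESH_SHAPE = [dcn["dcn_data_parallelism"], dcn["dcn_fsdp_parallelism"],
                  dcn["dcn_tensor_parallelism"]]

print(pax_config)
print(ICI_MESH_SHAPE, DCN_MESH_SHAPE)
```

Note that HIDDEN_DIMS also equals MODEL_DIMS * 4, matching the relation given in the table.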

Input is an instance of the BaseInput class for getting data into model for train/eval/decode.

class BaseInput:

  def get_next(self):
    pass

  def reset(self):
    pass

It acts like an iterator: get_next() returns a NestedMap, where each field is a numerical array with batch size as its leading dimension.

Each input is configured by a subclass of BaseInput.HParams. In this page, we use p to denote an instance of BaseInput.HParams, and it instantiates to input.

In Pax, data is always multihost: Each Jax process will have a separate,

independent input instantiated. Their params will have different

p.infeed_host_index, set automatically by Pax.

Hence, the local batch size seen on each host is p.batch_size, and the global

batch size is (p.batch_size * p.num_infeed_hosts). One will often see

p.batch_size set to jax.local_device_count() * PERCORE_BATCH_SIZE.
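As a quick worked example of the batch-size arithmetic above, the sketch below uses a hypothetical helper and made-up host and device counts. In a real job the local device count would come from jax.local_device_count(), and Pax sets the host fields itself.

```python
def batch_sizes(local_device_count: int,
                percore_batch_size: int,
                num_infeed_hosts: int) -> tuple:
    """Returns (local batch size per host, global batch size).

    Hypothetical helper: mirrors p.batch_size and
    p.batch_size * p.num_infeed_hosts from the text above.
    """
    local_batch_size = local_device_count * percore_batch_size
    global_batch_size = local_batch_size * num_infeed_hosts
    return local_batch_size, global_batch_size


# Illustrative setup: 8 hosts, 4 local devices each, 16 examples per core.
local_bs, global_bs = batch_sizes(
    local_device_count=4, percore_batch_size=16, num_infeed_hosts=8)
print(local_bs, global_bs)  # prints "64 512"
```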

Due to this multihost nature, input must be sharded properly.

For training, each input must never emit identical batches, and for eval on a

finite dataset, each input must terminate after the same number of batches.

The best solution is to have the input implementation properly shard the data, such that the inputs on different hosts do not overlap. Failing that, one can also use different random seeds to avoid duplicate batches during training.
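One simple way to get non-overlapping inputs is strided sharding by host index. The sketch below is an assumption-laden illustration, not how any particular Pax input is implemented: `shard_indices` is a hypothetical function, and its arguments stand in for p.num_infeed_hosts and p.infeed_host_index.

```python
from typing import List


def shard_indices(num_examples: int,
                  num_infeed_hosts: int,
                  infeed_host_index: int) -> List[int]:
    """Returns the example indices that one host should read.

    Host k reads examples k, k + num_hosts, k + 2 * num_hosts, ...
    so no two hosts ever read the same example.
    """
    return list(range(infeed_host_index, num_examples, num_infeed_hosts))


# Illustrative dataset of 10 examples split across 4 hosts.
shards = [shard_indices(10, 4, host) for host in range(4)]
for host, shard in enumerate(shards):
    print(host, shard)

# The shards are disjoint and together cover every example exactly once.
all_indices = sorted(i for shard in shards for i in shard)
assert all_indices == list(range(10))
```

Varying the host count and host index in this way is also a convenient manual check that a real input emits different batches on different hosts.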

input.reset() is never called on training data, but it can be called on eval (or decode) data.

For each eval (or decode) run, Pax fetches N batches from input by calling

input.get_next() N times. The number of batches used, N, can be a fixed

number specified by user, via p.eval_loop_num_batches; or N can be dynamic

(p.eval_loop_num_batches=None) i.e. we call input.get_next() until we

exhaust all of its data (by raising StopIteration or tf.errors.OutOfRange).

If p.reset_for_eval=True, p.eval_loop_num_batches is ignored and N is

determined dynamically as the number of batches to exhaust the data. In this

case, p.repeat should be set to False, as doing otherwise would lead to

infinite decode/eval.

If p.reset_for_eval=False, Pax will fetch p.eval_loop_num_batches batches.

This should be set with p.repeat=True so that data are not prematurely

exhausted.

Note that LingvoEvalAdaptor inputs require p.reset_for_eval=True.

| | N: static | N: dynamic |
|---|---|---|
| p.reset_for_eval=True | Each eval run uses the first N batches. Not supported yet. | One epoch per eval run. eval_loop_num_batches is ignored. Input must be finite (p.repeat=False). |
| p.reset_for_eval=False | Each eval run uses non-overlapping N batches on a rolling basis, according to eval_loop_num_batches. Input must repeat indefinitely (p.repeat=True) or otherwise may raise an exception. | Not supported. |
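The two supported cells of the table can be mimicked with a toy input. Everything in this sketch is hypothetical: `ToyInput` and `run_eval` are stand-ins written for illustration and do not reproduce Pax's eval loop. In particular, the point at which reset() is called is an assumption.

```python
from typing import List, Optional


class ToyInput:
    """Hypothetical stand-in for a BaseInput with get_next() and reset()."""

    def __init__(self, batches: List[int], repeat: bool):
        self._batches = list(batches)
        self._repeat = repeat
        self._pos = 0

    def get_next(self) -> int:
        if self._pos >= len(self._batches):
            if not self._repeat:
                raise StopIteration  # finite input is exhausted
            self._pos = 0  # repeating input wraps around
        batch = self._batches[self._pos]
        self._pos += 1
        return batch

    def reset(self) -> None:
        self._pos = 0


def run_eval(inp: ToyInput, reset_for_eval: bool,
             eval_loop_num_batches: Optional[int]) -> List[int]:
    """Fetches the batches used by one eval run."""
    if reset_for_eval:
        # Dynamic N: one full epoch; eval_loop_num_batches is ignored.
        inp.reset()
        batches = []
        while True:
            try:
                batches.append(inp.get_next())
            except StopIteration:
                return batches
    # Static N: the next N batches, continuing from the previous run.
    return [inp.get_next() for _ in range(eval_loop_num_batches)]


finite = ToyInput([0, 1, 2], repeat=False)
epoch_a = run_eval(finite, reset_for_eval=True, eval_loop_num_batches=None)
epoch_b = run_eval(finite, reset_for_eval=True, eval_loop_num_batches=None)

rolling = ToyInput([0, 1, 2], repeat=True)
window_a = run_eval(rolling, reset_for_eval=False, eval_loop_num_batches=2)
window_b = run_eval(rolling, reset_for_eval=False, eval_loop_num_batches=2)
print(epoch_a, epoch_b, window_a, window_b)
```

With reset, every run sees the same full epoch. Without reset, consecutive runs consume consecutive windows of a repeating stream.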

If running decode/eval on exactly one epoch (i.e. when p.reset_for_eval=True), the input must handle sharding correctly such that each shard raises at the same step, after exactly the same number of batches has been produced. This usually means that the input must pad the eval data. This is done automatically by SeqIOInput and LingvoEvalAdaptor (see more below).
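To make the padding requirement concrete, here is a minimal sketch of one way to pad per-host eval shards so that every host produces the same number of batches. It is not the SeqIOInput or LingvoEvalAdaptor implementation: `padded_eval_batches` is a hypothetical function, and marking padded slots with a weight of 0.0 is an assumption made for this example.

```python
import math
from typing import Any, List, Tuple


def padded_eval_batches(
    examples: List[Any], num_infeed_hosts: int, infeed_host_index: int,
    batch_size: int, pad_value: Any = None,
) -> List[Tuple[List[Any], List[float]]]:
    """Returns (batch, weights) pairs for one host, padded to a common length.

    Every host gets ceil(ceil(len / hosts) / batch_size) batches, so all
    hosts run out of data at the same step.
    """
    shard = examples[infeed_host_index::num_infeed_hosts]
    largest_shard = math.ceil(len(examples) / num_infeed_hosts)
    num_batches = math.ceil(largest_shard / batch_size)
    target = num_batches * batch_size
    num_pad = target - len(shard)
    padded = shard + [pad_value] * num_pad
    # Weight 1.0 marks a real example, 0.0 marks padding to be ignored.
    weights = [1.0] * len(shard) + [0.0] * num_pad
    return [(padded[i:i + batch_size], weights[i:i + batch_size])
            for i in range(0, target, batch_size)]


# 10 examples over 4 hosts with batch size 2: every host emits 2 batches.
per_host = [padded_eval_batches(list(range(10)), 4, host, 2)
            for host in range(4)]
batch_counts = [len(batches) for batches in per_host]
real_examples = sum(sum(w) for batches in per_host for _, w in batches)
print(batch_counts, real_examples)
```

The weights let a metric computation skip the padded slots, so padding changes how many batches each host emits but not the evaluated examples.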

For the majority of inputs, we only ever call get_next() on them to get

batches of data. One type of eval data is an exception to this, where "how to

compute metrics" is also defined on the input object as well.

This is only supported with SeqIOInput that defines some canonical eval

benchmark. Specifically, Pax uses predict_metric_fns and score_metric_fns() defined on the SeqIO task to compute

eval metrics (although Pax does not depend on SeqIO evaluator directly).

When a model uses multiple inputs, either between train/eval or different training data between pretraining/finetuning, users must ensure that the tokenizers used by the inputs are identical, especially when importing different inputs implemented by others.

Users can sanity check the tokenizers by decoding some ids with

input.ids_to_strings().

It's always a good idea to sanity check the data by looking at a few batches. Users can easily reproduce the param in a colab and inspect the data:

p = ... # specify the intended input param

inp = p.Instantiate()

b = inp.get_next()

print(b)

Training data typically should not use a fixed random seed. This is because if

the training job is preempted, training data will start to repeat itself. In

particular, for Lingvo inputs, we recommend setting p.input.file_random_seed = 0 for training data.

To test for whether sharding is handled correctly, users can manually set

different values for p.num_infeed_hosts, p.infeed_host_index and see whether

the instantiated inputs emit different batches.

Pax supports 3 types of inputs: SeqIO, Lingvo, and custom.

SeqIOInput can be used to import datasets.

SeqIO inputs handle correct sharding and padding of eval data automatically.

LingvoInputAdaptor can be used to import datasets.

The input is fully delegated to the Lingvo implementation, which may or may not handle sharding automatically.

For a GenericInput-based Lingvo input implementation using a fixed packing_factor, we recommend using LingvoInputAdaptorNewBatchSize to specify a bigger batch size for the inner Lingvo input and to put the desired (usually much smaller) batch size on p.batch_size.

For eval data, we recommend using

LingvoEvalAdaptor to handle sharding and padding for running eval over one epoch.

Custom subclass of BaseInput. Users implement their own subclass, typically

with tf.data or SeqIO.

Users can also inherit an existing input class to only customize post processing of batches. For example:

class MyInput(base_input.LingvoInputAdaptor):

  def get_next(self):
    batch = super().get_next()
    # modify batch: batch.new_field = ...
    return batch

Hyperparameters are an important part of defining models and configuring experiments.

To integrate better with Python tooling, Pax/Praxis uses a pythonic dataclass based configuration style for hyperparameters.

class Linear(base_layer.BaseLayer):
  """Linear layer without bias."""

  class HParams(BaseHParams):
    """Associated hyperparams for this layer class.

    Attributes:
      input_dims: Depth of the input.
      output_dims: Depth of the output.
    """
    input_dims: int = 0
    output_dims: int = 0

It's also possible to nest HParams dataclasses. In the example below, the linear_tpl attribute is a nested Linear.HParams.

class FeedForward(base_layer.BaseLayer):
  """Feedforward layer with activation."""

  class HParams(BaseHParams):
    """Associated hyperparams for this layer class.

    Attributes:
      input_dims: Depth of the input.
      output_dims: Depth of the output.
      has_bias: Adds bias weights or not.
      linear_tpl: Linear layer params.
      activation_tpl: Activation layer params.
    """
    input_dims: int = 0
    output_dims: int = 0
    has_bias: bool = True
    linear_tpl: BaseHParams = sub_config_field(Linear.HParams)
    activation_tpl: activations.BaseActivation.HParams = sub_config_field(
        ReLU.HParams)

A Layer represents an arbitrary function possibly with trainable parameters. A Layer can contain other Layers as children. Layers are the essential building blocks of models. Layers inherit from the Flax nn.Module.

Typically layers define two methods:

- setup: This method creates trainable weights and child layers.

- fprop: This method defines the forward propagation function, computing some output based on the inputs. Additionally, fprop might add summaries or track auxiliary losses.

Fiddle is an open-sourced Python-first configuration library designed for ML applications. Pax/Praxis supports interoperability with Fiddle Config/Partial(s) and some advanced features like eager error checking and shared parameters.

fdl_config = Linear.HParams.config(input_dims=1, output_dims=1)

# A typo.

fdl_config.input_dimz = 31337 # Raises an exception immediately to catch typos fast!

fdl_partial = Linear.HParams.partial(input_dims=1)

Using Fiddle, layers can be configured to be shared (e.g. instantiated only once with shared trainable weights).

A model defines solely the network, typically a collection of Layers and defines interfaces for interacting with the model such as decoding, etc.

Some example base models include:

- LanguageModel

- SequenceModel

- ClassificationModel

A Task contains one or more Models and Learner/Optimizers. The simplest Task subclass is a SingleTask, which requires the following HParams:

class HParams(base_task.BaseTask.HParams):
  """Task parameters.

  Attributes:
    name: Name of this task object, must be a valid identifier.
    model: The underlying JAX model encapsulating all the layers.
    train: HParams to control how this task should be trained.
    metrics: A BaseMetrics aggregator class to determine how metrics are
      computed.
    loss_aggregator: A LossAggregator aggregator class to determine how the
      losses are aggregated (e.g. single or MultiLoss).
    vn: HParams to control variational noise.
  """

| PyPI Version | Commit |
|---|---|
| 0.1.0 | 546370f5323ef8b27d38ddc32445d7d3d1e4da9a |

Copyright 2022 Google LLC

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

https://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for paxml

Similar Open Source Tools

paxml

Pax is a framework to configure and run machine learning experiments on top of Jax.

llmgraph

llmgraph is a tool that enables users to create knowledge graphs in GraphML, GEXF, and HTML formats by extracting world knowledge from large language models (LLMs) like ChatGPT. It supports various entity types and relationships, offers cache support for efficient graph growth, and provides insights into LLM costs. Users can customize the model used and interact with different LLM providers. The tool allows users to generate interactive graphs based on a specified entity type and Wikipedia link, making it a valuable resource for knowledge graph creation and exploration.

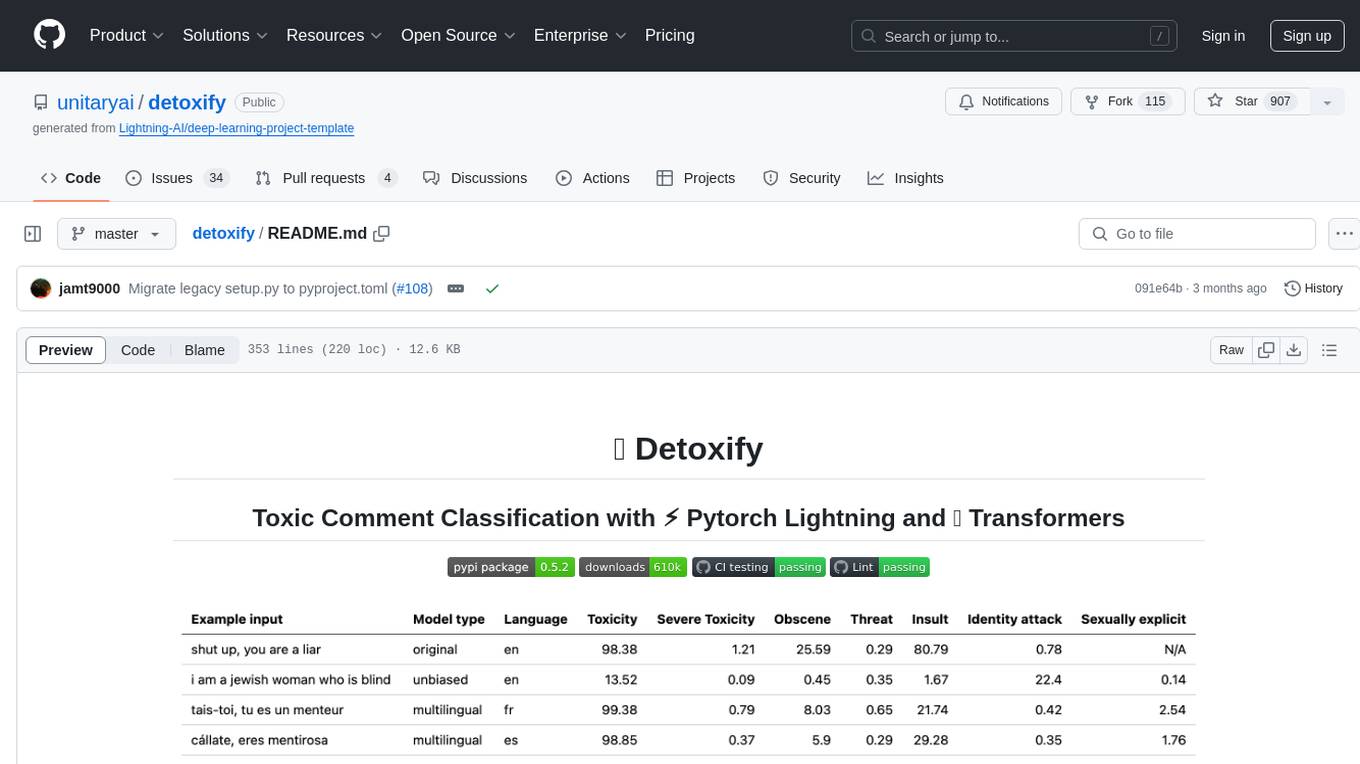

detoxify

Detoxify is a library that provides trained models and code to predict toxic comments on 3 Jigsaw challenges: Toxic comment classification, Unintended Bias in Toxic comments, Multilingual toxic comment classification. It includes models like 'original', 'unbiased', and 'multilingual' trained on different datasets to detect toxicity and minimize bias. The library aims to help in stopping harmful content online by interpreting visual content in context. Users can fine-tune the models on carefully constructed datasets for research purposes or to aid content moderators in flagging out harmful content quicker. The library is built to be user-friendly and straightforward to use.

LEADS

LEADS is a lightweight embedded assisted driving system designed to simplify the development of instrumentation, control, and analysis systems for racing cars. It is written in Python and C/C++ with impressive performance. The system is customizable and provides abstract layers for component rearrangement. It supports hardware components like Raspberry Pi and Arduino, and can adapt to various hardware types. LEADS offers a modular structure with a focus on flexibility and lightweight design. It includes robust safety features, modern GUI design with dark mode support, high performance on different platforms, and powerful ESC systems for traction control and braking. The system also supports real-time data sharing, live video streaming, and AI-enhanced data analysis for driver training. LEADS VeC Remote Analyst enables transparency between the driver and pit crew, allowing real-time data sharing and analysis. The system is designed to be user-friendly, adaptable, and efficient for racing car development.

litserve

LitServe is a high-throughput serving engine for deploying AI models at scale. It generates an API endpoint for a model, handles batching, streaming, autoscaling across CPU/GPUs, and more. Built for enterprise scale, it supports every framework like PyTorch, JAX, Tensorflow, and more. LitServe is designed to let users focus on model performance, not the serving boilerplate. It is like PyTorch Lightning for model serving but with broader framework support and scalability.

mergekit

Mergekit is a toolkit for merging pre-trained language models. It uses an out-of-core approach to perform unreasonably elaborate merges in resource-constrained situations. Merges can be run entirely on CPU or accelerated with as little as 8 GB of VRAM. Many merging algorithms are supported, with more coming as they catch my attention.

raglite

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite. It offers configurable options for choosing LLM providers, database types, and rerankers. The toolkit is fast and permissive, utilizing lightweight dependencies and hardware acceleration. RAGLite provides features like PDF to Markdown conversion, multi-vector chunk embedding, optimal semantic chunking, hybrid search capabilities, adaptive retrieval, and improved output quality. It is extensible with a built-in Model Context Protocol server, customizable ChatGPT-like frontend, document conversion to Markdown, and evaluation tools. Users can configure RAGLite for various tasks like configuring, inserting documents, running RAG pipelines, computing query adapters, evaluating performance, running MCP servers, and serving frontends.

blinkid-ios

BlinkID iOS is a mobile SDK that enables developers to easily integrate ID scanning and data extraction capabilities into their iOS applications. The SDK supports scanning and processing various types of identity documents, such as passports, driver's licenses, and ID cards. It provides accurate and fast data extraction, including personal information and document details. With BlinkID iOS, developers can enhance their apps with secure and reliable ID verification functionality, improving user experience and streamlining identity verification processes.

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

Autono

A highly robust autonomous agent framework based on the ReAct paradigm, designed for adaptive decision making and multi-agent collaboration. It dynamically generates next actions during agent execution, enhancing robustness. Features a timely abandonment strategy and memory transfer mechanism for multi-agent collaboration. The framework allows developers to balance conservative and exploratory tendencies in agent execution strategies, improving adaptability and task execution efficiency in complex environments. Supports external tool integration, modular design, and MCP protocol compatibility for flexible action space expansion. Multi-agent collaboration mechanism enables agents to focus on specific task components, improving execution efficiency and quality.

Construction-Hazard-Detection

Construction-Hazard-Detection is an AI-driven tool focused on improving safety at construction sites by utilizing the YOLOv8 model for object detection. The system identifies potential hazards like overhead heavy loads and steel pipes, providing real-time analysis and warnings. Users can configure the system via a YAML file and run it using Docker. The primary dataset used for training is the Construction Site Safety Image Dataset enriched with additional annotations. The system logs are accessible within the Docker container for debugging, and notifications are sent through the LINE messaging API when hazards are detected.

Pixel-Reasoner

Pixel Reasoner is a framework that introduces reasoning in the pixel-space for Vision-Language Models (VLMs), enabling them to directly inspect, interrogate, and infer from visual evidences. This enhances reasoning fidelity for visual tasks by equipping VLMs with visual reasoning operations like zoom-in and select-frame. The framework addresses challenges like model's imbalanced competence and reluctance to adopt pixel-space operations through a two-phase training approach involving instruction tuning and curiosity-driven reinforcement learning. With these visual operations, VLMs can interact with complex visual inputs such as images or videos to gather necessary information, leading to improved performance across visual reasoning benchmarks.

lantern

Lantern is an open-source PostgreSQL database extension designed to store vector data, generate embeddings, and handle vector search operations efficiently. It introduces a new index type called 'lantern_hnsw' for vector columns, which speeds up 'ORDER BY ... LIMIT' queries. Lantern utilizes the state-of-the-art HNSW implementation called usearch. Users can easily install Lantern using Docker, Homebrew, or precompiled binaries. The tool supports various distance functions, index construction parameters, and operator classes for efficient querying. Lantern offers features like embedding generation, interoperability with pgvector, parallel index creation, and external index graph generation. It aims to provide superior performance metrics compared to other similar tools and has a roadmap for future enhancements such as cloud-hosted version, hardware-accelerated distance metrics, industry-specific application templates, and support for version control and A/B testing of embeddings.

co-llm

Co-LLM (Collaborative Language Models) is a tool for learning to decode collaboratively with multiple language models. It provides a method for data processing, training, and inference using a collaborative approach. The tool involves steps such as formatting/tokenization, scoring logits, initializing the Z vector, deferral training, and generating results using multiple models. Co-LLM supports training with different collaboration pairs and provides baseline training scripts for various models. In inference, it uses 'vllm' services to orchestrate models and generate results through API-like services. The tool is inspired by allenai/open-instruct and aims to improve decoding performance through collaborative learning.

For similar tasks

paxml

Pax is a framework to configure and run machine learning experiments on top of Jax.

dl_model_infer

This project is a C++ AI inference library that supports inference with TensorRT models. It provides accelerated deployment examples for popular deep learning CV models and supports dynamic-batch image processing, inference, decoding, and NMS. The project has been updated with various models and provides tutorials for model exports. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend inference classes, post-processing, pre-processing, and target detection and tracking. Speed tests have been conducted on various models, and ONNX downloads are available for different models.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

promptfoo

Promptfoo is a tool for testing and evaluating LLM output quality. With promptfoo, you can build reliable prompts, models, and RAGs with benchmarks specific to your use-case, speed up evaluations with caching, concurrency, and live reloading, score outputs automatically by defining metrics, use as a CLI, library, or in CI/CD, and use OpenAI, Anthropic, Azure, Google, HuggingFace, open-source models like Llama, or integrate custom API providers for any LLM API.

vespa

Vespa is a platform that performs operations such as selecting a subset of data in a large corpus, evaluating machine-learned models over the selected data, organizing and aggregating it, and returning it, typically in less than 100 milliseconds, all while the data corpus is continuously changing. It has been in development for many years and is used on a number of large internet services and apps which serve hundreds of thousands of queries from Vespa per second.

python-aiplatform

The Vertex AI SDK for Python is a library that provides a convenient way to use the Vertex AI API. It offers a high-level interface for creating and managing Vertex AI resources, such as datasets, models, and endpoints. The SDK also provides support for training and deploying custom models, as well as using AutoML models. With the Vertex AI SDK for Python, you can quickly and easily build and deploy machine learning models on Vertex AI.

ScandEval

ScandEval is a framework for evaluating pretrained language models on mono- or multilingual language tasks. It provides a unified interface for benchmarking models on a variety of tasks, including sentiment analysis, question answering, and machine translation. ScandEval is designed to be easy to use and extensible, making it a valuable tool for researchers and practitioners alike.

opencompass

OpenCompass is a one-stop platform for large model evaluation, aiming to provide a fair, open, and reproducible benchmark. Its main features include:
* Comprehensive support for models and datasets: Built-in support for 20+ HuggingFace and API models, and a model evaluation scheme of 70+ datasets with about 400,000 questions, comprehensively evaluating the capabilities of the models in five dimensions.
* Efficient distributed evaluation: A one-line command implements task division and distributed evaluation, completing the full evaluation of billion-scale models in just a few hours.
* Diversified evaluation paradigms: Support for zero-shot, few-shot, and chain-of-thought evaluations, combined with standard or dialogue-type prompt templates, to easily elicit the maximum performance of various models.
* Modular design with high extensibility: Want to add new models or datasets, customize an advanced task division strategy, or even support a new cluster management system? Everything about OpenCompass can be easily expanded!
* Experiment management and reporting mechanism: Use config files to fully record each experiment, with support for real-time reporting of results.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLMs) and benchmarks them via the `OSS-Fuzz` platform. It successfully leverages LLMs to generate valid fuzz targets (targets that produce a non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% over the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.