opencompass

OpenCompass is an LLM evaluation platform supporting a wide range of models (Llama3, Mistral, InternLM2, GPT-4, LLaMa2, Qwen, GLM, Claude, etc.) over 100+ datasets.

Stars: 4850

OpenCompass is a one-stop platform for large model evaluation, aiming to provide a fair, open, and reproducible benchmark for large model evaluation. Its main features include:

- Comprehensive support for models and datasets: Pre-support for 20+ HuggingFace and API models, a model evaluation scheme of 70+ datasets with about 400,000 questions, comprehensively evaluating the capabilities of the models in five dimensions.

- Efficient distributed evaluation: One line command to implement task division and distributed evaluation, completing the full evaluation of billion-scale models in just a few hours.

- Diversified evaluation paradigms: Support for zero-shot, few-shot, and chain-of-thought evaluations, combined with standard or dialogue-type prompt templates, to easily stimulate the maximum performance of various models.

- Modular design with high extensibility: Want to add new models or datasets, customize an advanced task division strategy, or even support a new cluster management system? Everything about OpenCompass can be easily expanded!

- Experiment management and reporting mechanism: Use config files to fully record each experiment, and support real-time reporting of results.
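The evaluation paradigms named above (zero-shot, few-shot, chain-of-thought) differ mainly in how the prompt is assembled. The following is a minimal sketch of that idea, not OpenCompass's own prompt template machinery: the function name `build_prompt`, the `Question:`/`Answer:` layout, and the chain-of-thought trigger phrase are all assumptions chosen for illustration.

```python
from typing import Sequence, Tuple


def build_prompt(question: str,
                 shots: Sequence[Tuple[str, str]] = (),
                 chain_of_thought: bool = False) -> str:
    """Assemble a prompt: zero-shot when `shots` is empty, few-shot otherwise."""
    # Each worked example becomes a solved question/answer pair.
    parts = [f"Question: {q}\nAnswer: {a}" for q, a in shots]
    # A chain-of-thought prompt nudges the model to reason before answering.
    suffix = " Let's think step by step." if chain_of_thought else ""
    parts.append(f"Question: {question}\nAnswer:{suffix}")
    return "\n\n".join(parts)


print(build_prompt("What is 7 * 6?", shots=[("What is 2 + 2?", "4")]))
```

With an empty `shots` sequence the same function yields a zero-shot prompt, so one code path covers all three paradigms.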

README:


Welcome to OpenCompass!

Just like a compass guides us on our journey, OpenCompass will guide you through the complex landscape of evaluating large language models. With its powerful algorithms and intuitive interface, OpenCompass makes it easy to assess the quality and effectiveness of your NLP models.

🚩🚩🚩 Explore opportunities at OpenCompass! We're currently hiring full-time researchers/engineers and interns. If you're passionate about LLMs and OpenCompass, don't hesitate to reach out to us via email. We'd love to hear from you!

🔥🔥🔥 We are delighted to announce that OpenCompass has been recommended by Meta AI; see Get Started of Llama for more information.

Attention

Breaking Change Notice: In version 0.4.0, we are consolidating all AMOTIC configuration files (previously located in ./configs/datasets, ./configs/models, and ./configs/summarizers) into the opencompass package. Users are advised to update their configuration references to reflect this structural change.

- [2025.02.28] We have added a tutorial for `DeepSeek-R1` series model, please check Evaluating Reasoning Model for more details! 🔥🔥🔥

- [2025.02.15] We have added two powerful evaluation tools: `GenericLLMEvaluator` for LLM-as-judge evaluations and `MATHEvaluator` for mathematical reasoning assessments. Check out the documentation for LLM Judge and Math Evaluation for more details! 🔥🔥🔥

- [2025.01.16] We now support the InternLM3-8B-Instruct model which has enhanced performance on reasoning and knowledge-intensive tasks.

- [2024.12.17] We have provided the evaluation script for the December CompassAcademic, which allows users to easily reproduce the official evaluation results by configuring it.

- [2024.11.14] OpenCompass now offers support for a sophisticated benchmark designed to evaluate complex reasoning skills — MuSR. Check out the demo and give it a spin! 🔥🔥🔥

- [2024.11.14] OpenCompass now supports the brand new long-context language model evaluation benchmark — BABILong. Have a look at the demo and give it a try! 🔥🔥🔥

- [2024.10.14] We now support the OpenAI multilingual QA dataset MMMLU. Feel free to give it a try! 🔥🔥🔥

- [2024.09.19] We now support Qwen2.5 (0.5B to 72B) with multiple backends (huggingface/vllm/lmdeploy). Feel free to give them a try! 🔥🔥🔥

- [2024.09.17] We now support OpenAI o1 (`o1-mini-2024-09-12` and `o1-preview-2024-09-12`). Feel free to give them a try! 🔥🔥🔥

- [2024.09.05] We now support answer extraction through model post-processing to provide a more accurate representation of the model's capabilities. As part of this update, we have integrated XFinder as our first post-processing model. For more detailed information, please refer to the documentation, and give it a try! 🔥🔥🔥

- [2024.08.20] OpenCompass now supports the SciCode: A Research Coding Benchmark Curated by Scientists. 🔥🔥🔥

- [2024.08.16] OpenCompass now supports the brand new long-context language model evaluation benchmark — RULER. RULER provides an evaluation of long-context including retrieval, multi-hop tracing, aggregation, and question answering through flexible configurations. Check out the RULER evaluation config now! 🔥🔥🔥

- [2024.08.09] We have released the example data and configuration for the CompassBench-202408, welcome to CompassBench for more details. 🔥🔥🔥

- [2024.08.01] We now support the Gemma2 models. Give them a try! 🔥🔥🔥

- [2024.07.23] We now support ModelScope datasets; you can load them on demand without downloading all the data to your local disk. Give it a try! 🔥🔥🔥

- [2024.07.17] We are excited to announce the release of NeedleBench's technical report. We invite you to visit our support documentation for detailed evaluation guidelines. 🔥🔥🔥

- [2024.07.04] OpenCompass now supports InternLM2.5, which has outstanding reasoning capability, a 1M context window, and stronger tool use. You can try the models in OpenCompass Config and InternLM. 🔥🔥🔥

- [2024.06.20] OpenCompass now supports one-click switching between inference acceleration backends, enhancing the efficiency of the evaluation process. In addition to the default HuggingFace inference backend, it now also supports popular backends LMDeploy and vLLM. This feature is available via a simple command-line switch and through deployment APIs. For detailed usage, see the documentation. 🔥🔥🔥
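The 2024.09.05 entry above mentions answer extraction as a post-processing step. To illustrate the general idea only, the sketch below pulls the final number out of a free-form response with a plain regular expression. This is a simple rule-based stand-in, not XFinder (which is a model) and not OpenCompass's own post-processor; the name `extract_last_number` is hypothetical.

```python
import re
from typing import Optional

# Integers and decimals, with an optional sign and thousands separators.
_NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def extract_last_number(response: str) -> Optional[str]:
    """Return the last number found in `response`, or None if there is none."""
    matches = _NUMBER.findall(response)
    if not matches:
        return None
    # Drop separators (and any trailing comma the pattern picked up).
    return matches[-1].replace(",", "")


print(extract_last_number("She buys 3 packs of 6, so the answer is 18."))
```

Taking the last number rather than the first reflects the common habit of stating the final answer at the end of the reasoning; a rule like this is brittle, which is the motivation for model-based extraction.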

We provide OpenCompass Leaderboard for the community to rank all public models and API models. If you would like to join the evaluation, please provide the model repository URL or a standard API interface to the email address [email protected].

You can also refer to CompassAcademic to quickly reproduce the leaderboard results. The currently selected datasets include Knowledge Reasoning (MMLU-Pro/GPQA Diamond), Logical Reasoning (BBH), Mathematical Reasoning (MATH-500, AIME), Code Generation (LiveCodeBench, HumanEval), and Instruction Following (IFEval).

Below are the steps for quick installation and datasets preparation.

We highly recommend using conda to manage your python environment.

    conda create --name opencompass python=3.10 -y
    conda activate opencompass

    pip install -U opencompass

    ## Full installation (with support for more datasets)
    # pip install "opencompass[full]"

    ## Environment with model acceleration frameworks
    ## Manage different acceleration frameworks using virtual environments
    ## since they usually have dependency conflicts with each other.
    # pip install "opencompass[lmdeploy]"
    # pip install "opencompass[vllm]"

    ## API evaluation (i.e. Openai, Qwen)
    # pip install "opencompass[api]"

If you want to use opencompass's latest features, or develop new features, you can also build it from source:

    git clone https://github.com/open-compass/opencompass opencompass
    cd opencompass
    pip install -e .
    # pip install -e ".[full]"
    # pip install -e ".[vllm]"
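After either installation route, it can help to confirm which version actually ended up in the active environment. The following is a small sketch using only the Python standard library; the helper name `installed_version` is hypothetical, and the distribution name `opencompass` is taken from the `pip install` command above.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("opencompass")
print(f"opencompass: {version or 'not installed'}")
```

Running this inside the `opencompass` conda environment should print a version string; outside it, the fallback message appears instead of an import error.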

You can choose one of the following methods to prepare the datasets.

You can download and extract the datasets with the following commands:

    # Download dataset to data/ folder
    wget https://github.com/open-compass/opencompass/releases/download/0.2.2.rc1/OpenCompassData-core-20240207.zip
    unzip OpenCompassData-core-20240207.zip

OpenCompass also supports downloading datasets automatically from the OpenCompass storage server. You can run the evaluation with the extra `--dry-run` flag to download these datasets.

The currently supported datasets are listed here. More datasets will be uploaded soon.
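For environments without `wget` or `unzip`, the manual download step can be reproduced from Python. This is a sketch using only the standard library; the URL is the one given in the commands above, while the helper names `download` and `extract` are hypothetical.

```python
import urllib.request
import zipfile
from pathlib import Path
from typing import List

DATA_URL = ("https://github.com/open-compass/opencompass/releases/download/"
            "0.2.2.rc1/OpenCompassData-core-20240207.zip")


def download(url: str, target: Path) -> Path:
    """Fetch `url` into `target`, skipping the download if the file exists."""
    if not target.exists():
        urllib.request.urlretrieve(url, str(target))
    return target


def extract(archive: Path, dest: Path) -> List[str]:
    """Unpack a zip archive into `dest` and return the member names."""
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)
        return zf.namelist()
```

Calling `extract(download(DATA_URL, Path("OpenCompassData-core-20240207.zip")), Path("."))` from the repository root would mirror the two shell commands; nothing is downloaded until the functions are called.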

You can also use ModelScope to load the datasets on demand.

Installation:

    pip install modelscope[framework]
    export DATASET_SOURCE=ModelScope

Then submit the evaluation task without downloading all the data to your local disk. Available datasets include:

    humaneval, triviaqa, commonsenseqa, tydiqa, strategyqa, cmmlu, lambada, piqa, ceval, math, LCSTS, Xsum, winogrande, openbookqa, AGIEval, gsm8k, nq, race, siqa, mbpp, mmlu, hellaswag, ARC, BBH, xstory_cloze, summedits, GAOKAO-BENCH, OCNLI, cmnli

Some third-party features, like Humaneval and Llama, may require additional steps to work properly; for detailed steps, please refer to the Installation Guide.

After ensuring that OpenCompass is installed correctly according to the above steps and the datasets are prepared, you can start your first evaluation using OpenCompass!

Your first evaluation with OpenCompass!

OpenCompass supports setting your configs via the CLI or a Python script. For simple evaluation settings we recommend the CLI; for more complex evaluations, a script is suggested.

    # CLI
    opencompass --models hf_internlm2_5_1_8b_chat --datasets demo_gsm8k_chat_gen

    # Python scripts
    opencompass examples/eval_chat_demo.py

You can find more script examples under the examples folder.
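When evaluations are launched from another program (a scheduler or a notebook, say), the CLI call can be assembled as an argument list rather than a shell string. The sketch below only builds and prints the command; the `--models` and `--datasets` flags and the config names come from the example above, while the helper `build_eval_command` is hypothetical, and passing several names after one flag is an assumption rather than something stated here.

```python
import shlex
from typing import List, Sequence


def build_eval_command(models: Sequence[str], datasets: Sequence[str]) -> List[str]:
    """Build the argument list for an `opencompass` CLI run."""
    if not models or not datasets:
        raise ValueError("at least one model and one dataset are required")
    return ["opencompass", "--models", *models, "--datasets", *datasets]


cmd = build_eval_command(["hf_internlm2_5_1_8b_chat"], ["demo_gsm8k_chat_gen"])
print(shlex.join(cmd))
# To launch it for real: subprocess.run(cmd, check=True)
```

Keeping the command as a list avoids shell quoting problems and makes it straightforward to hand to `subprocess.run`.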

API evaluation

OpenCompass, by design, does not distinguish between open-source models and API models. You can evaluate both model types in the same way, or even in the same settings.

    export OPENAI_API_KEY="YOUR_OPEN_API_KEY"
    # CLI
    opencompass --models gpt_4o_2024_05_13 --datasets demo_gsm8k_chat_gen
    # Python scripts
    opencompass examples/eval_api_demo.py

    # You can use o1_mini_2024_09_12/o1_preview_2024_09_12 for o1 models, we set max_completion_tokens=8192 as default.
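Because API runs depend on the `OPENAI_API_KEY` environment variable shown above, a wrapper script may want to fail early when the key is missing instead of partway through an evaluation. The following is a minimal sketch of such a check; the helper name `api_env` is hypothetical and is not part of OpenCompass.

```python
import os
from typing import Dict, Mapping


def api_env(base: Mapping[str, str], key_name: str = "OPENAI_API_KEY") -> Dict[str, str]:
    """Return a copy of `base` for a child process, failing early if the key is unset."""
    if not base.get(key_name):
        raise RuntimeError(f"{key_name} is not set; export it before running the evaluation")
    return dict(base)


try:
    env = api_env(os.environ)
    print("API key found; environment is ready for the child process")
except RuntimeError as err:
    print(err)
```

The returned dictionary can be passed as the `env` argument of `subprocess.run` when launching the `opencompass` command.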

Accelerated Evaluation

Additionally, if you want to use an inference backend other than HuggingFace for accelerated evaluation, such as LMDeploy or vLLM, you can do so with the command below. Please ensure that you have installed the necessary packages for the chosen backend and that your model supports accelerated inference with it. For more information, see the documentation on inference acceleration backends here. Below is an example using LMDeploy:

    # CLI
    opencompass --models hf_internlm2_5_1_8b_chat --datasets demo_gsm8k_chat_gen -a lmdeploy

    # Python scripts
    opencompass examples/eval_lmdeploy_demo.py

Supported Models

OpenCompass has predefined configurations for many models and datasets. You can list all available model and dataset configurations using the tools.

    # List all configurations
    python tools/list_configs.py
    # List all configurations related to llama and mmlu
    python tools/list_configs.py llama mmlu

If the model is not on the list but is supported by the HuggingFace AutoModel class, you can also evaluate it with OpenCompass. You are welcome to contribute to the maintenance of the OpenCompass supported model and dataset lists.

    opencompass --datasets demo_gsm8k_chat_gen --hf-type chat --hf-path internlm/internlm2_5-1_8b-chat

If you want to use multiple GPUs to evaluate the model in data parallel, you can use `--max-num-worker`.

    CUDA_VISIBLE_DEVICES=0,1 opencompass --datasets demo_gsm8k_chat_gen --hf-type chat --hf-path internlm/internlm2_5-1_8b-chat --max-num-worker 2

[!TIP]

`--hf-num-gpus` is used for model parallel (huggingface format), `--max-num-worker` is used for data parallel.
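To make the distinction in the tip concrete: in data parallel, each worker holds a full copy of the model and evaluates a different slice of the data, whereas model parallel splits one model across GPUs. The sketch below illustrates only the data-splitting half of that idea. It is a generic sharding routine written for this explanation, not OpenCompass's actual task partitioner, and the name `shard` is hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shard(samples: Sequence[T], num_workers: int) -> List[List[T]]:
    """Split `samples` into `num_workers` contiguous shards of near-equal size."""
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    base, extra = divmod(len(samples), num_workers)
    shards: List[List[T]] = []
    start = 0
    for i in range(num_workers):
        # The first `extra` shards take one additional sample each.
        size = base + (1 if i < extra else 0)
        shards.append(list(samples[start:start + size]))
        start += size
    return shards


questions = [f"q{i}" for i in range(5)]
for worker, part in enumerate(shard(questions, 2)):
    print(f"worker {worker}: {part}")
```

With two workers, as in the `--max-num-worker 2` example, the five sample questions are split three and two.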

[!TIP]

Configurations with `_ppl` are typically designed for base models. Configurations with `_gen` can be used for both base models and chat models.
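The `_ppl` suffix refers to perplexity-based evaluation: rather than generating text, the model scores each candidate answer, and the least surprising one is taken as its choice. That suits base models, which may not follow an instruction to output a letter. Below is a minimal sketch of the selection rule, assuming per-token log-probabilities are already available; the numbers are made up for illustration, and the function names are hypothetical rather than OpenCompass APIs.

```python
import math
from typing import Dict, List


def perplexity(token_logprobs: List[float]) -> float:
    """Perplexity is the exponential of the negative mean token log-probability."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def pick_by_ppl(candidates: Dict[str, List[float]]) -> str:
    """Return the label of the candidate with the lowest perplexity."""
    return min(candidates, key=lambda label: perplexity(candidates[label]))


# Hypothetical log-probabilities for the strings "question + option A/B/C".
scores = {
    "A": [-2.0, -1.5, -3.0],
    "B": [-0.5, -0.7, -0.6],
    "C": [-1.2, -2.2, -1.9],
}
print(pick_by_ppl(scores))
```

A `_gen` configuration instead lets the model generate an answer and then extracts the prediction from the generated text, which is why it works for chat models as well.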

Through the command line or configuration files, OpenCompass also supports evaluating APIs or custom models, as well as more diversified evaluation strategies. Please read the Quick Start to learn how to run an evaluation task.

We are thrilled to introduce OpenCompass 2.0, an advanced suite featuring three key components: CompassKit, CompassHub, and CompassRank.

CompassRank has been significantly enhanced into leaderboards that now incorporate both open-source and proprietary benchmarks. This upgrade allows for a more comprehensive evaluation of models across the industry.

CompassHub presents a pioneering benchmark browser interface, designed to simplify and expedite the exploration and utilization of an extensive array of benchmarks for researchers and practitioners alike. To enhance the visibility of your own benchmark within the community, we warmly invite you to contribute it to CompassHub. You may initiate the submission process by clicking here.

CompassKit is a powerful collection of evaluation toolkits specifically tailored for Large Language Models and Large Vision-language Models. It provides an extensive set of tools to assess and measure the performance of these complex models effectively. You are welcome to try our toolkits in your research and products.


The documentation on the OpenCompass website provides a statistical list of all datasets that can be used on this platform.

You can quickly find the dataset you need from the list through sorting, filtering, and searching functions.

Please refer to the dataset statistics chapter of the official documentation for details.


- [x] Subjective Evaluation
  - [x] Release CompassArena.
  - [x] Subjective evaluation.
- [x] Long-context
  - [x] Long-context evaluation with extensive datasets.
  - [ ] Long-context leaderboard.
- [x] Coding
  - [ ] Coding evaluation leaderboard.
  - [x] Non-python language evaluation service.
- [x] Agent
  - [ ] Support various agent frameworks.
  - [x] Evaluation of tool use of the LLMs.
- [x] Robustness
  - [x] Support various attack methods.

We appreciate all contributions to improving OpenCompass. Please refer to the contributing guideline for the best practice.


Some code in this project is cited and modified from OpenICL.

Some datasets and prompt implementations are modified from chain-of-thought-hub and instruct-eval.

@misc{2023opencompass,

title={OpenCompass: A Universal Evaluation Platform for Foundation Models},

author={OpenCompass Contributors},

howpublished = {\url{https://github.com/open-compass/opencompass}},

year={2023}

}

Alternative AI tools for opencompass

Similar Open Source Tools


PySpur

PySpur is a graph-based editor designed for LLM workflows, offering modular building blocks for easy workflow creation and debugging at node level. It allows users to evaluate final performance and promises self-improvement features in the future. PySpur is easy-to-hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies, making it a versatile tool for workflow management in the field of AI and machine learning.

BizyAir

BizyAir is a collection of ComfyUI nodes that help users overcome environmental and hardware limitations to generate high-quality content. It includes features such as ControlNet preprocessing, image background removal, photo-quality image generation, and animation super-resolution. Users can run ComfyUI anywhere without worrying about hardware requirements. Installation methods include using ComfyUI Manager, Comfy CLI, downloading standalone packages for Windows, or cloning the BizyAir repository into the custom_nodes subdirectory of ComfyUI.

Linly-Talker

Linly-Talker is an innovative digital human conversation system that integrates the latest artificial intelligence technologies, including Large Language Models (LLM) 🤖, Automatic Speech Recognition (ASR) 🎙️, Text-to-Speech (TTS) 🗣️, and voice cloning technology 🎤. This system offers an interactive web interface through the Gradio platform 🌐, allowing users to upload images 📷 and engage in personalized dialogues with AI 💬.

MME-RealWorld

MME-RealWorld is a benchmark designed to address real-world applications with practical relevance, featuring 13,366 high-resolution images and 29,429 annotations across 43 tasks. It aims to provide substantial recognition challenges and overcome common barriers in existing Multimodal Large Language Model benchmarks, such as small data scale, restricted data quality, and insufficient task difficulty. The dataset offers advantages in data scale, data quality, task difficulty, and real-world utility compared to existing benchmarks. It also includes a Chinese version with additional images and QA pairs focused on Chinese scenarios.

kalavai-client

Kalavai is an open-source platform that transforms everyday devices into an AI supercomputer by aggregating resources from multiple machines. It facilitates matchmaking of resources for large AI projects, making AI hardware accessible and affordable. Users can create local and public pools, connect with the community's resources, and share computing power. The platform aims to be a management layer for research groups and organizations, enabling users to unlock the power of existing hardware without needing a devops team. Kalavai CLI tool helps manage both versions of the platform.

LLM-Zero-to-Hundred

LLM-Zero-to-Hundred is a repository showcasing various applications of LLM chatbots and providing insights into training and fine-tuning Language Models. It includes projects like WebGPT, RAG-GPT, WebRAGQuery, LLM Full Finetuning, RAG-Master LLamaindex vs Langchain, open-source-RAG-GEMMA, and HUMAIN: Advanced Multimodal, Multitask Chatbot. The projects cover features like ChatGPT-like interaction, RAG capabilities, image generation and understanding, DuckDuckGo integration, summarization, text and voice interaction, and memory access. Tutorials include LLM Function Calling and Visualizing Text Vectorization. The projects have a general structure with folders for README, HELPER, .env, configs, data, src, images, and utils.

UFO

UFO is a UI-focused dual-agent framework to fulfill user requests on Windows OS by seamlessly navigating and operating within individual or spanning multiple applications.

partcad

PartCAD is a tool for documenting manufacturable physical products, providing tools to maintain product information and streamline workflows at all product lifecycle phases. It is a next-generation CAD tool that focuses on specifying manufacturable physical products using computer-aided design in a more generic sense, including the use of AI models. PartCAD offers modular and reusable packages for product information, generating outputs like product documentation, bill of materials, sourcing information, and manufacturing process specifications. It integrates with third-party tools for iterative improvements, design validation, and manufacturing processes verification. PartCAD also offers supplementary products like a CRM and inventory tool for managing part manufacturing and assembly shops. By enabling easy switching between third-party tools, PartCAD creates a competitive environment for service providers and ensures data sovereignty for users.

InternGPT

InternGPT (iGPT) is a pointing-language-driven visual interactive system that enhances communication between users and chatbots by incorporating pointing instructions. It improves chatbot accuracy in vision-centric tasks, especially in complex visual scenarios. The system includes an auxiliary control mechanism to enhance the control capability of the language model. InternGPT features a large vision-language model called Husky, fine-tuned for high-quality multi-modal dialogue. Users can interact with ChatGPT by clicking, dragging, and drawing using a pointing device, leading to efficient communication and improved chatbot performance in vision-related tasks.

hal-9100

This repository is now archived and the code is privately maintained. If you are interested in this infrastructure, please contact the maintainer directly.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

superduper

superduper.io is a Python framework that integrates AI models, APIs, and vector search engines directly with existing databases. It allows hosting of models, streaming inference, and scalable model training/fine-tuning. Key features include integration of AI with data infrastructure, inference via change-data-capture, scalable model training, model chaining, simple Python interface, Python-first approach, working with difficult data types, feature storing, and vector search capabilities. The tool enables users to turn their existing databases into centralized repositories for managing AI model inputs and outputs, as well as conducting vector searches without the need for specialized databases.

HPT

Hyper-Pretrained Transformers (HPT) is a novel multimodal LLM framework from HyperGAI, trained for vision-language models capable of understanding both textual and visual inputs. The repository contains the open-source implementation of inference code to reproduce the evaluation results of HPT Air on different benchmarks. HPT has achieved competitive results with state-of-the-art models on various multimodal LLM benchmarks. It offers models like HPT 1.5 Air and HPT 1.0 Air, providing efficient solutions for vision-and-language tasks.

Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

For similar tasks

byteir

The ByteIR Project is a ByteDance model compilation solution. ByteIR includes compiler, runtime, and frontends, and provides an end-to-end model compilation solution. Although all ByteIR components (compiler/runtime/frontends) are together to provide an end-to-end solution, and all under the same umbrella of this repository, each component technically can perform independently. The name, ByteIR, comes from a legacy purpose internally. The ByteIR project is NOT an IR spec definition project. Instead, in most scenarios, ByteIR directly uses several upstream MLIR dialects and Google Mhlo. Most of ByteIR compiler passes are compatible with the selected upstream MLIR dialects and Google Mhlo.

ScandEval

ScandEval is a framework for evaluating pretrained language models on mono- or multilingual language tasks. It provides a unified interface for benchmarking models on a variety of tasks, including sentiment analysis, question answering, and machine translation. ScandEval is designed to be easy to use and extensible, making it a valuable tool for researchers and practitioners alike.


openvino.genai

The GenAI repository contains pipelines that implement image and text generation tasks. The implementation uses OpenVINO capabilities to optimize the pipelines. Each sample covers a family of models and suggests certain modifications to adapt the code to specific needs. It includes the following pipelines: 1. Benchmarking script for large language models 2. Text generation C++ samples that support most popular models like LLaMA 2 3. Stable Diffusion (with LoRA) C++ image generation pipeline 4. Latent Consistency Model (with LoRA) C++ image generation pipeline

GPT4Point

GPT4Point is a unified framework for point-language understanding and generation. It aligns 3D point clouds with language, providing a comprehensive solution for tasks such as 3D captioning and controlled 3D generation. The project includes an automated point-language dataset annotation engine, a novel object-level point cloud benchmark, and a 3D multi-modality model. Users can train and evaluate models using the provided code and datasets, with a focus on improving models' understanding capabilities and facilitating the generation of 3D objects.

octopus-v4

The Octopus-v4 project aims to build the world's largest graph of language models, integrating specialized models and training Octopus models to connect nodes efficiently. The project focuses on identifying, training, and connecting specialized models. The repository includes scripts for running the Octopus v4 model, methods for managing the graph, training code for specialized models, and inference code. Environment setup instructions are provided for Linux with NVIDIA GPU. The Octopus v4 model helps users find suitable models for tasks and reformats queries for effective processing. The project leverages Large Language Models for various domains and provides benchmark results. Users are encouraged to train and add specialized models following recommended procedures.

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, aims to record advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub for researchers interested in promoting their work related to LLM RAG by updating paper information through pull requests. The repository covers various topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.

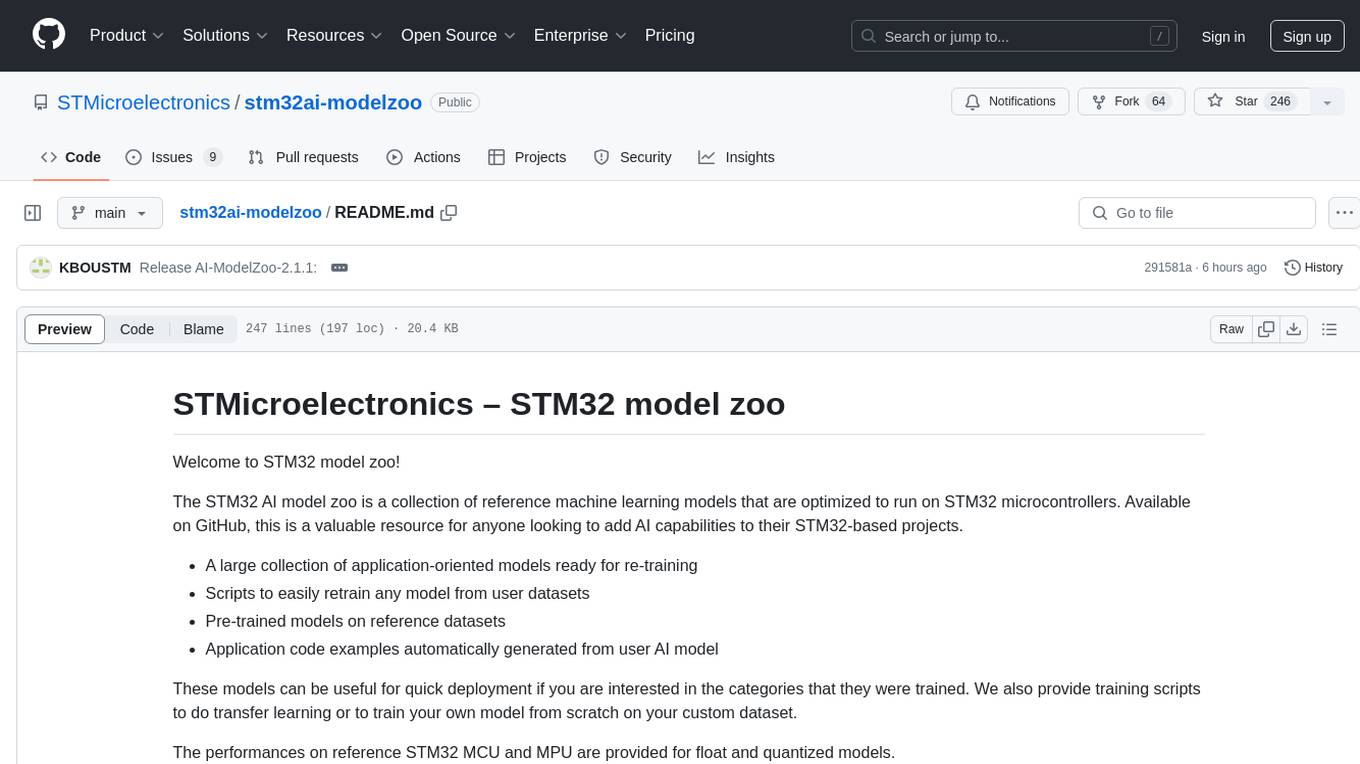

stm32ai-modelzoo

The STM32 AI model zoo is a collection of reference machine learning models optimized to run on STM32 microcontrollers. It provides a large collection of application-oriented models ready for re-training, scripts for easy retraining from user datasets, pre-trained models on reference datasets, and application code examples generated from user AI models. The project offers training scripts for transfer learning or training custom models from scratch. It includes performances on reference STM32 MCU and MPU for float and quantized models. The project is organized by application, providing step-by-step guides for training and deploying models.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.