stm32ai-modelzoo

AI Model Zoo for STM32 devices

Stars: 255

The STM32 AI model zoo is a collection of reference machine learning models optimized to run on STM32 microcontrollers. It provides a large collection of application-oriented models ready for re-training, scripts for easy retraining from user datasets, pre-trained models on reference datasets, and application code examples generated from user AI models. The project offers training scripts for transfer learning or for training custom models from scratch. It reports performance figures on reference STM32 MCUs and MPUs for both float and quantized models. The project is organized by application, with step-by-step guides for training and deploying models.

README:

Welcome to STM32 model zoo!

The STM32 AI model zoo is a collection of reference machine learning models that are optimized to run on STM32 microcontrollers. Available on GitHub, this is a valuable resource for anyone looking to add AI capabilities to their STM32-based projects.

- A large collection of application-oriented models ready for re-training

- Scripts to easily retrain any model from user datasets

- Pre-trained models on reference datasets

- Application code examples automatically generated from user AI models

These models can be useful for quick deployment if you are interested in the categories on which they were trained. We also provide training scripts to do transfer learning or to train your own model from scratch on your custom dataset.

Performance figures on reference STM32 MCUs and MPUs are provided for both float and quantized models.

This project is organized by application; for each application, a step-by-step guide explains how to train and deploy the models.

2.0:

- An aligned and uniform architecture for all the use cases.

- A modular design to run different operation modes (training, benchmarking, evaluation, deployment, quantization) independently, or to chain multiple modes in a single launch.

- A simple and single entry point to the code: a .yaml configuration file to configure all the needed services.

- Support for the Bring Your Own Model (BYOM) feature, which allows users to (re-)train their own models. An example is provided here, chapter 5.1.

- Support for the Bring Your Own Data (BYOD) feature, which allows users to fine-tune some pretrained models on their own datasets. An example is provided here, chapter 2.3.
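To illustrate the modular design, here is a minimal Python sketch of how one configuration could select a single operation mode or chain several in one launch. The service functions, the `operation_mode` and `model_path` keys, and the dictionaries standing in for a parsed .yaml file are all hypothetical; this is not the model zoo's actual code or configuration schema.

```python
from typing import Callable, Dict, List, Union


# Hypothetical stand-ins for the model zoo services. Each one receives the
# current state (here: the model to work on) and returns an updated copy.
def training(state: dict) -> dict:
    # Placeholder for training from a dataset: produces a float model.
    return {**state, "model": "float_model"}


def quantization(state: dict) -> dict:
    # Quantization needs an existing model, trained earlier in the chain or given by path.
    if state.get("model") is None:
        raise ValueError("quantization needs a model: chain it after training or set model_path")
    return {**state, "model": "quantized_model"}


def evaluation(state: dict) -> dict:
    # Placeholder for evaluation: records which model was evaluated.
    return {**state, "evaluated": state.get("model")}


SERVICES: Dict[str, Callable[[dict], dict]] = {
    "training": training,
    "quantization": quantization,
    "evaluation": evaluation,
}


def run(config: dict) -> dict:
    """Run one mode independently, or a list of modes chained in a single launch."""
    modes: Union[str, List[str]] = config["operation_mode"]
    if isinstance(modes, str):
        modes = [modes]  # a single mode runs on its own
    state = {"model": config.get("model_path"), "history": []}
    for mode in modes:
        if mode not in SERVICES:
            raise ValueError(f"unknown operation mode: {mode!r}")
        state = SERVICES[mode](state)
        state["history"] = state["history"] + [mode]
    return state


# These dictionaries stand in for a parsed .yaml file (key names are hypothetical).
print(run({"operation_mode": ["training", "quantization", "evaluation"]}))
print(run({"operation_mode": "evaluation", "model_path": "my_model.h5"}))
```

Because each service only maps the previous state to a new one, the same functions serve both an independent run and a chained run; only the list of modes in the configuration changes.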

2.1:

- Included additional models compatible with the STM32MP257F-EV1 board.

- Added support for per-tensor quantization.

- Integrated support for ONNX model quantization and evaluation.

- Included support for STEdgeAI (STM32Cube.AI v9.1.0 and subsequent versions).

- Expanded use case support to include Pose Estimation and Semantic Segmentation.

- Standardized logging information for a unified experience.
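Per-tensor quantization uses one scale for a whole weight tensor, whereas per-channel quantization uses one scale per output channel. The NumPy sketch below shows the difference with generic symmetric int8 quantization; it assumes the output channels are on the last axis and is not the quantizer used by the model zoo.

```python
import numpy as np


def symmetric_scales(weights: np.ndarray, per_channel: bool) -> np.ndarray:
    """Symmetric int8 scales (zero-point 0) mapping the largest |w| to 127.

    Per-tensor: one scale for the whole tensor.
    Per-channel: one scale per output channel, assumed to be the last axis.
    """
    if per_channel:
        max_abs = np.max(np.abs(weights), axis=tuple(range(weights.ndim - 1)))
    else:
        max_abs = np.max(np.abs(weights))
    return np.maximum(max_abs, 1e-12) / 127.0  # guard against all-zero channels


def quantize(weights: np.ndarray, scales: np.ndarray) -> np.ndarray:
    # Scales broadcast along the last axis (or are a single scalar).
    return np.clip(np.round(weights / scales), -127, 127).astype(np.int8)


def dequantize(q: np.ndarray, scales: np.ndarray) -> np.ndarray:
    return q.astype(np.float32) * scales


# Two output channels with very different ranges (columns = channels).
w = np.array([[0.01, 1.0], [-0.02, -0.5]], dtype=np.float32)
for per_channel in (False, True):
    s = symmetric_scales(w, per_channel)
    err = np.abs(dequantize(quantize(w, s), s) - w)[:, 0].max()
    print("per-channel" if per_channel else "per-tensor", "max error on channel 0:", err)
```

Per-tensor quantization is simpler, but a channel with a small range loses precision when it has to share the scale of a channel with a much larger range.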

[!TIP] For all use cases below, quick and easy examples are provided and can be executed for a fast ramp-up (click on the use-case links below).

Image classification (IC)

| Models | Input Resolutions | Supported Services | Suitable Targets for deployment |
|---|---|---|---|
| MobileNet v1 0.25 | 96x96x1, 96x96x3, 224x224x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| MobileNet v1 0.5 | 224x224x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| MobileNet v2 0.35 | 128x128x3, 224x224x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| MobileNet v2 1.0 | 224x224x3 | Full IC Services | STM32MP257F-EV1 |
| ResNet8 v1 | 32x32x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| ST ResNet8 | 32x32x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| ResNet32 v1 | 32x32x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| SqueezeNet v1.1 | 128x128x3, 224x224x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| FD MobileNet 0.25 | 128x128x3, 224x224x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| ST FD MobileNet | 128x128x3, 224x224x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| ST EfficientNet | 128x128x3, 224x224x3 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |
| Mnist | 28x28x1 | Full IC Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board, NUCLEO-H743ZI2 with B-CAMS-OMV camera daughter board |

Full IC Services: training, evaluation, quantization, benchmarking, prediction, deployment
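The input resolutions in these tables read as height x width x channels (for example, 96x96x1 is a single-channel input). As a rough sketch of preparing a camera frame for such a model, the NumPy code below resizes with nearest-neighbour sampling, scales pixels to [0, 1], and adds a batch axis. The resize method, the [0, 1] scaling and the naive grayscale conversion are assumptions for illustration; the actual preprocessing of each use case is set in its configuration and may differ.

```python
import numpy as np


def parse_resolution(text: str) -> tuple:
    """'128x128x3' -> (128, 128, 3), read as height x width x channels."""
    height, width, channels = (int(part) for part in text.lower().split("x"))
    return height, width, channels


def to_model_input(image: np.ndarray, resolution: str) -> np.ndarray:
    """Resize an HxWx3 uint8 image (nearest neighbour), scale to [0, 1], add a batch axis."""
    height, width, channels = parse_resolution(resolution)
    rows = np.arange(height) * image.shape[0] // height  # source row for each output row
    cols = np.arange(width) * image.shape[1] // width    # source column for each output column
    resized = image[rows][:, cols].astype(np.float32) / 255.0
    if channels == 1:
        resized = resized.mean(axis=-1, keepdims=True)  # naive grayscale conversion
    elif channels != resized.shape[-1]:
        raise ValueError(f"cannot map {resized.shape[-1]} channels to {channels}")
    return resized[np.newaxis, ...]  # shape (1, height, width, channels)


frame = np.random.randint(0, 256, size=(480, 640, 3), dtype=np.uint8)  # e.g. a camera frame
print(to_model_input(frame, "96x96x1").shape)
print(to_model_input(frame, "224x224x3").shape)
```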

Object Detection (OD)

| Models | Input Resolutions | Supported Services | Targets for deployment |
|---|---|---|---|
| ST SSD MobileNet v1 0.25 | 192x192x3, 224x224x3, 256x256x3 | Full OD Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board |
| SSD MobileNet v2 fpn lite 0.35 | 192x192x3, 224x224x3, 256x256x3, 416x416x3 | Full OD Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board or STM32MP257F-EV1 |
| SSD MobileNet v2 fpn lite 1.0 | 256x256x3, 416x416x3 | Full OD Services | STM32MP257F-EV1 |
| ST Yolo LC v1 | 192x192x3, 224x224x3, 256x256x3 | Full OD Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board |
| Tiny Yolo v2 | 224x224x3, 416x416x3 | Full OD Services | STM32H747I-DISCO with B-CAMS-OMV camera daughter board |

Full OD Services: training, evaluation, quantization, benchmarking, prediction, deployment

Pose Estimation (PE)

| Models | Input Resolutions | Supported Services | Targets for deployment |
|---|---|---|---|
| Yolo v8 n pose | 256x256x3 | Evaluation / Benchmarking / Prediction / Deployment | STM32MP257F-EV1 |
| MoveNet 17 kps | 192x192x3, 224x224x3, 256x256x3 | Evaluation / Quantization / Benchmarking / Prediction | N/A |
| ST MoveNet 13 kps | 192x192x3 | Evaluation / Quantization / Benchmarking / Prediction | N/A |

Segmentation (Seg)

| Models | Input Resolutions | Supported Services | Targets for deployment |
|---|---|---|---|
| DeepLab v3 | 512x512x3 | Full Seg Services | STM32MP257F-EV1 |

Full Seg Services: training, evaluation, quantization, benchmarking, prediction, deployment

Human Activity Recognition (HAR)

Human Activity Recognition use case

| Models | Input Resolutions | Supported Services | Targets for deployment |
|---|---|---|---|
| gmp | 24x3x1, 48x3x1 | Training / Evaluation / Benchmarking / Deployment | B-U585I-IOT02A using ThreadX RTOS |
| ign | 24x3x1, 48x3x1 | Training / Evaluation / Benchmarking / Deployment | B-U585I-IOT02A using ThreadX RTOS |
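For the HAR models, this sketch assumes that 24x3x1 and 48x3x1 mean windows of 24 or 48 samples of the three accelerometer axes (x, y, z) with one trailing channel. Under that assumption, cutting a continuous sensor stream into model-sized inputs could look like the following; the non-overlapping default step is a hypothetical choice, and the project's own data pipeline may preprocess the signal differently.

```python
import numpy as np


def make_windows(samples: np.ndarray, window: int = 24, step: int = 24) -> np.ndarray:
    """Cut an (N, 3) stream of x/y/z accelerometer samples into (num_windows, window, 3, 1) frames."""
    if samples.ndim != 2 or samples.shape[1] != 3:
        raise ValueError("expected an (N, 3) array of x, y, z samples")
    starts = list(range(0, samples.shape[0] - window + 1, step))
    if not starts:  # stream shorter than one window
        return np.empty((0, window, 3, 1), dtype=samples.dtype)
    frames = np.stack([samples[s:s + window] for s in starts])
    return frames[..., np.newaxis]  # add the trailing channel axis


stream = np.random.randn(100, 3).astype(np.float32)  # stand-in for recorded accelerometer data
print(make_windows(stream).shape)
print(make_windows(stream, window=48, step=24).shape)
```

A step smaller than the window (as in the second call) produces overlapping windows, which yields more training examples from the same recording at the cost of correlated samples.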

Audio Event Detection (AED)

Audio Event Detection use case

| Models | Input Resolutions | Supported Services | Targets for deployment |
|---|---|---|---|
| miniresnet | 64x50x1 | Full AED Services | B-U585I-IOT02A using RTOS, ThreadX or FreeRTOS |
| miniresnet v2 | 64x50x1 | Full AED Services | B-U585I-IOT02A using RTOS, ThreadX or FreeRTOS |
| yamnet 256 | 64x96x1 | Full AED Services | B-U585I-IOT02A using RTOS, ThreadX or FreeRTOS |

Full AED Services: training, evaluation, quantization, benchmarking, prediction, deployment

Hand Posture Recognition (HPR)

Hand Posture Recognition use case

| Models | Input Resolutions | Supported Services | Targets for deployment |
|---|---|---|---|
| ST CNN 2D Hand Posture | 64x50x1 | Training / Evaluation / Benchmarking / Deployment | NUCLEO-F401RE with X-NUCLEO-53LxA1 Time-of-Flight Nucleo expansion board |

- stm32ai_model_zoo_colab.ipynb: a Jupyter notebook that can be easily deployed on Colab to exercise STM32 model zoo training scripts.

- stm32ai_devcloud.ipynb: a Jupyter notebook that shows how to access the STM32Cube.AI Developer Cloud through ST Python APIs (based on a REST API) instead of using the web application https://stm32ai-cs.st.com.

- stm32ai_quantize_onnx_benchmark.ipynb: a Jupyter notebook that shows how to quantize ONNX models with fake or real data using ONNX Runtime, and how to benchmark them using the STM32Cube.AI Developer Cloud.

- STM32 Developer Cloud examples: a collection of Python scripts that you can use in order to get started with STM32Cube.AI Developer Cloud ST Python APIs.

- Tutorial video: discover how to create an AI application for image classification using the STM32 model zoo.

- stm32ai-tao: this GitHub repository provides Python scripts and Jupyter notebooks to manage the complete life cycle of a model, from training to compression, optimization, and benchmarking, using NVIDIA TAO Toolkit and STM32Cube.AI Developer Cloud.

- stm32ai-nota: this GitHub repository contains Jupyter notebooks that demonstrate how to use NetsPresso to prune pre-trained deep learning models from the model zoo and fine-tune, quantize and benchmark them by using STM32Cube.AI Developer Cloud for your specific use case.
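The ONNX quantization notebook mentions calibrating with either fake or real data. As a generic illustration of what static calibration computes, the NumPy sketch below derives an asymmetric uint8 scale and zero-point from the minimum and maximum observed over calibration batches. It does not use the ONNX Runtime API and is not the notebook's code; the input shape of the fake batches is an assumption.

```python
import numpy as np


def calibrate_uint8(batches) -> tuple:
    """Min-max calibration: asymmetric uint8 scale and zero-point from sample activations."""
    lo, hi = np.inf, -np.inf
    for batch in batches:
        lo = min(lo, float(np.min(batch)))
        hi = max(hi, float(np.max(batch)))
    lo, hi = min(lo, 0.0), max(hi, 0.0)  # keep 0.0 inside the range so it maps to an exact integer
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = int(round(-lo / scale))
    return scale, zero_point


def quantize_uint8(x: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    return np.clip(np.round(x / scale) + zero_point, 0, 255).astype(np.uint8)


# "Fake" calibration data: random tensors with an assumed model input shape.
rng = np.random.default_rng(0)
fake_batches = [rng.uniform(-1.0, 1.0, size=(1, 128, 128, 3)) for _ in range(8)]
# "Real" calibration data would instead be preprocessed samples from the target dataset.
scale, zero_point = calibrate_uint8(fake_batches)
print(scale, zero_point)
```

Fake data is enough to exercise the flow and to benchmark speed, but because the scale comes from the observed ranges, accuracy is typically only representative when calibrating with real samples.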

For a more in-depth guide on installing and setting up the model zoo and its requirements on your PC, especially when you are running behind a proxy in a corporate setup, follow the detailed wiki article How to install STM32 model zoo.

- Create an account on myST and then sign in to STM32Cube.AI Developer Cloud to be able to access the service.

- Or, install STM32Cube.AI locally by following the instructions provided in the user manual in section 2, and get the path to the stm32ai executable.

  - Alternatively, download the latest version of STM32Cube.AI for your OS, extract the package, and get the path to the stm32ai executable.

- If you don't have Python already installed, you can download and install it from here. Python version 3.10.x is required to run the code.

- For Windows systems, make sure to check the Add python.exe to PATH option during the installation process.

- If using a GPU, make sure to install the GPU driver. For NVIDIA GPUs, please refer to https://docs.nvidia.com/deeplearning/cudnn/install-guide/index.html to install CUDA and cuDNN. On Windows, avoid WSL if you want the best GPU training acceleration. If using conda, see below for installation.

- Clone this repository using the following command:

git clone https://github.com/STMicroelectronics/stm32ai-modelzoo.git

- Create a Python virtual environment for the project:

cd stm32ai-modelzoo
python -m venv st_zoo

Activate your virtual environment. On Windows, run:

st_zoo\Scripts\activate.bat

On Unix or macOS, run:

source st_zoo/bin/activate

- Or create a conda virtual environment for the project:

cd stm32ai-modelzoo
conda create -n st_zoo

Activate your virtual environment:

conda activate st_zoo

Install Python 3.10:

conda install -c conda-forge python=3.10

If using an NVIDIA GPU, install cudatoolkit and cudnn and add them to the conda path:

conda install -c conda-forge cudatoolkit=11.8 cudnn

Add cudatoolkit and cudnn to the path permanently:

mkdir -p $CONDA_PREFIX/etc/conda/activate.d
echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$CONDA_PREFIX/lib/' > $CONDA_PREFIX/etc/conda/activate.d/env_vars.sh

- Then install all the necessary Python packages; the requirements file contains them all:

pip install -r requirements.txt

In tutorials/notebooks you will find a Jupyter notebook that can be easily deployed on Colab to exercise the STM32 model zoo training scripts.

[!IMPORTANT] In this project, we are using TensorFlow version 2.8.3 because of unresolved issues with newer versions of TensorFlow; see more.
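Since the code requires Python 3.10.x and the project is pinned to TensorFlow 2.8.3, a small check can catch a mismatched environment before a long training run. This sketch uses only the standard library; the distribution name `tensorflow` is an assumption, since a GPU- or platform-specific build may be installed under a different name.

```python
import sys
from importlib import metadata
from typing import List, Optional, Sequence

REQUIRED_PYTHON = (3, 10)     # "Python Version == 3.10.x"
PINNED_TENSORFLOW = "2.8.3"   # TensorFlow version used by the project


def python_ok(version_info: Sequence[int] = sys.version_info) -> bool:
    """True when the major.minor version is 3.10 (any patch level)."""
    return tuple(version_info[:2]) == REQUIRED_PYTHON


def installed_version(distribution: str) -> Optional[str]:
    """Installed version of a distribution, or None if it is not installed."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


def check_environment() -> List[str]:
    problems = []
    if not python_ok():
        problems.append(f"Python {sys.version.split()[0]} found, 3.10.x required")
    tf_version = installed_version("tensorflow")  # distribution name is an assumption
    if tf_version != PINNED_TENSORFLOW:
        problems.append(f"tensorflow {tf_version or 'not installed'} found, {PINNED_TENSORFLOW} expected")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```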

[!CAUTION] White spaces in the paths (for the Python, STM32CubeIDE, or STM32Cube.AI local installations) can result in errors, so avoid paths that contain white spaces.
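A quick way to act on this caution is to scan the relevant installation paths for white space before running anything. In this sketch, looking up `stm32ai` on the PATH and the placeholder STM32CubeIDE entry are assumptions; fill in the paths actually used on your machine.

```python
import os
import shutil
import sys
from typing import Dict, Optional


def find_whitespace_paths(paths: Dict[str, Optional[str]]) -> Dict[str, str]:
    """Return the entries whose path contains a whitespace character (missing paths are skipped)."""
    return {name: path for name, path in paths.items() if path and any(ch.isspace() for ch in path)}


if __name__ == "__main__":
    candidates = {
        "python": sys.executable,
        "stm32ai": shutil.which("stm32ai"),  # None if stm32ai is not on PATH
        "project": os.getcwd(),
        "stm32cubeide": None,  # hypothetical entry: put your STM32CubeIDE installation path here
    }
    for name, path in find_whitespace_paths(candidates).items():
        print(f"WARNING: {name} path contains white space: {path}")
```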

[!TIP] In this project we are using the mlflow library to log the results of different runs. Depending on which version of Windows you are using, or where you place the project, the output log files might have a very long path, which can result in an error when the results are logged. By default, Windows limits paths to MAX_PATH, 260 characters: Naming Files, Paths, and Namespaces. To avoid this potential error, create (or edit) a variable named LongPathsEnabled in Registry Editor under Computer/HKEY_LOCAL_MACHINE/SYSTEM/CurrentControlSet/Control/FileSystem/ and assign it a value of 1. This raises the maximum path length allowed on Windows machines and avoids the resulting errors. For more details, have a look at this link. Note that when using Git, the line below may help solve the long path issue:

git config --system core.longpaths true
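To check whether the long-path issue can affect you before changing the registry, a short script can list files whose absolute path reaches the MAX_PATH limit (260 characters including the terminating null character, per Microsoft's documentation). This is a diagnostic sketch, not part of the project; running it from the project directory after a training run includes the generated output folders.

```python
import os
from typing import List

MAX_PATH = 260  # Windows default limit, including the terminating null character


def long_paths(root: str, limit: int = MAX_PATH) -> List[str]:
    """Return absolute paths of files under `root` whose length would hit the limit."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full_path = os.path.abspath(os.path.join(dirpath, name))
            # A usable path has at most limit - 1 characters (one is reserved for the null).
            if len(full_path) >= limit:
                hits.append(full_path)
    return hits


if __name__ == "__main__":
    for path in long_paths("."):
        print("Too long for MAX_PATH:", path)
```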


Alternative AI tools for stm32ai-modelzoo

Similar Open Source Tools

stm32ai-modelzoo

The STM32 AI model zoo is a collection of reference machine learning models optimized to run on STM32 microcontrollers. It provides a large collection of application-oriented models ready for re-training, scripts for easy retraining from user datasets, pre-trained models on reference datasets, and application code examples generated from user AI models. The project offers training scripts for transfer learning or training custom models from scratch. It includes performances on reference STM32 MCU and MPU for float and quantized models. The project is organized by application, providing step-by-step guides for training and deploying models.

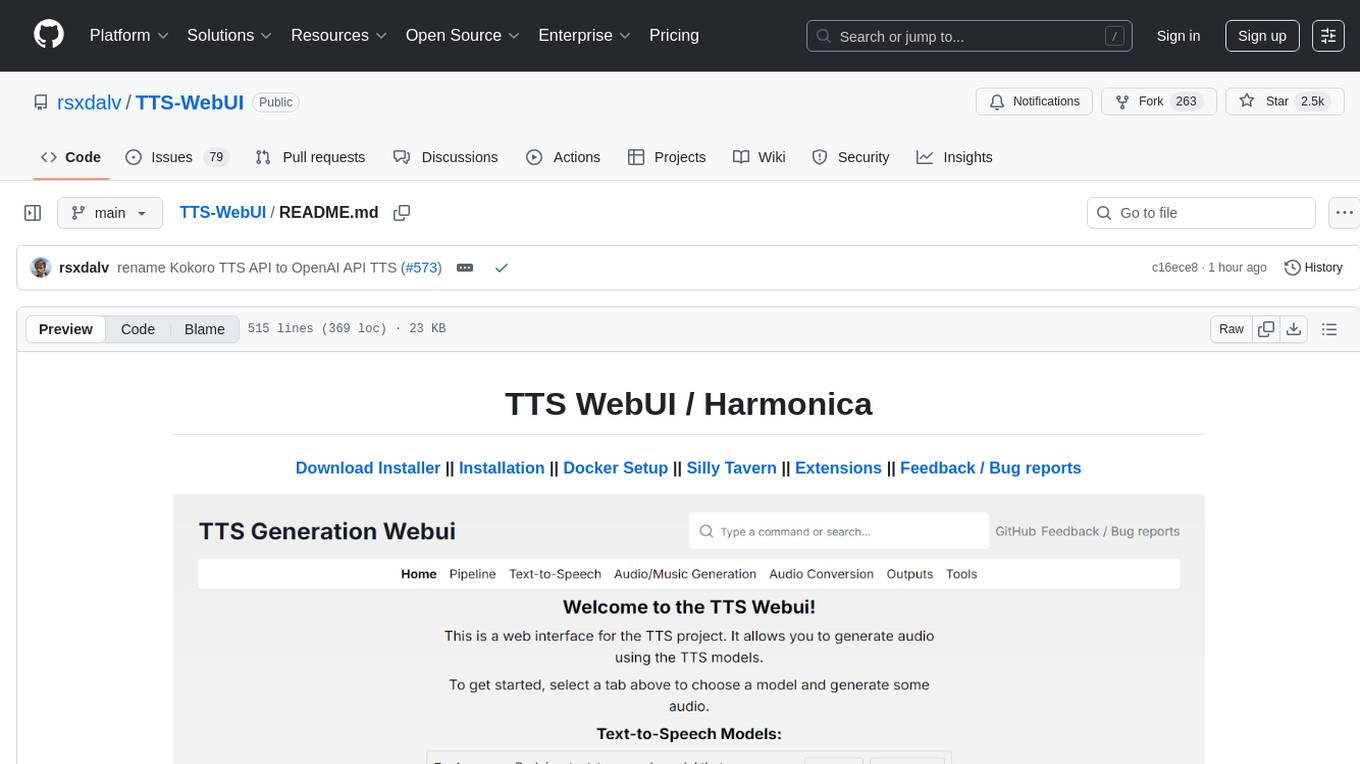

TTS-WebUI

TTS WebUI is a comprehensive tool for text-to-speech synthesis, audio/music generation, and audio conversion. It offers a user-friendly interface for various AI projects related to voice and audio processing. The tool provides a range of models and extensions for different tasks, along with integrations like Silly Tavern and OpenWebUI. With support for Docker setup and compatibility with Linux and Windows, TTS WebUI aims to facilitate creative and responsible use of AI technologies in a user-friendly manner.

lemonade

Lemonade is a tool that helps users run local Large Language Models (LLMs) with high performance by configuring state-of-the-art inference engines for their Neural Processing Units (NPUs) and Graphics Processing Units (GPUs). It is used by startups, research teams, and large companies to run LLMs efficiently. Lemonade provides a high-level Python API for direct integration of LLMs into Python applications and a CLI for mixing and matching LLMs with various features like prompting templates, accuracy testing, performance benchmarking, and memory profiling. The tool supports both GGUF and ONNX models and allows importing custom models from Hugging Face using the Model Manager. Lemonade is designed to be easy to use and switch between different configurations at runtime, making it a versatile tool for running LLMs locally.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It extends its support to a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently undergoing active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our Roadmap for more details.

Key Features

- High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, Continuous batching, and more.

- Tensor Parallelism: Utilizes tensor parallelism for efficient model execution.

- OpenAI-compatible API: An efficient Golang REST API server that is compatible with OpenAI.

- Huggingface models: Seamless integration with most popular HF models, supporting safetensors.

- Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models.

- Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.

helicone

Helicone is an open-source observability platform designed for Large Language Models (LLMs). It logs requests to OpenAI in a user-friendly UI, offers caching, rate limits, and retries, tracks costs and latencies, provides a playground for iterating on prompts and chat conversations, supports collaboration, and will soon have APIs for feedback and evaluation. The platform is deployed on Cloudflare and consists of services like Web (NextJs), Worker (Cloudflare Workers), Jawn (Express), Supabase, and ClickHouse. Users can interact with Helicone locally by setting up the required services and environment variables. The platform encourages contributions and provides resources for learning, documentation, and integrations.

UMOE-Scaling-Unified-Multimodal-LLMs

Uni-MoE is a MoE-based unified multimodal model that can handle diverse modalities including audio, speech, image, text, and video. The project focuses on scaling Unified Multimodal LLMs with a Mixture of Experts framework. It offers enhanced functionality for training across multiple nodes and GPUs, as well as parallel processing at both the expert and modality levels. The model architecture involves three training stages: building connectors for multimodal understanding, developing modality-specific experts, and incorporating multiple trained experts into LLMs using the LoRA technique on mixed multimodal data. The tool provides instructions for installation, weights organization, inference, training, and evaluation on various datasets.

autogen

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

Home-AssistantConfig

Bear Stone Smart Home Documentation is a live, personal Home Assistant configuration shared for browsing and inspiration. It provides real-world automations, scripts, and scenes for users to reverse-engineer patterns and adapt to their own setups. The repository layout includes reusable config under `config/`, with runtime artifacts hidden by `.gitignore`. The platform runs on Docker/compose, and featured examples cover various smart home scenarios like alarm monitoring, lighting control, and presence awareness. The repository also includes a network diagram and lists Docker add-ons & utilities, gear tied to real automations, and affiliate links for supported hardware.

ASTRA.ai

ASTRA is an open-source platform designed for developing applications utilizing large language models. It merges the ideas of Backend-as-a-Service and LLM operations, allowing developers to swiftly create production-ready generative AI applications. Additionally, it empowers non-technical users to engage in defining and managing data operations for AI applications. With ASTRA, you can easily create real-time, multi-modal AI applications with low latency, even without any coding knowledge.

nacos

Nacos is an easy-to-use platform designed for dynamic service discovery, configuration, and service management. It helps you build cloud-native applications and microservice platforms easily. Nacos provides functions like service discovery, health check, dynamic configuration management, dynamic DNS service, and service metadata management.

RAG-Driven-Generative-AI

RAG-Driven Generative AI provides a roadmap for building effective LLM, computer vision, and generative AI systems that balance performance and costs. This book offers a detailed exploration of RAG and how to design, manage, and control multimodal AI pipelines. By connecting outputs to traceable source documents, RAG improves output accuracy and contextual relevance, offering a dynamic approach to managing large volumes of information. This AI book also shows you how to build a RAG framework, providing practical knowledge on vector stores, chunking, indexing, and ranking. You'll discover techniques to optimize your project's performance and better understand your data, including using adaptive RAG and human feedback to refine retrieval accuracy, balancing RAG with fine-tuning, implementing dynamic RAG to enhance real-time decision-making, and visualizing complex data with knowledge graphs. You'll be exposed to a hands-on blend of frameworks like LlamaIndex and Deep Lake, vector databases such as Pinecone and Chroma, and models from Hugging Face and OpenAI. By the end of this book, you will have acquired the skills to implement intelligent solutions, keeping you competitive in fields ranging from production to customer service across any project.

PromptClip

PromptClip is a tool that allows developers to create video clips using LLM prompts. Users can upload videos from various sources, prompt the video in natural language, use different LLM models, instantly watch the generated clips, finetune the clips, and add music or image overlays. The tool provides a seamless way to extract specific moments from videos based on user queries, making video editing and content creation more efficient and intuitive.

palico-ai

Palico AI is a tech stack designed for rapid iteration of LLM applications. It allows users to preview changes instantly, improve performance through experiments, debug issues with logs and tracing, deploy applications behind a REST API, and manage applications with a UI control panel. Users have complete flexibility in building their applications with Palico, integrating with various tools and libraries. The tool enables users to swap models, prompts, and logic easily using AppConfig. It also facilitates performance improvement through experiments and provides options for deploying applications to cloud providers or using managed hosting. Contributions to the project are welcomed, with easy ways to get involved by picking issues labeled as 'good first issue'.

LLaMA-Factory

LLaMA Factory is a unified framework for fine-tuning 100+ large language models (LLMs) with various methods, including pre-training, supervised fine-tuning, reward modeling, PPO, DPO and ORPO. It features integrated algorithms like GaLore, BAdam, DoRA, LongLoRA, LLaMA Pro, LoRA+, LoftQ and Agent tuning, as well as practical tricks like FlashAttention-2, Unsloth, RoPE scaling, NEFTune and rsLoRA. LLaMA Factory provides experiment monitors like LlamaBoard, TensorBoard, Wandb, MLflow, etc., and supports faster inference with OpenAI-style API, Gradio UI and CLI with vLLM worker. Compared to ChatGLM's P-Tuning, LLaMA Factory's LoRA tuning offers up to 3.7 times faster training speed with a better Rouge score on the advertising text generation task. By leveraging 4-bit quantization technique, LLaMA Factory's QLoRA further improves the efficiency regarding the GPU memory.

For similar tasks

byteir

The ByteIR Project is a ByteDance model compilation solution. ByteIR includes compiler, runtime, and frontends, and provides an end-to-end model compilation solution. Although all ByteIR components (compiler/runtime/frontends) work together to provide an end-to-end solution, and all are under the same umbrella of this repository, each component can technically perform independently. The name, ByteIR, comes from a legacy purpose internally. The ByteIR project is NOT an IR spec definition project. Instead, in most scenarios, ByteIR directly uses several upstream MLIR dialects and Google Mhlo. Most of the ByteIR compiler passes are compatible with the selected upstream MLIR dialects and Google Mhlo.

ScandEval

ScandEval is a framework for evaluating pretrained language models on mono- or multilingual language tasks. It provides a unified interface for benchmarking models on a variety of tasks, including sentiment analysis, question answering, and machine translation. ScandEval is designed to be easy to use and extensible, making it a valuable tool for researchers and practitioners alike.

opencompass

OpenCompass is a one-stop platform for large model evaluation, aiming to provide a fair, open, and reproducible benchmark for large model evaluation. Its main features include:

- Comprehensive support for models and datasets: Pre-support for 20+ HuggingFace and API models, a model evaluation scheme of 70+ datasets with about 400,000 questions, comprehensively evaluating the capabilities of the models in five dimensions.

- Efficient distributed evaluation: One-line command to implement task division and distributed evaluation, completing the full evaluation of billion-scale models in just a few hours.

- Diversified evaluation paradigms: Support for zero-shot, few-shot, and chain-of-thought evaluations, combined with standard or dialogue-type prompt templates, to easily stimulate the maximum performance of various models.

- Modular design with high extensibility: Want to add new models or datasets, customize an advanced task division strategy, or even support a new cluster management system? Everything about OpenCompass can be easily expanded!

- Experiment management and reporting mechanism: Use config files to fully record each experiment, and support real-time reporting of results.

openvino.genai

The GenAI repository contains pipelines that implement image and text generation tasks. The implementation uses OpenVINO capabilities to optimize the pipelines. Each sample covers a family of models and suggests certain modifications to adapt the code to specific needs. It includes the following pipelines:

1. Benchmarking script for large language models
2. Text generation C++ samples that support most popular models like LLaMA 2
3. Stable Diffusion (with LoRA) C++ image generation pipeline
4. Latent Consistency Model (with LoRA) C++ image generation pipeline

GPT4Point

GPT4Point is a unified framework for point-language understanding and generation. It aligns 3D point clouds with language, providing a comprehensive solution for tasks such as 3D captioning and controlled 3D generation. The project includes an automated point-language dataset annotation engine, a novel object-level point cloud benchmark, and a 3D multi-modality model. Users can train and evaluate models using the provided code and datasets, with a focus on improving models' understanding capabilities and facilitating the generation of 3D objects.

octopus-v4

The Octopus-v4 project aims to build the world's largest graph of language models, integrating specialized models and training Octopus models to connect nodes efficiently. The project focuses on identifying, training, and connecting specialized models. The repository includes scripts for running the Octopus v4 model, methods for managing the graph, training code for specialized models, and inference code. Environment setup instructions are provided for Linux with NVIDIA GPU. The Octopus v4 model helps users find suitable models for tasks and reformats queries for effective processing. The project leverages large language models for various domains and provides benchmark results. Users are encouraged to train and add specialized models following recommended procedures.

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, aims to record advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub for researchers interested in promoting their work related to LLM RAG by updating paper information through pull requests. The repository covers various topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.


For similar jobs

Qwen-TensorRT-LLM

Qwen-TensorRT-LLM is a project developed for the NVIDIA TensorRT Hackathon 2023, focusing on accelerating inference for the Qwen-7B-Chat model using TRT-LLM. The project offers various functionalities such as FP16/BF16 support, INT8 and INT4 quantization options, Tensor Parallel for multi-GPU parallelism, web demo setup with gradio, Triton API deployment for maximum throughput/concurrency, fastapi integration for openai requests, CLI interaction, and langchain support. It supports models like qwen2, qwen, and qwen-vl for both base and chat models. The project also provides tutorials on Bilibili and blogs for adapting Qwen models in NVIDIA TensorRT-LLM, along with hardware requirements and quick start guides for different model types and quantization methods.

dl_model_infer

This project is a C++ AI inference library that supports running TensorRT models. It provides accelerated deployment cases of popular deep learning CV models and supports dynamic-batch image processing, inference, decoding, and NMS. The project has been updated with various models and provides tutorials for model exports. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend inference classes, post-processing, pre-processing, and target detection and tracking. Speed tests have been conducted on various models, and ONNX downloads are available for different models.

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

ai-edge-torch

AI Edge Torch is a Python library that supports converting PyTorch models into a .tflite format for on-device applications on Android, iOS, and IoT devices. It offers broad CPU coverage with initial GPU and NPU support, closely integrating with PyTorch and providing good coverage of Core ATen operators. The library includes a PyTorch converter for model conversion and a Generative API for authoring mobile-optimized PyTorch Transformer models, enabling easy deployment of Large Language Models (LLMs) on mobile devices.

awesome-RK3588

RK3588 is a flagship 8K SoC chip by Rockchip, integrating Cortex-A76 and Cortex-A55 cores with NEON coprocessor for 8K video codec. This repository curates resources for developing with RK3588, including official resources, RKNN models, projects, development boards, documentation, tools, and sample code.

cl-waffe2

cl-waffe2 is an experimental deep learning framework in Common Lisp, providing fast, systematic, and customizable matrix operations, reverse mode tape-based Automatic Differentiation, and neural network model building and training features accelerated by a JIT Compiler. It offers abstraction layers, extensibility, inlining, graph-level optimization, visualization, debugging, systematic nodes, and symbolic differentiation. Users can easily write extensions and optimize their networks without overheads. The framework is designed to eliminate barriers between users and developers, allowing for easy customization and extension.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

depthai

This repository contains a demo application for DepthAI, a tool that can load different networks, create pipelines, record video, and more. It provides documentation for installation and usage, including running programs through Docker. Users can explore DepthAI features via command line arguments or a clickable QT interface. Supported models include various AI models for tasks like face detection, human pose estimation, and object detection. The tool collects anonymous usage statistics by default, which can be disabled. Users can report issues to the development team for support and troubleshooting.