palico-ai

Quickly Iterate on your LLM Development with an Integrated Workspace

Stars: 302

Palico AI is a tech stack designed for rapid iteration of LLM applications. It allows users to preview changes instantly, improve performance through experiments, debug issues with logs and tracing, deploy applications behind a REST API, and manage applications with a UI control panel. Users have complete flexibility in building their applications with Palico, integrating with various tools and libraries. The tool enables users to swap models, prompts, and logic easily using AppConfig. It also facilitates performance improvement through experiments and provides options for deploying applications to cloud providers or using managed hosting. Contributions to the project are welcomed, with easy ways to get involved by picking issues labeled as 'good first issue'.

README:

Building an LLM application involves continuously trying out different ideas (models, prompts, architectures). Palico provides you with an integrated tech stack that helps you quickly iterate on your LLM development.

- ✅ Build any application in code with complete flexibility (docs)

- ✅ Integrate with any external libraries like LangChain, LlamaIndex, Portkey, and more (docs)

- ✅ Preview changes instantly with hot-reload and Playground UI (docs)

- ✅ Systematically improve performance with Experiments (docs)

- ✅ Debug issues with comprehensive logs and tracing (docs)

- ✅ Deploy your application behind a REST API (docs)

- ✅ Manage your application with a UI control panel (docs)

> [!TIP]
> ⭐️ Star this repo to get release notifications for new features.

    npx palico init <project-name>

Check out our quickstart guide.

https://github.com/palico-ai/palico-main/assets/32821894/54f35583-41c1-48a3-9565-95c484a4909b

With Palico, you have complete control over the implementation details of your LLM application. Building an LLM application with Palico just involves implementing the Agent interface. Here's an example:

    import {
      Agent,
      AgentResponse,
      ConversationContext,
      ConversationRequestContent,
    } from "@palico-ai/app";

    class MyLLMApp implements Agent {
      async chat(
        content: ConversationRequestContent,
        context: ConversationContext
      ): Promise<AgentResponse> {
        // Your LLM application logic
        // 1. Pre-processing
        // 2. Build your prompt
        // 3. Call your LLM model
        // 4. Post-processing
        return {
          // 5. Return a response to caller
        };
      }
    }

Learn more about building your application with Palico (docs).
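The numbered steps in the skeleton above can be filled in roughly as follows. This is a minimal sketch, not Palico's actual API: the `Demo*` types are hypothetical local stand-ins for the types exported by `@palico-ai/app` (whose real fields may differ), and `callModel` is a placeholder for a real provider SDK call.

```typescript
// Hypothetical stand-ins for the types exported by "@palico-ai/app".
// The real field names may differ; check the Palico docs.
interface DemoRequestContent {
  userMessage: string;
}

interface DemoContext {
  conversationId: string;
}

interface DemoAgentResponse {
  message: string;
  conversationId: string;
}

interface DemoAgent {
  chat(
    content: DemoRequestContent,
    context: DemoContext
  ): Promise<DemoAgentResponse>;
}

// Placeholder model call (assumption): swap in a real provider SDK here.
async function callModel(prompt: string): Promise<string> {
  return `echo: ${prompt}`;
}

class SupportAgent implements DemoAgent {
  async chat(
    content: DemoRequestContent,
    context: DemoContext
  ): Promise<DemoAgentResponse> {
    // 1. Pre-processing: trim and collapse whitespace in the user message.
    const question = content.userMessage.trim().replace(/\s+/g, " ");
    // 2. Build the prompt.
    const prompt = `Answer briefly. Question: ${question}`;
    // 3. Call the model.
    const raw = await callModel(prompt);
    // 4. Post-processing: strip surrounding whitespace from the output.
    const message = raw.trim();
    // 5. Return a response to the caller.
    return { message, conversationId: context.conversationId };
  }
}
```

Because each step is ordinary code, any of them can be replaced (a different model, a retrieval step before the prompt, output validation) without changing the method signature.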

Since you own the implementation details, you can use Palico with most other external tools and libraries:

| Tools or Libraries | Supported |
|---|---|
| LangChain | ✅ |
| LlamaIndex | ✅ |
| Portkey | ✅ |
| OpenAI | ✅ |
| Anthropic | ✅ |
| Cohere | ✅ |
| Azure | ✅ |
| AWS Bedrock | ✅ |
| GCP Vertex | ✅ |
| Pinecone | ✅ |
| PG Vector | ✅ |
| Chroma | ✅ |

Learn more from docs.

Make a code change and instantly preview it locally in the Playground UI.

https://github.com/user-attachments/assets/c33ae53d-acf5-4c89-9c41-743ea1cb4722

Working on an LLM application involves testing different variations of models, prompts, and application logic. Palico helps you build an interchangeable application layer using a feature-flag-like feature called AppConfig. Using AppConfig, you can easily swap models, prompts, or any logic in your application layer.
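To illustrate the feature-flag idea, here is a minimal sketch in which a config object selects the model and prompt at call time. The `SketchAppConfig` shape, the model IDs, and `planModelCall` are all hypothetical names chosen for this example; Palico's real AppConfig may be structured differently.

```typescript
// Hypothetical config shape (assumption); not Palico's actual AppConfig type.
interface SketchAppConfig {
  model: "small" | "large";
  promptStyle: "concise" | "detailed";
}

// Each flag value maps to one interchangeable piece of the application.
const PROMPTS: Record<SketchAppConfig["promptStyle"], string> = {
  concise: "Answer in one sentence.",
  detailed: "Answer step by step with examples.",
};

// Placeholder model identifiers (assumption).
const MODEL_IDS: Record<SketchAppConfig["model"], string> = {
  small: "example-small-model",
  large: "example-large-model",
};

interface ModelCall {
  modelId: string;
  prompt: string;
}

// Resolve the config into the concrete model and prompt for one request.
function planModelCall(question: string, config: SketchAppConfig): ModelCall {
  return {
    modelId: MODEL_IDS[config.model],
    prompt: `${PROMPTS[config.promptStyle]}\n\nQuestion: ${question}`,
  };
}
```

With this layout, comparing two variants means passing two different config objects to the same code path rather than editing the application.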

Learn more about AppConfig.

Palico helps you create an iterative loop to systematically improve the performance of your LLM application using experiments.

With experiments, you can:

- Set up a list of test cases that model the behavior of your application

- Make a change to your application

- Run an evaluation to measure how well your application performed against your test-cases

- Iterate
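The loop above can be sketched as a small evaluation harness. This is an illustration under stated assumptions, not Palico's Experiments API: `EvalCase`, `AppUnderTest`, and `runEvaluation` are hypothetical names, and the metric is a simple case-insensitive substring check.

```typescript
// A test case pairs an input with a substring the output should contain.
interface EvalCase {
  input: string;
  expectedSubstring: string;
}

// The application under test, reduced to a single async function.
type AppUnderTest = (input: string) => Promise<string>;

interface EvalReport {
  passed: number;
  total: number;
  passRate: number;
  failures: string[]; // inputs whose output missed the expected substring
}

// Run every test case against the app and summarize the results.
async function runEvaluation(
  app: AppUnderTest,
  cases: EvalCase[]
): Promise<EvalReport> {
  const failures: string[] = [];
  for (const testCase of cases) {
    const output = await app(testCase.input);
    const hit = output
      .toLowerCase()
      .includes(testCase.expectedSubstring.toLowerCase());
    if (!hit) failures.push(testCase.input);
  }
  const total = cases.length;
  const passed = total - failures.length;
  return { passed, total, passRate: total === 0 ? 0 : passed / total, failures };
}
```

Running the same cases before and after a change gives two pass rates to compare, which is the "iterate" step in the list.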

Learn more about experiments.

You can deploy your Palico app to any cloud provider using Docker or use our managed hosting (coming soon). You can then use our ClientSDK or REST API to communicate with your LLM application.
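As a rough illustration of calling a deployed app over REST, the sketch below prepares a request that can be passed to `fetch`. The route (`/agent/<name>/chat`), the bearer-token header, and the body shape are assumptions made for this example and are not taken from Palico's documentation; consult the REST API docs for the real contract.

```typescript
interface ChatCallOptions {
  baseUrl: string; // where the app is deployed
  agentName: string;
  apiKey: string;
  userMessage: string;
}

interface PreparedRequest {
  url: string;
  init: {
    method: string;
    headers: Record<string, string>;
    body: string;
  };
}

// Build the URL and fetch options for one chat call.
// NOTE: the route and payload shape are hypothetical (assumption).
function prepareChatRequest(opts: ChatCallOptions): PreparedRequest {
  const base = opts.baseUrl.replace(/\/+$/, ""); // drop trailing slashes
  return {
    url: `${base}/agent/${encodeURIComponent(opts.agentName)}/chat`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${opts.apiKey}`,
      },
      body: JSON.stringify({ userMessage: opts.userMessage }),
    },
  };
}
```

A caller would then run something like `const { url, init } = prepareChatRequest(options); const res = await fetch(url, init);` and parse the JSON response.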

Learn more from docs.

The easiest way to contribute is to pick an issue with the good first issue tag 💪.

Similar Open Source Tools

Open-Interface

Open Interface is self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the steps. It course-corrects by sending current screenshots to the language models. The tool supports macOS, Linux, and Windows, and requires setting up the OpenAI API key for access to GPT-4V. It can automate tasks like creating meal plans, setting up custom language model backends, and more. Open Interface is currently not efficient in accurate spatial reasoning, tracking itself in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to enhance the tool's capabilities with better models trained on video walkthroughs. The tool is cost-effective, with user requests priced between $0.05 and $0.20, and offers features like interrupting the app and primary display visibility in multi-monitor setups.

EvoAgentX

EvoAgentX is an open-source framework for building, evaluating, and evolving LLM-based agents or agentic workflows in an automated, modular, and goal-driven manner. It enables developers and researchers to move beyond static prompt chaining or manual workflow orchestration by introducing a self-evolving agent ecosystem. The framework includes features such as agent workflow autoconstruction, built-in evaluation, self-evolution engine, plug-and-play compatibility, comprehensive built-in tools, memory module support, and human-in-the-loop interactions.

TTS-WebUI

TTS WebUI is a comprehensive tool for text-to-speech synthesis, audio/music generation, and audio conversion. It offers a user-friendly interface for various AI projects related to voice and audio processing. The tool provides a range of models and extensions for different tasks, along with integrations like Silly Tavern and OpenWebUI. With support for Docker setup and compatibility with Linux and Windows, TTS WebUI aims to facilitate creative and responsible use of AI technologies in a user-friendly manner.

inference

Xorbits Inference (Xinference) is a powerful and versatile library designed to serve language, speech recognition, and multimodal models. With Xorbits Inference, you can effortlessly deploy and serve your own or state-of-the-art built-in models using just a single command. Whether you are a researcher, developer, or data scientist, Xorbits Inference empowers you to unleash the full potential of cutting-edge AI models.

txtai

Txtai is an all-in-one embeddings database for semantic search, LLM orchestration, and language model workflows. It combines vector indexes, graph networks, and relational databases to enable vector search with SQL, topic modeling, retrieval augmented generation, and more. Txtai can stand alone or serve as a knowledge source for large language models (LLMs). Key features include vector search with SQL, object storage, topic modeling, graph analysis, multimodal indexing, embedding creation for various data types, pipelines powered by language models, workflows to connect pipelines, and support for Python, JavaScript, Java, Rust, and Go. Txtai is open-source under the Apache 2.0 license.

beeai-framework

BeeAI Framework is a versatile tool for building production-ready multi-agent systems. It offers flexibility in orchestrating agents, seamless integration with various models and tools, and production-grade controls for scaling. The framework supports Python and TypeScript libraries, enabling users to implement simple to complex multi-agent patterns, connect with AI services, and optimize token usage and resource management.

generative-ai-with-javascript

The 'Generative AI with JavaScript' repository is a comprehensive resource hub for JavaScript developers interested in delving into the world of Generative AI. It provides code samples, tutorials, and resources from a video series, offering best practices and tips to enhance AI skills. The repository covers the basics of generative AI, guides on building AI applications using JavaScript, from local development to deployment on Azure, and scaling AI models. It is a living repository with continuous updates, making it a valuable resource for both beginners and experienced developers looking to explore AI with JavaScript.

anx-reader

Anx Reader is a meticulously designed e-book reader tailored for book enthusiasts. It boasts powerful AI functionalities and supports various e-book formats, enhancing the reading experience. With a modern interface, the tool aims to provide a seamless and enjoyable reading journey. It offers rich format support, seamless sync across devices, smart AI assistance, personalized reading experiences, professional reading analytics, a powerful note system, practical tools, and cross-platform support. The tool is continuously evolving with features like UI adaptation for tablets, page-turning animation, TTS voice reading, reading fonts, translation, and more in the pipeline.

note-gen

Note-gen is a simple tool for generating notes automatically based on user input. It uses natural language processing techniques to analyze text and extract key information to create structured notes. The tool is designed to save time and effort for users who need to summarize large amounts of text or generate notes quickly. With note-gen, users can easily create organized and concise notes for study, research, or any other purpose.

superduperdb

SuperDuperDB is a Python framework for integrating AI models, APIs, and vector search engines directly with your existing databases, including hosting of your own models, streaming inference, and scalable model training/fine-tuning. Build, deploy, and manage any AI application without complex pipelines, extra infrastructure, or specialized vector databases (and without moving your data there) by integrating AI at your data's source:

- Generative AI, LLMs, RAG, vector search

- Standard machine learning use cases (classification, segmentation, regression, forecasting, recommendation, etc.)

- Custom AI use cases involving specialized models

- Even the most complex applications/workflows in which different models work together

SuperDuperDB is **not** a database. Think `db = superduper(db)`: SuperDuperDB transforms your databases into an intelligent platform that allows you to leverage the full AI and Python ecosystem. A single development and deployment environment for all your AI applications in one place, fully scalable and easy to manage.

coreply

Coreply is an open-source Android app that provides texting suggestions while typing, enhancing the typing experience with intelligent, context-aware suggestions. It supports various texting apps and offers real-time AI suggestions, customizable LLM settings, and ensures no data collection. Users can install the app, configure it with an API key, and start receiving suggestions while typing in messaging apps. The tool supports different AI models from providers like OpenAI, Google AI Studio, Openrouter, Groq, and Codestral for chat completion and fill-in-the-middle tasks.

off-grid-mobile

Off Grid is a complete offline AI suite that allows users to perform various tasks such as text generation, image generation, vision AI, voice transcription, and document analysis on their mobile devices without sending any data out. The tool offers high performance on flagship devices and supports a wide range of models for different tasks. Users can easily install the tool on Android by downloading the APK from GitHub Releases or build it from source with Node.js and JDK. The documentation provides detailed information on the system architecture, codebase, design system, visual hierarchy, test flows, and more. Contributions are welcome, and the tool is built with a focus on user privacy and data security, ensuring no cloud, subscription, or data harvesting.

llm4ad

LLM4AD is an open-source Python-based platform leveraging Large Language Models (LLMs) for Automatic Algorithm Design (AD). It provides unified interfaces for methods, tasks, and LLMs, along with features like evaluation acceleration, secure evaluation, logs, GUI support, and more. The platform was originally developed for optimization tasks but is versatile enough to be used in other areas such as machine learning, science discovery, game theory, and engineering design. It offers various search methods and algorithm design tasks across different domains. LLM4AD supports remote LLM API, local HuggingFace LLM deployment, and custom LLM interfaces. The project is licensed under the MIT License and welcomes contributions, collaborations, and issue reports.

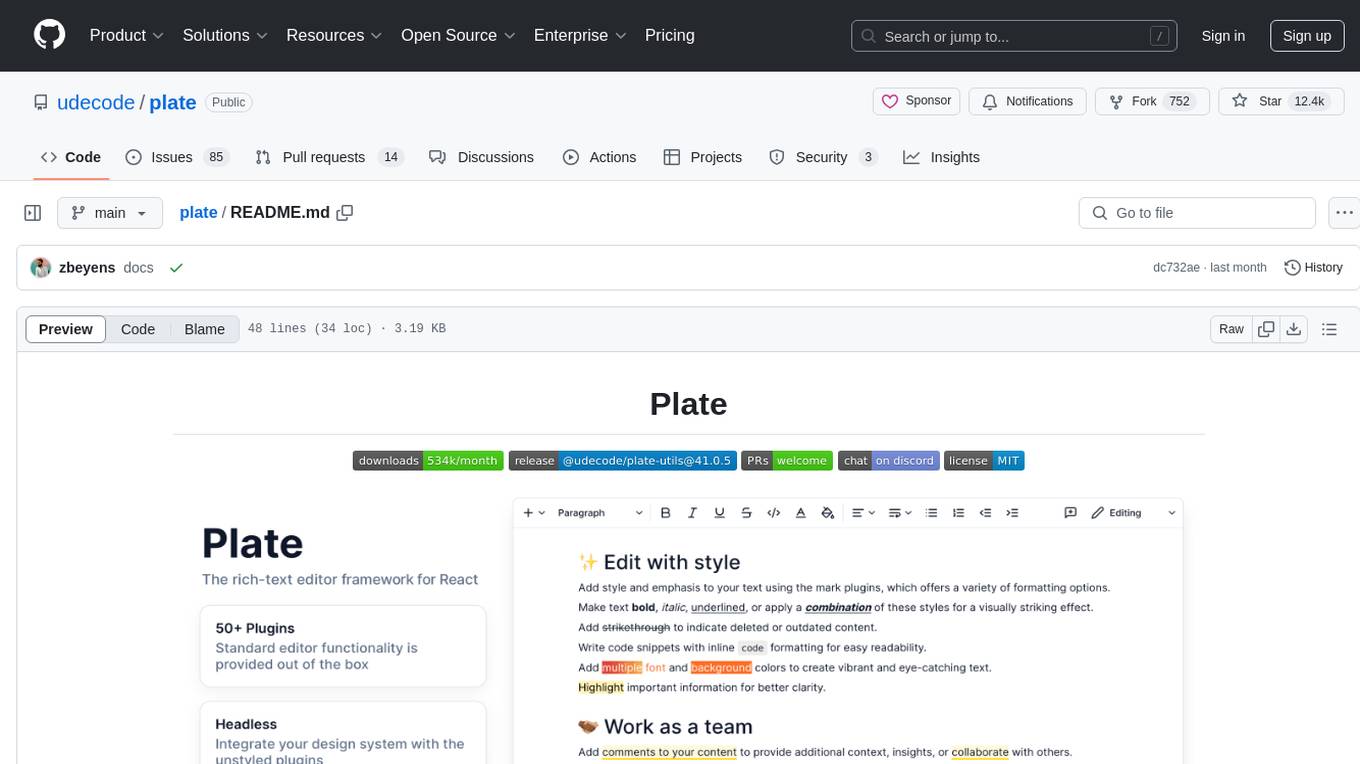

plate

Plate is a rich-text editor framework designed for simplicity and efficiency. It consists of a core plugin system, various plugin packages, primitive hooks and components, and pre-built components. Plate offers templates for different use cases, such as a Notion-like template, a Plate playground template, and a Plate minimal template. Users can refer to the documentation for more information on Plate. Contributors are welcome to join the project by giving stars, making pull requests, or sharing plugins.

PromptClip

PromptClip is a tool that allows developers to create video clips using LLM prompts. Users can upload videos from various sources, prompt the video in natural language, use different LLM models, instantly watch the generated clips, finetune the clips, and add music or image overlays. The tool provides a seamless way to extract specific moments from videos based on user queries, making video editing and content creation more efficient and intuitive.

For similar tasks

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

maxtext

MaxText is a high performance, highly scalable, open-source Large Language Model (LLM) written in pure Python/Jax targeting Google Cloud TPUs and GPUs for training and inference. It aims to be a launching off point for ambitious LLM projects in research and production, supporting TPUs and GPUs, models like Llama2, Mistral, and Gemma. MaxText provides specific instructions for getting started, runtime performance results, comparison to alternatives, and features like stack trace collection, ahead of time compilation for TPUs and GPUs, and automatic upload of logs to Vertex Tensorboard.

claude-coder

Claude Coder is an AI-powered coding companion in the form of a VS Code extension that helps users transform ideas into code, convert designs into applications, debug intuitively, accelerate development with automation, and improve coding skills. It aims to bridge the gap between imagination and implementation, making coding accessible and efficient for developers of all skill levels.

palico-ai

Palico AI is a tech stack designed for rapid iteration of LLM applications. It allows users to preview changes instantly, improve performance through experiments, debug issues with logs and tracing, deploy applications behind a REST API, and manage applications with a UI control panel. Users have complete flexibility in building their applications with Palico, integrating with various tools and libraries. The tool enables users to swap models, prompts, and logic easily using AppConfig. It also facilitates performance improvement through experiments and provides options for deploying applications to cloud providers or using managed hosting. Contributions to the project are welcomed, with easy ways to get involved by picking issues labeled as 'good first issue'.

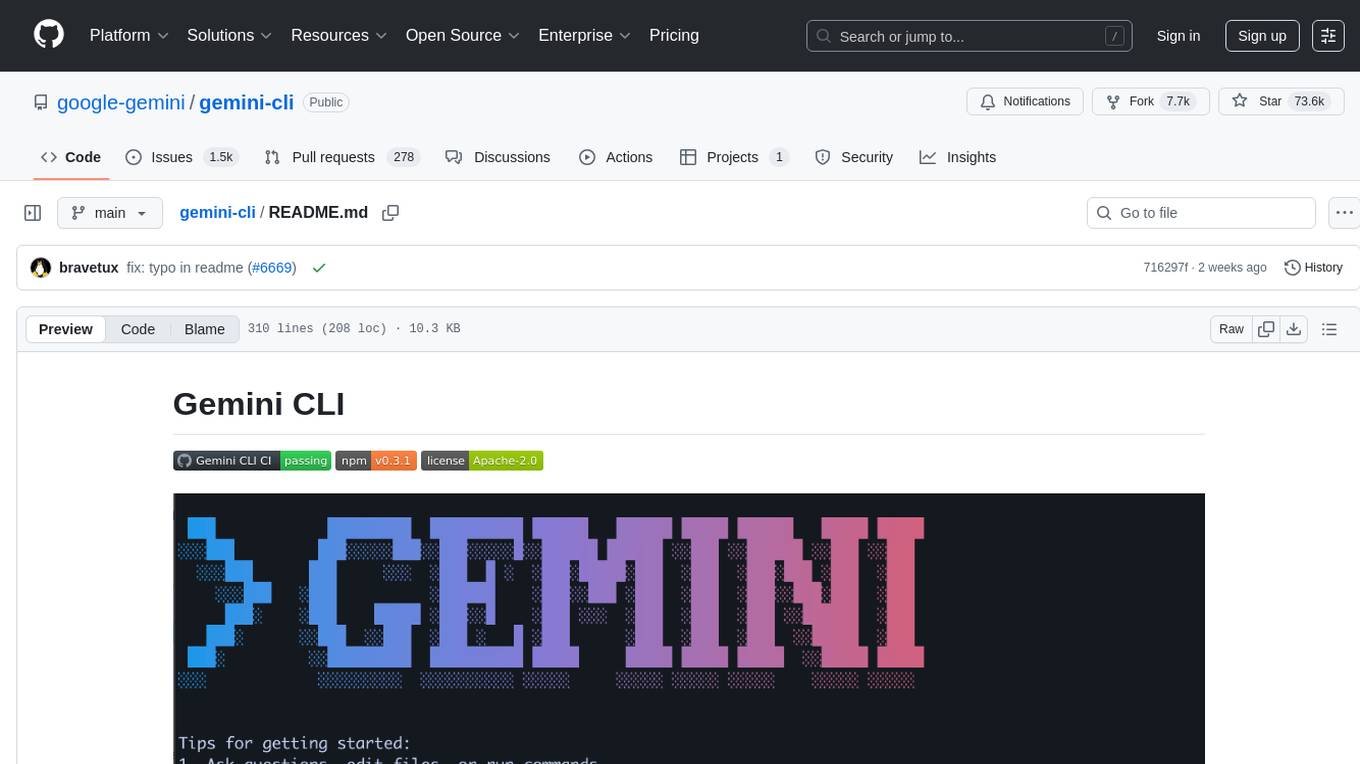

gemini-cli

Gemini CLI is an open-source AI agent that provides lightweight access to Gemini, offering powerful capabilities like code understanding, generation, automation, integration, and advanced features. It is designed for developers who prefer working in the command line and offers extensibility through MCP support. The tool integrates directly into GitHub workflows and offers various authentication options for individual developers, enterprise teams, and production workloads. With features like code querying, editing, app generation, debugging, and GitHub integration, Gemini CLI aims to streamline development workflows and enhance productivity.

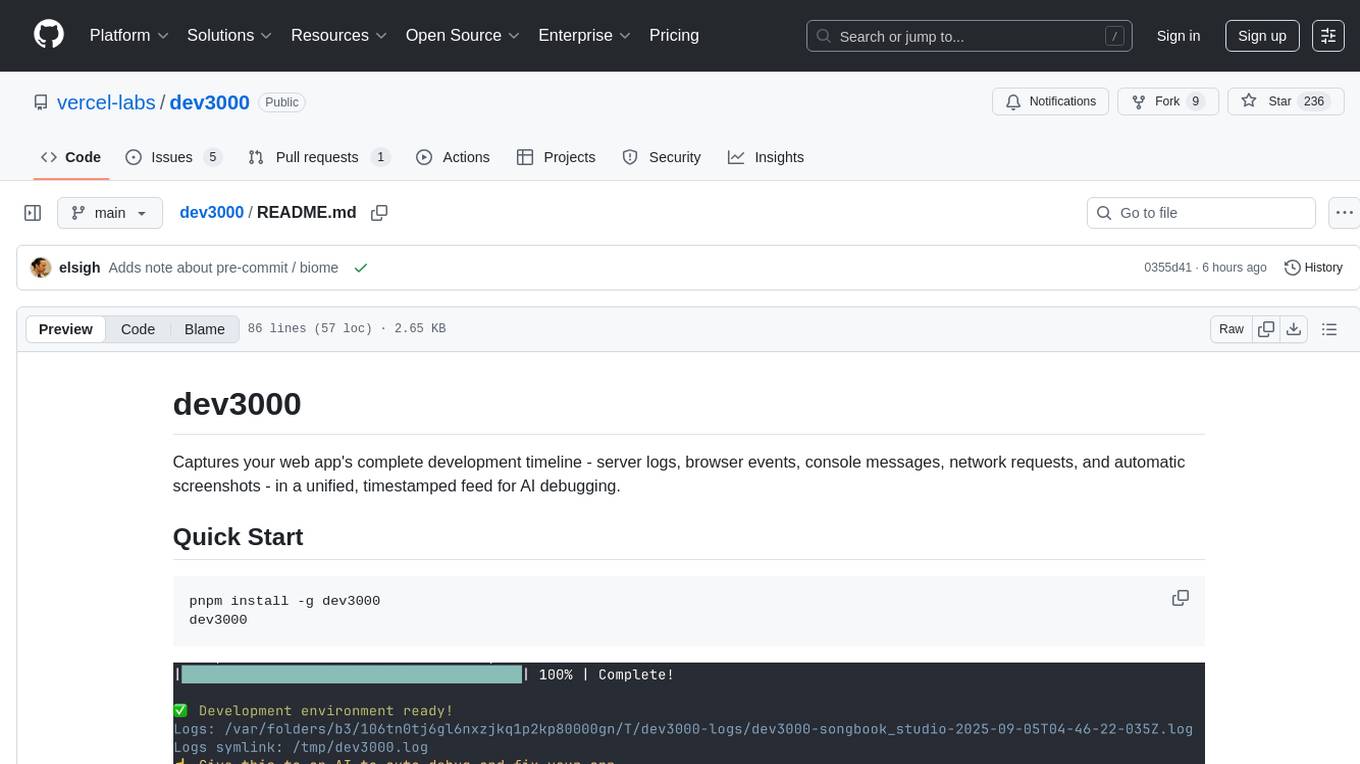

dev3000

dev3000 captures your web app's complete development timeline including server logs, browser events, console messages, network requests, and automatic screenshots in a unified, timestamped feed for AI debugging. It creates a comprehensive log of your development session that AI assistants can easily understand, monitoring your app in a real browser and capturing server logs, console output, browser console messages and errors, network requests and responses, and automatic screenshots on navigation, errors, and key events. Logs are saved with timestamps and rotated to keep the 10 most recent per project, with the current session symlinked for easy access. The tool integrates with AI assistants for instant debugging and provides advanced querying options through the MCP server.

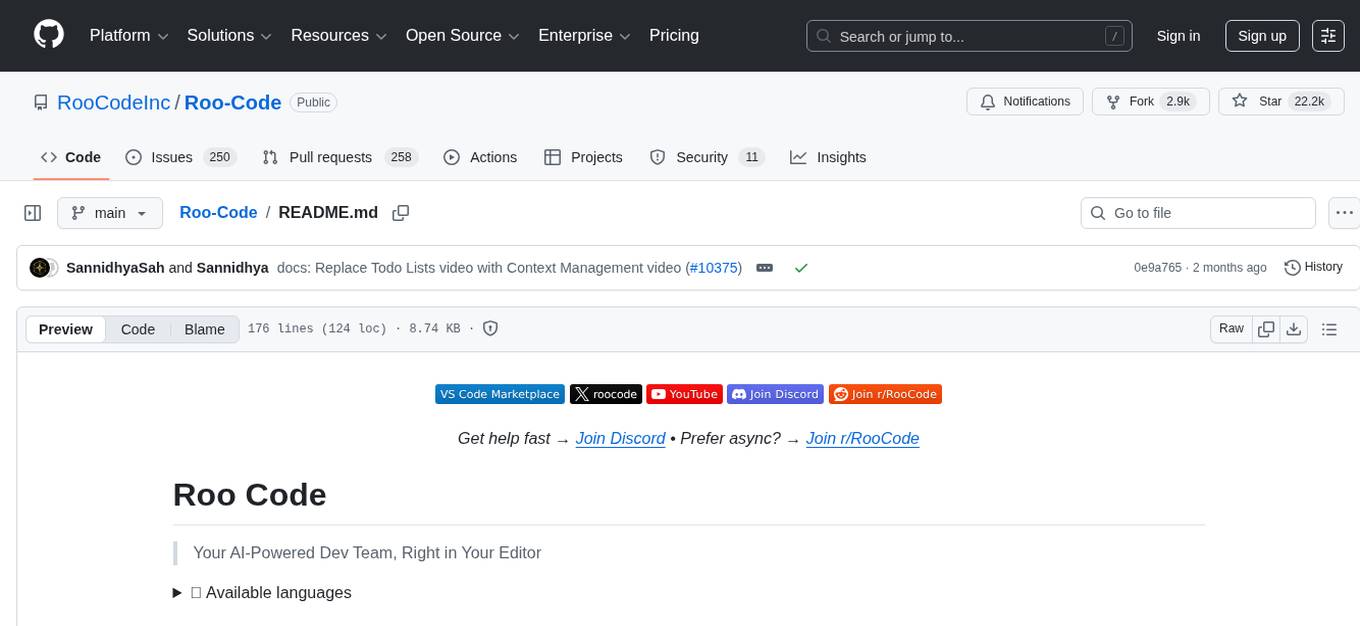

Roo-Code

Roo Code is an AI-powered development tool that integrates with your code editor to help you generate code from natural language descriptions and specifications, refactor and debug existing code, write and update documentation, answer questions about your codebase, automate repetitive tasks, and utilize MCP servers. It offers different modes such as Code, Architect, Ask, Debug, and Custom Modes to adapt to various tasks and workflows. Roo Code provides tutorial and feature videos, documentation, a YouTube channel, a Discord server, a Reddit community, GitHub issues tracking, and a feature request platform. Users can set up and develop Roo Code locally by cloning the repository, installing dependencies, and running the extension in development mode or by automated/manual VSIX installation. The tool uses changesets for versioning and publishing. Please note that Roo Code, Inc. does not make any representations or warranties regarding the tools provided, and users assume all risks associated with their use.

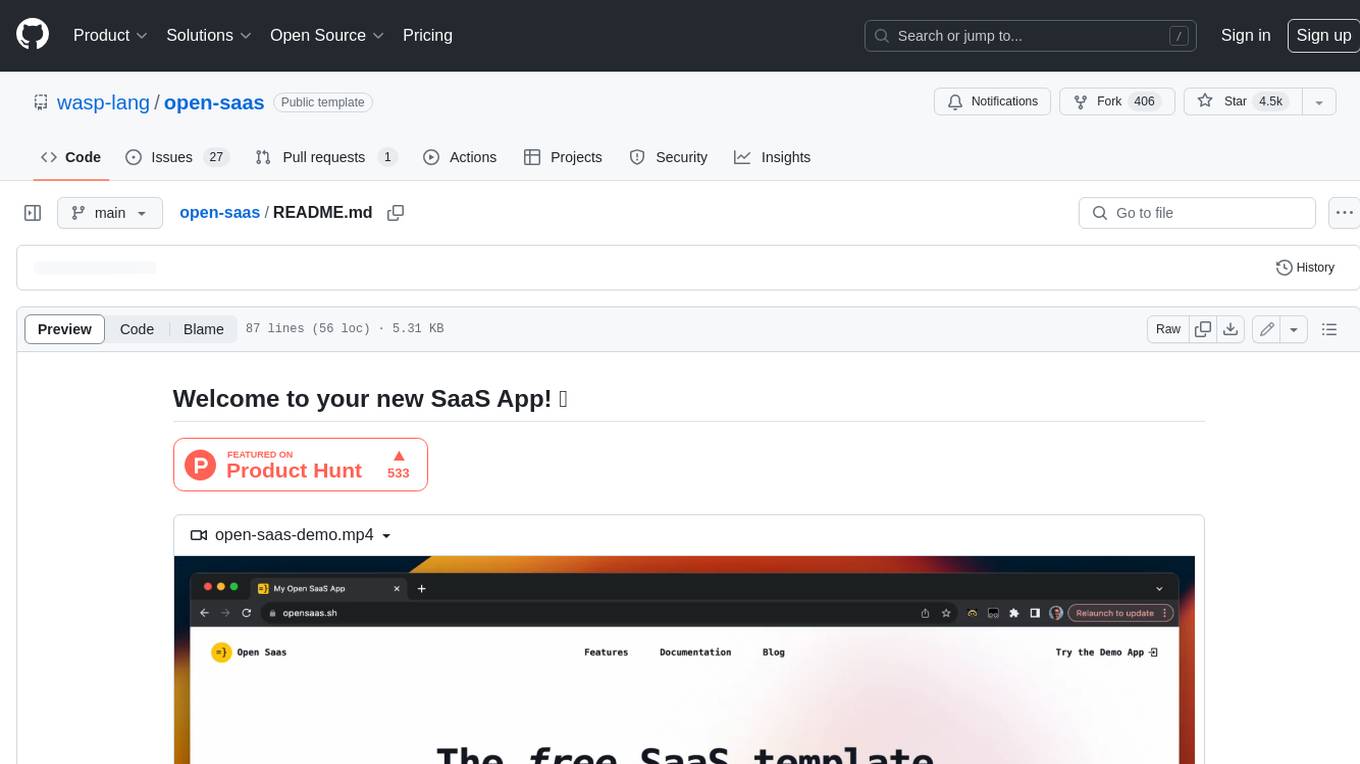

open-saas

Open SaaS is a free and open-source React and Node.js template for building SaaS applications. It comes with a variety of features out of the box, including authentication, payments, analytics, and more. Open SaaS is built on top of the Wasp framework, which provides a number of features to make it easy to build SaaS applications, such as full-stack authentication, end-to-end type safety, jobs, and one-command deploy.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.