OpenAiTx

Auto-translate platform for your GitHub project readme & wiki.

Stars: 190

OpenAiTx is a language auto-translate tool designed for GitHub project readme & wiki. It uses premium-grade LLM for one-time translation and makes the results freely accessible to the open-source community. The tool supports Google/Bing multiple languages SEO search, which regular client translate tools cannot do. It is free and open source forever, allowing project maintainers to save time by submitting once and auto-updating in the future. OpenAiTx provides users with different style options for language display badges or text, making it easy to integrate into readme files. Contributors can participate by forking the project, cloning it, choosing a script in their preferred language, filling in their AI token, running it, and creating a pull request. It is important not to upload personal tokens for security reasons. The tool is specifically designed for GitHub markdown and aims to help users translate their projects efficiently.

README:

OpenAiTx 20 languages auto-translate tool for your GitHub project readme & wiki.

- One-time translation using premium-grade LLM and make the result freely accessible to the open-source community.

- Support Google/Bing multiple languages SEO search, client translate tool can't do it.

- Free & Open Source forever.

- Submit one time and auto-update future, it can save your time if you're a project maintainer.

- Replace URL

GitHubbyOpenAiTx, e.g. https://github.com/OpenAiTx/OpenAiTx → https://openaitx.com/OpenAiTx/OpenAiTx - Submit your project.

- Click and copy the style badges or text you like.

- Update to your readme file.

or

- Access https://openaitx.com

- Submit your project link.

- Click and copy the style badges or text you like.

- Update to your readme file.

English | 简体中文 | 繁體中文 | 日本語 | 한국어 | हिन्दी | ไทย | Français | Deutsch | Español | Italiano | Русский | Português | Nederlands | Polski | العربية | فارسی | Türkçe | Tiếng Việt | Bahasa Indonesia

If you would like to have a contribution in the project, all you need to do is: Fork project → Clone project → Choose a script in your language → Fill in your AI token → Run it → Commit & push & create a PR

Note: Please do not upload your tokens!

- Only support github markdown.

Source Code Project Link by @mikechen

- 10~30 mins/ per project

- 200~400 projects/one console per day.

- Every 3~7 days will update exist projects (depending future computing resources)

- Microsoft MVP team

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for OpenAiTx

Similar Open Source Tools

OpenAiTx

OpenAiTx is a language auto-translate tool designed for GitHub project readme & wiki. It uses premium-grade LLM for one-time translation and makes the results freely accessible to the open-source community. The tool supports Google/Bing multiple languages SEO search, which regular client translate tools cannot do. It is free and open source forever, allowing project maintainers to save time by submitting once and auto-updating in the future. OpenAiTx provides users with different style options for language display badges or text, making it easy to integrate into readme files. Contributors can participate by forking the project, cloning it, choosing a script in their preferred language, filling in their AI token, running it, and creating a pull request. It is important not to upload personal tokens for security reasons. The tool is specifically designed for GitHub markdown and aims to help users translate their projects efficiently.

openkore

OpenKore is a custom client and intelligent automated assistant for Ragnarok Online. It is a free, open source, and cross-platform program (Linux, Windows, and MacOS are supported). To run OpenKore, you need to download and extract it or clone the repository using Git. Configure OpenKore according to the documentation and run openkore.pl to start. The tool provides a FAQ section for troubleshooting, guidelines for reporting issues, and information about botting status on official servers. OpenKore is developed by a global team, and contributions are welcome through pull requests. Various community resources are available for support and communication. Users are advised to comply with the GNU General Public License when using and distributing the software.

generative-ai-with-javascript

The 'Generative AI with JavaScript' repository is a comprehensive resource hub for JavaScript developers interested in delving into the world of Generative AI. It provides code samples, tutorials, and resources from a video series, offering best practices and tips to enhance AI skills. The repository covers the basics of generative AI, guides on building AI applications using JavaScript, from local development to deployment on Azure, and scaling AI models. It is a living repository with continuous updates, making it a valuable resource for both beginners and experienced developers looking to explore AI with JavaScript.

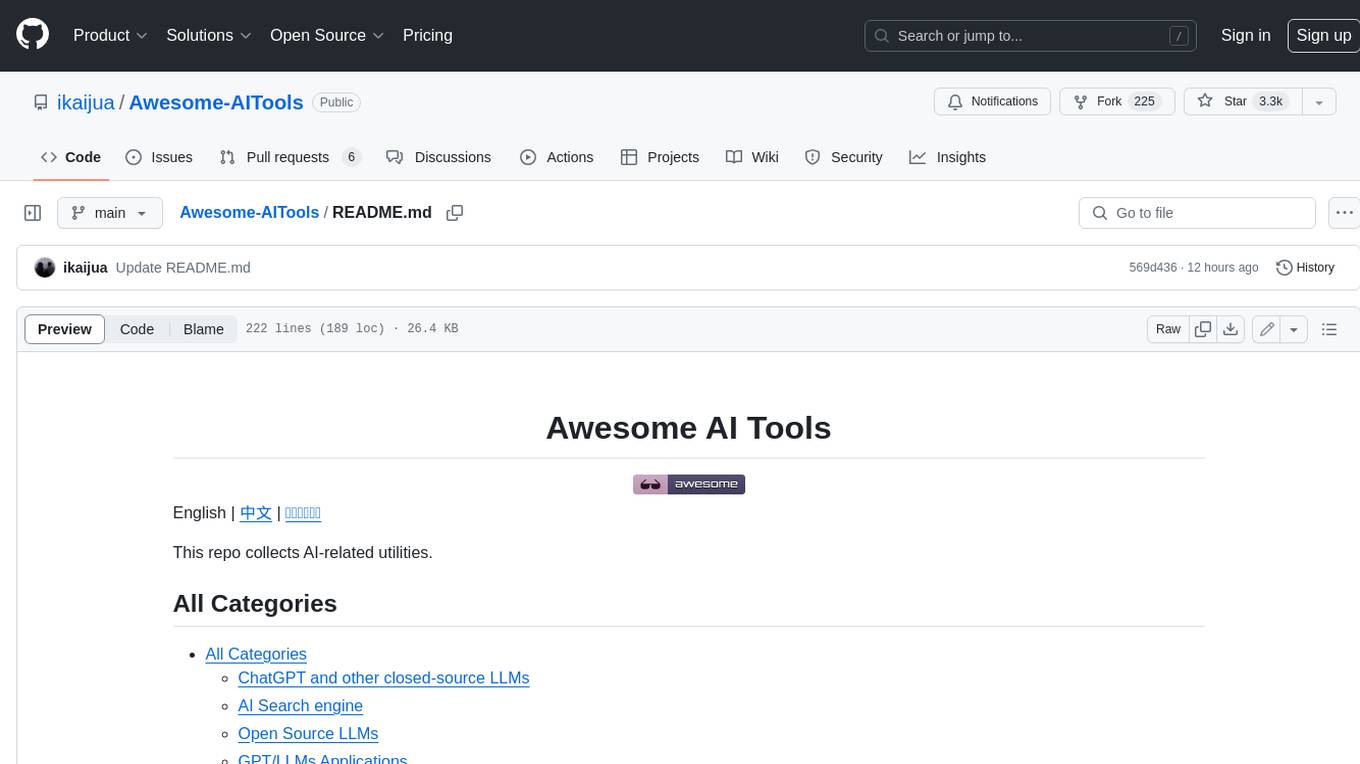

Awesome-AITools

This repo collects AI-related utilities. ## All Categories * All Categories * ChatGPT and other closed-source LLMs * AI Search engine * Open Source LLMs * GPT/LLMs Applications * LLM training platform * Applications that integrate multiple LLMs * AI Agent * Writing * Programming Development * Translation * AI Conversation or AI Voice Conversation * Image Creation * Speech Recognition * Text To Speech * Voice Processing * AI generated music or sound effects * Speech translation * Video Creation * Video Content Summary * OCR(Optical Character Recognition)

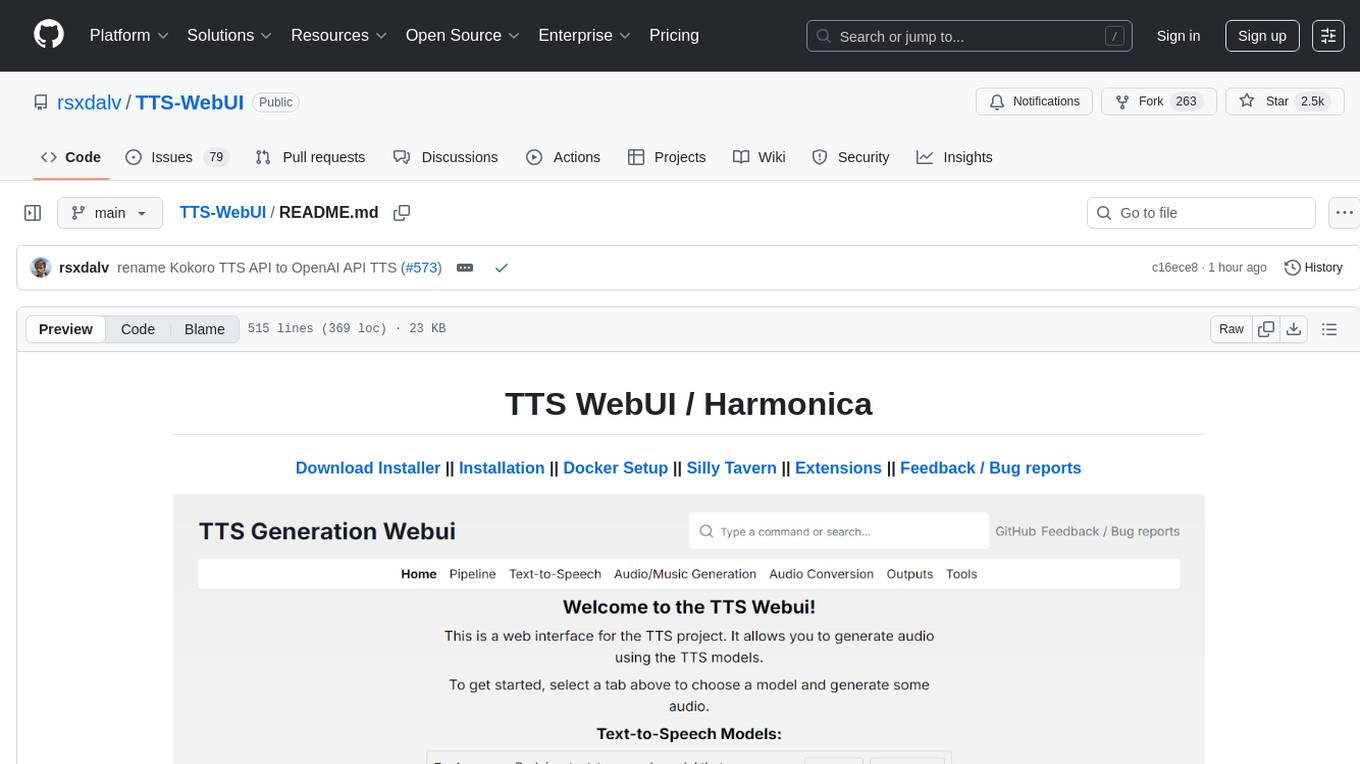

TTS-WebUI

TTS WebUI is a comprehensive tool for text-to-speech synthesis, audio/music generation, and audio conversion. It offers a user-friendly interface for various AI projects related to voice and audio processing. The tool provides a range of models and extensions for different tasks, along with integrations like Silly Tavern and OpenWebUI. With support for Docker setup and compatibility with Linux and Windows, TTS WebUI aims to facilitate creative and responsible use of AI technologies in a user-friendly manner.

note-gen

Note-gen is a simple tool for generating notes automatically based on user input. It uses natural language processing techniques to analyze text and extract key information to create structured notes. The tool is designed to save time and effort for users who need to summarize large amounts of text or generate notes quickly. With note-gen, users can easily create organized and concise notes for study, research, or any other purpose.

Awesome-AI-GPTs

Awesome AI GPTs is an open repository that collects resources and fun ways to use OpenAI GPTs. It includes databases, search tools, open-source projects, articles, attack and defense strategies, installation of custom plugins, knowledge bases, and community interactions related to GPTs. Users can find curated lists, leaked prompts, and various GPT applications in this repository. The project aims to empower users with AI capabilities and foster collaboration in the AI community.

generative-ai-for-beginners

This course has 18 lessons. Each lesson covers its own topic so start wherever you like! Lessons are labeled either "Learn" lessons explaining a Generative AI concept or "Build" lessons that explain a concept and code examples in both **Python** and **TypeScript** when possible. Each lesson also includes a "Keep Learning" section with additional learning tools. **What You Need** * Access to the Azure OpenAI Service **OR** OpenAI API - _Only required to complete coding lessons_ * Basic knowledge of Python or Typescript is helpful - *For absolute beginners check out these Python and TypeScript courses. * A Github account to fork this entire repo to your own GitHub account We have created a **Course Setup** lesson to help you with setting up your development environment. Don't forget to star (🌟) this repo to find it easier later. ## 🧠 Ready to Deploy? If you are looking for more advanced code samples, check out our collection of Generative AI Code Samples in both **Python** and **TypeScript**. ## 🗣️ Meet Other Learners, Get Support Join our official AI Discord server to meet and network with other learners taking this course and get support. ## 🚀 Building a Startup? Sign up for Microsoft for Startups Founders Hub to receive **free OpenAI credits** and up to **$150k towards Azure credits to access OpenAI models through Azure OpenAI Services**. ## 🙏 Want to help? Do you have suggestions or found spelling or code errors? Raise an issue or Create a pull request ## 📂 Each lesson includes: * A short video introduction to the topic * A written lesson located in the README * Python and TypeScript code samples supporting Azure OpenAI and OpenAI API * Links to extra resources to continue your learning ## 🗃️ Lessons | | Lesson Link | Description | Additional Learning | | :-: | :------------------------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------: | ------------------------------------------------------------------------------ | | 00 | Course Setup | **Learn:** How to Setup Your Development Environment | Learn More | | 01 | Introduction to Generative AI and LLMs | **Learn:** Understanding what Generative AI is and how Large Language Models (LLMs) work. | Learn More | | 02 | Exploring and comparing different LLMs | **Learn:** How to select the right model for your use case | Learn More | | 03 | Using Generative AI Responsibly | **Learn:** How to build Generative AI Applications responsibly | Learn More | | 04 | Understanding Prompt Engineering Fundamentals | **Learn:** Hands-on Prompt Engineering Best Practices | Learn More | | 05 | Creating Advanced Prompts | **Learn:** How to apply prompt engineering techniques that improve the outcome of your prompts. | Learn More | | 06 | Building Text Generation Applications | **Build:** A text generation app using Azure OpenAI | Learn More | | 07 | Building Chat Applications | **Build:** Techniques for efficiently building and integrating chat applications. | Learn More | | 08 | Building Search Apps Vector Databases | **Build:** A search application that uses Embeddings to search for data. | Learn More | | 09 | Building Image Generation Applications | **Build:** A image generation application | Learn More | | 10 | Building Low Code AI Applications | **Build:** A Generative AI application using Low Code tools | Learn More | | 11 | Integrating External Applications with Function Calling | **Build:** What is function calling and its use cases for applications | Learn More | | 12 | Designing UX for AI Applications | **Learn:** How to apply UX design principles when developing Generative AI Applications | Learn More | | 13 | Securing Your Generative AI Applications | **Learn:** The threats and risks to AI systems and methods to secure these systems. | Learn More | | 14 | The Generative AI Application Lifecycle | **Learn:** The tools and metrics to manage the LLM Lifecycle and LLMOps | Learn More | | 15 | Retrieval Augmented Generation (RAG) and Vector Databases | **Build:** An application using a RAG Framework to retrieve embeddings from a Vector Databases | Learn More | | 16 | Open Source Models and Hugging Face | **Build:** An application using open source models available on Hugging Face | Learn More | | 17 | AI Agents | **Build:** An application using an AI Agent Framework | Learn More | | 18 | Fine-Tuning LLMs | **Learn:** The what, why and how of fine-tuning LLMs | Learn More |

supabase

Supabase is an open source Firebase alternative that provides a wide range of features including a hosted Postgres database, authentication and authorization, auto-generated APIs, REST and GraphQL support, realtime subscriptions, functions, file storage, AI and vector/embeddings toolkit, and a dashboard. It aims to offer developers a Firebase-like experience using enterprise-grade open source tools.

Topu-ai

TOPU Md is a simple WhatsApp user bot created by Topu Tech. It offers various features such as multi-device support, AI photo enhancement, downloader commands, hidden NSFW commands, logo commands, anime commands, economy menu, various games, and audio/video editor commands. Users can fork the repo, get a session ID by pairing code, and deploy on Heroku. The bot requires Node version 18.x or higher for optimal performance. Contributions to TOPU-MD are welcome, and the tool is safe for use on WhatsApp and Heroku. The tool is licensed under the MIT License and is designed to enhance the WhatsApp experience with diverse features.

chat-your-doc

Chat Your Doc is an experimental project exploring various applications based on LLM technology. It goes beyond being just a chatbot project, focusing on researching LLM applications using tools like LangChain and LlamaIndex. The project delves into UX, computer vision, and offers a range of examples in the 'Lab Apps' section. It includes links to different apps, descriptions, launch commands, and demos, aiming to showcase the versatility and potential of LLM applications.

MiniCPM-V-CookBook

MiniCPM-V & o Cookbook is a comprehensive repository for building multimodal AI applications effortlessly. It provides easy-to-use documentation, supports a wide range of users, and offers versatile deployment scenarios. The repository includes live demonstrations, inference recipes for vision and audio capabilities, fine-tuning recipes, serving recipes, quantization recipes, and a framework support matrix. Users can customize models, deploy them efficiently, and compress models to improve efficiency. The repository also showcases awesome works using MiniCPM-V & o and encourages community contributions.

lemonade

Lemonade is a tool that helps users run local Large Language Models (LLMs) with high performance by configuring state-of-the-art inference engines for their Neural Processing Units (NPUs) and Graphics Processing Units (GPUs). It is used by startups, research teams, and large companies to run LLMs efficiently. Lemonade provides a high-level Python API for direct integration of LLMs into Python applications and a CLI for mixing and matching LLMs with various features like prompting templates, accuracy testing, performance benchmarking, and memory profiling. The tool supports both GGUF and ONNX models and allows importing custom models from Hugging Face using the Model Manager. Lemonade is designed to be easy to use and switch between different configurations at runtime, making it a versatile tool for running LLMs locally.

auto-dev

AutoDev is an AI-powered coding wizard that supports multiple languages, including Java, Kotlin, JavaScript/TypeScript, Rust, Python, Golang, C/C++/OC, and more. It offers a range of features, including auto development mode, copilot mode, chat with AI, customization options, SDLC support, custom AI agent integration, and language features such as language support, extensions, and a DevIns language for AI agent development. AutoDev is designed to assist developers with tasks such as auto code generation, bug detection, code explanation, exception tracing, commit message generation, code review content generation, smart refactoring, Dockerfile generation, CI/CD config file generation, and custom shell/command generation. It also provides a built-in LLM fine-tune model and supports UnitEval for LLM result evaluation and UnitGen for code-LLM fine-tune data generation.

Home-AssistantConfig

Bear Stone Smart Home Documentation is a live, personal Home Assistant configuration shared for browsing and inspiration. It provides real-world automations, scripts, and scenes for users to reverse-engineer patterns and adapt to their own setups. The repository layout includes reusable config under `config/`, with runtime artifacts hidden by `.gitignore`. The platform runs on Docker/compose, and featured examples cover various smart home scenarios like alarm monitoring, lighting control, and presence awareness. The repository also includes a network diagram and lists Docker add-ons & utilities, gear tied to real automations, and affiliate links for supported hardware.

palico-ai

Palico AI is a tech stack designed for rapid iteration of LLM applications. It allows users to preview changes instantly, improve performance through experiments, debug issues with logs and tracing, deploy applications behind a REST API, and manage applications with a UI control panel. Users have complete flexibility in building their applications with Palico, integrating with various tools and libraries. The tool enables users to swap models, prompts, and logic easily using AppConfig. It also facilitates performance improvement through experiments and provides options for deploying applications to cloud providers or using managed hosting. Contributions to the project are welcomed, with easy ways to get involved by picking issues labeled as 'good first issue'.

For similar tasks

OpenAiTx

OpenAiTx is a language auto-translate tool designed for GitHub project readme & wiki. It uses premium-grade LLM for one-time translation and makes the results freely accessible to the open-source community. The tool supports Google/Bing multiple languages SEO search, which regular client translate tools cannot do. It is free and open source forever, allowing project maintainers to save time by submitting once and auto-updating in the future. OpenAiTx provides users with different style options for language display badges or text, making it easy to integrate into readme files. Contributors can participate by forking the project, cloning it, choosing a script in their preferred language, filling in their AI token, running it, and creating a pull request. It is important not to upload personal tokens for security reasons. The tool is specifically designed for GitHub markdown and aims to help users translate their projects efficiently.

For similar jobs

starcoder2-self-align

StarCoder2-Instruct is an open-source pipeline that introduces StarCoder2-15B-Instruct-v0.1, a self-aligned code Large Language Model (LLM) trained with a fully permissive and transparent pipeline. It generates instruction-response pairs to fine-tune StarCoder-15B without human annotations or data from proprietary LLMs. The tool is primarily finetuned for Python code generation tasks that can be verified through execution, with potential biases and limitations. Users can provide response prefixes or one-shot examples to guide the model's output. The model may have limitations with other programming languages and out-of-domain coding tasks.

can-ai-code

Can AI Code is a self-evaluating interview tool for AI coding models. It includes interview questions written by humans and tests taken by AI, inference scripts for common API providers and CUDA-enabled quantization runtimes, a Docker-based sandbox environment for validating untrusted Python and NodeJS code, and the ability to evaluate the impact of prompting techniques and sampling parameters on large language model (LLM) coding performance. Users can also assess LLM coding performance degradation due to quantization. The tool provides test suites for evaluating LLM coding performance, a webapp for exploring results, and comparison scripts for evaluations. It supports multiple interviewers for API and CUDA runtimes, with detailed instructions on running the tool in different environments. The repository structure includes folders for interviews, prompts, parameters, evaluation scripts, comparison scripts, and more.

SWE-agent

SWE-agent is a tool that turns language models (e.g. GPT-4) into software engineering agents capable of fixing bugs and issues in real GitHub repositories. It achieves state-of-the-art performance on the full test set by resolving 12.29% of issues. The tool is built and maintained by researchers from Princeton University. SWE-agent provides a command line tool and a graphical web interface for developers to interact with. It introduces an Agent-Computer Interface (ACI) to facilitate browsing, viewing, editing, and executing code files within repositories. The tool includes features such as a linter for syntax checking, a specialized file viewer, and a full-directory string searching command to enhance the agent's capabilities. SWE-agent aims to improve prompt engineering and ACI design to enhance the performance of language models in software engineering tasks.

vocode-python

Vocode is an open source library that enables users to easily build voice-based LLM (Large Language Model) apps. With Vocode, users can create real-time streaming conversations with LLMs and deploy them for phone calls, Zoom meetings, and more. The library offers abstractions and integrations for transcription services, LLMs, and synthesis services, making it a comprehensive tool for voice-based applications.

langstream

LangStream is a tool for natural language processing tasks, providing a CLI for easy installation and usage. Users can try sample applications like Chat Completions and create their own applications using the developer documentation. It supports running on Kubernetes for production-ready deployment, with support for various Kubernetes distributions and external components like Apache Kafka or Apache Pulsar cluster. Users can deploy LangStream locally using minikube and manage the cluster with mini-langstream. Development requirements include Docker, Java 17, Git, Python 3.11+, and PIP, with the option to test local code changes using mini-langstream.

Devon

Devon is an open-source pair programmer tool designed to facilitate collaborative coding sessions. It provides features such as multi-file editing, codebase exploration, test writing, bug fixing, and architecture exploration. The tool supports Anthropic, OpenAI, and Groq APIs, with plans to add more models in the future. Devon is community-driven, with ongoing development goals including multi-model support, plugin system for tool builders, self-hostable Electron app, and setting SOTA on SWE-bench Lite. Users can contribute to the project by developing core functionality, conducting research on agent performance, providing feedback, and testing the tool.

ipex-llm-tutorial

IPEX-LLM is a low-bit LLM library on Intel XPU (Xeon/Core/Flex/Arc/PVC) that provides tutorials to help users understand and use the library to build LLM applications. The tutorials cover topics such as introduction to IPEX-LLM, environment setup, basic application development, Chinese language support, intermediate and advanced application development, GPU acceleration, and finetuning. Users can learn how to build chat applications, chatbots, speech recognition, and more using IPEX-LLM.

lsp-ai

LSP-AI is an open source language server designed to enhance software engineers' productivity by integrating AI-powered functionality into various text editors. It serves as a backend for completion with large language models and offers features like unified AI capabilities, simplified plugin development, enhanced collaboration, broad compatibility with editors supporting Language Server Protocol, flexible LLM backend support, and commitment to staying updated with the latest advancements in LLM-driven software development. The tool aims to centralize open-source development work, provide a collaborative platform for developers, and offer a future-ready solution for AI-powered assistants in text editors.