LLM-Zero-to-Hundred

This repository contains different LLM chatbot projects (RAG, LLM agents, etc.) and well-known techniques for training and fine tuning LLMs.

Stars: 180

LLM-Zero-to-Hundred is a repository showcasing various applications of LLM chatbots and providing insights into training and fine-tuning Language Models. It includes projects like WebGPT, RAG-GPT, WebRAGQuery, LLM Full Finetuning, RAG-Master LLamaindex vs Langchain, open-source-RAG-GEMMA, and HUMAIN: Advanced Multimodal, Multitask Chatbot. The projects cover features like ChatGPT-like interaction, RAG capabilities, image generation and understanding, DuckDuckGo integration, summarization, text and voice interaction, and memory access. Tutorials include LLM Function Calling and Visualizing Text Vectorization. The projects have a general structure with folders for README, HELPER, .env, configs, data, src, images, and utils.

README:

This repository showcases various applications of LLM chatbots and provides comprehensive insights into established methodologies for training and fine-tuning Language Models.

- [x] WebGPT

- [x] RAG-GPT

- [x] WebRAGQuery

- [x] LLM Full Finetuning

- [x] RAG-Master LLamaindex vs Langchain

- [x] open-source-RAG-GEMMA

- [x] HUMAIN: Advanced Multimodal, Multitask Chatbot

General structure of the projects:

Project-folder

├── README.md <- The top-level README for developers using this project.

├── HELPER.md <- Contains extra information that might be useful to know for executing the project.

├── .env <- dotenv file for local configuration.

├── .here <- Marker for project root.

├── configs <- Holds yml files for project configs

├── data <- Contains the sample data for the project.

├── src <- Contains the source code(s) for executing the project.

| └── utils <- Contains all the necesssary project's modules.

└── images <- Contains all the images used in the user interface and the README file.

NOTE: This is the general structure of the projects, however there might be small changes duo to the specific needs of each project.

Features:

- ChatGPT-like interaction: Chatbot can act as a normal AI assistant.

- RAG (Retrieval Augmented Generation) capabilities: The chatbot can perform RAG in 3 different ways

- With preprocessed documents

- Documents that the user uploads while using the chatbot

- Any webiste that the user requests.

- Image generation: Chatbot utilizes a stable diffusion model to generate images.

- Image understanding: Chatbot Understands the content of images and can answer user's question based on the content of the image using the LLava model.

- DuckDuckGo integration: Access the DuckDuckGo search engine to provide answers based on search results when needed.

- Summarization: Summarize website content or documents upon user request.

- Text and voice interaction: Interact with chatbot through both text and voice inputs.

- Memory: The GPT models in the chatbot also have access to the memory (user's previous queries during the current session).

NOTE: This chatbot was built on top of RAG-GPT and WebRAGQuery projects.

YouTube video:: To be added

In this project, I demonstrate how an open source LLM can be deployed on-prem. For that, I took RAG-GPT project and convert it into a fully open source RAG chatbot. The open source chatbot is designed using Google Gemma7B LLm and BAAI/bge-large-en as the embedding model.

YouTube video: Link

In this project, I compare the performance of `5` famous RAG techniques which have been proposed by Langchain and Llama-index. The test is being done on `40` questions on `5` different documents. Moreover, the projects provides `2` separate RAG chatbots that offer `8` RAG techniques from these two frameworks.

YouTube video: Link

In this project, we use a fictional company called Cubetriangle and design the pipeline to process its raw data, finetune `3` large language models (LLMs) on it, and design a chatbot using the best model.

YouTube video: Link

Libraries: huggingface - OpenAI - chainlit

WebGPT is a powerful tool enabling users to pose questions that require internet searches. Leveraging GPT models:

- It identifies and executes the most relevant given Python functions in response to user queries.

- The second GPT model generates responses by combining user queries with content retrieved from the web search engine.

- The user-friendly interface is built using Streamlit.

- The web search supports diverse searches such as text, news, PDFs, images, videos, maps, and instant responses.

- Overcoming knowledge-cutoff limitations, the chatbot delivers answers based on the latest internet content.

YouTube video: Link

Libraries: OpenAI (It uses GPT model's function calling capability) - duckduckgo-search - streamlit

RAG-GPT is a chatbot that enables you to chat with your documents (PDFs and Doc). The chatbot offers versatile usage through three distinct methods:

- Offline Documents: Engage with documents that you've pre-processed and vectorized. These documents can be seamlessly integrated into your chat sessions.

- Real-time Uploads: Easily upload documents during your chat sessions, allowing the chatbot to process and respond to the content on-the-fly.

- Summarization Requests: Request the chatbot to provide a comprehensive summary of an entire PDF or document in a single interaction, streamlining information retrieval.

Libraries: OpenAI - Langchain - ChromaDB - Gradio

YouTube video: Link

WebRAGQuery: (Combining WebGPT and RAG-GPT)WebRAGQuery is a chatbot that is built on the foundations of WebGPT and RAG-GPT, this project gives the users the ability to utilize the LLM's pretrained knowledge, Duckduckgo web search engine, and chatting with websites.

Key Features:

- Intelligent Decision-Making: The GPT model intelligently decides whether to answer user queries based on its internal knowledge base or execute relevant Python functions and access the internet.

- Web-Integrated Responses: The second GPT model seamlessly combines user queries with content retrieved from web searches, providing rich and context-aware responses. WebRAGQuery supports a variety of searches, including text, news, PDFs, images, videos, maps, and instant responses.

- Website-Specific Queries: When users inquire about a specific website, the model dynamically calls a function to load, vectorize, and create a vectordb from the site's content and therefore, gives the user the ability to ask questions about the content of the website. Users can query the content of the vectordb by starting their questions with ** and exit the RAG conversation by omitting ** from the query. ** can trigger the third GPT model for RAG Q&A.

- Website summarization: On demand, this chatbot is able to go through a website and provide the user with a summary of the content.

- Memory: WebRAGQuery boasts a memory feature that allows it to retain information about user interactions. This enables a more coherent and context-aware conversation by keeping track of previous questions and answers.

- Chainlit Interface: The user-friendly interface is built using Chainlit.

- Overcoming Knowledge-Cutoff Limitations: This chatbot transcends knowledge-cutoff limitations, providing answers based on the latest internet content and even allowing users to ask questions about webpage content.

YouTube video: Link

Libraries: OpenAI - Langchain - ChromaDB - chainlit

## Tutorial description:

This project showcases the capacity of GPT models to produce executable functions in JSON format. It illustrates this capability through a practical example involving the utilization of Python with the GPT model.

Libraries: OpenAI

YouTube video: Link

This project provides a comprehensive visualization of text vectorization and demonstrates the power of vector search. It further explores the vectorization on both OpenAi `text-embedding-ada-002` and the open source `BAAI/bge-large-zh-v1.5` model.

Libraries: OpenAI - HuggingFace

YouTube video: Link

Slides: Link

To run the projects, you will need to install the required libraries. Follow the steps below to get started:

- Clone the repository and navigate to the project directory.

git clone https://github.com/Farzad-R/LLM-Zero-to-Hundred.git

cd LLM-Zero-to-Hundred

- Create a new virtual environment using a tool like virtualenv or conda, and activate the environment:

conda create --name projectenv python=3.11

conda activate projectenv

- Change directory to your desired project and install the required libraries using the following commands: Ex:

cd WebRAGQuery

pip install -r requirements.txt

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLM-Zero-to-Hundred

Similar Open Source Tools

LLM-Zero-to-Hundred

LLM-Zero-to-Hundred is a repository showcasing various applications of LLM chatbots and providing insights into training and fine-tuning Language Models. It includes projects like WebGPT, RAG-GPT, WebRAGQuery, LLM Full Finetuning, RAG-Master LLamaindex vs Langchain, open-source-RAG-GEMMA, and HUMAIN: Advanced Multimodal, Multitask Chatbot. The projects cover features like ChatGPT-like interaction, RAG capabilities, image generation and understanding, DuckDuckGo integration, summarization, text and voice interaction, and memory access. Tutorials include LLM Function Calling and Visualizing Text Vectorization. The projects have a general structure with folders for README, HELPER, .env, configs, data, src, images, and utils.

colors_ai

Colors AI is a cross-platform color scheme generator that uses deep learning from public API providers. It is available for all mainstream operating systems, including mobile. Features: - Choose from open APIs, with the ability to set up custom settings - Export section with many export formats to save or clipboard copy - URL providers to other static color generators - Localized to several languages - Dark and light theme - Material Design 3 - Data encryption - Accessibility - And much more

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub Bot for requesting changes and operates entirely in GitHub Actions. In progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

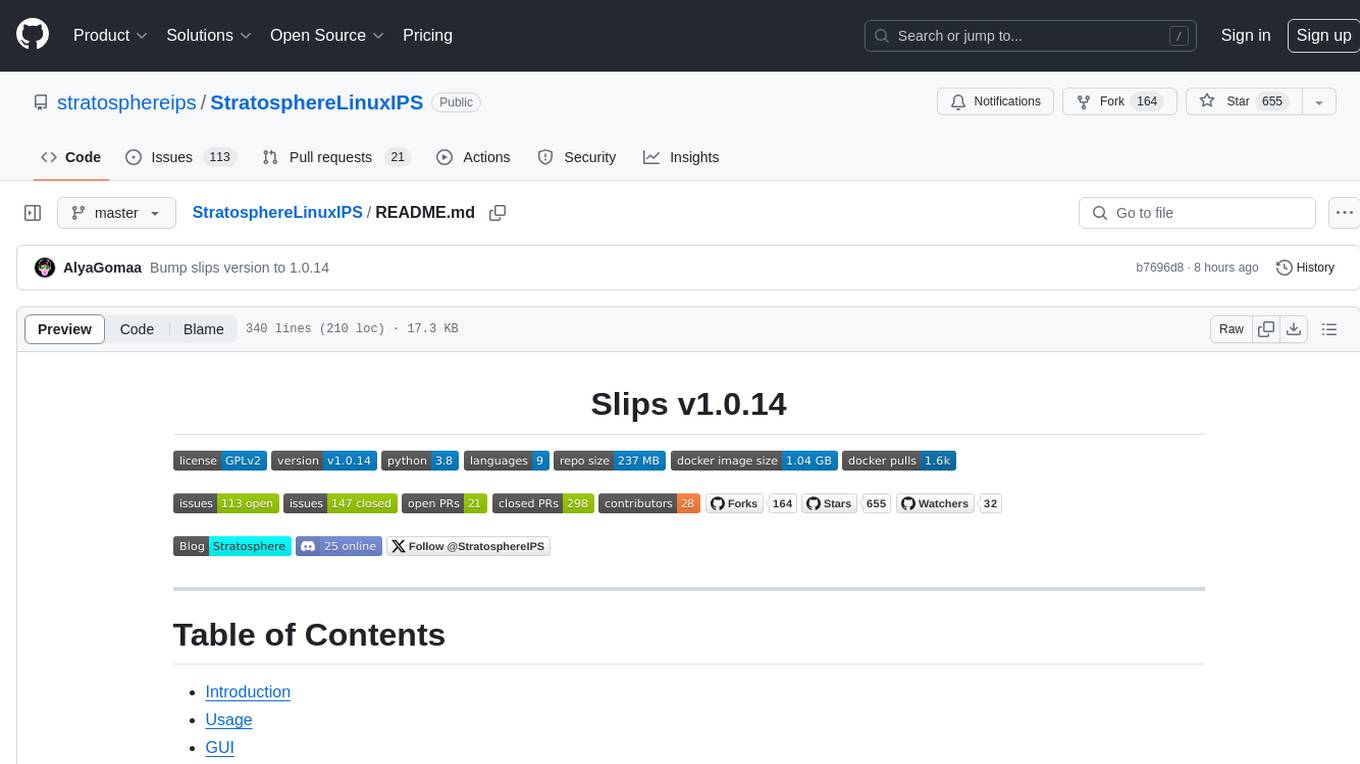

StratosphereLinuxIPS

Slips is a powerful endpoint behavioral intrusion prevention and detection system that uses machine learning to detect malicious behaviors in network traffic. It can work with network traffic in real-time, PCAP files, and network flows from tools like Suricata, Zeek/Bro, and Argus. Slips threat detection is based on machine learning models, threat intelligence feeds, and expert heuristics. It gathers evidence of malicious behavior and triggers alerts when enough evidence is accumulated. The tool is Python-based and supported on Linux and MacOS, with blocking features only on Linux. Slips relies on Zeek network analysis framework and Redis for interprocess communication. It offers a graphical user interface for easy monitoring and analysis.

workflows-py

LlamaIndex Workflows is a framework for orchestrating and chaining together complex systems of steps and events. It shines in orchestrating complex, multi-step processes involving AI models, APIs, and decision-making. The async-first, event-driven architecture allows building workflows that can route between different capabilities, implement parallel processing patterns, loop over complex sequences, and maintain state across multiple steps. Key features include async-first design, event-driven structure, state management, and observability through tools like Arize Phoenix and OpenTelemetry.

neuro-san-studio

Neuro SAN Studio is an open-source library for building agent networks across various industries. It simplifies the development of collaborative AI systems by enabling users to create sophisticated multi-agent applications using declarative configuration files. The tool offers features like data-driven configuration, adaptive communication protocols, safe data handling, dynamic agent network designer, flexible tool integration, robust traceability, and cloud-agnostic deployment. It has been used in various use-cases such as automated generation of multi-agent configurations, airline policy assistance, banking operations, market analysis in consumer packaged goods, insurance claims processing, intranet knowledge management, retail operations, telco network support, therapy vignette supervision, and more.

gptme

Personal AI assistant/agent in your terminal, with tools for using the terminal, running code, editing files, browsing the web, using vision, and more. A great coding agent that is general-purpose to assist in all kinds of knowledge work, from a simple but powerful CLI. An unconstrained local alternative to ChatGPT with 'Code Interpreter', Cursor Agent, etc. Not limited by lack of software, internet access, timeouts, or privacy concerns if using local models.

Open-LLM-VTuber

Open-LLM-VTuber is a voice-interactive AI companion supporting real-time voice conversations and featuring a Live2D avatar. It can run offline on Windows, macOS, and Linux, offering web and desktop client modes. Users can customize appearance and persona, with rich LLM inference, text-to-speech, and speech recognition support. The project is highly customizable, extensible, and actively developed with exciting features planned. It provides privacy with offline mode, persistent chat logs, and various interaction features like voice interruption, touch feedback, Live2D expressions, pet mode, and more.

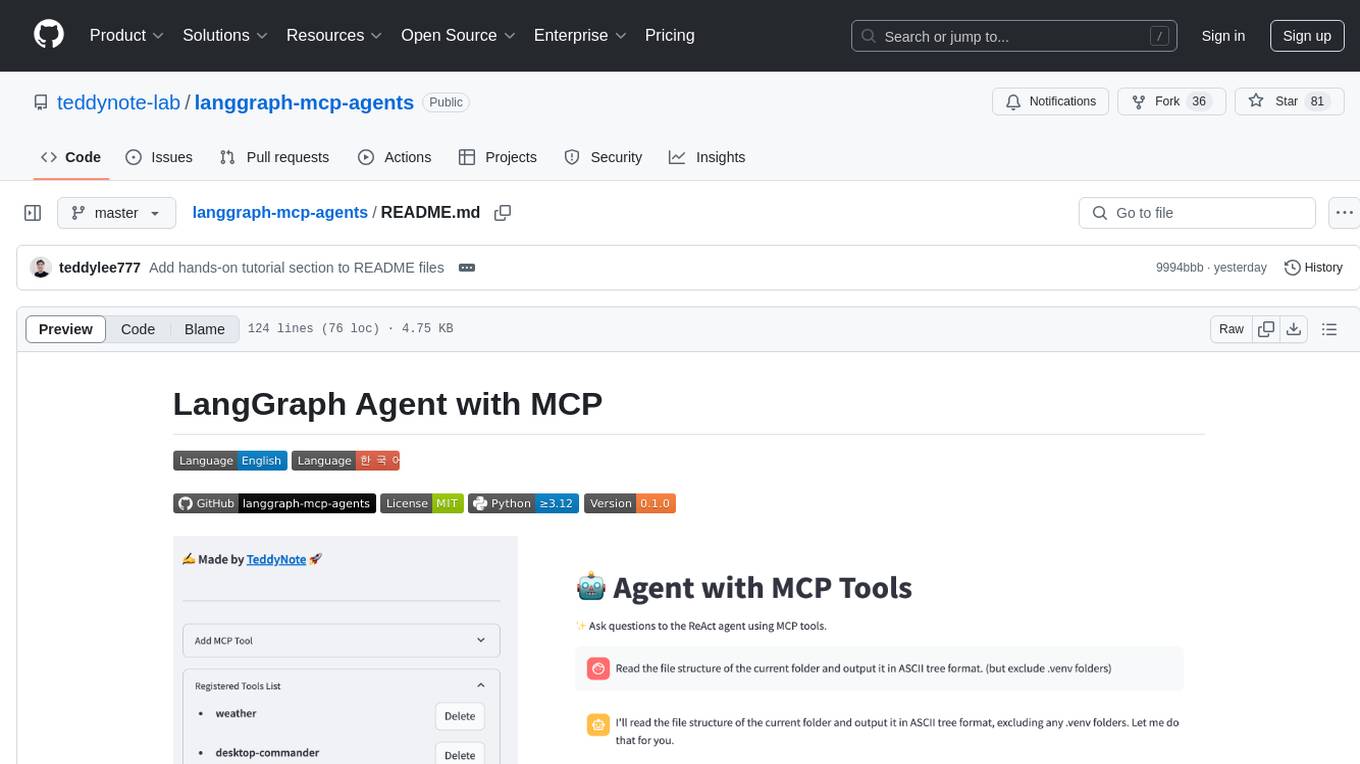

langgraph-mcp-agents

LangGraph Agent with MCP is a toolkit provided by LangChain AI that enables AI agents to interact with external tools and data sources through the Model Context Protocol (MCP). It offers a user-friendly interface for deploying ReAct agents to access various data sources and APIs through MCP tools. The toolkit includes features such as a Streamlit Interface for interaction, Tool Management for adding and configuring MCP tools dynamically, Streaming Responses in real-time, and Conversation History tracking.

kalavai-client

Kalavai is an open-source platform that transforms everyday devices into an AI supercomputer by aggregating resources from multiple machines. It facilitates matchmaking of resources for large AI projects, making AI hardware accessible and affordable. Users can create local and public pools, connect with the community's resources, and share computing power. The platform aims to be a management layer for research groups and organizations, enabling users to unlock the power of existing hardware without needing a devops team. Kalavai CLI tool helps manage both versions of the platform.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

lobe-chat

Lobe Chat is an open-source, modern-design ChatGPT/LLMs UI/Framework. Supports speech-synthesis, multi-modal, and extensible ([function call][docs-functionc-call]) plugin system. One-click **FREE** deployment of your private OpenAI ChatGPT/Claude/Gemini/Groq/Ollama chat application.

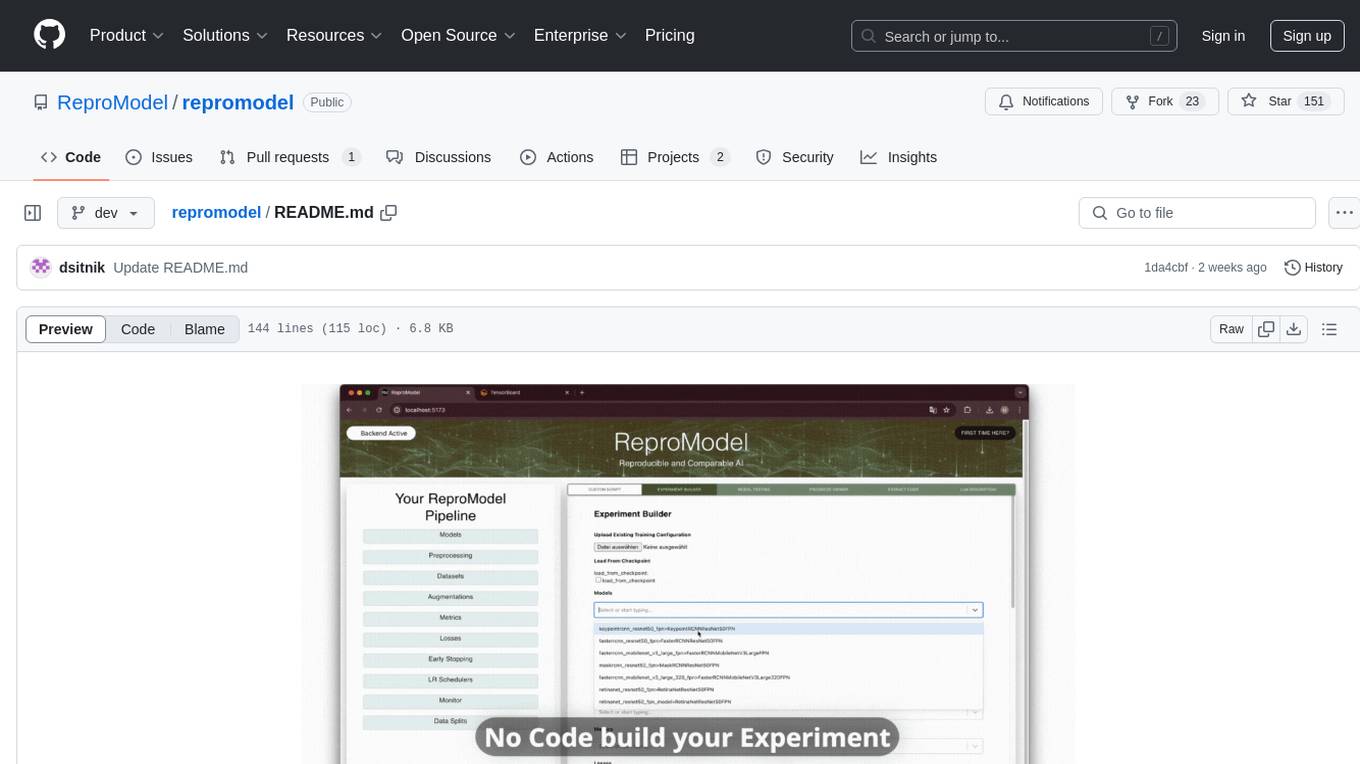

repromodel

ReproModel is an open-source toolbox designed to boost AI research efficiency by enabling researchers to reproduce, compare, train, and test AI models faster. It provides standardized models, dataloaders, and processing procedures, allowing researchers to focus on new datasets and model development. With a no-code solution, users can access benchmark and SOTA models and datasets, utilize training visualizations, extract code for publication, and leverage an LLM-powered automated methodology description writer. The toolbox helps researchers modularize development, compare pipeline performance reproducibly, and reduce time for model development, computation, and writing. Future versions aim to facilitate building upon state-of-the-art research by loading previously published study IDs with verified code, experiments, and results stored in the system.

aistore

AIStore is a lightweight object storage system designed for AI applications. It is highly scalable, reliable, and easy to use. AIStore can be deployed on any commodity hardware, and it can be used to store and manage large datasets for deep learning and other AI applications.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

For similar tasks

LLM-Zero-to-Hundred

LLM-Zero-to-Hundred is a repository showcasing various applications of LLM chatbots and providing insights into training and fine-tuning Language Models. It includes projects like WebGPT, RAG-GPT, WebRAGQuery, LLM Full Finetuning, RAG-Master LLamaindex vs Langchain, open-source-RAG-GEMMA, and HUMAIN: Advanced Multimodal, Multitask Chatbot. The projects cover features like ChatGPT-like interaction, RAG capabilities, image generation and understanding, DuckDuckGo integration, summarization, text and voice interaction, and memory access. Tutorials include LLM Function Calling and Visualizing Text Vectorization. The projects have a general structure with folders for README, HELPER, .env, configs, data, src, images, and utils.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

civitai

Civitai is a platform where people can share their stable diffusion models (textual inversions, hypernetworks, aesthetic gradients, VAEs, and any other crazy stuff people do to customize their AI generations), collaborate with others to improve them, and learn from each other's work. The platform allows users to create an account, upload their models, and browse models that have been shared by others. Users can also leave comments and feedback on each other's models to facilitate collaboration and knowledge sharing.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.