llm-analysis

Latency and Memory Analysis of Transformer Models for Training and Inference

Stars: 300

llm-analysis is a tool designed for Latency and Memory Analysis of Transformer Models for Training and Inference. It automates the calculation of training or inference latency and memory usage for Large Language Models (LLMs) or Transformers based on specified model, GPU, data type, and parallelism configurations. The tool helps users to experiment with different setups theoretically, understand system performance, and optimize training/inference scenarios. It supports various parallelism schemes, communication methods, activation recomputation options, data types, and fine-tuning strategies. Users can integrate llm-analysis in their code using the `LLMAnalysis` class or use the provided entry point functions for command line interface. The tool provides lower-bound estimations of memory usage and latency, and aims to assist in achieving feasible and optimal setups for training or inference.

README:


Many formulas or equations are floating around in papers, blogs, etc., about how to calculate training or inference latency and memory for Large Language Models (LLMs) or Transformers. Rather than doing math on papers or typing in Excel sheets, let's automate the boring stuff with llm-analysis ⚙️!

Given the specified model, GPU, data type, and parallelism configurations, llm-analysis estimates the latency and memory usage of LLMs for training or inference. With llm-analysis, one can easily try out different training/inference setups theoretically, and better understand the system performance for different scenarios.

llm-analysis helps answer questions such as:

- what batch size, data type, and parallelism scheme to use to get a feasible (not getting OOM) and optimal (maximizing throughput with a latency constraint) setup for training or inference
- the time it takes with the given setup to do training or inference, and the cost (GPU-hours)
- how the latency/memory changes if using a different model, GPU type, number of GPUs, data type for weights and activations, or parallelism configuration (suggesting the performance benefit of modeling change, hardware improvement, quantization, parallelism, etc.)
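As a rough illustration of the time and cost question, the sketch below uses the common approximation that training takes about 6 FLOPs per parameter per token. It is not llm-analysis code; the function name `training_gpu_hours`, the 312 TFLOPS peak figure, and the 0.5 efficiency are assumptions chosen for the example.

```python
def training_gpu_hours(num_params, num_tokens, num_gpus,
                       peak_flops_per_gpu, flops_efficiency=1.0):
    """Return (wall-clock hours, GPU-hours) for one training run.

    Assumes total training FLOPs ~= 6 * parameters * tokens and that all
    GPUs sustain peak_flops_per_gpu * flops_efficiency (hypothetical helper).
    """
    total_flops = 6 * num_params * num_tokens
    achieved_flops_per_s = num_gpus * peak_flops_per_gpu * flops_efficiency
    hours = total_flops / achieved_flops_per_s / 3600
    return hours, hours * num_gpus


# Example: a 20B-parameter model, 300B tokens, 32 GPUs.
# 312e12 FLOPS and 0.5 efficiency are illustrative assumptions.
hours, gpu_hours = training_gpu_hours(
    num_params=20e9, num_tokens=300e9, num_gpus=32,
    peak_flops_per_gpu=312e12, flops_efficiency=0.5)
print(f"{hours:.0f} hours wall-clock, {gpu_hours:.0f} GPU-hours")
```

llm-analysis models far more detail (memory, parallelism, recomputation), so treat this only as a back-of-the-envelope check.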

Check the example use cases. With llm-analysis, you can do such analysis in minutes 🚀!

- To install llm-analysis from PyPI: `pip install llm-analysis`
- To install the latest development build: `pip install --upgrade git+https://github.com/cli99/llm-analysis.git@main`
- To install from source, clone the repo and run `pip install .` or `poetry install` (install poetry with `pip install poetry`).

To integrate llm-analysis in your code, use the LLMAnalysis class. Refer to doc LLMAnalysis for details.

LLMAnalysis is constructed with flops and memory efficiency numbers and the following configuration classes:

- `ModelConfig` covers model information, i.e. max sequence length, number of transformer layers, number of attention heads, hidden dimension, vocabulary size
- `GPUConfig` covers GPU compute and memory specifications
- `DtypeConfig` covers the number of bits used for the model weight, activation, and embedding
- `ParallelismConfig` covers Tensor Parallelism (tp), Pipeline Parallelism (pp), Sequence Parallelism (sp), Expert Parallelism (ep), and Data Parallelism (dp).

Then LLMAnalysis can be queried with different arguments through the training and inference methods.

llm-analysis provides two entry functions, `train` and `infer`, for ease of use through the command line interface. Run `python -m llm_analysis.analysis train --help` or `python -m llm_analysis.analysis infer --help` to check the options or read the linked doc. Refer to the examples to see how they are used.

train and infer use the pre-defined name-to-configuration mappings (model_configs, gpu_configs, dtype_configs) and other user-input arguments to construct the LLMAnalysis and do the query.

The pre-defined mappings are populated at the runtime from the model, GPU, and data type configuration json files under model_configs, gpu_configs, and dtype_configs. To add a new model, GPU or data type to the mapping for query, just add a json description file to the corresponding folder.

llm-analysis also supports retrieving `ModelConfig` from a model config json file path or from Hugging Face with the model name.

- From a local model config json file, e.g., `python -m llm_analysis.analysis train --model_name=local_example_model.json`. Check the model configurations under the model_configs folder.
- From Hugging Face, e.g., use `EleutherAI/gpt-neox-20b` as `model_name` when calling the `train` or `infer` entry functions: `python -m llm_analysis.analysis train --model_name=EleutherAI/gpt-neox-20b --total_num_gpus 32 --ds_zero 3`. With this method, llm-analysis relies on `transformers` to find the corresponding model configuration on huggingface.co/models, meaning information on newer models only exists after a certain version of the transformers library. To access the latest models through their names, update the installed `transformers` package.

A list of handy commands is provided to query against the pre-defined mappings as well as Hugging Face, or to dump configurations. Run python -m llm_analysis.config --help for details.

Some examples:

`python -m llm_analysis.config get_model_config_by_name EleutherAI/gpt-neox-20b` gets the ModelConfig from the populated mapping by name; if not found, llm-analysis tries to get it from Hugging Face.

Note that LLaMA models need at least transformers-4.28.1 to be retrieved; either update to a later transformers library, or use the predefined ModelConfig for LLaMA models (`/` in model names is replaced with `_`).

`python -m llm_analysis.config list_gpu_configs` lists the names of all predefined GPU configurations; you can then query with `python -m llm_analysis.config get_gpu_config_by_name a100-sxm-80gb` to show the corresponding GPUConfig.

Setting flops and memory efficiency to 1 (default) gives the lower bound of training or inference latency, as it assumes the peak hardware performance (which is never the case).

A close-to-reality flops or memory efficiency can be found by benchmarking and profiling using the input dimensions in the model.

If one has to make assumptions, for flops efficiency, literature reports up to 0.5 for large scale model training, and up to 0.7 for inference; 0.9 can be an aggressive target for memory efficiency.
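To make the effect of the efficiency numbers concrete, here is a minimal sketch of how an ideal (efficiency 1) latency scales when a lower efficiency is assumed. The helper `scale_latency` is hypothetical and only illustrates the arithmetic; it is not part of llm-analysis.

```python
def scale_latency(ideal_latency_s, efficiency):
    """Scale a peak-hardware latency by an assumed efficiency in (0, 1]."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return ideal_latency_s / efficiency


# A step that would take 10 s at peak hardware performance (assumed value):
for eff in (1.0, 0.7, 0.5):
    print(f"efficiency {eff}: {scale_latency(10.0, eff):.1f} s")
```

An efficiency of 1 reproduces the lower bound, and any realistic value below 1 can only increase the estimate.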

llm-analysis aims to provide a lower-bound estimation of memory usage and latency.

llm-analysis currently covers Tensor Parallelism (tp), Pipeline Parallelism (pp), Sequence Parallelism (sp), Expert Parallelism (ep), and Data Parallelism (dp).

- tp, pp, and sp adopt the style of parallelization used in Megatron-LM for training and FasterTransformer for inference.
- In the training analysis, dp sharding assumes using DeepSpeed ZeRO or FSDP. `ds_zero` is used to specify the dp sharding strategy:

  | ds_zero | DeepSpeed ZeRO | FSDP | Sharding |
  |---|---|---|---|
  | 0 | disabled | NO_SHARD | No sharding |
  | 1 | Stage 1 | N/A | Shard optimizer states |
  | 2 | Stage 2 | SHARD_GRAD_OP | Shard gradients and optimizer states |
  | 3 | Stage 3 | FULL_SHARD | Shard gradients, optimizer states, model parameters |

- ep parallelizes the number of MLP experts across `ep_size` devices, i.e. the number of experts per GPU is `total number of experts / ep_size`. Thus for the MLP module, the number of devices for other parallelization dimensions is divided by `ep_size` compared to other parts of the model.
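The `ds_zero` sharding levels can be illustrated with the model-state accounting from the ZeRO paper for mixed-precision Adam: 2 bytes per parameter for weights, 2 for gradients, and 12 for optimizer states. The sketch below is an assumption-laden illustration, not the estimator used by llm-analysis; the function name and default byte counts are hypothetical.

```python
def zero_model_state_bytes_per_gpu(num_params, dp_size, ds_zero,
                                   weight_bytes=2, grad_bytes=2,
                                   optimizer_bytes=12):
    """Per-GPU bytes for weights, gradients, and optimizer states.

    Follows the ds_zero table: level 1 shards optimizer states, level 2
    also shards gradients, level 3 also shards model parameters.
    Default byte counts assume mixed-precision Adam (an assumption).
    """
    if ds_zero not in (0, 1, 2, 3):
        raise ValueError("ds_zero must be 0, 1, 2, or 3")
    weights = num_params * weight_bytes
    grads = num_params * grad_bytes
    optim = num_params * optimizer_bytes
    if ds_zero >= 1:
        optim /= dp_size
    if ds_zero >= 2:
        grads /= dp_size
    if ds_zero >= 3:
        weights /= dp_size
    return weights + grads + optim


# Example: 7.5B parameters sharded across 64 data-parallel ranks.
for level in range(4):
    gb = zero_model_state_bytes_per_gpu(7.5e9, 64, level) / 1e9
    print(f"ds_zero={level}: {gb:.1f} GB per GPU")
```

Activations, buffers, and fragmentation are not included here, so actual memory use is higher.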

tp communication is calculated as using ring allreduce. ep communication is calculated as using alltoall.
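For intuition about these two collectives, the sketch below computes bandwidth-only transfer times: in a ring allreduce each device sends about `2 * (n - 1) / n` times the message size, and in an alltoall each device sends `(n - 1) / n` of its data to peers. Per-hop latency is ignored, and the function names and bandwidth values are assumptions for illustration, not llm-analysis internals.

```python
def ring_allreduce_time(message_bytes, num_devices, bandwidth_bytes_per_s):
    """Bandwidth-only time of a ring allreduce over num_devices."""
    if num_devices <= 1:
        return 0.0
    sent = 2 * (num_devices - 1) / num_devices * message_bytes
    return sent / bandwidth_bytes_per_s


def alltoall_time(message_bytes, num_devices, bandwidth_bytes_per_s):
    """Bandwidth-only time of an alltoall: each device keeps 1/n locally."""
    if num_devices <= 1:
        return 0.0
    sent = (num_devices - 1) / num_devices * message_bytes
    return sent / bandwidth_bytes_per_s


# Example: 1 GB message, 8 devices, 1 GB/s per-device bandwidth (assumed).
print(ring_allreduce_time(1e9, 8, 1e9))
print(alltoall_time(1e9, 8, 1e9))
```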

dp communication time to unshard model weights when using FSDP or DeepSpeed ZeRO is estimated and compared against the compute latency; the larger of the two values is used for the overall latency.
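A minimal sketch of this "take the larger of the two" rule, assuming the unshard step is an allgather in which each rank receives the `(n - 1) / n` fraction of the weights it does not hold. The helper names and numbers are hypothetical and only show the shape of the calculation.

```python
def allgather_time(weight_bytes, dp_size, bandwidth_bytes_per_s):
    """Bandwidth-only time for one rank to receive the shards it lacks."""
    if dp_size <= 1:
        return 0.0
    return (dp_size - 1) / dp_size * weight_bytes / bandwidth_bytes_per_s


def overlapped_latency(compute_latency_s, comm_latency_s):
    """With perfect overlap, the slower of compute and communication wins."""
    return max(compute_latency_s, comm_latency_s)


# Example: 8 GB of weights, 8 ranks, 1 GB/s bandwidth, 5 s of compute (assumed).
comm = allgather_time(8e9, 8, 1e9)
print(overlapped_latency(5.0, comm))
```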

Other dp and pp communications are ignored for now, i.e. assuming perfect computation and communication overlapping, which is not true when communication cannot overlap with compute due to dependency, or when communication is too long to hide due to slow interconnect or large data volume.

llm-analysis supports both full and selective activation recomputation.

| activation_recomputation | what is checkpointed and recomputed |
|---|---|
| 0 | No activation recomputation; requires the most amount of memory |
| 1 | Checkpoints the attention computation (QK^T matrix multiply, softmax, softmax dropout, and attention over V) in the attention module of a transformer layer, as described in Reducing Activation Recomputation in Large Transformer Models. |
| 2 | Checkpoints the input to the attention module in a transformer layer; requires an extra forward pass on attention. |
| 3 | Checkpoints the input to the sequence of modules (layernorm-attention-layernorm) in a transformer layer; requires an extra forward pass on (layernorm-attention-layernorm). |
| 4 | Full activation recomputation stores the input to the transformer layer; requires the least amount of memory; requires an extra forward pass of the entire layer. |
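To give a sense of scale for levels 0, 1, and 4, the sketch below uses the per-layer activation memory formulas from Reducing Activation Recomputation in Large Transformer Models, assuming 16-bit activations and no tensor or sequence parallelism: `s*b*h*(34 + 5*a*s/h)` bytes without recomputation, `34*s*b*h` with selective recomputation, and `2*s*b*h` with full recomputation. Levels 2 and 3 are not covered by those formulas, so the hypothetical helper below rejects them; this is not how llm-analysis itself is implemented.

```python
def activation_bytes_per_layer(seq_len, batch_size, hidden_dim, num_heads,
                               activation_recomputation):
    """Approximate activation bytes stored per transformer layer.

    Supports only levels 0 (none), 1 (selective), and 4 (full); assumes
    16-bit activations and no tensor/sequence parallelism.
    """
    sbh = seq_len * batch_size * hidden_dim
    if activation_recomputation == 0:
        # 34*s*b*h plus the attention score terms 5*a*s^2*b
        return 34 * sbh + 5 * num_heads * seq_len * seq_len * batch_size
    if activation_recomputation == 1:
        return 34 * sbh
    if activation_recomputation == 4:
        return 2 * sbh
    raise ValueError("only levels 0, 1, and 4 are covered by this sketch")


# Example with GPT-3-like dimensions (assumed for illustration).
for level in (0, 1, 4):
    gib = activation_bytes_per_layer(2048, 1, 12288, 96, level) / 2**30
    print(f"level {level}: {gib:.2f} GiB per layer")
```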

Data types are expressed with the number of bits; only 32 (FP32, TF32), 16 (FP16, BF16), 8 (INT8), and 4 (INT4) bit data types are modeled for now.
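Expressing data types as bit widths makes weight memory a one-line calculation. The sketch below is illustrative only; `weight_memory_bytes` is a hypothetical helper, and it accepts just the bit widths listed above.

```python
SUPPORTED_BITS = (32, 16, 8, 4)  # bit widths modeled per the text above


def weight_memory_bytes(num_params, weight_bits):
    """Bytes needed to hold num_params weights at the given bit width."""
    if weight_bits not in SUPPORTED_BITS:
        raise ValueError(f"unsupported bit width: {weight_bits}")
    return num_params * weight_bits / 8


# Example: a 20B-parameter model at each supported width.
for bits in SUPPORTED_BITS:
    print(f"{bits}-bit: {weight_memory_bytes(20e9, bits) / 1e9:.0f} GB")
```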

Fine-tuning is modeled the same as pre-training (controlled by total_num_tokens passed to the train entry function), thus assuming full (all model parameters) fine-tuning. Parameter-efficient fine-tuning (PEFT) is planned for future support.

Inference assumes perfect overlapping of compute and memory operations when calculating latency, and maximum memory reuse when calculating memory usage.
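The overlap assumption can be sketched for a single decoding step: if compute and memory traffic overlap perfectly, the step takes as long as the slower of the two. The example assumes roughly 2 FLOPs per parameter per generated token and that all weights are read once per step, and it ignores the KV cache and attention cost. The function name and hardware numbers are assumptions, not values taken from llm-analysis.

```python
def decode_step_latency(num_params, batch_size, weight_bits,
                        peak_flops, peak_bandwidth_bytes_per_s):
    """Lower-bound latency of one decoding step with perfect overlap."""
    flops = 2 * num_params * batch_size        # ~2 FLOPs per weight per token
    weight_bytes = num_params * weight_bits / 8  # weights read once per step
    compute_time = flops / peak_flops
    memory_time = weight_bytes / peak_bandwidth_bytes_per_s
    return max(compute_time, memory_time)


# Example: 20B parameters, batch size 1, 16-bit weights,
# 312e12 FLOPS and 2e12 bytes/s are illustrative hardware numbers.
print(decode_step_latency(20e9, 1, 16, 312e12, 2e12))
```

At small batch sizes the memory term dominates in this sketch, which is why weight quantization can reduce decoding latency.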

TODOs (stay tuned 📻)

Check the TODOs below for what's next and stay tuned 📻! Any contributions or feedback are highly welcome!

- [ ] Add dp (across and within a node), ep (within a node), pp (across nodes) communication analysis

- [ ] Support efficient fine-tuning methods such as LoRA or Adapters

- [ ] Add FP8 datatype support

- [ ] Support CPU offloading (weight, KV cache, etc.) analysis in training and inference

- [ ] Support other hardware (e.g. CPU) for inference analysis

If you use llm-analysis in your work, please cite:

Cheng Li. (2023). LLM-Analysis: Latency and Memory Analysis of Transformer Models for Training and Inference. GitHub repository, https://github.com/cli99/llm-analysis.

or

@misc{llm-analysis-chengli,

author = {Cheng Li},

title = {LLM-Analysis: Latency and Memory Analysis of Transformer Models for Training and Inference},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/cli99/llm-analysis}},

}

Contributions and suggestions are welcome.

llm-analysis uses pre-commit to ensure code formatting is consistent. For pull requests with code contributions, please install pre-commit (`pip install pre-commit`) as well as the hooks it uses (`pre-commit install` in the repo), and format the code (this runs automatically before each git commit) before submitting the PR.

- Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism

- ZeRO: Memory Optimizations Toward Training Trillion Parameter Models

- Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM

- Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, A Large-Scale Generative Language Model

- Reducing Activation Recomputation in Large Transformer Models

- Training Compute-Optimal Large Language Models

- Efficiently Scaling Transformer Inference

- Understanding INT4 Quantization for Transformer Models: Latency Speedup, Composability, and Failure Cases

- A Comprehensive Study on Post-Training Quantization for Large Language Models


Similar Open Source Tools

llm-analysis

llm-analysis is a tool designed for Latency and Memory Analysis of Transformer Models for Training and Inference. It automates the calculation of training or inference latency and memory usage for Large Language Models (LLMs) or Transformers based on specified model, GPU, data type, and parallelism configurations. The tool helps users to experiment with different setups theoretically, understand system performance, and optimize training/inference scenarios. It supports various parallelism schemes, communication methods, activation recomputation options, data types, and fine-tuning strategies. Users can integrate llm-analysis in their code using the `LLMAnalysis` class or use the provided entry point functions for command line interface. The tool provides lower-bound estimations of memory usage and latency, and aims to assist in achieving feasible and optimal setups for training or inference.

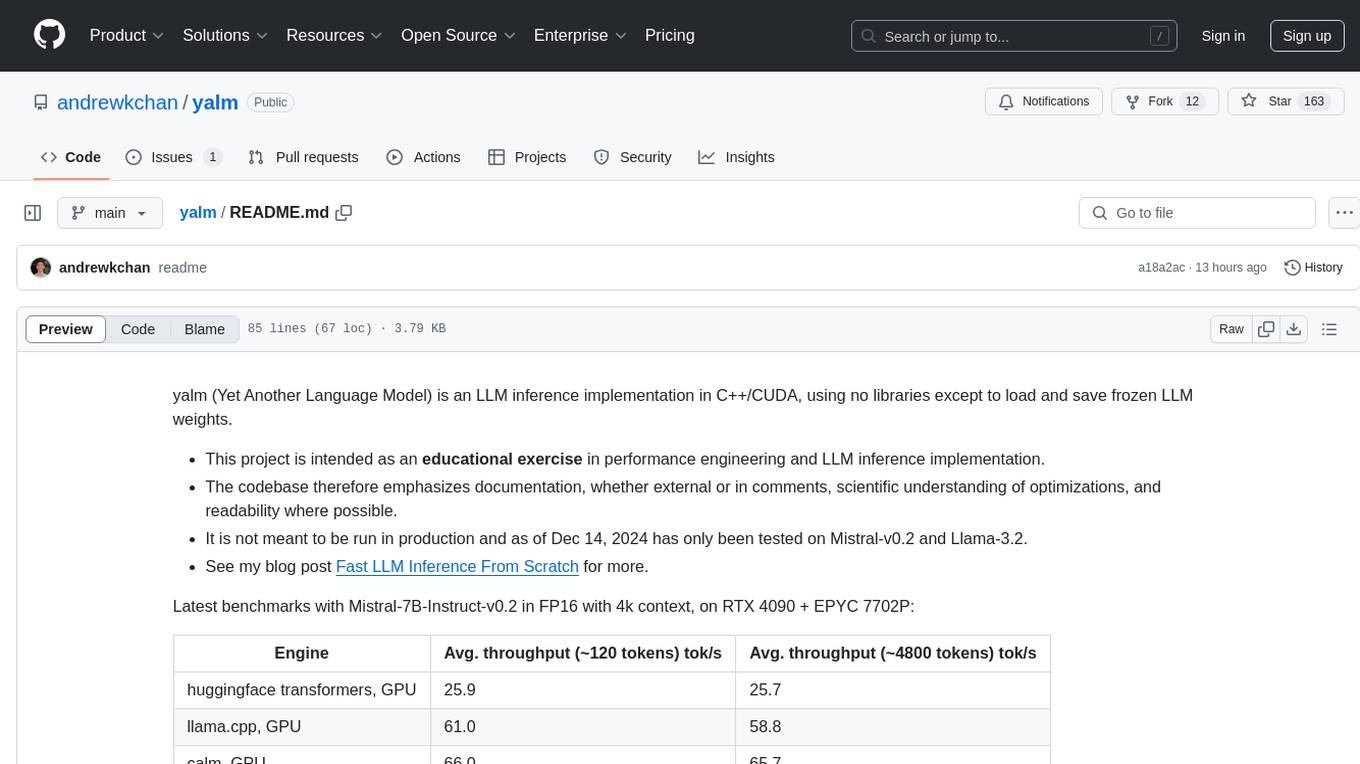

yalm

Yalm (Yet Another Language Model) is an LLM inference implementation in C++/CUDA, emphasizing performance engineering, documentation, scientific optimizations, and readability. It is not for production use and has been tested on Mistral-v0.2 and Llama-3.2. Requires C++20-compatible compiler, CUDA toolkit, and LLM safetensor weights in huggingface format converted to .yalm file.

gepa

GEPA (Genetic-Pareto) is a framework for optimizing arbitrary systems composed of text components like AI prompts, code snippets, or textual specs against any evaluation metric. It employs LLMs to reflect on system behavior, using feedback from execution and evaluation traces to drive targeted improvements. Through iterative mutation, reflection, and Pareto-aware candidate selection, GEPA evolves robust, high-performing variants with minimal evaluations, co-evolving multiple components in modular systems for domain-specific gains. The repository provides the official implementation of the GEPA algorithm as proposed in the paper titled 'GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning'.

matsciml

The Open MatSci ML Toolkit is a flexible framework for machine learning in materials science. It provides a unified interface to a variety of materials science datasets, as well as a set of tools for data preprocessing, model training, and evaluation. The toolkit is designed to be easy to use for both beginners and experienced researchers, and it can be used to train models for a wide range of tasks, including property prediction, materials discovery, and materials design.

LLMeBench

LLMeBench is a flexible framework designed for accelerating benchmarking of Large Language Models (LLMs) in the field of Natural Language Processing (NLP). It supports evaluation of various NLP tasks using model providers like OpenAI, HuggingFace Inference API, and Petals. The framework is customizable for different NLP tasks, LLM models, and datasets across multiple languages. It features extensive caching capabilities, supports zero- and few-shot learning paradigms, and allows on-the-fly dataset download and caching. LLMeBench is open-source and continuously expanding to support new models accessible through APIs.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

guidellm

GuideLLM is a platform for evaluating and optimizing the deployment of large language models (LLMs). By simulating real-world inference workloads, GuideLLM enables users to assess the performance, resource requirements, and cost implications of deploying LLMs on various hardware configurations. This approach ensures efficient, scalable, and cost-effective LLM inference serving while maintaining high service quality. The tool provides features for performance evaluation, resource optimization, cost estimation, and scalability testing.

codellm-devkit

Codellm-devkit (CLDK) is a Python library that serves as a multilingual program analysis framework bridging traditional static analysis tools and Large Language Models (LLMs) specialized for code (CodeLLMs). It simplifies the process of analyzing codebases across multiple programming languages, enabling the extraction of meaningful insights and facilitating LLM-based code analysis. The library provides a unified interface for integrating outputs from various analysis tools and preparing them for effective use by CodeLLMs. Codellm-devkit aims to enable the development and experimentation of robust analysis pipelines that combine traditional program analysis tools and CodeLLMs, reducing friction in multi-language code analysis and ensuring compatibility across different tools and LLM platforms. It is designed to seamlessly integrate with popular analysis tools like WALA, Tree-sitter, LLVM, and CodeQL, acting as a crucial intermediary layer for efficient communication between these tools and CodeLLMs. The project is continuously evolving to include new tools and frameworks, maintaining its versatility for code analysis and LLM integration.

TriForce

TriForce is a training-free tool designed to accelerate long sequence generation. It supports long-context Llama models and offers both on-chip and offloading capabilities. Users can achieve a 2.2x speedup on a single A100 GPU. TriForce also provides options for offloading with tensor parallelism or without it, catering to different hardware configurations. The tool includes a baseline for comparison and is optimized for performance on RTX 4090 GPUs. Users can cite the associated paper if they find TriForce useful for their projects.

aimo-progress-prize

This repository contains the training and inference code needed to replicate the winning solution to the AI Mathematical Olympiad - Progress Prize 1. It consists of fine-tuning DeepSeekMath-Base 7B, high-quality training datasets, a self-consistency decoding algorithm, and carefully chosen validation sets. The training methodology involves Chain of Thought (CoT) and Tool Integrated Reasoning (TIR) training stages. Two datasets, NuminaMath-CoT and NuminaMath-TIR, were used to fine-tune the models. The models were trained using open-source libraries like TRL, PyTorch, vLLM, and DeepSpeed. Post-training quantization to 8-bit precision was done to improve performance on Kaggle's T4 GPUs. The project structure includes scripts for training, quantization, and inference, along with necessary installation instructions and hardware/software specifications.

fuse-med-ml

FuseMedML is a Python framework designed to accelerate machine learning-based discovery in the medical field by promoting code reuse. It provides a flexible design concept where data is stored in a nested dictionary, allowing easy handling of multi-modality information. The framework includes components for creating custom models, loss functions, metrics, and data processing operators. Additionally, FuseMedML offers 'batteries included' key components such as fuse.data for data processing, fuse.eval for model evaluation, and fuse.dl for reusable deep learning components. It supports PyTorch and PyTorch Lightning libraries and encourages the creation of domain extensions for specific medical domains.

semlib

Semlib is a Python library for building data processing and data analysis pipelines that leverage the power of large language models (LLMs). It provides functional programming primitives like map, reduce, sort, and filter, programmed with natural language descriptions. Semlib handles complexities such as prompting, parsing, concurrency control, caching, and cost tracking. The library breaks down sophisticated data processing tasks into simpler steps to improve quality, feasibility, latency, cost, security, and flexibility of data processing tasks.

Pixel-Reasoner

Pixel Reasoner is a framework that introduces reasoning in the pixel-space for Vision-Language Models (VLMs), enabling them to directly inspect, interrogate, and infer from visual evidences. This enhances reasoning fidelity for visual tasks by equipping VLMs with visual reasoning operations like zoom-in and select-frame. The framework addresses challenges like model's imbalanced competence and reluctance to adopt pixel-space operations through a two-phase training approach involving instruction tuning and curiosity-driven reinforcement learning. With these visual operations, VLMs can interact with complex visual inputs such as images or videos to gather necessary information, leading to improved performance across visual reasoning benchmarks.

RAG-FiT

RAG-FiT is a library designed to improve Language Models' ability to use external information by fine-tuning models on specially created RAG-augmented datasets. The library assists in creating training data, training models using parameter-efficient finetuning (PEFT), and evaluating performance using RAG-specific metrics. It is modular, customizable via configuration files, and facilitates fast prototyping and experimentation with various RAG settings and configurations.

cuvs

cuVS is a library that contains state-of-the-art implementations of several algorithms for running approximate nearest neighbors and clustering on the GPU. It can be used directly or through the various databases and other libraries that have integrated it. The primary goal of cuVS is to simplify the use of GPUs for vector similarity search and clustering.

aigverse

aigverse is a Python infrastructure framework that bridges the gap between logic synthesis and AI/ML applications. It allows efficient representation and manipulation of logic circuits, making it easier to integrate logic synthesis and optimization tasks into machine learning pipelines. Built upon EPFL Logic Synthesis Libraries, particularly mockturtle, aigverse provides a high-level Python interface to state-of-the-art algorithms for And-Inverter Graph (AIG) manipulation and logic synthesis, widely used in formal verification, hardware design, and optimization tasks.


For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.