Best AI tools for Decode Models

20 - AI Tool Sites

Dataku.ai

Dataku.ai is an AI-powered data extraction and analysis tool that turns unstructured documents and text into structured, actionable information. It offers extraction solutions for specific use cases, such as parsing resumes to streamline recruitment, analyzing reviews to understand customer sentiment, and using customer data to personalize experiences. Additional features include market trend analysis and financial document analysis to support strategic decisions. Dataku.ai emphasizes precision, efficiency, and scalability, and it offers several pricing plans for different user needs.

Evaxion

Evaxion is a TechBio company that uses its AI-Immunology™ platform to decode the human immune system and develop novel vaccines for cancer and infectious diseases. The platform simulates the human immune system and generates predictive models, which speeds up vaccine target discovery, design, and development. Evaxion combines this research with multidisciplinary capabilities to develop new treatments quickly, with the goal of changing how diseases are prevented and treated through personalized, precision vaccines.

Typly

Typly is an AI writing assistant that uses large language models such as GPT, ChatGPT, and LLaMA to generate suggestions that fit the context of a conversation. It helps users write unique responses, decode complex text, summarize articles, polish emails, and enrich conversations with sentence bundles drawn from various sources. Typly also offers extra functions, including a Dating Function for conversations with matches on dating apps and Typly Translate for working across languages. The application aims to help users keep up with large volumes of messages and notifications by providing AI-generated responses that make communication faster and more effective.

implicator.ai

implicator.ai publishes a daily newsletter covering AI news, politics, coding trends, startups, research funding, and AI tools. It reports on the latest developments in the AI industry, including new models, acquisitions, legal battles, and market trends. Written by a team of tech journalists and analysts, implicator.ai breaks down complex AI topics into concise, informative content for readers who want to keep up with the fast-moving field of artificial intelligence.

Shib GPT

Shib GPT is an AI-driven platform for real-time cryptocurrency market analysis. It uses algorithms to provide insights into pricing, trends, and exchange dynamics across both decentralized and centralized exchanges, helping investors and traders make informed decisions quickly. Shib GPT also offers a chat interface for interacting with financial market data and generating multimedia content with large language models (LLMs).

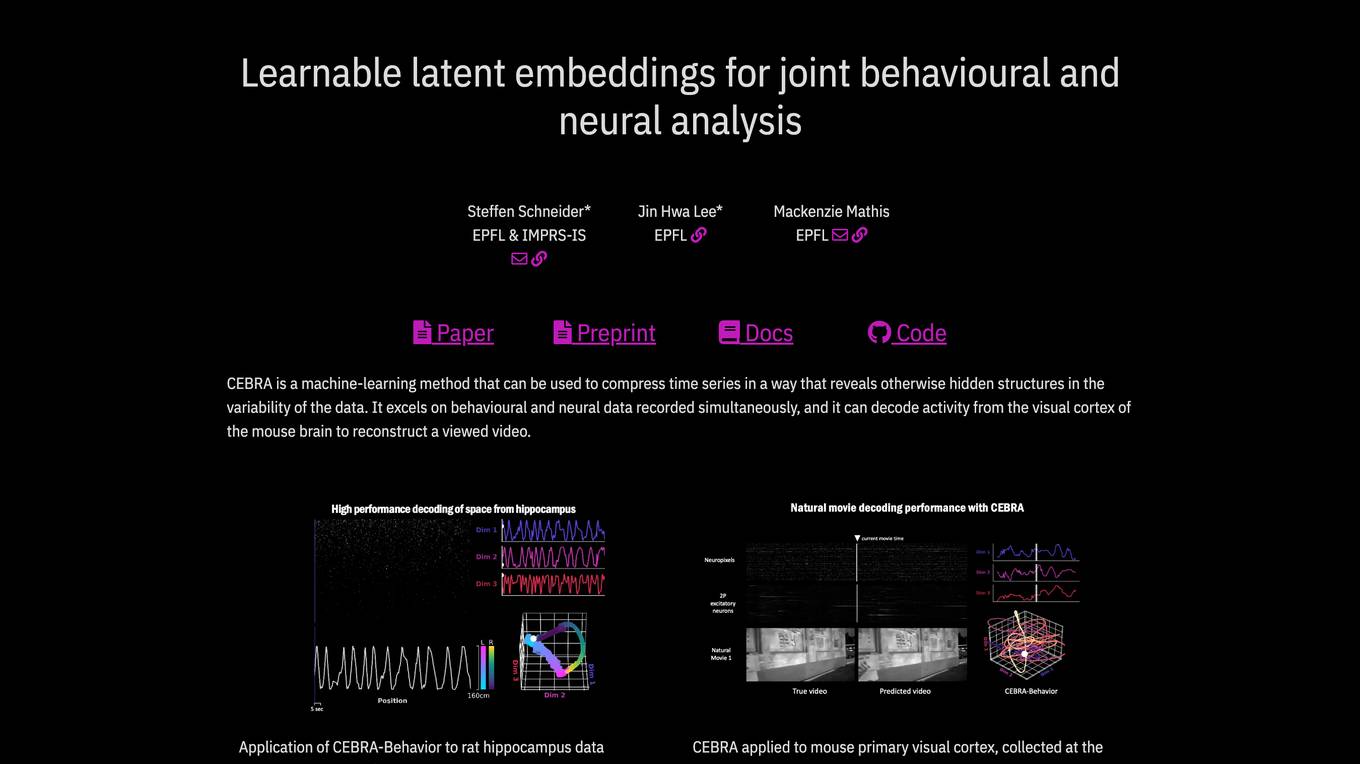

CEBRA

CEBRA is a self-supervised learning algorithm designed for obtaining interpretable embeddings of high-dimensional recordings using auxiliary variables. It excels in compressing time series data to reveal hidden structures, particularly in behavioral and neural data. The algorithm can decode neural activity, reconstruct viewed videos, decode trajectories, and determine position during navigation. CEBRA is a valuable tool for joint behavioral and neural analysis, providing consistent and high-performance latent spaces for hypothesis testing and label-free applications across various datasets and species.

Cogitotech

Cogitotech specializes in data annotation and labeling. The platform offers a suite of services for producing training data for computer vision models and other AI applications. Drawing on more than a decade of industry experience, Cogitotech supplies high-quality training data to industries such as healthcare, financial services, and security. Its services aim to reduce bias in AI algorithms and provide accurate, reliable training data for deploying AI in real-world systems.

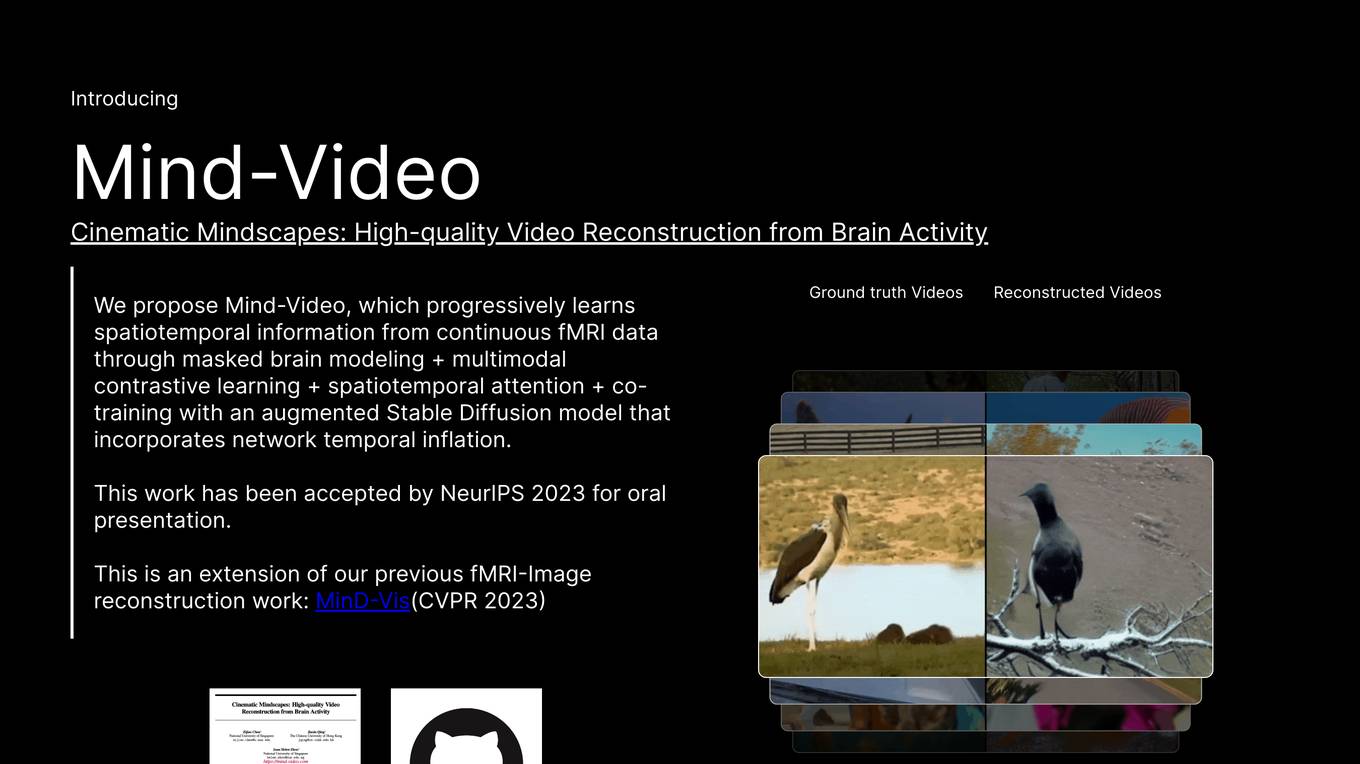

Mind-Video

Mind-Video is an AI tool for high-quality video reconstruction from brain activity data. It bridges the gap between image and video brain decoding by combining masked brain modeling, multimodal contrastive learning, spatiotemporal attention, and co-training with an augmented Stable Diffusion model. The tool aims to recover accurate semantic information from fMRI signals so that it can generate realistic videos from brain activity.

deepsense.ai

deepsense.ai is an AI development company that offers AI guidance and implementation services across industries such as retail, manufacturing, financial services, IT operations, TMT, and medical and beauty. Its Generative AI Solution Center provides resources for planning and implementing AI solutions. Focusing on AI vision, solutions, and products, deepsense.ai draws on a decade of AI experience to help businesses implement AI faster.

Lamini

Lamini is an enterprise LLM platform known for factual LLMs. Its Memory Tuning feature provides precise recall, enabling teams to achieve over 95% accuracy even on large amounts of domain-specific data. A reengineered decoder guarantees JSON output with 100% schema accuracy, and the platform delivers high inference throughput. Lamini can be deployed anywhere, including air-gapped environments, and supports training and inference on NVIDIA or AMD GPUs.

Digital Sense

Digital Sense is a company offering AI, machine learning, and computer vision services. Its specialties include custom AI development, AI consulting, biometrics solutions, NLP and LLM development, data science consulting, remote sensing, machine learning development, generative AI development, and computer vision development. With over a decade of experience, Digital Sense helps businesses apply modern AI technologies to complex technical challenges.

Infinity AI

Infinity AI is an AI tool focused on generative video models centered on people. The platform aims to let a team of writers create award-winning movies without actors, directors, or other crew members. The technology is still in development, with the goal of transforming storytelling and filmmaking over the next decade. Users can follow the project's progress and experiment with the tool through Discord and the alpha web app.

Otherweb

Otherweb is a public-benefit corporation that provides an AI-powered news app. The app uses 20 cutting-edge AI models to filter out junk news, misinformation, and propaganda. It also provides a nutrition label for each news item, which helps users decide whether something is worth consuming before they consume it. Otherweb is committed to providing a factual and unbiased news experience for its users.

Phenaki

Phenaki is a model that generates realistic videos from a sequence of text prompts. Generating video from text is difficult because of the computational cost, the limited amount of high-quality text-video data, and the variable length of videos. To address these issues, Phenaki introduces a new causal model for learning video representations, which compresses a video into a small set of discrete tokens. This tokenizer uses causal attention in time, which allows it to handle variable-length videos. To generate video tokens from text, Phenaki uses a bidirectional masked transformer conditioned on pre-computed text tokens; the generated video tokens are then de-tokenized to produce the actual video. To address the data shortage, Phenaki shows that joint training on a large corpus of image-text pairs and a smaller number of video-text examples can generalize beyond what is available in video datasets alone. Unlike previous video generation methods, Phenaki can generate arbitrarily long videos conditioned on a sequence of prompts (i.e., time-variable text or a story) in an open domain. According to its authors, this is the first work to study generating videos from time-variable prompts. In addition, the proposed video encoder-decoder outperforms all per-frame baselines then used in the literature in both spatio-temporal quality and the number of tokens per video.
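The idea of "causal attention in time" can be illustrated with a simplified attention mask. This is a minimal Python sketch, not Phenaki's actual code: it assumes video tokens are laid out frame by frame with a fixed, hypothetical `tokens_per_frame`, and it collapses spatial and temporal attention into one block-causal mask. A token may attend to every token in its own frame and in earlier frames, but never to later frames.

```python
def causal_time_mask(num_frames: int, tokens_per_frame: int) -> list[list[bool]]:
    """Build a block-causal attention mask (illustrative sketch only).

    mask[q][k] is True when query token q may attend to key token k.
    Assumption: tokens are ordered frame by frame, tokens_per_frame per frame.
    """
    n = num_frames * tokens_per_frame
    # Frame index of each token position.
    frame_of = [i // tokens_per_frame for i in range(n)]
    # Allow attention to the same frame and to earlier frames only.
    return [[frame_of[k] <= frame_of[q] for k in range(n)] for q in range(n)]


if __name__ == "__main__":
    for row in causal_time_mask(num_frames=3, tokens_per_frame=2):
        print("".join("1" if allowed else "." for allowed in row))
```

Because each token's row depends only on frames up to its own, appending frames to a video leaves the mask entries for earlier tokens unchanged. That is the property that lets a causal tokenizer encode the first frames the same way regardless of how long the video eventually becomes.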

Decode Investing

Decode Investing is an AI-powered platform that helps users automate their stock research. It offers tools such as an AI chat assistant, a stock screener, earnings call analysis, and SEC filings analysis. Users can find and analyze a stock by entering its name or ticker. Decode Investing aims to simplify investing by providing insights and data for informed decisions, and its user-friendly interface and comprehensive features are designed for both novice and experienced investors.

Decode Health

Decode Health is an AI and analytics platform that accelerates precision healthcare by supporting healthcare teams in launching machine learning and advanced analytics projects. The platform collaborates with pharmaceutical companies to enhance patient selection, biomarker identification, diagnostics development, data asset creation, and analysis. Decode Health offers modules for biomarker discovery, patient recruitment, next-generation sequencing, data analysis, and clinical decision support. The platform aims to provide fast, accurate, and actionable insights for acute and chronic disease management. Decode Health's custom-built modules are designed to work together to solve complex data problems efficiently.

Mendel AI

Mendel AI is an advanced clinical AI tool that deciphers clinical data with clinician-like logic. It offers a fully integrated suite of clinical-specific data processing products, combining OCR, de-identification, and clinical reasoning to interpret medical records. Users can ask questions in plain English and receive accurate answers from health records in seconds. Mendel's technology goes beyond traditional AI by understanding patient-level data and ensuring consistency and explainability of results in healthcare.

MeowTalk

MeowTalk is an AI tool that lets users decode their cats' meows and understand what their pets are trying to communicate. It analyzes the sound patterns of a cat's meows and translates them into human language, offering insights into the cat's thoughts and feelings. MeowTalk aims to bridge the communication gap between owners and their cats, leading to better understanding and a stronger bond.

Runix

Runix is an AI-driven tool that helps businesses decode successful advertising strategies. Its features include discovering viral ads, AI content analysis, and generating results-driven blog content. With Runix, users can identify the patterns behind successful ad campaigns, replicate them, and draw inspiration from millions of AI-analyzed pieces of content. The platform provides real-time insights on viral ads across platforms such as TikTok, YouTube, and Facebook, helping users stay ahead of market trends.

Insitro

Insitro is a drug discovery and development company that uses machine learning and data to identify and develop new medicines. The company's platform integrates in vitro cellular data produced in its labs with human clinical data to help redefine disease. Insitro's pipeline includes wholly-owned and partnered therapeutic programs in metabolism, oncology, and neuroscience.

2 - Open Source AI Tools

paxml

Pax is a framework for configuring and running machine learning experiments on top of JAX.

dl_model_infer

This project is a C++ AI inference library for TensorRT models. It provides accelerated deployment examples for popular deep learning computer vision models and supports dynamic-batch image preprocessing, inference, decoding, and NMS. The project has been updated with various models and includes tutorials on exporting models. It also includes a producer-consumer inference pipeline for specific tasks. The project directory contains implementations of model inference applications, backend inference classes, pre-processing, post-processing, and object detection and tracking. Speed tests have been run on various models, and ONNX downloads are available for different models.

20 - OpenAI GPTs

OGAA (Oil and Gas Acronym Assistant)

I decode acronyms from the oil & gas industry, asking for context if needed.

Paper Interpreter (international)

Automatically structure and decode academic papers with ease - simply upload a PDF!

Emoji GPT

🌟 Discover the Charm of EmojiGPT! 🤖💬🎉 Dive into a world where emojis reign supreme with EmojiGPT, your whimsical AI companion that speaks the universal language of emojis. Get ready to decode delightful emoji messages, laugh at clever combinations, and express yourself like never before! 🤔

🧬GenoCode Wizard🔬

Unlock the secrets of DNA with 🧬GenoCode Wizard🔬! Dive into genetic analysis, decode sequences, and explore bioinformatics with ease. Perfect for researchers and students!

Social Navigator

A specialist in explaining social cues and cultural norms for clarity in conversations.

N.A.R.C. Bott

This app decodes texts from narcissists, advising across all life scenarios. Navigate. Analyze. Recognize. Communicate.

What a Girl Says Translator

Simply tell me what the girl texted you or said to you, and I will respond with what she means. 💋