extractor

Extractor: AI-Powered Data Extraction for your Laravel application.

Stars: 86

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

README:

Effortlessly extract structured data from various sources, including images, PDFs, and emails, using OpenAI within your Laravel application.

- A convenient wrapper around OpenAI Chat and Completion endpoints.

- Supports multiple input formats such as plain text, PDF, RTF, images, Word documents and web content.

- Includes a flexible Field Extractor that can extract any arbitrary data without writing custom logic.

- Can return a regular array or a spatie/laravel-data object.

- Integrates with Textract for OCR functionality.

- Uses JSON Mode from the latest GPT-3.5 and GPT-4 models.

Example code

<?php

use HelgeSverre\Extractor\Facades\Extractor;
use HelgeSverre\Extractor\Facades\Text;
use Illuminate\Support\Facades\Storage;

$image = Storage::get("restaurant_menu.png");

// Extract text from images
$textFromImage = Text::textract($image);

// Extract structured data from plain text
$menu = Extractor::fields($textFromImage,
    fields: [
        'restaurantName',
        'phoneNumber',
        'dishes' => [
            'name' => 'name of the dish',
            'description' => 'description of the dish',
            'price' => 'price of the dish as a number',
        ],
    ],
    model: "gpt-3.5-turbo-1106",
    maxTokens: 4000,
);

Install the package via composer:

composer require helgesverre/extractor

Publish the configuration file:

php artisan vendor:publish --tag="extractor-config"

You can find all the configuration options in the configuration file.

Since this package relies on the OpenAI Laravel Package, you also need to publish their configuration and add the OPENAI_API_KEY to your .env file:

php artisan vendor:publish --provider="OpenAI\Laravel\ServiceProvider"

OPENAI_API_KEY="your-key-here"

# Optional: Set request timeout (default: 30s).
OPENAI_REQUEST_TIMEOUT=60

use HelgeSverre\Extractor\Facades\Text;

$textPlainText = Text::text(file_get_contents('./data.txt'));
$textPdf = Text::pdf(file_get_contents('./data.pdf'));
$textImageOcr = Text::textract(file_get_contents('./data.jpg'));
$textPdfOcr = Text::textractUsingS3Upload(file_get_contents('./data.pdf'));
$textWord = Text::word(file_get_contents('./data.doc'));
$textWeb = Text::web('https://example.com');
$textHtml = Text::html(file_get_contents('./data.html'));

| Description | Method |
|---|---|
| Extract text from plain text; useful if you need to trim or normalize whitespace in a string. | Text::text |
| Extract text from a PDF file (uses smalot/pdfparser). | Text::pdf |
| Extract text with AWS Textract by sending the content as a base64-encoded string (faster, but has limitations). | Text::textract |
| Extract text with AWS Textract by uploading the file to S3 and polling for completion (handles larger files and multi-page PDFs). | Text::textractUsingS3Upload |
| Extract plain text from a Word document (uses simple XML parsing and unzipping). | Text::word |
| Fetch HTML from a URL via HTTP, strip all HTML tags, then squish and trim all whitespace. | Text::web |
| Extract text from an HTML file (same as Text::web, but for HTML content you already have). | Text::html |
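To make the "strip all HTML tags, squish and trim all whitespace" step concrete, here is a minimal sketch in plain PHP. It is an approximation for illustration only: `htmlToPlainText` is a hypothetical helper, not part of the package, and the package's own implementation may differ.

```php
<?php

/**
 * Hypothetical helper (not part of the package): approximates the
 * "strip tags, squish and trim whitespace" behaviour described above.
 */
function htmlToPlainText(string $html): string
{
    // Remove <script> and <style> blocks entirely; strip_tags() alone
    // would keep their inner text.
    $html = preg_replace('#<(script|style)\b[^>]*>.*?</\1>#is', ' ', $html) ?? $html;

    // Add a space before every tag so adjacent elements do not fuse
    // together ("<h1>A</h1><p>B</p>" should become "A B", not "AB").
    $html = str_replace('<', ' <', $html);

    // Drop the tags and decode entities such as &amp;.
    $text = html_entity_decode(strip_tags($html), ENT_QUOTES | ENT_HTML5, 'UTF-8');

    // "Squish": collapse every run of whitespace to one space, then trim.
    return trim(preg_replace('/\s+/', ' ', $text) ?? $text);
}

echo htmlToPlainText('<h1>Menu</h1><p>Soup &amp; bread:   <b>49</b> NOK</p>');
// prints "Menu Soup & bread: 49 NOK"
```

Cleaning the text this way before extraction keeps markup out of the prompt, which means fewer tokens are sent to the model.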

The Extractor package includes a set of pre-built extractors designed to simplify the extraction of structured data from various types of text. Each extractor is optimized for specific data formats, making it easy to process different types of information. Below is a list of the included extractors along with brief descriptions and convenient shortened methods for each:

| Example | Extractor | Description |
|---|---|---|
| Extractor::extract(Contacts::class, $text); | Contacts | Extracts a list of contacts (name, title, email, phone). |
| Extractor::extract(Receipt::class, $text); | Receipt | Extracts common receipt data; see receipt-scanner for details. |
| Extractor::fields($text, fields: ["name", "address", "phone"]); | Fields | Extracts arbitrary fields provided as an array of output keys with optional descriptions; also supports nested fields. |

These extractors are provided out of the box and offer a convenient way to extract specific types of structured data from text. You can use the shortened methods to easily access the functionality of each extractor.

The field extractor is great if you don't need much custom logic or validation and just want to extract out some structured data from a piece of text.
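A field definition mixes three forms in one PHP array: bare keys (`'phoneNumber'`), keys with a description (`'name' => 'name of the dish'`), and nested arrays of sub-fields. The sketch below walks such an array to make the three forms visible. `describeFields` is a hypothetical helper written for this illustration; it is not part of the package and says nothing about how the package builds its prompt.

```php
<?php

/**
 * Hypothetical helper (not part of the package): flattens a field
 * definition into readable lines, one per field.
 */
function describeFields(array $fields, string $prefix = ''): array
{
    $lines = [];

    foreach ($fields as $key => $value) {
        if (is_int($key)) {
            // Bare key such as 'restaurantName': PHP stores it under a
            // numeric index, so the value is the field name.
            $lines[] = $prefix . $value;
        } elseif (is_array($value)) {
            // Nested fields such as 'dishes' => [...]: recurse with a dotted prefix.
            $lines = array_merge($lines, describeFields($value, $prefix . $key . '.'));
        } else {
            // Key with a description to guide the model.
            $lines[] = $prefix . $key . ': ' . $value;
        }
    }

    return $lines;
}

$fields = [
    'restaurantName',
    'phoneNumber',
    'dishes' => [
        'name' => 'name of the dish',
        'price' => 'price of the dish as a number',
    ],
];

print_r(describeFields($fields));
// restaurantName
// phoneNumber
// dishes.name: name of the dish
// dishes.price: price of the dish as a number
```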

Here is an example of extracting information from a CV. Note that you can provide a description to guide the AI model, and that nested items are supported, which is useful for lists of sub-items such as work history, line items, or comments on a product.

$sample = Text::pdf(file_get_contents(__DIR__.'/../samples/helge-cv.pdf'));

$data = Extractor::fields($sample,
    fields: [
        'name' => 'the name of the candidate',
        'email',
        'certifications' => 'list of certifications, if any',
        'workHistory' => [
            'companyName',
            'from' => 'Y-m-d if available, Year only if not, null if missing',
            'to' => 'Y-m-d if available, Year only if not, null if missing',
            'text',
        ],
    ],
    model: Engine::GPT_3_TURBO_1106,
);

Note: This feature is still WIP.

The Extractor package also integrates with OpenAI's new Vision API, leveraging the powerful gpt-4-vision-preview model to extract structured data from images. This feature enables you to analyze and interpret visual content with ease, whether it's reading text from images, extracting data from charts, or understanding complex visual scenarios.

To use the Vision features in Extractor, you need to provide an image as input. This can be done in a few different ways:

- Using a File Path: Load an image from a file path.

- Using Raw Image Data: Use the raw data of an image, for example, from an uploaded file.

- Using an Image URL: Load an image directly from a URL.

Here's how you can use each method:

// Using a file path
use HelgeSverre\Extractor\Text\ImageContent;

$imagePath = __DIR__ . '/../samples/sample-image.jpg';
$imageContent = ImageContent::file($imagePath);

// Using raw image data
use HelgeSverre\Extractor\Text\ImageContent;

$rawImageData = file_get_contents(__DIR__ . '/../samples/sample-image.jpg');
$imageContent = ImageContent::raw($rawImageData);

// Using an image URL
use HelgeSverre\Extractor\Text\ImageContent;

$imageUrl = 'https://example.com/sample-image.jpg';
$imageContent = ImageContent::url($imageUrl);

After preparing your ImageContent object, you can pass it to the Extractor::fields method to extract structured data using OpenAI's Vision API. For example:

use HelgeSverre\Extractor\Facades\Extractor;
use HelgeSverre\Extractor\Text\ImageContent;

$imageContent = ImageContent::file(__DIR__ . '/../samples/product-catalog.jpg');

$data = Extractor::fields(
    $imageContent,
    fields: [
        'productName',
        'price',
        'description',
    ],
    model: Engine::GPT_4_VISION,
);

Custom extractors in Extractor allow for tailored data extraction to meet specific needs. Here's how you can create and use a custom extractor, using the example of a Job Posting Extractor.

Create a new class for your custom extractor by extending the Extractor class. In this example, we'll create a JobPostingExtractor to extract key information from job postings:

<?php

namespace App\Extractors;

use HelgeSverre\Extractor\Extraction\Extractor;
use HelgeSverre\Extractor\Text\TextContent;

class JobPostingExtractor extends Extractor
{
    public function prompt(string|TextContent $input): string
    {
        $outputKey = $this->expectedOutputKey();

        return "Extract the following fields from the job posting below:"
            . "\n- jobTitle: The title or designation of the job."
            . "\n- companyName: The name of the company or organization posting the job."
            . "\n- location: The geographical location or workplace where the job is based."
            . "\n- jobType: The nature of employment (e.g., Full-time, Part-time, Contract)."
            . "\n- description: A brief summary or detailed description of the job."
            . "\n- applicationDeadline: The closing date for applications, if specified."
            . "\n\nThe output should be a JSON object under the key '{$outputKey}'."
            . "\n\nINPUT STARTS HERE\n\n$input\n\nOUTPUT IN JSON:\n";
    }

    public function expectedOutputKey(): string
    {
        return 'extractedData';
    }
}

Note: Adding an instruction on which $outputKey key to nest the data under is recommended, as the JSON Mode response from OpenAI tends to put everything under a root key. Overriding the expectedOutputKey() method tells the base Extractor class which key to pull the data from.
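The idea behind the output key can be sketched in plain PHP: decode the model's JSON and, if the payload sits under the expected root key, return only that part. `unwrapOutput` is a hypothetical helper written for this illustration. The README does not show how the base Extractor class implements this, so treat the fallback behaviour here as an assumption.

```php
<?php

/**
 * Hypothetical helper (not part of the package): pulls the extracted data
 * out from under a root key in a JSON Mode response.
 */
function unwrapOutput(string $json, string $outputKey): array
{
    // Decode to an associative array; malformed JSON throws a JsonException.
    $decoded = json_decode($json, true, 512, JSON_THROW_ON_ERROR);

    if (!is_array($decoded)) {
        // A bare scalar is not a usable result for field extraction.
        return [];
    }

    // Prefer the data nested under the expected key; otherwise assume the
    // model returned the fields at the top level (an assumption of this sketch).
    if (isset($decoded[$outputKey]) && is_array($decoded[$outputKey])) {
        return $decoded[$outputKey];
    }

    return $decoded;
}

$response = '{"extractedData": {"jobTitle": "Backend Developer", "jobType": "Full-time"}}';

print_r(unwrapOutput($response, 'extractedData'));
// jobTitle => Backend Developer, jobType => Full-time
```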

After defining your custom extractor, register it with the main Extractor class using the extend method:

use App\Extractors\JobPostingExtractor;
use HelgeSverre\Extractor\Extractor;

Extractor::extend("job-posting", fn() => new JobPostingExtractor());

Once registered, you can use your custom extractor just like the built-in ones. Here's an example of how to use the JobPostingExtractor:

use HelgeSverre\Extractor\Facades\Text;
use HelgeSverre\Extractor\Extractor;

$jobPostingContent = Text::web("https://www.finn.no/job/fulltime/ad.html?finnkode=329443482");

$extractedData = Extractor::extract('job-posting', $jobPostingContent);

// Or you can specify the class-string instead
// ex: Extractor::extract(JobPostingExtractor::class, $jobPostingContent);

// $extractedData now contains structured information from the job posting

With the JobPostingExtractor, you can efficiently parse and extract key information from job postings, structuring it in a way that's easy to manage and use within your Laravel application.

To ensure the integrity of the extracted data, you can add validation rules to your Job Posting Extractor. This is done by using the HasValidation trait and defining validation rules in the rules method:

<?php

namespace App\Extractors;

use HelgeSverre\Extractor\Extraction\Concerns\HasValidation;
use HelgeSverre\Extractor\Extraction\Extractor;

class JobPostingExtractor extends Extractor
{
    use HasValidation;

    public function rules(): array
    {
        return [
            'jobTitle' => ['required', 'string'],
            'companyName' => ['required', 'string'],
            'location' => ['required', 'string'],
            'jobType' => ['required', 'string'],
            'salary' => ['required', 'numeric'],
            'description' => ['required', 'string'],
            'applicationDeadline' => ['required', 'date']
        ];
    }
}

This will ensure that each key field in the job posting data meets the specified criteria, enhancing the reliability of your data extraction.

Extractor can integrate with spatie/laravel-data to cast the extracted data into a Data Transfer Object (DTO) of your choosing. To do this, add the HasDto trait to your extractor and specify the DTO class in the dataClass method:

<?php

namespace App\Extractors;

use DateTime;
use App\Extractors\JobPostingDto;
use HelgeSverre\Extractor\Extraction\Concerns\HasDto;
use HelgeSverre\Extractor\Extraction\Extractor;
use Spatie\LaravelData\Data;

class JobPostingDto extends Data
{
    public function __construct(
        public string $jobTitle,
        public string $companyName,
        public string $location,
        public string $jobType,
        public int|float $salary,
        public string $description,
        public DateTime $applicationDeadline
    ) {
    }
}

class JobPostingExtractor extends Extractor
{
    use HasDto;

    public function dataClass(): string
    {
        return JobPostingDto::class;
    }

    public function isCollection(): bool
    {
        return false;
    }
}

To use AWS Textract for extracting text from large images and multi-page PDFs, the package needs to upload the file to S3 and pass the S3 object location along to the Textract service.

So you need to configure your AWS credentials in the config/extractor.php file as follows:

TEXTRACT_KEY="your-aws-access-key"
TEXTRACT_SECRET="your-aws-security"
TEXTRACT_REGION="your-textract-region"

# Can be omitted
TEXTRACT_VERSION="2018-06-27"

You also need to configure a separate Textract disk where the files will be stored. Open your config/filesystems.php configuration file and add the following:

'textract' => [
    'driver' => 's3',
    'key' => env('TEXTRACT_KEY'),
    'secret' => env('TEXTRACT_SECRET'),
    'region' => env('TEXTRACT_REGION'),
    'bucket' => env('TEXTRACT_BUCKET'),
],

Ensure the textract_disk setting in config/extractor.php is the same as your disk name in the filesystems.php config. You can change it with the .env value TEXTRACT_DISK.

return [
    "textract_disk" => env("TEXTRACT_DISK")
];

.env

TEXTRACT_DISK="uploads"

You can configure a lifecycle rule on your S3 bucket to delete the files after a certain amount of time, see the AWS docs for more info:

https://repost.aws/knowledge-center/s3-empty-bucket-lifecycle-rule

By default, the package will NOT delete the files that have been uploaded to the Textract S3 bucket. If you want to delete these files, you can implement this using the TextractUsingS3Upload::cleanupFileUsing(Closure) hook.

// Delete the file from the S3 bucket
TextractUsingS3Upload::cleanupFileUsing(function (string $filePath) {
    Storage::disk('textract')->delete($filePath);
});

Note

Textract is not available in all regions:

Q: In which AWS regions is Amazon Textract available? Amazon Textract is currently available in the US East (Northern Virginia), US East (Ohio), US West (Oregon), US West (N. California), AWS GovCloud (US-West), AWS GovCloud (US-East), Canada (Central), EU (Ireland), EU (London), EU (Frankfurt), EU (Paris), Asia Pacific (Singapore), Asia Pacific (Sydney), Asia Pacific (Seoul), and Asia Pacific (Mumbai) Regions.

See: https://aws.amazon.com/textract/faqs/

$input (TextContent|string)

The input text or data that needs to be processed. It accepts either a TextContent object or a string.

$model (Model)

This parameter specifies the OpenAI model used for the extraction process.

It accepts a string value. Different models have different speed/accuracy characteristics and use cases. For convenience, most of the accepted models are provided as constants on the Engine class.

Available Models:

| Model Identifier | Model | Note |
|---|---|---|
| Engine::GPT_4_1106_PREVIEW | 'gpt-4-1106-preview' | GPT-4 Turbo, featuring improved instruction following, JSON mode, reproducible outputs, parallel function calling. Maximum 4,096 output tokens. Preview model, not yet for production traffic. |
| Engine::GPT_3_TURBO_1106 | 'gpt-3.5-turbo-1106' | Updated GPT-3.5 Turbo, with improvements similar to GPT-4 Turbo. Returns up to 4,096 output tokens. |
| Engine::GPT_4 | 'gpt-4' | Large multimodal model, capable of solving complex problems with greater accuracy. Suited for both chat and traditional completions tasks. |
| Engine::GPT4_32K | 'gpt-4-32k' | Extended version of GPT-4 with a larger context window of 32,768 tokens. |
| Engine::GPT_3_TURBO_INSTRUCT | 'gpt-3.5-turbo-instruct' | Similar to text-davinci-003, optimized for legacy Completions endpoint, not for Chat Completions. |
| Engine::GPT_3_TURBO_16K | 'gpt-3.5-turbo-16k' | Extended version of GPT-3.5 Turbo, supporting a larger context window of 16,385 tokens. |
| Engine::GPT_3_TURBO | 'gpt-3.5-turbo' | Optimized for chat using the Chat Completions API, suitable for traditional completion tasks. |
| Engine::TEXT_DAVINCI_003 | 'text-davinci-003' | Legacy model, better quality and consistency for language tasks. To be deprecated on Jan 4, 2024. |
| Engine::TEXT_DAVINCI_002 | 'text-davinci-002' | Similar to text-davinci-003 but trained with supervised fine-tuning. To be deprecated on Jan 4, 2024. |

$maxTokens (int)

The maximum number of tokens that the model will process.

The default value is 2000, and adjusting this value may be necessary for very long text. A value of 2000 is usually sufficient.

$temperature (float)

Controls the randomness/creativity of the model's output.

A higher value (e.g., 0.8) makes the output more random, which is usually not desired in this context. A recommended value is 0.1 or 0.2; anything over 0.5 tends to be less useful. The default is 0.1.

This package is licensed under the MIT License. For more details, refer to the License File.


Alternative AI tools for extractor

Similar Open Source Tools

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

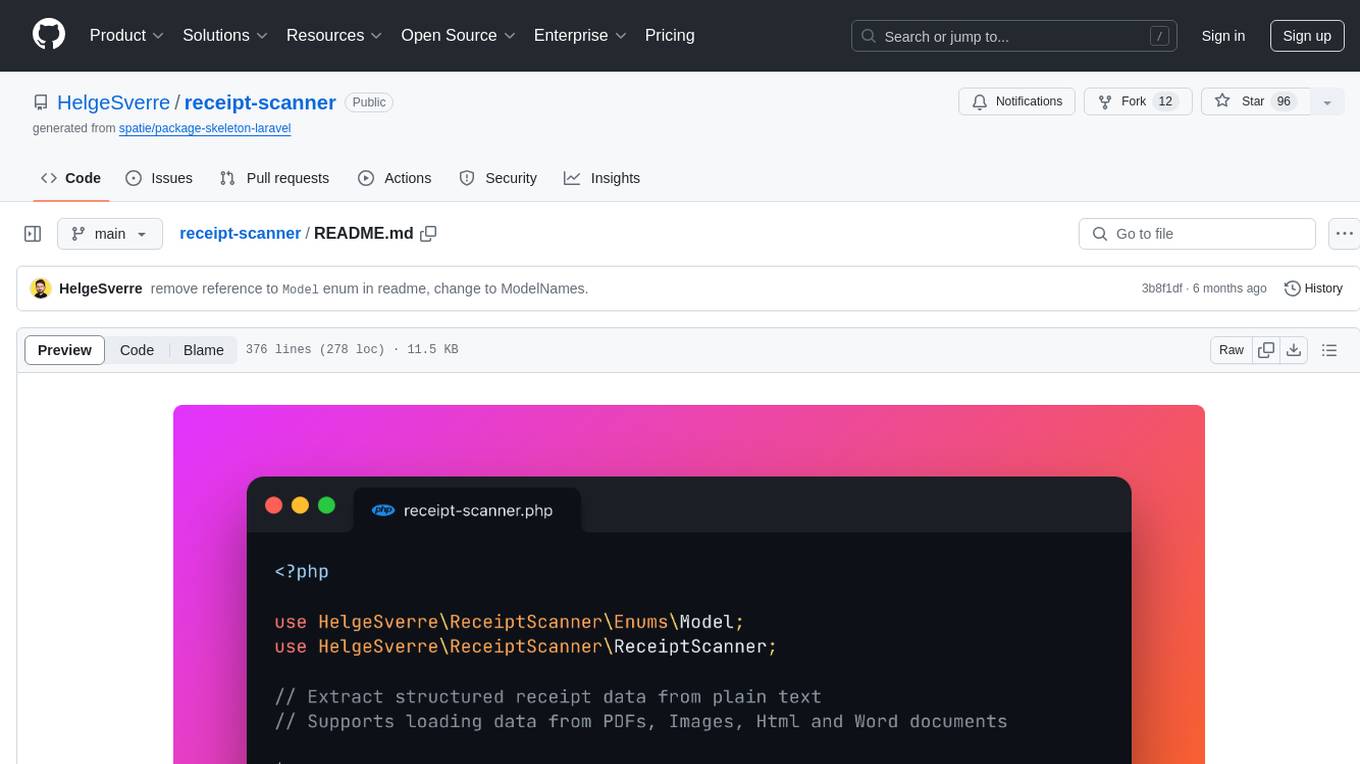

receipt-scanner

The receipt-scanner repository is an AI-Powered Receipt and Invoice Scanner for Laravel that allows users to easily extract structured receipt data from images, PDFs, and emails within their Laravel application using OpenAI. It provides a light wrapper around OpenAI Chat and Completion endpoints, supports various input formats, and integrates with Textract for OCR functionality. Users can install the package via composer, publish configuration files, and use it to extract data from plain text, PDFs, images, Word documents, and web content. The scanned receipt data is parsed into a DTO structure with main classes like Receipt, Merchant, and LineItem.

monacopilot

Monacopilot is a powerful and customizable AI auto-completion plugin for the Monaco Editor. It supports multiple AI providers such as Anthropic, OpenAI, Groq, and Google, providing real-time code completions with an efficient caching system. The plugin offers context-aware suggestions, customizable completion behavior, and framework agnostic features. Users can also customize the model support and trigger completions manually. Monacopilot is designed to enhance coding productivity by providing accurate and contextually appropriate completions in daily spoken language.

pg_vectorize

pg_vectorize is a Postgres extension that automates text to embeddings transformation, enabling vector search and LLM applications with minimal function calls. It integrates with popular LLMs, provides workflows for vector search and RAG, and automates Postgres triggers for updating embeddings. The tool is part of the VectorDB Stack on Tembo Cloud, offering high-level APIs for easy initialization and search.

magentic

Easily integrate Large Language Models into your Python code. Simply use the `@prompt` and `@chatprompt` decorators to create functions that return structured output from the LLM. Mix LLM queries and function calling with regular Python code to create complex logic.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

llm-client

LLMClient is a JavaScript/TypeScript library that simplifies working with large language models (LLMs) by providing an easy-to-use interface for building and composing efficient prompts using prompt signatures. These signatures enable the automatic generation of typed prompts, allowing developers to leverage advanced capabilities like reasoning, function calling, RAG, ReAcT, and Chain of Thought. The library supports various LLMs and vector databases, making it a versatile tool for a wide range of applications.

nano-graphrag

nano-GraphRAG is a simple, easy-to-hack implementation of GraphRAG that provides a smaller, faster, and cleaner version of the official implementation. It is about 800 lines of code, small yet scalable, asynchronous, and fully typed. The tool supports incremental insert, async methods, and various parameters for customization. Users can replace storage components and LLM functions as needed. It also allows for embedding function replacement and comes with pre-defined prompts for entity extraction and community reports. However, some features like covariates and global search implementation differ from the original GraphRAG. Future versions aim to address issues related to data source ID, community description truncation, and add new components.

playword

PlayWord is a tool designed to supercharge web test automation experience with AI. It provides core features such as enabling browser operations and validations using natural language inputs, as well as monitoring interface to record and dry-run test steps. PlayWord supports multiple AI services including Anthropic, Google, and OpenAI, allowing users to select the appropriate provider based on their requirements. The tool also offers features like assertion handling, frame handling, custom variables, test recordings, and an Observer module to track user interactions on web pages. With PlayWord, users can interact with web pages using natural language commands, reducing the need to worry about element locators and providing AI-powered adaptation to UI changes.

auto-playwright

Auto Playwright is a tool that allows users to run Playwright tests using AI. It eliminates the need for selectors by determining actions at runtime based on plain-text instructions. Users can automate complex scenarios, write tests concurrently with or before functionality development, and benefit from rapid test creation. The tool supports various Playwright actions and offers additional options for debugging and customization. It uses HTML sanitization to reduce costs and improve text quality when interacting with the OpenAI API.

llm-chain

LLM Chain is a PHP library for building LLM-based features and applications. It provides abstractions for Language Models and Embeddings Models from platforms like OpenAI, Azure, Google, Replicate, and others. The core feature is to interact with language models via messages, supporting different message types and content. LLM Chain also supports tool calling, document embedding, vector stores, similarity search, structured output, response streaming, image processing, audio processing, embeddings, parallel platform calls, and input/output processing. Contributions are welcome, and the repository contains fixture licenses for testing multi-modal features.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output with respect to defined Context-Free Grammar (CFG) rules. It supports general-purpose programming languages like Python, Go, SQL, JSON, and more, allowing users to define custom grammars using EBNF syntax. The tool compares favorably to other constrained decoders and offers features like fast grammar-guided generation, compatibility with HuggingFace Language Models, and the ability to work with various decoding strategies.

laravel-slower

Laravel Slower is a powerful package designed for Laravel developers to optimize the performance of their applications by identifying slow database queries and providing AI-driven suggestions for optimal indexing strategies and performance improvements. It offers actionable insights for debugging and monitoring database interactions, enhancing efficiency and scalability.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output based on a Context-Free Grammar (CFG). It supports various programming languages like Python, Go, SQL, Math, JSON, and more. Users can define custom grammars using EBNF syntax. SynCode offers fast generation, seamless integration with HuggingFace Language Models, and the ability to sample with different decoding strategies.

LarAgent

LarAgent is a framework designed to simplify the creation and management of AI agents within Laravel projects. It offers an Eloquent-like syntax for creating and managing AI agents, Laravel-style artisan commands, flexible agent configuration, structured output handling, image input support, and extensibility. LarAgent supports multiple chat history storage options, custom tool creation, event system for agent interactions, multiple provider support, and can be used both in Laravel and standalone environments. The framework is constantly evolving to enhance developer experience, improve AI capabilities, enhance security and storage features, and enable advanced integrations like provider fallback system, Laravel Actions integration, and voice chat support.

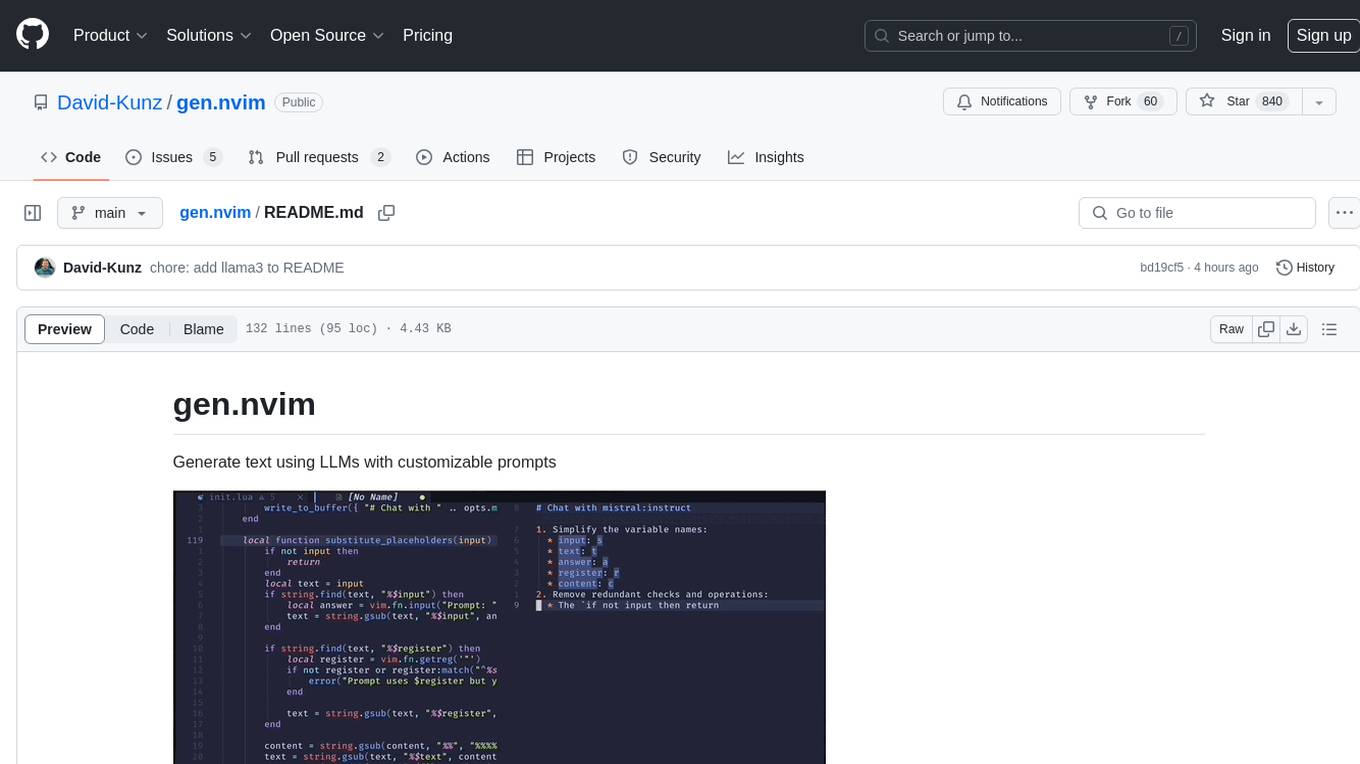

gen.nvim

gen.nvim is a tool that allows users to generate text using Language Models (LLMs) with customizable prompts. It requires Ollama with models like `llama3`, `mistral`, or `zephyr`, along with Curl for installation. Users can use the `Gen` command to generate text based on predefined or custom prompts. The tool provides key maps for easy invocation and allows for follow-up questions during conversations. Additionally, users can select a model from a list of installed models and customize prompts as needed.

For similar tasks


NeMo-Guardrails

NeMo Guardrails is an open-source toolkit for easily adding _programmable guardrails_ to LLM-based conversational applications. Guardrails (or "rails" for short) are specific ways of controlling the output of a large language model, such as not talking about politics, responding in a particular way to specific user requests, following a predefined dialog path, using a particular language style, extracting structured data, and more.

kor

Kor is a prototype tool designed to help users extract structured data from text using Language Models (LLMs). It generates prompts, sends them to specified LLMs, and parses the output. The tool works with the parsing approach and is integrated with the LangChain framework. Kor is compatible with pydantic v2 and v1, and schema is typed checked using pydantic. It is primarily used for extracting information from text based on provided reference examples and schema documentation. Kor is designed to work with all good-enough LLMs regardless of their support for function/tool calling or JSON modes.

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

stagehand

Stagehand is an AI web browsing framework that simplifies and extends web automation using three simple APIs: act, extract, and observe. It aims to provide a lightweight, configurable framework without complex abstractions, allowing users to automate web tasks reliably. The tool generates Playwright code based on atomic instructions provided by the user, enabling natural language-driven web automation. Stagehand is open source, maintained by the Browserbase team, and supports different models and model providers for flexibility in automation tasks.

azure-ai-document-processing-samples

This repository contains a collection of code samples that demonstrate how to use various Azure AI capabilities to process documents. The samples help engineering teams establish techniques with Azure AI Foundry, Azure OpenAI, Azure AI Document Intelligence, and Azure AI Language services to build solutions for extracting structured data, classifying, and analyzing documents. The techniques simplify custom model training, improve reliability in document processing, and simplify document processing workflows by providing reusable code and patterns that can be easily modified and evaluated for most use cases.

firecrawl-mcp-server

Firecrawl MCP Server is a Model Context Protocol (MCP) server implementation that integrates with Firecrawl for web scraping capabilities. It supports features like scrape, crawl, search, extract, and batch scrape. It provides web scraping with JS rendering, URL discovery, web search with content extraction, automatic retries with exponential backoff, credit usage monitoring, comprehensive logging system, support for cloud and self-hosted FireCrawl instances, mobile/desktop viewport support, and smart content filtering with tag inclusion/exclusion. The server includes configurable parameters for retry behavior and credit usage monitoring, rate limiting and batch processing capabilities, and tools for scraping, batch scraping, checking batch status, searching, crawling, and extracting structured information from web pages.

For similar jobs

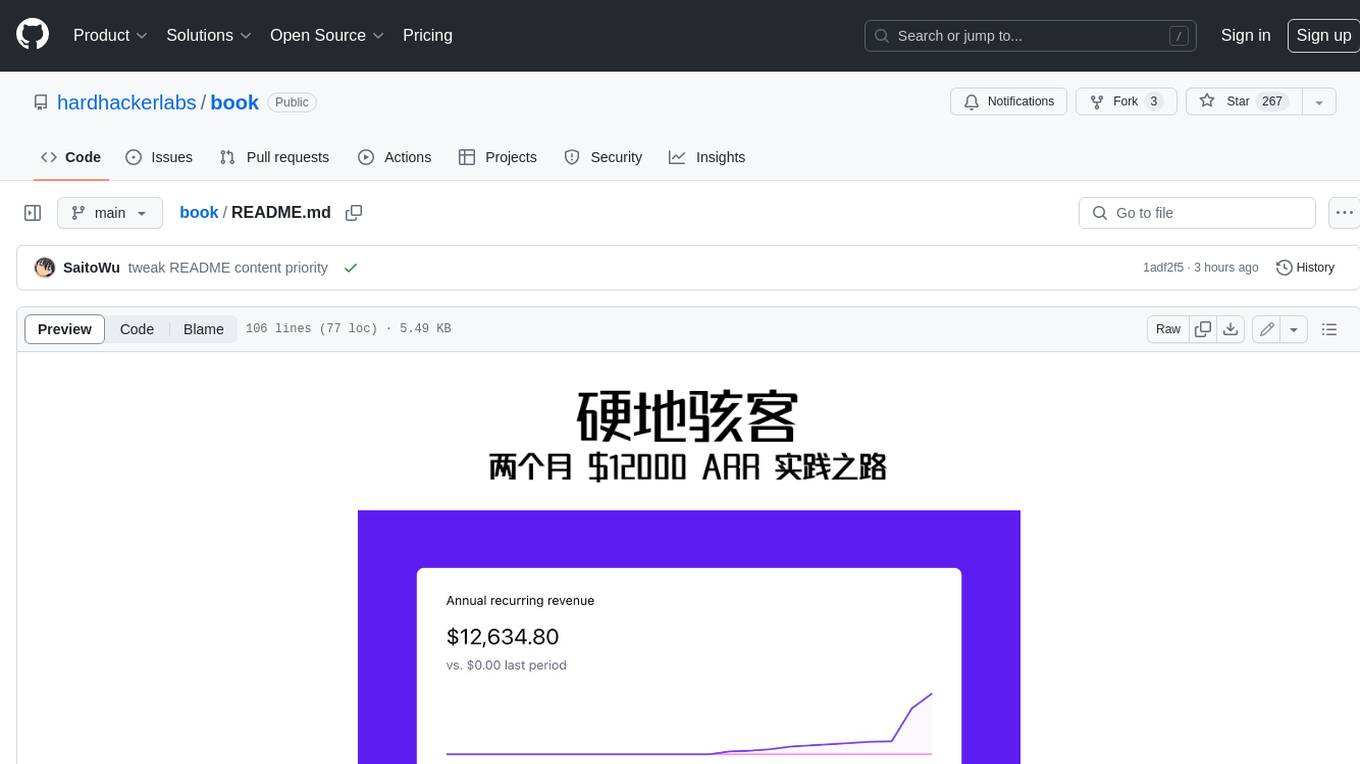

book

Podwise is an AI knowledge management app designed specifically for podcast listeners. With the Podwise platform, you only need to follow your favorite podcasts, such as "Hardcore Hackers". When a program is released, Podwise will use AI to transcribe, extract, summarize, and analyze the podcast content, helping you to break down the hard-core podcast knowledge. At the same time, it is connected to platforms such as Notion, Obsidian, Logseq, and Readwise, embedded in your knowledge management workflow, and integrated with content from other channels including news, newsletters, and blogs, helping you to improve your second brain 🧠.


Scrapegraph-ai

ScrapeGraphAI is a Python library that uses Large Language Models (LLMs) and direct graph logic to create web scraping pipelines for websites, documents, and XML files. It allows users to extract specific information from web pages by providing a prompt describing the desired data. ScrapeGraphAI supports various LLMs, including Ollama, OpenAI, Gemini, and Docker, enabling users to choose the most suitable model for their needs. The library provides a user-friendly interface through its `SmartScraper` class, which simplifies the process of building and executing scraping pipelines. ScrapeGraphAI is open-source and available on GitHub, with extensive documentation and examples to guide users. It is particularly useful for researchers and data scientists who need to extract structured data from web pages for analysis and exploration.

databerry

Chaindesk is a no-code platform that lets users set up a semantic search system over their personal data without technical knowledge. It supports loading data from raw text, web pages, and files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), with support for web sites, Notion, and Airtable planned. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk uses a vector database (Qdrant) and OpenAI's text-embedding-ada-002 for embeddings, with a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, and the design is inspired by the ChatGPT Retrieval Plugin.
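The fixed chunk size mentioned above can be illustrated with a minimal sketch. This is not Chaindesk's code: the function name `chunk_tokens` is hypothetical, and whitespace-separated words stand in for real model tokens, which an actual tokenizer would count differently.

```python
def chunk_tokens(text: str, chunk_size: int = 1024) -> list[str]:
    """Split text into chunks of at most `chunk_size` tokens.

    Assumption: a "token" here is a whitespace-separated word, used
    only as a stand-in for a real tokenizer.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    tokens = text.split()
    # Step through the token list in strides of chunk_size.
    return [
        " ".join(tokens[i:i + chunk_size])
        for i in range(0, len(tokens), chunk_size)
    ]


if __name__ == "__main__":
    document = "word " * 2500
    chunks = chunk_tokens(document)
    print(len(chunks), [len(c.split()) for c in chunks])
```

Each chunk would then be embedded and stored separately, so that a query can be matched against passages rather than whole documents.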

auto-news

Auto-News is an automatic news aggregator that uses Large Language Models (LLMs) to pull information from sources such as Tweets, RSS feeds, YouTube videos, web articles, Reddit, and journal notes. It aims to help users read and filter content efficiently based on personal interests, providing a unified reading experience and organizing information effectively. It features feed aggregation with summarization, transcript generation for videos and articles, noise reduction, task organization, and deep-dive topic exploration. The tool supports multiple LLM backends, offers weekly top-k aggregations, and can be deployed on Linux/macOS using docker-compose or Kubernetes.

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Hugging Face. It lets users search through large texts such as books, ships with pre-indexed examples, allows search parameters to be customized, and preserves data privacy by keeping input text in the browser. The tool can be used for basic search tasks and for analyzing texts for recurring themes, and it has potential integrations with applications such as wikis, chat apps, and personal history search. It also provides options for building browser extensions and lists ideas for future enhancements and integrations.
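The ranking step behind this kind of search can be sketched in a few lines. The example below is an illustration in Python rather than SemanticFinder's own browser code: the function names are hypothetical, and the small hand-written vectors stand in for embeddings that a real model would produce.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Treat a zero vector as having no similarity.
    return dot / (norm_a * norm_b)


def rank_chunks(query_vec: list[float],
                chunk_vecs: list[list[float]]) -> list[tuple[int, float]]:
    """Return (chunk index, score) pairs, most similar chunk first."""
    scored = [(i, cosine_similarity(query_vec, v))
              for i, v in enumerate(chunk_vecs)]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Toy 2-dimensional "embeddings", for illustration only.
    query = [1.0, 0.0]
    chunks = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
    for index, score in rank_chunks(query, chunks):
        print(index, round(score, 3))
```

Because the score depends only on the angle between vectors, chunks of different lengths can be compared without normalizing their embeddings first.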

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, making it easier to build information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, and Sci-Hub papers via DOI or PMID. The tool produces both uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.
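Automatic source type detection of the kind described above could look roughly like the following sketch. The rules, the function name `detect_source_type`, and the returned labels are all assumptions made for illustration and do not reflect 1filellm's actual logic.

```python
import re
from urllib.parse import urlparse


def detect_source_type(source: str) -> str:
    """Classify an input string using simple, hypothetical rules."""
    s = source.strip()
    # A bare DOI such as "10.1000/xyz123".
    if re.fullmatch(r"10\.\d{4,9}/\S+", s):
        return "doi"
    # A bare PMID is a short run of digits.
    if re.fullmatch(r"\d{1,8}", s):
        return "pmid"
    parsed = urlparse(s)
    if parsed.scheme in ("http", "https"):
        host = parsed.netloc.lower()
        if host.startswith("www."):
            host = host[4:]
        if host == "github.com":
            if "/pull/" in parsed.path:
                return "github_pull_request"
            if "/issues/" in parsed.path:
                return "github_issue"
            return "github_repo"
        if host == "arxiv.org":
            return "arxiv"
        if host in ("youtube.com", "youtu.be"):
            return "youtube"
        return "web_page"
    # Anything else is treated as a local file or directory path.
    return "local_path"


if __name__ == "__main__":
    for example in ("https://github.com/user/repo/pull/12",
                    "https://arxiv.org/abs/2401.00001",
                    "10.1000/xyz123",
                    "./notes"):
        print(example, "->", detect_source_type(example))
```

Checking the most specific patterns first (bare identifiers, then known hosts) keeps the generic web page and local path cases as fallbacks.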

Agently-Daily-News-Collector

Agently Daily News Collector is an open-source project showcasing a workflow powered by the Agently AI application development framework. Users generate a news collection on a topic by entering the field or topic of interest. The AI agents then automatically perform the tasks needed to produce a high-quality news collection, which is saved as a Markdown file. To use it, install Python and the required packages, edit the settings in the YAML file, enter a topic idea, and wait for the collection to be generated. The process involves tasks such as outlining, searching, summarizing, and preparing column data. The project's dependencies include the Agently AI Development Framework, duckduckgo-search, BeautifulSoup4, and PyYAML.