SemanticFinder

SemanticFinder - frontend-only live semantic search with transformers.js

Stars: 204

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

README:

Frontend-only live semantic search and chat-with-your-documents built on transformers.js. Supports Wasm and WebGPU!

🔥 For best performance try the WebGPU Version here! 🔥

Semantic search right in your browser! Calculates the embeddings and cosine similarity client-side without server-side inferencing, using transformers.js and latest SOTA embedding models from Huggingface.
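
To make the core operation concrete, here is a minimal sketch of cosine similarity between two embedding vectors in plain JavaScript. The function name `cosineSimilarity` is an assumption for illustration and is not taken from the SemanticFinder source.

```javascript
// Cosine similarity between two equal-length numeric vectors.
// Returns a value in [-1, 1], or 0 if either vector has zero norm.
// Illustrative helper, not SemanticFinder's actual implementation.
function cosineSimilarity(a, b) {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same length");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

console.log(cosineSimilarity([1, 0], [1, 0])); // same direction
console.log(cosineSimilarity([1, 0], [0, 1])); // orthogonal vectors
```

If the embeddings are already normalized to unit length, the division can be skipped and the dot product alone gives the same ranking.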

All transformers.js-compatible feature-extraction models are supported. Here is a sortable list you can go through: daily updated list. Download the compatible models table as xlsx, csv, json, parquet, or html here: https://github.com/do-me/trending-huggingface-models/. Note that the wasm backend in transformers.js supports all mentioned models. If you want the best performance, make sure to use a WebGPU-compatible model.

You can use super fast pre-indexed examples for really large books like the Bible or Les Misérables with hundreds of pages and search the content in less than 2 seconds 🚀. Try one of these and convince yourself:

| filesize | textTitle | textAuthor | textYear | textLanguage | URL | modelName | quantized | splitParam | splitType | characters | chunks | wordsToAvoidAll | wordsToCheckAll | wordsToAvoidAny | wordsToCheckAny | exportDecimals | lines | textNotes | textSourceURL | filename |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 4.78 | Das Kapital | Karl Marx | 1867 | de | https://do-me.github.io/SemanticFinder/?hf=Das_Kapital_c1a84fba | Xenova/multilingual-e5-small | True | 80 | Words | 2003807 | 3164 | | | | | 5 | 28673 | | https://ia601605.us.archive.org/13/items/KarlMarxDasKapitalpdf/KAPITAL1.pdf | Das_Kapital_c1a84fba.json.gz |
| 2.58 | Divina Commedia | Dante | 1321 | it | https://do-me.github.io/SemanticFinder/?hf=Divina_Commedia_d5a0fa67 | Xenova/multilingual-e5-base | True | 50 | Words | 383782 | 1179 | | | | | 5 | 6225 | | http://www.letteratura-italiana.com/pdf/divina%20commedia/08%20Inferno%20in%20versione%20italiana.pdf | Divina_Commedia_d5a0fa67.json.gz |
| 11.92 | Don Quijote | Miguel de Cervantes | 1605 | es | https://do-me.github.io/SemanticFinder/?hf=Don_Quijote_14a0b44 | Xenova/multilingual-e5-base | True | 25 | Words | 1047150 | 7186 | | | | | 4 | 12005 | | https://parnaseo.uv.es/lemir/revista/revista19/textos/quijote_1.pdf | Don_Quijote_14a0b44.json.gz |
| 0.06 | Hansel and Gretel | Brothers Grimm | 1812 | en | https://do-me.github.io/SemanticFinder/?hf=Hansel_and_Gretel_4de079eb | TaylorAI/gte-tiny | True | 100 | Chars | 5304 | 55 | | | | | 5 | 9 | | https://www.grimmstories.com/en/grimm_fairy-tales/hansel_and_gretel | Hansel_and_Gretel_4de079eb.json.gz |
| 1.74 | IPCC Report 2023 | IPCC | 2023 | en | https://do-me.github.io/SemanticFinder/?hf=IPCC_Report_2023_2b260928 | Supabase/bge-small-en | True | 200 | Chars | 307811 | 1566 | | | | | 5 | 3230 | state of knowledge of climate change | https://report.ipcc.ch/ar6syr/pdf/IPCC_AR6_SYR_LongerReport.pdf | IPCC_Report_2023_2b260928.json.gz |
| 25.56 | King James Bible | | None | en | https://do-me.github.io/SemanticFinder/?hf=King_James_Bible_24f6dc4c | TaylorAI/gte-tiny | True | 200 | Chars | 4556163 | 23056 | | | | | 5 | 80496 | | https://www.holybooks.com/wp-content/uploads/2010/05/The-Holy-Bible-King-James-Version.pdf | King_James_Bible_24f6dc4c.json.gz |
| 11.45 | King James Bible | | None | en | https://do-me.github.io/SemanticFinder/?hf=King_James_Bible_6434a78d | TaylorAI/gte-tiny | True | 200 | Chars | 4556163 | 23056 | | | | | 2 | 80496 | | https://www.holybooks.com/wp-content/uploads/2010/05/The-Holy-Bible-King-James-Version.pdf | King_James_Bible_6434a78d.json.gz |
| 39.32 | Les Misérables | Victor Hugo | 1862 | fr | https://do-me.github.io/SemanticFinder/?hf=Les_Misérables_2239df51 | Xenova/multilingual-e5-base | True | 25 | Words | 3236941 | 19463 | | | | | 5 | 74491 | All five acts included | https://beq.ebooksgratuits.com/vents/Hugo-miserables-1.pdf | Les_Misérables_2239df51.json.gz |
| 0.46 | REGULATION (EU) 2023/138 | European Commission | 2022 | en | https://do-me.github.io/SemanticFinder/?hf=REGULATION_(EU)_2023_138_c00e7ff6 | Supabase/bge-small-en | True | 25 | Words | 76809 | 424 | | | | | 5 | 1323 | | https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32023R0138&qid=1704492501351 | REGULATION_(EU)_2023_138_c00e7ff6.json.gz |
| 0.07 | Universal Declaration of Human Rights | United Nations | 1948 | en | https://do-me.github.io/SemanticFinder/?hf=Universal_Declaration_of_Human_Rights_0a7da79a | TaylorAI/gte-tiny | True | \nArticle | Regex | 8623 | 63 | | | | | 5 | 109 | 30 articles | https://www.un.org/en/about-us/universal-declaration-of-human-rights | Universal_Declaration_of_Human_Rights_0a7da79a.json.gz |

You can create indices yourself with one or two clicks and save them. If it's something private, keep it for yourself; if it's a classic book or something you think others might be interested in, consider a PR on the Huggingface Repo or get in touch with us. Book requests are happily met if you provide us with a good source link that we can copy and paste from. Simply open an issue here with [Book Request] or similar, or contact us.

It goes without saying that no discriminatory content will be tolerated.

Clone the repository and install dependencies with

npm install

Then run with

npm run start

If you want to build instead, run

npm run build

Afterwards, you'll find the index.html, main.css and bundle.js in dist.

Download the Chrome extension from the Chrome Web Store and pin it. Right-click the extension icon for options:

- choose distiluse-base-multilingual-cased-v2 for multilingual usage (default is English-only)

- set a higher number for min characters to split by for larger texts

If you want to build the browser extension locally, clone the repo, cd into the extension directory, then run:

- npm install

- npm run build for a static build or

- npm run dev for the auto-refreshing development version

- go to Chrome extension settings with chrome://extensions

- select Load Unpacked and choose the build folder

- pin the extension in Chrome so you can access it easily. If it doesn't work for you, feel free to open an issue.

Tested on the entire text of Moby Dick with 660,000 characters (~13,000 lines or ~111,000 words). Initial embedding generation takes 1-2 minutes on my old i7-8550U CPU with a segment size of 1000 characters. Subsequent queries take only ~2 seconds! If you want to query larger texts or keep an entire library of books indexed, use a proper vector database instead.

You can customize everything!

- Input text & search term(s)

- Hybrid search (semantic search & full-text search)

- Segment length (the bigger the faster, the smaller the slower)

- Highlight colors (currently hard-coded)

- The number of highlights is based on the threshold value. The lower the threshold, the more results.

- Live updates

- Easy integration of other ML-models thanks to transformers.js

- Data privacy-friendly - your input text data is not sent to a server, it stays in your browser!
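
As a rough illustration of how a similarity threshold and a full-text filter could be combined into a hybrid search, consider the sketch below. The function `hybridFilter`, its option names, and the `{ segment, score }` result shape are all hypothetical and chosen for this example; SemanticFinder's own implementation may differ.

```javascript
// Hypothetical helper: keeps results whose semantic score reaches the
// threshold and whose text passes simple include/exclude word filters.
// Not SemanticFinder's actual code.
function hybridFilter(results, options = {}) {
  const { threshold = 0.5, mustContain = [], mustNotContain = [] } = options;
  // Case-insensitive substring check used by both word filters.
  const has = (text, word) => text.toLowerCase().includes(word.toLowerCase());
  return results
    .filter((r) => r.score >= threshold)
    .filter((r) => mustContain.every((w) => has(r.segment, w)))
    .filter((r) => !mustNotContain.some((w) => has(r.segment, w)))
    .sort((x, y) => y.score - x.score); // best match first
}
```

Lowering `threshold` lets more segments through, which matches the behaviour described in the list above: the lower the threshold, the more highlights.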

- Basic search through anything, like your personal notes (my initial motivation by the way, a huge notes.txt file I couldn't handle anymore)

- Remember poem analysis in school? Often you look for possible leitmotifs or recurring categories like food in Hänsel & Gretel

- One could package everything nicely and use it e.g. instead of JavaScript search engines such as Lunr.js (also being used in mkdocs-material).

- Integration in mkdocs (mkdocs-material), experimental:

  - when building the docs, slice all .md files into chunks (length defined in mkdocs.yaml). Chunks should be fairly large (>800 characters) for lower response time. It's also possible to build n indices, with first a coarse index (maybe per document/.md file if the model used supports the length) and then a refined one for the document chunks

  - build the index by calculating the embeddings for all docs/chunks

  - when a user queries the docs, a switch can toggle between (fast) standard full-text search (currently with lunr.js) and experimental semantic search

  - if the latter is toggled, the client loads the model (all-MiniLM-L6-v2 has ~30 MB)

  - like in SemanticFinder, the embedding is created client-side and the cosine similarity calculated

  - the high-scored results are returned just like with lunr.js, so the user shouldn't even notice a difference in the UI

- Electron- or browser-based apps could be augmented with semantic search, e.g. VS Code, Atom or mobile apps.

- Integration in personal wikis such as Obsidian, tiddlywiki etc. would save you the tedious tagging/keywords/categorisation work or could at least improve your structure further

- Search your own browser history (thanks @Snapdeus)

- Integration in chat apps

- Allow PDF-uploads (conversion from PDF to text)

- Integrate with Speech-to-Text whisper model from transformers.js to allow audio uploads.

- Thanks to CodeMirror one could even use syntax highlighting for programming languages such as Python, JavaScript etc.
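
The coarse-then-refined index idea from the mkdocs item above could look roughly like the following sketch. It assumes the per-document and per-chunk embeddings were precomputed at build time; the function `twoStageSearch` and the data shape (`id`, `embedding`, `chunks`) are hypothetical and only serve the illustration.

```javascript
// Cosine similarity of two equal-length vectors (0 if one has zero norm).
function cosine(a, b) {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return na && nb ? dot / Math.sqrt(na * nb) : 0;
}

// Hypothetical two-stage search: rank documents first, then only the
// chunks of the best documents. docs: [{ id, embedding, chunks: [{ text, embedding }] }]
function twoStageSearch(queryEmbedding, docs, topDocs = 2, topChunks = 3) {
  // Stage 1: coarse ranking with one embedding per document.
  const shortlist = docs
    .map((doc) => ({ doc, score: cosine(queryEmbedding, doc.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, topDocs);
  // Stage 2: refined ranking over the chunks of shortlisted documents.
  return shortlist
    .flatMap(({ doc }) =>
      doc.chunks.map((chunk) => ({
        docId: doc.id,
        text: chunk.text,
        score: cosine(queryEmbedding, chunk.embedding),
      }))
    )
    .sort((x, y) => y.score - x.score)
    .slice(0, topChunks);
}
```

The trade-off is that a relevant chunk inside a document with a weak overall score is never inspected, so `topDocs` should not be set too low.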

Transformers.js is doing all the heavy lifting of tokenizing the input and running the model. Without it, this demo would have been impossible.

Input

- Text, as much as your browser can handle! The demo uses a part of "Hänsel & Gretel" but it can handle hundreds of PDF pages

- A search term or phrase

- The number of characters the text should be segmented into

- A similarity threshold value. Results with a lower similarity score won't be displayed.
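
One possible way to segment a text by an approximate character count without cutting words is sketched below. The function `splitIntoSegments` is a hypothetical helper written for this example and is not the chunking function used in SemanticFinder. Note that it collapses all whitespace to single spaces.

```javascript
// Splits text into segments of roughly `targetLength` characters.
// A segment is closed as soon as it reaches the target length, but only
// at a word boundary, so segments can be slightly longer than requested.
// Whitespace runs (including line breaks) are collapsed to single spaces.
function splitIntoSegments(text, targetLength) {
  const words = text.split(/\s+/).filter(Boolean);
  const segments = [];
  let current = "";
  for (const word of words) {
    current = current ? current + " " + word : word;
    if (current.length >= targetLength) {
      segments.push(current);
      current = "";
    }
  }
  if (current) segments.push(current); // remaining words form the last segment
  return segments;
}

console.log(splitIntoSegments("Hansel and Gretel walked into the dark forest", 15));
```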

Output

- Three highlighted string segments, the darker the higher the similarity score.

Pipeline

- All scripts are loaded. The model is loaded once from HuggingFace and cached in the browser afterwards.

- A user inputs some text and a search term or phrase.

- Depending on the approximate length to consider (unit=characters), the text is split into segments. Words themselves are never split, which is why the length is only approximate.

- The search term embedding is created.

- For each segment of the text, the embedding is created.

- Meanwhile, the cosine similarity is calculated between every segment embedding and the search term embedding. It's written to a dictionary with the segment as key and the score as value.

- For every iteration, the progress bar and the highlighted sections are updated in real-time depending on the highest scores in the array.

- The embeddings are cached in the dictionary so that subsequent queries are quite fast. The calculation of the cosine similarity is fairly speedy in comparison to the embedding generation.

- The embeddings only need to be recalculated if the user changes the segment length.
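
The pipeline steps above can be sketched as follows. This is a simplified illustration under stated assumptions, not the project's code: `createSearcher` is a hypothetical name, and `embed` is an injected async function (text in, array of numbers out) standing in for the actual model call.

```javascript
// Sketch of the search loop with an embedding cache.
// `embed` is a placeholder for the model call: async (text) => number[].
function createSearcher(embed) {
  const cache = new Map(); // segment text -> embedding

  const cosine = (a, b) => {
    let dot = 0, na = 0, nb = 0;
    for (let i = 0; i < a.length; i++) {
      dot += a[i] * b[i];
      na += a[i] * a[i];
      nb += b[i] * b[i];
    }
    return na && nb ? dot / Math.sqrt(na * nb) : 0;
  };

  // Returns [segment, score] pairs at or above the threshold, best first.
  return async function search(query, segments, threshold = 0.5) {
    const queryEmbedding = await embed(query);
    const scores = new Map(); // segment text -> similarity score
    for (const segment of segments) {
      if (!cache.has(segment)) {
        // Slow step: runs only once per distinct segment.
        cache.set(segment, await embed(segment));
      }
      scores.set(segment, cosine(queryEmbedding, cache.get(segment)));
    }
    return [...scores.entries()]
      .filter(([, score]) => score >= threshold)
      .sort((x, y) => y[1] - x[1]);
  };
}
```

Because the cache is keyed by segment text, a new query over the same segments only embeds the query itself, while changing the segment length produces new segments and therefore new embeddings. With transformers.js, `embed` could wrap a feature-extraction pipeline; a progress bar and live highlighting would hook into the loop body.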

PRs welcome!

- [x] similarity score cutoff/threshold

- [x] add option for more highlights (e.g. all above certain score)

- [x] add stop button

- [x] MaterialUI for input fields or proper labels

- [x] create a demo without CDNs

- [x] separate one html properly in html, js, css

- [x] add npm installation

- [x] option for loading embeddings from file or generally allow sharing embeddings in some way

- [x] simplify chunking function so the original text can be loaded without issues

- [ ] improve the color range

- [ ] rewrite the cosine similarity function in Rust, port to WASM and load as a module for possible speedup (experimental)

- [ ] UI overhaul

- [ ] polish code

  - [x] jQuery/vanilla JS mixed

  - [ ] clean up functions

  - [ ] add more comments

- [ ] add possible use cases

- [ ] package as a standalone application (maybe with custom model choice; to be downloaded once from HF hub, then saved locally)

- [ ] possible integration as example in transformers.js homepage

Gource image created with:

gource -1280x720 --title "SemanticFinder" --seconds-per-day 0.03 --auto-skip-seconds 0.03 --bloom-intensity 0.5 --max-user-speed 500 --highlight-dirs --multi-sampling --highlight-colour 00FF00

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for SemanticFinder

Similar Open Source Tools

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

FFAIVideo

FFAIVideo is a lightweight node.js project that utilizes popular AI LLM to intelligently generate short videos. It supports multiple AI LLM models such as OpenAI, Moonshot, Azure, g4f, Google Gemini, etc. Users can input text to automatically synthesize exciting video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses Pexels website for video resources. Installation of FFmpeg is essential for smooth operation. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers with minimal dependencies and simple usage.

cambrian

Cambrian-1 is a fully open project focused on exploring multimodal Large Language Models (LLMs) with a vision-centric approach. It offers competitive performance across various benchmarks with models at different parameter levels. The project includes training configurations, model weights, instruction tuning data, and evaluation details. Users can interact with Cambrian-1 through a Gradio web interface for inference. The project is inspired by LLaVA and incorporates contributions from Vicuna, LLaMA, and Yi. Cambrian-1 is licensed under Apache 2.0 and utilizes datasets and checkpoints subject to their respective original licenses.

MathEval

MathEval is a benchmark designed for evaluating the mathematical capabilities of large models. It includes over 20 evaluation datasets covering various mathematical domains with more than 30,000 math problems. The goal is to assess the performance of large models across different difficulty levels and mathematical subfields. MathEval serves as a reliable reference for comparing mathematical abilities among large models and offers guidance on enhancing their mathematical capabilities in the future.

LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

willow-inference-server

Willow Inference Server (WIS) is a highly optimized language inference server implementation focused on enabling performant, cost-effective self-hosting of state-of-the-art models for speech and language tasks. It supports ASR and TTS tasks, runs on CUDA with low-end device support, and offers various transport options like REST, WebRTC, and Web Sockets. WIS is memory optimized, leverages CTranslate2 for Whisper support, and enables custom TTS voices. The server automatically detects available CUDA VRAM and optimizes functionality accordingly. Users can programmatically select Whisper models and parameters for each request to balance speed and quality.

RustySEO

RustySEO is a free, modern SEO/GEO toolkit designed to help users crawl and analyze websites and server logs without crawl limits. It is an all-in-one, cross-platform marketing toolkit for comprehensive SEO & GEO analysis, providing actionable insights into marketing and SEO strategies. The tool offers features such as shallow & deep crawl, technical diagnostics, on-page SEO analysis, dashboards, reporting, topic and keyword generators, AI chatbot, crawl history, image conversion and optimization, and more. RustySEO aims to be a robust, free alternative to costly commercial SEO tools, with integrations like Google PageSpeed Insights, Google Gemini, and more.

TableLLM

TableLLM is a large language model designed for efficient tabular data manipulation tasks in real office scenarios. It can generate code solutions or direct text answers for tasks like insert, delete, update, query, merge, and chart operations on tables embedded in spreadsheets or documents. The model has been fine-tuned based on CodeLlama-7B and 13B, offering two scales: TableLLM-7B and TableLLM-13B. Evaluation results show its performance on benchmarks like WikiSQL, Spider, and self-created table operation benchmark. Users can use TableLLM for code and text generation tasks on tabular data.

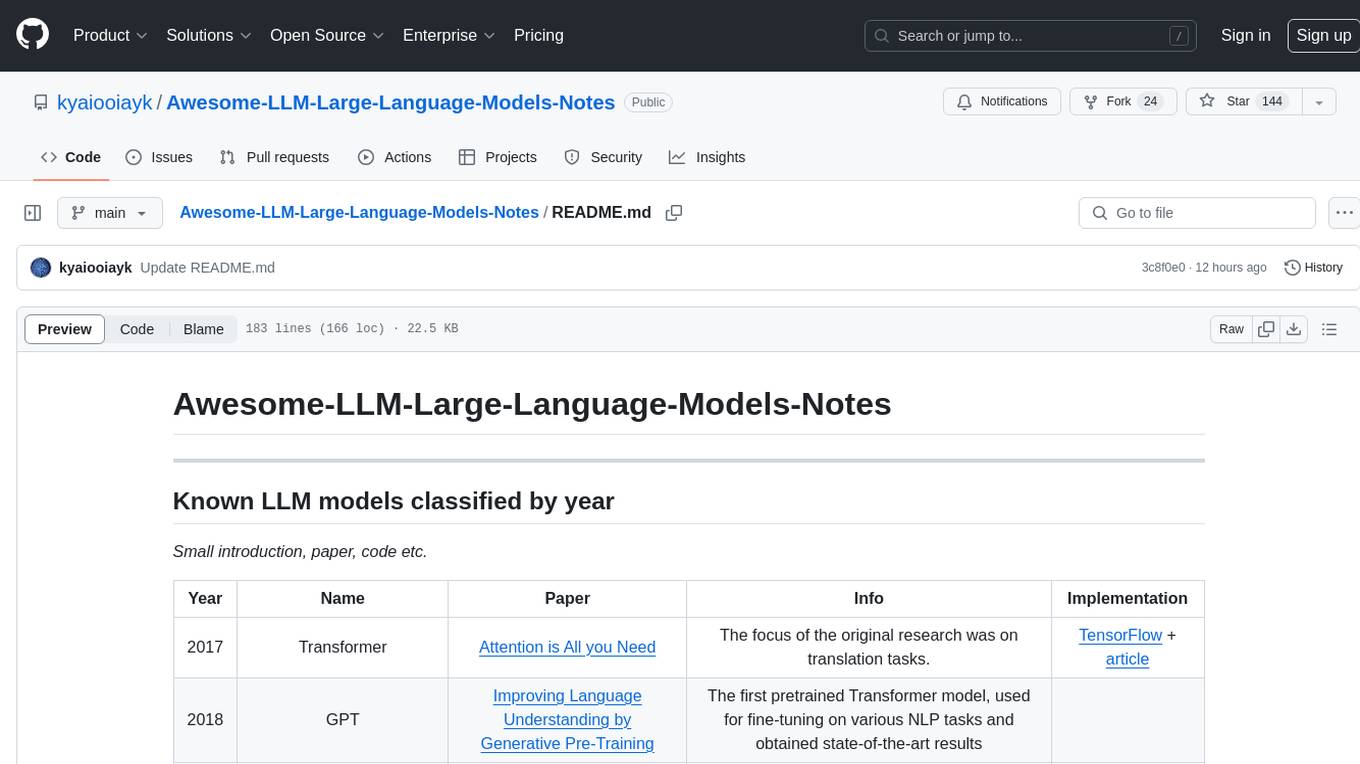

Awesome-LLM-Large-Language-Models-Notes

Awesome-LLM-Large-Language-Models-Notes is a repository that provides a comprehensive collection of information on various Large Language Models (LLMs) classified by year, size, and name. It includes details on known LLM models, their papers, implementations, and specific characteristics. The repository also covers LLM models classified by architecture, must-read papers, blog articles, tutorials, and implementations from scratch. It serves as a valuable resource for individuals interested in understanding and working with LLMs in the field of Natural Language Processing (NLP).

dl_model_infer

This project is a c++ version of the AI reasoning library that supports the reasoning of tensorrt models. It provides accelerated deployment cases of deep learning CV popular models and supports dynamic-batch image processing, inference, decode, and NMS. The project has been updated with various models and provides tutorials for model exports. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend reasoning classes, post-processing, pre-processing, and target detection and tracking. Speed tests have been conducted on various models, and onnx downloads are available for different models.

llm-datasets

LLM Datasets is a repository containing high-quality datasets, tools, and concepts for LLM fine-tuning. It provides datasets with characteristics like accuracy, diversity, and complexity to train large language models for various tasks. The repository includes datasets for general-purpose, math & logic, code, conversation & role-play, and agent & function calling domains. It also offers guidance on creating high-quality datasets through data deduplication, data quality assessment, data exploration, and data generation techniques.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

Awesome-AITools

This repo collects AI-related utilities. ## All Categories * All Categories * ChatGPT and other closed-source LLMs * AI Search engine * Open Source LLMs * GPT/LLMs Applications * LLM training platform * Applications that integrate multiple LLMs * AI Agent * Writing * Programming Development * Translation * AI Conversation or AI Voice Conversation * Image Creation * Speech Recognition * Text To Speech * Voice Processing * AI generated music or sound effects * Speech translation * Video Creation * Video Content Summary * OCR(Optical Character Recognition)

imodels

Python package for concise, transparent, and accurate predictive modeling. All sklearn-compatible and easy to use. _For interpretability in NLP, check out our new package:imodelsX _

LLaVA-MORE

LLaVA-MORE is a new family of Multimodal Language Models (MLLMs) that integrates recent language models with diverse visual backbones. The repository provides a unified training protocol for fair comparisons across all architectures and releases training code and scripts for distributed training. It aims to enhance Multimodal LLM performance and offers various models for different tasks. Users can explore different visual backbones like SigLIP and methods for managing image resolutions (S2) to improve the connection between images and language. The repository is a starting point for expanding the study of Multimodal LLMs and enhancing new features in the field.

ai-hands-on

A complete, hands-on guide to becoming an AI Engineer. This repository is designed to help you learn AI from first principles, build real neural networks, and understand modern LLM systems end-to-end. Progress through math, PyTorch, deep learning, transformers, RAG, and OCR with clean, intuitive Jupyter notebooks guiding you at every step. Suitable for beginners and engineers leveling up, providing clarity, structure, and intuition to build real AI systems.

For similar tasks

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

ocular

Ocular is a set of modules and tools that allow you to build rich, reliable, and performant Generative AI-Powered Search Platforms without the need to reinvent Search Architecture. We help you build you spin up customized internal search in days not months.

nucliadb

NucliaDB is a robust database that allows storing and searching on unstructured data. It is an out of the box hybrid search database, utilizing vector, full text and graph indexes. NucliaDB is written in Rust and Python. We designed it to index large datasets and provide multi-teanant support. When utilizing NucliaDB with Nuclia cloud, you are able to the power of an NLP database without the hassle of data extraction, enrichment and inference. We do all the hard work for you.

Scriberr

Scriberr is a self-hostable AI audio transcription app that utilizes open-source Whisper models from OpenAI for transcribing audio files locally on user's hardware. It offers fast transcription with customizable compute settings, local transcription on device, API endpoints for automation, and integration with other tools. Users can optionally summarize transcripts using ChatGPT or Ollama, with support for custom prompts. The app is mobile-ready, simple, and easy to use, with planned features including speaker diarization, audio recording, file actions, full text fuzzy search, tag-based organization, follow-along text with playback, edit summaries, export options, and support for other languages. Despite being in beta, Scriberr is functional and usable, albeit with some rough edges and minor bugs.

lector

A composable, headless PDF viewer toolkit for React applications, powered by PDF.js. Build feature-rich PDF viewing experiences with full control over the UI and functionality. It is responsive and mobile-friendly, fully customizable UI components, supports text selection and search functionality, page thumbnails and outline navigation, dark mode, pan and zoom controls, form filling support, internal and external link handling. Contributions are welcome in areas like performance optimizations, accessibility improvements, mobile/touch interactions, documentation, and examples. Inspired by open-source projects like react-pdf-headless and pdfreader. Licensed under MIT by Unriddle AI.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.