ramalama

RamaLama is an open-source developer tool that simplifies the local serving of AI models from any source and facilitates their use for inference in production, all through the familiar language of containers.

Stars: 2584

The RamaLama project simplifies working with AI by using OCI containers. It automatically detects GPU support, pulls the necessary software in a container, and runs AI models. Users can list, pull, run, and serve models easily. The tool aims to support more GPUs and platforms in the future, making AI setup hassle-free.

README:

RamaLama strives to make working with AI simple, straightforward, and familiar by using OCI containers.

RamaLama is an open-source tool that simplifies the local use and serving of AI models for inference from any source through the familiar approach of containers. It lets engineers extend container-centric development patterns, and their benefits, to AI use cases.

RamaLama eliminates the need to configure the host system by instead pulling a container image specific to the GPUs discovered on the host system, and allowing you to work with various models and platforms.

- Eliminates the complexity for users to configure the host system for AI.

- Detects and pulls an accelerated container image specific to the GPUs on the host system, handling dependencies and hardware optimization.

- RamaLama supports multiple AI model registries, including OCI Container Registries.

- Models are treated similarly to how Podman and Docker treat container images.

- Use common container commands to work with AI models.

- Run AI models securely in rootless containers, isolating the model from the underlying host.

- Keep data secure by defaulting to no network access and removing all temporary data when the application exits.

- Interact with models via REST API or as a chatbot.

Download the self-contained macOS installer that includes Python and all dependencies:

- Download the latest `.pkg` installer from Releases

- Double-click to install, or run:

sudo installer -pkg RamaLama-*-macOS-Installer.pkg -target /

See macOS Installation Guide for detailed instructions.

RamaLama is available on Fedora. To install it, run:

sudo dnf install ramalama

RamaLama is available via PyPI at https://pypi.org/project/ramalama

pip install ramalama

Install RamaLama by running:

curl -fsSL https://ramalama.ai/install.sh | bash

RamaLama supports Windows with Docker Desktop or Podman Desktop:

pip install ramalama

Requirements:

- Python 3.10 or later

- Docker Desktop or Podman Desktop with WSL2 backend

- For GPU support, see NVIDIA GPU Setup for WSL2

Note: Windows support requires running containers via Docker/Podman. The model store uses hardlinks (no admin required) or falls back to file copies if hardlinks are unavailable.
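The hardlink-with-copy-fallback behaviour described in the note can be sketched in a few lines. This is a minimal illustration of the general technique, not RamaLama's model store code; the function name `link_or_copy` and the directory layout in the usage example are hypothetical.

```python
import os
import shutil
import tempfile
from pathlib import Path


def link_or_copy(src: Path, dst: Path) -> str:
    """Place src at dst, preferring a hardlink and falling back to a copy.

    Returns "hardlink" or "copy" so the caller can see which path was taken.
    """
    dst.parent.mkdir(parents=True, exist_ok=True)
    try:
        # A hardlink uses no extra disk space: both names refer to one file.
        os.link(src, dst)
        return "hardlink"
    except OSError:
        # Hardlinks fail across filesystems or where they are unsupported.
        shutil.copy2(src, dst)
        return "copy"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        blob = Path(tmp) / "blobs" / "model.gguf"  # hypothetical layout
        blob.parent.mkdir()
        blob.write_bytes(b"weights")
        ref = Path(tmp) / "refs" / "tiny.gguf"
        print(link_or_copy(blob, ref))
```

The copy fallback trades disk space for portability, which matters for model files that can be several gigabytes each.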

If you installed RamaLama using pip, you can uninstall it with:

pip uninstall ramalama

If you installed RamaLama using DNF:

sudo dnf remove ramalama

To remove RamaLama installed via the .pkg installer:

# Remove the executable

sudo rm /usr/local/bin/ramalama

# Remove configuration and data files

sudo rm -rf /usr/local/share/ramalama

# Remove man pages (optional)

sudo rm /usr/local/share/man/man1/ramalama*.1

sudo rm /usr/local/share/man/man5/ramalama*.5

sudo rm /usr/local/share/man/man7/ramalama*.7

# Remove shell completions (optional)

sudo rm /usr/local/share/bash-completion/completions/ramalama

sudo rm /usr/local/share/fish/vendor_completions.d/ramalama.fish

sudo rm /usr/local/share/zsh/site-functions/_ramalama

See the macOS Installation Guide for more details.

After uninstalling RamaLama using any method above, you may want to remove downloaded models and configuration files from your home directory:

# Remove downloaded models and data (can be large)

rm -rf ~/.local/share/ramalama

# Remove configuration files

rm -rf ~/.config/ramalama

# If you ran RamaLama as root, also remove:

sudo rm -rf /var/lib/ramalama

Note: The model data directory (~/.local/share/ramalama) can be quite large depending on how many models you've downloaded. Make sure you want to remove these files before running the commands above.

| Accelerator | Image |

|---|---|

| GGML_VK_VISIBLE_DEVICES (or CPU) | quay.io/ramalama/ramalama |

| HIP_VISIBLE_DEVICES | quay.io/ramalama/rocm |

| CUDA_VISIBLE_DEVICES | quay.io/ramalama/cuda |

| ASAHI_VISIBLE_DEVICES | quay.io/ramalama/asahi |

| INTEL_VISIBLE_DEVICES | quay.io/ramalama/intel-gpu |

| ASCEND_VISIBLE_DEVICES | quay.io/ramalama/cann |

| MUSA_VISIBLE_DEVICES | quay.io/ramalama/musa |

On first run, RamaLama inspects your system for GPU support, falling back to CPU if none are present. RamaLama uses container engines like Podman or Docker to pull the appropriate OCI image with all necessary software to run an AI Model for your system setup.

How does RamaLama select the right image?

After initialization, RamaLama runs AI Models within a container based on the OCI image. RamaLama pulls container images specific to the GPUs discovered on your system. These images are tied to the minor version of RamaLama.

- For example, RamaLama version 1.2.3 on an NVIDIA system pulls quay.io/ramalama/cuda:1.2. To override the default image, use the `--image` option.
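The rule that images are tied to the minor version can be illustrated with a short sketch. The mapping below is copied from the accelerator table earlier in this README. The function name `default_image`, the idea that a set environment variable selects the image, and the precedence when several variables are set are assumptions made for illustration; RamaLama's real detection logic may differ.

```python
# Accelerator environment variables and images, copied from the table above.
ACCELERATOR_IMAGES = {
    "HIP_VISIBLE_DEVICES": "quay.io/ramalama/rocm",
    "CUDA_VISIBLE_DEVICES": "quay.io/ramalama/cuda",
    "ASAHI_VISIBLE_DEVICES": "quay.io/ramalama/asahi",
    "INTEL_VISIBLE_DEVICES": "quay.io/ramalama/intel-gpu",
    "ASCEND_VISIBLE_DEVICES": "quay.io/ramalama/cann",
    "MUSA_VISIBLE_DEVICES": "quay.io/ramalama/musa",
}
# Used for GGML_VK_VISIBLE_DEVICES and for the CPU fallback.
DEFAULT_IMAGE = "quay.io/ramalama/ramalama"


def default_image(version: str, env: dict) -> str:
    """Return the image reference for a RamaLama version and environment.

    The tag is the "major.minor" part of the version, so 1.2.3 maps to 1.2.
    """
    major, minor = version.split(".")[:2]
    base = DEFAULT_IMAGE
    # Assumption: the first variable found set in env wins.
    for var, image in ACCELERATOR_IMAGES.items():
        if env.get(var):
            base = image
            break
    return f"{base}:{major}.{minor}"


if __name__ == "__main__":
    print(default_image("1.2.3", {"CUDA_VISIBLE_DEVICES": "0"}))
```

Pinning to the minor version means patch releases of RamaLama keep reusing an already pulled image.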

RamaLama then pulls AI Models from model registries, starting a chatbot or REST API service from a simple single command. Models are treated similarly to how Podman and Docker treat container images.

| Hardware | Enabled |

|---|---|

| CPU | ✓ |

| Apple Silicon GPU (Linux / Asahi) | ✓ |

| Apple Silicon GPU (macOS) | ✓ llama.cpp or MLX |

| Apple Silicon GPU (podman-machine) | ✓ |

| Nvidia GPU (cuda) | ✓ See note below |

| AMD GPU (rocm, vulkan) | ✓ |

| Ascend NPU (Linux) | ✓ |

| Intel ARC GPUs (Linux) | ✓ See note below |

| Intel GPUs (vulkan / Linux) | ✓ |

| Moore Threads GPU (musa / Linux) | ✓ See note below |

| Windows (with Docker/Podman) | ✓ Requires WSL2 |

On systems with NVIDIA GPUs, see ramalama-cuda documentation for the correct host system configuration.

The following Intel GPUs are auto-detected by RamaLama:

| GPU ID | Description |

|---|---|

| 0xe20b | Intel® Arc™ B580 Graphics |

| 0xe20c | Intel® Arc™ B570 Graphics |

| 0x7d51 | Intel® Graphics - Arrow Lake-H |

| 0x7dd5 | Intel® Graphics - Meteor Lake |

| 0x7d55 | Intel® Arc™ Graphics - Meteor Lake |

See the Intel hardware table for more information.
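As a rough sketch, auto-detection of this kind amounts to looking up a device ID in a table. The IDs and descriptions below come from the table above; the function name `describe_intel_gpu` is hypothetical, and how RamaLama actually reads the device ID from the host is not covered here.

```python
from typing import Optional

# Device IDs and descriptions, copied from the table above.
INTEL_GPU_IDS = {
    0xE20B: "Intel® Arc™ B580 Graphics",
    0xE20C: "Intel® Arc™ B570 Graphics",
    0x7D51: "Intel® Graphics - Arrow Lake-H",
    0x7DD5: "Intel® Graphics - Meteor Lake",
    0x7D55: "Intel® Arc™ Graphics - Meteor Lake",
}


def describe_intel_gpu(device_id: str) -> Optional[str]:
    """Return the description for a hex device ID such as "0xe20b", or None."""
    try:
        key = int(device_id, 16)  # accepts "0xe20b", "0xE20B", and "e20b"
    except ValueError:
        return None
    return INTEL_GPU_IDS.get(key)


if __name__ == "__main__":
    print(describe_intel_gpu("0xe20b"))
```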

On systems with Moore Threads GPUs, see ramalama-musa documentation for the correct host system configuration.

The MLX runtime provides optimized inference for Apple Silicon Macs. MLX requires:

- macOS operating system

- Apple Silicon hardware (M1, M2, M3, or later)

- Usage with the `--nocontainer` option (containers are not supported)

- The `mlx-lm` uv package installed on the host system as a uv tool

To install and run Phi-4 on MLX, use uv. If uv is not installed, you can install it with curl -LsSf https://astral.sh/uv/install.sh | sh:

uv tool install mlx-lm

# or upgrade to the latest version:

uv tool upgrade mlx-lm

ramalama --runtime=mlx serve hf://mlx-community/Unsloth-Phi-4-4bit

When both Podman and Docker are installed, RamaLama defaults to Podman. The RAMALAMA_CONTAINER_ENGINE=docker environment variable can override this behaviour. When neither is installed, RamaLama will attempt to run the model with software on the local system.
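The engine selection order described above (environment override, then Podman, then Docker, then no container) can be sketched as follows. This is an illustration of the stated rules rather than RamaLama's implementation; the function name `pick_engine` and its injectable `which` parameter are hypothetical and exist only to make the sketch easy to exercise.

```python
import os
import shutil
from typing import Callable, Mapping, Optional


def pick_engine(
    env: Mapping[str, str] = os.environ,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> Optional[str]:
    """Return the container engine to use, or None to run on the host."""
    # An explicit override always wins.
    override = env.get("RAMALAMA_CONTAINER_ENGINE")
    if override:
        return override
    # Podman is preferred when both engines are installed.
    for candidate in ("podman", "docker"):
        if which(candidate):
            return candidate
    # Neither engine found: fall back to software on the local system.
    return None


if __name__ == "__main__":
    print(pick_engine())
```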

Because RamaLama defaults to running AI models inside rootless containers using Podman or Docker, these containers isolate the AI models from information on the underlying host. With RamaLama containers, the AI model is mounted as a volume into the container in read-only mode.

This results in the process running the model (llama.cpp or vLLM) being isolated from the host. Additionally, since ramalama run uses the --network=none option, the container cannot reach the network and leak any information out of the system. Finally, containers are run with the --rm option, which means any content written during container execution is deleted when the application exits.

- Container Isolation – AI models run within isolated containers, preventing direct access to the host system.

- Read-Only Volume Mounts – The AI model is mounted in read-only mode, which means that processes inside the container cannot modify the host files.

- No Network Access – ramalama run is executed with --network=none, meaning the model has no outbound connectivity through which information could be leaked.

- Auto-Cleanup – Containers run with --rm, wiping out any temporary data once the session ends.

- Drop All Linux Capabilities – No access to Linux capabilities to attack the underlying host.

- No New Privileges – Linux Kernel feature that disables container processes from gaining additional privileges.
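To make the list above concrete, the sketch below assembles a Podman command line that applies each of these protections. The flags are standard `podman run` options, but the exact command RamaLama builds is not shown in this README, so treat this as an approximation; the function name `isolated_run_args` and the in-container mount path are hypothetical.

```python
import shlex
from typing import List


def isolated_run_args(model_path: str, image: str) -> List[str]:
    """Build a podman command that mirrors the protections listed above."""
    return [
        "podman", "run",
        "--rm",                              # auto-cleanup when the container exits
        "--network=none",                    # no network access
        "--cap-drop=all",                    # drop all Linux capabilities
        "--security-opt=no-new-privileges",  # block privilege escalation
        # Read-only volume mount; the target path is a made-up example.
        "--volume", f"{model_path}:/mnt/models/model.file:ro",
        image,
    ]


if __name__ == "__main__":
    args = isolated_run_args("/home/user/models/tiny.gguf", "quay.io/ramalama/ramalama:1.2")
    print(shlex.join(args))
```

Running the command as a regular user, rather than root, gives the rootless isolation mentioned earlier.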

RamaLama supports multiple AI model registry types, called transports.

| Transports | Web Site |

|---|---|

| HuggingFace | huggingface.co |

| ModelScope | modelscope.cn |

| Ollama | ollama.com |

| RamaLama Labs Container Registry | ramalama.com |

| OCI Container Registries | opencontainers.org (examples: quay.io, Docker Hub, Pulp, and Artifactory) |

RamaLama uses the Ollama registry transport by default.

How to change transports.

Use the RAMALAMA_TRANSPORT environment variable to modify the default. For example, `export RAMALAMA_TRANSPORT=huggingface` changes RamaLama to use the huggingface transport.

Individual model transports can be modified when specifying a model via the huggingface://, oci://, modelscope://, ollama://, or rlcr:// prefix.

Example:

ramalama pull huggingface://afrideva/Tiny-Vicuna-1B-GGUF/tiny-vicuna-1b.q2_k.gguf
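The interaction between a per-model prefix and the RAMALAMA_TRANSPORT default can be sketched like this. It only illustrates the rules stated above: an explicit prefix wins, otherwise the environment variable applies, otherwise Ollama. The function name `split_transport` is hypothetical, and `hf://` is included because it appears in other examples in this README.

```python
import os
from typing import Mapping, Tuple

KNOWN_PREFIXES = (
    "huggingface://",
    "hf://",
    "oci://",
    "modelscope://",
    "ollama://",
    "rlcr://",
)


def split_transport(model: str, env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Split a model reference into (transport, remainder)."""
    for prefix in KNOWN_PREFIXES:
        if model.startswith(prefix):
            # Strip the trailing "://" to get the transport name.
            return prefix[: -len("://")], model[len(prefix):]
    # No prefix: use the configured default, or Ollama if none is set.
    return env.get("RAMALAMA_TRANSPORT", "ollama"), model


if __name__ == "__main__":
    print(split_transport("huggingface://afrideva/Tiny-Vicuna-1B-GGUF/tiny-vicuna-1b.q2_k.gguf"))
    print(split_transport("tinyllama", {}))
```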

To make it easier for users, RamaLama uses shortname files, which contain alias names for fully specified AI Models, allowing users to refer to models using shorter names.

More information on shortnames.

RamaLama reads shortnames.conf files if they exist. These files contain a list of name-value pairs that specify the model. The following table specifies the order in which RamaLama reads the files. Any duplicate names that exist override previously defined shortnames.

| Shortnames type | Path |

|---|---|

| Development | ./shortnames.conf |

| User (Config) | $HOME/.config/ramalama/shortnames.conf |

| User (Local Share) | $HOME/.local/share/ramalama/shortnames.conf |

| Administrators | /etc/ramalama/shortnames.conf |

| Distribution | /usr/share/ramalama/shortnames.conf |

| Local Distribution | /usr/local/share/ramalama/shortnames.conf |

$ cat /usr/share/ramalama/shortnames.conf

[shortnames]

"tiny" = "ollama://tinyllama"

"granite" = "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf"

"granite:7b" = "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf"

"ibm/granite" = "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf"

"merlinite" = "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf"

"merlinite:7b" = "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf"

...
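The override rule for shortnames files, where a name defined in a later file replaces the same name from an earlier one, can be sketched as a simple dictionary merge. The parser below handles only the `"name" = "value"` lines shown in the example file and is not RamaLama's actual parser; the function names are hypothetical.

```python
import re
from typing import Dict, Iterable

# Matches lines of the form: "name" = "value"
ENTRY = re.compile(r'^\s*"([^"]+)"\s*=\s*"([^"]+)"\s*$')


def parse_shortnames(text: str) -> Dict[str, str]:
    """Extract name/value pairs, ignoring section headers and other lines."""
    names = {}
    for line in text.splitlines():
        match = ENTRY.match(line)
        if match:
            names[match.group(1)] = match.group(2)
    return names


def merge_shortnames(files_in_read_order: Iterable[str]) -> Dict[str, str]:
    """Merge file contents in read order; later definitions override earlier ones."""
    merged: Dict[str, str] = {}
    for text in files_in_read_order:
        merged.update(parse_shortnames(text))
    return merged


def resolve(name: str, shortnames: Dict[str, str]) -> str:
    """Expand a shortname, or return the name unchanged if it is not an alias."""
    return shortnames.get(name, name)
```

For example, if two files both define `granite`, `resolve("granite", merge_shortnames([first, second]))` returns the value from `second`.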

- Benchmark specified AI Model

$ ramalama bench granite3-moe

- List all containers running AI Models

$ ramalama containers

Returns for example:

CONTAINER ID  IMAGE                             COMMAND               CREATED        STATUS                    PORTS                   NAMES
85ad75ecf866  quay.io/ramalama/ramalama:latest  /usr/bin/ramalama...  5 hours ago    Up 5 hours                0.0.0.0:8080->8080/tcp  ramalama_s3Oh6oDfOP
85ad75ecf866  quay.io/ramalama/ramalama:latest  /usr/bin/ramalama...  4 minutes ago  Exited (0) 4 minutes ago                          granite-server

- List all containers in a particular format

$ ramalama ps --noheading --format "{{ .Names }}"

Returns for example:

ramalama_s3Oh6oDfOP
granite-server

- Generate an OCI model out of an Ollama model.

$ ramalama convert ollama://tinyllama:latest oci://quay.io/rhatdan/tiny:latest

Returns for example:

Building quay.io/rhatdan/tiny:latest...
STEP 1/2: FROM scratch
STEP 2/2: COPY sha256:2af3b81862c6be03c769683af18efdadb2c33f60ff32ab6f83e42c043d6c7816 /model
--> Using cache 69db4a10191c976d2c3c24da972a2a909adec45135a69dbb9daeaaf2a3a36344
COMMIT quay.io/rhatdan/tiny:latest
--> 69db4a10191c
Successfully tagged quay.io/rhatdan/tiny:latest
69db4a10191c976d2c3c24da972a2a909adec45135a69dbb9daeaaf2a3a36344

- Generate and run an OCI model with a quantized GGUF converted from Safetensors.

Generate OCI model

$ ramalama --image quay.io/ramalama/ramalama-rag convert --gguf Q4_K_M hf://ibm-granite/granite-3.2-2b-instruct oci://quay.io/kugupta/granite-3.2-q4-k-m:latest

Returns for example:

Converting /Users/kugupta/.local/share/ramalama/models/huggingface/ibm-granite/granite-3.2-2b-instruct to quay.io/kugupta/granite-3.2-q4-k-m:latest...
Building quay.io/kugupta/granite-3.2-q4-k-m:latest...

Run the generated model

$ ramalama run oci://quay.io/kugupta/granite-3.2-q4-k-m:latest

- Info with no container engine.

$ ramalama info

Returns for example:

{ "Accelerator": "cuda", "Engine": { "Name": "" }, "Image": "quay.io/ramalama/cuda:0.7", "Inference": { "Default": "llama.cpp", "Engines": { "llama.cpp": "/usr/share/ramalama/inference-spec/engines/llama.cpp.yaml", "mlx": "/usr/share/ramalama/inference-spec/engines/mlx.yaml", "vllm": "/usr/share/ramalama/inference-spec/engines/vllm.yaml" }, "Schema": { "1-0-0": "/usr/share/ramalama/inference-spec/schema/schema.1-0-0.json" } }, "Shortnames": { "Names": { "cerebrum": "huggingface://froggeric/Cerebrum-1.0-7b-GGUF/Cerebrum-1.0-7b-Q4_KS.gguf", "deepseek": "ollama://deepseek-r1", "dragon": "huggingface://llmware/dragon-mistral-7b-v0/dragon-mistral-7b-q4_k_m.gguf", "gemma3": "hf://bartowski/google_gemma-3-4b-it-GGUF/google_gemma-3-4b-it-IQ2_M.gguf", "gemma3:12b": "hf://bartowski/google_gemma-3-12b-it-GGUF/google_gemma-3-12b-it-IQ2_M.gguf", "gemma3:1b": "hf://bartowski/google_gemma-3-1b-it-GGUF/google_gemma-3-1b-it-IQ2_M.gguf", "gemma3:27b": "hf://bartowski/google_gemma-3-27b-it-GGUF/google_gemma-3-27b-it-IQ2_M.gguf", "gemma3:4b": "hf://bartowski/google_gemma-3-4b-it-GGUF/google_gemma-3-4b-it-IQ2_M.gguf", "granite": "ollama://granite3.1-dense", "granite-code": "hf://ibm-granite/granite-3b-code-base-2k-GGUF/granite-3b-code-base.Q4_K_M.gguf", "granite-code:20b": "hf://ibm-granite/granite-20b-code-base-8k-GGUF/granite-20b-code-base.Q4_K_M.gguf", "granite-code:34b": "hf://ibm-granite/granite-34b-code-base-8k-GGUF/granite-34b-code-base.Q4_K_M.gguf", "granite-code:3b": "hf://ibm-granite/granite-3b-code-base-2k-GGUF/granite-3b-code-base.Q4_K_M.gguf", "granite-code:8b": "hf://ibm-granite/granite-8b-code-base-4k-GGUF/granite-8b-code-base.Q4_K_M.gguf", "granite-lab-7b": "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf", "granite-lab-8b": "huggingface://ibm-granite/granite-8b-code-base-GGUF/granite-8b-code-base.Q4_K_M.gguf", "granite-lab:7b": "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf", "granite:2b": 
"ollama://granite3.1-dense:2b", "granite:7b": "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf", "granite:8b": "ollama://granite3.1-dense:8b", "hermes": "huggingface://NousResearch/Hermes-2-Pro-Mistral-7B-GGUF/Hermes-2-Pro-Mistral-7B.Q4_K_M.gguf", "ibm/granite": "ollama://granite3.1-dense:8b", "ibm/granite:2b": "ollama://granite3.1-dense:2b", "ibm/granite:7b": "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf", "ibm/granite:8b": "ollama://granite3.1-dense:8b", "merlinite": "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf", "merlinite-lab-7b": "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf", "merlinite-lab:7b": "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf", "merlinite:7b": "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf", "mistral": "huggingface://TheBloke/Mistral-7B-Instruct-v0.2-GGUF/mistral-7b-instruct-v0.2.Q4_K_M.gguf", "mistral:7b": "huggingface://TheBloke/Mistral-7B-Instruct-v0.2-GGUF/mistral-7b-instruct-v0.2.Q4_K_M.gguf", "mistral:7b-v1": "huggingface://TheBloke/Mistral-7B-Instruct-v0.1-GGUF/mistral-7b-instruct-v0.1.Q5_K_M.gguf", "mistral:7b-v2": "huggingface://TheBloke/Mistral-7B-Instruct-v0.2-GGUF/mistral-7b-instruct-v0.2.Q4_K_M.gguf", "mistral:7b-v3": "huggingface://MaziyarPanahi/Mistral-7B-Instruct-v0.3-GGUF/Mistral-7B-Instruct-v0.3.Q4_K_M.gguf", "mistral_code_16k": "huggingface://TheBloke/Mistral-7B-Code-16K-qlora-GGUF/mistral-7b-code-16k-qlora.Q4_K_M.gguf", "mistral_codealpaca": "huggingface://TheBloke/Mistral-7B-codealpaca-lora-GGUF/mistral-7b-codealpaca-lora.Q4_K_M.gguf", "mixtao": "huggingface://MaziyarPanahi/MixTAO-7Bx2-MoE-Instruct-v7.0-GGUF/MixTAO-7Bx2-MoE-Instruct-v7.0.Q4_K_M.gguf", "openchat": "huggingface://TheBloke/openchat-3.5-0106-GGUF/openchat-3.5-0106.Q4_K_M.gguf", "openorca": "huggingface://TheBloke/Mistral-7B-OpenOrca-GGUF/mistral-7b-openorca.Q4_K_M.gguf", "phi2": 
"huggingface://MaziyarPanahi/phi-2-GGUF/phi-2.Q4_K_M.gguf", "smollm:135m": "ollama://smollm:135m", "tiny": "ollama://tinyllama" }, "Files": [ "/usr/share/ramalama/shortnames.conf", "/home/dwalsh/.config/ramalama/shortnames.conf", ] }, "Store": "/usr/share/ramalama", "UseContainer": true, "Version": "0.7.5" }

- Info with Podman engine.

$ ramalama info

Returns for example:

{ "Accelerator": "cuda", "Engine": { "Info": { "host": { "arch": "amd64", "buildahVersion": "1.39.4", "cgroupControllers": [ "cpu", "io", "memory", "pids" ], "cgroupManager": "systemd", "cgroupVersion": "v2", "conmon": { "package": "conmon-2.1.13-1.fc42.x86_64", "path": "/usr/bin/conmon", "version": "conmon version 2.1.13, commit: " }, "cpuUtilization": { "idlePercent": 97.36, "systemPercent": 0.64, "userPercent": 2 }, "cpus": 32, "databaseBackend": "sqlite", "distribution": { "distribution": "fedora", "variant": "workstation", "version": "42" }, "eventLogger": "journald", "freeLocks": 2043, "hostname": "danslaptop", "idMappings": { "gidmap": [ { "container_id": 0, "host_id": 3267, "size": 1 }, { "container_id": 1, "host_id": 524288, "size": 65536 } ], "uidmap": [ { "container_id": 0, "host_id": 3267, "size": 1 }, { "container_id": 1, "host_id": 524288, "size": 65536 } ] }, "kernel": "6.14.2-300.fc42.x86_64", "linkmode": "dynamic", "logDriver": "journald", "memFree": 65281908736, "memTotal": 134690979840, "networkBackend": "netavark", "networkBackendInfo": { "backend": "netavark", "dns": { "package": "aardvark-dns-1.14.0-1.fc42.x86_64", "path": "/usr/libexec/podman/aardvark-dns", "version": "aardvark-dns 1.14.0" }, "package": "netavark-1.14.1-1.fc42.x86_64", "path": "/usr/libexec/podman/netavark", "version": "netavark 1.14.1" }, "ociRuntime": { "name": "crun", "package": "crun-1.21-1.fc42.x86_64", "path": "/usr/bin/crun", "version": "crun version 1.21\ncommit: 10269840aa07fb7e6b7e1acff6198692d8ff5c88\nrundir: /run/user/3267/crun\nspec: 1.0.0\n+SYSTEMD +SELINUX +APPARMOR +CAP +SECCOMP +EBPF +CRIU +LIBKRUN +WASM:wasmedge +YAJL" }, "os": "linux", "pasta": { "executable": "/bin/pasta", "package": "passt-0^20250415.g2340bbf-1.fc42.x86_64", "version": "" }, "remoteSocket": { "exists": true, "path": "/run/user/3267/podman/podman.sock" }, "rootlessNetworkCmd": "pasta", "security": { "apparmorEnabled": false, "capabilities": 
"CAP_CHOWN,CAP_DAC_OVERRIDE,CAP_FOWNER,CAP_FSETID,CAP_KILL,CAP_NET_BIND_SERVICE,CAP_SETFCAP,CAP_SETGID,CAP_SETPCAP,CAP_SETUID,CAP_SYS_CHROOT", "rootless": true, "seccompEnabled": true, "seccompProfilePath": "/usr/share/containers/seccomp.json", "selinuxEnabled": true }, "serviceIsRemote": false, "slirp4netns": { "executable": "/bin/slirp4netns", "package": "slirp4netns-1.3.1-2.fc42.x86_64", "version": "slirp4netns version 1.3.1\ncommit: e5e368c4f5db6ae75c2fce786e31eef9da6bf236\nlibslirp: 4.8.0\nSLIRP_CONFIG_VERSION_MAX: 5\nlibseccomp: 2.5.5" }, "swapFree": 8589930496, "swapTotal": 8589930496, "uptime": "116h 35m 40.00s (Approximately 4.83 days)", "variant": "" }, "plugins": { "authorization": null, "log": [ "k8s-file", "none", "passthrough", "journald" ], "network": [ "bridge", "macvlan", "ipvlan" ], "volume": [ "local" ] }, "registries": { "search": [ "registry.fedoraproject.org", "registry.access.redhat.com", "docker.io" ] }, "store": { "configFile": "/home/dwalsh/.config/containers/storage.conf", "containerStore": { "number": 5, "paused": 0, "running": 0, "stopped": 5 }, "graphDriverName": "overlay", "graphOptions": {}, "graphRoot": "/usr/share/containers/storage", "graphRootAllocated": 2046687182848, "graphRootUsed": 399990419456, "graphStatus": { "Backing Filesystem": "btrfs", "Native Overlay Diff": "true", "Supports d_type": "true", "Supports shifting": "false", "Supports volatile": "true", "Using metacopy": "false" }, "imageCopyTmpDir": "/var/tmp", "imageStore": { "number": 297 }, "runRoot": "/run/user/3267/containers", "transientStore": false, "volumePath": "/usr/share/containers/storage/volumes" }, "version": { "APIVersion": "5.4.2", "BuildOrigin": "Fedora Project", "Built": 1743552000, "BuiltTime": "Tue Apr 1 19:00:00 2025", "GitCommit": "be85287fcf4590961614ee37be65eeb315e5d9ff", "GoVersion": "go1.24.1", "Os": "linux", "OsArch": "linux/amd64", "Version": "5.4.2" } }, "Name": "podman" }, "Image": "quay.io/ramalama/cuda:0.7", "Inference": { "Default": 
"llama.cpp", "Engines": { "llama.cpp": "/usr/share/ramalama/inference-spec/engines/llama.cpp.yaml", "mlx": "/usr/share/ramalama/inference-spec/engines/mlx.yaml", "vllm": "/usr/share/ramalama/inference-spec/engines/vllm.yaml" }, "Schema": { "1-0-0": "/usr/share/ramalama/inference-spec/schema/schema.1-0-0.json" } }, "Shortnames": { "Names": { "cerebrum": "huggingface://froggeric/Cerebrum-1.0-7b-GGUF/Cerebrum-1.0-7b-Q4_KS.gguf", "deepseek": "ollama://deepseek-r1", "dragon": "huggingface://llmware/dragon-mistral-7b-v0/dragon-mistral-7b-q4_k_m.gguf", "gemma3": "hf://bartowski/google_gemma-3-4b-it-GGUF/google_gemma-3-4b-it-IQ2_M.gguf", "gemma3:12b": "hf://bartowski/google_gemma-3-12b-it-GGUF/google_gemma-3-12b-it-IQ2_M.gguf", "gemma3:1b": "hf://bartowski/google_gemma-3-1b-it-GGUF/google_gemma-3-1b-it-IQ2_M.gguf", "gemma3:27b": "hf://bartowski/google_gemma-3-27b-it-GGUF/google_gemma-3-27b-it-IQ2_M.gguf", "gemma3:4b": "hf://bartowski/google_gemma-3-4b-it-GGUF/google_gemma-3-4b-it-IQ2_M.gguf", "granite": "ollama://granite3.1-dense", "granite-code": "hf://ibm-granite/granite-3b-code-base-2k-GGUF/granite-3b-code-base.Q4_K_M.gguf", "granite-code:20b": "hf://ibm-granite/granite-20b-code-base-8k-GGUF/granite-20b-code-base.Q4_K_M.gguf", "granite-code:34b": "hf://ibm-granite/granite-34b-code-base-8k-GGUF/granite-34b-code-base.Q4_K_M.gguf", "granite-code:3b": "hf://ibm-granite/granite-3b-code-base-2k-GGUF/granite-3b-code-base.Q4_K_M.gguf", "granite-code:8b": "hf://ibm-granite/granite-8b-code-base-4k-GGUF/granite-8b-code-base.Q4_K_M.gguf", "granite-lab-7b": "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf", "granite-lab-8b": "huggingface://ibm-granite/granite-8b-code-base-GGUF/granite-8b-code-base.Q4_K_M.gguf", "granite-lab:7b": "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf", "granite:2b": "ollama://granite3.1-dense:2b", "granite:7b": "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf", "granite:8b": 
"ollama://granite3.1-dense:8b", "hermes": "huggingface://NousResearch/Hermes-2-Pro-Mistral-7B-GGUF/Hermes-2-Pro-Mistral-7B.Q4_K_M.gguf", "ibm/granite": "ollama://granite3.1-dense:8b", "ibm/granite:2b": "ollama://granite3.1-dense:2b", "ibm/granite:7b": "huggingface://instructlab/granite-7b-lab-GGUF/granite-7b-lab-Q4_K_M.gguf", "ibm/granite:8b": "ollama://granite3.1-dense:8b", "merlinite": "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf", "merlinite-lab-7b": "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf", "merlinite-lab:7b": "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf", "merlinite:7b": "huggingface://instructlab/merlinite-7b-lab-GGUF/merlinite-7b-lab-Q4_K_M.gguf", "mistral": "huggingface://TheBloke/Mistral-7B-Instruct-v0.2-GGUF/mistral-7b-instruct-v0.2.Q4_K_M.gguf", "mistral:7b": "huggingface://TheBloke/Mistral-7B-Instruct-v0.2-GGUF/mistral-7b-instruct-v0.2.Q4_K_M.gguf", "mistral:7b-v1": "huggingface://TheBloke/Mistral-7B-Instruct-v0.1-GGUF/mistral-7b-instruct-v0.1.Q5_K_M.gguf", "mistral:7b-v2": "huggingface://TheBloke/Mistral-7B-Instruct-v0.2-GGUF/mistral-7b-instruct-v0.2.Q4_K_M.gguf", "mistral:7b-v3": "huggingface://MaziyarPanahi/Mistral-7B-Instruct-v0.3-GGUF/Mistral-7B-Instruct-v0.3.Q4_K_M.gguf", "mistral_code_16k": "huggingface://TheBloke/Mistral-7B-Code-16K-qlora-GGUF/mistral-7b-code-16k-qlora.Q4_K_M.gguf", "mistral_codealpaca": "huggingface://TheBloke/Mistral-7B-codealpaca-lora-GGUF/mistral-7b-codealpaca-lora.Q4_K_M.gguf", "mixtao": "huggingface://MaziyarPanahi/MixTAO-7Bx2-MoE-Instruct-v7.0-GGUF/MixTAO-7Bx2-MoE-Instruct-v7.0.Q4_K_M.gguf", "openchat": "huggingface://TheBloke/openchat-3.5-0106-GGUF/openchat-3.5-0106.Q4_K_M.gguf", "openorca": "huggingface://TheBloke/Mistral-7B-OpenOrca-GGUF/mistral-7b-openorca.Q4_K_M.gguf", "phi2": "huggingface://MaziyarPanahi/phi-2-GGUF/phi-2.Q4_K_M.gguf", "smollm:135m": "ollama://smollm:135m", "tiny": "ollama://tinyllama" }, "Files": 
[ "/usr/share/ramalama/shortnames.conf", "/home/dwalsh/.config/ramalama/shortnames.conf", ] }, "Store": "/usr/share/ramalama", "UseContainer": true, "Version": "0.7.5" }

- Using jq to print specific `ramalama info` content.

$ ramalama info | jq .Shortnames.Names.mixtao

Returns for example:

"huggingface://MaziyarPanahi/MixTAO-7Bx2-MoE-Instruct-v7.0-GGUF/MixTAO-7Bx2-MoE-Instruct-v7.0.Q4_K_M.gguf"

- Inspect the smollm:135m model for basic information.

$ ramalama inspect smollm:135m

Returns for example:

smollm:135m
   Path: /var/lib/ramalama/models/ollama/smollm:135m
   Registry: ollama
   Format: GGUF
   Version: 3
   Endianness: little
   Metadata: 39 entries
   Tensors: 272 entries

- Inspect the smollm:135m model for all information in JSON format.

$ ramalama inspect smollm:135m --all --json

Returns for example:

{
  "Name": "smollm:135m",
  "Path": "/home/mengel/.local/share/ramalama/models/ollama/smollm:135m",
  "Registry": "ollama",
  "Format": "GGUF",
  "Version": 3,
  "LittleEndian": true,
  "Metadata": {
    "general.architecture": "llama",
    "general.base_model.0.name": "SmolLM 135M",
    "general.base_model.0.organization": "HuggingFaceTB",
    "general.base_model.0.repo_url": "https://huggingface.co/HuggingFaceTB/SmolLM-135M",
    ...
  },
  "Tensors": [
    {
      "dimensions": [ 576, 49152 ],
      "n_dimensions": 2,
      "name": "token_embd.weight",
      "offset": 0,
      "type": 8
    },
    ...
  ]
}

- You can `list` all models pulled into local storage.

$ ramalama list

Returns for example:

NAME                                                                 MODIFIED      SIZE
ollama://smollm:135m                                                 16 hours ago  5.5M
huggingface://afrideva/Tiny-Vicuna-1B-GGUF/tiny-vicuna-1b.q2_k.gguf  14 hours ago  460M
ollama://moondream:latest                                            6 days ago    791M
ollama://phi4:latest                                                 6 days ago    8.43 GB
ollama://tinyllama:latest                                            1 week ago    608.16 MB
ollama://granite3-moe:3b                                             1 week ago    1.92 GB
ollama://granite3-moe:latest                                         3 months ago  1.92 GB
ollama://llama3.1:8b                                                 2 months ago  4.34 GB
ollama://llama3.1:latest                                             2 months ago  4.34 GB

- Log in to quay.io/username oci registry

$ export RAMALAMA_TRANSPORT=quay.io/username
$ ramalama login -u username

- Log in to Ollama registry

$ export RAMALAMA_TRANSPORT=ollama
$ ramalama login

- Log in to huggingface registry

$ export RAMALAMA_TRANSPORT=huggingface
$ ramalama login --token=XYZ

Logging in to Hugging Face requires the `hf` tool. For installation and usage instructions, see the documentation of the Hugging Face command line interface.

- Log out from quay.io/username oci repository

$ ramalama logout quay.io/username

- Log out from Ollama registry

$ ramalama logout ollama

- Log out from huggingface

$ ramalama logout huggingface

- Calculate the perplexity of an AI Model.

Perplexity measures how well the model can predict the next token, with lower values being better.

$ ramalama perplexity granite3-moe
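For readers unfamiliar with the metric, perplexity is conventionally defined as the exponential of the average negative log-probability the model assigns to each actual next token. The sketch below computes that standard definition from a list of per-token log-probabilities; it is not a description of how `ramalama perplexity` computes its result internally, and the function name is hypothetical.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Compute perplexity from natural-log probabilities of the observed tokens."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_neg_logprob = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_neg_logprob)


if __name__ == "__main__":
    # A model that gives every correct token probability 0.25 is, on average,
    # as uncertain as a uniform choice among four options.
    print(perplexity([math.log(0.25)] * 4))
```

A perfect predictor that assigns probability 1 to every token scores a perplexity of 1, which is the lower bound.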

- Pull a model

You can `pull` a model using the `pull` command. By default, it pulls from the Ollama registry.

$ ramalama pull granite3-moe

- Push specified AI Model (OCI-only at present)

A model can be pushed from RamaLama model storage in Hugging Face, Ollama, or OCI Model format. The model can also just be a model stored on disk.

$ ramalama push oci://quay.io/rhatdan/tiny:latest

Generate and convert Retrieval Augmented Generation (RAG) data from provided documents into an OCI Image.

[!NOTE] this command does not work without a container engine.

- Generate RAG data from provided documents and convert into an OCI Image.

This command uses a specific container image containing the docling tool to convert the specified content into a RAG vector database. If the image does not exist locally, RamaLama will pull the image down and launch a container to process the data.

Positional arguments:

PATH Files/Directory containing PDF, DOCX, PPTX, XLSX, HTML, AsciiDoc & Markdown formatted files to be processed. Can be specified multiple times.

IMAGE OCI Image name to contain processed rag data

ramalama rag ./README.md https://github.com/containers/podman/blob/main/README.md quay.io/ramalama/myrag
100% |███████████████████████████████████████████████████████| 114.00 KB/ 0.00 B 922.89 KB/s 59m 59s
Building quay.io/ramalama/myrag...
adding vectordb...
c857ebc65c641084b34e39b740fdb6a2d9d2d97be320e6aa9439ed0ab8780fe0

The image can then be used with:

ramalama run --rag quay.io/ramalama/myrag instructlab/merlinite-7b-lab

- Specify one or more AI Models to be removed from local storage.

$ ramalama rm ollama://tinyllama

- Remove all AI Models from local storage.

$ ramalama rm --all

- Run a chatbot on a model using the run command. By default, it pulls from the Ollama registry.

Note: RamaLama will inspect your machine for native GPU support and then will use a container engine like Podman to pull an OCI container image with the appropriate code and libraries to run the AI Model. This can take a long time to set up, but only on the first run.

$ ramalama run instructlab/merlinite-7b-lab

- After the initial container image has been downloaded, you can interact with different models using the container image.

$ ramalama run granite3-moe

Returns for example:

> Write a hello world application in python

print("Hello World")

- In a different terminal window, see the running podman container.

$ podman ps
CONTAINER ID  IMAGE                             COMMAND               CREATED        STATUS        PORTS  NAMES
91df4a39a360  quay.io/ramalama/ramalama:latest  /home/dwalsh/rama...  4 minutes ago  Up 4 minutes         gifted_volhard

- Serve a model and connect via a browser.

$ ramalama serve llama3

When the web UI is enabled, you can connect via your browser at: 127.0.0.1:< port >

The default serving port will be 8080 if available, otherwise a free random port in the range 8081-8090. If you wish, you can specify a port to use with --port/-p.
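The default port rule described above (8080 if available, otherwise a random free port in 8081-8090) can be sketched as follows. This illustrates the stated behaviour only; the function names and the bind-based availability check are assumptions, not RamaLama's code.

```python
import random
import socket
from typing import Callable


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a TCP socket can currently bind to host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
            return True
        except OSError:
            return False


def pick_port(is_free: Callable[[int], bool] = port_is_free) -> int:
    """Prefer 8080, else try the ports 8081-8090 in random order."""
    if is_free(8080):
        return 8080
    candidates = list(range(8081, 8091))
    random.shuffle(candidates)
    for port in candidates:
        if is_free(port):
            return port
    raise RuntimeError("no free port in the range 8080-8090")


if __name__ == "__main__":
    print(pick_port())
```

A check like this is inherently racy, since another process can take the port between the check and the actual bind, so passing an explicit `--port` is the more predictable choice for scripts.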

- Run two AI Models at the same time. Notice both are running within Podman Containers.

$ ramalama serve -d -p 8080 --name mymodel ollama://smollm:135m
09b0e0d26ed28a8418fb5cd0da641376a08c435063317e89cf8f5336baf35cfa

$ ramalama serve -d -n example --port 8081 oci://quay.io/mmortari/gguf-py-example/v1/example.gguf
3f64927f11a5da5ded7048b226fbe1362ee399021f5e8058c73949a677b6ac9c

$ podman ps
CONTAINER ID  IMAGE                             COMMAND               CREATED         STATUS         PORTS                   NAMES
09b0e0d26ed2  quay.io/ramalama/ramalama:latest  /usr/bin/ramalama...  32 seconds ago  Up 32 seconds  0.0.0.0:8081->8081/tcp  ramalama_sTLNkijNNP
3f64927f11a5  quay.io/ramalama/ramalama:latest  /usr/bin/ramalama...  17 seconds ago  Up 17 seconds  0.0.0.0:8082->8082/tcp  ramalama_YMPQvJxN97

- To disable the web UI, use the `--webui off` flag.

$ ramalama serve --webui off llama3
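Once a model is being served, a client can talk to it over HTTP. The sketch below assumes the server exposes an OpenAI-compatible chat completions endpoint at `/v1/chat/completions`; that path, the request shape, and the function names are assumptions for illustration, so check the documentation of the runtime you are serving with before relying on them.

```python
import json
import urllib.request


def build_chat_request(prompt: str, host: str = "127.0.0.1", port: int = 8080,
                       model: str = "llama3") -> urllib.request.Request:
    """Build (but do not send) a chat request for an OpenAI-style endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"http://{host}:{port}/v1/chat/completions",  # assumed endpoint path
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, **kwargs) -> str:
    """Send the request and return the text of the first reply."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Requires a model already being served on the chosen port.
    print(ask("Write a hello world application in python"))
```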

- Stop a running model if it is running in a container.

$ ramalama stop mymodel

- Stop all running models running in containers.

$ ramalama stop --all

- Print the version of RamaLama.

$ ramalama version

Returns for example:

ramalama version 1.2.3

| Command | Description |
|---|---|
| ramalama(1) | primary RamaLama man page |
| ramalama-bench(1) | benchmark specified AI Model |
| ramalama-chat(1) | chat with specified OpenAI REST API |
| ramalama-containers(1) | list all RamaLama containers |
| ramalama-convert(1) | convert AI Model from local storage to OCI Image |
| ramalama-info(1) | display RamaLama configuration information |
| ramalama-inspect(1) | inspect the specified AI Model |
| ramalama-list(1) | list all downloaded AI Models |
| ramalama-login(1) | login to remote registry |
| ramalama-logout(1) | logout from remote registry |
| ramalama-perplexity(1) | calculate perplexity for specified AI Model |
| ramalama-pull(1) | pull AI Model from Model registry to local storage |
| ramalama-push(1) | push AI Model from local storage to remote registry |
| ramalama-rag(1) | generate and convert Retrieval Augmented Generation (RAG) data from provided documents into an OCI Image |
| ramalama-rm(1) | remove AI Model from local storage |
| ramalama-run(1) | run specified AI Model as a chatbot |
| ramalama-serve(1) | serve REST API on specified AI Model |
| ramalama-stop(1) | stop named container that is running AI Model |
| ramalama-version(1) | display version of RamaLama |

+---------------------------+
|                           |
| ramalama run granite3-moe |
|                           |
+-------+-------------------+
        |
        |
        |           +------------------+           +------------------+
        |           | Pull inferencing |           | Pull model layer |
        +-----------| runtime (cuda)   |---------->| granite3-moe     |
                    +------------------+           +------------------+
                                                   | Repo options:    |
                                                   +-+-------+------+-+
                                                     |       |      |
                                                     v       v      v
                                             +---------+ +------+ +----------+
                                             | Hugging | | OCI  | | Ollama   |
                                             | Face    | |      | | Registry |
                                             +-------+-+ +---+--+ +-+--------+
                                                     |       |      |
                                                     v       v      v
                                                   +------------------+
                                                   | Start with       |
                                                   | cuda runtime     |
                                                   | and              |
                                                   | granite3-moe     |
                                                   +------------------+
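The "Repo options" step in the diagram depends on knowing which registry a model reference points to. The sketch below shows one way to split a reference such as `ollama://smollm:135m` into a transport and a model name. It is not RamaLama's own parser: the function name `split_model_reference`, the list of prefixes (in particular `huggingface` and `hf`), and the choice of `ollama` as the default for bare names are all assumptions made for illustration.

```python
# Assumed transport prefixes; only ollama:// and oci:// appear in the examples above.
KNOWN_TRANSPORTS = ("huggingface", "hf", "oci", "ollama")


def split_model_reference(reference, default_transport="ollama"):
    """Split 'transport://name' into a (transport, name) pair."""
    transport, separator, name = reference.partition("://")
    if not separator:
        # Bare name such as "granite3-moe": fall back to the assumed default.
        return default_transport, reference
    if transport not in KNOWN_TRANSPORTS:
        raise ValueError(f"unknown transport: {transport!r}")
    return transport, name
```

For example, `split_model_reference("oci://quay.io/mmortari/gguf-py-example/v1/example.gguf")` yields the `oci` transport together with the registry path, which a caller could then hand to the matching pull routine.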

Regarding this alpha, everything is under development, so expect breaking changes. If you need to reset your installation, see the Uninstall section above for instructions on removing RamaLama and cleaning up all data files, then reinstall.

- On certain versions of Python on macOS, certificates may not be installed correctly, potentially causing SSL errors (e.g., when accessing huggingface.co). To resolve this, run the Install Certificates command, typically as follows:

/Applications/Python 3.x/Install Certificates.command

This project wouldn't be possible without the help of other projects like:

so if you like this tool, give some of these repos a ⭐, and hey, give us a ⭐ too while you are at it.

For general questions and discussion, please use RamaLama's

For discussions around issues/bugs and features, you can use the GitHub Issues and PRs tracking system.

We host a public community and developer meetup on Discord every other week to discuss project direction and provide an open forum for users to get help, ask questions, and showcase new features.

Join on Discord Meeting Agenda

See the full Roadmap.

Open to contributors

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ramalama

Similar Open Source Tools

ramalama

The Ramalama project simplifies working with AI by utilizing OCI containers. It automatically detects GPU support, pulls necessary software in a container, and runs AI models. Users can list, pull, run, and serve models easily. The tool aims to support various GPUs and platforms in the future, making AI setup hassle-free.

vlmrun-hub

VLMRun Hub is a versatile tool for managing and running virtual machines in a centralized manner. It provides a user-friendly interface to easily create, start, stop, and monitor virtual machines across multiple hosts. With VLMRun Hub, users can efficiently manage their virtualized environments and streamline their workflow. The tool offers flexibility and scalability, making it suitable for both small-scale personal projects and large-scale enterprise deployments.

functionary

Functionary is a language model that interprets and executes functions/plugins. It determines when to execute functions, whether in parallel or serially, and understands their outputs. Function definitions are given as JSON Schema Objects, similar to OpenAI GPT function calls. It offers documentation and examples on functionary.meetkai.com. The newest model, meetkai/functionary-medium-v3.1, is ranked 2nd in the Berkeley Function-Calling Leaderboard. Functionary supports models with different context lengths and capabilities for function calling and code interpretation. It also provides grammar sampling for accurate function and parameter names. Users can deploy Functionary models serverlessly using Modal.com.

Bindu

Bindu is an operating layer for AI agents that provides identity, communication, and payment capabilities. It delivers a production-ready service with a convenient API to connect, authenticate, and orchestrate agents across distributed systems using open protocols: A2A, AP2, and X402. Built with a distributed architecture, Bindu makes it fast to develop and easy to integrate with any AI framework. Transform any agent framework into a fully interoperable service for communication, collaboration, and commerce in the Internet of Agents.

chatgpt-exporter

A script to export the chat history of ChatGPT. Supports exporting to text, HTML, Markdown, PNG, and JSON formats. Also allows for exporting multiple conversations at once.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

sparrow

Sparrow is an innovative open-source solution for efficient data extraction and processing from various documents and images. It seamlessly handles forms, invoices, receipts, and other unstructured data sources. Sparrow stands out with its modular architecture, offering independent services and pipelines all optimized for robust performance. One of the critical functionalities of Sparrow - pluggable architecture. You can easily integrate and run data extraction pipelines using tools and frameworks like LlamaIndex, Haystack, or Unstructured. Sparrow enables local LLM data extraction pipelines through Ollama or Apple MLX. With Sparrow solution you get API, which helps to process and transform your data into structured output, ready to be integrated with custom workflows. Sparrow Agents - with Sparrow you can build independent LLM agents, and use API to invoke them from your system. **List of available agents:** * **llamaindex** - RAG pipeline with LlamaIndex for PDF processing * **vllamaindex** - RAG pipeline with LLamaIndex multimodal for image processing * **vprocessor** - RAG pipeline with OCR and LlamaIndex for image processing * **haystack** - RAG pipeline with Haystack for PDF processing * **fcall** - Function call pipeline * **unstructured-light** - RAG pipeline with Unstructured and LangChain, supports PDF and image processing * **unstructured** - RAG pipeline with Weaviate vector DB query, Unstructured and LangChain, supports PDF and image processing * **instructor** - RAG pipeline with Unstructured and Instructor libraries, supports PDF and image processing. Works great for JSON response generation

firecrawl

Firecrawl is an API service that empowers AI applications with clean data from any website. It features advanced scraping, crawling, and data extraction capabilities. The repository is still in development, integrating custom modules into the mono repo. Users can run it locally but it's not fully ready for self-hosted deployment yet. Firecrawl offers powerful capabilities like scraping, crawling, mapping, searching, and extracting structured data from single pages, multiple pages, or entire websites with AI. It supports various formats, actions, and batch scraping. The tool is designed to handle proxies, anti-bot mechanisms, dynamic content, media parsing, change tracking, and more. Firecrawl is available as an open-source project under the AGPL-3.0 license, with additional features offered in the cloud version.

ext-apps

The @modelcontextprotocol/ext-apps repository contains the SDK and specification for MCP Apps Extension (SEP-1865). MCP Apps are a proposed standard to allow MCP Servers to display interactive UI elements in conversational MCP clients/chatbots. The repository includes SDKs for both app developers and host developers, along with examples showcasing real-world use cases. Users can build interactive UIs that run inside MCP-enabled chat clients and embed/communicate with MCP Apps in their chat applications. The SDK extends the Model Context Protocol by letting tools declare UI resources and enables bidirectional communication between the host and the UI. The repository also provides Agent Skills for building MCP Apps and instructions for installing them in AI coding agents.

gemini-openai-proxy

Gemini-OpenAI-Proxy is a proxy software designed to convert OpenAI API protocol calls into Google Gemini Pro protocol, allowing software using OpenAI protocol to utilize Gemini Pro models seamlessly. It provides an easy integration of Gemini Pro's powerful features without the need for complex development work.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

optscale

OptScale is an open-source FinOps and MLOps platform that provides cloud cost optimization for all types of organizations and MLOps capabilities like experiment tracking, model versioning, ML leaderboards.

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It extends its support to a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently undergoing active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our **_Roadmap_** for more details. ## Key Features * High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, Continuous batching, and more. * Tensor Parallelism: Utilizes tensor parallelism for efficient model execution. * OpenAI-compatible API: An efficient golang rest api server that compatible with OpenAI. * Huggingface models: Seamless integration with most popular HF models, supporting safetensors. * Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models. * Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.

ai-wechat-bot

Gewechat is a project based on the Gewechat project to implement a personal WeChat channel, using the iPad protocol for login. It can obtain wxid and send voice messages, which is more stable than the itchat protocol. The project provides documentation for the API. Users can deploy the Gewechat service and use the ai-wechat-bot project to interface with it. Configuration parameters for Gewechat and ai-wechat-bot need to be set in the config.json file. Gewechat supports sending voice messages, with limitations on the duration of received voice messages. The project has restrictions such as requiring the server to be in the same province as the device logging into WeChat, limited file download support, and support only for text and image messages.

manga-image-translator

Translate texts in manga/images. Some manga/images will never be translated, therefore this project is born. * Image/Manga Translator * Samples * Online Demo * Disclaimer * Installation * Pip/venv * Poetry * Additional instructions for **Windows** * Docker * Hosting the web server * Using as CLI * Setting Translation Secrets * Using with Nvidia GPU * Building locally * Usage * Batch mode (default) * Demo mode * Web Mode * Api Mode * Related Projects * Docs * Recommended Modules * Tips to improve translation quality * Options * Language Code Reference * Translators Reference * GPT Config Reference * Using Gimp for rendering * Api Documentation * Synchronous mode * Asynchronous mode * Manual translation * Next steps * Support Us * Thanks To All Our Contributors :

For similar tasks

ramalama

The Ramalama project simplifies working with AI by utilizing OCI containers. It automatically detects GPU support, pulls necessary software in a container, and runs AI models. Users can list, pull, run, and serve models easily. The tool aims to support various GPUs and platforms in the future, making AI setup hassle-free.

client-js

The Mistral JavaScript client is a library that allows you to interact with the Mistral AI API. With this client, you can perform various tasks such as listing models, chatting with streaming, chatting without streaming, and generating embeddings. To use the client, you can install it in your project using npm and then set up the client with your API key. Once the client is set up, you can use it to perform the desired tasks. For example, you can use the client to chat with a model by providing a list of messages. The client will then return the response from the model. You can also use the client to generate embeddings for a given input. The embeddings can then be used for various downstream tasks such as clustering or classification.

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.

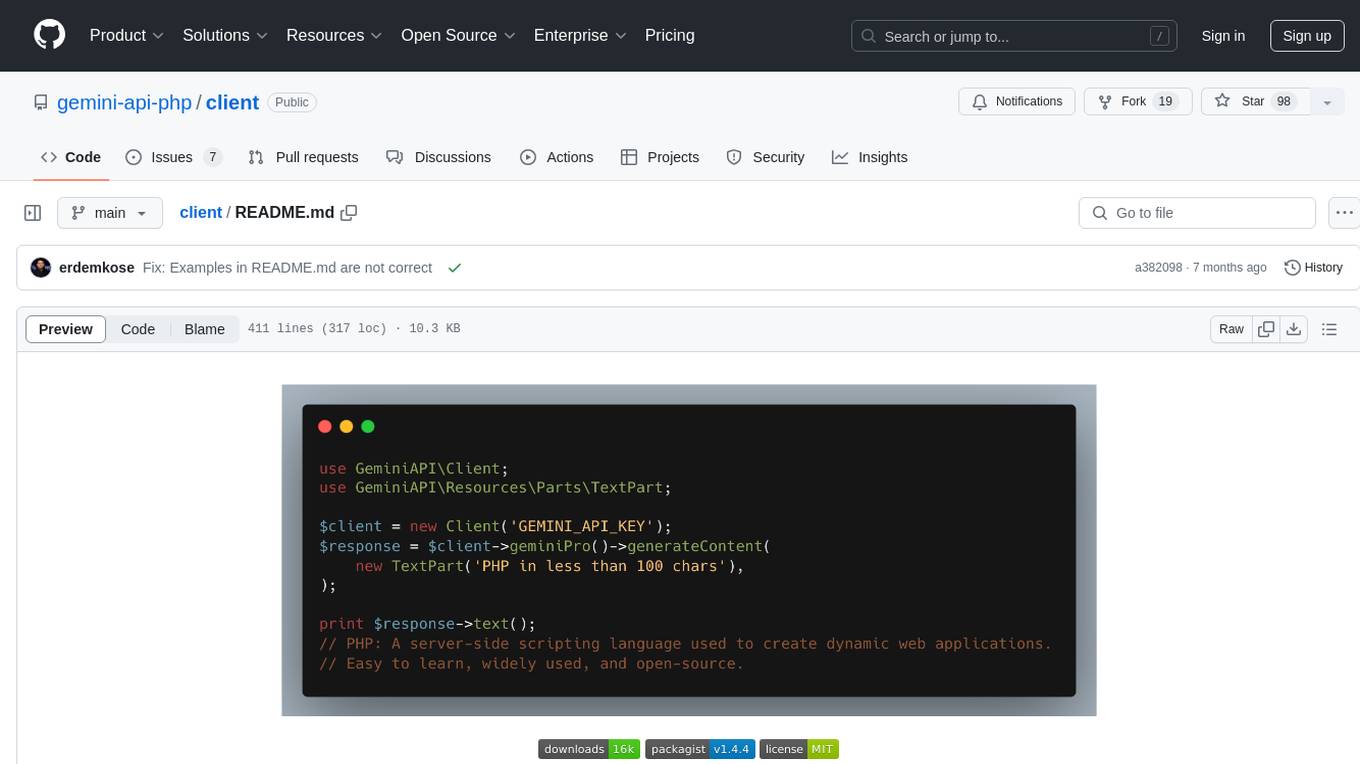

client

Gemini API PHP Client is a library that allows you to interact with Google's generative AI models, such as Gemini Pro and Gemini Pro Vision. It provides functionalities for basic text generation, multimodal input, chat sessions, streaming responses, tokens counting, listing models, and advanced usages like safety settings and custom HTTP client usage. The library requires an API key to access Google's Gemini API and can be installed using Composer. It supports various features like generating content, starting chat sessions, embedding content, counting tokens, and listing available models.

jvm-openai

jvm-openai is a minimalistic unofficial OpenAI API client for the JVM, written in Java. It serves as a Java client for OpenAI API with a focus on simplicity and minimal dependencies. The tool provides support for various OpenAI APIs and endpoints, including Audio, Chat, Embeddings, Fine-tuning, Batch, Files, Uploads, Images, Models, Moderations, Assistants, Threads, Messages, Runs, Run Steps, Vector Stores, Vector Store Files, Vector Store File Batches, Invites, Users, Projects, Project Users, Project Service Accounts, Project API Keys, and Audit Logs. Users can easily integrate this tool into their Java projects to interact with OpenAI services efficiently.

ollama-r

The Ollama R library provides an easy way to integrate R with Ollama for running language models locally on your machine. It supports working with standard data structures for different LLMs, offers various output formats, and enables integration with other libraries/tools. The library uses the Ollama REST API and requires the Ollama app to be installed, with GPU support for accelerating LLM inference. It is inspired by Ollama Python and JavaScript libraries, making it familiar for users of those languages. The installation process involves downloading the Ollama app, installing the 'ollamar' package, and starting the local server. Example usage includes testing connection, downloading models, generating responses, and listing available models.

openai-scala-client

This is a no-nonsense async Scala client for OpenAI API supporting all the available endpoints and params including streaming, chat completion, vision, and voice routines. It provides a single service called OpenAIService that supports various calls such as Models, Completions, Chat Completions, Edits, Images, Embeddings, Batches, Audio, Files, Fine-tunes, Moderations, Assistants, Threads, Thread Messages, Runs, Run Steps, Vector Stores, Vector Store Files, and Vector Store File Batches. The library aims to be self-contained with minimal dependencies and supports API-compatible providers like Azure OpenAI, Azure AI, Anthropic, Google Vertex AI, Groq, Grok, Fireworks AI, OctoAI, TogetherAI, Cerebras, Mistral, Deepseek, Ollama, FastChat, and more.

ai-models

The `ai-models` command is a tool used to run AI-based weather forecasting models. It provides functionalities to install, run, and manage different AI models for weather forecasting. Users can easily install and run various models, customize model settings, download assets, and manage input data from different sources such as ECMWF, CDS, and GRIB files. The tool is designed to optimize performance by running on GPUs and provides options for better organization of assets and output files. It offers a range of command line options for users to interact with the models and customize their forecasting tasks.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.