arcade-ai

Arcade Tool Development Kit (TDK), Worker, Evals, and CLI

Stars: 654

Arcade AI is a developer-focused tooling and API platform designed to enhance the capabilities of LLM applications and agents. It simplifies the process of connecting agentic applications with user data and services, allowing developers to concentrate on building their applications. The platform offers prebuilt toolkits for interacting with various services, supports multiple authentication providers, and provides access to different language models. Users can also create custom toolkits and evaluate their tools using Arcade AI. Contributions are welcome, and self-hosting is possible with the provided documentation.

README:

Documentation • Tools • Quickstart • Contact Us

Arcade is a developer platform that lets you build, deploy, and manage tools for AI agents.

This repository contains the core Arcade libraries, organized as separate packages for maximum flexibility and modularity:

-

arcade-core - Core platform functionality and schemas | Source code |

pip install arcade-core| -

arcade-tdk - Tool Development Kit with the

@tooldecorator | Source code |pip install arcade-tdk| -

arcade-serve - Serving infrastructure for workers and MCP servers | Source code |

pip install arcade-serve| -

arcade-evals - Evaluation framework for testing tool performance | Source code |

pip install 'arcade-ai[evals]| -

arcade-cli - Command-line interface for the Arcade platform | Source code |

pip install arcade-ai|

To learn more about Arcade.dev, check out our documentation.

Pst. hey, you, give us a star if you like it!

For development, install all packages with dependencies using uv workspace:

# Install all packages and dev dependencies

uv sync --extra all --dev

# Or use the Makefile (includes pre-commit hooks)

make installFor production use, install individual packages as needed:

pip install arcade-ai # CLI

pip install 'arcade-ai[evals]' # CLI + Evaluation framework

pip install 'arcade-ai[all]' # CLI + Serving infra + eval framework + TDK

pip install arcade_serve # Serving infrastructure

pip install arcade-tdk # Tool Development KitUse the Makefile for standard tasks:

# Run tests

make test

# Run linting and type checking

make check

# Build all packages

make build

# See all available commands

make help-

ArcadeAI/arcade-py: The Python client for interacting with Arcade.

-

ArcadeAI/arcade-js: The JavaScript client for interacting with Arcade.

-

ArcadeAI/arcade-go: The Go client for interacting with Arcade.

- Discord: Join our Discord community for real-time support and discussions.

- GitHub: Contribute or report issues on the Arcade GitHub repository.

- Documentation: Find in-depth guides and API references at Arcade Documentation.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for arcade-ai

Similar Open Source Tools

arcade-ai

Arcade AI is a developer-focused tooling and API platform designed to enhance the capabilities of LLM applications and agents. It simplifies the process of connecting agentic applications with user data and services, allowing developers to concentrate on building their applications. The platform offers prebuilt toolkits for interacting with various services, supports multiple authentication providers, and provides access to different language models. Users can also create custom toolkits and evaluate their tools using Arcade AI. Contributions are welcome, and self-hosting is possible with the provided documentation.

langchain

LangChain is a framework for building LLM-powered applications that simplifies AI application development by chaining together interoperable components and third-party integrations. It helps developers connect LLMs to diverse data sources, swap models easily, and future-proof decisions as technology evolves. LangChain's ecosystem includes tools like LangSmith for agent evals, LangGraph for complex task handling, and LangGraph Platform for deployment and scaling. Additional resources include tutorials, how-to guides, conceptual guides, a forum, API reference, and chat support.

ai-app-lab

The ai-app-lab is a high-code Python SDK Arkitect designed for enterprise developers with professional development capabilities. It provides a toolset and workflow set for developing large model applications tailored to specific business scenarios. The SDK offers highly customizable application orchestration, quality business tools, one-stop development and hosting services, security enhancements, and AI prototype application code examples. It caters to complex enterprise development scenarios, enabling the creation of highly customized intelligent applications for various industries.

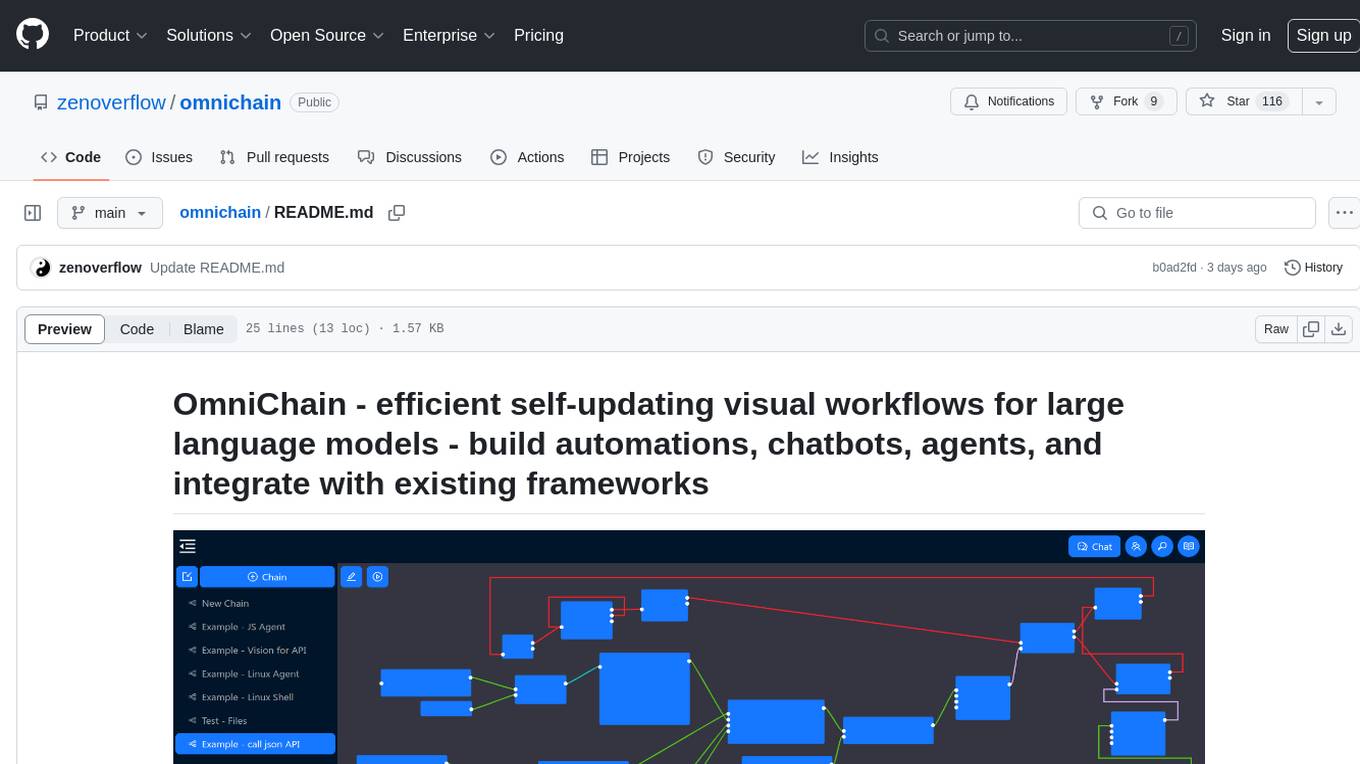

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

trubrics-sdk

Trubrics-sdk is a software development kit designed to facilitate the integration of analytics features into applications. It provides a set of tools and functionalities that enable developers to easily incorporate analytics capabilities, such as data collection, analysis, and reporting, into their software products. The SDK streamlines the process of implementing analytics solutions, allowing developers to focus on building and enhancing their applications' functionality and user experience. By leveraging trubrics-sdk, developers can quickly and efficiently integrate robust analytics features, gaining valuable insights into user behavior and application performance.

crewAI-tools

This repository provides a guide for setting up tools for crewAI agents to enhance functionality. It offers steps to equip agents with ready-to-use tools and create custom ones. Tools are expected to return strings for generating responses. Users can create tools by subclassing BaseTool or using the tool decorator. Contributions are welcome to enrich the toolset, and guidelines are provided for contributing. The development setup includes installing dependencies, activating virtual environment, setting up pre-commit hooks, running tests, static type checking, packaging, and local installation. The goal is to empower AI solutions through advanced tooling.

sdk-python

Strands Agents is a lightweight and flexible SDK that takes a model-driven approach to building and running AI agents. It supports various model providers, offers advanced capabilities like multi-agent systems and streaming support, and comes with built-in MCP server support. Users can easily create tools using Python decorators, integrate MCP servers seamlessly, and leverage multiple model providers for different AI tasks. The SDK is designed to scale from simple conversational assistants to complex autonomous workflows, making it suitable for a wide range of AI development needs.

python-sdk

Python SDK is a software development kit that provides tools and resources for developers to interact with Python programming language. It simplifies the process of integrating Python code into applications and services, offering a wide range of functionalities and libraries to streamline development workflows. With Python SDK, developers can easily access and manipulate data, create automation scripts, build web applications, and perform various tasks efficiently. It is designed to enhance the productivity and flexibility of Python developers by providing a comprehensive set of tools and utilities for software development.

tools

This repository contains a collection of various tools and utilities that can be used for different purposes. It includes scripts, programs, and resources to assist with tasks related to software development, data analysis, automation, and more. The tools are designed to be versatile and easy to use, providing solutions for common challenges faced by developers and users alike.

odoo-llm

This repository provides a comprehensive framework for integrating Large Language Models (LLMs) into Odoo. It enables seamless interaction with AI providers like OpenAI, Anthropic, Ollama, and Replicate for chat completions, text embeddings, and more within the Odoo environment. The architecture includes external AI clients connecting via `llm_mcp_server` and Odoo AI Chat with built-in chat interface. The core module `llm` offers provider abstraction, model management, and security, along with tools for CRUD operations and domain-specific tool packs. Various AI providers, infrastructure components, and domain-specific tools are available for different tasks such as content generation, knowledge base management, and AI assistants creation.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

airstate

AirState is a straightforward software development kit that enables users to integrate real-time collaboration functionalities into their web applications. With its user-friendly interface and robust capabilities, AirState simplifies the process of incorporating live collaboration features, making it an ideal choice for developers seeking to enhance the interactive elements of their projects. The SDK offers a seamless solution for creating engaging and interactive web experiences, allowing users to easily implement real-time collaboration tools without the need for extensive coding knowledge or complex configurations. By leveraging AirState, developers can streamline the development process and deliver dynamic web applications that facilitate real-time communication and collaboration among users.

LocalLLMClient

LocalLLMClient is a Swift package designed to interact with local Large Language Models (LLMs) on Apple platforms. It supports GGUF, MLX models, and the FoundationModels framework, providing streaming API, multimodal capabilities, and tool calling functionalities. Users can easily integrate this tool to work with various models for text generation and processing. The package also includes advanced features for low-level API control and multimodal image processing. LocalLLMClient is experimental and subject to API changes, offering support for iOS, macOS, and Linux platforms.

vibe

Vibe Design System is a collection of packages for React.js development, providing components, styles, and guidelines to streamline the development process and enhance user experience. It includes a Core component library, Icons library, Testing utilities, Codemods, and more. The system also features an MCP server for intelligent assistance with component APIs, usage examples, icons, and best practices. Vibe 2 is no longer actively maintained, with users encouraged to upgrade to Vibe 3 for the latest improvements and ongoing support.

AimRT

AimRT is a basic runtime framework for modern robotics, developed in modern C++ with lightweight and easy deployment. It integrates research and development for robot applications in various deployment scenarios, providing debugging tools and observability support. AimRT offers a plug-in development interface compatible with ROS2, HTTP, Grpc, and other ecosystems for progressive system upgrades.

For similar tasks

arcade-ai

Arcade AI is a developer-focused tooling and API platform designed to enhance the capabilities of LLM applications and agents. It simplifies the process of connecting agentic applications with user data and services, allowing developers to concentrate on building their applications. The platform offers prebuilt toolkits for interacting with various services, supports multiple authentication providers, and provides access to different language models. Users can also create custom toolkits and evaluate their tools using Arcade AI. Contributions are welcome, and self-hosting is possible with the provided documentation.

prism

Prism is a Laravel package that simplifies the integration of Large Language Models (LLMs) into applications. It offers a user-friendly interface for text generation, managing multi-step conversations, and leveraging AI tools from different providers. With Prism, developers can focus on creating exceptional AI applications without being bogged down by technical complexities.

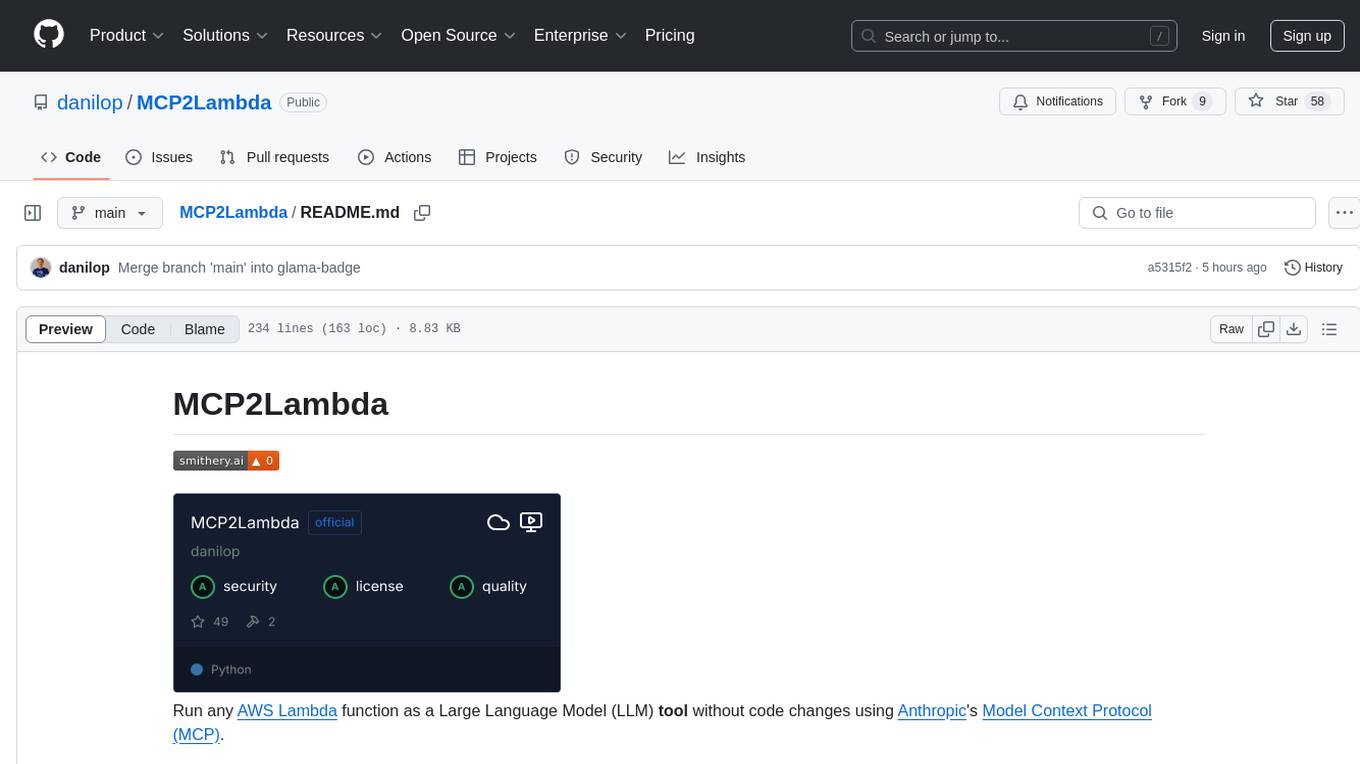

MCP2Lambda

MCP2Lambda is a server that acts as a bridge between MCP clients and AWS Lambda functions, allowing generative AI models to access and run Lambda functions as tools. It enables Large Language Models (LLMs) to interact with Lambda functions without code changes, providing access to private resources, AWS services, private networks, and the public internet. The server supports autodiscovery of Lambda functions and their invocation by name with parameters. It standardizes AI model access to external tools using the MCP protocol.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.