baibot

🤖 A Matrix bot for using different capabilities (text-generation, text-to-speech, speech-to-text, image-generation, etc.) of AI / Large Language Models (OpenAI, Anthropic, etc.)

Stars: 155

Baibot is a versatile chatbot framework designed to simplify the process of creating and deploying chatbots. It provides a user-friendly interface for building custom chatbots with various functionalities such as natural language processing, conversation flow management, and integration with external APIs. Baibot is highly customizable and can be easily extended to suit different use cases and industries. With Baibot, developers can quickly create intelligent chatbots that can interact with users in a seamless and engaging manner, enhancing user experience and automating customer support processes.

README:

🤖 baibot is an AI (Large Language Model) bot for Matrix built by etke.cc (managed Matrix servers).

The name is pronounced 'bye'-bot and is a play on AI, referencing the fictional character 🇧🇬 Bai Ganyo.

It's designed as a more private and featureful alternative to matrix-chatgpt-bot. It's influenced by chaz, but does not use the AIChat CLI tool and instead does everything in-process, without forking.

-

🎨 Encourages provider choice (Anthropic, Groq, LocalAI, OpenAI and ☁️ many more) as well as mixing & matching models:

-

Supports different use purposes (depending on the ☁️ provider & model):

- 💬 text-generation: communicating with you via text (though certain models may "see" images as well)

- 🦻 speech-to-text: turning your voice messages into text

- 🗣️ text-to-speech: turning bot or users text messages into voice messages

- 🖌️ image-generation: creating and editing images based on instructions

-

🪄 Supports seamless voice interaction (turning user voice messages into text, answering in text, then turning that text back into voice)

-

🦻 Supports transcribe-only mode (turning user voice messages into text, without doing text-generation)

-

🗣️ Supports text-to-speech-only mode (turning user text messages into voice, without doing text-generation)

-

🔒 Supports encryption for Matrix communication and Account-Data-stored configuration

-

♻️ Supports context-management handling on some models (automatically adjusting the message history length, etc.)

-

🛠️ Allows customizing much of the bot's configuration at runtime (using commands sent via chat)

-

👥 Actively maintained by the team at etke.cc

You can find more screenshots on the 🌟 Features and other 📚 Documentation pages, as well as in the docs/screenshots directory.

🗲 For a quick experiment, you can refer to the 🧑💻 development documentation which contains information on how to build and run the bot (and its various dependency services) locally.

For a real installation, see the 🚀 Installation documentation which contains information on 🐋 Running in a container and 🖥️️️️️ Running a binary.

See the bot's 📚 documentation for more information on how to use and configure the bot.

See the bot's 🧑💻 development documentation for more information on how to develop on the bot.

This bot evolves over time, sometimes with backward-incompatible changes.

When updating the bot, refer to the changelog to catch up with what's new.

-

Matrix room: #baibot:etke.cc

-

GitHub issues: etkecc/baibot/issues

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for baibot

Similar Open Source Tools

baibot

Baibot is a versatile chatbot framework designed to simplify the process of creating and deploying chatbots. It provides a user-friendly interface for building custom chatbots with various functionalities such as natural language processing, conversation flow management, and integration with external APIs. Baibot is highly customizable and can be easily extended to suit different use cases and industries. With Baibot, developers can quickly create intelligent chatbots that can interact with users in a seamless and engaging manner, enhancing user experience and automating customer support processes.

chat.md

This repository contains a chatbot tool that utilizes natural language processing to interact with users. The tool is designed to understand and respond to user input in a conversational manner, providing information and assistance. It can be integrated into various applications to enhance user experience and automate customer support. The chatbot tool is user-friendly and customizable, making it suitable for businesses looking to improve customer engagement and streamline communication.

chatmcp

Chatmcp is a chatbot framework for building conversational AI applications. It provides a flexible and extensible platform for creating chatbots that can interact with users in a natural language. With Chatmcp, developers can easily integrate chatbot functionality into their applications, enabling users to communicate with the system through text-based conversations. The framework supports various natural language processing techniques and allows for the customization of chatbot behavior and responses. Chatmcp simplifies the development of chatbots by providing a set of pre-built components and tools that streamline the creation process. Whether you are building a customer support chatbot, a virtual assistant, or a chat-based game, Chatmcp offers the necessary features and capabilities to bring your conversational AI ideas to life.

duckduckgo-ai-chat

This repository contains a chatbot tool powered by AI technology. The chatbot is designed to interact with users in a conversational manner, providing information and assistance on various topics. Users can engage with the chatbot to ask questions, seek recommendations, or simply have a casual conversation. The AI technology behind the chatbot enables it to understand natural language inputs and provide relevant responses, making the interaction more intuitive and engaging. The tool is versatile and can be customized for different use cases, such as customer support, information retrieval, or entertainment purposes. Overall, the chatbot offers a user-friendly and interactive experience, leveraging AI to enhance communication and engagement.

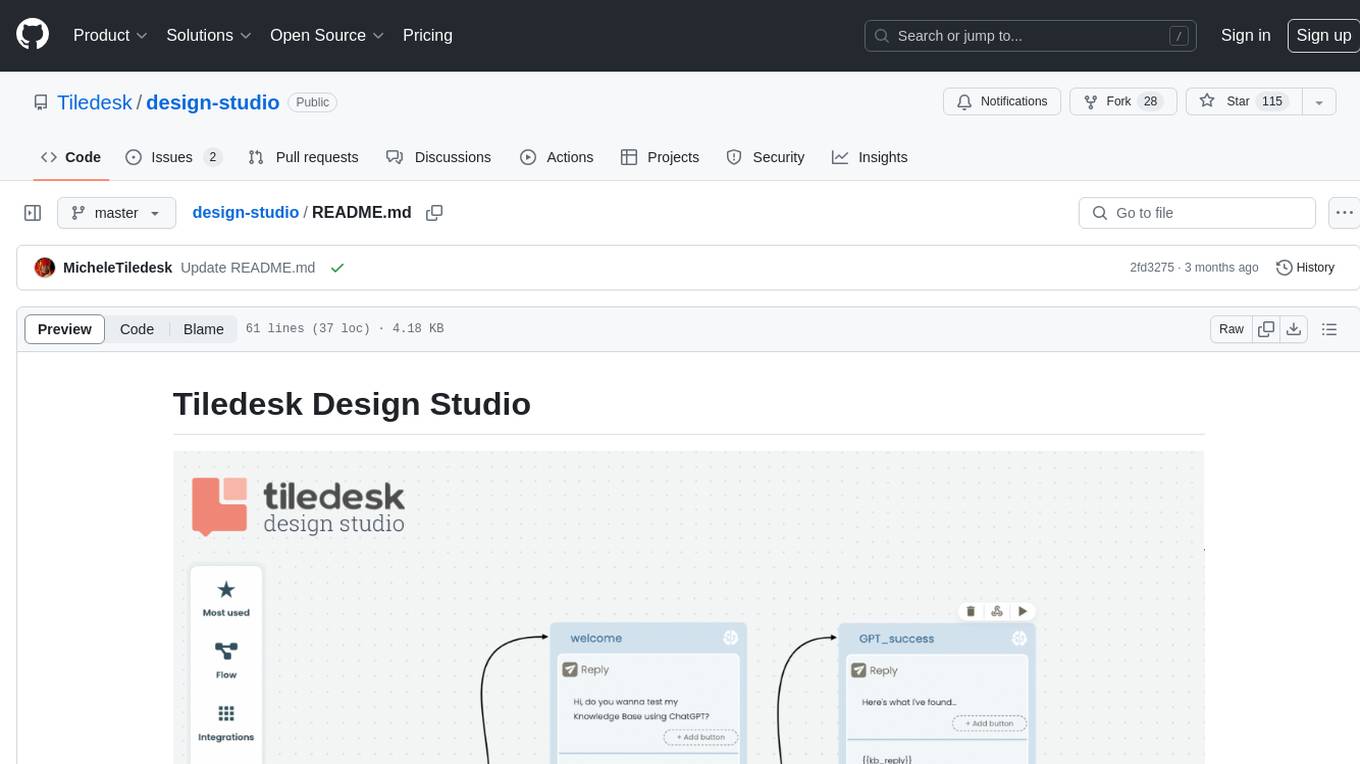

design-studio

Tiledesk Design Studio is an open-source, no-code development platform for creating chatbots and conversational apps. It offers a user-friendly, drag-and-drop interface with pre-ready actions and integrations. The platform combines the power of LLM/GPT AI with a flexible 'graph' approach for creating conversations and automations with ease. Users can automate customer conversations, prototype conversations, integrate ChatGPT, enhance user experience with multimedia, provide personalized product recommendations, set conditions, use random replies, connect to other tools like HubSpot CRM, integrate with WhatsApp, send emails, and seamlessly enhance existing setups.

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

humanlayer

HumanLayer is a Python toolkit designed to enable AI agents to interact with humans in tool-based and asynchronous workflows. By incorporating humans-in-the-loop, agentic tools can access more powerful and meaningful tasks. The toolkit provides features like requiring human approval for function calls, human as a tool for contacting humans, omni-channel contact capabilities, granular routing, and support for various LLMs and orchestration frameworks. HumanLayer aims to ensure human oversight of high-stakes function calls, making AI agents more reliable and safe in executing impactful tasks.

llama.ui

llama.ui is an open-source desktop application that provides a beautiful, user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with powerful quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with indexedDB storage, rich UI components including markdown rendering and file attachments, advanced features like PWA support and customizable generation parameters, and is privacy-focused with all data stored locally in the browser.

FastGPT

FastGPT is a knowledge base Q&A system based on the LLM large language model, providing out-of-the-box data processing, model calling and other capabilities. At the same time, you can use Flow to visually arrange workflows to achieve complex Q&A scenarios!

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

promptl

Promptl is a versatile command-line tool designed to streamline the process of creating and managing prompts for user input in various programming projects. It offers a simple and efficient way to prompt users for information, validate their input, and handle different scenarios based on their responses. With Promptl, developers can easily integrate interactive prompts into their scripts, applications, and automation workflows, enhancing user experience and improving overall usability. The tool provides a range of customization options and features, making it suitable for a wide range of use cases across different programming languages and environments.

odoo-llm

This repository provides a comprehensive framework for integrating Large Language Models (LLMs) into Odoo. It enables seamless interaction with AI providers like OpenAI, Anthropic, Ollama, and Replicate for chat completions, text embeddings, and more within the Odoo environment. The architecture includes external AI clients connecting via `llm_mcp_server` and Odoo AI Chat with built-in chat interface. The core module `llm` offers provider abstraction, model management, and security, along with tools for CRUD operations and domain-specific tool packs. Various AI providers, infrastructure components, and domain-specific tools are available for different tasks such as content generation, knowledge base management, and AI assistants creation.

MaiBot

MaiBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, with LLM providing conversation abilities, MongoDB for data persistence support, and NapCat as the QQ protocol endpoint support. The project is in active development stage, with features like chat functionality, emoji functionality, schedule management, memory function, knowledge base function, and relationship function planned for future updates. The project aims to create a 'life form' active in QQ group chats, focusing on companionship and creating a more human-like presence rather than a perfect assistant. The application generates content from AI models, so users are advised to discern carefully and not use it for illegal purposes.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

For similar tasks

basdonax-ai-rag

Basdonax AI RAG v1.0 is a repository that contains all the necessary resources to create your own AI-powered secretary using the RAG from Basdonax AI. It leverages open-source models from Meta and Microsoft, namely 'Llama3-7b' and 'Phi3-4b', allowing users to upload documents and make queries. This tool aims to simplify life for individuals by harnessing the power of AI. The installation process involves choosing between different data models based on GPU capabilities, setting up Docker, pulling the desired model, and customizing the assistant prompt file. Once installed, users can access the RAG through a local link and enjoy its functionalities.

TableLLM

TableLLM is a large language model designed for efficient tabular data manipulation tasks in real office scenarios. It can generate code solutions or direct text answers for tasks like insert, delete, update, query, merge, and chart operations on tables embedded in spreadsheets or documents. The model has been fine-tuned based on CodeLlama-7B and 13B, offering two scales: TableLLM-7B and TableLLM-13B. Evaluation results show its performance on benchmarks like WikiSQL, Spider, and self-created table operation benchmark. Users can use TableLLM for code and text generation tasks on tabular data.

awesome-agents

Awesome Agents is a curated list of open source AI agents designed for various tasks such as private interactions with documents, chat implementations, autonomous research, human-behavior simulation, code generation, HR queries, domain-specific research, and more. The agents leverage Large Language Models (LLMs) and other generative AI technologies to provide solutions for complex tasks and projects. The repository includes a diverse range of agents for different use cases, from conversational chatbots to AI coding engines, and from autonomous HR assistants to vision task solvers.

Lumi-AI

Lumi AI is a friendly AI sidekick with a human-like personality that offers features like file upload and analysis, web search, local chat storage, custom instructions, changeable conversational style, enhanced context retention, voice query input, and various tools. The project has been developed with contributions from a team of developers, designers, and testers, and is licensed under Apache 2.0 and MIT licenses.

awesome-rag

Awesome RAG is a curated list of retrieval-augmented generation (RAG) in large language models. It includes papers, surveys, general resources, lectures, talks, tutorials, workshops, tools, and other collections related to retrieval-augmented generation. The repository aims to provide a comprehensive overview of the latest advancements, techniques, and applications in the field of RAG.

ai2-scholarqa-lib

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across a corpus and synthesizing an organized report with evidence for each claim. It consists of a retrieval component and a three-step generator pipeline. The retrieval component fetches relevant evidence passages using the Semantic Scholar public API and reranks them. The generator pipeline includes quote extraction, planning and clustering, and summary generation. The system is powered by the ScholarQA class, which includes components like PaperFinder and MultiStepQAPipeline. It requires environment variables for Semantic Scholar API and LLMs, and can be run as local docker containers or embedded into another application as a Python package.

baibot

Baibot is a versatile chatbot framework designed to simplify the process of creating and deploying chatbots. It provides a user-friendly interface for building custom chatbots with various functionalities such as natural language processing, conversation flow management, and integration with external APIs. Baibot is highly customizable and can be easily extended to suit different use cases and industries. With Baibot, developers can quickly create intelligent chatbots that can interact with users in a seamless and engaging manner, enhancing user experience and automating customer support processes.

openclaw

OpenClaw is a personal AI assistant that runs on your own devices, answering you on various channels like WhatsApp, Telegram, Slack, Discord, and more. It can speak and listen on different platforms and render a live Canvas you control. The Gateway serves as the control plane, while the assistant is the main product. It provides a local, fast, and always-on single-user assistant experience. The preferred setup involves running the onboarding wizard in your terminal to guide you through setting up the gateway, workspace, channels, and skills. The tool supports various models and authentication methods, with a focus on security and privacy.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

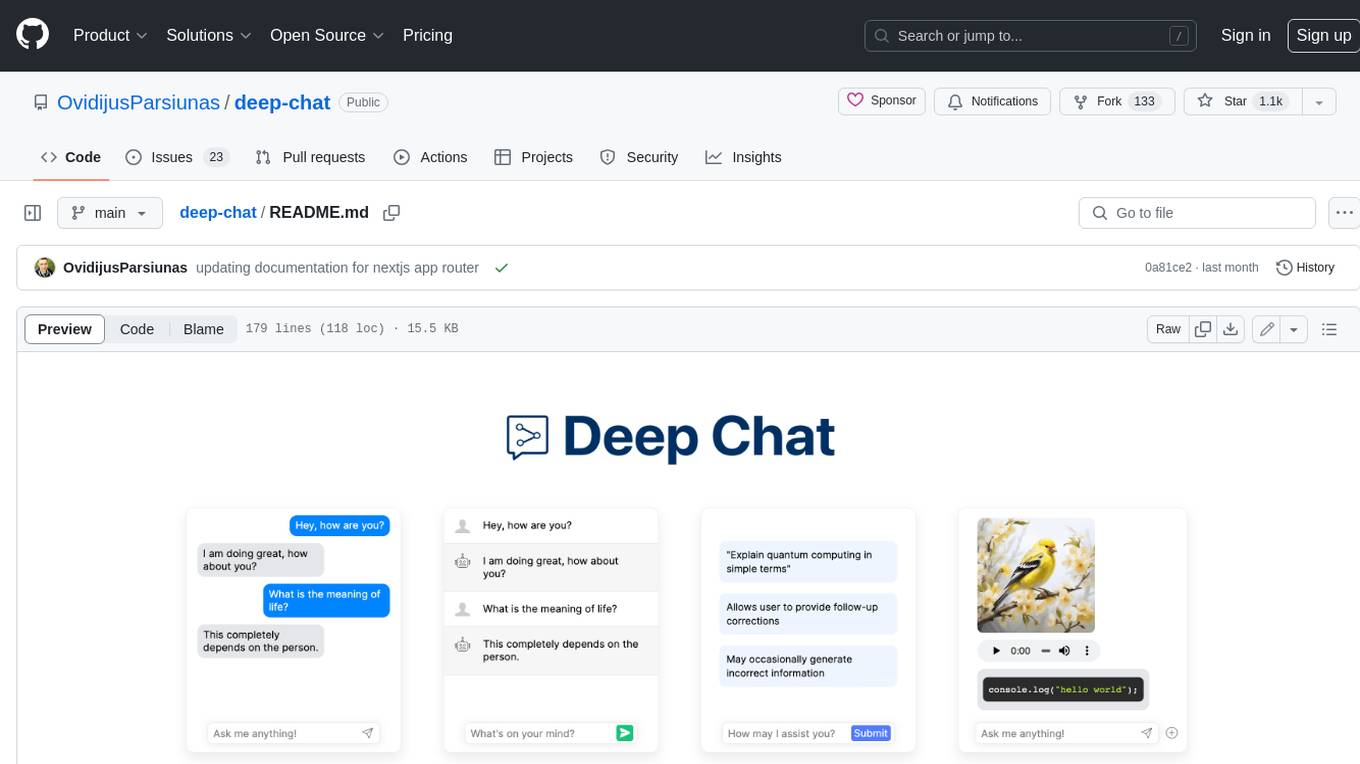

deep-chat

Deep Chat is a fully customizable AI chat component that can be injected into your website with minimal to no effort. Whether you want to create a chatbot that leverages popular APIs such as ChatGPT or connect to your own custom service, this component can do it all! Explore deepchat.dev to view all of the available features, how to use them, examples and more!

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

chatgpt-web

ChatGPT Web is a web application that provides access to the ChatGPT API. It offers two non-official methods to interact with ChatGPT: through the ChatGPTAPI (using the `gpt-3.5-turbo-0301` model) or through the ChatGPTUnofficialProxyAPI (using a web access token). The ChatGPTAPI method is more reliable but requires an OpenAI API key, while the ChatGPTUnofficialProxyAPI method is free but less reliable. The application includes features such as user registration and login, synchronization of conversation history, customization of API keys and sensitive words, and management of users and keys. It also provides a user interface for interacting with ChatGPT and supports multiple languages and themes.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

UFO

UFO is a UI-focused dual-agent framework to fulfill user requests on Windows OS by seamlessly navigating and operating within individual or spanning multiple applications.