tools

A set of tools that gives agents powerful capabilities.

Stars: 935

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.

README:


Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more.

- 📁 File Operations - Read, write, and edit files with syntax highlighting and intelligent modifications

- 🖥️ Shell Integration - Execute and interact with shell commands securely

- 🧠 Memory - Store user and agent memories across agent runs to provide personalized experiences with Mem0, Amazon Bedrock Knowledge Bases, Elasticsearch, and MongoDB Atlas

- 🕸️ Web Infrastructure - Perform web searches, extract page content, and crawl websites with Tavily and Exa-powered tools

- 🌐 HTTP Client - Make API requests with comprehensive authentication support

- 💬 Slack Client - Real-time Slack events, message processing, and Slack API access

- 🐍 Python Execution - Run Python code snippets with state persistence, user confirmation for code execution, and safety features

- 🧮 Mathematical Tools - Perform advanced calculations with symbolic math capabilities

- ☁️ AWS Integration - Seamless access to AWS services

- 🖼️ Image Processing - Generate and process images for AI applications

- 🎥 Video Processing - Use models and agents to generate dynamic videos

- 🎙️ Audio Output - Enable models to generate audio and speak

- 🔄 Environment Management - Handle environment variables safely

- 📝 Journaling - Create and manage structured logs and journals

- ⏱️ Task Scheduling - Schedule and manage cron jobs

- 🧠 Advanced Reasoning - Tools for complex thinking and reasoning capabilities

- 🐝 Swarm Intelligence - Coordinate multiple AI agents for parallel problem solving with shared memory

- 🔌 Dynamic MCP Client - ⚠️ Dynamically connect to external MCP servers and load remote tools (use with caution - see security warnings)

- 🔄 Multiple tools in Parallel - Call multiple other tools at the same time in parallel with Batch Tool

- 🔍 Browser Tool - Gives an agent the ability to perform automated actions in a browser (Chromium)

- 📈 Diagram - Create AWS cloud diagrams, basic diagrams, or UML diagrams using Python libraries

- 📰 RSS Feed Manager - Subscribe, fetch, and process RSS feeds with content filtering and persistent storage

- 🖱️ Computer Tool - Automate desktop actions including mouse movements, keyboard input, screenshots, and application management

pip install strands-agents-tools

To install the dependencies for optional tools:

pip install "strands-agents-tools[mem0_memory,use_browser,rss,use_computer]"

# Clone the repository

git clone https://github.com/strands-agents/tools.git

cd tools

# Create and activate virtual environment

python3 -m venv .venv

source .venv/bin/activate # On Windows: .venv\Scripts\activate

# Install in development mode

pip install -e ".[dev]"

# Install pre-commit hooks

pre-commit install

Below is a comprehensive table of all available tools, how to use them with an agent, and typical use cases:

| Tool | Agent Usage | Use Case |
|---|---|---|
| a2a_client | provider = A2AClientToolProvider(known_agent_urls=["http://localhost:9000"]); agent = Agent(tools=provider.tools) | Discover and communicate with A2A-compliant agents, send messages between agents |
| file_read | agent.tool.file_read(path="path/to/file.txt") | Reading configuration files, parsing code files, loading datasets |
| file_write | agent.tool.file_write(path="path/to/file.txt", content="file content") | Writing results to files, creating new files, saving output data |
| editor | agent.tool.editor(command="view", path="path/to/file.py") | Advanced file operations like syntax highlighting, pattern replacement, and multi-file edits |
| shell* | agent.tool.shell(command="ls -la") | Executing shell commands, interacting with the operating system, running scripts |
| http_request | agent.tool.http_request(method="GET", url="https://api.example.com/data") | Making API calls, fetching web data, sending data to external services |
| tavily_search | agent.tool.tavily_search(query="What is artificial intelligence?", search_depth="advanced") | Real-time web search optimized for AI agents with a variety of custom parameters |
| tavily_extract | agent.tool.tavily_extract(urls=["www.tavily.com"], extract_depth="advanced") | Extract clean, structured content from web pages with advanced processing and noise removal |
| tavily_crawl | agent.tool.tavily_crawl(url="www.tavily.com", max_depth=2, instructions="Find API docs") | Crawl websites intelligently starting from a base URL with filtering and extraction |
| tavily_map | agent.tool.tavily_map(url="www.tavily.com", max_depth=2, instructions="Find all pages") | Map website structure and discover URLs starting from a base URL without content extraction |
| exa_search | agent.tool.exa_search(query="Best project management tools", text=True) | Intelligent web search with auto mode (default) that combines neural and keyword search for optimal results |
| exa_get_contents | agent.tool.exa_get_contents(urls=["https://example.com/article"], text=True, summary={"query": "key points"}) | Extract full content and summaries from specific URLs with live crawling fallback |
| python_repl* | agent.tool.python_repl(code="import pandas as pd\ndf = pd.read_csv('data.csv')\nprint(df.head())") | Running Python code snippets, data analysis, executing complex logic with user confirmation for security |
| calculator | agent.tool.calculator(expression="2 * sin(pi/4) + log(e**2)") | Performing mathematical operations, symbolic math, equation solving |
| code_interpreter | code_interpreter = AgentCoreCodeInterpreter(region="us-west-2"); agent = Agent(tools=[code_interpreter.code_interpreter]) | Execute code in isolated sandbox environments with multi-language support (Python, JavaScript, TypeScript), persistent sessions, and file operations |
| use_aws | agent.tool.use_aws(service_name="s3", operation_name="list_buckets", parameters={}, region="us-west-2") | Interacting with AWS services, cloud resource management |
| retrieve | agent.tool.retrieve(text="What is STRANDS?") | Retrieving information from Amazon Bedrock Knowledge Bases with optional metadata |
| nova_reels | agent.tool.nova_reels(action="create", text="A cinematic shot of mountains", s3_bucket="my-bucket") | Create high-quality videos using Amazon Bedrock Nova Reel with configurable parameters via environment variables |
| agent_core_memory | agent.tool.agent_core_memory(action="record", content="Hello, I like vegetarian food") | Store and retrieve memories with Amazon Bedrock Agent Core Memory service |
| mem0_memory | agent.tool.mem0_memory(action="store", content="Remember I like to play tennis", user_id="alex") | Store user and agent memories across agent runs to provide personalized experience |
| bright_data | agent.tool.bright_data(action="scrape_as_markdown", url="https://example.com") | Web scraping, search queries, screenshot capture, and structured data extraction from websites and different data feeds |
| memory | agent.tool.memory(action="retrieve", query="product features") | Store, retrieve, list, and manage documents in Amazon Bedrock Knowledge Bases with configurable parameters via environment variables |
| environment | agent.tool.environment(action="list", prefix="AWS_") | Managing environment variables, configuration management |
| generate_image_stability | agent.tool.generate_image_stability(prompt="A tranquil pool") | Creating images using Stability AI models |
| generate_image | agent.tool.generate_image(prompt="A sunset over mountains") | Creating AI-generated images for various applications |
| image_reader | agent.tool.image_reader(image_path="path/to/image.jpg") | Processing and reading image files for AI analysis |
| journal | agent.tool.journal(action="write", content="Today's progress notes") | Creating structured logs, maintaining documentation |
| think | agent.tool.think(thought="Complex problem to analyze", cycle_count=3) | Advanced reasoning, multi-step thinking processes |
| load_tool | agent.tool.load_tool(path="path/to/custom_tool.py", name="custom_tool") | Dynamically loading custom tools and extensions |
| swarm | agent.tool.swarm(task="Analyze this problem", swarm_size=3, coordination_pattern="collaborative") | Coordinating multiple AI agents to solve complex problems through collective intelligence |
| current_time | agent.tool.current_time(timezone="US/Pacific") | Get the current time in ISO 8601 format for a specified timezone |
| sleep | agent.tool.sleep(seconds=5) | Pause execution for the specified number of seconds, interruptible with SIGINT (Ctrl+C) |
| agent_graph | agent.tool.agent_graph(agents=["agent1", "agent2"], connections=[{"from": "agent1", "to": "agent2"}]) | Create and visualize agent relationship graphs for complex multi-agent systems |
| cron* | agent.tool.cron(action="schedule", name="task", schedule="0 * * * *", command="backup.sh") | Schedule and manage recurring tasks with cron job syntax (does not work on Windows) |
| slack | agent.tool.slack(action="post_message", channel="general", text="Hello team!") | Interact with Slack workspace for messaging and monitoring |
| speak | agent.tool.speak(text="Operation completed successfully", style="green", mode="polly") | Output status messages with rich formatting and optional text-to-speech |
| stop | agent.tool.stop(message="Process terminated by user request") | Gracefully terminate agent execution with custom message |
| handoff_to_user | agent.tool.handoff_to_user(message="Please confirm action", breakout_of_loop=False) | Hand off control to user for confirmation, input, or complete task handoff |
| use_llm | agent.tool.use_llm(prompt="Analyze this data", system_prompt="You are a data analyst") | Create nested AI loops with customized system prompts for specialized tasks |
| workflow | agent.tool.workflow(action="create", name="data_pipeline", steps=[{"tool": "file_read"}, {"tool": "python_repl"}]) | Define, execute, and manage multi-step automated workflows |
| mcp_client | agent.tool.mcp_client(action="connect", connection_id="my_server", transport="stdio", command="python", args=["server.py"]) | ⚠️ Dynamically connect to external MCP servers and load remote tools (use with caution - see security warnings) |
| batch | agent.tool.batch(invocations=[{"name": "current_time", "arguments": {"timezone": "Europe/London"}}, {"name": "stop", "arguments": {}}]) | Call multiple other tools in parallel |
| browser | browser = LocalChromiumBrowser(); agent = Agent(tools=[browser.browser]) | Web scraping, automated testing, form filling, web automation tasks |
| diagram | agent.tool.diagram(diagram_type="cloud", nodes=[{"id": "s3", "type": "S3"}], edges=[]) | Create AWS cloud architecture diagrams, network diagrams, graphs, and UML diagrams (all 14 types) |
| rss | agent.tool.rss(action="subscribe", url="https://example.com/feed.xml", feed_id="tech_news") | Manage RSS feeds: subscribe, fetch, read, search, and update content from various sources |
| use_computer | agent.tool.use_computer(action="click", x=100, y=200, app_name="Chrome") | Desktop automation, GUI interaction, screen capture |
| search_video | agent.tool.search_video(query="people discussing AI") | Semantic video search using TwelveLabs' Marengo model |
| chat_video | agent.tool.chat_video(prompt="What are the main topics?", video_id="video_123") | Interactive video analysis using TwelveLabs' Pegasus model |
| mongodb_memory | agent.tool.mongodb_memory(action="record", content="User prefers vegetarian pizza", connection_string="mongodb+srv://...", database_name="memories") | Store and retrieve memories using MongoDB Atlas with semantic search via AWS Bedrock Titan embeddings |

* These tools do not work on Windows.
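Since the starred tools are unavailable on Windows, a cross-platform script may want to filter its tool list before building an agent. The following is a minimal sketch using only the standard library; `POSIX_ONLY_TOOLS` and `supported_tool_names` are hypothetical names introduced for illustration and are not part of the package.

```python
import platform

# Tool names marked with * in the table above (hypothetical helper constant).
POSIX_ONLY_TOOLS = {"shell", "python_repl", "cron"}


def supported_tool_names(requested, system=None):
    """Return the requested tool names that can run on the given OS."""
    system = system or platform.system()
    if system == "Windows":
        # Drop the tools the table marks as unsupported on Windows.
        return [name for name in requested if name not in POSIX_ONLY_TOOLS]
    return list(requested)


# Pass system explicitly to see what a Windows host would keep.
print(supported_tool_names(["file_read", "shell", "cron"], system="Windows"))
```

The filtered names can then be mapped to the corresponding imports from `strands_tools` when constructing the agent.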

from strands import Agent

from strands_tools import file_read, file_write, editor

agent = Agent(tools=[file_read, file_write, editor])

agent.tool.file_read(path="config.json")

agent.tool.file_write(path="output.txt", content="Hello, world!")

agent.tool.editor(command="view", path="script.py")

The mcp_client tool differs from the static MCP server implementation in the Strands SDK (see MCP Tools Documentation), which uses pre-configured, trusted MCP servers.
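Because a dynamic MCP client connects to servers chosen at runtime, one way to reduce risk is to check URLs against an allowlist before connecting. This is a sketch under stated assumptions, not a feature of the tool: `TRUSTED_MCP_HOSTS` and `is_trusted_mcp_url` are hypothetical names, and the hosts listed are placeholders.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of MCP server hosts you have reviewed and trust.
TRUSTED_MCP_HOSTS = {"localhost", "api.example.com"}


def is_trusted_mcp_url(server_url, trusted_hosts=TRUSTED_MCP_HOSTS):
    """Return True only for http(s) URLs whose host is on the allowlist."""
    parsed = urlparse(server_url)
    return parsed.scheme in {"http", "https"} and parsed.hostname in trusted_hosts


for url in ("http://localhost:8080/sse", "https://unknown.example.net/mcp"):
    print(url, is_trusted_mcp_url(url))
```

A caller could run this check and skip the `connect` action whenever it returns False.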

from strands import Agent

from strands_tools import mcp_client

agent = Agent(tools=[mcp_client])

# Connect to a custom MCP server via stdio

agent.tool.mcp_client(

action="connect",

connection_id="my_tools",

transport="stdio",

command="python",

args=["my_mcp_server.py"]

)

# List available tools on the server

tools = agent.tool.mcp_client(

action="list_tools",

connection_id="my_tools"

)

# Call a tool from the MCP server

result = agent.tool.mcp_client(

action="call_tool",

connection_id="my_tools",

tool_name="calculate",

tool_args={"x": 10, "y": 20}

)

# Connect to a SSE-based server

agent.tool.mcp_client(

action="connect",

connection_id="web_server",

transport="sse",

server_url="http://localhost:8080/sse"

)

# Connect to a streamable HTTP server

agent.tool.mcp_client(

action="connect",

connection_id="http_server",

transport="streamable_http",

server_url="https://api.example.com/mcp",

headers={"Authorization": "Bearer token"},

timeout=60

)

# Load MCP tools into agent's registry for direct access

# ⚠️ WARNING: This loads external tools directly into the agent

agent.tool.mcp_client(

action="load_tools",

connection_id="my_tools"

)

# Now you can call MCP tools directly as: agent.tool.calculate(x=10, y=20)

Note: shell does not work on Windows.

from strands import Agent

from strands_tools import shell

agent = Agent(tools=[shell])

# Execute a single command

result = agent.tool.shell(command="ls -la")

# Execute a sequence of commands

results = agent.tool.shell(command=["mkdir -p test_dir", "cd test_dir", "touch test.txt"])

# Execute commands with error handling

agent.tool.shell(command="risky-command", ignore_errors=True)

import json  # needed for json.dumps in the POST example below

from strands import Agent

from strands_tools import http_request

agent = Agent(tools=[http_request])

# Make a simple GET request

response = agent.tool.http_request(

method="GET",

url="https://api.example.com/data"

)

# POST request with authentication

response = agent.tool.http_request(

method="POST",

url="https://api.example.com/resource",

headers={"Content-Type": "application/json"},

body=json.dumps({"key": "value"}),

auth_type="Bearer",

auth_token="your_token_here"

)

# Convert HTML webpages to markdown for better readability

response = agent.tool.http_request(

method="GET",

url="https://example.com/article",

convert_to_markdown=True

)

from strands import Agent

from strands_tools.tavily import (

tavily_search, tavily_extract, tavily_crawl, tavily_map

)

# For async usage, call the corresponding *_async function with await.

# Synchronous usage

agent = Agent(tools=[tavily_search, tavily_extract, tavily_crawl, tavily_map])

# Real-time web search

result = agent.tool.tavily_search(

query="Latest developments in renewable energy",

search_depth="advanced",

topic="news",

max_results=10,

include_raw_content=True

)

# Extract content from multiple URLs

result = agent.tool.tavily_extract(

urls=["www.tavily.com", "www.apple.com"],

extract_depth="advanced",

format="markdown"

)

# Advanced crawl with instructions and filtering

result = agent.tool.tavily_crawl(

url="www.tavily.com",

max_depth=2,

limit=50,

instructions="Find all API documentation and developer guides",

extract_depth="advanced",

include_images=True

)

# Basic website mapping

result = agent.tool.tavily_map(url="www.tavily.com")

from strands import Agent

from strands_tools.exa import exa_search, exa_get_contents

agent = Agent(tools=[exa_search, exa_get_contents])

# Basic search (auto mode is default and recommended)

result = agent.tool.exa_search(

query="Best project management software",

text=True

)

# Company-specific search when needed

result = agent.tool.exa_search(

query="Anthropic AI safety research",

category="company",

include_domains=["anthropic.com"],

num_results=5,

summary={"query": "key research areas and findings"}

)

# News search with date filtering

result = agent.tool.exa_search(

query="AI regulation policy updates",

category="news",

start_published_date="2024-01-01T00:00:00.000Z",

text=True

)

# Get detailed content from specific URLs

result = agent.tool.exa_get_contents(

urls=[

"https://example.com/blog-post",

"https://github.com/microsoft/semantic-kernel"

],

text={"maxCharacters": 5000, "includeHtmlTags": False},

summary={

"query": "main points and practical applications"

},

subpages=2,

extras={"links": 5, "imageLinks": 2}

)

# Structured summary with JSON schema

result = agent.tool.exa_get_contents(

urls=["https://example.com/article"],

summary={

"query": "main findings and recommendations",

"schema": {

"type": "object",

"properties": {

"main_points": {"type": "string", "description": "Key points from the article"},

"recommendations": {"type": "string", "description": "Suggested actions or advice"},

"conclusion": {"type": "string", "description": "Overall conclusion"},

"relevance": {"type": "string", "description": "Why this matters"}

},

"required": ["main_points", "conclusion"]

}

}

)

Note: python_repl does not work on Windows.

from strands import Agent

from strands_tools import python_repl

agent = Agent(tools=[python_repl])

# Execute Python code with state persistence

result = agent.tool.python_repl(code="""

import pandas as pd

# Load and process data

data = pd.read_csv('data.csv')

processed = data.groupby('category').mean()

processed.head()

""")

from strands import Agent

from strands_tools.code_interpreter import AgentCoreCodeInterpreter

# Create the code interpreter tool

bedrock_agent_core_code_interpreter = AgentCoreCodeInterpreter(region="us-west-2")

agent = Agent(tools=[bedrock_agent_core_code_interpreter.code_interpreter])

# Create a session

agent.tool.code_interpreter({

"action": {

"type": "initSession",

"description": "Data analysis session",

"session_name": "analysis-session"

}

})

# Execute Python code

agent.tool.code_interpreter({

"action": {

"type": "executeCode",

"session_name": "analysis-session",

"code": "print('Hello from sandbox!')",

"language": "python"

}

})

from strands import Agent

from strands_tools import swarm

agent = Agent(tools=[swarm])

# Create a collaborative swarm of agents to tackle a complex problem

result = agent.tool.swarm(

task="Generate creative solutions for reducing plastic waste in urban areas",

swarm_size=5,

coordination_pattern="collaborative"

)

# Create a competitive swarm for diverse solution generation

result = agent.tool.swarm(

task="Design an innovative product for smart home automation",

swarm_size=3,

coordination_pattern="competitive"

)

# Hybrid approach combining collaboration and competition

result = agent.tool.swarm(

task="Develop marketing strategies for a new sustainable fashion brand",

swarm_size=4,

coordination_pattern="hybrid"

)

from strands import Agent

from strands_tools import use_aws

agent = Agent(tools=[use_aws])

# List S3 buckets

result = agent.tool.use_aws(

service_name="s3",

operation_name="list_buckets",

parameters={},

region="us-east-1",

label="List all S3 buckets"

)

# Get the contents of a specific S3 bucket

result = agent.tool.use_aws(

service_name="s3",

operation_name="list_objects_v2",

parameters={"Bucket": "example-bucket"}, # Replace with your actual bucket name

region="us-east-1",

label="List objects in a specific S3 bucket"

)

# Get the list of EC2 subnets

result = agent.tool.use_aws(

service_name="ec2",

operation_name="describe_subnets",

parameters={},

region="us-east-1",

label="List all subnets"

)

from strands import Agent

from strands_tools import retrieve

agent = Agent(tools=[retrieve])

# Basic retrieval without metadata

result = agent.tool.retrieve(

text="What is artificial intelligence?"

)

# Retrieval with metadata enabled

result = agent.tool.retrieve(

text="What are the latest developments in machine learning?",

enableMetadata=True

)

# Using environment variable to set default metadata behavior

# Set RETRIEVE_ENABLE_METADATA_DEFAULT=true in your environment

result = agent.tool.retrieve(

text="Tell me about cloud computing"

# enableMetadata will default to the environment variable value

)

import os

import sys

from strands import Agent

from strands_tools import batch, http_request, use_aws

# Example usage of the batch with http_request and use_aws tools

agent = Agent(tools=[batch, http_request, use_aws])

result = agent.tool.batch(

invocations=[

{"name": "http_request", "arguments": {"method": "GET", "url": "https://api.ipify.org?format=json"}},

{

"name": "use_aws",

"arguments": {

"service_name": "s3",

"operation_name": "list_buckets",

"parameters": {},

"region": "us-east-1",

"label": "List S3 Buckets"

}

},

]

)

from strands import Agent

from strands_tools import search_video, chat_video

agent = Agent(tools=[search_video, chat_video])

# Search for video content using natural language

result = agent.tool.search_video(

query="people discussing AI technology",

threshold="high",

group_by="video",

page_limit=5

)

# Chat with existing video (no index_id needed)

result = agent.tool.chat_video(

prompt="What are the main topics discussed in this video?",

video_id="existing-video-id"

)

# Chat with new video file (index_id required for upload)

result = agent.tool.chat_video(

prompt="Describe what happens in this video",

video_path="/path/to/video.mp4",

index_id="your-index-id" # or set TWELVELABS_PEGASUS_INDEX_ID env var

)

from strands import Agent

from strands_tools.agent_core_memory import AgentCoreMemoryToolProvider

provider = AgentCoreMemoryToolProvider(

memory_id="memory-123abc", # Required

actor_id="user-456", # Required

session_id="session-789", # Required

namespace="default", # Required

region="us-west-2" # Optional, defaults to us-west-2

)

agent = Agent(tools=provider.tools)

# Create a new memory

result = agent.tool.agent_core_memory(

action="record",

content="I am allergic to shellfish"

)

# Search for relevant memories

result = agent.tool.agent_core_memory(

action="retrieve",

query="user preferences"

)

# List all memories

result = agent.tool.agent_core_memory(

action="list"

)

# Get a specific memory by ID

result = agent.tool.agent_core_memory(

action="get",

memory_record_id="mr-12345"

)

from strands import Agent

from strands_tools.browser import LocalChromiumBrowser

# Create browser tool

browser = LocalChromiumBrowser()

agent = Agent(tools=[browser.browser])

# Simple navigation

result = agent.tool.browser({

"action": {

"type": "navigate",

"url": "https://example.com"

}

})

# Initialize a session first

result = agent.tool.browser({

"action": {

"type": "initSession",

"session_name": "main-session",

"description": "Web automation session"

}

})

from strands import Agent

from strands_tools import handoff_to_user

agent = Agent(tools=[handoff_to_user])

# Request user confirmation and continue

response = agent.tool.handoff_to_user(

message="I need your approval to proceed with deleting these files. Type 'yes' to confirm.",

breakout_of_loop=False

)

# Complete handoff to user (stops agent execution)

agent.tool.handoff_to_user(

message="Task completed. Please review the results and take any necessary follow-up actions.",

breakout_of_loop=True

)

from strands import Agent

from strands_tools.a2a_client import A2AClientToolProvider

# Initialize the A2A client provider with known agent URLs

provider = A2AClientToolProvider(known_agent_urls=["http://localhost:9000"])

agent = Agent(tools=provider.tools)

# Use natural language to interact with A2A agents

response = agent("discover available agents and send a greeting message")

# The agent will automatically use the available tools:

# - discover_agent(url) to find agents

# - list_discovered_agents() to see all discovered agents

# - send_message(message_text, target_agent_url) to communicate

from strands import Agent

from strands_tools import diagram

agent = Agent(tools=[diagram])

# Create an AWS cloud architecture diagram

result = agent.tool.diagram(

diagram_type="cloud",

nodes=[

{"id": "users", "type": "Users", "label": "End Users"},

{"id": "cloudfront", "type": "CloudFront", "label": "CDN"},

{"id": "s3", "type": "S3", "label": "Static Assets"},

{"id": "api", "type": "APIGateway", "label": "API Gateway"},

{"id": "lambda", "type": "Lambda", "label": "Backend Service"}

],

edges=[

{"from": "users", "to": "cloudfront"},

{"from": "cloudfront", "to": "s3"},

{"from": "users", "to": "api"},

{"from": "api", "to": "lambda"}

],

title="Web Application Architecture"

)

# Create a UML class diagram

result = agent.tool.diagram(

diagram_type="class",

elements=[

{

"name": "User",

"attributes": ["+id: int", "-name: string", "#email: string"],

"methods": ["+login(): bool", "+logout(): void"]

},

{

"name": "Order",

"attributes": ["+id: int", "-items: List", "-total: float"],

"methods": ["+addItem(item): void", "+calculateTotal(): float"]

}

],

relationships=[

{"from": "User", "to": "Order", "type": "association", "multiplicity": "1..*"}

],

title="E-commerce Domain Model"

)

from strands import Agent

from strands_tools import rss

agent = Agent(tools=[rss])

# Subscribe to a feed

result = agent.tool.rss(

action="subscribe",

url="https://news.example.com/rss/technology"

)

# List all subscribed feeds

feeds = agent.tool.rss(action="list")

# Read entries from a specific feed

entries = agent.tool.rss(

action="read",

feed_id="news_example_com_technology",

max_entries=5,

include_content=True

)

# Search across all feeds

search_results = agent.tool.rss(

action="search",

query="machine learning",

max_entries=10

)

# Fetch feed content without subscribing

latest_news = agent.tool.rss(

action="fetch",

url="https://blog.example.org/feed",

max_entries=3

)

from strands import Agent

from strands_tools import use_computer

agent = Agent(tools=[use_computer])

# Find mouse position

result = agent.tool.use_computer(action="mouse_position")

# Automate adding text

result = agent.tool.use_computer(action="type", text="Hello, world!", app_name="Notepad")

# Analyze current computer screen

result = agent.tool.use_computer(action="analyze_screen")

result = agent.tool.use_computer(action="open_app", app_name="Calculator")

result = agent.tool.use_computer(action="close_app", app_name="Calendar")

result = agent.tool.use_computer(

action="hotkey",

hotkey_str="command+ctrl+f", # For macOS

app_name="Chrome"

)

Note: This tool requires AWS account credentials to generate embeddings using Amazon Bedrock Titan models.

from strands import Agent

from strands_tools.elasticsearch_memory import elasticsearch_memory

# Create agent with direct tool usage

agent = Agent(tools=[elasticsearch_memory])

# Store a memory with semantic embeddings

result = agent.tool.elasticsearch_memory(

action="record",

content="User prefers vegetarian pizza with extra cheese",

metadata={"category": "food_preferences", "type": "dietary"},

cloud_id="your-elasticsearch-cloud-id",

api_key="your-api-key",

index_name="memories",

namespace="user_123"

)

# Search memories using semantic similarity (vector search)

result = agent.tool.elasticsearch_memory(

action="retrieve",

query="food preferences and dietary restrictions",

max_results=5,

cloud_id="your-elasticsearch-cloud-id",

api_key="your-api-key",

index_name="memories",

namespace="user_123"

)

# Use configuration dictionary for cleaner code

config = {

"cloud_id": "your-elasticsearch-cloud-id",

"api_key": "your-api-key",

"index_name": "memories",

"namespace": "user_123"

}

# List all memories with pagination

result = agent.tool.elasticsearch_memory(

action="list",

max_results=10,

**config

)

# Get specific memory by ID

result = agent.tool.elasticsearch_memory(

action="get",

memory_id="mem_1234567890_abcd1234",

**config

)

# Delete a memory

result = agent.tool.elasticsearch_memory(

action="delete",

memory_id="mem_1234567890_abcd1234",

**config

)

# Use Elasticsearch Serverless (URL-based connection)

result = agent.tool.elasticsearch_memory(

action="record",

content="User prefers vegetarian pizza",

es_url="https://your-serverless-cluster.es.region.aws.elastic.cloud:443",

api_key="your-api-key",

index_name="memories",

namespace="user_123"

)

Note: This tool requires AWS account credentials to generate embeddings using Amazon Bedrock Titan models.

from strands import Agent

from strands_tools.mongodb_memory import mongodb_memory

# Create agent with direct tool usage

agent = Agent(tools=[mongodb_memory])

# Store a memory with semantic embeddings

result = agent.tool.mongodb_memory(

action="record",

content="User prefers vegetarian pizza with extra cheese",

metadata={"category": "food_preferences", "type": "dietary"},

connection_string="mongodb+srv://username:password@<your-cluster-host>/?retryWrites=true&w=majority",

database_name="memories",

collection_name="user_memories",

namespace="user_123"

)

# Search memories using semantic similarity (vector search)

result = agent.tool.mongodb_memory(

action="retrieve",

query="food preferences and dietary restrictions",

max_results=5,

connection_string="mongodb+srv://username:password@<your-cluster-host>/?retryWrites=true&w=majority",

database_name="memories",

collection_name="user_memories",

namespace="user_123"

)

# Use configuration dictionary for cleaner code

config = {

"connection_string": "mongodb+srv://username:password@<your-cluster-host>/?retryWrites=true&w=majority",

"database_name": "memories",

"collection_name": "user_memories",

"namespace": "user_123"

}

# List all memories with pagination

result = agent.tool.mongodb_memory(

action="list",

max_results=10,

**config

)

# Get specific memory by ID

result = agent.tool.mongodb_memory(

action="get",

memory_id="mem_1234567890_abcd1234",

**config

)

# Delete a memory

result = agent.tool.mongodb_memory(

action="delete",

memory_id="mem_1234567890_abcd1234",

**config

)

# Use environment variables for connection

# Set MONGODB_ATLAS_CLUSTER_URI in your environment

result = agent.tool.mongodb_memory(

action="record",

content="User prefers vegetarian pizza",

database_name="memories",

collection_name="user_memories",

namespace="user_123"

)

Strands Agents Tools provides extensive customization through environment variables. This lets you configure tool behavior without modifying code, which makes it well suited to running the same agent in different environments (development, testing, production).

These variables affect multiple tools:

| Environment Variable | Description | Default | Affected Tools |

|---|---|---|---|

| BYPASS_TOOL_CONSENT | Bypass consent for tool invocation, set to "true" to enable | false | All tools that require consent (e.g. shell, file_write, python_repl) |

| STRANDS_TOOL_CONSOLE_MODE | Enable rich UI for tools, set to "enabled" to enable | disabled | All tools that have optional rich UI |

| AWS_REGION | Default AWS region for AWS operations | us-west-2 | use_aws, retrieve, generate_image, memory, nova_reels |

| AWS_PROFILE | AWS profile name to use from ~/.aws/credentials | default | use_aws, retrieve |

| LOG_LEVEL | Logging level (DEBUG, INFO, WARNING, ERROR) | INFO | All tools |
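As a minimal sketch of how the shared variables above might be set from Python before tools are used: the helper name `apply_tool_env` and the `DEV_SETTINGS` profile are hypothetical, and the sketch assumes the tools read these variables from the process environment when they run, which this README does not spell out.

```python
import os

# Variable names and values come from the table above; this profile is illustrative.
DEV_SETTINGS = {
    "BYPASS_TOOL_CONSENT": "true",
    "STRANDS_TOOL_CONSOLE_MODE": "enabled",
    "AWS_REGION": "us-west-2",
    "LOG_LEVEL": "DEBUG",
}


def apply_tool_env(settings, environ=os.environ, override=False):
    """Copy settings into environ and return the entries that were written.

    Values already present in environ are kept unless override is True,
    so anything exported in the shell takes precedence by default.
    """
    applied = {}
    for name, value in settings.items():
        if override or name not in environ:
            environ[name] = value
            applied[name] = value
    return applied
```

Calling `apply_tool_env(DEV_SETTINGS)` early in a script leaves any value you exported in the shell untouched, which keeps per-environment overrides outside the code.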

| Environment Variable | Description | Default |

|---|---|---|

| CALCULATOR_MODE | Default calculation mode | evaluate |

| CALCULATOR_PRECISION | Number of decimal places for results | 10 |

| CALCULATOR_SCIENTIFIC | Whether to use scientific notation for numbers | False |

| CALCULATOR_FORCE_NUMERIC | Force numeric evaluation of symbolic expressions | False |

| CALCULATOR_FORCE_SCIENTIFIC_THRESHOLD | Threshold for automatic scientific notation | 1e21 |

| CALCULATOR_DERIVE_ORDER | Default order for derivatives | 1 |

| CALCULATOR_SERIES_POINT | Default point for series expansion | 0 |

| CALCULATOR_SERIES_ORDER | Default order for series expansion | 5 |

| Environment Variable | Description | Default |

|---|---|---|

| DEFAULT_TIMEZONE | Default timezone for current_time tool | UTC |

| Environment Variable | Description | Default |

|---|---|---|

| MAX_SLEEP_SECONDS | Maximum allowed sleep duration in seconds | 300 |

| Environment Variable | Description | Default |

|---|---|---|

| TAVILY_API_KEY | Tavily API key (required for all Tavily functionality) | None |

- Visit https://www.tavily.com/ to create a free account and API key.

| Environment Variable | Description | Default |

|---|---|---|

| EXA_API_KEY | Exa API key (required for all Exa functionality) | None |

- Visit https://dashboard.exa.ai/api-keys to create a free account and API key.

The Mem0 Memory Tool supports three vector store backend configurations, plus an optional graph backend:

- Mem0 Platform:
  - Uses the Mem0 Platform API for memory management
  - Requires a Mem0 API key

- OpenSearch (Recommended for AWS environments):
  - Uses OpenSearch as the vector store backend
  - Requires AWS credentials and OpenSearch configuration

- FAISS (Default for local development):
  - Uses FAISS as the local vector store backend
  - Requires the faiss-cpu package for local vector storage

- Neptune Analytics (Optional graph backend for search enhancement):
  - Uses Neptune Analytics as the graph store backend to enhance memory recall
  - Requires AWS credentials and Neptune Analytics configuration

# Configure your Neptune Analytics graph ID in the .env file:

export NEPTUNE_ANALYTICS_GRAPH_IDENTIFIER=sample-graph-id

# Configure your Neptune Analytics graph ID in Python code:

import os

os.environ['NEPTUNE_ANALYTICS_GRAPH_IDENTIFIER'] = "g-sample-graph-id"

| Environment Variable | Description | Default | Required For |

|---|---|---|---|

| MEM0_API_KEY | Mem0 Platform API key | None | Mem0 Platform |

| OPENSEARCH_HOST | OpenSearch Host URL | None | OpenSearch |

| AWS_REGION | AWS Region for OpenSearch | us-west-2 | OpenSearch |

| NEPTUNE_ANALYTICS_GRAPH_IDENTIFIER | Neptune Analytics Graph Identifier | None | Neptune Analytics |

| DEV | Enable development mode (bypasses confirmations) | false | All modes |

| MEM0_LLM_PROVIDER | LLM provider for memory processing | aws_bedrock | All modes |

| MEM0_LLM_MODEL | LLM model for memory processing | anthropic.claude-3-5-haiku-20241022-v1:0 | All modes |

| MEM0_LLM_TEMPERATURE | LLM temperature (0.0-2.0) | 0.1 | All modes |

| MEM0_LLM_MAX_TOKENS | LLM maximum tokens | 2000 | All modes |

| MEM0_EMBEDDER_PROVIDER | Embedder provider for vector embeddings | aws_bedrock | All modes |

| MEM0_EMBEDDER_MODEL | Embedder model for vector embeddings | amazon.titan-embed-text-v2:0 | All modes |

Note:

- If MEM0_API_KEY is set, the tool will use the Mem0 Platform

- If OPENSEARCH_HOST is set, the tool will use OpenSearch

- If neither is set, the tool will default to FAISS (requires the faiss-cpu package)

- If NEPTUNE_ANALYTICS_GRAPH_IDENTIFIER is set, the tool will configure Neptune Analytics as the graph store to enhance memory search

- LLM configuration applies to all backend modes and allows customization of the language model used for memory processing
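The selection rules in the note can be sketched as a small function. This is an illustration of the documented behavior, not the tool's actual code: `select_mem0_backend` and its return labels are hypothetical, and checking `MEM0_API_KEY` before `OPENSEARCH_HOST` is an assumption, since the note does not say which wins when both are set.

```python
import os


def select_mem0_backend(environ=None):
    """Return (vector_backend, use_graph) following the rules in the note.

    Assumption: MEM0_API_KEY is checked before OPENSEARCH_HOST.
    The backend labels are illustrative, not identifiers used by the tool.
    """
    env = os.environ if environ is None else environ
    if env.get("MEM0_API_KEY"):
        vector_backend = "mem0_platform"
    elif env.get("OPENSEARCH_HOST"):
        vector_backend = "opensearch"
    else:
        # Neither variable is set: fall back to local FAISS (needs faiss-cpu).
        vector_backend = "faiss"
    # The graph store is independent of the vector store choice.
    use_graph = bool(env.get("NEPTUNE_ANALYTICS_GRAPH_IDENTIFIER"))
    return vector_backend, use_graph
```

Running this check at startup can help confirm which backend a given environment will end up with before any memories are written.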

| Environment Variable | Description | Default |

|---|---|---|

| BRIGHTDATA_API_KEY | Bright Data API Key | None |

| BRIGHTDATA_ZONE | Bright Data Web Unlocker Zone | web_unlocker1 |

| Environment Variable | Description | Default |

|---|---|---|

| MEMORY_DEFAULT_MAX_RESULTS | Default maximum results for list operations | 50 |

| MEMORY_DEFAULT_MIN_SCORE | Default minimum relevance score for filtering results | 0.4 |

| Environment Variable | Description | Default |

|---|---|---|

| NOVA_REEL_DEFAULT_SEED | Default seed for video generation | 0 |

| NOVA_REEL_DEFAULT_FPS | Default frames per second for generated videos | 24 |

| NOVA_REEL_DEFAULT_DIMENSION | Default video resolution in WIDTHxHEIGHT format | 1280x720 |

| NOVA_REEL_DEFAULT_MAX_RESULTS | Default maximum number of jobs to return for list action | 10 |
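Because `NOVA_REEL_DEFAULT_DIMENSION` uses a WIDTHxHEIGHT string, a value can be checked before a job is submitted. The sketch below is a hypothetical validation helper (`nova_reel_dimension` is not part of the tool); it only assumes the format and default shown in the table.

```python
import os


def nova_reel_dimension(environ=None):
    """Parse NOVA_REEL_DEFAULT_DIMENSION into a (width, height) tuple of ints."""
    env = os.environ if environ is None else environ
    raw = env.get("NOVA_REEL_DEFAULT_DIMENSION", "1280x720")
    width_text, separator, height_text = raw.lower().partition("x")
    if not separator:
        raise ValueError(f"expected WIDTHxHEIGHT, got {raw!r}")
    # int() raises ValueError on empty or non-numeric parts.
    return int(width_text), int(height_text)
```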

| Environment Variable | Description | Default |

|---|---|---|

| PYTHON_REPL_BINARY_MAX_LEN | Maximum length for binary content before truncation | 100 |

| PYTHON_REPL_INTERACTIVE | Whether to enable interactive PTY mode | None |

| PYTHON_REPL_RESET_STATE | Whether to reset the REPL state before execution | None |

| PYTHON_REPL_PERSISTENCE_DIR | Directory where the python_repl tool writes its state file | None |

| Environment Variable | Description | Default |

|---|---|---|

| SHELL_DEFAULT_TIMEOUT | Default timeout in seconds for shell commands | 900 |

| Environment Variable | Description | Default |

|---|---|---|

| SLACK_DEFAULT_EVENT_COUNT | Default number of events to retrieve | 42 |

| STRANDS_SLACK_AUTO_REPLY | Enable automatic replies to messages | false |

| STRANDS_SLACK_LISTEN_ONLY_TAG | Only process messages containing this tag | None |

| Environment Variable | Description | Default |

|---|---|---|

| SPEAK_DEFAULT_STYLE | Default style for status messages | green |

| SPEAK_DEFAULT_MODE | Default speech mode (fast/polly) | fast |

| SPEAK_DEFAULT_VOICE_ID | Default Polly voice ID | Joanna |

| SPEAK_DEFAULT_OUTPUT_PATH | Default audio output path | speech_output.mp3 |

| SPEAK_DEFAULT_PLAY_AUDIO | Whether to play audio by default | True |

| Environment Variable | Description | Default |

|---|---|---|

| EDITOR_DIR_TREE_MAX_DEPTH | Maximum depth for directory tree visualization | 2 |

| EDITOR_DEFAULT_STYLE | Default style for output panels | default |

| EDITOR_DEFAULT_LANGUAGE | Default language for syntax highlighting | python |

| EDITOR_DISABLE_BACKUP | Skip creating .bak backup files during edit operations | false |

| Environment Variable | Description | Default |

|---|---|---|

| ENV_VARS_MASKED_DEFAULT | Default setting for masking sensitive values | true |

| Environment Variable | Description | Default |

|---|---|---|

| STRANDS_MCP_TIMEOUT | Default timeout in seconds for MCP operations | 30.0 |

| Environment Variable | Description | Default |

|---|---|---|

| FILE_READ_RECURSIVE_DEFAULT | Default setting for recursive file searching | true |

| FILE_READ_CONTEXT_LINES_DEFAULT | Default number of context lines around search matches | 2 |

| FILE_READ_START_LINE_DEFAULT | Default starting line number for lines mode | 0 |

| FILE_READ_CHUNK_OFFSET_DEFAULT | Default byte offset for chunk mode | 0 |

| FILE_READ_DIFF_TYPE_DEFAULT | Default diff type for file comparisons | unified |

| FILE_READ_USE_GIT_DEFAULT | Default setting for using git in time machine mode | true |

| FILE_READ_NUM_REVISIONS_DEFAULT | Default number of revisions to show in time machine mode | 5 |

| Environment Variable | Description | Default |

|---|---|---|

| STRANDS_DEFAULT_WAIT_TIME | Default setting for wait time with actions | 1 |

| STRANDS_BROWSER_MAX_RETRIES | Default number of retries to perform when an action fails | 3 |

| STRANDS_BROWSER_RETRY_DELAY | Default retry delay time for retry mechanisms | 1 |

| STRANDS_BROWSER_SCREENSHOTS_DIR | Default directory where screenshots will be saved | screenshots |

| STRANDS_BROWSER_USER_DATA_DIR | Default directory where data for reloading a browser instance is stored | ~/.browser_automation |

| STRANDS_BROWSER_HEADLESS | Default headless setting for launching browsers | false |

| STRANDS_BROWSER_WIDTH | Default width of the browser | 1280 |

| STRANDS_BROWSER_HEIGHT | Default height of the browser | 800 |

| Environment Variable | Description | Default |

|---|---|---|

| STRANDS_RSS_MAX_ENTRIES | Default setting for maximum number of entries per feed | 100 |

| STRANDS_RSS_UPDATE_INTERVAL | Default interval between RSS feed updates, in minutes | 60 |

| STRANDS_RSS_STORAGE_PATH | Default local storage path for RSS feeds | strands_rss_feeds (this may vary based on your system) |

| Environment Variable | Description | Default |

|---|---|---|

| RETRIEVE_ENABLE_METADATA_DEFAULT | Default setting for enabling metadata in retrieve tool responses | false |

| Environment Variable | Description | Default |

|---|---|---|

| TWELVELABS_API_KEY | TwelveLabs API key for video analysis | None |

| TWELVELABS_MARENGO_INDEX_ID | Default index ID for search_video tool | None |

| TWELVELABS_PEGASUS_INDEX_ID | Default index ID for chat_video tool | None |

| Environment Variable | Description | Default |

|---|---|---|

| MONGODB_ATLAS_CLUSTER_URI | MongoDB Atlas connection string | None |

| MONGODB_DEFAULT_DATABASE | Default database name for MongoDB operations | memories |

| MONGODB_DEFAULT_COLLECTION | Default collection name for MongoDB operations | user_memories |

| MONGODB_DEFAULT_NAMESPACE | Default namespace for memory isolation | default |

| MONGODB_DEFAULT_MAX_RESULTS | Default maximum results for list operations | 50 |

| MONGODB_DEFAULT_MIN_SCORE | Default minimum relevance score for filtering results | 0.4 |

Note: This tool requires AWS account credentials to generate embeddings using Amazon Bedrock Titan models.
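The MongoDB defaults above can be gathered into the same kind of configuration dictionary used in the earlier `mongodb_memory` examples. This is a sketch under assumptions: `mongodb_memory_config` is a hypothetical helper, and it leaves out `connection_string` when `MONGODB_ATLAS_CLUSTER_URI` is unset rather than passing an empty value.

```python
import os


def mongodb_memory_config(environ=None):
    """Build keyword arguments for mongodb_memory from the documented variables."""
    env = os.environ if environ is None else environ
    config = {
        "database_name": env.get("MONGODB_DEFAULT_DATABASE", "memories"),
        "collection_name": env.get("MONGODB_DEFAULT_COLLECTION", "user_memories"),
        "namespace": env.get("MONGODB_DEFAULT_NAMESPACE", "default"),
    }
    uri = env.get("MONGODB_ATLAS_CLUSTER_URI")
    if uri:
        # Only pass the connection string explicitly when it is configured.
        config["connection_string"] = uri
    return config
```

The result can be unpacked with `**config` in the same way as the configuration dictionary shown in the usage examples.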

This is a community-driven project, powered by passionate developers like you. We enthusiastically welcome contributions from everyone, regardless of experience level—your unique perspective is valuable to us!

- Find your first opportunity: If you're new to the project, explore our labeled "good first issues" for beginner-friendly tasks.

- Understand our workflow: Review our Contributing Guide to learn about our development setup, coding standards, and pull request process.

- Make your impact: Contributions come in many forms—fixing bugs, enhancing documentation, improving performance, adding features, writing tests, or refining the user experience.

- Submit your work: When you're ready, submit a well-documented pull request, and our maintainers will provide feedback to help get your changes merged.

Your questions, insights, and ideas are always welcome!

Together, we're building something meaningful that impacts real users. We look forward to collaborating with you!

This project is licensed under the Apache License 2.0 - see the LICENSE file for details.

See CONTRIBUTING for more information.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for tools

Similar Open Source Tools

tools

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

ai-manus

AI Manus is a general-purpose AI Agent system that supports running various tools and operations in a sandbox environment. It offers deployment with minimal dependencies, supports multiple tools like Terminal, Browser, File, Web Search, and messaging tools, allocates separate sandboxes for tasks, manages session history, supports stopping and interrupting conversations, file upload and download, and is multilingual. The system also provides user login and authentication. The project primarily relies on Docker for development and deployment, with model capability requirements and recommended Deepseek and GPT models.

huf

HUF is an AI-native engine designed to centralize intelligence and execution into a single engine, enabling AI to operate inside real business systems. It offers multi-provider AI connectivity, intelligent tools, knowledge grounding, event-driven execution, visual workflow builder, full auditability, and cost control. HUF can be used as AI infrastructure for products, internal intelligence platform, automation & orchestration engine, embedded AI layer for SaaS, and enterprise AI control plane. Core capabilities include agent system, knowledge management, trigger system, visual flow builder, and observability. The tech stack includes Frappe Framework, Python 3.10+, LiteLLM, SQLite FTS5, React 18, TypeScript, Tailwind CSS, and MariaDB.

Sage

Sage is a production-ready, modular, and intelligent multi-agent orchestration framework for complex problem solving. It intelligently breaks down complex tasks into manageable subtasks through seamless agent collaboration. Sage provides Deep Research Mode for comprehensive analysis and Rapid Execution Mode for quick task completion. It offers features like intelligent task decomposition, agent orchestration, extensible tool system, dual execution modes, interactive web interface, advanced token tracking, rich configuration, developer-friendly APIs, and robust error recovery mechanisms. Sage supports custom workflows, multi-agent collaboration, custom agent development, agent flow orchestration, rule preferences system, message manager for smart token optimization, task manager for comprehensive state management, advanced file system operations, advanced tool system with plugin architecture, token usage & cost monitoring, and rich configuration system. It also includes real-time streaming & monitoring, advanced tool development, error handling & reliability, performance monitoring, MCP server integration, and security features.

holmesgpt

HolmesGPT is an open-source DevOps assistant powered by OpenAI or any tool-calling LLM of your choice. It helps in troubleshooting Kubernetes, incident response, ticket management, automated investigation, and runbook automation in plain English. The tool connects to existing observability data, is compliance-friendly, provides transparent results, supports extensible data sources, runbook automation, and integrates with existing workflows. Users can install HolmesGPT using Brew, prebuilt Docker container, Python Poetry, or Docker. The tool requires an API key for functioning and supports OpenAI, Azure AI, and self-hosted LLMs.

open-webui-tools

Open WebUI Tools Collection is a set of tools for structured planning, arXiv paper search, Hugging Face text-to-image generation, prompt enhancement, and multi-model conversations. It enhances LLM interactions with academic research, image generation, and conversation management. Tools include arXiv Search Tool and Hugging Face Image Generator. Function Pipes like Planner Agent offer autonomous plan generation and execution. Filters like Prompt Enhancer improve prompt quality. Installation and configuration instructions are provided for each tool and pipe.

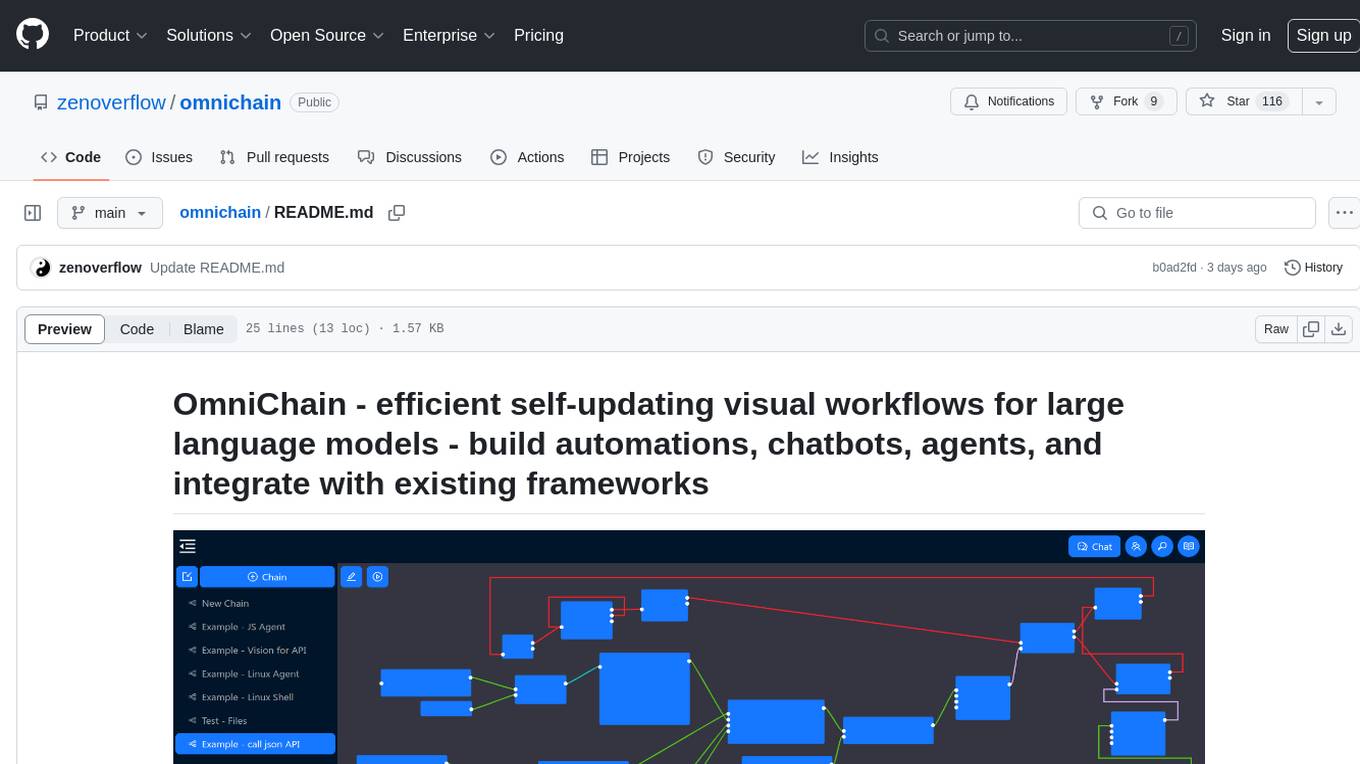

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

atomic-agents

The Atomic Agents framework is a modular and extensible tool designed for creating powerful applications. It leverages Pydantic for data validation and serialization. The framework follows the principles of Atomic Design, providing small and single-purpose components that can be combined. It integrates with Instructor for AI agent architecture and supports various APIs like Cohere, Anthropic, and Gemini. The tool includes documentation, examples, and testing features to ensure smooth development and usage.

orchestkit

OrchestKit is a powerful and flexible orchestration tool designed to streamline and automate complex workflows. It provides a user-friendly interface for defining and managing orchestration tasks, allowing users to easily create, schedule, and monitor workflows. With support for various integrations and plugins, OrchestKit enables seamless automation of tasks across different systems and applications. Whether you are a developer looking to automate deployment processes or a system administrator managing complex IT operations, OrchestKit offers a comprehensive solution to simplify and optimize your workflow management.

arcade-ai

Arcade AI is a developer-focused tooling and API platform designed to enhance the capabilities of LLM applications and agents. It simplifies the process of connecting agentic applications with user data and services, allowing developers to concentrate on building their applications. The platform offers prebuilt toolkits for interacting with various services, supports multiple authentication providers, and provides access to different language models. Users can also create custom toolkits and evaluate their tools using Arcade AI. Contributions are welcome, and self-hosting is possible with the provided documentation.

DB-GPT

DB-GPT is an open source AI native data app development framework with AWEL(Agentic Workflow Expression Language) and agents. It aims to build infrastructure in the field of large models, through the development of multiple technical capabilities such as multi-model management (SMMF), Text2SQL effect optimization, RAG framework and optimization, Multi-Agents framework collaboration, AWEL (agent workflow orchestration), etc. Which makes large model applications with data simpler and more convenient.

LocalLLMClient

LocalLLMClient is a Swift package designed to interact with local Large Language Models (LLMs) on Apple platforms. It supports GGUF, MLX models, and the FoundationModels framework, providing streaming API, multimodal capabilities, and tool calling functionalities. Users can easily integrate this tool to work with various models for text generation and processing. The package also includes advanced features for low-level API control and multimodal image processing. LocalLLMClient is experimental and subject to API changes, offering support for iOS, macOS, and Linux platforms.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

For similar tasks

tools

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.

goclaw

goclaw is a powerful AI Agent framework written in Go language. It provides a complete tool system for FileSystem, Shell, Web, and Browser with Docker sandbox support and permission control. The framework includes a skill system compatible with OpenClaw and AgentSkills specifications, supporting automatic discovery and environment gating. It also offers persistent session storage, multi-channel support for Telegram, WhatsApp, Feishu, QQ, and WeWork, flexible configuration with YAML/JSON support, multiple LLM providers like OpenAI, Anthropic, and OpenRouter, WebSocket Gateway, Cron scheduling, and Browser automation based on Chrome DevTools Protocol.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.