ai-manus

AI Manus is a general-purpose AI Agent system that supports running various tools and operations in a sandbox environment.

Stars: 976

AI Manus deploys with minimal dependencies, supports tools such as Terminal, Browser, File, Web Search, and messaging, allocates a separate sandbox for each task, manages session history, supports stopping and interrupting conversations as well as file upload and download, and is multilingual. It also provides user login and authentication. The project relies primarily on Docker for development and deployment. It needs a model that exposes an OpenAI-compatible interface; Deepseek and GPT models are recommended.

README:


Enjoy your own agent with AI Manus!

👏 Join QQ Group(1005477581)

❤️ Like AI Manus? Give it a star 🌟 or Sponsor to support the development!

https://github.com/user-attachments/assets/37060a09-c647-4bcb-920c-959f7fa73ebe

- Task: Latest LLM papers

https://github.com/user-attachments/assets/4e35bc4d-024a-4617-8def-a537a94bd285

- Task: Write a complex Python example

https://github.com/user-attachments/assets/765ea387-bb1c-4dc2-b03e-716698feef77

- Deployment: Minimal deployment requires only an LLM service, with no dependency on other external services.

- Tools: Supports Terminal, Browser, File, Web Search, and messaging tools with real-time viewing and takeover capabilities, and supports external MCP tool integration.

- Sandbox: Each task is allocated a separate sandbox that runs in a local Docker environment.

- Task Sessions: Session history is managed through MongoDB/Redis, supporting background tasks.

- Conversations: Supports stopping and interrupting, file upload and download.

- Multilingual: Supports both Chinese and English.

- Authentication: User login and authentication.

- Tools: Support for Deploy & Expose.

- Sandbox: Support for mobile and Windows computer access.

- Deployment: Support for K8s and Docker Swarm multi-cluster deployment.

When a user initiates a conversation:

- Web sends a request to create an Agent to the Server, which creates a Sandbox through `/var/run/docker.sock` and returns a session ID.

- The Sandbox is an Ubuntu Docker environment that starts Chrome browser and API services for tools like File/Shell.

- Web sends user messages to the session ID, and when the Server receives user messages, it forwards them to the PlanAct Agent for processing.

- During processing, the PlanAct Agent calls relevant tools to complete tasks.

- All events generated during Agent processing are sent back to Web via SSE.
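The last step above, streaming Agent events back over SSE, can be illustrated with a small parser for the SSE wire format. This is a minimal sketch, not the project's client code: the event names (`tool`, `done`) and the payload fields are hypothetical, chosen only to show how `event:` and `data:` lines are grouped into events.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per SSE event from an iterable of text lines."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if data:
                yield {"event": event, "data": "\n".join(data)}
            event, data = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the value
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)


# Hypothetical stream: event names and payload fields are illustrative only.
stream = [
    "event: tool\n",
    'data: {"name": "shell", "status": "calling"}\n',
    "\n",
    ": keep-alive\n",
    "event: done\n",
    "data: {}\n",
    "\n",
]
events = [(e["event"], json.loads(e["data"])) for e in parse_sse(stream)]
print(events)
```

In a real client the `lines` argument would come from a streaming HTTP response rather than a list.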

When users browse tools:

- Browser:

  - The Sandbox's headless browser starts a VNC service through xvfb and x11vnc, and converts VNC to websocket through websockify.

  - Web's NoVNC component connects to the Sandbox through the Server's Websocket Forward, enabling browser viewing.

- Other tools: Other tools work on similar principles.
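The browser-viewing chain described above (xvfb, then x11vnc, then websockify) can be sketched as three commands started in order. This only builds the argument lists; it does not claim to match the sandbox image's actual startup script. The display number `:99`, the screen geometry, and the websocket port `5901` are assumptions, while `5900` is the Sandbox VNC port listed in this document.

```python
import shlex


def vnc_pipeline(display=":99", vnc_port=5900, ws_port=5901, geometry="1280x1024x24"):
    """Return the three commands, in start order, as argv lists."""
    return [
        # Virtual X display that the browser renders into.
        ["Xvfb", display, "-screen", "0", geometry],
        # VNC server that exports that display on vnc_port.
        ["x11vnc", "-display", display, "-rfbport", str(vnc_port),
         "-forever", "-shared", "-nopw"],
        # WebSocket-to-TCP bridge so a browser client such as noVNC can connect.
        ["websockify", str(ws_port), f"localhost:{vnc_port}"],
    ]


for argv in vnc_pipeline():
    print(shlex.join(argv))
```

Each list could be handed to `subprocess.Popen` inside a container that has the three programs installed.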

This project primarily relies on Docker for development and deployment, requiring a relatively new version of Docker:

- Docker 20.10+

- Docker Compose

Model capability requirements:

- Compatible with OpenAI interface

- Support for function calling (FunctionCall)

- Support for JSON format output

Deepseek and GPT models are recommended.
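To make the three requirements concrete, here is a sketch of a request body for an OpenAI-compatible `chat/completions` endpoint that uses both function calling (`tools`) and JSON output (`response_format`). The tool name `shell_exec` and its schema are hypothetical and are not taken from the AI Manus code base; the model name and sampling values mirror the configuration defaults shown in this document.

```python
import json


def build_chat_request(model, user_text, temperature=0.7, max_tokens=2000):
    """Build a chat-completions payload that exercises tools and JSON output."""
    return {
        "model": model,
        "temperature": temperature,
        "max_tokens": max_tokens,
        "messages": [
            # JSON mode expects the word "JSON" to appear somewhere in the messages.
            {"role": "system", "content": "Reply with a JSON object."},
            {"role": "user", "content": user_text},
        ],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "shell_exec",  # hypothetical tool name
                    "description": "Run a shell command in the sandbox.",
                    "parameters": {
                        "type": "object",
                        "properties": {"command": {"type": "string"}},
                        "required": ["command"],
                    },
                },
            }
        ],
        "response_format": {"type": "json_object"},
    }


payload = build_chat_request("gpt-4o", "List the files in /tmp")
print(json.dumps(payload, indent=2))
```

A provider that rejects the `tools` or `response_format` fields in a body like this would likely not meet the requirements listed above.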

Docker Compose is recommended for deployment:

    services:
      frontend:
        image: simpleyyt/manus-frontend
        ports:
          - "5173:80"
        depends_on:
          - backend
        restart: unless-stopped
        networks:
          - manus-network
        environment:
          - BACKEND_URL=http://backend:8000

      backend:
        image: simpleyyt/manus-backend
        depends_on:
          - sandbox
        restart: unless-stopped
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock:ro
          #- ./mcp.json:/etc/mcp.json # Mount MCP servers directory
        networks:
          - manus-network
        environment:
          # OpenAI API base URL
          - API_BASE=https://api.openai.com/v1
          # OpenAI API key, replace with your own
          - API_KEY=sk-xxxx
          # LLM model name
          - MODEL_NAME=gpt-4o
          # LLM temperature parameter, controls randomness
          - TEMPERATURE=0.7
          # Maximum tokens for LLM response
          - MAX_TOKENS=2000
          # MongoDB connection URI
          #- MONGODB_URI=mongodb://mongodb:27017
          # MongoDB database name
          #- MONGODB_DATABASE=manus
          # MongoDB username (optional)
          #- MONGODB_USERNAME=
          # MongoDB password (optional)
          #- MONGODB_PASSWORD=
          # Redis server hostname
          #- REDIS_HOST=redis
          # Redis server port
          #- REDIS_PORT=6379
          # Redis database number
          #- REDIS_DB=0
          # Redis password (optional)
          #- REDIS_PASSWORD=
          # Sandbox server address (optional)
          #- SANDBOX_ADDRESS=
          # Docker image used for the sandbox
          - SANDBOX_IMAGE=simpleyyt/manus-sandbox
          # Prefix for sandbox container names
          - SANDBOX_NAME_PREFIX=sandbox
          # Time-to-live for sandbox containers in minutes
          - SANDBOX_TTL_MINUTES=30
          # Docker network for sandbox containers
          - SANDBOX_NETWORK=manus-network
          # Chrome browser arguments for sandbox (optional)
          #- SANDBOX_CHROME_ARGS=
          # HTTPS proxy for sandbox (optional)
          #- SANDBOX_HTTPS_PROXY=
          # HTTP proxy for sandbox (optional)
          #- SANDBOX_HTTP_PROXY=
          # No proxy hosts for sandbox (optional)
          #- SANDBOX_NO_PROXY=
          # Search engine configuration
          # Options: baidu, google, bing
          - SEARCH_PROVIDER=bing
          # Google search configuration, only used when SEARCH_PROVIDER=google
          #- GOOGLE_SEARCH_API_KEY=
          #- GOOGLE_SEARCH_ENGINE_ID=
          # Auth configuration
          # Options: password, none, local
          - AUTH_PROVIDER=password
          # Password auth configuration, only used when AUTH_PROVIDER=password
          - PASSWORD_SALT=
          - PASSWORD_HASH_ROUNDS=10
          # Local auth configuration, only used when AUTH_PROVIDER=local
          #- [email protected]
          #- LOCAL_AUTH_PASSWORD=admin
          # JWT configuration
          - JWT_SECRET_KEY=your-secret-key-here
          - JWT_ALGORITHM=HS256
          - JWT_ACCESS_TOKEN_EXPIRE_MINUTES=30
          - JWT_REFRESH_TOKEN_EXPIRE_DAYS=7
          # Email configuration
          # Only used when AUTH_PROVIDER=password
          #- EMAIL_HOST=smtp.gmail.com
          #- EMAIL_PORT=587
          #- [email protected]
          #- EMAIL_PASSWORD=your-password
          #- [email protected]
          # MCP configuration file path
          #- MCP_CONFIG_PATH=/etc/mcp.json
          # Application log level
          - LOG_LEVEL=INFO

      sandbox:
        image: simpleyyt/manus-sandbox
        command: /bin/sh -c "exit 0" # prevent sandbox from starting, ensure image is pulled
        restart: "no"
        networks:
          - manus-network

      mongodb:
        image: mongo:7.0
        volumes:
          - mongodb_data:/data/db
        restart: unless-stopped
        #ports:
        #  - "27017:27017"
        networks:
          - manus-network

      redis:
        image: redis:7.0
        restart: unless-stopped
        networks:
          - manus-network

    volumes:
      mongodb_data:
        name: manus-mongodb-data

    networks:
      manus-network:
        name: manus-network
        driver: bridge

Save this as a docker-compose.yml file, and run:

    docker compose up -d

Note: If you see `sandbox-1 exited with code 0`, this is normal, as it ensures the sandbox image is successfully pulled locally.

Open your browser and visit http://localhost:5173 to access Manus.
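The Compose file ships with a placeholder `JWT_SECRET_KEY` and an empty `PASSWORD_SALT`, which should be replaced before exposing the service. The following is a minimal sketch for generating random values with Python's standard library. The lengths are arbitrary choices, and whether the backend expects a particular format for either variable is an assumption not confirmed here.

```python
import secrets


def generate_secrets():
    """Return freshly generated random values for the two secret settings."""
    return {
        # 48 random bytes, URL-safe base64 encoded.
        "JWT_SECRET_KEY": secrets.token_urlsafe(48),
        # 16 random bytes, hex encoded.
        "PASSWORD_SALT": secrets.token_hex(16),
    }


for key, value in generate_secrets().items():
    # Paste these lines into the backend environment section.
    print(f"{key}={value}")
```

Changing `JWT_SECRET_KEY` on a running deployment will generally invalidate previously issued tokens, so it is best set once before first use.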

This project consists of three independent sub-projects:

- frontend: Manus frontend

- backend: Manus backend

- sandbox: Manus sandbox

- Download the project:

    git clone https://github.com/simpleyyt/ai-manus.git
    cd ai-manus

- Copy the configuration file:

    cp .env.example .env

- Modify the configuration file:

    # Model provider configuration
    API_KEY=
    API_BASE=http://mockserver:8090/v1

    # Model configuration
    MODEL_NAME=deepseek-chat
    TEMPERATURE=0.7
    MAX_TOKENS=2000

    # MongoDB configuration
    #MONGODB_URI=mongodb://mongodb:27017
    #MONGODB_DATABASE=manus
    #MONGODB_USERNAME=
    #MONGODB_PASSWORD=

    # Redis configuration
    #REDIS_HOST=redis
    #REDIS_PORT=6379
    #REDIS_DB=0
    #REDIS_PASSWORD=

    # Sandbox configuration
    #SANDBOX_ADDRESS=
    SANDBOX_IMAGE=simpleyyt/manus-sandbox
    SANDBOX_NAME_PREFIX=sandbox
    SANDBOX_TTL_MINUTES=30
    SANDBOX_NETWORK=manus-network
    #SANDBOX_CHROME_ARGS=
    #SANDBOX_HTTPS_PROXY=
    #SANDBOX_HTTP_PROXY=
    #SANDBOX_NO_PROXY=

    # Search engine configuration
    # Options: baidu, google, bing
    SEARCH_PROVIDER=bing

    # Google search configuration, only used when SEARCH_PROVIDER=google
    #GOOGLE_SEARCH_API_KEY=
    #GOOGLE_SEARCH_ENGINE_ID=

    # Auth configuration
    # Options: password, none, local
    AUTH_PROVIDER=password

    # Password auth configuration, only used when AUTH_PROVIDER=password
    PASSWORD_SALT=
    PASSWORD_HASH_ROUNDS=10

    # Local auth configuration, only used when AUTH_PROVIDER=local
    #[email protected]
    #LOCAL_AUTH_PASSWORD=admin

    # JWT configuration
    JWT_SECRET_KEY=your-secret-key-here
    JWT_ALGORITHM=HS256
    JWT_ACCESS_TOKEN_EXPIRE_MINUTES=30
    JWT_REFRESH_TOKEN_EXPIRE_DAYS=7

    # Email configuration
    # Only used when AUTH_PROVIDER=password
    #EMAIL_HOST=smtp.gmail.com
    #EMAIL_PORT=587
    #[email protected]
    #EMAIL_PASSWORD=your-password
    #[email protected]

    # MCP configuration
    #MCP_CONFIG_PATH=/etc/mcp.json

    # Log configuration
    LOG_LEVEL=INFO

- Run in debug mode:

    # Equivalent to docker compose -f docker-compose-development.yaml up
    ./dev.sh up

All services will run in reload mode, and code changes will be automatically reloaded. The exposed ports are as follows:

- 5173: Web frontend port

- 8000: Server API service port

- 8080: Sandbox API service port

- 5900: Sandbox VNC port

- 9222: Sandbox Chrome browser CDP port

Note: In Debug mode, only one sandbox will be started globally
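After starting debug mode, a quick way to see which of the ports listed above are reachable is a plain TCP connect probe. This is a generic sketch, not part of the project's tooling; the host `localhost` and the half-second timeout are assumptions.

```python
import socket

# Port numbers and roles as listed in this document.
PORTS = {
    5173: "Web frontend",
    8000: "Server API",
    8080: "Sandbox API",
    5900: "Sandbox VNC",
    9222: "Sandbox Chrome CDP",
}


def is_open(host, port, timeout=0.5):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def report(host="localhost"):
    """Map each known port to whether it currently accepts connections."""
    return {port: is_open(host, port) for port in PORTS}


if __name__ == "__main__":
    for port, up in report().items():
        print(f"{port:>5}  {PORTS[port]:<20} {'open' if up else 'closed'}")
```

A closed port only shows that nothing accepted the connection; it does not say why, so the container logs remain the place to look next.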

- When dependencies change (requirements.txt or package.json), clean up and rebuild:

    # Clean up all related resources
    ./dev.sh down -v
    # Rebuild images
    ./dev.sh build
    # Run in debug mode
    ./dev.sh up

To build images and push them to your own registry:

    export IMAGE_REGISTRY=your-registry-url
    export IMAGE_TAG=latest
    # Build images
    ./run build
    # Push to the corresponding image repository
    ./run push

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-manus

Similar Open Source Tools

ai-manus

AI Manus is a general-purpose AI Agent system that supports running various tools and operations in a sandbox environment. It offers deployment with minimal dependencies, supports multiple tools like Terminal, Browser, File, Web Search, and messaging tools, allocates separate sandboxes for tasks, manages session history, supports stopping and interrupting conversations, file upload and download, and is multilingual. The system also provides user login and authentication. The project primarily relies on Docker for development and deployment, with model capability requirements and recommended Deepseek and GPT models.

nekro-agent

Nekro Agent is an AI chat plugin and proxy execution bot that is highly scalable, offers high freedom, and has minimal deployment requirements. It features context-aware chat for group/private chats, custom character settings, sandboxed execution environment, interactive image resource handling, customizable extension development interface, easy deployment with docker-compose, integration with Stable Diffusion for AI drawing capabilities, support for various file types interaction, hot configuration updates and command control, native multimodal understanding, visual application management control panel, CoT (Chain of Thought) support, self-triggered timers and holiday greetings, event notification understanding, and more. It allows for third-party extensions and AI-generated extensions, and includes features like automatic context trigger based on LLM, and a variety of basic commands for bot administrators.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

tools

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.

odoo-llm

This repository provides a comprehensive framework for integrating Large Language Models (LLMs) into Odoo. It enables seamless interaction with AI providers like OpenAI, Anthropic, Ollama, and Replicate for chat completions, text embeddings, and more within the Odoo environment. The architecture includes external AI clients connecting via `llm_mcp_server` and Odoo AI Chat with built-in chat interface. The core module `llm` offers provider abstraction, model management, and security, along with tools for CRUD operations and domain-specific tool packs. Various AI providers, infrastructure components, and domain-specific tools are available for different tasks such as content generation, knowledge base management, and AI assistants creation.

mastra

Mastra is an opinionated Typescript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers, offers tools for building language models, workflows, and accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

BrowserGym

BrowserGym is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides benchmarks like MiniWoB, WebArena, VisualWebArena, WorkArena, AssistantBench, and WebLINX. Users can design new web benchmarks by inheriting the AbstractBrowserTask class. The tool allows users to install different packages for core functionalities, experiments, and specific benchmarks. It supports the development setup and offers boilerplate code for running agents on various tasks. BrowserGym is not a consumer product and should be used with caution.

chatluna

Chatluna is a machine learning model plugin that provides chat services with large language models. It is highly extensible, supports multiple output formats, and offers features like custom conversation presets, rate limiting, and context awareness. Users can deploy Chatluna under Koishi without additional configuration. The plugin supports various models/platforms like OpenAI, Azure OpenAI, Google Gemini, and more. It also provides preset customization using YAML files and allows for easy forking and development within Koishi projects. However, the project lacks web UI, HTTP server, and project documentation, inviting contributions from the community.

tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

foundationallm

FoundationaLLM is a platform designed for deploying, scaling, securing, and governing generative AI in enterprises. It allows users to create AI agents grounded in enterprise data, integrate REST APIs, experiment with various large language models, centrally manage AI agents and their assets, deploy scalable vectorization data pipelines, enable non-developer users to create their own AI agents, control access with role-based access controls, and harness capabilities from Azure AI and Azure OpenAI. The platform simplifies integration with enterprise data sources, provides fine-grain security controls, scalability, extensibility, and addresses the challenges of delivering enterprise copilots or AI agents.

llama.ui

llama.ui is an open-source desktop application that provides a beautiful, user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with powerful quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with indexedDB storage, rich UI components including markdown rendering and file attachments, advanced features like PWA support and customizable generation parameters, and is privacy-focused with all data stored locally in the browser.

llm-agents.nix

Nix packages for AI coding agents and development tools. Automatically updated daily. This repository provides a wide range of AI coding agents and tools that can be used in the terminal environment. The tools cover various functionalities such as code assistance, AI-powered development agents, CLI tools for AI coding, workflow and project management, code review, utilities like search tools and browser automation, and usage analytics for AI coding sessions. The repository also includes experimental features like sandboxed execution, provider abstraction, and tool composition to explore how Nix can enhance AI-powered development.

traceroot

TraceRoot is a tool that helps engineers debug production issues 10× faster using AI-powered analysis of traces, logs, and code context. It accelerates the debugging process with AI-powered insights, integrates seamlessly into the development workflow, provides real-time trace and log analysis, code context understanding, and intelligent assistance. Features include ease of use, LLM flexibility, distributed services, AI debugging interface, and integration support. Users can get started with TraceRoot Cloud for a 7-day trial or self-host the tool. SDKs are available for Python and JavaScript/TypeScript.

hyper-mcp

hyper-mcp is a fast and secure MCP server that enables adding AI capabilities to applications through WebAssembly plugins. It supports writing plugins in various languages, distributing them via standard OCI registries, and running them in resource-constrained environments. The tool offers sandboxing with WASM for limiting access, cross-platform compatibility, and deployment flexibility. Security features include sandboxed plugins, memory-safe execution, secure plugin distribution, and fine-grained access control. Users can configure the tool for global or project-specific use, start the server with different transport options, and utilize available plugins for tasks like time calculations, QR code generation, hash generation, IP retrieval, and webpage fetching.

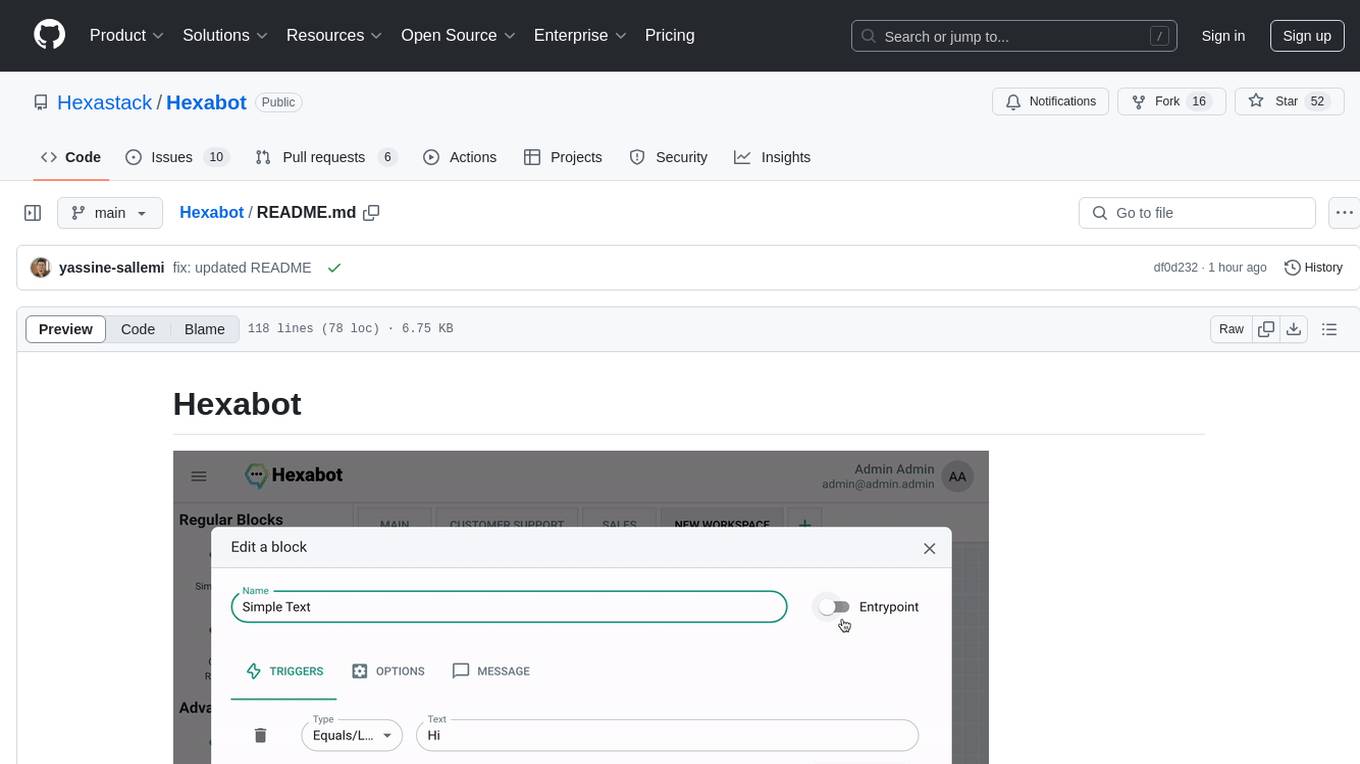

Hexabot

Hexabot Community Edition is an open-source chatbot solution designed for flexibility and customization, offering powerful text-to-action capabilities. It allows users to create and manage AI-powered, multi-channel, and multilingual chatbots with ease. The platform features an analytics dashboard, multi-channel support, visual editor, plugin system, NLP/NLU management, multi-lingual support, CMS integration, user roles & permissions, contextual data, subscribers & labels, and inbox & handover functionalities. The directory structure includes frontend, API, widget, NLU, and docker components. Prerequisites for running Hexabot include Docker and Node.js. The installation process involves cloning the repository, setting up the environment, and running the application. Users can access the UI admin panel and live chat widget for interaction. Various commands are available for managing the Docker services. Detailed documentation and contribution guidelines are provided for users interested in contributing to the project.

arcade-ai

Arcade AI is a developer-focused tooling and API platform designed to enhance the capabilities of LLM applications and agents. It simplifies the process of connecting agentic applications with user data and services, allowing developers to concentrate on building their applications. The platform offers prebuilt toolkits for interacting with various services, supports multiple authentication providers, and provides access to different language models. Users can also create custom toolkits and evaluate their tools using Arcade AI. Contributions are welcome, and self-hosting is possible with the provided documentation.

For similar tasks

LLMs-at-DoD

This repository contains tutorials for using Large Language Models (LLMs) in the U.S. Department of Defense. The tutorials utilize open-source frameworks and LLMs, allowing users to run them in their own cloud environments. The repository is maintained by the Defense Digital Service and welcomes contributions from users.

ai-manus

AI Manus is a general-purpose AI Agent system that supports running various tools and operations in a sandbox environment. It offers deployment with minimal dependencies, supports multiple tools like Terminal, Browser, File, Web Search, and messaging tools, allocates separate sandboxes for tasks, manages session history, supports stopping and interrupting conversations, file upload and download, and is multilingual. The system also provides user login and authentication. The project primarily relies on Docker for development and deployment, with model capability requirements and recommended Deepseek and GPT models.

kilocode

Kilo Code is an open-source VS Code AI agent that allows users to generate code from natural language, check its own work, run terminal commands, automate the browser, and utilize the latest AI models. It offers features like task automation, automated refactoring, and integration with MCP servers. Users can access 400+ AI models and benefit from transparent pricing. Kilo Code is a fork of Roo Code and Cline, with improvements and unique features developed independently.

MiniSearch

MiniSearch is a minimalist search engine with integrated browser-based AI. It is privacy-focused, easy to use, cross-platform, integrated, time-saving, efficient, optimized, and open-source. MiniSearch can be used for a variety of tasks, including searching the web, finding files on your computer, and getting answers to questions. It is a great tool for anyone who wants a fast, private, and easy-to-use search engine.

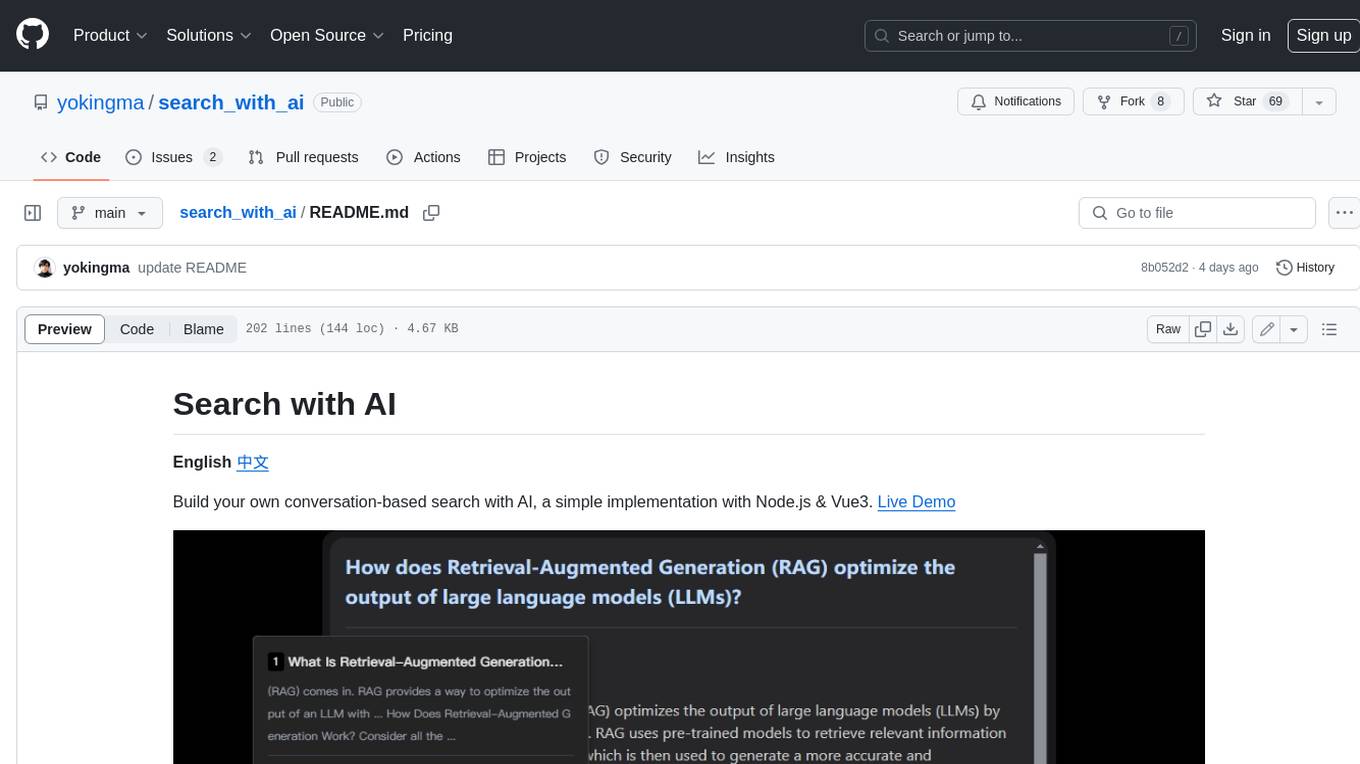

search_with_ai

Build your own conversation-based search with AI, a simple implementation with Node.js & Vue3. Live Demo Features: * Built-in support for LLM: OpenAI, Google, Lepton, Ollama(Free) * Built-in support for search engine: Bing, Sogou, Google, SearXNG(Free) * Customizable pretty UI interface * Support dark mode * Support mobile display * Support local LLM with Ollama * Support i18n * Support Continue Q&A with contexts.

search2ai

S2A allows your large model API to support networking, searching, news, and web page summarization. It currently supports OpenAI, Gemini, and Moonshot (non-streaming). The large model will determine whether to connect to the network based on your input, and it will not connect to the network for searching every time. You don't need to install any plugins or replace keys. You can directly replace the custom address in your commonly used third-party client. You can also deploy it yourself, which will not affect other functions you use, such as drawing and voice.

Tiger

Tiger is a community-driven project developing a reusable and integrated tool ecosystem for LLM Agent Revolution. It utilizes Upsonic for isolated tool storage, profiling, and automatic document generation. With Tiger, you can create a customized environment for your agents or leverage the robust and publicly maintained Tiger curated by the community itself.

chat-xiuliu

Chat-xiuliu is a bidirectional voice assistant powered by ChatGPT, capable of accessing the internet, executing code, reading/writing files, and supporting GPT-4V's image recognition feature. It can also call DALL·E 3 to generate images. The project is a fork from a background of a virtual cat girl named Xiuliu, with removed live chat interaction and added voice input. It can receive questions from microphone or interface, answer them vocally, upload images and PDFs, process tasks through function calls, remember conversation content, search the web, generate images using DALL·E 3, read/write local files, execute JavaScript code in a sandbox, open local files or web pages, customize the cat girl's speaking style, save conversation screenshots, and support Azure OpenAI and other API endpoints in openai format. It also supports setting proxies and various AI models like GPT-4, GPT-3.5, and DALL·E 3.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.