Tiger

Neuralink for your AI Agents - LangChain - Autogen - CrewAI

Stars: 365

Tiger is a community-driven project developing a reusable and integrated tool ecosystem for LLM Agent Revolution. It utilizes Upsonic for isolated tool storage, profiling, and automatic document generation. With Tiger, you can create a customized environment for your agents or leverage the robust and publicly maintained Tiger curated by the community itself.

README:

Setup

Integrations: crewAI Integration • LangChain Integration • AutoGen Integration • Telegram Integration

Sources: Currently Tools • Custom Tools (On-Prem Docker) • Public Dashboard

Tiger is a community-driven project developing a reusable and integrated tool ecosystem for the LLM agent revolution. It uses Upsonic for isolated tool storage, profiling, and automatic documentation generation. With Tiger, you can create a customized environment for your agents or use the robust, publicly maintained Tiger 🐅 curated by the community itself.

Tiger, influenced by Neuralink, provides an AI-oriented computer interface with threads connected to the LLM interface. It offers a platform for AIs to control a computer by simply 'thinking'.

With Tiger, your LLM agents can write and execute code, use search engines, manage your calendar, control your mouse and keyboard, speak into your headphones, and much more. Essentially, anything conceived by your agent, Tiger will transform into concrete actions. This embodies the core philosophy of the Tiger project – to harness AI intelligence to generate tangible actions and support standard infrastructures. Our goals include:

- Providing a Utility point for agent tools across any framework that utilizes a function call mechanism,

- Building and nurturing a Community of tool support across diverse technologies and disciplines,

- Developing a Free, Open and MIT licensed tool library for the AI agent ecosystem.

The Tiger project maintains a general-purpose public library at tiger.upsonic.co. It contains the tools from the project's tools library. To use it, connect through the standard connection in the upsonic Python library. After installing the upsonic library, use the Tiger object to integrate the tools with your agents.

- Tiger requires Python 3.8 or higher.
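As a quick pre-install check, the version requirement above can be tested from Python itself. This is only a minimal sketch: the helper name `meets_minimum` is hypothetical and is not part of Tiger or Upsonic.

```python
import sys

# Minimum version taken from the requirement stated above.
MINIMUM_PYTHON = (3, 8)


def meets_minimum(version_info=sys.version_info, minimum=MINIMUM_PYTHON):
    """Return True when the (major, minor) version is at least `minimum`."""
    return tuple(version_info[:2]) >= tuple(minimum)


# Check the interpreter that is running this script.
print(meets_minimum())
```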

pip3 install upsonic

We are working on Upsonic, and the tools inside the tools folder are sent to the public Tiger with each release. We aim to create tools that need no API key and that work like ordinary human actions, such as searching Google with a mouse, keyboard, and browser.

- App
  - open
  - close
- browser
  - open_url
- Interpreter
  - python
    - check_package
    - execute
    - install_package
  - sh
    - execute
- Search
  - duckduckgo
  - read_website
- System
  - os_name
  - architecture
  - python_version
  - clipboard
    - copy
- Knowledge
  - put
  - pull
  - delete
  - index
  - reset
- Communication
  - telegram
    - as_user
      - delete_message
      - get_last_dialogs
      - get_last_messages
      - send_message
      - signin
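The README refers to tools by dotted paths such as 'interpreter.python', so the hierarchy above can be read as a tree whose leaves are functions. The sketch below flattens such a tree into dotted names. It assumes lowercase segments joined by dots; the names `leaves`, `TOOL_TREE`, and `dotted_names` are hypothetical helpers, not Tiger or Upsonic APIs, and the names actually registered in the public Tiger may differ (the Telegram sign-in example in this README uses `signin__user`, for instance).

```python
def leaves(*names):
    """Build a subtree whose entries are all leaf tools."""
    return {name: {} for name in names}


# Hierarchy copied from the tool list above (lowercased by assumption).
TOOL_TREE = {
    "app": leaves("open", "close"),
    "browser": leaves("open_url"),
    "interpreter": {
        "python": leaves("check_package", "execute", "install_package"),
        "sh": leaves("execute"),
    },
    "search": leaves("duckduckgo", "read_website"),
    "system": {
        **leaves("os_name", "architecture", "python_version"),
        "clipboard": leaves("copy"),
    },
    "knowledge": leaves("put", "pull", "delete", "index", "reset"),
    "communication": {
        "telegram": {
            "as_user": leaves(
                "delete_message",
                "get_last_dialogs",
                "get_last_messages",
                "send_message",
                "signin",
            ),
        },
    },
}


def dotted_names(tree, prefix=""):
    """Walk the tree depth-first and return one dotted path per leaf."""
    names = []
    for key, subtree in tree.items():
        path = f"{prefix}.{key}" if prefix else key
        if subtree:
            names.extend(dotted_names(subtree, path))
        else:
            names.append(path)
    return names


ALL_NAMES = dotted_names(TOOL_TREE)
print(len(ALL_NAMES))   # 23
print(ALL_NAMES[:3])    # ['app.open', 'app.close', 'browser.open_url']
```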

If you want to add functions to the public Tiger, see the Adding Tools section.

For the public Tiger, you can browse the functions along with their documentation and READMEs at tiger.upsonic.co. You can also use that site as the documentation.

Auth

- username: tiger

- password: tiger

Thanks to Upsonic, we only write the code, and it gives us a storage system with detailed documentation and CPU and RAM usage for each function. You can also search the functions and use them in your other projects with a connection code.

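To give a feel for what per-function CPU and RAM figures mean, here is a minimal standard-library sketch. It is not how Upsonic measures anything; `profile_call` is a hypothetical helper that reports CPU time via `time.process_time` and peak Python-level allocations via `tracemalloc`.

```python
import time
import tracemalloc


def profile_call(func, *args, **kwargs):
    """Run func once and return (result, cpu_seconds, peak_bytes)."""
    tracemalloc.start()
    started = time.process_time()
    try:
        result = func(*args, **kwargs)
    finally:
        # Always collect the measurements and stop tracing, even on error.
        cpu_seconds = time.process_time() - started
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    return result, cpu_seconds, peak_bytes


result, cpu_seconds, peak_bytes = profile_call(sum, range(100_000))
print(result, cpu_seconds, peak_bytes)
```

Note that `tracemalloc` only sees allocations made through Python's memory allocator, so native libraries may use more memory than the reported peak.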

The Tiger project aims to be available for the most popular agent frameworks, such as crewAI. This example shows the simplest tool integration for an AI agent: we ask who Onur Atakan ULUSOY is and wait for the answer.

pip3 install crewai 'crewai[tools]'

# Getting the Tiger tools
from upsonic import Tiger
tools = Tiger().crewai()

import os
from langchain_openai import ChatOpenAI

# Assumption: the OpenAI API key is stored in the OPENAI_API_KEY environment variable
OPENAI_API_KEY = os.environ["OPENAI_API_KEY"]
llm = ChatOpenAI(model="gpt-4-0125-preview", api_key=OPENAI_API_KEY)

from crewai import Agent, Task, Crew, Process

# Agent that receives the Tiger tool set
researcher = Agent(
    role='Senior Research Analyst',
    goal='Uncover cutting-edge developments in AI and data science',
    backstory="You are graduated from Research section of University",
    verbose=True,
    allow_delegation=False,
    tools=tools,
    llm=llm
)

task1 = Task(
    description="""Who is Onur Atakan ULUSOY""",
    expected_output="Full analysis report of Onur Atakan ULUSOY and putting the report to knowledge",
    agent=researcher
)

crew = Crew(
    agents=[researcher],
    tasks=[task1],
    verbose=2,
)

result = crew.kickoff()

pip3 install langchain langchain-openai

Tiger can also share its tools with LangChain agents, so your agents can call Tiger functions. In this example we ask a multiplication question: the agent uses Tiger by writing Python code, and Tiger runs it behind the scenes and returns the result. With this, the agent can perform mathematical operations with just two lines of code.

# Getting the Tiger tools
from upsonic import Tiger
tools = Tiger().langchain()

# Creating the agent and executor with the Tiger tool set
import os
from langchain_openai import ChatOpenAI
from langchain import hub
from langchain.agents import AgentExecutor, create_openai_functions_agent

# Assumption: the OpenAI API key is stored in the OPENAI_API_KEY environment variable
OPENAI_API_KEY = os.environ["OPENAI_API_KEY"]
llm = ChatOpenAI(model="gpt-4-0125-preview", api_key=OPENAI_API_KEY)
prompt = hub.pull("hwchase17/openai-functions-agent")
agent = create_openai_functions_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

# Asking for 15231 * 64231
agent_executor.invoke({"input": "What is the result of 15231 * 64231"})

pip3 install pyautogen

Tiger also integrates with AutoGen agents, so you can attach Tiger to them. This example uses the 'interpreter.python' module, which lets your AutoGen agent run Python code and view its result. With it, your agent can, for example, wait 2 seconds when asked to.

# Creating the agents with the Tiger tool set
import os
from typing_extensions import Annotated
import autogen

# Assumption: the OpenAI API key is stored in the OPENAI_API_KEY environment variable
OPENAI_API_KEY = os.environ["OPENAI_API_KEY"]

config_list = [
    {
        'model': 'gpt-4-0125-preview',
        'api_key': OPENAI_API_KEY,
    },
]

llm_config = {
    "config_list": config_list,
    "timeout": 120,
}

chatbot = autogen.AssistantAgent(
    name="chatbot",
    system_message="For coding tasks, only use the functions you have been provided with. Reply TERMINATE when the task is done.",
    llm_config=llm_config,
)

user_proxy = autogen.UserProxyAgent(
    name="user_proxy",
    is_termination_msg=lambda x: x.get("content", "") and x.get("content", "").rstrip().endswith("TERMINATE"),
    human_input_mode="NEVER",
    max_consecutive_auto_reply=10,
)

# Getting the Tiger tools
from upsonic import Tiger
Tiger().autogen(chatbot, user_proxy)

# Starting the chat with a question for the agent
user_proxy.initiate_chat(
    chatbot,
    message="What is Upsonic.co",
)

Users who want to use Telegram functionality in their LLM agents must call the signin function first. Use the function below; it will ask for your phone number and a verification code.

from upsonic import Tiger

Tiger().get("communication.telegram.as_user.signin__user")()

The Tiger project is open to any contribution to the public Tiger; further below there is also a way to create your own offline Tiger. To add a tool to the public Tiger, create a pull request with your new tool.

- Create a Python file in the tiger/tools section, for example tiger/tools/interpreter/python/execute.py

- Write your function in this format

#imports

def my_function(query: str) -> str:
    return query + " hi"

tool_name = "test.my_function"
tool_obj = my_function
tool_requirements = ["beautifulsoup4==4.12.3"]

- Create the pull request. When it is merged, the tool will be available in the public Tiger and on the dashboard.
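Before opening a pull request, it can help to sanity-check that a tool file follows the template above. The sketch below is a hypothetical checker, not part of Tiger or Upsonic; its rules (three module-level names, a callable `tool_obj`, a list of requirement strings) are inferred only from the template shown in this README.

```python
from types import SimpleNamespace

# Module-level names used by the template above.
REQUIRED_ATTRIBUTES = ("tool_name", "tool_obj", "tool_requirements")


def check_tool_module(module):
    """Return a list of problems found in a tool module (empty means OK)."""
    problems = [
        f"missing {attr}" for attr in REQUIRED_ATTRIBUTES if not hasattr(module, attr)
    ]
    if problems:
        return problems
    if not isinstance(module.tool_name, str) or not module.tool_name:
        problems.append("tool_name must be a non-empty string")
    if not callable(module.tool_obj):
        problems.append("tool_obj must be callable")
    reqs = module.tool_requirements
    if not isinstance(reqs, (list, tuple)) or not all(isinstance(r, str) for r in reqs):
        problems.append("tool_requirements must be a list of strings")
    return problems


def my_function(query: str) -> str:
    return query + " hi"


# Stand-in for an imported tool file, using the values from the template.
example = SimpleNamespace(
    tool_name="test.my_function",
    tool_obj=my_function,
    tool_requirements=["beautifulsoup4==4.12.3"],
)

print(check_tool_module(example))  # []
```

In practice the same function could be pointed at a real module object loaded with `importlib`, since it only relies on attribute access.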

To create your own Tiger, install an Upsonic On-Prem Docker container. It gives you a dashboard for viewing your own tools and generates documentation automatically. Then use the Upsonic client to connect to your On-Prem instance: get the connection code from your dashboard, and finally use the Tiger functions from the Upsonic client.

# Change

#from upsonic import Tiger
#Tiger().autogen(chatbot, user_proxy)

# to

# Your Upsonic connection code
upsonic.autogen(chatbot, user_proxy)

Alternative AI tools for Tiger

Similar Open Source Tools


testzeus-hercules

Hercules is the world’s first open-source testing agent designed to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, making testing simple, reliable, and efficient. Hercules adapts to various platforms like Salesforce and is suitable for CI/CD pipelines. It aims to democratize and disrupt test automation, making top-tier testing accessible to everyone. The tool is transparent, reliable, and community-driven, empowering teams to deliver better software. Hercules offers multiple ways to get started, including using PyPI package, Docker, or building and running from source code. It supports various AI models, provides detailed installation and usage instructions, and integrates with Nuclei for security testing and WCAG for accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.

llamabot

LlamaBot is a Pythonic bot interface to Large Language Models (LLMs), providing an easy way to experiment with LLMs in Jupyter notebooks and build Python apps utilizing LLMs. It supports all models available in LiteLLM. Users can access LLMs either through local models with Ollama or by using API providers like OpenAI and Mistral. LlamaBot offers different bot interfaces like SimpleBot, ChatBot, QueryBot, and ImageBot for various tasks such as rephrasing text, maintaining chat history, querying documents, and generating images. The tool also includes CLI demos showcasing its capabilities and supports contributions for new features and bug reports from the community.

rosa

ROSA is an AI Agent designed to interact with ROS-based robotics systems using natural language queries. It can generate system reports, read and parse ROS log files, adapt to new robots, and run various ROS commands using natural language. The tool is versatile for robotics research and development, providing an easy way to interact with robots and the ROS environment.

Memori

Memori is a memory fabric designed for enterprise AI that seamlessly integrates into existing software and infrastructure. It is agnostic to LLM, datastore, and framework, providing support for major foundational models and databases. With features like vectorized memories, in-memory semantic search, and a knowledge graph, Memori simplifies the process of attributing LLM interactions and managing sessions. It offers Advanced Augmentation for enhancing memories at different levels and supports various platforms, frameworks, database integrations, and datastores. Memori is designed to reduce development overhead and provide efficient memory management for AI applications.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

langchain

LangChain is a framework for developing Elixir applications powered by language models. It enables applications to connect language models to other data sources and interact with the environment. The library provides components for working with language models and off-the-shelf chains for specific tasks. It aims to assist in building applications that combine large language models with other sources of computation or knowledge. LangChain is written in Elixir and is not aimed for parity with the JavaScript and Python versions due to differences in programming paradigms and design choices. The library is designed to make it easy to integrate language models into applications and expose features, data, and functionality to the models.

giskard-oss

Giskard-oss is an Evaluation & Testing framework for AI systems that aims to control risks of performance, bias, and security issues. It focuses on LLM systems, with plans for a new scan and a rewrite of RAGET for version 3. The repository is structured as a Python workspace with three packages: giskard-core, giskard-checks, and giskard-agents. Developers can use the Makefile for common tasks, and contributions from the AI community are welcome. The project encourages stars for visibility and offers sponsorship options for support.

nobodywho

NobodyWho is a plugin for the Godot game engine that enables interaction with local LLMs for interactive storytelling. Users can install it from Godot editor or GitHub releases page, providing their own LLM in GGUF format. The plugin consists of `NobodyWhoModel` node for model file, `NobodyWhoChat` node for chat interaction, and `NobodyWhoEmbedding` node for generating embeddings. It offers a programming interface for sending text to LLM, receiving responses, and starting the LLM worker.

neo4j-graphrag-python

The Neo4j GraphRAG package for Python is an official repository that provides features for creating and managing vector indexes in Neo4j databases. It aims to offer developers a reliable package with long-term commitment, maintenance, and fast feature updates. The package supports various Python versions and includes functionalities for creating vector indexes, populating them, and performing similarity searches. It also provides guidelines for installation, examples, and development processes such as installing dependencies, making changes, and running tests.

Bard-API

The Bard API is a Python package that returns responses from Google Bard through the value of a cookie. It is an unofficial API that operates through reverse-engineering, utilizing cookie values to interact with Google Bard for users struggling with frequent authentication problems or unable to authenticate via Google Authentication. The Bard API is not a free service, but rather a tool provided to assist developers with testing certain functionalities due to the delayed development and release of Google Bard's API. It has been designed with a lightweight structure that can easily adapt to the emergence of an official API. Therefore, using it for any other purposes is strongly discouraged. If you have access to a reliable official PaLM-2 API or Google Generative AI API, replace the provided response with the corresponding official code. Check out https://github.com/dsdanielpark/Bard-API/issues/262.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

storm

STORM is an LLM system that writes Wikipedia-like articles from scratch based on Internet search. While the system cannot produce publication-ready articles that often require a significant number of edits, experienced Wikipedia editors have found it helpful in their pre-writing stage. **Try out our [live research preview](https://storm.genie.stanford.edu/) to see how STORM can help your knowledge exploration journey and please provide feedback to help us improve the system 🙏!**

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

giskard

Giskard is an open-source Python library that automatically detects performance, bias & security issues in AI applications. The library covers LLM-based applications such as RAG agents, all the way to traditional ML models for tabular data.

For similar tasks


agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

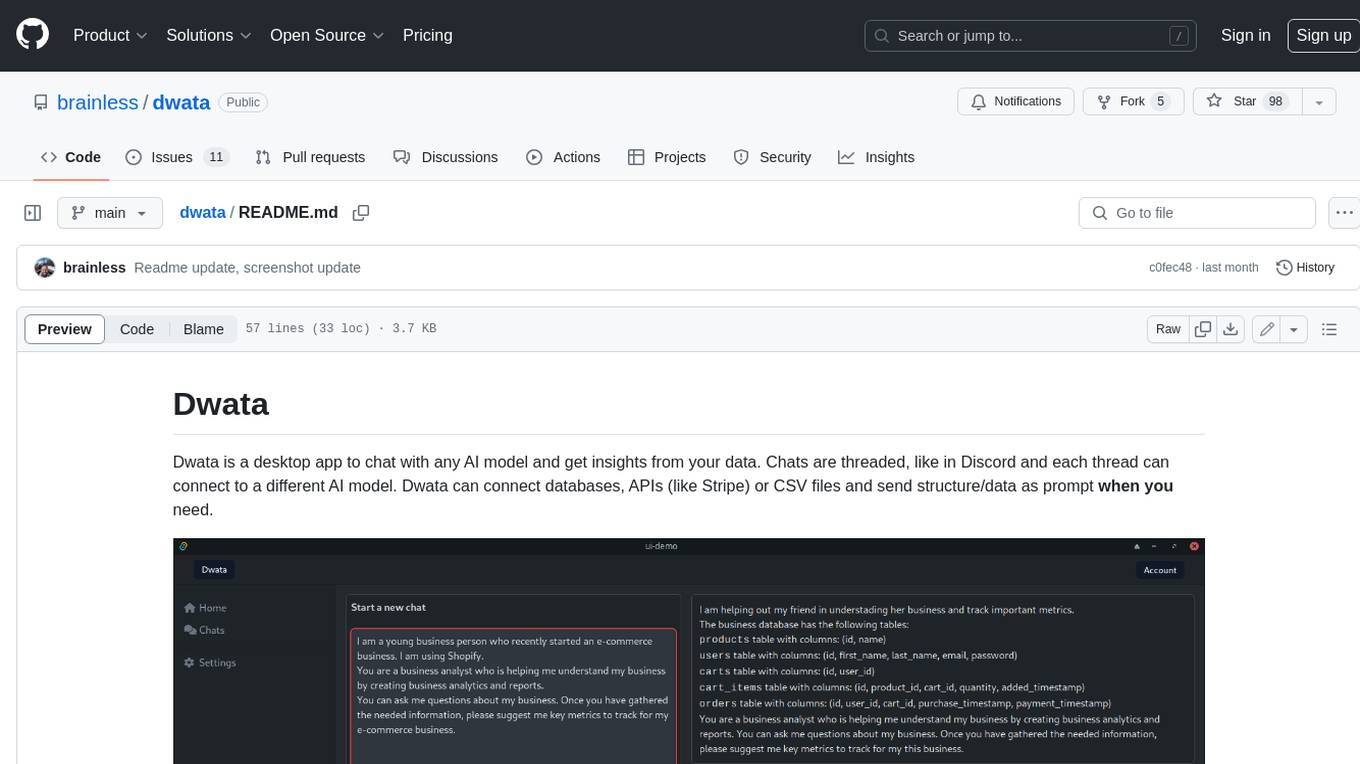

dwata

Dwata is a desktop application that allows users to chat with any AI model and gain insights from their data. Chats are organized into threads, similar to Discord, with each thread connecting to a different AI model. Dwata can connect to databases, APIs (such as Stripe), or CSV files and send structured data as prompts when needed. The AI's response will often include SQL or Python code, which can be used to extract the desired insights. Dwata can validate AI-generated SQL to ensure that the tables and columns referenced are correct and can execute queries against the database from within the application. Python code (typically using Pandas) can also be executed from within Dwata, although this feature is still in development. Dwata supports a range of AI models, including OpenAI's GPT-4, GPT-4 Turbo, and GPT-3.5 Turbo; Groq's LLaMA2-70b and Mixtral-8x7b; Phind's Phind-34B and Phind-70B; Anthropic's Claude; and Ollama's Llama 2, Mistral, and Phi-2 Gemma. Dwata can compare chats from different models, allowing users to see the responses of multiple models to the same prompts. Dwata can connect to various data sources, including databases (PostgreSQL, MySQL, MongoDB), SaaS products (Stripe, Shopify), CSV files/folders, and email (IMAP). The desktop application does not collect any private or business data without the user's explicit consent.

SWE-agent

SWE-agent is a tool that turns language models (e.g. GPT-4) into software engineering agents capable of fixing bugs and issues in real GitHub repositories. It achieves state-of-the-art performance on the full test set by resolving 12.29% of issues. The tool is built and maintained by researchers from Princeton University. SWE-agent provides a command line tool and a graphical web interface for developers to interact with. It introduces an Agent-Computer Interface (ACI) to facilitate browsing, viewing, editing, and executing code files within repositories. The tool includes features such as a linter for syntax checking, a specialized file viewer, and a full-directory string searching command to enhance the agent's capabilities. SWE-agent aims to improve prompt engineering and ACI design to enhance the performance of language models in software engineering tasks.

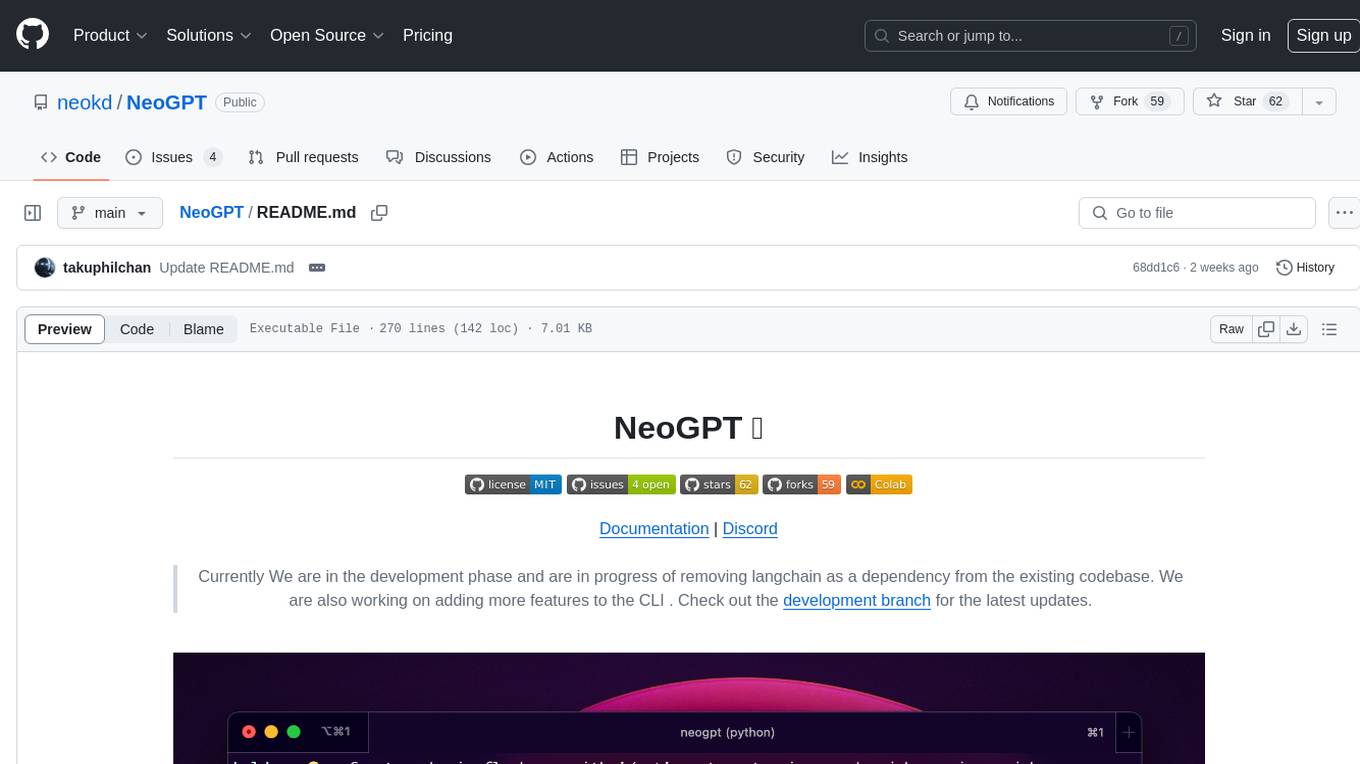

NeoGPT

NeoGPT is an AI assistant that transforms your local workspace into a powerhouse of productivity from your CLI. With features like code interpretation, multi-RAG support, vision models, and LLM integration, NeoGPT redefines how you work and create. It supports executing code seamlessly, multiple RAG techniques, vision models, and interacting with various language models. Users can run the CLI to start using NeoGPT and access features like Code Interpreter, building vector database, running Streamlit UI, and changing LLM models. The tool also offers magic commands for chat sessions, such as resetting chat history, saving conversations, exporting settings, and more. Join the NeoGPT community to experience a new era of efficiency and contribute to its evolution.

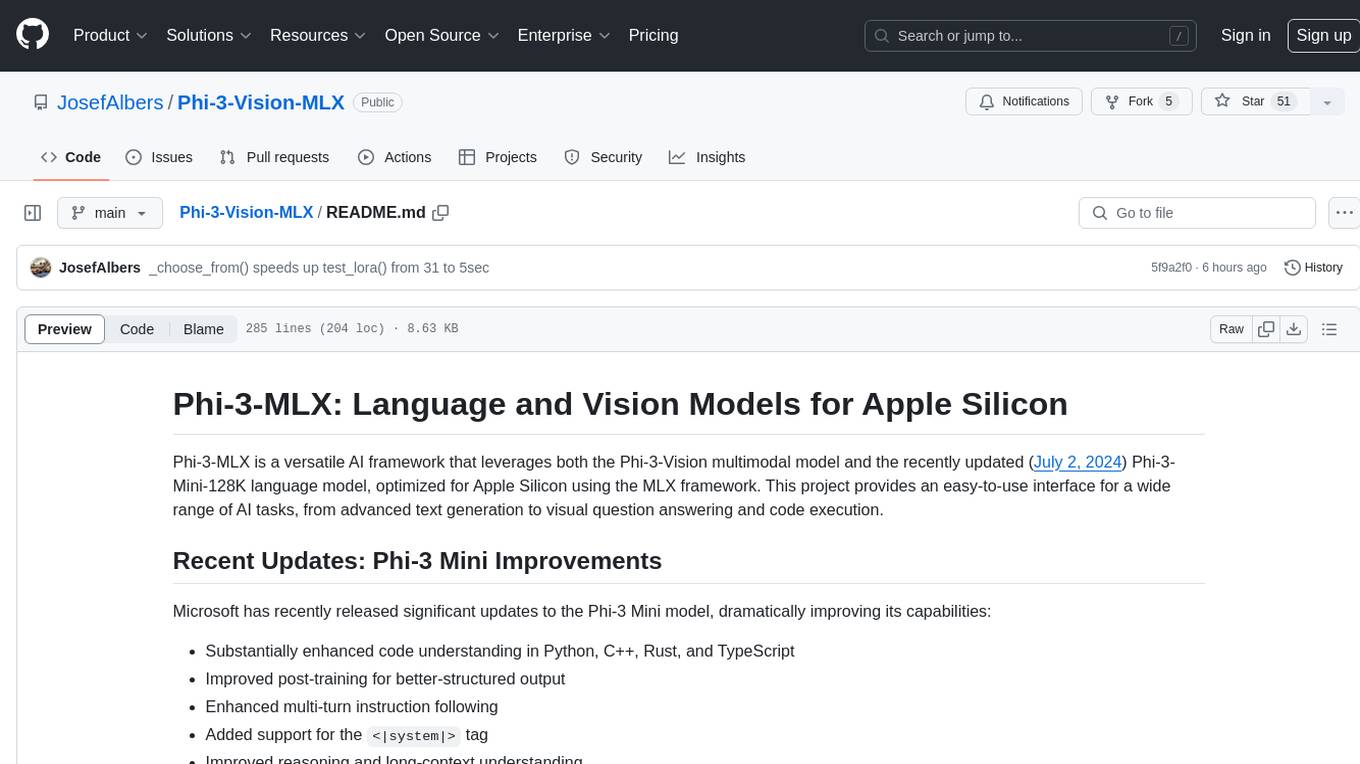

Phi-3-Vision-MLX

Phi-3-MLX is a versatile AI framework that leverages both the Phi-3-Vision multimodal model and the Phi-3-Mini-128K language model optimized for Apple Silicon using the MLX framework. It provides an easy-to-use interface for a wide range of AI tasks, from advanced text generation to visual question answering and code execution. The project features support for batched generation, flexible agent system, custom toolchains, model quantization, LoRA fine-tuning capabilities, and API integration for extended functionality.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.