llm-sandbox

Lightweight and portable LLM sandbox runtime (code interpreter) Python library.

Stars: 151

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

README:

Securely Execute LLM-Generated Code with Ease

LLM Sandbox is a lightweight and portable sandbox environment designed to run large language model (LLM) generated code in a safe and isolated manner using Docker containers. This project aims to provide an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs.

- Easy Setup: Quickly create sandbox environments with minimal configuration.

- Isolation: Run your code in isolated Docker containers to prevent interference with your host system.

- Flexibility: Support for multiple programming languages.

- Portability: Use predefined Docker images or custom Dockerfiles.

- Scalability: Supports Kubernetes and remote Docker hosts.

- Ensure you have Poetry installed.

- Add the package to your project:

```bash
poetry add llm-sandbox # or poetry add llm-sandbox[kubernetes] or poetry add llm-sandbox[podman] or poetry add llm-sandbox[docker]
```

- Ensure you have pip installed.

- Install the package:

```bash
pip install llm-sandbox # or pip install llm-sandbox[kubernetes] or pip install llm-sandbox[podman] or pip install llm-sandbox[docker]
```

See CHANGELOG.md for more details.
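After installing, it can help to confirm which backend client libraries are importable in the current environment. The following is a minimal sketch and not part of llm-sandbox: `available_backends` is a hypothetical helper, and the mapping from each extra to a top-level module name (`docker`, `kubernetes`, `podman`) is an assumption about what the extras install.

```python
from importlib.util import find_spec

# Assumed mapping from install extra to the top-level module it provides.
BACKEND_MODULES = {
    "docker": "docker",
    "kubernetes": "kubernetes",
    "podman": "podman",
}


def available_backends(modules=BACKEND_MODULES):
    """Return the sorted names of backends whose client module can be found."""
    return sorted(
        name for name, module in modules.items() if find_spec(module) is not None
    )


if __name__ == "__main__":
    print(available_backends())
```

An empty list suggests that none of the optional extras are installed in the active environment.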

The SandboxSession class manages the lifecycle of the sandbox environment, including the creation and destruction of Docker containers. Here's a typical lifecycle:

- Initialization: Create a `SandboxSession` object with the desired configuration.

- Open Session: Call the `open()` method to build/pull the Docker image and start the Docker container.

- Run Code: Use the `run()` method to execute code inside the sandbox. Currently, it supports Python, Java, JavaScript, C++, Go, and Ruby. See examples for more details.

- Close Session: Call the `close()` method to stop and remove the Docker container. If the `keep_template` flag is set to `True`, the Docker image will not be removed, and the last container state will be committed to the image.

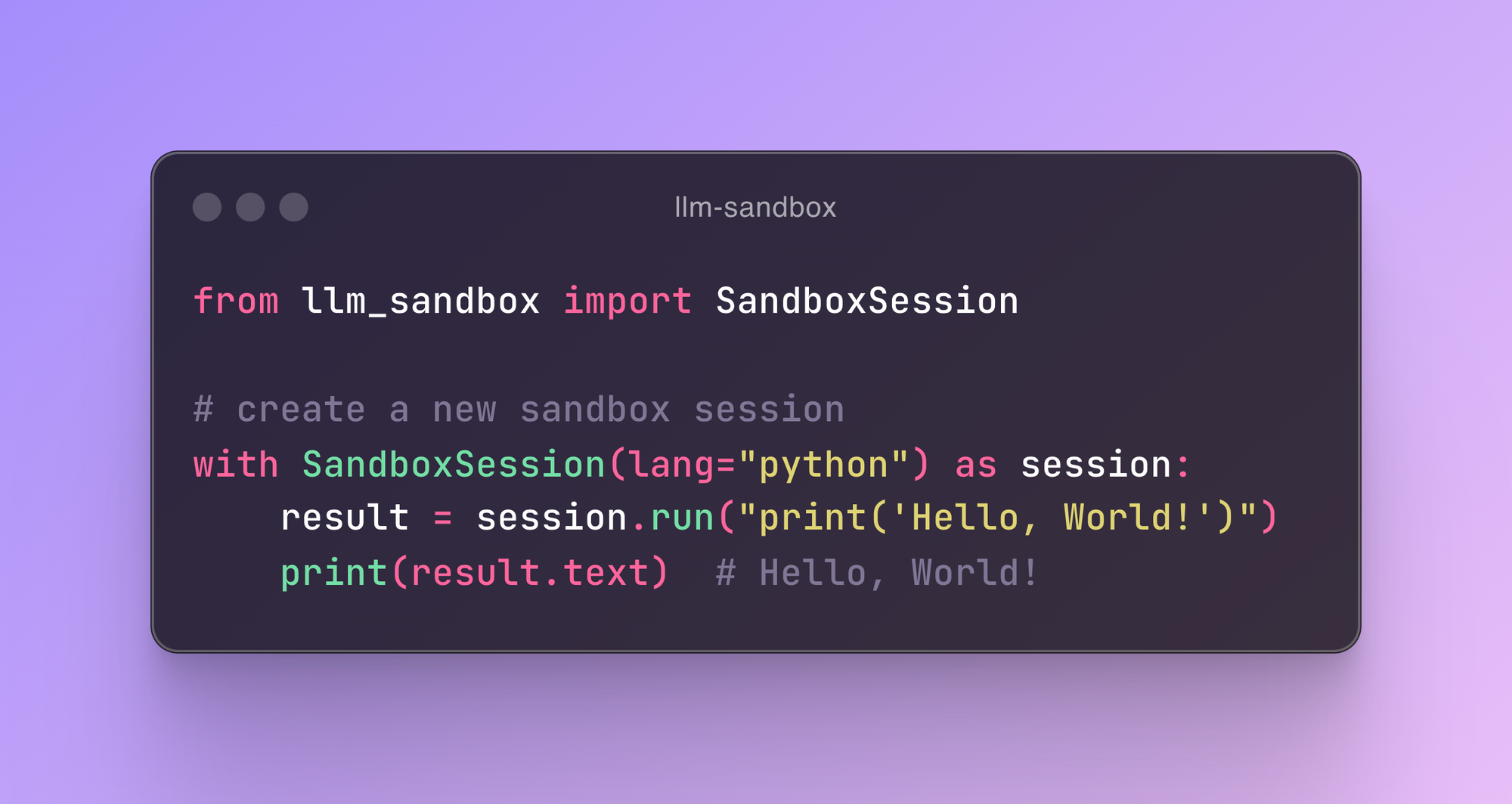
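The lifecycle steps can be sketched as explicit calls wrapped in `try`/`finally`, so that `close()` runs even when the executed code raises. This is a minimal sketch that assumes only the `open()`, `run()`, and `close()` methods described above. `opened` and `FakeSession` are hypothetical names used for illustration; a real `SandboxSession` would be passed in place of the fake.

```python
from contextlib import contextmanager


@contextmanager
def opened(session):
    """Open a session and guarantee close() runs, even if the body raises."""
    session.open()
    try:
        yield session
    finally:
        session.close()


class FakeSession:
    """Stand-in that records the call order; not part of llm-sandbox."""

    def __init__(self):
        self.calls = []

    def open(self):
        self.calls.append("open")

    def run(self, code):
        self.calls.append("run")
        return f"ran: {code}"

    def close(self):
        self.calls.append("close")


if __name__ == "__main__":
    fake = FakeSession()
    with opened(fake) as session:
        print(session.run("print('Hello, World!')"))
    print(fake.calls)
```

Keeping the cleanup in a `finally` block matters most when `keep_template` is not set, since a container that is never closed is also never removed.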

```python
from llm_sandbox import SandboxSession

# Create a new sandbox session
with SandboxSession(image="python:3.9.19-bullseye", keep_template=True, lang="python") as session:
    result = session.run("print('Hello, World!')")
    print(result)

# With custom Dockerfile
with SandboxSession(dockerfile="Dockerfile", keep_template=True, lang="python") as session:
    result = session.run("print('Hello, World!')")
    print(result)

# Or default image
with SandboxSession(lang="python", keep_template=True) as session:
    result = session.run("print('Hello, World!')")
    print(result)
```

LLM Sandbox also supports copying files between the host and the sandbox:

```python
from llm_sandbox import SandboxSession

with SandboxSession(lang="python", keep_template=True) as session:
    # Copy a file from the host to the sandbox
    session.copy_to_runtime("test.py", "/sandbox/test.py")

    # Run the copied Python code in the sandbox
    result = session.execute_command("python /sandbox/test.py")
    print(result)

    # Copy a file from the sandbox to the host
    session.copy_from_runtime("/sandbox/output.txt", "output.txt")
```

Using the Kubernetes backend with a custom pod manifest:

```python
from llm_sandbox import SandboxSession

pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {
        "name": "test",
        "namespace": "test",
        "labels": {"app": "sandbox"},
    },
    "spec": {
        "containers": [
            {
                "name": "sandbox-container",
                "image": "test",
                "tty": True,
                # volumeMounts is a list of mounts in the Pod spec
                "volumeMounts": [
                    {
                        "name": "tmp",
                        "mountPath": "/tmp",
                    },
                ],
            }
        ],
        "volumes": [{"name": "tmp", "emptyDir": {"sizeLimit": "5Gi"}}],
    },
}

with SandboxSession(
    backend="kubernetes",
    image="python:3.9.19-bullseye",
    dockerfile=None,
    lang="python",
    keep_template=False,
    verbose=False,
    pod_manifest=pod_manifest,
) as session:
    result = session.run("print('Hello, World!')")
    print(result)
```
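A pod manifest written as a Python dict is easy to get subtly wrong, because nothing checks its shape until the pod is created. In the Kubernetes Pod spec, `volumeMounts` is a list, and each mount's `name` must match an entry in `spec.volumes`. The following is a minimal sketch of a pre-flight check for those two rules only; `check_pod_manifest` is a hypothetical helper and not part of llm-sandbox or the Kubernetes client.

```python
def check_pod_manifest(manifest):
    """Return a list of problems found in a pod manifest dict (empty if none)."""
    problems = []
    spec = manifest.get("spec", {})
    # Names of all volumes declared at the pod level.
    declared = {volume.get("name") for volume in spec.get("volumes", [])}

    for container in spec.get("containers", []):
        name = container.get("name")
        mounts = container.get("volumeMounts", [])
        if not isinstance(mounts, list):
            problems.append(f"{name}: volumeMounts must be a list")
            continue
        for mount in mounts:
            if mount.get("name") not in declared:
                problems.append(
                    f"{name}: mount '{mount.get('name')}' has no matching volume"
                )
    return problems


if __name__ == "__main__":
    manifest = {
        "spec": {
            "containers": [
                {
                    "name": "sandbox-container",
                    "volumeMounts": [{"name": "tmp", "mountPath": "/tmp"}],
                }
            ],
            "volumes": [{"name": "tmp", "emptyDir": {}}],
        }
    }
    print(check_pod_manifest(manifest))
```

Running a check like this before opening a session surfaces a malformed manifest as a readable message rather than an API error from the cluster.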

Connecting to a remote Docker host:

```python
import docker
from llm_sandbox import SandboxSession

tls_config = docker.tls.TLSConfig(
    client_cert=("path/to/cert.pem", "path/to/key.pem"),
    ca_cert="path/to/ca.pem",
    verify=True
)
docker_client = docker.DockerClient(base_url="tcp://<your_host>:<port>", tls=tls_config)

with SandboxSession(
    client=docker_client,
    image="python:3.9.19-bullseye",
    keep_template=True,
    lang="python",
) as session:
    result = session.run("print('Hello, World!')")
    print(result)
```

Passing an existing Kubernetes client:

```python
from kubernetes import client, config
from llm_sandbox import SandboxSession

# Use local kubeconfig
config.load_kube_config()
k8s_client = client.CoreV1Api()

with SandboxSession(
    client=k8s_client,
    backend="kubernetes",
    image="python:3.9.19-bullseye",
    lang="python",
    pod_manifest=pod_manifest,  # None by default; here, the manifest from the Kubernetes example above
) as session:
    result = session.run("print('Hello from Kubernetes!')")
    print(result)
```

Using the Podman backend:

```python
from llm_sandbox import SandboxSession

with SandboxSession(
    backend="podman",
    lang="python",
    image="python:3.9.19-bullseye"
) as session:
    result = session.run("print('Hello from Podman!')")
    print(result)
```

Using LLM Sandbox as a LangChain tool:

```python
from typing import Optional, List

from llm_sandbox import SandboxSession
from langchain import hub
from langchain_openai import ChatOpenAI
from langchain.tools import tool
from langchain.agents import AgentExecutor, create_tool_calling_agent


@tool
def run_code(lang: str, code: str, libraries: Optional[List] = None) -> str:
    """
    Run code in a sandboxed environment.

    :param lang: The language of the code.
    :param code: The code to run.
    :param libraries: The libraries to use, it is optional.
    :return: The output of the code.
    """
    with SandboxSession(lang=lang, verbose=False) as session:  # type: ignore[attr-defined]
        return session.run(code, libraries).text


if __name__ == "__main__":
    llm = ChatOpenAI(model="gpt-4o", temperature=0)
    prompt = hub.pull("hwchase17/openai-functions-agent")
    tools = [run_code]

    agent = create_tool_calling_agent(llm, tools, prompt)
    agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

    output = agent_executor.invoke(
        {
            "input": "Write python code to calculate Pi number by Monte Carlo method then run it."
        }
    )
    print(output)

    output = agent_executor.invoke(
        {
            "input": "Write python code to calculate the factorial of a number then run it."
        }
    )
    print(output)

    output = agent_executor.invoke(
        {"input": "Write python code to calculate the Fibonacci sequence then run it."}
    )
    print(output)
```
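When a model chooses the `lang` argument, it may produce a value the sandbox does not recognise. A small guard can reject such values before a session is created. The sketch below is illustrative only: `normalize_lang` is a hypothetical helper, the language identifiers are the ones this README lists for `lang`, and the alias table is an assumption about spellings a model might emit.

```python
# Language identifiers listed in this README as valid values for `lang`.
SUPPORTED_LANGS = ("python", "java", "javascript", "cpp", "go", "ruby")

# Illustrative aliases only; not defined by llm-sandbox.
ALIASES = {"c++": "cpp", "js": "javascript", "py": "python"}


def normalize_lang(lang):
    """Return the canonical language name, or raise ValueError if unsupported."""
    key = lang.strip().lower()
    key = ALIASES.get(key, key)
    if key not in SUPPORTED_LANGS:
        raise ValueError(
            f"Unsupported language {lang!r}; expected one of {SUPPORTED_LANGS}"
        )
    return key


if __name__ == "__main__":
    print(normalize_lang(" Python "))
    print(normalize_lang("C++"))
```

A tool function could call `normalize_lang(lang)` first and return the error message to the agent, which gives the model a chance to retry with a supported language.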

Using LLM Sandbox as a LlamaIndex tool:

```python
from typing import Optional, List

from llm_sandbox import SandboxSession
from llama_index.llms.openai import OpenAI
from llama_index.core.tools import FunctionTool
from llama_index.core.agent import FunctionCallingAgentWorker

import nest_asyncio

nest_asyncio.apply()


def run_code(lang: str, code: str, libraries: Optional[List] = None) -> str:
    """
    Run code in a sandboxed environment.

    :param lang: The language of the code, must be one of ['python', 'java', 'javascript', 'cpp', 'go', 'ruby'].
    :param code: The code to run.
    :param libraries: The libraries to use, it is optional.
    :return: The output of the code.
    """
    with SandboxSession(lang=lang, verbose=False) as session:  # type: ignore[attr-defined]
        return session.run(code, libraries).text


if __name__ == "__main__":
    llm = OpenAI(model="gpt-4o", temperature=0)
    code_execution_tool = FunctionTool.from_defaults(fn=run_code)

    agent_worker = FunctionCallingAgentWorker.from_tools(
        [code_execution_tool],
        llm=llm,
        verbose=True,
        allow_parallel_tool_calls=False,
    )
    agent = agent_worker.as_agent()

    response = agent.chat(
        "Write python code to calculate Pi number by Monte Carlo method then run it."
    )
    print(response)

    response = agent.chat(
        "Write python code to calculate the factorial of a number then run it."
    )
    print(response)

    response = agent.chat(
        "Write python code to calculate the Fibonacci sequence then run it."
    )
    print(response)

    response = agent.chat("Calculate the sum of the first 10000 numbers.")
    print(response)
```

We welcome contributions! Here are some areas we're looking to improve:

- [ ] Add support for more programming languages

- [ ] Enhance security scanning patterns

- [ ] Improve resource monitoring accuracy

- [ ] Add more AI framework integrations

- [ ] Implement container pooling for better performance

- [ ] Add support for distributed execution

- [ ] Enhance logging and monitoring features

This project is licensed under the MIT License. See the LICENSE file for details.

See CHANGELOG.md for a list of changes and improvements in each version.


Alternative AI tools for llm-sandbox

Similar Open Source Tools

instructor

Instructor is a tool that provides structured outputs from Large Language Models (LLMs) in a reliable manner. It simplifies the process of extracting structured data by utilizing Pydantic for validation, type safety, and IDE support. With Instructor, users can define models and easily obtain structured data without the need for complex JSON parsing, error handling, or retries. The tool supports automatic retries, streaming support, and extraction of nested objects, making it production-ready for various AI applications. Trusted by a large community of developers and companies, Instructor is used by teams at OpenAI, Google, Microsoft, AWS, and YC startups.

clarifai-python

The Clarifai Python SDK offers a comprehensive set of tools for integrating Clarifai's AI platform into your applications. It covers computer vision capabilities such as classification, detection, and segmentation, and natural language capabilities such as classification, summarisation, generation, and Q&A. With just a few lines of code, you can leverage cutting-edge artificial intelligence to unlock valuable insights from visual and textual content.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting with, and observing agents. Users can access Letta via the REST API, the Python and TypeScript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

FlashLearn

FlashLearn is a tool that provides a simple interface and orchestration for incorporating Agent LLMs into workflows and ETL pipelines. It allows data transformations, classifications, summarizations, rewriting, and custom multi-step tasks using LLMs. Each step and task has a compact JSON definition, making pipelines easy to understand and maintain. FlashLearn supports LiteLLM, Ollama, OpenAI, DeepSeek, and other OpenAI-compatible clients.

solana-agent-kit

Solana Agent Kit is an open-source toolkit designed for connecting AI agents to Solana protocols. It enables agents, regardless of the model used, to autonomously perform various Solana actions such as trading tokens, launching new tokens, lending assets, sending compressed airdrops, executing blinks, and more. The toolkit integrates core blockchain features like token operations, NFT management via Metaplex, DeFi integration, Solana blinks, AI integration features with LangChain, autonomous modes, and AI tools. It provides ready-to-use tools for blockchain operations, supports autonomous agent actions, and offers features like memory management, real-time feedback, and error handling. Solana Agent Kit facilitates tasks such as deploying tokens, creating NFT collections, swapping tokens, lending tokens, staking SOL, and sending SPL token airdrops via ZK compression. It also includes functionalities for fetching price data from Pyth and relies on key Solana and Metaplex libraries for its operations.

langchainrb

Langchain.rb is a Ruby library that makes it easy to build LLM-powered applications. It provides a unified interface to a variety of LLMs, vector search databases, and other tools, making it easy to build and deploy RAG (Retrieval Augmented Generation) systems and assistants. Langchain.rb is open source and available under the MIT License.

instructor

Instructor is a Python library that makes it a breeze to work with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

LightRAG

LightRAG is a repository hosting the code for LightRAG, a system that supports seamless integration of custom knowledge graphs, Oracle Database 23ai, Neo4J for storage, and multiple file types. It includes features like entity deletion, batch insert, incremental insert, and graph visualization. LightRAG provides an API server implementation for RESTful API access to RAG operations, allowing users to interact with it through HTTP requests. The repository also includes evaluation scripts, code for reproducing results, and a comprehensive code structure.

agent-sdk-go

Agent Go SDK is a powerful Go framework for building production-ready AI agents that seamlessly integrates memory management, tool execution, multi-LLM support, and enterprise features into a flexible, extensible architecture. It offers core capabilities like multi-model intelligence, modular tool ecosystem, advanced memory management, and MCP integration. The SDK is enterprise-ready with built-in guardrails, complete observability, and support for enterprise multi-tenancy. It provides a structured task framework, declarative configuration, and zero-effort bootstrapping for development experience. The SDK supports environment variables for configuration and includes features like creating agents with YAML configuration, auto-generating agent configurations, using MCP servers with an agent, and CLI tool for headless usage.

deep-searcher

DeepSearcher is a tool that combines reasoning LLMs and Vector Databases to perform search, evaluation, and reasoning based on private data. It is suitable for enterprise knowledge management, intelligent Q&A systems, and information retrieval scenarios. The tool maximizes the utilization of enterprise internal data while ensuring data security, supports multiple embedding models, and provides support for multiple LLMs for intelligent Q&A and content generation. It also includes features like private data search, vector database management, and document loading with web crawling capabilities under development.

pocketgroq

PocketGroq is a tool that provides advanced functionalities for text generation, web scraping, web search, and AI response evaluation. It includes features like an Autonomous Agent for answering questions, web crawling and scraping capabilities, enhanced web search functionality, and flexible integration with Ollama server. Users can customize the agent's behavior, evaluate responses using AI, and utilize various methods for text generation, conversation management, and Chain of Thought reasoning. The tool offers comprehensive methods for different tasks, such as initializing RAG, error handling, and tool management. PocketGroq is designed to enhance development processes and enable the creation of AI-powered applications with ease.

acte

Acte is a framework designed to build GUI-like tools for AI Agents. It aims to address the issues of cognitive load and degrees of freedom when interacting with multiple APIs in complex scenarios. By providing a graphical user interface (GUI) for Agents, Acte helps reduce cognitive load and constrains interaction, similar to how humans interact with computers through GUIs. The tool offers APIs for starting new sessions, executing actions, and displaying screens, accessible via HTTP requests or the SessionManager class.

For similar tasks

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

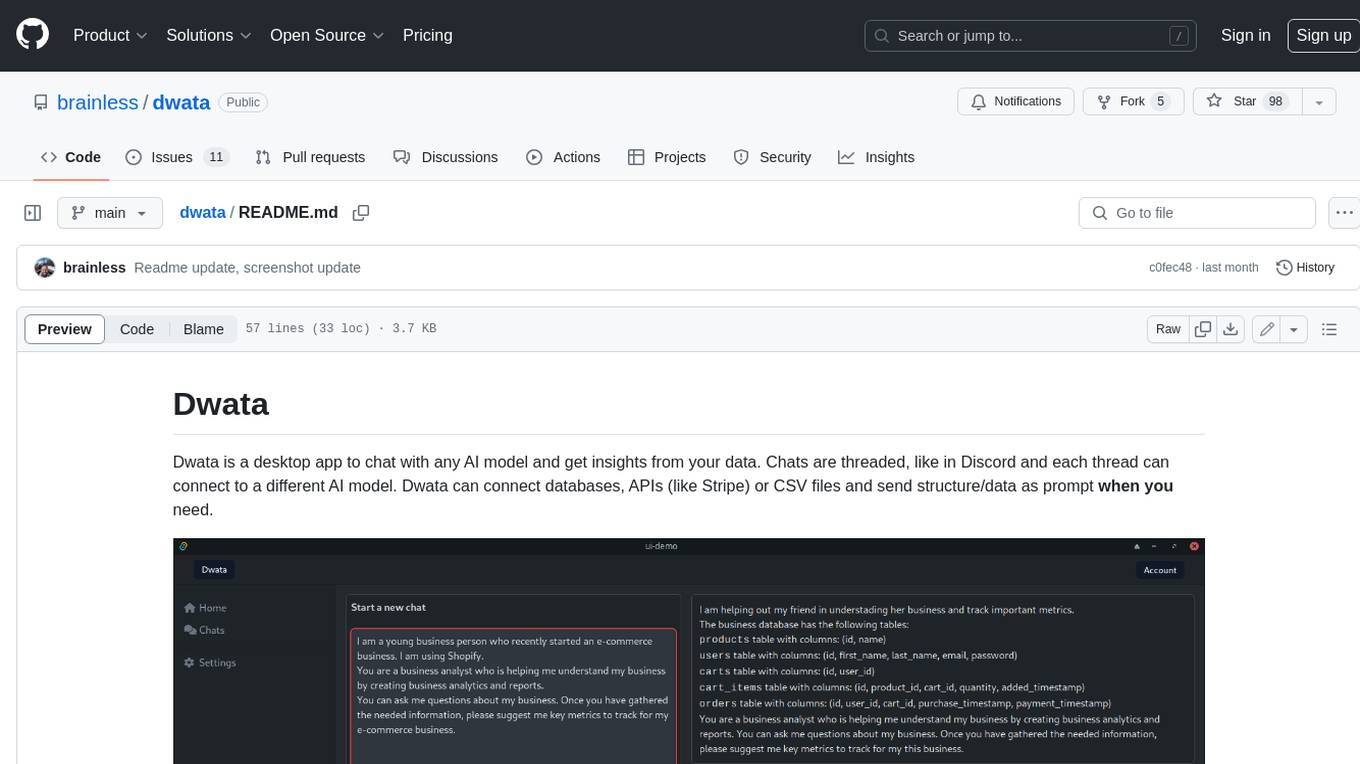

dwata

Dwata is a desktop application that allows users to chat with any AI model and gain insights from their data. Chats are organized into threads, similar to Discord, with each thread connecting to a different AI model. Dwata can connect to databases, APIs (such as Stripe), or CSV files and send structured data as prompts when needed. The AI's response will often include SQL or Python code, which can be used to extract the desired insights. Dwata can validate AI-generated SQL to ensure that the tables and columns referenced are correct and can execute queries against the database from within the application. Python code (typically using Pandas) can also be executed from within Dwata, although this feature is still in development. Dwata supports a range of AI models, including OpenAI's GPT-4, GPT-4 Turbo, and GPT-3.5 Turbo; Groq's LLaMA2-70b and Mixtral-8x7b; Phind's Phind-34B and Phind-70B; Anthropic's Claude; and Ollama's Llama 2, Mistral, and Phi-2 Gemma. Dwata can compare chats from different models, allowing users to see the responses of multiple models to the same prompts. Dwata can connect to various data sources, including databases (PostgreSQL, MySQL, MongoDB), SaaS products (Stripe, Shopify), CSV files/folders, and email (IMAP). The desktop application does not collect any private or business data without the user's explicit consent.

Tiger

Tiger is a community-driven project developing a reusable and integrated tool ecosystem for LLM Agent Revolution. It utilizes Upsonic for isolated tool storage, profiling, and automatic document generation. With Tiger, you can create a customized environment for your agents or leverage the robust and publicly maintained Tiger curated by the community itself.

SWE-agent

SWE-agent is a tool that turns language models (e.g. GPT-4) into software engineering agents capable of fixing bugs and issues in real GitHub repositories. It achieves state-of-the-art performance on the full test set by resolving 12.29% of issues. The tool is built and maintained by researchers from Princeton University. SWE-agent provides a command line tool and a graphical web interface for developers to interact with. It introduces an Agent-Computer Interface (ACI) to facilitate browsing, viewing, editing, and executing code files within repositories. The tool includes features such as a linter for syntax checking, a specialized file viewer, and a full-directory string searching command to enhance the agent's capabilities. SWE-agent aims to improve prompt engineering and ACI design to enhance the performance of language models in software engineering tasks.

NeoGPT

NeoGPT is an AI assistant that transforms your local workspace into a powerhouse of productivity from your CLI. With features like code interpretation, multi-RAG support, vision models, and LLM integration, NeoGPT redefines how you work and create. It supports executing code seamlessly, multiple RAG techniques, vision models, and interacting with various language models. Users can run the CLI to start using NeoGPT and access features like Code Interpreter, building vector database, running Streamlit UI, and changing LLM models. The tool also offers magic commands for chat sessions, such as resetting chat history, saving conversations, exporting settings, and more. Join the NeoGPT community to experience a new era of efficiency and contribute to its evolution.

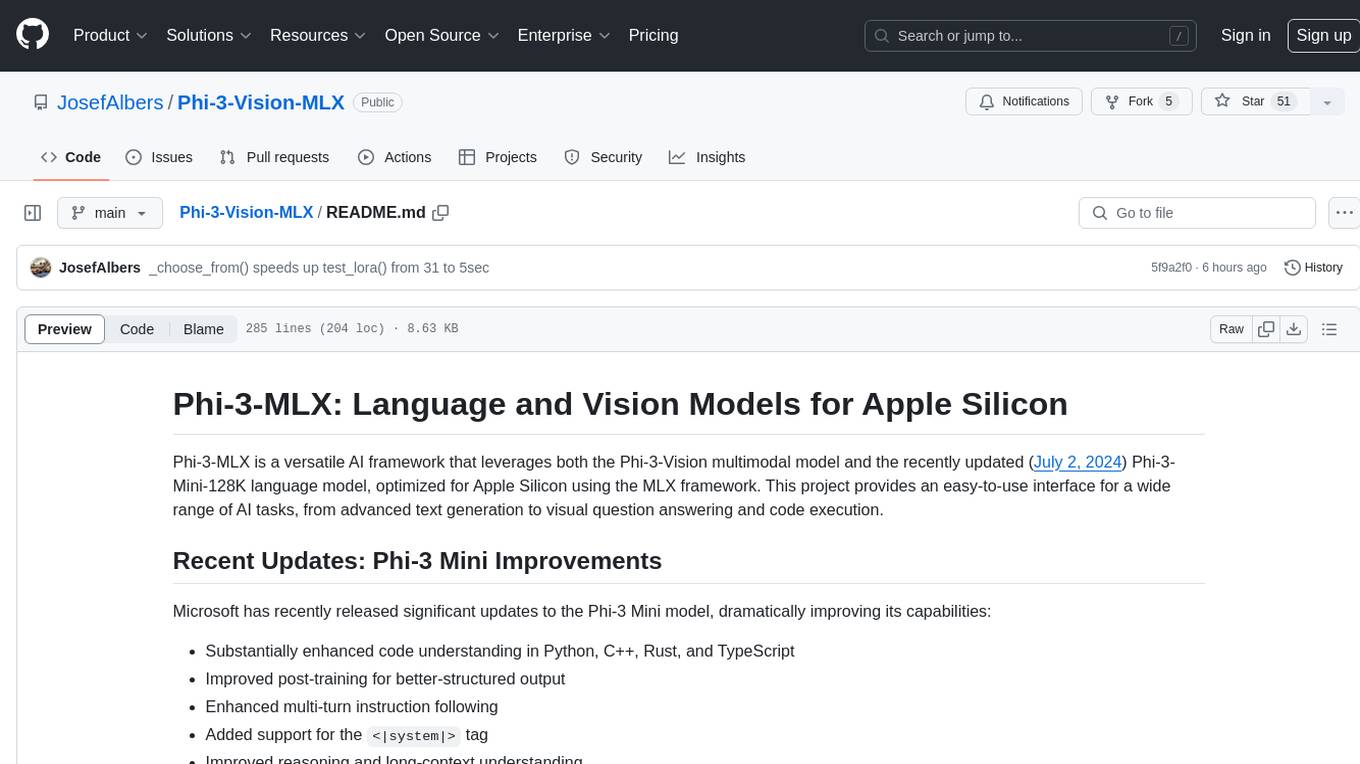

Phi-3-Vision-MLX

Phi-3-MLX is a versatile AI framework that leverages both the Phi-3-Vision multimodal model and the Phi-3-Mini-128K language model optimized for Apple Silicon using the MLX framework. It provides an easy-to-use interface for a wide range of AI tasks, from advanced text generation to visual question answering and code execution. The project features support for batched generation, flexible agent system, custom toolchains, model quantization, LoRA fine-tuning capabilities, and API integration for extended functionality.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.