lovelaice

An AI-powered assistant for your terminal and editor

Stars: 54

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

README:

Lovelaice is an LLM-powered bot that sits in your terminal. It has access to your files and it can run bash commands for you. It can also access the Internet and search for answers to general questions, as well as technical ones.

Install with pip:

pip install lovelaice

Before using Lovelaice, you will need an API key for OpenAI and a model.

Run lovelaice --config to set up lovelaice for the first time.

$ lovelaice --config

The API base URL (in case you're not using OpenAI)

base_url: <ENTER OR LEAVE DEFAULT>

The API key to authenticate with the LLM provider

api_key: <ENTER YOUR API KEY>

The concrete LLM model to use

model (gpt-4o): <ENTER OR LEAVE DEFAULT>

You can also define a custom base URL (if you are using an alternative, OpenAI-compatible provider such as Fireworks AI or Mistral AI, or a local LLM server such as LMStudio or vLLM). Leave it blank if you are using OpenAI's API.
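To make the base URL setting more concrete, here is a minimal sketch of how a request to an OpenAI-compatible provider could be built. It is not Lovelaice's actual code: `build_chat_request`, the placeholder key, and the local server URL are made up for illustration. The only things taken as given are the standard `/chat/completions` path and bearer-token header that OpenAI-compatible APIs share.

```python
import json
import urllib.request

# Default OpenAI endpoint; OpenAI-compatible servers expose the same paths.
DEFAULT_BASE_URL = "https://api.openai.com/v1"


def build_chat_request(api_key, model, prompt, base_url=""):
    """Build (but do not send) a chat completion request (hypothetical helper)."""
    # An empty base_url falls back to OpenAI, like leaving the setting blank.
    root = (base_url or DEFAULT_BASE_URL).rstrip("/")
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{root}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Assumed local server URL (e.g. a vLLM or LMStudio instance) and placeholder key.
request = build_chat_request("sk-placeholder", "gpt-4o", "hello", "http://localhost:8000/v1")
print(request.full_url)
```

Only the root of the URL changes between providers, which is why a single base_url setting is enough to switch between OpenAI and a local server.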

Once configured, you'll find a .lovelaice.yml file in your home directory (or wherever you ran lovelaice --config). These configuration files will stack up, so you can have a general configuration in your home folder, and a project-specific configuration in any sub-folder.
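As a rough illustration of what "stacking" could mean, the sketch below lists candidate config locations from the outermost folder down to the current one and merges them so that the most specific file wins. The precedence rule, the flat key layout, and the helper names `config_search_order` and `stack_configs` are assumptions, not Lovelaice's documented behavior. Parsing the YAML itself is left out.

```python
from pathlib import Path

CONFIG_NAME = ".lovelaice.yml"


def config_search_order(start):
    """List candidate config files from the outermost folder down to start."""
    start = Path(start)
    # start.parents runs from the nearest parent up to the root, so reverse it.
    return [folder / CONFIG_NAME for folder in reversed([start, *start.parents])]


def stack_configs(layers):
    """Merge already-parsed configs in order; later (more specific) layers win."""
    merged = {}
    for layer in layers:
        merged.update(layer)
    return merged


# Hypothetical parsed contents of a home-level and a project-level file.
general = {"base_url": "", "api_key": "sk-general", "model": "gpt-4o"}
project = {"model": "my-local-model"}
print(stack_configs([general, project]))
```

With this assumed precedence, the project file only needs the keys it wants to override; everything else falls through to the general configuration.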

You can use lovelaice from the command line to ask anything. Lovelaice understands many different types of requests, and will employ different tools according to the question.

You can also use Lovelaice in interactive mode just by typing lovelaice without a query. It will launch a conversation loop that you can close at any time with Ctrl+D.
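For the curious, a conversation loop that ends on Ctrl+D can be sketched in a few lines of Python. This is an illustration under assumptions, not the real implementation: `chat_loop` and its `reply` callback are hypothetical names, and the actual loop presumably also keeps track of tools and context.

```python
def chat_loop(reply, read=input, write=print):
    """Answer queries until end-of-input (Ctrl+D); return the number of turns."""
    turns = 0
    while True:
        try:
            query = read("> ")
        except EOFError:
            # Ctrl+D closes standard input, which input() reports as EOFError.
            break
        if not query.strip():
            continue  # ignore empty lines
        write(reply(query))
        turns += 1
    return turns
```

Calling chat_loop with a function that forwards each query to the LLM would give the basic interactive behavior; the read and write parameters exist only to make the loop easy to test.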

Remember to run lovelaice --help for a full description of all commands.

You can use lovelaice as a basic completion model, passing --complete or -c for short.

$ lovelaice -c Once upon a time, in a small village

Once upon a time, in a small village nestled in the rolling hills of Provence,

there was a tiny, exquisite perfume shop. The sign above the door read

"Maison de Rêve" – House of Dreams. The shop was owned by a kind-hearted and

talented perfumer named Colette, who spent her days crafting enchanting fragrances

that transported those who wore them to a world of beauty and wonder.

[...]

You can also run lovelaice --complete-files [files] to have lovelaice run completion on text files instead. It will scan each file for the first occurrence of the +++ token and run completion using the preceding text as the prompt.
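The token mechanism can be sketched roughly as follows. The README only says the text before the first +++ is used as the prompt, so this sketch assumes that the completion replaces the token and that any text after it is kept. `complete_at_token` and `fake_complete` are hypothetical names; the latter stands in for a real LLM call.

```python
TOKEN = "+++"


def complete_at_token(text, complete):
    """Replace the first TOKEN with a completion of the text before it."""
    prompt, found, rest = text.partition(TOKEN)
    if not found:
        return text  # no token, nothing to do
    # Assumption: the completion replaces the token and later text is kept.
    return prompt + complete(prompt) + rest


def fake_complete(prompt):
    """Hypothetical stand-in for a real LLM completion call."""
    return "there lived a baker."


print(complete_at_token("Once upon a time, +++\nThe end.", fake_complete))
```

Because only the first token is handled per pass, a file with several +++ markers would need one completion run per marker under these assumptions.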

Additionally, you can add --watch to leave lovelaice running in the background, watching for file changes to all of the arguments to --complete-files. Every time a file changes, it will run completion there.

In conjunction with a sensible text editor (e.g. one that reloads files when changed on disk), this feature allows you to have an almost seamless integration between lovelaice and your editor. Just type a +++ anywhere you want lovelaice to complete, hit Ctrl+S, and wait for a few seconds. No need for fancy editor plugins.
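If you wonder how such a watcher might work, here is a minimal polling sketch based on file modification times. Polling is an assumption made for simplicity (the real tool may rely on filesystem events instead), and `changed_files`, `watch`, and the `on_change` callback are hypothetical names.

```python
import os
import time


def changed_files(paths, last_seen):
    """Return paths whose modification time differs from last_seen (updated in place)."""
    changed = []
    for path in paths:
        try:
            mtime = os.stat(path).st_mtime_ns
        except FileNotFoundError:
            continue  # the editor may be mid-save; try again on the next poll
        if last_seen.get(path) != mtime:
            last_seen[path] = mtime
            changed.append(path)
    return changed


def watch(paths, on_change, interval=1.0, polls=None):
    """Call on_change(path) whenever a watched file changes on disk."""
    last_seen = {}
    changed_files(paths, last_seen)  # record the starting state without firing
    count = 0
    while polls is None or count < polls:
        time.sleep(interval)
        for path in changed_files(paths, last_seen):
            on_change(path)
            # Absorb the write made by on_change so it does not retrigger itself.
            changed_files([path], last_seen)
        count += 1
```

Here on_change would run the completion on the file and write the result back, which is what the editor then picks up when it reloads the file from disk.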

You can ask a casual question about virtually anything:

$ lovelaice what is the meaning of life

The meaning of life is a philosophical and metaphysical question

related to the significance of living or existence in general.

Many different people, cultures, and religions have different

beliefs about the purpose and meaning of life.

[...]

You can also ask lovelaice to do something in your terminal:

$ lovelaice how much free space do i have

:: Using Bash

Running the following code:

$ df -h | grep '/$' | awk '{ print $4 }'

[y]es / [N]o y

5,5G

You have approximately 5.5 Gigabytes of free space left on your filesystem.

You can ask a general question about programming:

$ lovelaice how to make an async iterator in python

:: Using Codegen

In Python, you can create an asynchronous iterator using the `async for` statement and the `async def` syntax. Asynchronous iterators are useful when you want to iterate over a sequence of asynchronous tasks, such as fetching data from a web service.

Here's a general explanation of how to create an asynchronous iterator in Python:

1. Define an asynchronous generator function using the `async def` syntax.

2. Inside the function, use the `async for` statement to iterate over the asynchronous tasks.

3. Use the `yield` keyword to return each item from the generator function.

Here's an example of an asynchronous iterator that generates a sequence of integers:

```python
async def async_integer_generator():
    i = 0
    while True:
        yield i
        i += 1
        await asyncio.sleep(0.1)
```

[...]

Overall, creating an asynchronous iterator in Python is a powerful way to iterate over a sequence of asynchronous tasks. By using the `async def` syntax, the `async for` statement, and the `yield` keyword, you can create an efficient and flexible iterator that can handle a wide range of use cases.

And if you ask it something math-related it can generate and run Python for you:

$ lovelaice throw three d20 dices and return the middle value

:: Using Interpreter

Will run the following code:

def solve():
    values = [random.randint(1, 20) for _ in range(3)]
    values.sort()
    return values[1]

result = solve()

[y]es / [N]o y

Result: 14

NOTE: Lovelaice will always require you to explicitly agree to run any code. Make sure that you understand what the code will do, otherwise there is no guarantee your computer won't suddenly grow a hand and slap you in the face, like, literally.
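The consent step shown in the examples above can be sketched like this. It is an illustration only: `is_yes` and `run_with_consent` are hypothetical names, and the one behavior borrowed from the transcripts is that the capital N in the prompt suggests "no" is the default answer.

```python
import subprocess


def is_yes(answer):
    """Only an explicit y/yes counts as consent; anything else means no."""
    return answer.strip().lower() in {"y", "yes"}


def run_with_consent(command, ask=input):
    """Show a shell command, ask for confirmation, and run it only on 'yes'."""
    print("Running the following code:")
    print(f"$ {command}")
    if not is_yes(ask("[y]es / [N]o ")):
        return None  # declined (or just pressed Enter): nothing is executed
    completed = subprocess.run(command, shell=True, capture_output=True, text=True)
    return completed.stdout
```

Defaulting to "no" means that pressing Enter by accident never executes anything, which is the safer failure mode for generated code.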

So far Lovelaice has both general-purpose chat capabilities and access to bash. Here is a list of things you can try:

- Chat with Lovelaice about casual stuff

- Ask Lovelaice questions about your system, distribution, free space, etc.

- Order Lovelaice to create folders, install packages, update apps, etc.

- Order Lovelaice to set settings, turn off the wifi, restart the computer, etc.

- Order Lovelaice to stage, commit, push, show diffs, etc.

- Ask Lovelaice to solve some math equation, generate random numbers, etc.

In general, you can attempt to ask Lovelaice to do just about anything that requires bash, and it will try its best. Your imagination is the only limit.

Here are some features under active development:

- JSON mode, reply with a JSON object if invoked with --json.

- Integrate with instructor for robust JSON responses.

- Integrate with rich for better CLI experience.

- Keep conversation history in context.

- Separate tools from skills to improve intent detection.

- Read files and answer questions about their content.

- Recover from mistakes and suggest solutions (e.g., installing libraries).

- Create and modify notes, emails, calendar entries, etc.

- Read your emails and send emails on your behalf (with confirmation!).

- Tell you about the weather (https://open-meteo.com/).

- Scaffold coding projects, creating files and running initialization commands.

- Search in Google, crawl webpages, and answer questions using that content.

- VSCode extension.

- Transcribe audio.

- Extract structured information from plain text.

- Understand Excel sheets and draw graphs.

- Call APIs and download data, using the OpenAPI.json specification.

- More powerful planning, e.g., use several tools for a single task.

- Learning mode to add instructions for concrete skills.

- v0.3.6: Basic API (completion only)

- v0.3.5: Structured configuration in YAML

- v0.3.4: Basic functionality and tools

Code is MIT. Just fork, clone, edit, and open a PR. All suggestions, bug reports, and contributions are welcome.

What models do you use?

Currently, all OpenAI-compatible APIs are supported, which should cover most use cases, including major commercial LLM providers as well as local servers.

If you want to use a custom API that is not OpenAI-compatible, you can easily set up a proxy with LiteLLM.

I do not have specific plans to add any other API, because maintaining different APIs could become hell, but you are free to submit a PR for your favorite API and it might get included.

Is this safe?

Lovelaice will never run code without your explicit consent. That being said, LLMs are known for making subtle mistakes, so never trust that the code does what Lovelaice says it does. Always read the code and make sure you understand what it does.

When in doubt, adopt the same stance as if you were copy/pasting code from a random blog somewhere on the web (because that is exactly what you're doing).

Which model(s) should I use?

I have tested lovelaice extensively using Llama 3.1 70b, from Fireworks AI.

It is a fairly versatile model that is still affordable enough for full-time use. It works well for coding, general chat, bash generation, creative storytelling, and basically anything I use lovelaice for on a daily basis.

Thus, any model with general performance similar to Llama 3.1 70b will probably work similarly well. You are unlikely to need, e.g., a 400b model unless you have a pretty complex task. However, using very small models, e.g., 11b or less, may lead to degraded performance, especially because these models may fail to even detect which tool is best to use. In any case, there is no substitute for experimentation.


Alternative AI tools for lovelaice

Similar Open Source Tools


serena

Serena is a powerful coding agent that integrates with existing LLMs to provide essential semantic code retrieval and editing tools. It is free to use and does not require API keys or subscriptions. Serena can be used for coding tasks such as analyzing, planning, and editing code directly on your codebase. It supports various programming languages and offers semantic code analysis capabilities through language servers. Serena can be integrated with different LLMs using the model context protocol (MCP) or Agno framework. The tool provides a range of functionalities for code retrieval, editing, and execution, making it a versatile coding assistant for developers.

kork

Kork is an experimental Langchain chain that helps build natural language APIs powered by LLMs. It allows assembling a natural language API from python functions, generating a prompt for correct program writing, executing programs safely, and controlling the kind of programs LLMs can generate. The language is limited to variable declarations, function invocations, and arithmetic operations, ensuring predictability and safety in production settings.

TinyTroupe

TinyTroupe is an experimental Python library that leverages Large Language Models (LLMs) to simulate artificial agents called TinyPersons with specific personalities, interests, and goals in simulated environments. The focus is on understanding human behavior through convincing interactions and customizable personas for various applications like advertisement evaluation, software testing, data generation, project management, and brainstorming. The tool aims to enhance human imagination and provide insights for better decision-making in business and productivity scenarios.

boxcars

Boxcars is a Ruby gem that enables users to create new systems with AI composability, incorporating concepts such as LLMs, Search, SQL, Rails Active Record, Vector Search, and more. It allows users to work with Boxcars, Trains, Prompts, Engines, and VectorStores to solve problems and generate text results. The gem is designed to be user-friendly for beginners and can be extended with custom concepts. Boxcars is actively seeking ways to enhance security measures to prevent malicious actions. Users can use Boxcars for tasks like running calculations, performing searches, generating Ruby code for math operations, and interacting with APIs like OpenAI, Anthropic, and Google SERP.

claude.vim

Claude.vim is a Vim plugin that integrates Claude, an AI pair programmer, into your Vim workflow. It allows you to chat with Claude about what to build or how to debug problems, and Claude offers opinions, proposes modifications, or even writes code. The plugin provides a chat/instruction-centric interface optimized for human collaboration, with killer features like access to chat history and vimdiff interface. It can refactor code, modify or extend selected pieces of code, execute complex tasks by reading documentation, cloning git repositories, and more. Note that it is early alpha software and expected to rapidly evolve.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

ezkl

EZKL is a library and command-line tool for doing inference for deep learning models and other computational graphs in a zk-snark (ZKML). It enables the following workflow: 1. Define a computational graph, for instance a neural network (but really any arbitrary set of operations), as you would normally in pytorch or tensorflow. 2. Export the final graph of operations as an .onnx file and some sample inputs to a .json file. 3. Point ezkl to the .onnx and .json files to generate a ZK-SNARK circuit with which you can prove statements such as: > "I ran this publicly available neural network on some private data and it produced this output" > "I ran my private neural network on some public data and it produced this output" > "I correctly ran this publicly available neural network on some public data and it produced this output" In the backend we use the collaboratively-developed Halo2 as a proof system. The generated proofs can then be verified with much less computational resources, including on-chain (with the Ethereum Virtual Machine), in a browser, or on a device.

brokk

Brokk is a code assistant designed to understand code semantically, allowing LLMs to work effectively on large codebases. It offers features like agentic search, summarizing related classes, parsing stack traces, adding source for usages, and autonomously fixing errors. Users can interact with Brokk through different panels and commands, enabling them to manipulate context, ask questions, search codebase, run shell commands, and more. Brokk helps with tasks like debugging regressions, exploring codebase, AI-powered refactoring, and working with dependencies. It is particularly useful for making complex, multi-file edits with o1pro.

lumigator

Lumigator is an open-source platform developed by Mozilla.ai to help users select the most suitable language model for their specific needs. It supports the evaluation of summarization tasks using sequence-to-sequence models such as BART and BERT, as well as causal models like GPT and Mistral. The platform aims to make model selection transparent, efficient, and empowering by providing a framework for comparing LLMs using task-specific metrics to evaluate how well a model fits a project's needs. Lumigator is in the early stages of development and plans to expand support to additional machine learning tasks and use cases in the future.

cookbook

This repository contains community-driven practical examples of building AI applications and solving various tasks with AI using open-source tools and models. Everyone is welcome to contribute, and we value everybody's contribution! There are several ways you can contribute to the Open-Source AI Cookbook: Submit an idea for a desired example/guide via GitHub Issues. Contribute a new notebook with a practical example. Improve existing examples by fixing issues/typos. Before contributing, check currently open issues and pull requests to avoid working on something that someone else is already working on.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

ultravox

Ultravox is a fast multimodal Language Model (LLM) that can understand both text and human speech in real-time without the need for a separate Audio Speech Recognition (ASR) stage. By extending Meta's Llama 3 model with a multimodal projector, Ultravox converts audio directly into a high-dimensional space used by Llama 3, enabling quick responses and potential understanding of paralinguistic cues like timing and emotion in human speech. The current version (v0.3) has impressive speed metrics and aims for further enhancements. Ultravox currently converts audio to streaming text and plans to emit speech tokens for direct audio conversion. The tool is open for collaboration to enhance this functionality.

kobold_assistant

Kobold-Assistant is a fully offline voice assistant interface to KoboldAI's large language model API. It can work online with the KoboldAI horde and online speech-to-text and text-to-speech models. The assistant, called Jenny by default, uses the latest coqui 'jenny' text to speech model and openAI's whisper speech recognition. Users can customize the assistant name, speech-to-text model, text-to-speech model, and prompts through configuration. The tool requires system packages like GCC, portaudio development libraries, and ffmpeg, along with Python >=3.7, <3.11, and runs on Ubuntu/Debian systems. Users can interact with the assistant through commands like 'serve' and 'list-mics'.

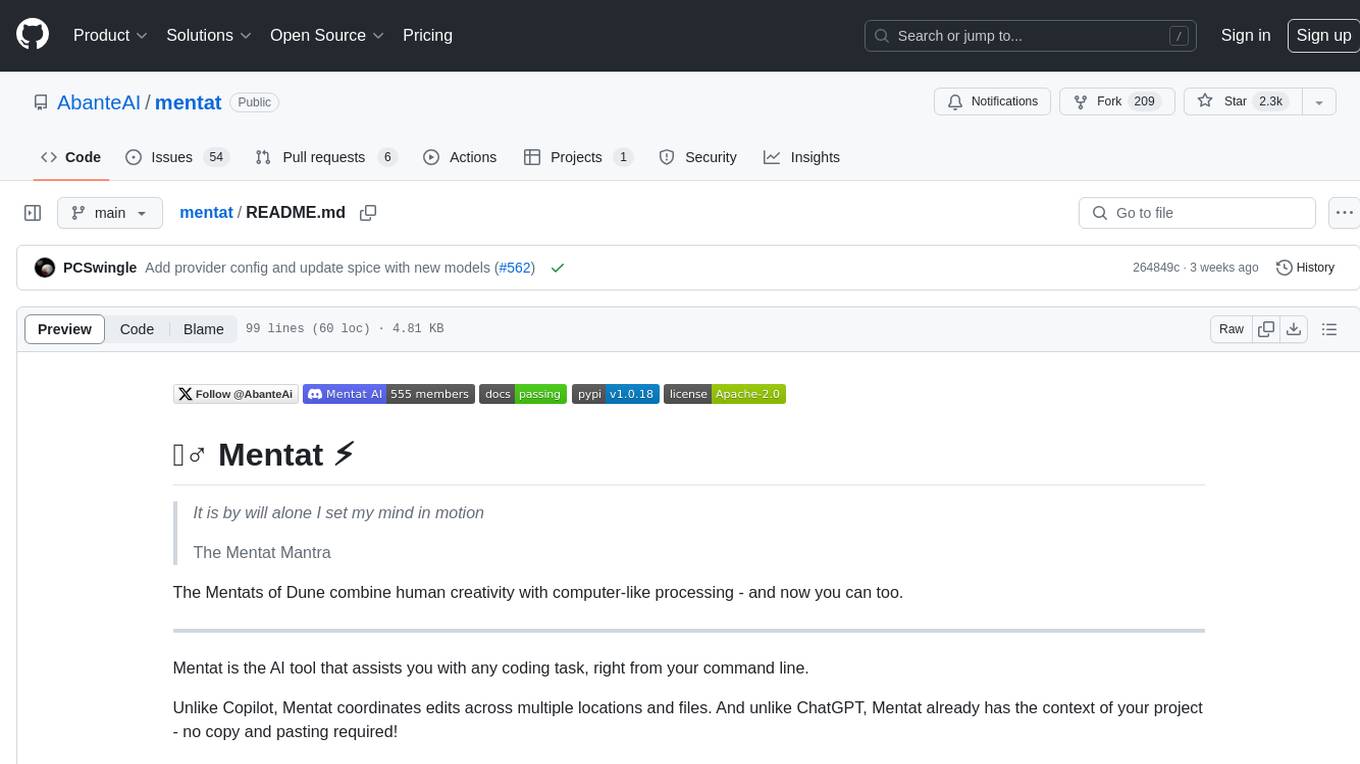

mentat

Mentat is an AI tool designed to assist with coding tasks directly from the command line. It combines human creativity with computer-like processing to help users understand new codebases, add new features, and refactor existing code. Unlike other tools, Mentat coordinates edits across multiple locations and files, with the context of the project already in mind. The tool aims to enhance the coding experience by providing seamless assistance and improving edit quality.

ultimate-rvc

Ultimate RVC is an extension of AiCoverGen, offering new features and improvements for generating audio content using RVC. It is designed for users looking to integrate singing functionality into AI assistants/chatbots/vtubers, create character voices for songs or books, and train voice models. The tool provides easy setup, voice conversion enhancements, TTS functionality, voice model training suite, caching system, UI improvements, and support for custom configurations. It is available for local and Google Colab use, with a PyPI package for easy access. The tool also offers CLI usage and customization through environment variables.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.