rosa

ROSA is an AI Agent designed to interact with ROS1- and ROS2-based robotics systems using natural language queries. ROSA helps robot developers inspect, diagnose, understand, and operate robots.

Stars: 256

ROSA is an AI Agent designed to interact with ROS-based robotics systems using natural language queries. It can generate system reports, read and parse ROS log files, adapt to new robots, and run various ROS commands using natural language. The tool is versatile for robotics research and development, providing an easy way to interact with robots and the ROS environment.

README:

ROSA is an AI Agent designed to interact with ROS-based robotics systems

using natural language queries.

ROSA is an AI agent that can be used to interact with ROS1 and ROS2 systems in order to carry out various tasks. It is built using the open-source Langchain framework, and can be adapted to work with different robots and environments, making it a versatile tool for robotics research and development.

- [x] Support for both ROS1 and ROS2

- [x] Generate system reports using fuzzy templates

- [x] Read, parse, and summarize ROS log files

- [x] Use natural language to run various ROS commands and tools, for example:

- "Give me a list of nodes, categorize them into

navigation,perception,control, andother" - "Show me a list of topics that have publishers but no subscribers"

- "Set the

/velocityparameter to10" - "Echo the

/robot/statustopic" - "What is the message type of the

/robot/statustopic?"

- "Give me a list of nodes, categorize them into

- [x] Control the TurtleSim robot in simulation using ROSA

- [x] Easily adapt ROSA for your robot by adding new tools and prompts

- [ ] Use multi-modal models for vision, scene understanding, and more (in-progress)

- [ ] Web-based user interface with support for voice commands (in-progress)

- [ ] Text and speech modalities for human-robot interaction (in-progress)

ROSA already supports any robot built with ROS1 or ROS2, but we are also working on custom agents for some popular robots. These custom agents go beyond the basic ROSA functionality to provide more advanced capabilities and features.

- [x] Custom Agent for the TurtleSim robot (see turtle_agent)

- [ ] Custom Agent for NASA JPL's Open Source Rover

- [ ] Custom Agent for the TurtleBot

- [ ] Custom Agent for the Spot Robot

This guide provides a quick way to get started with ROSA.

- Python 3.9 or higher

- ROS Noetic (or higher)

Note: ROS Noetic uses Python3.8, but LangChain requires Python3.9 or higher. To use ROSA with ROS Noetic, you will need to create a virtual environment with Python3.9 or higher and install ROSA in that environment.

pip3 install jpl-rosafrom rosa import ROSA

llm = get_your_llm_here()

rosa = ROSA(ros_version=1, llm=llm)

rosa.invoke("Show me a list of topics that have publishers but no subscribers")We have included a demo that uses ROSA to control the TurtleSim robot in simulation. To run the demo, you will need to have Docker installed on your machine.

The following video shows ROSA reasoning about how to draw a 5-point star, then executing the necessary commands to do so.

https://github.com/user-attachments/assets/77b97014-6d2e-4123-8d0b-ea0916d93a4e

- Clone this repository

- Configure the LLM in

src/turtle_agent/scripts/llm.py - Run the demo script:

./demo.sh - Build and start the turtle agent:

catkin build && source devel/setup.bash && roslaunch turtle_agent agent- Run example queries:

examples

ROSA is designed to be easily adaptable to different robots and environments. To adapt ROSA for your robot, you

can either (1) create a new class that inherits from the ROSA class, or (2) create a new instance of the ROSA class

and pass in the necessary parameters. The first option is recommended if you need to make significant changes to the

agent's behavior, while the second option is recommended if you want to use the agent with minimal changes.

In either case, ROSA is adapted by providing it with a new set of tools and/or prompts. The tools are used to interact with the robot and the ROS environment, while the prompts are used to guide the agents behavior.

There are two methods for adding tools to ROSA:

- Pass in a list of @tool functions using the

toolsparameter. - Pass in a list of Python packages containing @tool functions using the

tool_packagesparameter.

The first method is recommended if you have a small number of tools, while the second method is recommended if you have a large number of tools or if you want to organize your tools into separate packages.

Hint: check src/turtle_agent/scripts/turtle_agent.py for examples on how to use both methods.

To add prompts to ROSA, you need to create a new instance of the RobotSystemPrompts class and pass it to the ROSA

constructor using the prompts parameter. The RobotSystemPrompts class contains the following attributes:

-

embodiment_and_persona: Gives the agent a sense of identity and helps it understand its role. -

about_your_operators: Provides information about the operators who interact with the robot, which can help the agent understand the context of the interaction. -

critical_instructions: Provides critical instructions that the agent should follow to ensure the safety and well-being of the robot and its operators. -

constraints_and_guardrails: Gives the robot a sense of its limitations and informs its decision-making process. -

about_your_environment: Provides information about the physical and digital environment in which the robot operates. -

about_your_capabilities: Describes what the robot can and cannot do, which can help the agent understand its limitations. -

nuance_and_assumptions: Provides information about the nuances and assumptions that the agent should consider when interacting with the robot. -

mission_and_objectives: Describes the mission and objectives of the robot, which can help the agent understand its purpose and goals. -

environment_variables: Provides information about the environment variables that the agent should consider when interacting with the robot. e.g. $ROS_MASTER_URI, or $ROS_IP.

Here is a quick and easy example showing how to add new tools and prompts to ROSA:

from langchain.agents import tool

from rosa import ROSA, RobotSystemPrompts

@tool

def move_forward(distance: float) -> str:

"""

Move the robot forward by the specified distance.

:param distance: The distance to move the robot forward.

"""

# Your code here ...

return f"Moving forward by {distance} units."

prompts = RobotSystemPrompts(

embodiment_and_persona="You are a cool robot that can move forward."

)

llm = get_your_llm_here()

rosa = ROSA(ros_version=1, llm=llm, tools=[move_forward], prompts=prompts)

rosa.invoke("Move forward by 2 units.")See our CHANGELOG.md for a history of our changes.

See our releases page for our key versioned releases.

Questions about ROSA? Please see our FAQ section in the Wiki.

Interested in contributing to our project? Please see our: CONTRIBUTING.md

For guidance on how to interact with our team, please see our code of conduct located at: CODE_OF_CONDUCT.md

For guidance on our governance approach, including decision-making process and our various roles, please see our governance model at: GOVERNANCE.md

See our: LICENSE

Key points of contact are:

Copyright (c) 2024. Jet Propulsion Laboratory. All rights reserved.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for rosa

Similar Open Source Tools

rosa

ROSA is an AI Agent designed to interact with ROS-based robotics systems using natural language queries. It can generate system reports, read and parse ROS log files, adapt to new robots, and run various ROS commands using natural language. The tool is versatile for robotics research and development, providing an easy way to interact with robots and the ROS environment.

giskard

Giskard is an open-source Python library that automatically detects performance, bias & security issues in AI applications. The library covers LLM-based applications such as RAG agents, all the way to traditional ML models for tabular data.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

RepoAgent

RepoAgent is an LLM-powered framework designed for repository-level code documentation generation. It automates the process of detecting changes in Git repositories, analyzing code structure through AST, identifying inter-object relationships, replacing Markdown content, and executing multi-threaded operations. The tool aims to assist developers in understanding and maintaining codebases by providing comprehensive documentation, ultimately improving efficiency and saving time.

RAVE

RAVE is a variational autoencoder for fast and high-quality neural audio synthesis. It can be used to generate new audio samples from a given dataset, or to modify the style of existing audio samples. RAVE is easy to use and can be trained on a variety of audio datasets. It is also computationally efficient, making it suitable for real-time applications.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

guidellm

GuideLLM is a powerful tool for evaluating and optimizing the deployment of large language models (LLMs). By simulating real-world inference workloads, GuideLLM helps users gauge the performance, resource needs, and cost implications of deploying LLMs on various hardware configurations. This approach ensures efficient, scalable, and cost-effective LLM inference serving while maintaining high service quality. Key features include performance evaluation, resource optimization, cost estimation, and scalability testing.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

ArcticTraining

ArcticTraining is a framework designed to simplify and accelerate the post-training process for large language models (LLMs). It offers modular trainer designs, simplified code structures, and integrated pipelines for creating and cleaning synthetic data, enabling users to enhance LLM capabilities like code generation and complex reasoning with greater efficiency and flexibility.

lerobot

LeRobot is a state-of-the-art AI library for real-world robotics in PyTorch. It aims to provide models, datasets, and tools to lower the barrier to entry to robotics, focusing on imitation learning and reinforcement learning. LeRobot offers pretrained models, datasets with human-collected demonstrations, and simulation environments. It plans to support real-world robotics on affordable and capable robots. The library hosts pretrained models and datasets on the Hugging Face community page.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

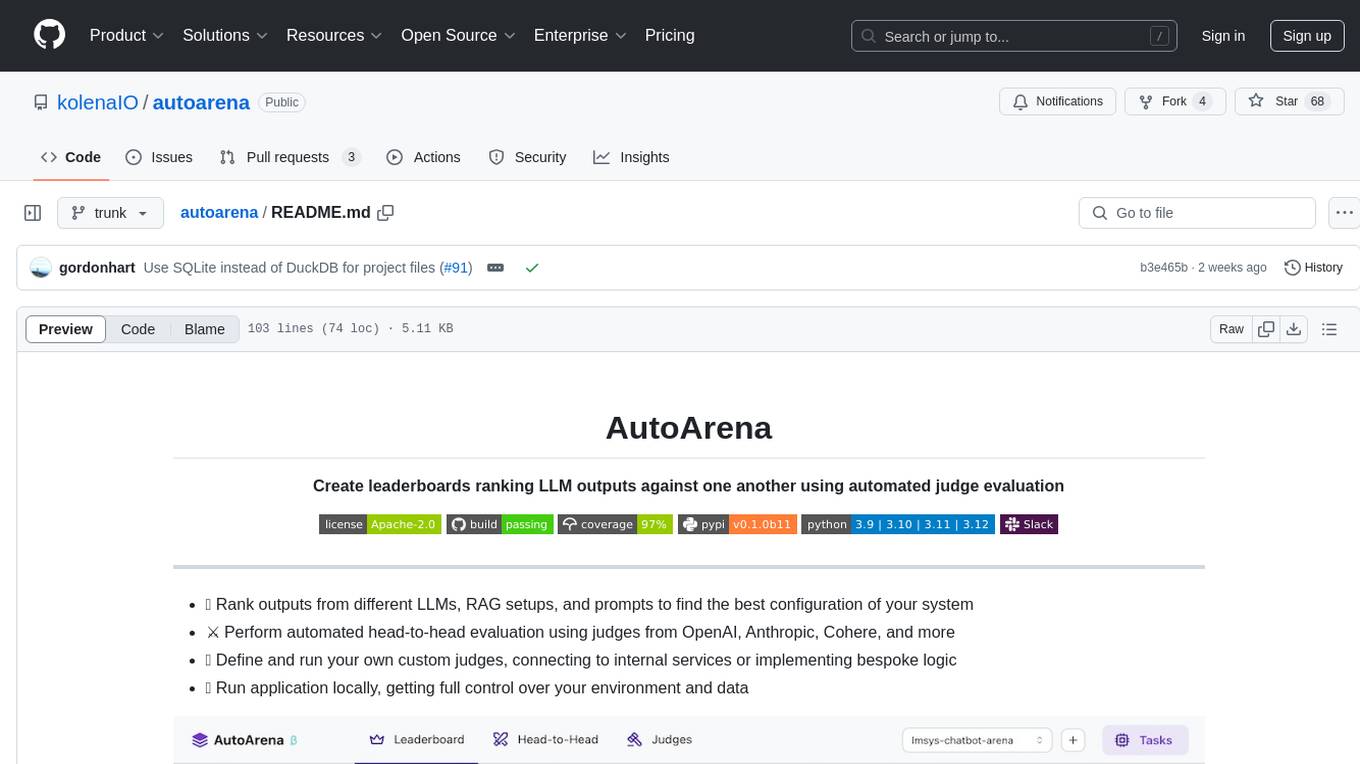

autoarena

AutoArena is a tool designed to create leaderboards ranking Language Model outputs against one another using automated judge evaluation. It allows users to rank outputs from different LLMs, RAG setups, and prompts to find the best configuration of their system. Users can perform automated head-to-head evaluation using judges from various platforms like OpenAI, Anthropic, and Cohere. Additionally, users can define and run custom judges, connect to internal services, or implement bespoke logic. AutoArena enables users to run the application locally, providing full control over their environment and data.

VoiceStreamAI

VoiceStreamAI is a Python 3-based server and JavaScript client solution for near-realtime audio streaming and transcription using WebSocket. It employs Huggingface's Voice Activity Detection (VAD) and OpenAI's Whisper model for accurate speech recognition. The system features real-time audio streaming, modular design for easy integration of VAD and ASR technologies, customizable audio chunk processing strategies, support for multilingual transcription, and secure sockets support. It uses a factory and strategy pattern implementation for flexible component management and provides a unit testing framework for robust development.

lhotse

Lhotse is a Python library designed to make speech and audio data preparation flexible and accessible. It aims to attract a wider community to speech processing tasks by providing a Python-centric design and an expressive command-line interface. Lhotse offers standard data preparation recipes, PyTorch Dataset classes for speech tasks, and efficient data preparation for model training with audio cuts. It supports data augmentation, feature extraction, and feature-space cut mixing. The tool extends Kaldi's data preparation recipes with seamless PyTorch integration, human-readable text manifests, and convenient Python classes.

neuron-ai

Neuron AI is a PHP framework that provides an Agent class for creating fully functional agents to perform tasks like analyzing text for SEO optimization. The framework manages advanced mechanisms such as memory, tools, and function calls. Users can extend the Agent class to create custom agents and interact with them to get responses based on the underlying LLM. Neuron AI aims to simplify the development of AI-powered applications by offering a structured framework with documentation and guidelines for contributions under the MIT license.

cover-agent

CodiumAI Cover Agent is a tool designed to help increase code coverage by automatically generating qualified tests to enhance existing test suites. It utilizes Generative AI to streamline development workflows and is part of a suite of utilities aimed at automating the creation of unit tests for software projects. The system includes components like Test Runner, Coverage Parser, Prompt Builder, and AI Caller to simplify and expedite the testing process, ensuring high-quality software development. Cover Agent can be run via a terminal and is planned to be integrated into popular CI platforms. The tool outputs debug files locally, such as generated_prompt.md, run.log, and test_results.html, providing detailed information on generated tests and their status. It supports multiple LLMs and allows users to specify the model to use for test generation.

For similar tasks

rosa

ROSA is an AI Agent designed to interact with ROS-based robotics systems using natural language queries. It can generate system reports, read and parse ROS log files, adapt to new robots, and run various ROS commands using natural language. The tool is versatile for robotics research and development, providing an easy way to interact with robots and the ROS environment.

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration: * Chat BI's Text2SQL gets augmented with context-retrieval from semantic models. * Headless BI's query interface gets extended with natural language API. SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions: 1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination. 2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity. With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

DB-GPT

DB-GPT is an open source AI native data app development framework with AWEL(Agentic Workflow Expression Language) and agents. It aims to build infrastructure in the field of large models, through the development of multiple technical capabilities such as multi-model management (SMMF), Text2SQL effect optimization, RAG framework and optimization, Multi-Agents framework collaboration, AWEL (agent workflow orchestration), etc. Which makes large model applications with data simpler and more convenient.

Chat2DB

Chat2DB is an AI-driven data development and analysis platform that enables users to communicate with databases using natural language. It supports a wide range of databases, including MySQL, PostgreSQL, Oracle, SQLServer, SQLite, MariaDB, ClickHouse, DM, Presto, DB2, OceanBase, Hive, KingBase, MongoDB, Redis, and Snowflake. Chat2DB provides a user-friendly interface that allows users to query databases, generate reports, and explore data using natural language commands. It also offers a variety of features to help users improve their productivity, such as auto-completion, syntax highlighting, and error checking.

aide

AIDE (Advanced Intrusion Detection Environment) is a tool for monitoring file system changes. It can be used to detect unauthorized changes to monitored files and directories. AIDE was written to be a simple and free alternative to Tripwire. Features currently included in AIDE are as follows: o File attributes monitored: permissions, inode, user, group file size, mtime, atime, ctime, links and growing size. o Checksums and hashes supported: SHA1, MD5, RMD160, and TIGER. CRC32, HAVAL and GOST if Mhash support is compiled in. o Plain text configuration files and database for simplicity. o Rules, variables and macros that can be customized to local site or system policies. o Powerful regular expression support to selectively include or exclude files and directories to be monitored. o gzip database compression if zlib support is compiled in. o Free software licensed under the GNU General Public License v2.

OpsPilot

OpsPilot is an AI-powered operations navigator developed by the WeOps team. It leverages deep learning and LLM technologies to make operations plans interactive and generalize and reason about local operations knowledge. OpsPilot can be integrated with web applications in the form of a chatbot and primarily provides the following capabilities: 1. Operations capability precipitation: By depositing operations knowledge, operations skills, and troubleshooting actions, when solving problems, it acts as a navigator and guides users to solve operations problems through dialogue. 2. Local knowledge Q&A: By indexing local knowledge and Internet knowledge and combining the capabilities of LLM, it answers users' various operations questions. 3. LLM chat: When the problem is beyond the scope of OpsPilot's ability to handle, it uses LLM's capabilities to solve various long-tail problems.

aimeos-typo3

Aimeos is a professional, full-featured, and high-performance e-commerce extension for TYPO3. It can be installed in an existing TYPO3 website within 5 minutes and can be adapted, extended, overwritten, and customized to meet specific needs.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.