Disciplined-AI-Software-Development

This methodology provides a structured approach for collaborating with AI systems on software development projects. It addresses common issues like code bloat, architectural drift, and context dilution through systematic constraints and validation checkpoints.

Stars: 258

Disciplined AI Software Development is a comprehensive repository that provides guidelines and best practices for developing AI software in a disciplined manner. It covers topics such as project organization, code structure, documentation, testing, and deployment strategies to ensure the reliability, scalability, and maintainability of AI applications. The repository aims to help developers and teams navigate the complexities of AI development by offering practical advice and examples to follow.

README:

Disciplined AI Software Development Methodology © 2025 by Jay Baleine is licensed under CC BY-SA 4.0

A structured approach for working with AI on development projects. This methodology addresses common issues like code bloat, architectural drift, and context dilution through systematic constraints.

AI systems work on Question → Answer patterns. When you ask for broad, multi-faceted implementations, you typically get:

- Functions that work but lack structure

- Repeated code across components

- Architectural inconsistency over sessions

- Context dilution causing output drift

- More debugging time than planning time

The methodology uses four stages with systematic constraints and validation checkpoints. Each stage builds on empirical data rather than assumptions.

Planning saves debugging time. Planning thoroughly upfront typically prevents days of fixing architectural issues later.

Set up your AI model's custom instructions using AI-PREFERENCES.XML. This establishes behavioral constraints and uncertainty flagging.

Share METHODOLOGY.XML with the AI to structure your project plan. Work together to:

- Define scope and completion criteria

- Identify components and dependencies

- Structure phases based on logical progression

- Generate systematic tasks with measurable checkpoints

Output: A development plan following dependency chains with modular boundaries.

Work phase by phase, section by section. Each request follows: "Can you implement [specific component]?" with focused objectives.

File size stays ≤150 lines. This constraint provides:

- Smaller context windows for processing

- Focused implementation over multi-function attempts

- Easier sharing and debugging

Implementation flow:

Request specific component → AI processes → Validate → Benchmark → Continue

The benchmarking suite (built first) provides performance data throughout development. Feed this data back to the AI for optimization decisions based on measurements rather than guesswork.
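
As a minimal sketch of feeding measurements back to the AI, the snippet below times a function with the standard library and prints a JSON summary that can be pasted into the conversation. The names `run_benchmark` and `sample_workload` are hypothetical and not taken from the repository; a real Phase 0 suite would likely cover more metrics.

```python
import json
import statistics
import timeit


def sample_workload() -> int:
    """Hypothetical component under test; stands in for your own code."""
    return sum(i * i for i in range(1_000))


def run_benchmark(func, name: str, repeat: int = 5, number: int = 100) -> dict:
    """Time `func` and return a summary dict in milliseconds per call."""
    # timeit.repeat returns the total seconds for `number` calls, once per repeat.
    totals = timeit.repeat(func, repeat=repeat, number=number)
    per_call_ms = [total / number * 1000 for total in totals]
    return {
        "name": name,
        "runs": repeat,
        "calls_per_run": number,
        "best_ms": min(per_call_ms),
        "median_ms": statistics.median(per_call_ms),
    }


if __name__ == "__main__":
    result = run_benchmark(sample_workload, "sample_workload")
    # Share this JSON with the AI alongside the optimization question.
    print(json.dumps(result, indent=2))
```

Reporting both the best and the median run keeps a single noisy measurement from driving an optimization decision.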

Decision Processing: AI handles "Can you do A?" more reliably than "Can you do A, B, C, D, E, F, G, H?"

Context Management: Small files and bounded problems prevent the AI from juggling multiple concerns simultaneously.

Empirical Validation: Performance data replaces subjective assessment. Decisions come from measurable outcomes.

Systematic Constraints: Architectural checkpoints, file size limits, and dependency gates force consistent behavior.
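
One way a dependency gate could work is sketched below, assuming a Python project split into layered packages. The package names and the `ALLOWED_IMPORTS` rule table are hypothetical illustrations, not the repository's actual validator.

```python
import ast

# Hypothetical layering rule: each package may import only the listed packages.
ALLOWED_IMPORTS = {
    "core": set(),
    "services": {"core"},
    "plugins": {"core", "services"},
}


def imported_packages(source: str) -> set[str]:
    """Return the top-level package names imported by a module's source."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 means an absolute import; relative imports stay in-package.
            found.add(node.module.split(".")[0])
    return found


def boundary_violations(package: str, source: str) -> list[str]:
    """List project packages that `package` imports without permission."""
    allowed = ALLOWED_IMPORTS.get(package, set())
    # Only imports of other project packages count; stdlib imports are ignored.
    project_imports = imported_packages(source) & set(ALLOWED_IMPORTS)
    return sorted(project_imports - allowed - {package})


if __name__ == "__main__":
    source = "import os\nfrom plugins.echo import handler\nfrom core import config\n"
    print(boundary_violations("services", source))  # ['plugins']
```

Running a check like this in CI turns the architectural rule into a hard failure instead of a reminder the AI has to remember.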

- Discord Bot Template - Production-ready bot foundation with plugin architecture, security, API management, and comprehensive testing. 46 files, all under 150 lines, with benchmarking suite and automated compliance checking. (View Project Structure)

- PhiCode Runtime - Programming language runtime engine with transpilation, caching, security validation, and Rust acceleration. Complex system maintaining architectural discipline across 70+ modules. (View Project Structure)

- PhiPipe - CI/CD regression detection system with statistical analysis, GitHub integration, and concurrent processing. Go-based service handling performance baselines and automated regression alerts. (View Project Structure)

You can compare the methodology principles to the codebase structure to see how the approach translates to working code.

- Configure AI with AI-PREFERENCES.XML as custom instructions

- Share METHODOLOGY.XML for planning session

- Collaborate on project structure and phases

- Generate systematic development plan

- Build Phase 0 benchmarking infrastructure first

- Work through phases sequentially

- Implement one component per interaction

- Run benchmarks and share results with AI

- Validate architectural compliance continuously

- Performance regression detection

- Architectural principle validation

- Code duplication auditing

- File size compliance checking

- Dependency boundary verification
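
Of the checks listed above, code duplication auditing can be approximated with the standard `ast` module. The following is a rough sketch that only catches functions whose bodies are structurally identical; the function names are hypothetical and this is not the repository's own audit tool.

```python
import ast
import hashlib
from collections import defaultdict


def function_fingerprints(source: str) -> dict[str, str]:
    """Map each function name in `source` to a hash of its body's AST."""
    fingerprints = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Dump only the body so identical logic under different names matches.
            body_dump = "".join(ast.dump(stmt) for stmt in node.body)
            fingerprints[node.name] = hashlib.sha256(body_dump.encode()).hexdigest()
    return fingerprints


def find_duplicates(modules: dict[str, str]) -> list[list[str]]:
    """Group `module:function` labels whose bodies are structurally identical."""
    groups = defaultdict(list)
    for module_name, source in modules.items():
        for func_name, digest in function_fingerprints(source).items():
            groups[digest].append(f"{module_name}:{func_name}")
    return sorted(sorted(group) for group in groups.values() if len(group) > 1)


if __name__ == "__main__":
    modules = {
        "a.py": "def clamp(x, lo, hi):\n    return max(lo, min(x, hi))\n",
        "b.py": "def bound(x, lo, hi):\n    return max(lo, min(x, hi))\n",
    }
    print(find_duplicates(modules))  # [['a.py:clamp', 'b.py:bound']]
```

Because the comparison works on the syntax tree, comments and formatting differences do not hide a duplicate, but renamed variables do.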

Use the included project extraction tool systematically to generate structured snapshots of your codebase:

python scripts/project_extract.py

Configuration Options:

- SEPARATE_FILES = False: Single THE_PROJECT.md file (recommended for small codebases)

- SEPARATE_FILES = True: Multiple files per directory (recommended for large codebases and focused folder work)

- INCLUDE_PATHS: Directories and files to analyze

- EXCLUDE_PATTERNS: Skip cache directories, build artifacts, and generated files
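
For illustration, the options might be set as in the sketch below. The option names come from the list above, but the values and the `is_included` helper are assumptions; the real script may match paths differently.

```python
from fnmatch import fnmatch
from pathlib import PurePosixPath

# Option names come from the README; the values are illustrative assumptions.
SEPARATE_FILES = False
INCLUDE_PATHS = ["src", "tests", "README.md"]
EXCLUDE_PATTERNS = ["__pycache__", "*.pyc", "build", "dist"]


def is_included(path: str) -> bool:
    """Hypothetical filter: keep paths under INCLUDE_PATHS unless excluded."""
    parts = PurePosixPath(path).parts
    # A path qualifies if it starts with the components of any include entry.
    under_include = any(
        parts[: len(PurePosixPath(inc).parts)] == PurePosixPath(inc).parts
        for inc in INCLUDE_PATHS
    )
    # A path is excluded if any of its components matches any exclude pattern.
    excluded = any(
        fnmatch(part, pattern) for part in parts for pattern in EXCLUDE_PATTERNS
    )
    return under_include and not excluded


if __name__ == "__main__":
    for candidate in ["src/app/main.py", "src/__pycache__/main.pyc", "docs/guide.md"]:
        print(candidate, is_included(candidate))
```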

Output:

- Complete file contents with syntax highlighting

- File line counts with architectural warnings (⚠️ for 140-150 lines, ‼️ for >150 lines on code files)

- Tree structure visualization

- Ready-to-share

Output examples can be found here.

Use the tool to share a complete or partial project state with the AI system, track architectural compliance, and create focused development context.
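
The line-count warnings described above follow a simple threshold rule. A minimal sketch, assuming the thresholds stated in the output list (140-150 lines for a warning, more than 150 for a violation); the function names are hypothetical and the real tool's output format may differ:

```python
WARN = "⚠️"   # file is approaching the limit (140-150 lines)
OVER = "‼️"   # file exceeds the 150-line limit


def line_count_marker(line_count: int) -> str:
    """Return the warning marker for a code file with `line_count` lines."""
    if line_count > 150:
        return OVER
    if line_count >= 140:
        return WARN
    return ""


def summarize(files: dict[str, str]) -> list[str]:
    """Format one `path: N lines [marker]` row per file, given path -> text."""
    rows = []
    for path, text in sorted(files.items()):
        count = len(text.splitlines())
        rows.append(f"{path}: {count} lines {line_count_marker(count)}".rstrip())
    return rows


if __name__ == "__main__":
    demo = {"big.py": "x = 1\n" * 151, "small.py": "x = 1\n" * 10}
    print("\n".join(summarize(demo)))
```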

AI Behavior: The methodology reduces architectural drift and context degradation compared to unstructured approaches. AI still needs occasional reminders about principles - this is normal.

Development Flow: Systematic planning tends to reduce debugging cycles. Focused implementation helps minimize feature bloat. Performance data supports optimization decisions.

Code Quality: Architectural consistency across components, measurable performance characteristics, maintainable structure as projects scale.

LLM Models - Q&A Documentation

Explore the detailed Q&A for each AI model: Grok 3, Claude Sonnet 4, DeepSeek-V3, Gemini 2.5 Flash

All models were asked the exact same questions using the methodology documents as file uploads. This evaluation focuses on methodology understanding and operational behavior; no code was generated. The Q&A documents capture responses across workflow patterns, tool usage, communication adherence, and collaborative context retention. Full evaluation results and comparative analysis are available in Methodology Comprehension Analysis: Model Evaluation.

🚩 Note: This analysis does not include any code generation.

- Methodology understanding and workflow patterns

- Context retention and collaborative interaction

- Communication adherence and AI preference compliance

- Project initialization and Phase 0 requirements

- Tool usage and technology stack compatibility

- Quality enforcement and violation handling

- User experience across different skill levels

Share the three core documents with your AI model:

- AI-PREFERENCES.XML - Behavioral constraints

- METHODOLOGY.XML - Technical framework

- README.XML - Implementation guidance

The current document provides human-readable formatting for documentation review. For machine parsing, use the XML format.

Ask targeted questions:

- "How would Phase 0 apply to [project type]?"

- "What does the 150-line constraint mean for [specific component]?"

- "How should I structure phases for [project description]?"

- "Can you help decompose this project using the methodology?"

This will help foster understanding of how your AI model interprets the guidelines.

Test constraint variations:

- File size limits (100 vs 150 vs 200 lines)

- Communication constraint adjustments

- Phase 0 requirement modifications

- Quality gate threshold changes

Analyze outcomes:

- Document behavior changes and development results

- Compare debugging time across different approaches

- Track architectural compliance over extended sessions

- Monitor context retention and behavioral drift

You can ask the model to analyze the current session and identify violations. You can also ask which adjustments would strengthen enforcement or expose ambiguity in the constraints.

Collaborative refinement: Work with your AI to identify improvements based on your context. Treat constraint changes as experiments and measure their impact on collaboration effectiveness, code quality, and development velocity.

Progress indicators:

- Reduced specific violations over time

- Consistent file size compliance without reminders

- Sustained AI behavioral adherence through extended sessions

What problem led you to create this methodology?

I kept having to restate my preferences and architectural requirements to AI systems. It didn't matter which language or project I was working on - the AI would consistently produce either bloated monolithic code or underdeveloped implementations with issues throughout.

This led me to examine the meta-principles driving code quality and software architecture. I questioned whether pattern matching in AI models might be more effective when focused on underlying software principles rather than surface-level syntax. Since pattern matching is logic-driven and machines fundamentally operate on simple question-answer pairs, I realized that functions with multiple simultaneous questions were overwhelming the system.

The breakthrough came from understanding that everything ultimately transpiles to binary - a series of "can you do this? → yes/no" decisions. This insight shaped my approach: instead of issuing commands, ask focused questions in proper context. Rather than mentally managing complex setups alone, collaborate with AI to devise systematic plans.

How did you discover these specific constraints work?

Through extensive trial and error. AI systems will always tend to drift even under constraints, but they're significantly more accurate with structured boundaries than without them. You occasionally need to remind the AI of its role to prevent deviation - like managing a well-intentioned toddler that knows the rules but sometimes pushes boundaries trying to satisfy you.

These tools are far from perfect, but they're effective instruments for software development when properly constrained.

What failures or frustrations shaped this approach?

Maintenance hell was the primary driver. I grew tired of responses filled with excessive praise: "You have found the solution!", "You have redefined the laws of physics with your paradigm-shifting script!" This verbose fluff wastes time, tokens, and patience without contributing to productive development.

Instead of venting frustration on social media about AI being "just a dumb tool," I decided to find methods that actually work. My approach may not help everyone, but I hope it benefits those who share similar AI development frustrations.

How consistently do you follow your own methodology?

Since creating the documentation, I haven't deviated. Whenever I see the model producing more lines than my methodology allows, I immediately interrupt generation with a flag.

What happens when you deviate from it?

I become genuinely uncomfortable. Once I see things starting to degrade or become tangled, I compulsively need to organize and optimize. Deviation simply isn't an option anymore.

Which principles do you find hardest to maintain?

Not cursing at the AI when it drifts during complex algorithms! But seriously, it's a machine - it's not perfect, and neither are we.

When did you start using AI for programming?

In August 2024, I created a RuneLite theme pack, but one of the plugin overlays didn't match my custom layout. I opened a GitHub issue (creating my first GitHub account to do so) requesting a customization option. The response was: "It's not a priority - if you want it, build it yourself."

I used ChatGPT to guide me through forking RuneLite and creating a plugin. This experience sparked intense interest in underlying software principles rather than just syntax.

How has your approach evolved over time?

I view development like a book: syntax is the cover, logic is the content itself. Rather than learning syntax structures, I focused on core meta-principles - how software interacts, how logic flows, different algorithm types. I quickly realized everything reduces to the same foundation: question and answer sequences.

Large code structures are essentially chaotic meetings - one coordinator fielding questions and answers from multiple sources, trying to provide correct responses without mix-ups or misinterpretation. If this applies to human communication, it must apply to software principles.

What were your biggest mistakes with AI collaboration?

Expecting it to intuitively understand my requirements, provide perfect fixes, be completely honest, and act like a true expert. This was all elaborate roleplay that produced poor code. While fine for single-purpose scripts, it failed completely for scalable codebases.

I learned not to feed requirements and hope for the best. Instead, I needed to collaborate actively - create plans, ask for feedback on content clarity, and identify uncertainties. This gradual process taught me the AI's actual capabilities and most effective collaboration methods.

Why 150 lines exactly?

Multiple benefits: easy readability, clear understanding, modularity enforcement, architectural clarity, simple maintenance, component testing, optimal AI context retention, reusability, and KISS principle adherence.

How did you determine Phase 0 requirements?

From meta-principles of software: if it displays, it must run; if it runs, it can be measured; if it can be measured, it can be optimized; if it can be optimized, it can be reliable; if it can be reliable, it can be trusted.

Regardless of project type, anything requiring architecture needs these foundations. You must ensure changes don't negatively impact the entire system. A single line modification in a nested function might work perfectly but cause 300ms boot time regression for all users.

By testing during development, you catch inefficiencies early. Integration from the start means simply hooking up new components and running tests via command line - minimal time investment with actual value returned. I prefer validation and consistency throughout development rather than programming blind.
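
A regression check of this kind can be small. The sketch below compares a current benchmark run against a saved baseline and flags anything that slowed down by more than a tolerance; the metric names, the 10% default, and the function name are assumptions chosen for illustration.

```python
def find_regressions(
    baseline_ms: dict[str, float],
    current_ms: dict[str, float],
    tolerance: float = 0.10,
) -> dict[str, float]:
    """Return metrics whose time grew by more than `tolerance` (a fraction).

    The value for each flagged metric is the slowdown in milliseconds.
    """
    regressions = {}
    for name, base in baseline_ms.items():
        current = current_ms.get(name)
        if current is None:
            continue  # Metric not measured in this run; nothing to compare.
        if current > base * (1 + tolerance):
            regressions[name] = current - base
    return regressions


if __name__ == "__main__":
    # Hypothetical numbers mirroring the 300 ms boot-time example above.
    baseline = {"boot": 1200.0, "command_dispatch": 4.0}
    current = {"boot": 1500.0, "command_dispatch": 4.1}
    print(find_regressions(baseline, current))  # {'boot': 300.0}
```

Exiting with a non-zero status when the returned dict is non-empty is one way to make this a command-line gate.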

How do you handle projects that don't fit the methodology?

I adapt them to fit, or if truly impossible, I adjust the method itself. This is one methodology - I can generate countless variations as needed. Having spent 6700+ hours in AI interactions across multiple domains (not just software), I've developed strong system comprehension that enables creating adjusted methodologies on demand.

What's the learning curve for new users?

I cannot accurately answer this question. I've learned that I'm neurologically different - what I perceive as easy or obvious isn't always the case for others. This question is better addressed by someone who has actually used this methodology to determine its learning curve.

When shouldn't someone use this approach?

If you're not serious about projects, despise AI, dislike planning, don't care about modularization, or are just writing simple scripts. However, for anything requiring reliability, I believe this is currently the most effective method.

You still need programming fundamentals to use this methodology effectively - it's significantly more structured than ad-hoc approaches.

---
config:
  layout: elk
  theme: neo-dark
---

flowchart TD

A["Project Idea"] --> B["🤖 Stage 1: AI Configuration<br>AI-PREFERENCES.md Custom Instructions"]

B --> C["Stage 2: Collaborative Planning<br>Share METHODOLOGY.md"]

C --> D["Define Scope & Completion Criteria"]

D --> E["Identify Components & Dependencies"]

E --> F["Structure Phases Based on Logic"]

F --> G["Document Edge Cases - No Implementation"]

G --> H["Generate Development Plan with Checkpoints"]

H --> I["🔧 Stage 3: Phase 0 Infrastructure<br>MANDATORY BEFORE ANY CODE"]

I --> J["Benchmarking Suite + Regression Detection"]

J --> K["GitHub Workflows + Quality Gates"]

K --> L["Test Suite Infrastructure + Stress Tests"]

L --> M["Documentation Generation System"]

M --> N["Centralized Configuration + Constants"]

N --> O["📁 project_extract.py Setup<br>Single/Multiple File Config"]

O --> P["Initial Project State Extraction"]

P --> Q["Share Context with AI"]

Q --> R["Start Development Session<br>Pre-Session Compliance Audit"]

R --> S{"Next Phase Available?"}

S -- No --> Z["Project Complete"]

S -- Yes --> T["Select Single Component<br>Target ≤150 Lines"]

T --> U{"Multi-Language Required?"}

U -- Yes --> V["Document Performance Justification<br>Measurable Benefits Required"]

V --> W["Request AI Implementation"]

U -- No --> W

W --> X{"AI Uncertainty Flag?"}

X -- ⚠️ Yes --> Y["Request Clarification<br>Provide Additional Context"]

Y --> W

X -- Clear --> AA["Stage 3: Systematic Implementation"]

AA --> BB{"Automated Size Check<br>validate-phase Script"}

BB -- >150 Lines --> CC["AUTOMATED: Split Required<br>Maintain SoC Boundaries"]

CC --> W

BB -- ≤150 Lines --> DD["Incremental Compliance Check<br>DRY/KISS/SoC Validation"]

DD --> EE{"Architectural Principles Pass?"}

EE -- No --> FF["Flag Specific Violations<br>Reference Methodology"]

FF --> W

EE -- Yes --> GG["📊 Stage 4: Data-Driven Iteration<br>Run Benchmark Suite + Save Baselines"]

GG --> HH["Compare Against Historical Timeline<br>Regression Analysis"]

HH --> II{"Performance Gate Pass?"}

II -- Regression Detected --> JJ["Share Performance Data<br>Request Optimization"]

JJ --> W

II -- Pass --> KK["Integration Test<br>Verify System Boundaries"]

KK --> LL{"Cross-Platform Validation?"}

LL -- Fail --> MM["Address Deployment Constraints<br>Real-World Considerations"]

MM --> W

LL -- Pass --> NN{"More Components in Phase?"}

NN -- Yes --> T

NN -- No --> OO["🚦 Phase Quality Gate<br>Full Architecture Audit"]

OO --> PP["Production Simulation<br>Resource Cleanup + Load Test"]

PP --> QQ{"All Quality Gates Pass?"}

QQ -- No --> RR["Document Failed Checkpoints<br>Block Phase Progression"]

RR --> T

QQ -- Yes --> SS["End Development Session<br>Technical Debt Assessment"]

SS --> TT["📁 Extract Updated Project State<br>Generate Fresh Context"]

TT --> UU["Phase Results Documentation<br>Metrics + Outcomes + Timeline"]

UU --> VV["Update Development Plan<br>Mark Phase Complete"]

VV --> S

WW["validate-phase<br>AUTOMATED: File Size + Structure"] -.-> BB

XX["dry-audit<br>AUTOMATED: Cross-Module Duplication"] -.-> DD

YY["CI/CD Workflows<br>AUTOMATED: Merge Gates"] -.-> GG

ZZ["Performance Timeline<br>AUTOMATED: Historical Data"] -.-> HH

AAA["Dependency Validator<br>AUTOMATED: Import Boundaries"] -.-> KK

BBB["Architecture Auditor<br>AUTOMATED: SoC Compliance"] -.-> OO

WW -. BUILD FAILURE .-> CC

YY -. MERGE BLOCKED .-> JJ

BBB -. AUDIT FAILURE .-> RR

style Y fill:#7d5f00

style CC fill:#770000

style FF fill:#7d5f00

style JJ fill:#7d5f00

style MM fill:#770000

style RR fill:#770000For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Disciplined-AI-Software-Development

Similar Open Source Tools

Disciplined-AI-Software-Development

Disciplined AI Software Development is a comprehensive repository that provides guidelines and best practices for developing AI software in a disciplined manner. It covers topics such as project organization, code structure, documentation, testing, and deployment strategies to ensure the reliability, scalability, and maintainability of AI applications. The repository aims to help developers and teams navigate the complexities of AI development by offering practical advice and examples to follow.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

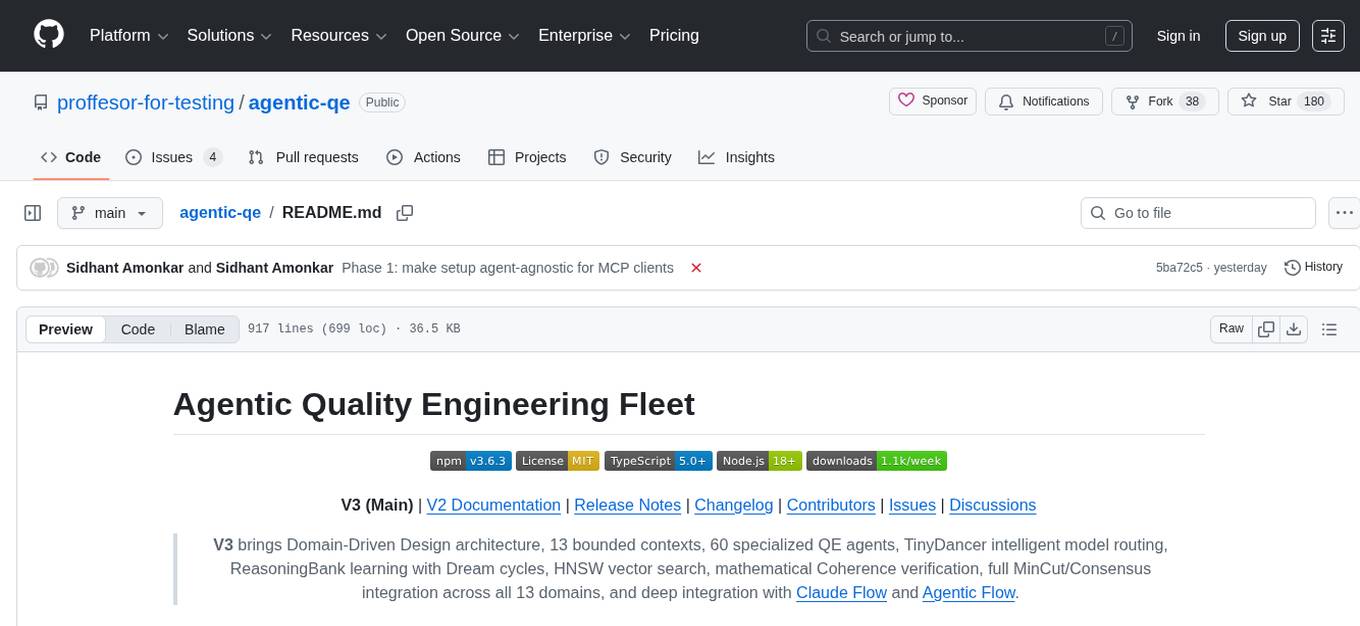

agentic-qe

Agentic Quality Engineering Fleet (Agentic QE) is a comprehensive tool designed for quality engineering tasks. It offers a Domain-Driven Design architecture with 13 bounded contexts and 60 specialized QE agents. The tool includes features like TinyDancer intelligent model routing, ReasoningBank learning with Dream cycles, HNSW vector search, Coherence Verification, and integration with other tools like Claude Flow and Agentic Flow. It provides capabilities for test generation, coverage analysis, quality assessment, defect intelligence, requirements validation, code intelligence, security compliance, contract testing, visual accessibility, chaos resilience, learning optimization, and enterprise integration. The tool supports various protocols, LLM providers, and offers a vast library of QE skills for different testing scenarios.

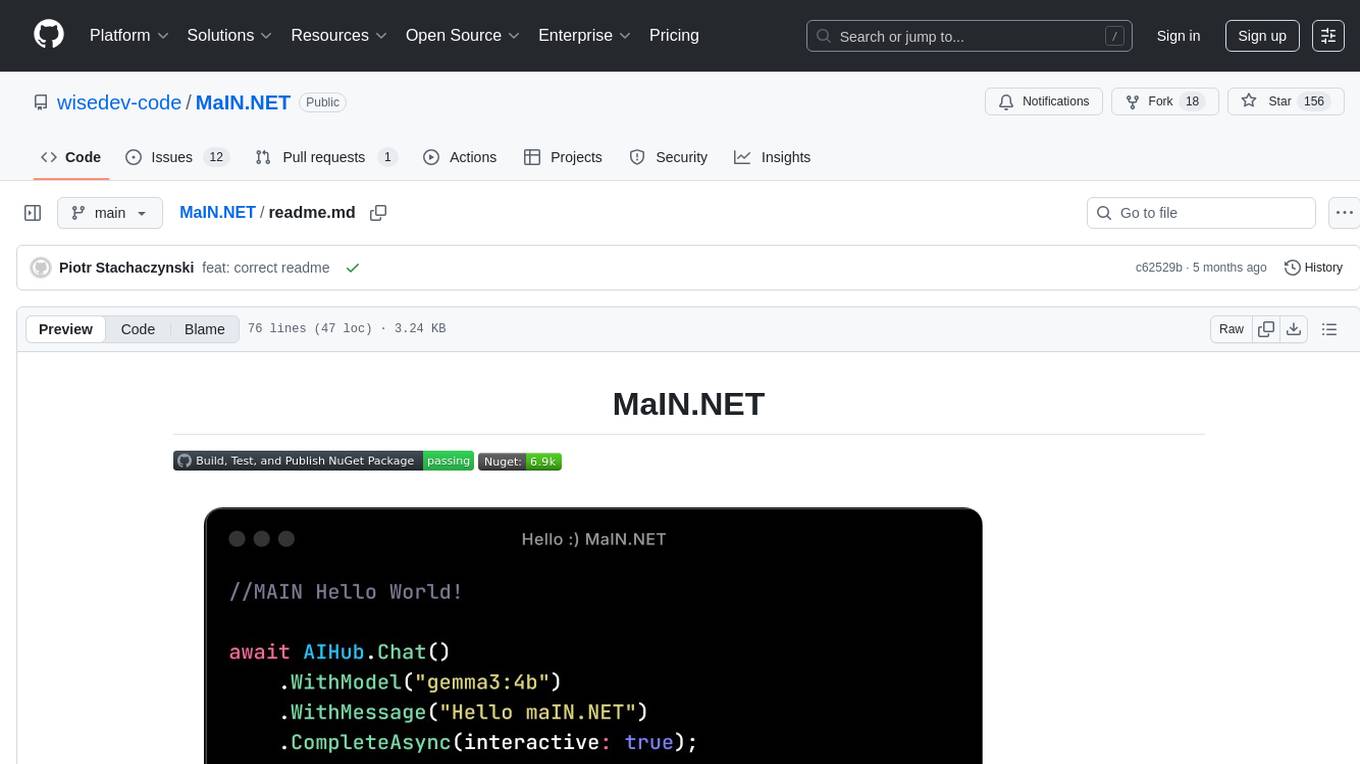

MaIN.NET

MaIN.NET (Modular Artificial Intelligence Network) is a versatile .NET package designed to streamline the integration of large language models (LLMs) into advanced AI workflows. It offers a flexible and robust foundation for developing chatbots, automating processes, and exploring innovative AI techniques. The package connects diverse AI methods into one unified ecosystem, empowering developers with a low-code philosophy to create powerful AI applications with ease.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

langchain

LangChain is a framework for building LLM-powered applications that simplifies AI application development by chaining together interoperable components and third-party integrations. It helps developers connect LLMs to diverse data sources, swap models easily, and future-proof decisions as technology evolves. LangChain's ecosystem includes tools like LangSmith for agent evals, LangGraph for complex task handling, and LangGraph Platform for deployment and scaling. Additional resources include tutorials, how-to guides, conceptual guides, a forum, API reference, and chat support.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

ai-collection

The ai-collection repository is a collection of various artificial intelligence projects and tools aimed at helping developers and researchers in the field of AI. It includes implementations of popular AI algorithms, datasets for training machine learning models, and resources for learning AI concepts. The repository serves as a valuable resource for anyone interested in exploring the applications of artificial intelligence in different domains.

cs-self-learning

This repository serves as an archive for computer science learning notes, codes, and materials. It covers a wide range of topics including basic knowledge, AI, backend & big data, tools, and other related areas. The content is organized into sections and subsections for easy navigation and reference. Users can find learning resources, programming practices, and tutorials on various subjects such as languages, data structures & algorithms, AI, frameworks, databases, development tools, and more. The repository aims to support self-learning and skill development in the field of computer science.

bisheng

Bisheng is a leading open-source **large model application development platform** that empowers and accelerates the development and deployment of large model applications, helping users enter the next generation of application development with the best possible experience.

ai-workshop-code

The ai-workshop-code repository contains code examples and tutorials for various artificial intelligence concepts and algorithms. It serves as a practical resource for individuals looking to learn and implement AI techniques in their projects. The repository covers a wide range of topics, including machine learning, deep learning, natural language processing, computer vision, and reinforcement learning. By exploring the code and following the tutorials, users can gain hands-on experience with AI technologies and enhance their understanding of how these algorithms work in practice.

spring-ai-alibaba-examples

This repository contains examples showcasing various uses of Spring AI Alibaba, from basic to advanced, and best practices for AI projects. It welcomes contributions related to Spring AI Alibaba usage examples, API usage, Spring AI usage examples, and best practices for AI projects. The project structure is designed to modularize functions for easy access and use.

AReaL

AReaL (Ant Reasoning RL) is an open-source reinforcement learning system developed at the RL Lab, Ant Research. It is designed for training Large Reasoning Models (LRMs) in a fully open and inclusive manner. AReaL provides reproducible experiments for 1.5B and 7B LRMs, showcasing its scalability and performance across diverse computational budgets. The system follows an iterative training process to enhance model performance, with a focus on mathematical reasoning tasks. AReaL is equipped to adapt to different computational resource settings, enabling users to easily configure and launch training trials. Future plans include support for advanced models, optimizations for distributed training, and exploring research topics to enhance LRMs' reasoning capabilities.

ai-app-lab

The ai-app-lab is a high-code Python SDK Arkitect designed for enterprise developers with professional development capabilities. It provides a toolset and workflow set for developing large model applications tailored to specific business scenarios. The SDK offers highly customizable application orchestration, quality business tools, one-stop development and hosting services, security enhancements, and AI prototype application code examples. It caters to complex enterprise development scenarios, enabling the creation of highly customized intelligent applications for various industries.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

mcp-fundamentals

The mcp-fundamentals repository is a collection of fundamental concepts and examples related to microservices, cloud computing, and DevOps. It covers topics such as containerization, orchestration, CI/CD pipelines, and infrastructure as code. The repository provides hands-on exercises and code samples to help users understand and apply these concepts in real-world scenarios. Whether you are a beginner looking to learn the basics or an experienced professional seeking to refresh your knowledge, mcp-fundamentals has something for everyone.

For similar tasks

Midori-AI

Midori AI is a cutting-edge initiative dedicated to advancing the field of artificial intelligence through research, development, and community engagement. They focus on creating innovative AI solutions, exploring novel approaches, and empowering users to harness the power of AI. Key areas of focus include cluster-based AI, AI setup assistance, AI development for Discord bots, model serving and hosting, novel AI memory architectures, and Carly - a fully simulated human with advanced AI capabilities. They have also developed the Midori AI Subsystem to streamline AI workloads by providing simplified deployment, standardized configurations, isolation for AI systems, and a growing library of backends and tools.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

Disciplined-AI-Software-Development

Disciplined AI Software Development is a comprehensive repository that provides guidelines and best practices for developing AI software in a disciplined manner. It covers topics such as project organization, code structure, documentation, testing, and deployment strategies to ensure the reliability, scalability, and maintainability of AI applications. The repository aims to help developers and teams navigate the complexities of AI development by offering practical advice and examples to follow.

language-ai-engineering-lab

The Language AI Engineering Lab is a structured repository focusing on Generative AI, guiding users from language fundamentals to production-ready Language AI systems. It covers topics like NLP, Transformers, Large Language Models, and offers hands-on learning paths, practical implementations, and end-to-end projects. The repository includes in-depth concepts, diagrams, code examples, and videos to support learning. It also provides learning objectives for various areas of Language AI engineering, such as NLP, Transformers, LLM training, prompt engineering, context management, RAG pipelines, context engineering, evaluation, model context protocol, LLM orchestration, agentic AI systems, multimodal models, MLOps, LLM data engineering, and domain applications like IVR and voice systems.

cody

Cody is a free, open-source AI coding assistant that can write and fix code, provide AI-generated autocomplete, and answer your coding questions. Cody fetches relevant code context from across your entire codebase to write better code that uses more of your codebase's APIs, impls, and idioms, with less hallucination.

auto-dev-vscode

AutoDev for VSCode is an AI-powered coding wizard with multilingual support, auto code generation, and a bug-slaying assistant. It offers customizable prompts and features like Auto Dev/Testing/Document/Agent. The tool aims to enhance coding productivity and efficiency by providing intelligent assistance and automation capabilities within the Visual Studio Code environment.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data using large language models to generate Python code. It can utilize OpenAI's chatGPT, Google's Gemini, Helmholtz' blablador, and Ollama. Users need respective accounts to access these services. Bob can assist in code generation, bug fixing, code documentation, GPU-acceleration, and offers a no-code custom Jupyter Kernel. It provides example notebooks for various tasks like bio-image analysis, model selection, and bug fixing. Installation is recommended via conda/mamba environment. Custom endpoints like blablador and ollama can be used. Google Cloud AI API integration is also supported. The tool is extensible for Python libraries to enhance Bob's functionality.

code2prompt

code2prompt is a command-line tool that converts your codebase into a single LLM prompt with a source tree, prompt templating, and token counting. It automates prompt generation for codebases of any size. Features include customizing prompts with Handlebars templates, respecting .gitignore, filtering and excluding files and folders with glob patterns, displaying the token count, including Git diff output, copying the prompt to the clipboard, saving the prompt to an output file, and adding line numbers to source code blocks. It helps streamline the process of creating LLM prompts for code analysis, generation, and other tasks.

For similar jobs

Qwen-TensorRT-LLM

Qwen-TensorRT-LLM is a project developed for the NVIDIA TensorRT Hackathon 2023, focusing on accelerating inference for the Qwen-7B-Chat model using TRT-LLM. The project offers FP16/BF16 support, INT8 and INT4 quantization options, Tensor Parallel for multi-GPU parallelism, a web demo built with Gradio, Triton API deployment for maximum throughput and concurrency, FastAPI integration for OpenAI-style requests, CLI interaction, and LangChain support. It supports the qwen2, qwen, and qwen-vl model families in both base and chat variants. The project also provides tutorials on Bilibili and blog posts on adapting Qwen models to NVIDIA TensorRT-LLM, along with hardware requirements and quick start guides for different model types and quantization methods.

dl_model_infer

This project is a C++ AI inference library that supports inference with TensorRT models. It provides accelerated deployment examples for popular deep learning computer vision models and supports dynamic-batch image processing, inference, decoding, and NMS. The project has been updated with various models and provides tutorials for exporting models. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations of model inference applications, backend inference classes, post-processing, pre-processing, and object detection and tracking. Speed tests have been conducted on various models, and ONNX downloads are available for different models.

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

ai-edge-torch

AI Edge Torch is a Python library that supports converting PyTorch models into a .tflite format for on-device applications on Android, iOS, and IoT devices. It offers broad CPU coverage with initial GPU and NPU support, closely integrating with PyTorch and providing good coverage of Core ATen operators. The library includes a PyTorch converter for model conversion and a Generative API for authoring mobile-optimized PyTorch Transformer models, enabling easy deployment of Large Language Models (LLMs) on mobile devices.

awesome-RK3588

RK3588 is a flagship 8K SoC by Rockchip that integrates Cortex-A76 and Cortex-A55 cores with a NEON coprocessor and supports 8K video codecs. This repository curates resources for developing with RK3588, including official resources, RKNN models, projects, development boards, documentation, tools, and sample code.

cl-waffe2

cl-waffe2 is an experimental deep learning framework in Common Lisp, providing fast, systematic, and customizable matrix operations, reverse-mode tape-based automatic differentiation, and neural network model building and training features accelerated by a JIT compiler. It offers abstraction layers, extensibility, inlining, graph-level optimization, visualization, debugging, systematic nodes, and symbolic differentiation. Users can easily write extensions and optimize their networks without added overhead. The framework is designed to eliminate barriers between users and developers, allowing for easy customization and extension.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

depthai

This repository contains a demo application for DepthAI, a tool that can load different networks, create pipelines, record video, and more. It provides documentation for installation and usage, including running programs through Docker. Users can explore DepthAI features via command-line arguments or a clickable Qt interface. Supported models cover tasks such as face detection, human pose estimation, and object detection. The tool collects anonymous usage statistics by default, which can be disabled. Users can report issues to the development team for support and troubleshooting.