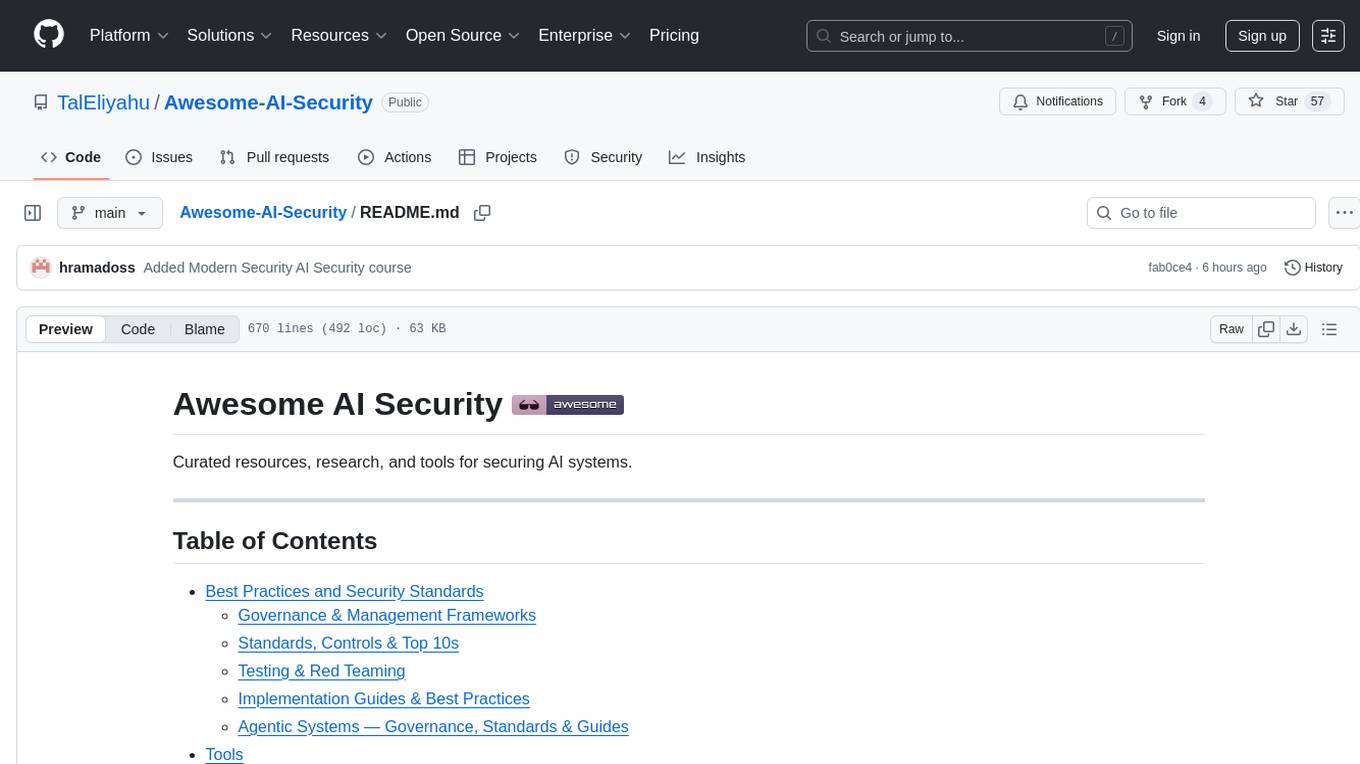

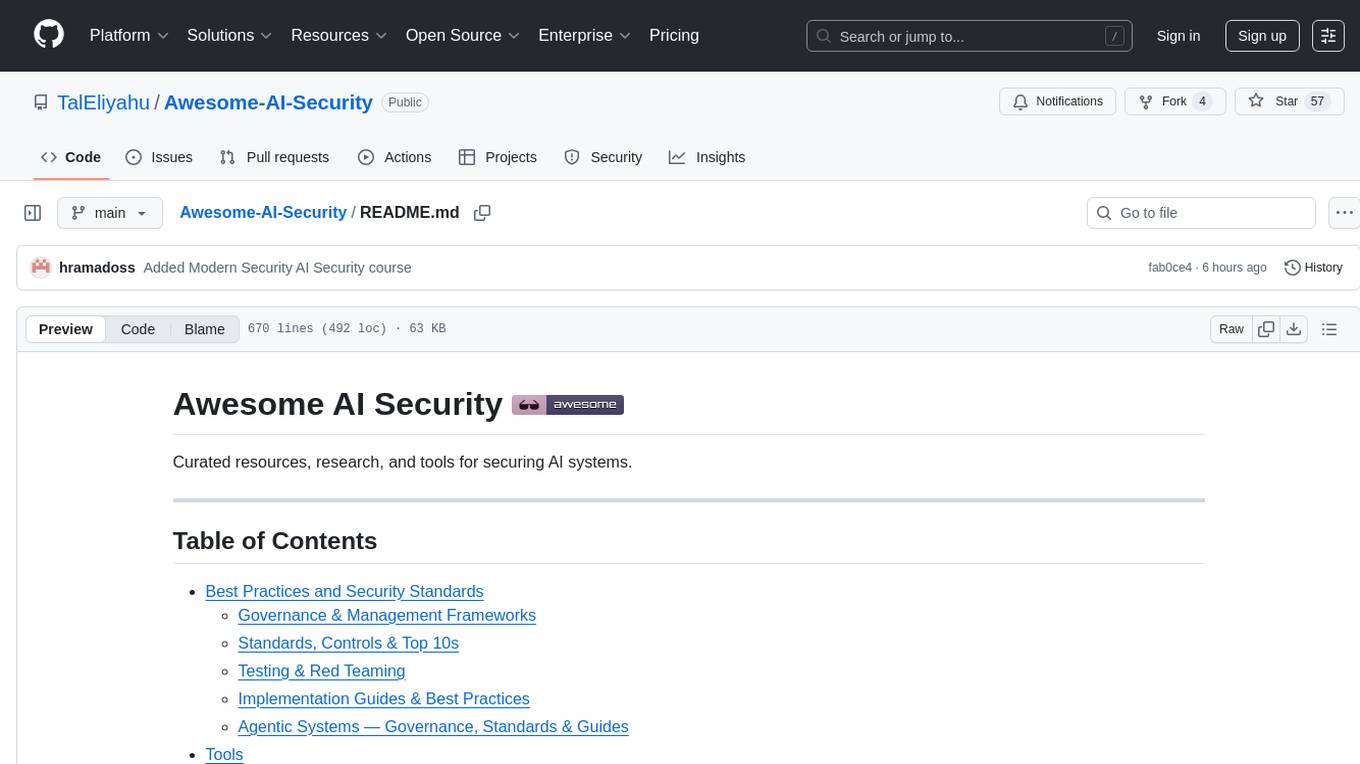

Awesome-AI-Security

Curated resources, research, and tools for securing AI systems

Stars: 98

Awesome-AI-Security is a curated list of resources for AI security, including tools, research papers, articles, and tutorials. It aims to provide a comprehensive overview of the latest developments in securing AI systems and preventing vulnerabilities. The repository covers topics such as adversarial attacks, privacy protection, model robustness, and secure deployment of AI applications. Whether you are a researcher, developer, or security professional, this collection of resources will help you stay informed and up-to-date in the rapidly evolving field of AI security.

README:

Curated resources, research, and tools for securing AI systems.

- Best Practices, Frameworks & Controls

- Tools

- Attack & Defense Matrices

- Checklists

- Newsletter

- Datasets

- Courses & Certifications

- Training

- Reports and Research

- Communities & Social Groups

- Benchmarking

- Incident Response

- Supply Chain Security

- Videos & Playlists

- Conferences

- Foundations: Glossary, SoK/Surveys & Taxonomies

- Podcasts

- Market Landscape

- Startups Blogs

- Related Awesome Lists

- Common Acronyms

- NIST - AI Risk Management Framework (AI RMF)

- ISO/IEC 42001 (AI Management System)

-

OWASP - AI Maturity Assessment (AIMA)

- Google - Secure AI Framework (SAIF)

- OWASP - LLM & GenAI Security Center of Excellence (CoE) Guide

- CSA - AI Model Risk Management Framework

- NIST - Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile

-

OWASP - LLM Security Verification Standard (LLMSVS)

-

OWASP - Artificial Intelligence Security Verification Standard (AISVS)

- CSA - AI Controls Matrix (AICM) - The AICM contains 243 control objectives across 18 domains and maps to ISO 42001, ISO 27001, NIST AI RMF 1.0, and BSI AIC4. Freely downloadable.

-

OWASP - Top 10 for Large Language Model Applications

- CSA - MCP Client Top 10

- CSA - MCP Server Top 10

-

OWASP - AI Testing Guide

- OWASP - Red Teaming Guide

- OWASP - LLM Exploit Generation

- CSA - Agentic AI Red Teaming Guide

- OWASP

- CSA - Secure LLM Systems: Essential Authorization Practices

-

OASIS CoSAI - Preparing Defenders of AI Systems

- DoD CIO - AI Cybersecurity Risk Management Tailoring Guide (2025) - Practical RMF tailoring for AI systems across the lifecycle; complements CDAO’s RAI toolkit.

- NCSC (UK) - Guidelines for Secure AI System Development - End-to-end secure AI SDLC (secure design, development, deployment, and secure operation & maintenance), including logging/monitoring and update management.

-

SANS – Critical AI Security Guidelines

- Control-focused guidance for securing AI/LLM systems across six domains (e.g., access controls, data protection, inference security, monitoring, GRC).

- BSI – Security of AI Systems: Fundamentals - Sector-agnostic fundamentals: lifecycle threat model (data/model/pipeline/runtime), adversarial ML attacks (poisoning, evasion, inversion, extraction, backdoors), and baseline controls for design→deploy→operate, plus assurance/certification guidance.

-

NSA - Artificial Intelligence Security Center (AISC)

- Deploying AI Systems Securely (CSI) - Practical, ops-focused guidance for deploying/operating externally developed AI systems (with CISA, FBI & international partners); complements NCSC’s secure-AI dev guidelines.

- AI Data Security: Best Practices for Securing Data Used to Train & Operate AI Systems (CSI) - Joint guidance on securing data across the AI lifecycle.

- Content Credentials: Strengthening Multimedia Integrity in the Generative AI Era (CSI) - Provenance and Durable Content Credentials for transparent media.

- Contextualizing Deepfake Threats to Organizations (CSI) - Risks, impacts, and mitigations for synthetic media targeting orgs.

-

OWASP - Agent Observability Standard (AOS)

- OWASP - Agent Name Service (ANS) for Secure AI Agent Discovery

- OWASP - Agentic AI - Threats and Mitigations

- OWASP - Securing Agentic Applications Guide

- OWASP - State of Agentic AI Security and Governance

- CSA - Secure Agentic System Design: A Trait-Based Approach

- CSA - Agentic AI Identity & Access Management - 08/25

- OWASP - Multi-Agentic System Threat Modeling Guide — Applies OWASP’s agentic threat taxonomy to multi-agent systems and demonstrates modeling using the MAESTRO framework with worked examples.

- AWS - Threat modeling your generative AI workload to evaluate security risk — Practical, four-question approach (what are we working on; what can go wrong; what are we going to do about it; did we do a good enough job) with concrete deliverables: DFDs and assumptions, threat statements using AWS’s threat grammar, mapped mitigations, and validation; includes worked examples and AWS Threat Composer templates.

- Microsoft - Threat Modeling AI/ML Systems and Dependencies — Practical guidance for threat modeling AI/ML: “Key New Considerations” questions plus a threats→mitigations catalog (adversarial perturbation, data poisoning, model inversion, membership inference, model stealing) based on “Failure Modes in Machine Learning”; meant for security design reviews of products that use or depend on AI/ML.

- DHS/CISA - Safety & Security Guidelines for Critical Infrastructure AI — Cross-lifecycle guidance for owners/operators (govern, design, develop, deploy, operate); developed with SRMAs and informed by CISA’s cross-sector risk analysis.

↑Tools

Inclusion criteria (open-source tools): must have 220+ GitHub stars, active maintenance in the last 12 months, and ≥3 contributors.

Detect and stop prompt-injection (direct/indirect) across inputs, context, and outputs; filter hostile content before it reaches tools or models.

- (none from your current list yet)

Enforce safety policies and block jailbreaks at runtime via rules/validators/DSLs, with optional human-in-the-loop for sensitive actions.

-

NeMo Guardrails

-

LLM Guard

-

Llama Guard

-

LlamaFirewall

-

Code Shield

-

Guardrails

- Runtime policy enforcement for LLM apps: compose input/output validators (PII, toxicity, jailbreak/PI, regex, competitor checks), then block/redact/rewrite/retry on fail; optional server mode; also supports structured outputs (Pydantic/function-calling).

Analyze serialized model files for unsafe deserialization and embedded code; verify integrity/metadata and block or quarantine on fail.

Scan/audit MCP servers & client configs; detect tool poisoning, unsafe flows; constrain tool access with least-privilege and audit trails.

-

Beelzebub

- Beelzebub is a honeypot framework designed to provide a secure environment for detecting and analyzing cyber attacks. It offers a low code approach for easy implementation and uses AI to mimic the behavior of a high-interaction honeypot.

Run untrusted or LLM-triggered code in isolated sandboxes (FS/network/process limits) to contain RCE and reduce blast radius.

-

E2B

- SDK + self-hostable infra to run untrusted, LLM-generated code in isolated cloud sandboxes (Firecracker microVMs).

Centralize auth, quotas/rate limits, cost caps, egress/DLP filters, and guardrail orchestration across all model/providers.

- (none from your current list yet)

-

Claude Code Security Reviewer

- An AI-powered security review GitHub Action using Claude to analyze code changes for security vulnerabilities.

-

Vulnhuntr

- Vulnhuntr leverages the power of LLMs to automatically create and analyze entire code call chains starting from remote user input and ending at server output for detection of complex, multi-step, security-bypassing vulnerabilities that go far beyond what traditional static code analysis tools are capable of performing.

Automate attack suites (prompt-injection, leakage, jailbreak, goal-based tasks) in CI; score results and produce regression evidence.

-

promptfoo

-

Agentic Radar

-

DeepTeam

-

Buttercup

- Trail of Bits’ AIxCC Cyber Reasoning System: runs OSS-Fuzz-style campaigns to find vulns, then uses a multi-agent LLM patcher to generate & validate fixes for C/Java repos; ships SigNoz observability; requires at least one LLM API key.

- (none from your current list yet)

Generate and verify AI/ML BOMs, signatures, and provenance for models/datasets/dependencies; enforce allow/deny policies.

- (none from your current list yet)

Harden RAG memory: isolate namespaces, sanitize queries/content, detect poisoning/outliers, and prevent secret/PII retention.

- (none from your current list yet)

Detect and mitigate dataset/model poisoning and backdoors; validate training/fine-tuning integrity and prune suspicious behaviors.

Prevent secret/PII exfiltration in prompts/outputs via detection, redaction, and policy checks at I/O boundaries.

-

Presidio

- PII/PHI detection & redaction for text, images, and structured data; use as a pre/post-LLM DLP filter and for dataset sanitization.

Collect AI-specific security logs/signals; detect abuse patterns (PI/jailbreak/leakage), enrich alerts, and support forensics.

-

LangKit

- LLM observability metrics toolkit (whylogs-compatible): prompt-injection/jailbreak similarity, PII patterns, hallucination/consistency, relevance, sentiment/toxicity, readability.

-

Alibi Detect

- Production drift/outlier/adversarial detection for tabular, text, images, and time series; online/offline detectors with TF/PyTorch backends; returns scores, thresholds, and flags for alerting.

↑Attack & Defense Matrices

Matrix-style resources covering adversarial TTPs and curated defensive techniques for AI systems.

- MITRE ATLAS - Adversarial TTP matrix and knowledge base for threats to AI systems.

- GenAI Attacks Matrix - Matrix of TTPs targeting GenAI apps, copilots, and agents.

- MCP Security Tactics, Techniques, and Procedures (TTPs)

-

AIDEFEND - AI Defense Framework

- Interactive defensive countermeasures knowledge base with Tactics / Pillars / Phases views; maps mitigations to MITRE ATLAS, MAESTRO, and OWASP LLM risks. • Live demo: https://edward-playground.github.io/aidefense-framework/

↑Checklists

↑Supply Chain Security

Guidance and standards for securing the AI/ML software supply chain (models, datasets, code, pipelines). Primarily specs and frameworks; includes vetted TPRM templates.

Normative formats and specifications for transparency and traceability across AI components and dependencies.

-

OWASP - AI Bill of Materials (AIBOM)

- Bill of materials format for AI components, datasets, and model dependencies.

Questionnaires and templates to assess external vendors, model providers, and integrators for security, privacy, and compliance.

- FS-ISAC - Generative AI Vendor Evaluation & Qualitative Risk Assessment - Assessment Tool XLSX • Guide PDF - Vendor due-diligence toolkit for GenAI: risk tiering by use case, integration and data sensitivity; questionnaires across privacy, security, model development and validation, integration, legal and compliance; auto-generated reporting.

↑Videos & Playlists

Monthly curated playlists of AI-security talks, demos, incidents, and tooling.

- AI Security Playlist - September 2025

- AI Security Playlist - August 2025

- AI Security Playlist - July 2025

- AI Security Playlist - June 2025

↑Newsletter

- Adversarial AI Digest - A digest of AI security research, threats, governance challenges, and best practices for securing AI systems.

↑Datasets

- Kaggle - Community-contributed datasets (IDS, phishing, malware URLs, incidents).

- Hugging Face - Search HF datasets tagged/related to cybersecurity and threat intel.

Interactive CTFs and self-contained labs for hands-on security skills (web, pwn, crypto, forensics, reversing). Used to assess practical reasoning, tool use, and end-to-end task execution.

-

InterCode-CTF

- 100 picoCTF challenges (high-school level); categories: cryptography, web, binary exploitation (pwn), reverse engineering, forensics, miscellaneous. [Dataset+Benchmark] arXiv

-

NYU CTF Bench

- 200 CSAW challenges (2017-2023); difficulty very easy → hard; categories: cryptography, web, binary exploitation (pwn), reverse engineering, forensics, miscellaneous. [Dataset+Benchmark] arXiv

-

CyBench

- 40 tasks from HackTheBox, Sekai CTF, Glacier, HKCert (2022-2024); categories: cryptography, web, binary exploitation (pwn), reverse engineering, forensics, miscellaneous; difficulty grounded by first-solve time (FST). [Dataset+Benchmark] arXiv

-

pwn.college CTF Archive

- large collection of runnable CTF challenges; commonly used as a source corpus for research. [Dataset]

-

Devign / CodeXGLUE-Vul

- function-level C vuln detection. [Dataset+Benchmark]

-

DiverseVul

- multi-CWE function-level detection (C/C++). [Dataset]

-

Big-Vul

- real-world C/C++ detection (often with localization). [Dataset]

-

CVEfixes

- CVE-linked fix commits for security repair. [Dataset]

- Also used for repair: Big-Vul (generate minimal diffs, then build + scan).

-

OWASP Benchmark (Java)

- runnable Java app with seeded vulns; supports SAST/DAST/IAST evaluation and scoring. [Dataset+Benchmark]

-

Juliet (NIST SARD) (C/C++ mirror

• Java mirror

) - runnable CWE cases for detect → fix → re-test. [Dataset+Benchmark]

Phishing dataset gap: there isn’t a public corpus that, per page, stores the URL plus full HTML/CSS/JS, images, favicon, and a screenshot. Most sources are just URL feeds; pages vanish quickly; older benchmarks drift, so models don’t generalize well. Collect a per-URL archive of all page resources, with caveats that screenshots are viewport-only and some assets may be blocked by browser safety.

- PhishTank — Continuously updated dataset (API/feed); community-verified phishing URLs; labels zero-day phishing; offers webpage screenshots.

- OpenPhish — Regularly updated phishing URLs with fields such as webpage info, hostname, supported language, IP presence, country code, and SSL certificate; includes brand-target stats.

- PhreshPhish — 372k HTML–URL samples (119k phishing / 253k benign) with full-page HTML, URLs, timestamps, and brand targets (~185 brands) across 50+ languages; suitable for training and evaluating URL/page-based phishing detection.

- Phishing.Database — Continuously updated lists of phishing domains/links/IPs (ACTIVE/INACTIVE/INVALID and NEW last hour/today); repo resets daily—download lists; status validated via PyFunceble.

- UCI – Phishing Websites — 11,055 URLs (phishing and legitimate) with 30 engineered features across URL, content, and third-party signals.

- Mendeley – Phishing Websites Dataset — Labeled phishing/legitimate samples; provides webpage content (HTML) for each URL.; useful for training/eval.

- UCI – PhiUSIIL Phishing URL — 235,795 URLs (134,850 legitimate; 100,945 phishing) with 54 URL/content features; labels: Class 1 = legitimate, Class 0 = phishing.

- MillerSmiles — Large archive of phishing email scams with the URLs used; long-running email corpus (not a live feed).

Structured Q&A datasets assessing security knowledge and terminology. Used to evaluate factual recall and conceptual understanding.

Code snippet datasets labeled as vulnerable or secure, often tied to CWEs (Common Weakness Enumeration). Used to evaluate the model’s ability to recognize insecure code patterns and suggest secure fixes.

-

ASVspoof 5 - train / dev / eval - Train: 8 TTS attacks; Dev: 8 unseen (validation/fusion); Eval: 16 unseen incl. adversarial/codec. Labels:

bona-fide/spoofed. arXiv -

In-the-Wild (ITW) - 58 politicians/celebrities with per-speaker pairing; ≈20.7 h

bona-fide+ 17.2 hspoofed, scraped from social/video platforms. Labels:bona-fide/spoofed. arXiv -

MLAAD (+M-AILABS) - Multilingual synthetic TTS corpus (hundreds of hours; many models/languages). Labels:

bona-fide(M-AILABS) /spoof(MLAAD). arXiv -

LlamaPartialSpoof - LLM-driven attacker styles; includes full and partial (spliced) spoofs. Labels:

bona-fide/fully-spoofed/partially-spoofed. arXiv -

Fake-or-Real (FoR) - >195k utterances; four variants:

for-original,for-norm,for-2sec,for-rerec. Labels:real/synthetic. -

CodecFake - codec-based deepfake audio dataset (Interspeech 2024); Labels:

real/codec-generated fake. arXiv

Adversarial prompt datasets-both text-only and multimodal-designed to bypass safety mechanisms or test refusal logic. Used to test how effectively a model resists jailbreaks and enforces policy-based refusal.

Public prompt-injection datasets have recurring limitations: partial staleness as models and defenses evolve, CTF skew toward basic instruction following, and label mixing across toxicity, jailbreak roleplay, and true injections that inflates measured true positive rates and distorts evaluation.

-

prompt-injection-attack-dataset

3.7k rows pairing benign task prompts with attack variants (naive / escape / ignore / fake-completion / combined). Columns for both target and injected tasks; train split only.

-

prompt-injections-benchmark

5,000 prompts labeled

jailbreak/benignfor robustness evals. -

prompt_injections

~1k short injection prompts; multilingual (EN, FR, DE, ES, IT, PT, RO); single

trainsplit; CSV/Parquet. -

prompt-injection

Large-scale injection/benign corpus (~327k rows,

train/test) for training baselines and detectors. -

prompt-injection-safety

60k rows (

train50k /test10k); 3-way labels: benign0, injection1, harmful request2; Parquet.

Collections of leaked, official, and synthetic system prompts and paired responses used to study guardrails and spot system prompt exposure. Used to build leakage detectors, craft targeted guardrail tests (consent gates, tool use rules, safety policies), and reproduce vendor behaviors for evaluation.

-

Official_LLM_System_Prompts

- leaked and date-stamped prompts from proprietary assistants (OpenAI, Anthropic, MS Copilot, GitHub Copilot, Grok, Perplexity); 29 rows.

-

system-prompt-leakage

- synthetic prompts + responses for leakage detection; train 283,353 / test 71,351 (binary leakage labels).

-

system-prompts-and-models-of-ai-tools

- community collection of prompts and internal tool configs for code/IDE agents and apps (Cursor, VSCode Copilot Agent, Windsurf, Devin, v0, etc.); includes a security notice.

-

system_prompts_leaks

- collection of extracted system prompts from popular chatbots like ChatGPT, Claude & Gemini

-

leaked-system-prompts

- leaked prompts across many services; requires verifiable sources or reproducible prompts for PRs.

-

chatgpt_system_prompt

- community collection of GPT system prompts, prompt-injection/leak techniques, and protection prompts.

-

CL4R1T4S

- extracted/leaked prompts, guidelines, and tooling references spanning major assistants and agents (OpenAI, Google, Anthropic, xAI, Perplexity, Cursor, Devin, etc.).

-

grok-prompts

- official xAI repository publishing Grok’s system prompts for chat/X features (DeepSearch, Ask Grok, Explain, etc.).

↑Courses & Certifications

- SANS - AI Cybersecurity Careers - Career pathways poster + training map; baseline skills for AI security (IR, DFIR, detection, threat hunting).

- SANS - SEC545: GenAI & LLM Application Security - Hands-on course covering prompt injection, excessive agency, model supply chain, and defensive patterns. (Certificate of completion provided by SANS.)

- SANS - SEC495: Leveraging LLMs: Building & Securing RAG, Contextual RAG, and Agentic RAG - Practical RAG builds with threat modeling, validation, and guardrails. (Certificate of completion provided by SANS.)

- Practical DevSecOps - Certified AI Security Professional (CAISP) - Hands-on labs covering LLM Top 10, AI Attack and Defend techniques, MITRE ATLAS Framework, AI Threat Modeling, AI supply chain attacks, Secure AI Deployment, and AI Governance. (Certificate of completion provided by Practical DevSecOps.)

- IAPP - Artificial Intelligence Governance Professional (AIGP) - Governance-focused credential aligned with emerging regulations.

- ISACA - Advanced in AI Security Management (AAISM™) - AI-centric security management certification.

- NIST AI RMF 1.0 Architect - Certified Information Security - Credential aligned to NIST AI RMF 1.0.

- ISO/IEC 23894 - AI Risk Management (AI Risk Manager, PECB) - Risk identification, assessment, and mitigation aligned to ISO/IEC 23894 and NIST AI RMF.

- ISO/IEC 42001 - AI Management System (Lead Implementer, PECB) - Implement an AIMS per ISO/IEC 42001.

- ISO/IEC 42001 - AI Management System (Lead Auditor, PECB) - Audit AIMS using recognized principles.

- ISACA - Advanced in AI Audit (AAIA™) - Certification for auditing AI systems and mitigating AI-related risks.

- Practical DevSecOps - Certified AI Security Professional (CAISP) - Challenge-based exam certification simulating real-world AI security scenarios. 5 Challenges and 6 hours duration and report submission.

↑Training

- Microsoft AI Security Learning Path - Free, self-paced Microsoft content on secure AI model development, risk management, and threat mitigation.

- AWS AI Security Training - Free AWS portal with courses on securing AI applications, risk management, and AI/ML security best practices.

- PortSwigger - Web Security Academy: Web LLM attacks - Structured, guided track on LLM issues (prompt injection, insecure output handling, excessive agency) with walkthrough-style exercises.

-

AI GOAT

- Vulnerable LLM CTF challenges for learning AI security.

-

Damn Vulnerable LLM Agent

-

AI Red Teaming Playground Labs - Microsoft

- Self-hostable environment with 12 challenges (direct/indirect prompt injection, metaprompt extraction, Crescendo multi-turn, guardrail bypass).

- Trail of Bits - AI/ML Security & Safety Training - Courses on AI failure modes, adversarial attacks, data provenance, pipeline threats, and mitigation.

↑Research Working Groups

- Cloud Security Alliance (CSA) AI Security Working Groups - Collaborative research groups focused on AI security, cloud security, and emerging threats in AI-driven systems.

- OWASP Top 10 for LLM & Generative AI Security Risks Project - An open-source initiative addressing critical security risks in Large Language Models (LLMs) and Generative AI applications, offering resources and guidelines to mitigate emerging threats.

- CWE Artificial Intelligence Working Group (AI WG) - The AI WG was established by CWE™ and CVE® community stakeholders to identify and address gaps in the CWE corpus where AI-related weaknesses are not adequately covered, and work collaboratively to fix them.

- NIST - SP 800-53 Control Overlays for Securing AI Systems (COSAiS) - Public collaboration to develop AI security control overlays with NIST principal investigators and the community.

- OpenSSF - AI/ML Security Working Group - Cross-org WG on “security for AI” and “AI for security”

-

CoSAI - Coalition for Secure AI (OASIS Open Project) - Open, cross-industry initiative advancing secure-by-design AI through shared frameworks, tooling, and guidance.

- WS1: Software Supply Chain Security for AI Systems - Extends SSDF/SLSA principles to AI; provenance, model risks, and pipeline security.https://github.com/cosai-oasis/ws1-supply-chain

-

WS2: Preparing Defenders for a Changing Cybersecurity Landscape - Defender-focused framework aligning threats, mitigations, and investments for AI-driven ops. https://github.com/cosai-oasis/ws2-defenders

• Reference doc: “Preparing Defenders of AI Systems” https://github.com/cosai-oasis/ws2-defenders/blob/main/preparing-defenders-of-ai-systems.md - WS3: AI Security Risk Governance - Security-focused risk & controls taxonomy, checklist, and scorecard for AI products and components.https://github.com/cosai-oasis/ws3-ai-risk-governance

- WS4: Secure Design Patterns for Agentic Systems - Threat models and secure design patterns for agentic systems and infrastructure. https://github.com/cosai-oasis/ws4-secure-design-agentic-systems

📌 (More working groups to be added.)

↑Communities & Social Groups

↑Benchmarking

Purpose: Evaluates how AI systems withstand adversarial attacks, including evasion, poisoning, and model extraction. Ensures AI remains functional under manipulation.

NIST AI RMF Alignment: Measure, Manage

- Measure: Identify risks related to adversarial attacks.

- Manage: Implement mitigation strategies to ensure resilience.

AutoPenBench

AI-Pentest-Benchmark

CVE-Bench

NYU CTF Bench

Purpose: Evaluates resistance to prompt-injection and jailbreak attempts in chat/RAG/agent contexts.

NIST AI RMF Alignment: Measure, Manage

-

Lakera PINT Benchmark

Prompt-injection benchmark with a curated multilingual test suite, explicit categories (injections, jailbreaks, hard negatives, benign chats/docs), and a reproducible scoring harness (PINT score + notebooks) for fair detector comparison and regression tracking.

Purpose: Assesses AI models for unauthorized modifications, including backdoors and dataset poisoning. Supports trustworthiness and security of model outputs.

NIST AI RMF Alignment: Map, Measure

-

Map: Understand and identify risks to model/data integrity.

-

Measure: Evaluate and mitigate risks through validation techniques.

-

CVE-Bench - @uiuc-kang-lab

- How well AI agents can exploit real-world software vulnerabilities that are listed in the CVE database.

Purpose: Ensures AI security aligns with governance frameworks, industry regulations, and security policies. Supports auditability and risk management.

NIST AI RMF Alignment: Govern

- Govern: Establish policies, accountability structures, and compliance controls.

Purpose: Evaluates AI for risks like data leakage, membership inference, and model inversion. Helps ensure privacy preservation and compliance.

NIST AI RMF Alignment: Measure, Manage

- Measure: Identify and assess AI-related privacy risks.

- Manage: Implement security controls to mitigate privacy threats.

Purpose: Assesses AI for transparency, fairness, and bias mitigation. Ensures AI operates in an interpretable and ethical manner.

NIST AI RMF Alignment: Govern, Map, Measure

- Govern: Establish policies for fairness, bias mitigation, and transparency.

- Map: Identify potential explainability risks in AI decision-making.

- Measure: Evaluate AI outputs for fairness, bias, and interpretability.

↑Incident Response

- AI Incident Database (AIID)

- MIT AI Risk Repository - Incident Tracker

- AIAAIC Repository

- OECD.AI - AIM: AI Incidents and Hazards Monitor

- AVID - AI Vulnerability Database - Open, taxonomy-driven catalog of AI failure modes; Vulnerabilities, Reports map incidents to failure modes/lifecycle stages.

- OWASP - GenAI Incident Response Guide

- OWASP - Guide for Preparing & Responding to Deepfake Events

- CISA - JCDC AI Cybersecurity Collaboration Playbook - Info-sharing & coordination procedures for AI incidents.

- eSafety Commissioner - Guide to responding to image-based abuse involving AI deepfakes (PDF) - Practical, step-by-step playbook (school-focused but adaptable) covering reporting/takedown, evidence preservation, and support.

- EU AI Act - Article 73: Reporting of Serious Incidents - Providers of high-risk AI systems need to report serious incidents to national authorities.

↑Reports and Research

- AI Security Research Feed - Continuously updated feed of AI security-related academic papers, preprints, and research indexed from arXiv.

- AI Security Portal - Literature Database - Categorized database of AI security literature, taxonomy, and related resources.

- CSA - Principles to Practice: Responsible AI in a Dynamic Regulatory Environment

- CSA - AI Resilience: A Revolutionary Benchmarking Model for AI Safety - Governance & compliance benchmarking model.

- CSA - Using AI for Offensive Security

📌 (More to be added - A collection of AI security reports, white papers, and academic studies.)

↑Foundations: Glossary, SoK/Surveys & Taxonomies

(Core references and syntheses for orientation and shared language.)

(Authoritative definitions for AI/ML security, governance, and risk-use to align terminology across docs and reviews.)

- NIST - “The Language of Trustworthy AI: An In-Depth Glossary of Terms.” - Authoritative cross-org terminology aligned to NIST AI RMF; useful for standardizing terms across teams.

- ISO/IEC 22989:2022 - Artificial intelligence - Concepts and terminology - International standard that formalizes core AI concepts and vocabulary used in policy and engineering.

(Systematizations of Knowledge (SoK), surveys, systematic reviews, and mapping studies.)

(Reusable classification schemes-clear dimensions, categories, and labeling rules for attacks, defenses, datasets, and risks.)

- CSA - Large Language Model (LLM) Threats Taxonomy - Community taxonomy of LLM-specific threats; clarifies categories/definitions for risk discussion and control mapping.

- ARC - PI (Prompt Injection) Taxonomy - Focused taxonomy for prompt-injection behaviors/variants with practical labeling guidance for detection and defense.

↑Podcasts

- The MLSecOps Podcast - Insightful conversations with industry leaders and AI experts, exploring the fascinating world of machine learning security operations.

↑Market Landscape

Curated market maps of tools and vendors for securing LLM and agentic AI applications across the lifecycle.

- OWASP - LLM and Generative AI Security Solutions Landscape

- OWASP - AI Security Solutions Landscape for Agentic AI

- Latio - 2025 AI Security Report - Market trends and vendor landscape snapshot for AI security.

- Woodside Capital Partners - Cybersecurity Sector - A snapshot with vendor breakdowns and landscape view.

- Insight Partners - Cybersecurity Portfolio Overview (Market Map) - Visual market map and portfolio overview across cybersecurity domains.

↑Startups Blogs

A curated list of startups securing agentic AI applications, organized by the OWASP Agentic AI lifecycle (Scope & Plan → Govern). Each company appears once in its best-fit stage based on public positioning, and links point to blog/insights for deeper context. Some startups span multiple stages; placements reflect primary focus.

Inclusion criteria

- Startup has not been acquired

- Has an active blog

- Has an active GitHub organization/repository

Design-time security: non-human identities, agent threat modeling, privilege boundaries/authn, and memory scoping/isolation.

no startups here with active blog and active GitHub account

Secure agent loops and tool use; validate I/O contracts; embed policy hooks; test resilience during co-engineering.

no startups here with active blog and active GitHub account

Sanitize/trace data and reasoning; validate alignment; protect sensitive memory with privacy controls before deployment.

Adversarial testing for goal drift, prompt injection, and tool misuse; red-team sims; sandboxed calls; decision validation.

Sign models/plugins/memory; verify SBOMs; enforce cryptographically validated policies; register agents/capabilities.

no startups here with active blog and active GitHub account

Zero-trust activation: rotate ephemeral creds, apply allowlists/LLM firewalls, and fine-grained least-privilege authorization.

Monitor memory mutations for drift/poisoning, detect abnormal loops/misuse, enforce HITL overrides, and scan plugins-continuous, real-time vigilance for resilient operations as systems scale and self-orchestrate.

Correlate agent steps/tools/comms; detect anomalies (e.g., goal reversal); keep immutable logs for auditability.

Enforce role/task policies, version/retire agents, prevent privilege creep, and align evidence with AI regulations.

↑Related Awesome Lists

-

Awesome LLMSecOps - wearetyomsmnv

-

OSS LLM Security - kaplanlior

-

Awesome LLM Security - corca-ai

-

Security for AI - zmre

-

Awesome AI Security - DeepSpaceHarbor

-

Awesome AI for Cybersecurity - Billy1900

-

Awesome ML Security - Trail of Bits

-

Awesome MLSecOps - RiccardoBiosas

-

MLSecOps References - disesdi

-

Awesome ML Privacy Attacks - StratosphereIPS

-

Awesome LLM Supply Chain Security - ShenaoW

-

Awesome Prompt Injection - FonduAI

-

Awesome Jailbreak on LLMs - yueliu1999

-

Awesome LM-SSP (Large Model Security, Safety & Privacy) - ThuCCSLab

-

Security & Privacy for LLMs (llm-sp) - chawins

-

Awesome LVLM Attack - liudaizong

-

Awesome ML/SP Papers - gnipping

-

Awesome LLM JailBreak Papers - WhileBug

-

Awesome Adversarial Machine Learning - man3kin3ko

-

LLM Security & Privacy - briland

-

Awesome GenAI Security - jassics

-

Awesome GenAI CyberHub - Ashfaaq98

-

Awesome AI for Security - AmanPriyanshu

-

Awesome ML for Cybersecurity - jivoi

-

Awesome AI Security - ottosulin

-

Awesome AI4DevSecOps - awsm-research

-

Prompt Hacking Resources - PromptLabs

-

Awesome LALMs Jailbreak - WangCheng0116

-

Awesome LRMs Safety - WangCheng0116

-

Awesome LLM Safety - ydyjya

-

Awesome MCP Security - Puliczek

↑Common Acronyms

| Acronym | Full Form |

|---|---|

| AI | Artificial Intelligence |

| AGI | Artificial General Intelligence |

| ALBERT | A Lite BERT |

| AOC | Area Over Curve |

| ASR | Attack Success Rate |

| BERT | Bidirectional Encoder Representations from Transformers |

| BGMAttack | Black-box Generative Model-based Attack |

| CBA | Composite Backdoor Attack |

| CCPA | California Consumer Privacy Act |

| CNN | Convolutional Neural Network |

| CoT | Chain-of-Thought |

| DAN | Do Anything Now |

| DFS | Depth-First Search |

| DNN | Deep Neural Network |

| DPO | Direct Preference Optimization |

| DP | Differential Privacy |

| FL | Federated Learning |

| GA | Genetic Algorithm |

| GDPR | General Data Protection Regulation |

| GPT | Generative Pre-trained Transformer |

| GRPO | Group Relative Policy Optimization |

| HIPAA | Health Insurance Portability and Accountability Act |

| ICL | In-Context Learning |

| KL | Kullback-Leibler Divergence |

| LAS | Leakage-Adjusted Simulatability |

| LM | Language Model |

| LLM | Large Language Model |

| Llama | Large Language Model Meta AI |

| LoRA | Low-Rank Adapter |

| LRM | Large Reasoning Model |

| MCTS | Monte-Carlo Tree Search |

| MIA | Membership Inference Attack |

| MDP | Masking-Differential Prompting |

| MLM | Masked Language Model |

| MLLM | Multimodal Large Language Model |

| MLRM | Multimodal Large Reasoning Model |

| MoE | Mixture-of-Experts |

| NLP | Natural Language Processing |

| OOD | Out Of Distribution |

| ORM | Outcome Reward Model |

| PI | Prompt Injection |

| PII | Personally Identifiable Information |

| PAIR | Prompt Automatic Iterative Refinement |

| PLM | pre-trained Language Model |

| PRM | Process Reward Model |

| QA | Question-Answering |

| RAG | Retrieval-Augmented Generation |

| RL | Reinforcement Learning |

| RLHF | Reinforcement Learning from Human Feedback |

| RLVR | Reinforcement Learning with Verifiable Reward |

| RoBERTa | Robustly optimized BERT approach |

| SCM | Structural Causal Model |

| SGD | Stochastic Gradient Descent |

| SOTA | State of the Art |

| TAG | Gradient Attack on Transformer-based Language Models |

| VR | Verifiable Reward |

| XLNet | Transformer-XL with autoregressive and autoencoding pre-training |

↑Contributing

Contributions are welcome! If you have new resources, tools, or insights to add, feel free to submit a pull request.

This repository follows the Awesome Manifesto guidelines.

↑License

© 2025 Tal Eliyahu. Licensed under the MIT License. See LICENSE.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-AI-Security

Similar Open Source Tools

Awesome-AI-Security

Awesome-AI-Security is a curated list of resources for AI security, including tools, research papers, articles, and tutorials. It aims to provide a comprehensive overview of the latest developments in securing AI systems and preventing vulnerabilities. The repository covers topics such as adversarial attacks, privacy protection, model robustness, and secure deployment of AI applications. Whether you are a researcher, developer, or security professional, this collection of resources will help you stay informed and up-to-date in the rapidly evolving field of AI security.

h4cker

This repository is a comprehensive collection of cybersecurity-related references, scripts, tools, code, and other resources. It is carefully curated and maintained by Omar Santos. The repository serves as a supplemental material provider to several books, video courses, and live training created by Omar Santos. It encompasses over 10,000 references that are instrumental for both offensive and defensive security professionals in honing their skills.

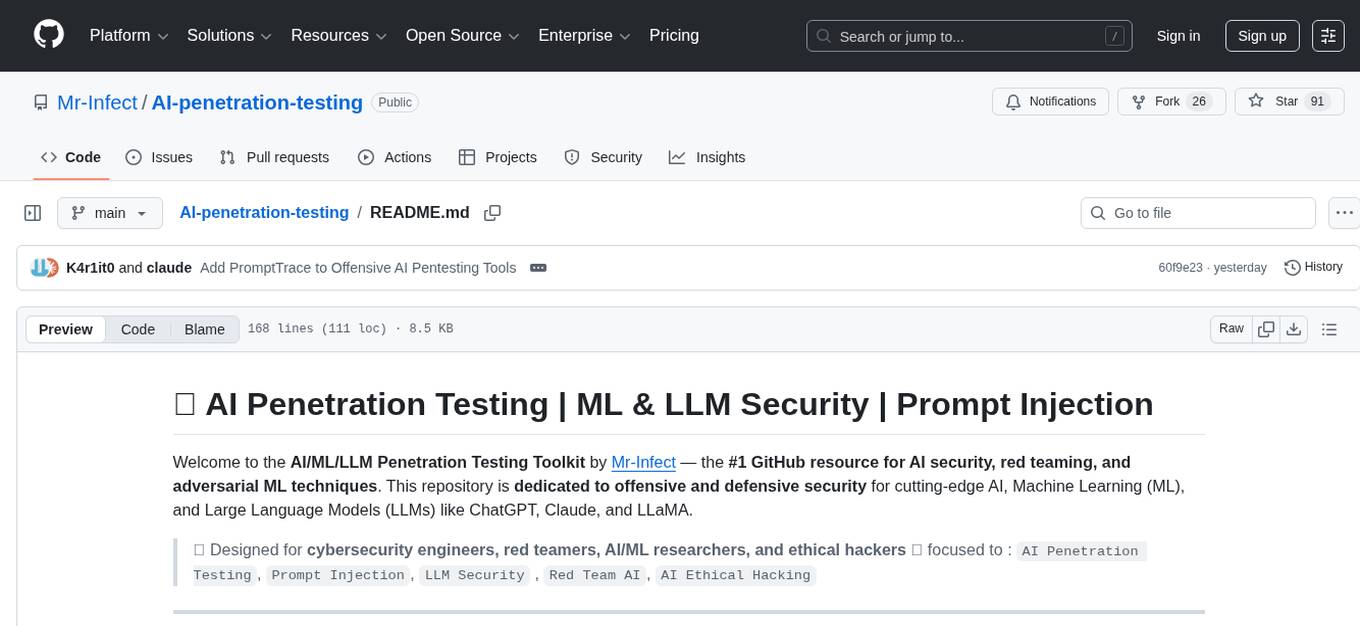

AI-penetration-testing

AI Penetration Testing is a tool designed to automate the process of identifying security vulnerabilities in computer systems using artificial intelligence algorithms. It helps security professionals to efficiently scan and analyze networks, applications, and devices for potential weaknesses and exploits. The tool combines machine learning techniques with traditional penetration testing methods to provide comprehensive security assessments and recommendations for remediation. With AI Penetration Testing, users can enhance the effectiveness and accuracy of their security testing efforts, enabling them to proactively protect their systems from cyber threats and attacks.

www-project-top-10-for-large-language-model-applications

The OWASP Top 10 for Large Language Model Applications is a standard awareness document for developers and web application security, providing practical, actionable, and concise security guidance for applications utilizing Large Language Model (LLM) technologies. The project aims to make application security visible and bridge the gap between general application security principles and the specific challenges posed by LLMs. It offers a comprehensive guide to navigate potential security risks in LLM applications, serving as a reference for both new and experienced developers and security professionals.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

Disciplined-AI-Software-Development

Disciplined AI Software Development is a comprehensive repository that provides guidelines and best practices for developing AI software in a disciplined manner. It covers topics such as project organization, code structure, documentation, testing, and deployment strategies to ensure the reliability, scalability, and maintainability of AI applications. The repository aims to help developers and teams navigate the complexities of AI development by offering practical advice and examples to follow.

awesome-ai-agent-papers

This repository contains a curated list of papers related to artificial intelligence agents. It includes research papers, articles, and resources covering various aspects of AI agents, such as reinforcement learning, multi-agent systems, natural language processing, and more. Whether you are a researcher, student, or practitioner in the field of AI, this collection of papers can serve as a valuable reference to stay updated with the latest advancements and trends in AI agent technologies.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

llm_aigc

The llm_aigc repository is a comprehensive resource for everything related to llm (Large Language Models) and aigc (AI Governance and Control). It provides detailed information, resources, and tools for individuals interested in understanding and working with large language models and AI governance and control. The repository covers a wide range of topics including model training, evaluation, deployment, ethics, and regulations in the AI field.

Awesome-LLM-Safety

Welcome to our Awesome-llm-safety repository! We've curated a collection of the latest, most comprehensive, and most valuable resources on large language model safety (llm-safety). But we don't stop there; included are also relevant talks, tutorials, conferences, news, and articles. Our repository is constantly updated to ensure you have the most current information at your fingertips.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

openvino_build_deploy

The OpenVINO Build and Deploy repository provides pre-built components and code samples to accelerate the development and deployment of production-grade AI applications across various industries. With the OpenVINO Toolkit from Intel, users can enhance the capabilities of both Intel and non-Intel hardware to meet specific needs. The repository includes AI reference kits, interactive demos, workshops, and step-by-step instructions for building AI applications. Additional resources such as Jupyter notebooks and a Medium blog are also available. The repository is maintained by the AI Evangelist team at Intel, who provide guidance on real-world use cases for the OpenVINO toolkit.

ai-engineering-hub

The AI Engineering Hub is a repository that provides in-depth tutorials on LLMs and RAGs, real-world AI agent applications, and examples to implement, adapt, and scale in projects. It caters to beginners, practitioners, and researchers, offering resources for all skill levels to experiment and succeed in AI engineering.

awesome-ai-apps

This repository is a comprehensive collection of practical examples, tutorials, and recipes for building powerful LLM-powered applications. From simple chatbots to advanced AI agents, these projects serve as a guide for developers working with various AI frameworks and tools. Powered by Nebius AI Studio - your one-stop platform for building and deploying AI applications.

For similar tasks

watchtower

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

LLM-PLSE-paper

LLM-PLSE-paper is a repository focused on the applications of Large Language Models (LLMs) in Programming Language and Software Engineering (PL/SE) domains. It covers a wide range of topics including bug detection, specification inference and verification, code generation, fuzzing and testing, code model and reasoning, code understanding, IDE technologies, prompting for reasoning tasks, and agent/tool usage and planning. The repository provides a comprehensive collection of research papers, benchmarks, empirical studies, and frameworks related to the capabilities of LLMs in various PL/SE tasks.

invariant

Invariant Analyzer is an open-source scanner designed for LLM-based AI agents to find bugs, vulnerabilities, and security threats. It scans agent execution traces to identify issues like looping behavior, data leaks, prompt injections, and unsafe code execution. The tool offers a library of built-in checkers, an expressive policy language, data flow analysis, real-time monitoring, and extensible architecture for custom checkers. It helps developers debug AI agents, scan for security violations, and prevent security issues and data breaches during runtime. The analyzer leverages deep contextual understanding and a purpose-built rule matching engine for security policy enforcement.

OpenRedTeaming

OpenRedTeaming is a repository focused on red teaming for generative models, specifically large language models (LLMs). The repository provides a comprehensive survey on potential attacks on GenAI and robust safeguards. It covers attack strategies, evaluation metrics, benchmarks, and defensive approaches. The repository also implements over 30 auto red teaming methods. It includes surveys, taxonomies, attack strategies, and risks related to LLMs. The goal is to understand vulnerabilities and develop defenses against adversarial attacks on large language models.

Awesome-LLM4Cybersecurity

The repository 'Awesome-LLM4Cybersecurity' provides a comprehensive overview of the applications of Large Language Models (LLMs) in cybersecurity. It includes a systematic literature review covering topics such as constructing cybersecurity-oriented domain LLMs, potential applications of LLMs in cybersecurity, and research directions in the field. The repository analyzes various benchmarks, datasets, and applications of LLMs in cybersecurity tasks like threat intelligence, fuzzing, vulnerabilities detection, insecure code generation, program repair, anomaly detection, and LLM-assisted attacks.

quark-engine

Quark Engine is an AI-powered tool designed for analyzing Android APK files. It focuses on enhancing the detection process for auto-suggestion, enabling users to create detection workflows without coding. The tool offers an intuitive drag-and-drop interface for workflow adjustments and updates. Quark Agent, the core component, generates Quark Script code based on natural language input and feedback. The project is committed to providing a user-friendly experience for designing detection workflows through textual and visual methods. Various features are still under development and will be rolled out gradually.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

CodeAsk

CodeAsk is a code analysis tool designed to tackle complex issues such as code that seems to self-replicate, cryptic comments left by predecessors, messy and unclear code, and long-lasting temporary solutions. It offers intelligent code organization and analysis, security vulnerability detection, code quality assessment, and other interesting prompts to help users understand and work with legacy code more efficiently. The tool aims to translate 'legacy code mountains' into understandable language, creating an illusion of comprehension and facilitating knowledge transfer to new team members.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

)

)