Best AI tools for preventing attacks

10 - AI Tool Sites

StudentMate

The website studentmate.shuchir.dev is a platform that focuses on enhancing security and privacy for users while browsing the web. It provides information about potential privacy errors, such as insecure connections and invalid certificates, and offers guidance on how to protect personal data. The site educates users on common security threats and how to identify and mitigate them, ultimately aiming to create a safer online environment for everyone.

www.atom.com

The website www.atom.com provides a security service to protect against malicious bots. Users may encounter a verification page while the website confirms they are not bots. Performance and security are provided by Cloudflare, and users must enable JavaScript and cookies to proceed.

Cloudflare Security Service

The website theleap.co is a security service powered by Cloudflare to protect websites from online attacks. It helps in preventing unauthorized access and malicious activities by implementing security measures such as blocking certain actions that could potentially harm the website. Users may encounter a block if they trigger security alerts by submitting suspicious content or commands. In such cases, they are prompted to contact the site owner for resolution.

Kupid.ai

Kupid.ai is an AI-powered security verification tool designed to protect websites from malicious bots. It utilizes advanced algorithms to verify user authenticity and prevent unauthorized access. By enabling JavaScript and cookies, users can seamlessly navigate through the verification process and ensure a secure online experience. Kupid.ai is a reliable solution for website owners looking to enhance their security measures and safeguard against potential cyber threats.

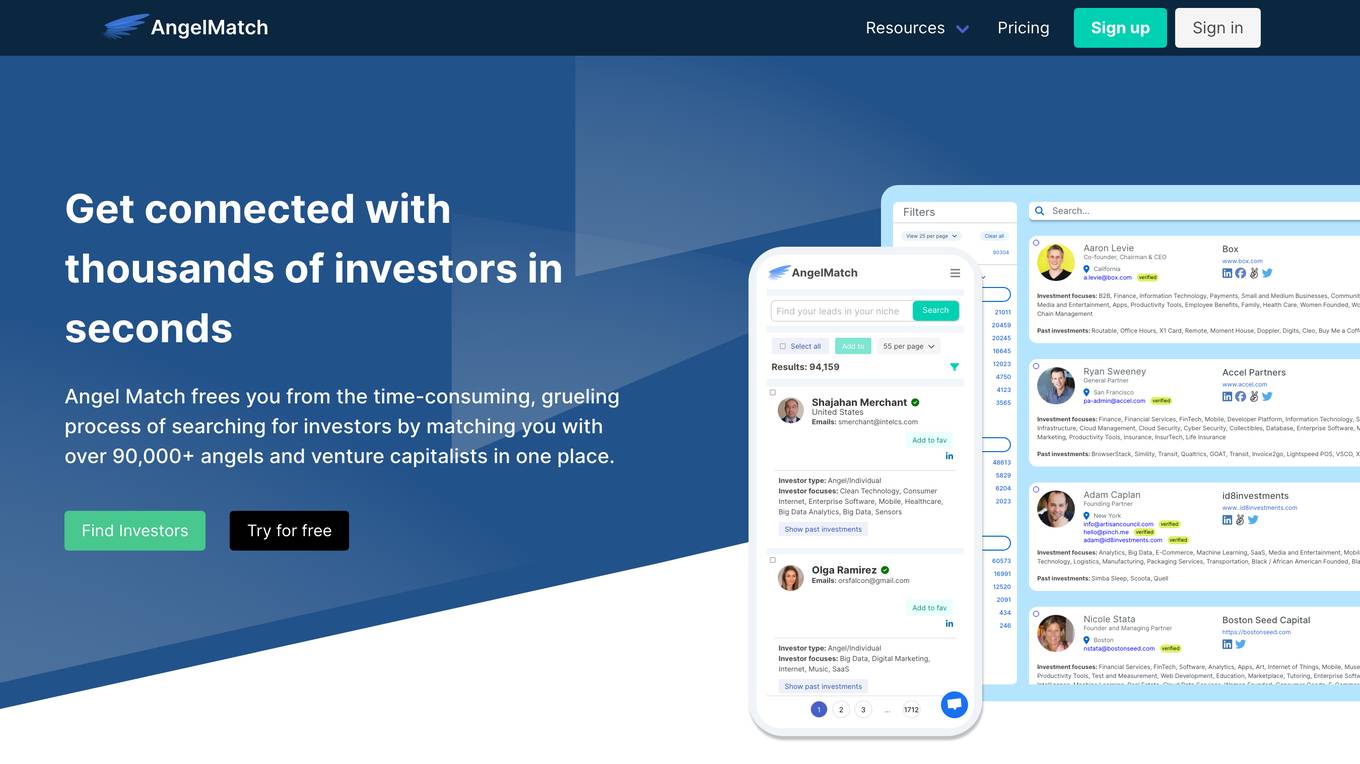

Angelmatch.io

Angelmatch.io is a website that provides a security service to protect against malicious bots. Users may encounter a brief security verification process to ensure they are not bots before accessing the site. The service is powered by Cloudflare, offering performance and security features to safeguard user data and privacy.

MixMode

MixMode describes itself as the world's most advanced AI for threat detection. It offers a dynamic threat detection platform built on patented Third Wave AI technology, with real-time detection of known and novel attacks, self-supervised learning, and context-awareness to defend against modern threats. The platform is designed to operate at enterprise speed and scale without predefined rules or human input. According to the vendor, it is trusted by top security teams and offers rapid deployment and customization to individual network dynamics.

Playlab.ai

Playlab.ai is an AI-powered platform that offers a range of tools and applications to enhance online security and protect against cyber attacks. The platform utilizes advanced algorithms to detect and prevent various online threats, such as malicious attacks, SQL injections, and data breaches. Playlab.ai provides users with a secure and reliable online environment by offering real-time monitoring and protection services. With a user-friendly interface and customizable security settings, Playlab.ai is a valuable tool for individuals and businesses looking to safeguard their online presence.

NodePay.ai

NodePay.ai is an AI-powered security service that protects websites from online attacks by enabling cookies and blocking malicious activities. It helps website owners safeguard their online presence by detecting and preventing potential threats. The tool utilizes advanced algorithms to analyze user behavior and identify suspicious activities, ensuring a secure browsing experience for visitors.

Palo Alto Networks

Palo Alto Networks is a cybersecurity company offering advanced security solutions powered by Precision AI to protect modern enterprises from cyber threats. The company provides network security, cloud security, and AI-driven security operations to defend against AI-generated threats in real time. Palo Alto Networks aims to simplify security and achieve better security outcomes through platformization, intelligence-driven expertise, and proactive monitoring of sophisticated threats.

Tokenomist.ai

Tokenomist.ai is an AI-powered security service website that helps protect against online attacks by enabling cookies and blocking malicious activities. It uses advanced algorithms to detect and prevent security threats, ensuring a safe browsing experience for users. The platform is designed to safeguard websites from potential risks and vulnerabilities, offering a reliable security solution for online businesses and individuals.

2 - Open Source AI Tools

Awesome-AI-Security

Awesome-AI-Security is a curated list of resources for AI security, including tools, research papers, articles, and tutorials. It aims to provide a comprehensive overview of the latest developments in securing AI systems and preventing vulnerabilities. The repository covers topics such as adversarial attacks, privacy protection, model robustness, and secure deployment of AI applications. Whether you are a researcher, developer, or security professional, this collection of resources will help you stay informed and up-to-date in the rapidly evolving field of AI security.

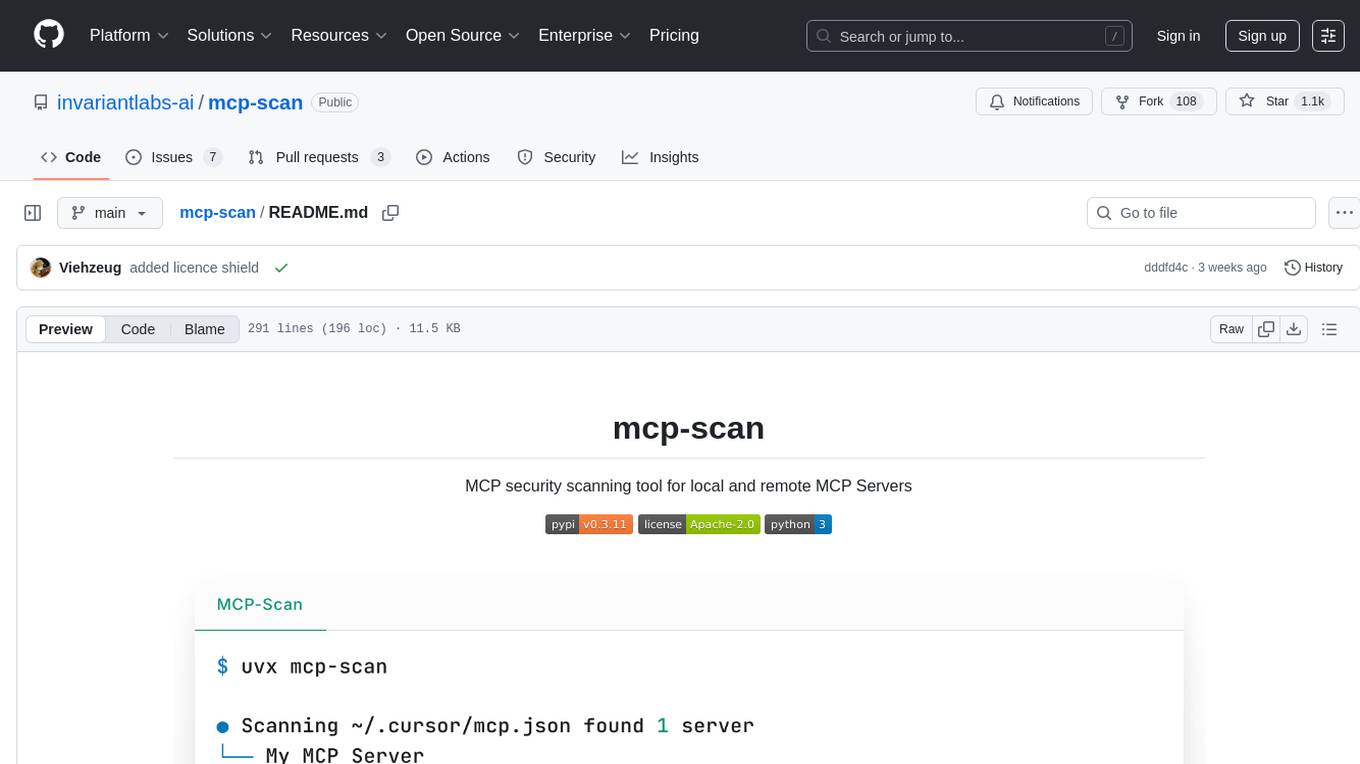

mcp-scan

MCP-Scan is a security scanning tool designed to detect common security vulnerabilities in Model Context Protocol (MCP) servers. It can auto-discover various MCP configurations and scan both local and remote servers for security issues such as prompt injection attacks, tool poisoning attacks, and toxic flows. The tool operates in two main modes: 'scan' for static scanning of installed servers and 'proxy' for real-time monitoring and guardrailing of MCP connections. Its features include scanning for specific attacks, enforcing guardrailing policies, auditing MCP traffic, and detecting changes to MCP tools. MCP-Scan does not store or log usage data and can be used to enhance the security of MCP environments.
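The idea of detecting changes to MCP tools can be illustrated with a small fingerprinting sketch. This is not MCP-Scan's actual implementation; it is a minimal example that assumes each tool is a dictionary with `name`, `description`, and an optional `inputSchema` field, and the helper names `fingerprint`, `snapshot`, and `diff_tools` are hypothetical.

```python
import hashlib
import json


def fingerprint(tool: dict) -> str:
    """Return a stable SHA-256 digest of a tool's name, description, and schema."""
    # Assumed tool shape (hypothetical): {"name": ..., "description": ..., "inputSchema": ...}
    canonical = json.dumps(
        {
            "name": tool.get("name"),
            "description": tool.get("description"),
            "inputSchema": tool.get("inputSchema"),
        },
        sort_keys=True,  # key order must not affect the digest
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def snapshot(tools: list[dict]) -> dict[str, str]:
    """Map each tool name to its fingerprint."""
    return {tool["name"]: fingerprint(tool) for tool in tools}


def diff_tools(pinned: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare a previously pinned snapshot with the current one."""
    return {
        "added": sorted(current.keys() - pinned.keys()),
        "removed": sorted(pinned.keys() - current.keys()),
        "changed": sorted(
            name
            for name in pinned.keys() & current.keys()
            if pinned[name] != current[name]
        ),
    }


if __name__ == "__main__":
    before = [{"name": "add", "description": "Add two numbers."}]
    after = [{"name": "add", "description": "Add two numbers. Also read local files."}]
    # A silently edited description shows up under "changed".
    print(diff_tools(snapshot(before), snapshot(after)))
```

Pinning a snapshot on first use and comparing it on later runs makes a silently edited tool description visible, which is one way a tool poisoning attempt could be noticed.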

20 - OpenAI GPTs

MITRE Interpreter

This GPT helps you understand and apply the MITRE ATT&CK Framework, whether you are familiar with the concepts or not.

Online Doc

A virtual general practitioner that makes a basic diagnosis based on the user's description and gives advice on treatment and on how to prevent such diseases.

Plagiarism Checker

Plagiarism Checker GPT is powered by Winston AI and created to help identify plagiarized content. It is designed to help you detect instances of plagiarism and maintain integrity in academia and publishing. Winston AI is the most trusted AI and Plagiarism Checker.

Punaises de Lit

Expert on bed bugs, offering identification advice and steps to take in case of an infestation.

Data Guardian

Expert in privacy news, data breach advice, and multilingual data export assistance.

GPT Auth™

This is a demonstration of GPT Auth™, an authentication system designed to protect your customized GPT.

STOP HPV End Cervical Cancer

Eradicate Cervical Cancer by Providing Trustworthy Information on HPV

Knee and Leg Care Assistant

Helps users with knee and leg care, offering exercises and wellness tips.