repomix

📦 Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. Perfect for when you need to feed your codebase to Large Language Models (LLMs) or other AI tools like Claude, ChatGPT, DeepSeek, Perplexity, Gemini, Gemma, Llama, Grok, and more.

Stars: 21883

Repomix formats your codebase so that Large Language Models (LLMs) and AI tools such as Claude, ChatGPT, and Gemini can process it easily. Its features include AI-oriented output, token counting, single-command usage, customization options, Git awareness, and Secretlint-based security checks that detect sensitive information. You can pack an entire repository or select specific directories and files with glob patterns, and remote Git repositories can be processed as well. Output is generated in XML, Markdown, JSON, or plain text, with options for including or excluding files and removing comments. Repomix also supports a global configuration file and custom instructions for AI context.

README:

Use Repomix online! 👉 repomix.com

Need discussion? Join us on Discord!

Share your experience and tips

Stay updated on new features

Get help with configuration and usage

We're honored! Repomix has been nominated for the Powered by AI category at the JSNation Open Source Awards 2025.

This wouldn't have been possible without all of you using and supporting Repomix. Thank you!

- AI-Optimized: Formats your codebase in a way that's easy for AI to understand and process.

- Token Counting: Provides token counts for each file and the entire repository, useful for LLM context limits.

- Simple to Use: You need just one command to pack your entire repository.

- Customizable: Easily configure what to include or exclude.

- Git-Aware: Automatically respects your .gitignore, .ignore, and .repomixignore files.

- Security-Focused: Incorporates Secretlint for robust security checks to detect and prevent inclusion of sensitive information.

- Code Compression: The --compress option uses Tree-sitter to extract key code elements, reducing token count while preserving structure.

You can try Repomix instantly in your project directory without installation:

npx repomix@latest

Or install globally for repeated use:

# Install using npm

npm install -g repomix

# Alternatively using yarn

yarn global add repomix

# Alternatively using bun

bun add -g repomix

# Alternatively using Homebrew (macOS/Linux)

brew install repomix

# Then run in any project directory

repomix

That's it! Repomix will generate a repomix-output.xml file in your current directory, containing your entire repository in an AI-friendly format.

You can then send this file to an AI assistant with a prompt like:

This file contains all the files in the repository combined into one.

I want to refactor the code, so please review it first.

When you propose specific changes, the AI might be able to generate code accordingly. With features like Claude's Artifacts, you could potentially output multiple files, allowing for the generation of multiple interdependent pieces of code.

Happy coding! 🚀

Want to try it quickly? Visit the official website at repomix.com. Simply enter your repository name, fill in any optional details, and click the Pack button to see your generated output.

The website offers several convenient features:

- Customizable output format (XML, Markdown, or Plain Text)

- Instant token count estimation

- Much more!

Get instant access to Repomix directly from any GitHub repository! Our Chrome extension adds a convenient "Repomix" button to GitHub repository pages.

- Chrome Extension: Repomix - Chrome Web Store

- Firefox Add-on: Repomix - Firefox Add-ons

- One-click access to Repomix for any GitHub repository

- More exciting features coming soon!

A community-maintained VSCode extension called Repomix Runner (created by massdo) lets you run Repomix right inside your editor with just a few clicks. Run it on any folder, manage outputs seamlessly, and control everything through VSCode's intuitive interface.

Want your output as a file or just the content? Need automatic cleanup? This extension has you covered. Plus, it works smoothly with your existing repomix.config.json.

Try it now on the VSCode Marketplace! Source code is available on GitHub.

If you're using Python, you might want to check out Gitingest, which is better suited to the Python ecosystem and data science workflows:

https://github.com/cyclotruc/gitingest

To pack your entire repository:

repomix

To pack a specific directory:

repomix path/to/directory

To pack specific files or directories using glob patterns:

repomix --include "src/**/*.ts,**/*.md"

To exclude specific files or directories:

repomix --ignore "**/*.log,tmp/"

To pack a remote repository:

repomix --remote https://github.com/yamadashy/repomix

# You can also use GitHub shorthand:

repomix --remote yamadashy/repomix

# You can specify the branch name, tag, or commit hash:

repomix --remote https://github.com/yamadashy/repomix --remote-branch main

# Or use a specific commit hash:

repomix --remote https://github.com/yamadashy/repomix --remote-branch 935b695

# Another convenient way is specifying the branch's URL

repomix --remote https://github.com/yamadashy/repomix/tree/main

# Commit's URL is also supported

repomix --remote https://github.com/yamadashy/repomix/commit/836abcd7335137228ad77feb28655d85712680f1
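The accepted forms of the --remote value shown above (shorthand, plain URL, branch URL, commit URL) can be summarized in a small Python sketch. This is only an illustration of the input shapes, not Repomix's own parser: `parse_remote` and `RemoteSpec` are hypothetical names, and letting a ref embedded in the URL win over --remote-branch is an assumption.

```python
import re
from typing import NamedTuple, Optional


class RemoteSpec(NamedTuple):
    repo_url: str
    ref: Optional[str]  # branch, tag, or commit hash; None means the default branch


# "user/repo" shorthand, as in: repomix --remote yamadashy/repomix
_SHORTHAND = re.compile(r"^[\w.-]+/[\w.-]+$")

# Full GitHub URL, optionally followed by /tree/<ref> or /commit/<hash>
_GITHUB_URL = re.compile(
    r"^https://github\.com/(?P<owner>[\w.-]+)/(?P<repo>[\w.-]+?)(?:\.git)?"
    r"(?:/(?:tree|commit)/(?P<ref>.+))?$"
)


def parse_remote(value: str, remote_branch: Optional[str] = None) -> RemoteSpec:
    """Normalize a --remote value into a repository URL and an optional ref."""
    value = value.strip().rstrip("/")
    if _SHORTHAND.match(value):
        return RemoteSpec(f"https://github.com/{value}", remote_branch)
    match = _GITHUB_URL.match(value)
    if match is None:
        raise ValueError(f"Unrecognized remote: {value!r}")
    # Assumption: a ref in the URL takes priority over --remote-branch.
    ref = match.group("ref") or remote_branch
    return RemoteSpec(f"https://github.com/{match['owner']}/{match['repo']}", ref)


if __name__ == "__main__":
    print(parse_remote("yamadashy/repomix"))
    print(parse_remote("https://github.com/yamadashy/repomix/tree/main"))
```

Branch names that contain slashes are kept whole by this sketch, since everything after /tree/ is treated as the ref.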

To pack files from a file list (pipe via stdin):

# Using find command

find src -name "*.ts" -type f | repomix --stdin

# Using git to get tracked files

git ls-files "*.ts" | repomix --stdin

# Using grep to find files containing specific content

grep -l "TODO" **/*.ts | repomix --stdin

# Using ripgrep to find files with specific content

rg -l "TODO|FIXME" --type ts | repomix --stdin

# Using ripgrep (rg) to find files

rg --files --type ts | repomix --stdin

# Using sharkdp/fd to find files

fd -e ts | repomix --stdin

# Using fzf to select from all files

fzf -m | repomix --stdin

# Interactive file selection with fzf

find . -name "*.ts" -type f | fzf -m | repomix --stdin

# Using ls with glob patterns

ls src/**/*.ts | repomix --stdin

# From a file containing file paths

cat file-list.txt | repomix --stdin

# Direct input with echo

echo -e "src/index.ts\nsrc/utils.ts" | repomix --stdin

The --stdin option allows you to pipe a list of file paths to Repomix, giving you ultimate flexibility in selecting which files to pack.

When using --stdin, the specified files are effectively added to the include patterns. This means that the normal include and ignore behavior still applies - files specified via stdin will still be excluded if they match ignore patterns.

[!NOTE] When using --stdin, file paths can be relative or absolute, and Repomix will automatically handle path resolution and deduplication.
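To make "path resolution and deduplication" concrete, here is a minimal Python sketch of what such a step could look like. It is not Repomix's implementation: `normalize_paths` is a hypothetical helper, and it uses `posixpath` so the behavior is the same on every platform.

```python
import posixpath
from typing import Iterable, List


def normalize_paths(lines: Iterable[str], cwd: str) -> List[str]:
    """Resolve newline-separated paths against cwd and drop duplicates.

    Relative and absolute spellings of the same file collapse to one entry;
    the first occurrence decides the position in the result.
    """
    seen = set()
    result = []
    for line in lines:
        raw = line.strip()
        if not raw:
            continue  # ignore blank lines in the piped list
        # posixpath.join keeps `raw` unchanged when it is already absolute
        absolute = posixpath.normpath(posixpath.join(cwd, raw))
        if absolute not in seen:
            seen.add(absolute)
            result.append(absolute)
    return result


if __name__ == "__main__":
    piped = ["src/index.ts", "./src/index.ts", "/repo/src/index.ts", "src/utils.ts"]
    for path in normalize_paths(piped, cwd="/repo"):
        print(path)
```

A list produced this way can be written one path per line and piped to repomix --stdin as in the shell examples above.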

To include git logs in the output:

# Include git logs with default count (50 commits)

repomix --include-logs

# Include git logs with specific commit count

repomix --include-logs --include-logs-count 10

# Combine with diffs for comprehensive git context

repomix --include-logs --include-diffs

The git logs include commit dates, messages, and file paths for each commit, providing valuable context for AI analysis of code evolution and development patterns.

To compress the output:

repomix --compress

# You can also use it with remote repositories:

repomix --remote yamadashy/repomix --compress

To initialize a new configuration file (repomix.config.json):

repomix --init

Once you have generated the packed file, you can use it with Generative AI tools like ChatGPT, DeepSeek, Perplexity, Gemini, Gemma, Llama, Grok, and more.

You can also run Repomix using Docker.

This is useful if you want to run Repomix in an isolated environment or prefer using containers.

Basic usage (current directory):

docker run -v .:/app -it --rm ghcr.io/yamadashy/repomix

To pack a specific directory:

docker run -v .:/app -it --rm ghcr.io/yamadashy/repomix path/to/directory

To process a remote repository and write the result to an output directory:

docker run -v ./output:/app -it --rm ghcr.io/yamadashy/repomix --remote https://github.com/yamadashy/repomix

Once you have generated the packed file with Repomix, you can use it with AI tools like ChatGPT, DeepSeek, Perplexity, Gemini, Gemma, Llama, Grok, and more. Here are some example prompts to get you started:

For a comprehensive code review and refactoring suggestions:

This file contains my entire codebase. Please review the overall structure and suggest any improvements or refactoring opportunities, focusing on maintainability and scalability.

To generate project documentation:

Based on the codebase in this file, please generate a detailed README.md that includes an overview of the project, its main features, setup instructions, and usage examples.

For generating test cases:

Analyze the code in this file and suggest a comprehensive set of unit tests for the main functions and classes. Include edge cases and potential error scenarios.

Evaluate code quality and adherence to best practices:

Review the codebase for adherence to coding best practices and industry standards. Identify areas where the code could be improved in terms of readability, maintainability, and efficiency. Suggest specific changes to align the code with best practices.

To get a high-level understanding of a library:

This file contains the entire codebase of the library. Please provide a comprehensive overview of the library, including its main purpose, key features, and overall architecture.

Feel free to modify these prompts based on your specific needs and the capabilities of the AI tool you're using.

Check out our community discussion where users share:

- Which AI tools they're using with Repomix

- Effective prompts they've discovered

- How Repomix has helped them

- Tips and tricks for getting the most out of AI code analysis

Feel free to join the discussion and share your own experiences! Your insights could help others make better use of Repomix.

Repomix generates a single file with clear separators between different parts of your codebase.

To enhance AI comprehension, the output file begins with an AI-oriented explanation, making it easier for AI models to understand the context and structure of the packed repository.

The XML format structures the content in a hierarchical manner:

This file is a merged representation of the entire codebase, combining all repository files into a single document.

<file_summary>

(Metadata and usage AI instructions)

</file_summary>

<directory_structure>

src/

cli/

cliOutput.ts

index.ts

(...remaining directories)

</directory_structure>

<files>

<file path="src/index.js">

// File contents here

</file>

(...remaining files)

</files>

<instruction>

(Custom instructions from `output.instructionFilePath`)

</instruction>

For those interested in the potential of XML tags in AI contexts:

https://docs.anthropic.com/en/docs/build-with-claude/prompt-engineering/use-xml-tags

When your prompts involve multiple components like context, instructions, and examples, XML tags can be a game-changer. They help Claude parse your prompts more accurately, leading to higher-quality outputs.

This means that the XML output from Repomix is not just a different format, but potentially a more effective way to feed your codebase into AI systems for analysis, code review, or other tasks.
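The tag structure also makes the packed file easy to post-process. The following Python sketch pulls individual files back out of the `<file path="...">` blocks shown above. It is an illustration under assumptions: `extract_files` is a hypothetical helper, the sample mirrors the layout in this README, and a regular expression is used instead of an XML parser because packed source code may contain characters that are not valid XML (see --parsable-style).

```python
import re
from typing import Dict

# One <file path="..."> ... </file> block; DOTALL lets the body span lines.
_FILE_BLOCK = re.compile(r'<file path="([^"]+)">\n(.*?)\n</file>', re.DOTALL)

# Sample input following the XML layout described above.
SAMPLE_XML = "\n".join([
    "<files>",
    '<file path="src/index.js">',
    "// File contents here",
    "</file>",
    '<file path="src/utils.js">',
    "export const add = (a, b) => a + b;",
    "</file>",
    "</files>",
])


def extract_files(packed: str) -> Dict[str, str]:
    """Map each file path to its content, in document order."""
    return {path: body for path, body in _FILE_BLOCK.findall(packed)}


if __name__ == "__main__":
    for path, body in extract_files(SAMPLE_XML).items():
        print(f"{path}: {len(body)} characters")
```

The pattern stops at the first closing tag, so a packed file whose own content contains the literal text `</file>` would be cut short; the sketch does not handle that case.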

To generate output in Markdown format, use the --style markdown option:

repomix --style markdown

The Markdown format structures the content in a hierarchical manner:

This file is a merged representation of the entire codebase, combining all repository files into a single document.

# File Summary

(Metadata and usage AI instructions)

# Repository Structure

```

src/

cli/

cliOutput.ts

index.ts

```

(...remaining directories)

# Repository Files

## File: src/index.js

```

// File contents here

```

(...remaining files)

# Instruction

(Custom instructions from `output.instructionFilePath`)

This format provides a clean, readable structure that is both human-friendly and easily parseable by AI systems.

To generate output in JSON format, use the --style json option:

repomix --style json

The JSON format structures the content as a hierarchical JSON object with camelCase property names:

{

"fileSummary": {

"generationHeader": "This file is a merged representation of the entire codebase, combined into a single document by Repomix.",

"purpose": "This file contains a packed representation of the entire repository's contents...",

"fileFormat": "The content is organized as follows...",

"usageGuidelines": "- This file should be treated as read-only...",

"notes": "- Some files may have been excluded based on .gitignore, .ignore, and .repomixignore rules..."

},

"userProvidedHeader": "Custom header text if specified",

"directoryStructure": "src/\n cli/\n cliOutput.ts\n index.ts\n config/\n configLoader.ts",

"files": {

"src/index.js": "// File contents here",

"src/utils.js": "// File contents here"

},

"instruction": "Custom instructions from instructionFilePath"

}

This format is ideal for:

- Programmatic processing: Easy to parse and manipulate with JSON libraries

- API integration: Direct consumption by web services and applications

- AI tool compatibility: Structured format for machine learning and AI systems

- Data analysis: Straightforward extraction of specific information using tools like jq
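For the programmatic-processing case, a minimal Python sketch using only the standard library might look like the following. The property names (`files`, `directoryStructure`) come from the JSON structure shown above; the helper names and the sample content are made up for illustration.

```python
import json
from typing import Dict, List

# Sample document using the property names from the JSON format above.
SAMPLE_JSON = json.dumps({
    "directoryStructure": "src/\n  index.js\n  utils.js",
    "files": {
        "src/index.js": "// File contents here",
        "src/utils.js": "function add(a, b) { return a + b; }",
        "README.md": "# Demo",
    },
})


def load_files(packed_json: str) -> Dict[str, str]:
    """Return the path -> content mapping stored under "files"."""
    return json.loads(packed_json)["files"]


def files_with_extension(files: Dict[str, str], ext: str) -> List[str]:
    """List paths ending in the given extension, sorted alphabetically."""
    return sorted(path for path in files if path.endswith(ext))


def files_containing(files: Dict[str, str], needle: str) -> List[str]:
    """List paths whose content contains the given text."""
    return sorted(path for path, body in files.items() if needle in body)


def character_counts(files: Dict[str, str]) -> Dict[str, int]:
    """Map each path to the length of its content in characters."""
    return {path: len(body) for path, body in files.items()}


if __name__ == "__main__":
    packed = load_files(SAMPLE_JSON)
    print(files_with_extension(packed, ".js"))
    print(files_containing(packed, "function"))
    print(character_counts(packed))
```

In practice the input would be read from repomix-output.json rather than from the inline sample.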

The JSON format makes it easy to extract specific information programmatically:

# List all file paths

cat repomix-output.json | jq -r '.files | keys[]'

# Count total number of files

cat repomix-output.json | jq '.files | keys | length'

# Extract specific file content

cat repomix-output.json | jq -r '.files["README.md"]'

cat repomix-output.json | jq -r '.files["src/index.js"]'

# Find files by extension

cat repomix-output.json | jq -r '.files | keys[] | select(endswith(".ts"))'

# Get files containing specific text

cat repomix-output.json | jq -r '.files | to_entries[] | select(.value | contains("function")) | .key'

# Extract directory structure

cat repomix-output.json | jq -r '.directoryStructure'

# Get file summary information

cat repomix-output.json | jq '.fileSummary.purpose'

cat repomix-output.json | jq -r '.fileSummary.generationHeader'

# Extract user-provided header (if exists)

cat repomix-output.json | jq -r '.userProvidedHeader // "No header provided"'

# Create a file list with sizes

cat repomix-output.json | jq -r '.files | to_entries[] | "\(.key): \(.value | length) characters"'

To generate output in plain text format, use the --style plain option:

repomix --style plain

This file is a merged representation of the entire codebase, combining all repository files into a single document.

================================================================

File Summary

================================================================

(Metadata and usage AI instructions)

================================================================

Directory Structure

================================================================

src/

cli/

cliOutput.ts

index.ts

config/

configLoader.ts

(...remaining directories)

================================================================

Files

================================================================

================

File: src/index.js

================

// File contents here

================

File: src/utils.js

================

// File contents here

(...remaining files)

================================================================

Instruction

================================================================

(Custom instructions from `output.instructionFilePath`)

- -v, --version: Show version information and exit

- --verbose: Enable detailed debug logging (shows file processing, token counts, and configuration details)

- --quiet: Suppress all console output except errors (useful for scripting)

- --stdout: Write packed output directly to stdout instead of a file (suppresses all logging)

- --stdin: Read file paths from stdin, one per line (specified files are processed directly)

- --copy: Copy the generated output to system clipboard after processing

- --token-count-tree [threshold]: Show file tree with token counts; optional threshold to show only files with ≥N tokens (e.g., --token-count-tree 100)

- --top-files-len <number>: Number of largest files to show in summary (default: 5, e.g., --top-files-len 20)

- -o, --output <file>: Output file path (default: repomix-output.xml, use "-" for stdout)

- --style <style>: Output format: xml, markdown, json, or plain (default: xml)

- --parsable-style: Escape special characters to ensure valid XML/Markdown (needed when output contains code that breaks formatting)

- --compress: Extract essential code structure (classes, functions, interfaces) using Tree-sitter parsing

- --output-show-line-numbers: Prefix each line with its line number in the output

- --no-file-summary: Omit the file summary section from output

- --no-directory-structure: Omit the directory tree visualization from output

- --no-files: Generate metadata only without file contents (useful for repository analysis)

- --remove-comments: Strip all code comments before packing

- --remove-empty-lines: Remove blank lines from all files

- --truncate-base64: Truncate long base64 data strings to reduce output size

- --header-text <text>: Custom text to include at the beginning of the output

- --instruction-file-path <path>: Path to file containing custom instructions to include in output

- --split-output <size>: Split output into multiple numbered files (e.g., repomix-output.1.xml, repomix-output.2.xml); size like 500kb, 2mb, or 1.5mb

- --include-empty-directories: Include folders with no files in directory structure

- --include-full-directory-structure: Show complete directory tree in output, including files not matched by --include patterns

- --no-git-sort-by-changes: Don't sort files by git change frequency (default: most changed files first)

- --include-diffs: Add git diff section showing working tree and staged changes

- --include-logs: Add git commit history with messages and changed files

- --include-logs-count <count>: Number of recent commits to include with --include-logs (default: 50)

- --include <patterns>: Include only files matching these glob patterns (comma-separated, e.g., "src/**/*.js,*.md")

- -i, --ignore <patterns>: Additional patterns to exclude (comma-separated, e.g., "*.test.js,docs/**")

- --no-gitignore: Don't use .gitignore rules for filtering files

- --no-dot-ignore: Don't use .ignore rules for filtering files

- --no-default-patterns: Don't apply built-in ignore patterns (node_modules, .git, build dirs, etc.)

- --remote <url>: Clone and pack a remote repository (GitHub URL or user/repo format)

- --remote-branch <name>: Specific branch, tag, or commit to use (default: repository's default branch)

- -c, --config <path>: Use custom config file instead of repomix.config.json

- --init: Create a new repomix.config.json file with defaults

- --global: With --init, create config in home directory instead of current directory

- --no-security-check: Skip scanning for sensitive data like API keys and passwords

- --token-count-encoding <encoding>: Tokenizer model for counting: o200k_base (GPT-4o), cl100k_base (GPT-3.5/4), etc. (default: o200k_base)

- --mcp: Run as Model Context Protocol server for AI tool integration

- --skill-generate [name]: Generate Claude Agent Skills format output to the .claude/skills/<name>/ directory (name auto-generated if omitted)

- --skill-output <path>: Specify skill output directory path directly (skips location prompt)

- -f, --force: Skip all confirmation prompts (e.g., skill directory overwrite)

# Basic usage

repomix

# Custom output

repomix -o output.xml --style xml

# Output to stdout

repomix --stdout > custom-output.txt

# Send output to stdout, then pipe into another command (for example, simonw/llm)

repomix --stdout | llm "Please explain what this code does."

# Custom output with compression

repomix --compress

# Process specific files

repomix --include "src/**/*.ts" --ignore "**/*.test.ts"

# Split output into multiple files (max size per part)

repomix --split-output 20mb

# Remote repository with branch

repomix --remote https://github.com/user/repo/tree/main

# Remote repository with commit

repomix --remote https://github.com/user/repo/commit/836abcd7335137228ad77feb28655d85712680f1

# Remote repository with shorthand

repomix --remote user/repo

To update a globally installed Repomix:

# Using npm

npm update -g repomix

# Using yarn

yarn global upgrade repomix

# Using bun

bun update -g repomix

Using npx repomix is generally more convenient as it always uses the latest version.

Repomix supports processing remote Git repositories without the need for manual cloning. This feature allows you to quickly analyze any public Git repository with a single command.

To process a remote repository, use the --remote option followed by the repository URL:

repomix --remote https://github.com/yamadashy/repomix

You can also use GitHub's shorthand format:

repomix --remote yamadashy/repomix

You can specify the branch name, tag, or commit hash:

# Using --remote-branch option

repomix --remote https://github.com/yamadashy/repomix --remote-branch main

# Using branch's URL

repomix --remote https://github.com/yamadashy/repomix/tree/main

Or use a specific commit hash:

# Using --remote-branch option

repomix --remote https://github.com/yamadashy/repomix --remote-branch 935b695

# Using commit's URL

repomix --remote https://github.com/yamadashy/repomix/commit/836abcd7335137228ad77feb28655d85712680f1

The --compress option utilizes Tree-sitter to perform intelligent code extraction, focusing on essential function and class signatures while removing implementation details. This can help reduce token count while retaining important structural information.

repomix --compress

For example, this code:

import { ShoppingItem } from './shopping-item';

/**

* Calculate the total price of shopping items

*/

const calculateTotal = (

items: ShoppingItem[]

) => {

let total = 0;

for (const item of items) {

total += item.price * item.quantity;

}

return total;

}

// Shopping item interface

interface Item {

name: string;

price: number;

quantity: number;

}

Will be compressed to:

import { ShoppingItem } from './shopping-item';

⋮----

/**

* Calculate the total price of shopping items

*/

const calculateTotal = (

items: ShoppingItem[]

) => {

⋮----

// Shopping item interface

interface Item {

name: string;

price: number;

quantity: number;

}

[!NOTE] This is an experimental feature that we'll be actively improving based on user feedback and real-world usage.
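The idea behind compression, keeping signatures and dropping bodies, can be illustrated with a short Python sketch. Note the substitution: this uses Python's standard `ast` module on Python source as a stand-in, not Tree-sitter, and it is not how Repomix implements --compress. `extract_signatures` and the sample source are hypothetical.

```python
import ast
from typing import List

SAMPLE_SOURCE = '''import os

def add(a, b):
    return a + b

class Cart:
    def total(self):
        return 0
'''


def extract_signatures(source: str) -> List[str]:
    """Return the header lines of every function and class, in file order.

    For each definition, the lines from its `def`/`class` keyword up to
    (but not including) its first body statement are kept; bodies are dropped.
    """
    tree = ast.parse(source)
    lines = source.splitlines()
    definitions = [
        node for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef))
    ]
    signatures: List[str] = []
    for node in sorted(definitions, key=lambda n: n.lineno):
        first_body_line = node.body[0].lineno
        # A one-line definition shares its line with the body; keep that line.
        end = max(node.lineno, first_body_line - 1)
        signatures.extend(line.rstrip() for line in lines[node.lineno - 1:end])
    return signatures


if __name__ == "__main__":
    print("\n".join(extract_signatures(SAMPLE_SOURCE)))
```

The output retains indentation, so nesting (here, the method inside the class) stays visible even though the bodies are gone.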

Understanding your codebase's token distribution is crucial for optimizing AI interactions. Use the --token-count-tree option to visualize token usage across your project:

repomix --token-count-tree

This displays a hierarchical view of your codebase with token counts:

🔢 Token Count Tree:

────────────────────

└── src/ (70,925 tokens)

├── cli/ (12,714 tokens)

│ ├── actions/ (7,546 tokens)

│ └── reporters/ (990 tokens)

└── core/ (41,600 tokens)

├── file/ (10,098 tokens)

└── output/ (5,808 tokens)

You can also set a minimum token threshold to focus on larger files:

repomix --token-count-tree 1000 # Only show files/directories with 1000+ tokens

This helps you:

- Identify token-heavy files that might exceed AI context limits

- Optimize file selection using --include and --ignore patterns

- Plan compression strategies by targeting the largest contributors

- Balance content vs. context when preparing code for AI analysis
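The directory totals in such a tree are simply per-file counts rolled up along each path. A minimal Python sketch of that roll-up, with a threshold filter similar in spirit to --token-count-tree 1000, is shown below. The per-file counts are invented sample data and the function names are hypothetical; a real run would take the counts from a tokenizer.

```python
from typing import Dict

# Hypothetical per-file token counts (a tokenizer would produce these).
SAMPLE_COUNTS = {
    "src/cli/run.ts": 700,
    "src/cli/actions/pack.ts": 300,
    "src/core/file/read.ts": 1200,
    "README.md": 150,
}


def directory_totals(file_tokens: Dict[str, int]) -> Dict[str, int]:
    """Sum token counts into every ancestor directory of each file."""
    totals: Dict[str, int] = {}
    for path, count in file_tokens.items():
        parts = path.split("/")
        # parts[:-1] are the directories; add the count to each prefix
        for depth in range(1, len(parts)):
            directory = "/".join(parts[:depth]) + "/"
            totals[directory] = totals.get(directory, 0) + count
    return totals


def above_threshold(totals: Dict[str, int], threshold: int) -> Dict[str, int]:
    """Keep only entries with at least `threshold` tokens."""
    return {name: count for name, count in totals.items() if count >= threshold}


if __name__ == "__main__":
    totals = directory_totals(SAMPLE_COUNTS)
    for name, count in sorted(above_threshold(totals, 1000).items()):
        print(f"{name} ({count:,} tokens)")
```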

When working with large codebases, the packed output may exceed file size limits imposed by some AI tools (e.g., Google AI Studio's 1MB limit). Use --split-output to automatically split the output into multiple files:

repomix --split-output 1mb

This generates numbered files like:

- repomix-output.1.xml

- repomix-output.2.xml

- repomix-output.3.xml

Size can be specified with units: 500kb, 1mb, 2mb, 1.5mb, etc. Decimal values are supported.

[!NOTE] Files are grouped by top-level directory to maintain context. A single file or directory will never be split across multiple output files.
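A rough Python sketch of the two ideas above, parsing a size such as 1.5mb and keeping each top-level directory in a single part, follows. It is not Repomix's algorithm. The assumptions are that 1kb is 1024 bytes, that sizes are measured per input file, and that groups are packed greedily in input order; `parse_size` and `plan_parts` are hypothetical names.

```python
import re
from typing import Dict, List

_SIZE = re.compile(r"^(\d+(?:\.\d+)?)(kb|mb)$", re.IGNORECASE)
_UNITS = {"kb": 1024, "mb": 1024 * 1024}  # assumption: binary units


def parse_size(text: str) -> int:
    """Convert strings like "500kb" or "1.5mb" to a byte count."""
    match = _SIZE.match(text.strip())
    if match is None:
        raise ValueError(f"Invalid size: {text!r}")
    return int(float(match.group(1)) * _UNITS[match.group(2).lower()])


def plan_parts(file_sizes: Dict[str, int], max_bytes: int) -> List[List[str]]:
    """Assign files to numbered parts without splitting a top-level directory."""
    groups: Dict[str, List[str]] = {}
    for path in file_sizes:
        groups.setdefault(path.split("/", 1)[0], []).append(path)

    parts: List[List[str]] = []
    current: List[str] = []
    current_size = 0
    for paths in groups.values():
        group_size = sum(file_sizes[p] for p in paths)
        # Start a new part when this group would push the current one over.
        if current and current_size + group_size > max_bytes:
            parts.append(current)
            current, current_size = [], 0
        current.extend(paths)
        current_size += group_size
    if current:
        parts.append(current)
    return parts


if __name__ == "__main__":
    sizes = {"src/a.ts": 600, "src/b.ts": 300, "docs/guide.md": 500, "README.md": 100}
    for number, part in enumerate(plan_parts(sizes, 1000), start=1):
        print(f"repomix-output.{number}.xml: {part}")
```

Because a group is never split, a single directory larger than the limit still ends up in one part that exceeds it; the sketch does not try to resolve that case.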

Repomix supports the Model Context Protocol (MCP), allowing AI assistants to directly interact with your codebase. When run as an MCP server, Repomix provides tools that enable AI assistants to package local or remote repositories for analysis without requiring manual file preparation.

repomix --mcp

To use Repomix as an MCP server with AI assistants like Claude, you need to configure the MCP settings:

For VS Code:

You can install the Repomix MCP server in VS Code using one of these methods:

- Using the Install Badge:

- Using the Command Line:

code --add-mcp '{"name":"repomix","command":"npx","args":["-y","repomix","--mcp"]}'

For VS Code Insiders:

code-insiders --add-mcp '{"name":"repomix","command":"npx","args":["-y","repomix","--mcp"]}'

For Cline (VS Code extension):

Edit the cline_mcp_settings.json file:

{

"mcpServers": {

"repomix": {

"command": "npx",

"args": [

"-y",

"repomix",

"--mcp"

]

}

}

}

For Cursor:

In Cursor, add a new MCP server from Cursor Settings > MCP > + Add new global MCP server with a configuration similar to Cline.

For Claude Desktop:

Edit the claude_desktop_config.json file with similar configuration to Cline's config.

For Claude Code:

To configure Repomix as an MCP server in Claude Code, use the following command:

claude mcp add repomix -- npx -y repomix --mcp

Alternatively, you can use the official Repomix plugins (see Claude Code Plugins section below).

Using Docker instead of npx:

You can use Docker as an alternative to npx for running Repomix as an MCP server:

{

"mcpServers": {

"repomix-docker": {

"command": "docker",

"args": [

"run",

"-i",

"--rm",

"ghcr.io/yamadashy/repomix",

"--mcp"

]

}

}

}

Once configured, your AI assistant can directly use Repomix's capabilities to analyze codebases without manual file preparation, making code analysis workflows more efficient.
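If a settings file such as cline_mcp_settings.json or claude_desktop_config.json already lists other servers, the Repomix entry has to be merged in rather than pasted over them. The Python sketch below shows one way to do that on an in-memory dictionary. The `mcpServers` layout and the npx arguments come from the configuration above; `add_repomix_server` and the "other" server in the usage example are hypothetical.

```python
import json
from typing import Any, Dict

# Server entry taken from the npx-based configuration shown above.
REPOMIX_SERVER = {"command": "npx", "args": ["-y", "repomix", "--mcp"]}


def add_repomix_server(config: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `config` with a "repomix" entry under "mcpServers".

    Existing servers are preserved, and an existing "repomix" entry is left
    untouched so that local customizations are not overwritten.
    """
    merged = dict(config)
    servers = dict(merged.get("mcpServers", {}))
    servers.setdefault(
        "repomix",
        {"command": REPOMIX_SERVER["command"], "args": list(REPOMIX_SERVER["args"])},
    )
    merged["mcpServers"] = servers
    return merged


if __name__ == "__main__":
    existing = {"mcpServers": {"other": {"command": "node", "args": ["server.js"]}}}
    print(json.dumps(add_repomix_server(existing), indent=2))
```

Reading the settings file with `json.load` and writing the result back with `json.dump` is left out here, since the file location differs between tools.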

When running as an MCP server, Repomix provides the following tools:

- pack_codebase: Package a local code directory into a consolidated XML file for AI analysis

  - Parameters:

    - directory: Absolute path to the directory to pack

    - compress: (Optional, default: false) Enable Tree-sitter compression to extract essential code signatures and structure while removing implementation details. Reduces token usage by ~70% while preserving semantic meaning. Generally not needed since grep_repomix_output allows incremental content retrieval. Use only when you specifically need the entire codebase content for large repositories.

    - includePatterns: (Optional) Specify files to include using fast-glob patterns. Multiple patterns can be comma-separated (e.g., "**/*.{js,ts}", "src/**,docs/**"). Only matching files will be processed.

    - ignorePatterns: (Optional) Specify additional files to exclude using fast-glob patterns. Multiple patterns can be comma-separated (e.g., "test/**,*.spec.js", "node_modules/**,dist/**"). These patterns supplement .gitignore, .ignore, and built-in exclusions.

    - topFilesLength: (Optional, default: 10) Number of largest files by size to display in the metrics summary for codebase analysis.

- attach_packed_output: Attach an existing Repomix packed output file for AI analysis

  - Parameters:

    - path: Path to a directory containing repomix-output.xml or direct path to a packed repository XML file

    - topFilesLength: (Optional, default: 10) Number of largest files by size to display in the metrics summary

  - Features:

    - Accepts either a directory containing a repomix-output.xml file or a direct path to an XML file

    - Registers the file with the MCP server and returns the same structure as the pack_codebase tool

    - Provides secure access to existing packed outputs without requiring re-processing

    - Useful for working with previously generated packed repositories

- pack_remote_repository: Fetch, clone, and package a GitHub repository into a consolidated XML file for AI analysis

  - Parameters:

    - remote: GitHub repository URL or user/repo format (e.g., "yamadashy/repomix", "https://github.com/user/repo", or "https://github.com/user/repo/tree/branch")

    - compress: (Optional, default: false) Enable Tree-sitter compression to extract essential code signatures and structure while removing implementation details. Reduces token usage by ~70% while preserving semantic meaning. Generally not needed since grep_repomix_output allows incremental content retrieval. Use only when you specifically need the entire codebase content for large repositories.

    - includePatterns: (Optional) Specify files to include using fast-glob patterns. Multiple patterns can be comma-separated (e.g., "**/*.{js,ts}", "src/**,docs/**"). Only matching files will be processed.

    - ignorePatterns: (Optional) Specify additional files to exclude using fast-glob patterns. Multiple patterns can be comma-separated (e.g., "test/**,*.spec.js", "node_modules/**,dist/**"). These patterns supplement .gitignore, .ignore, and built-in exclusions.

    - topFilesLength: (Optional, default: 10) Number of largest files by size to display in the metrics summary for codebase analysis.

- read_repomix_output: Read the contents of a Repomix-generated output file. Supports partial reading with line range specification for large files.

  - Parameters:

    - outputId: ID of the Repomix output file to read

    - startLine: (Optional) Starting line number (1-based, inclusive). If not specified, reads from beginning.

    - endLine: (Optional) Ending line number (1-based, inclusive). If not specified, reads to end.

  - Features:

    - Specifically designed for web-based environments or sandboxed applications

    - Retrieves the content of previously generated outputs using their ID

    - Provides secure access to packed codebase without requiring file system access

    - Supports partial reading for large files

- grep_repomix_output: Search for patterns in a Repomix output file using grep-like functionality with JavaScript RegExp syntax

  - Parameters:

    - outputId: ID of the Repomix output file to search

    - pattern: Search pattern (JavaScript RegExp regular expression syntax)

    - contextLines: (Optional, default: 0) Number of context lines to show before and after each match. Overridden by beforeLines/afterLines if specified.

    - beforeLines: (Optional) Number of context lines to show before each match (like grep -B). Takes precedence over contextLines.

    - afterLines: (Optional) Number of context lines to show after each match (like grep -A). Takes precedence over contextLines.

    - ignoreCase: (Optional, default: false) Perform case-insensitive matching

  - Features:

    - Uses JavaScript RegExp syntax for powerful pattern matching

    - Supports context lines for better understanding of matches

    - Allows separate control of before/after context lines

    - Case-sensitive and case-insensitive search options

- file_system_read_file: Read a file from the local file system using an absolute path. Includes built-in security validation to detect and prevent access to files containing sensitive information.

  - Parameters:

    - path: Absolute path to the file to read

  - Security features:

    - Implements security validation using Secretlint

    - Prevents access to files containing sensitive information (API keys, passwords, secrets)

    - Validates absolute paths to prevent directory traversal attacks

- file_system_read_directory: List the contents of a directory using an absolute path. Returns a formatted list showing files and subdirectories with clear indicators.

- Parameters:

- path: Absolute path to the directory to list

- Features:

- Shows files and directories with clear indicators ([FILE] or [DIR])

- Provides safe directory traversal with proper error handling

- Validates paths and ensures they are absolute

- Useful for exploring project structure and understanding codebase organization
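The parameter semantics of read_repomix_output and grep_repomix_output described above can be sketched in a few lines of TypeScript. This is only an illustration of the documented behaviour (1-based inclusive line ranges, beforeLines/afterLines taking precedence over contextLines), not Repomix's actual implementation. The function names readLineRange and grepLineNumbers and the GrepOptions type are hypothetical.

```typescript
// Hypothetical helpers that mirror the documented parameters; not Repomix code.

/** Returns lines startLine..endLine (1-based, inclusive). Omitted bounds default to the start/end of the content. */
function readLineRange(content: string, startLine?: number, endLine?: number): string[] {
  const lines = content.split("\n");
  const start = Math.max(1, startLine ?? 1);
  const end = Math.min(lines.length, endLine ?? lines.length);
  return lines.slice(start - 1, end);
}

interface GrepOptions {
  contextLines?: number;
  beforeLines?: number;
  afterLines?: number;
  ignoreCase?: boolean;
}

/** Returns the 1-based numbers of all lines to display: each match plus its context window. */
function grepLineNumbers(content: string, pattern: string, options: GrepOptions = {}): number[] {
  const lines = content.split("\n");
  // No "g" flag, so RegExp.test carries no state between lines.
  const regex = new RegExp(pattern, options.ignoreCase ? "i" : "");
  // beforeLines/afterLines win over contextLines when they are given.
  const before = options.beforeLines ?? options.contextLines ?? 0;
  const after = options.afterLines ?? options.contextLines ?? 0;
  const selected = new Set<number>();
  lines.forEach((line, index) => {
    if (!regex.test(line)) return;
    const first = Math.max(0, index - before);
    const last = Math.min(lines.length - 1, index + after);
    for (let i = first; i <= last; i++) selected.add(i + 1);
  });
  return Array.from(selected).sort((a, b) => a - b);
}
```

Returning line numbers rather than formatted text keeps the sketch small; a real tool would also print the matching lines and separate non-adjacent groups.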


Repomix provides official plugins for Claude Code that integrate seamlessly with the AI-powered development environment.

1. Add the Repomix plugin marketplace:

/plugin marketplace add yamadashy/repomix

2. Install plugins:

# Install MCP server plugin (recommended foundation)

/plugin install repomix-mcp@repomix

# Install commands plugin (extends functionality)

/plugin install repomix-commands@repomix

# Install repository explorer plugin (AI-powered analysis)

/plugin install repomix-explorer@repomix

Note: The repomix-mcp plugin is recommended as a foundation. The repomix-commands plugin provides convenient slash commands, while repomix-explorer adds AI-powered analysis capabilities. While you can install them independently, using all three provides the most comprehensive experience.

Alternatively, use the interactive plugin installer:

/plugin

This will open an interactive interface where you can browse and install available plugins.

1. repomix-mcp (MCP Server Plugin)

Foundation plugin that provides AI-powered codebase analysis through MCP server integration.

Features:

- Pack local and remote repositories

- Search through packed outputs

- Read files with built-in security scanning (Secretlint)

- Automatic Tree-sitter compression (~70% token reduction)

2. repomix-commands (Slash Commands Plugin)

Provides convenient slash commands for quick operations with natural language support.

Available Commands:

- /repomix-commands:pack-local - Pack local codebase with various options

- /repomix-commands:pack-remote - Pack and analyze remote GitHub repositories

Example usage:

/repomix-commands:pack-local

Pack this project as markdown with compression

/repomix-commands:pack-remote yamadashy/repomix

Pack only TypeScript files from the yamadashy/repomix repository

3. repomix-explorer (AI Analysis Agent Plugin)

AI-powered repository analysis agent that intelligently explores codebases using Repomix CLI.

Features:

- Natural language codebase exploration and analysis

- Intelligent pattern discovery and code structure understanding

- Incremental analysis using grep and targeted file reading

- Automatic context management for large repositories

Available Commands:

- /repomix-explorer:explore-local - Analyze local codebase with AI assistance

- /repomix-explorer:explore-remote - Analyze remote GitHub repositories with AI assistance

Example usage:

/repomix-explorer:explore-local ./src

Find all authentication-related code

/repomix-explorer:explore-remote facebook/react

Show me the main component architecture

The agent automatically:

- Runs npx repomix@latest to pack the repository

- Uses Grep and Read tools to efficiently search the output

- Provides comprehensive analysis without consuming excessive context

- Seamless Integration: Claude can directly analyze codebases without manual preparation

- Natural Language: Use conversational commands instead of remembering CLI syntax

- Always Latest: Automatically uses npx repomix@latest for up-to-date features

- Security Built-in: Automatic Secretlint scanning prevents sensitive data exposure

- Token Optimization: Tree-sitter compression for large codebases

For more details, see the plugin documentation in the .claude/plugins/ directory.

Repomix can generate Claude Agent Skills format output, creating a structured Skills directory that can be used as a reusable codebase reference for AI assistants. This feature is particularly powerful when you want to reference implementations from remote repositories.

# Generate Skills from local directory

repomix --skill-generate

# Generate with custom Skills name

repomix --skill-generate my-project-reference

# Generate from remote repository

repomix --remote https://github.com/user/repo --skill-generate

When you run the command, Repomix prompts you to choose where to save the Skills:

- Personal Skills (~/.claude/skills/) - Available across all projects on your machine

- Project Skills (.claude/skills/) - Shared with your team via git

For CI pipelines and automation scripts, you can skip all interactive prompts using --skill-output and --force:

# Specify output directory directly

repomix --skill-generate --skill-output ./my-skills

# Skip overwrite confirmation with --force

repomix --skill-generate --skill-output ./my-skills --force

# Full non-interactive example

repomix --remote user/repo --skill-generate my-skill --skill-output ./output --force

The Skills are generated with the following structure:

.claude/skills/<skill-name>/
├── SKILL.md                    # Main Skills metadata & documentation
└── references/
    ├── summary.md              # Purpose, format, and statistics
    ├── project-structure.md    # Directory tree with line counts
    ├── files.md                # All file contents (grep-friendly)
    └── tech-stack.md           # Languages, frameworks, dependencies

- SKILL.md: Contains Skills metadata, file/line/token counts, overview, and usage instructions

- summary.md: Explains the Skills' purpose, usage guidelines, and provides statistics breakdown by file type and language

- project-structure.md: Directory tree with line counts per file for easy file discovery

- files.md: All file contents with syntax highlighting headers, optimized for grep-friendly searching

- tech-stack.md: Auto-detected tech stack from dependency files (package.json, requirements.txt, Cargo.toml, etc.)

If no name is provided, Repomix auto-generates one:

repomix src/ --skill-generate # → repomix-reference-src

repomix --remote user/repo --skill-generate # → repomix-reference-repo

repomix --skill-generate CustomName # → custom-name (normalized to kebab-case)

Skills generation respects all standard Repomix options:

# Generate Skills with file filtering

repomix --skill-generate --include "src/**/*.ts" --ignore "**/*.test.ts"

# Generate Skills with compression

repomix --skill-generate --compress

# Generate Skills from remote repository

repomix --remote yamadashy/repomix --skill-generate

Repomix provides a ready-to-use Repomix Explorer skill that enables AI coding assistants to analyze and explore codebases using Repomix CLI. This skill is designed to work with various AI tools including Claude Code, Cursor, Codex, GitHub Copilot, and more.

npx add-skill yamadashy/repomix --skill repomix-explorer

This command installs the skill to your AI assistant's skills directory (e.g., .claude/skills/), making it immediately available.

Once installed, you can analyze codebases with natural language instructions.

Analyze remote repositories:

"What's the structure of this repo?

https://github.com/facebook/react"

Explore local codebases:

"What's in this project?

~/projects/my-app"

This is useful not only for understanding codebases, but also when you want to implement features by referencing your other repositories.

Repomix supports multiple configuration file formats for flexibility and ease of use.

Repomix will automatically search for configuration files in the following priority order:

1. TypeScript (repomix.config.ts, repomix.config.mts, repomix.config.cts)

2. JavaScript/ES Module (repomix.config.js, repomix.config.mjs, repomix.config.cjs)

3. JSON (repomix.config.json5, repomix.config.jsonc, repomix.config.json)
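The lookup order above can be pictured as a first-match search over a fixed list of file names. The sketch below assumes that the order inside each group (for example .ts before .mts before .cts) also reflects priority, which the text does not state explicitly. pickConfigFile and CONFIG_FILE_PRIORITY are hypothetical names, not part of the Repomix API.

```typescript
// File names come from the priority list in the text; the lookup function is a hypothetical sketch.
const CONFIG_FILE_PRIORITY: readonly string[] = [
  "repomix.config.ts",
  "repomix.config.mts",
  "repomix.config.cts",
  "repomix.config.js",
  "repomix.config.mjs",
  "repomix.config.cjs",
  "repomix.config.json5",
  "repomix.config.jsonc",
  "repomix.config.json",
];

/** Returns the highest-priority config file name present in the directory listing, if any. */
function pickConfigFile(filesInDirectory: readonly string[]): string | undefined {
  const present = new Set(filesInDirectory);
  return CONFIG_FILE_PRIORITY.find((name) => present.has(name));
}
```

With both repomix.config.json and repomix.config.ts in the project root, this sketch would select the TypeScript file.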

Create a repomix.config.json file in your project root:

repomix --init

This will create a repomix.config.json file with default settings.

TypeScript configuration files provide the best developer experience with full type checking and IDE support.

Installation:

To use TypeScript or JavaScript configuration with defineConfig, you need to install Repomix as a dev dependency:

npm install -D repomix

Example:

// repomix.config.ts
import { defineConfig } from 'repomix';

export default defineConfig({
  output: {
    filePath: 'output.xml',
    style: 'xml',
    removeComments: true,
  },
  ignore: {
    customPatterns: ['**/node_modules/**', '**/dist/**'],
  },
});

Benefits:

- ✅ Full TypeScript type checking in your IDE

- ✅ Excellent IDE autocomplete and IntelliSense

- ✅ Use dynamic values (timestamps, environment variables, etc.)

Dynamic Values Example:

// repomix.config.ts
import { defineConfig } from 'repomix';

// Generate timestamp-based filename
const timestamp = new Date().toISOString().slice(0, 19).replace(/[:.]/g, '-');

export default defineConfig({
  output: {
    filePath: `output-${timestamp}.xml`,
    style: 'xml',
  },
});

JavaScript configuration files work the same as TypeScript, supporting defineConfig and dynamic values.

Here's an explanation of the configuration options:

| Option | Description | Default |
|---|---|---|
| input.maxFileSize | Maximum file size in bytes to process. Files larger than this will be skipped | 50000000 |
| output.filePath | The name of the output file | "repomix-output.xml" |
| output.style | The style of the output (xml, markdown, json, plain) | "xml" |
| output.parsableStyle | Whether to escape the output based on the chosen style schema. Note that this can increase token count. | false |
| output.compress | Whether to perform intelligent code extraction to reduce token count | false |
| output.headerText | Custom text to include in the file header | null |
| output.instructionFilePath | Path to a file containing detailed custom instructions | null |
| output.fileSummary | Whether to include a summary section at the beginning of the output | true |
| output.directoryStructure | Whether to include the directory structure in the output | true |
| output.files | Whether to include file contents in the output | true |
| output.removeComments | Whether to remove comments from supported file types | false |
| output.removeEmptyLines | Whether to remove empty lines from the output | false |
| output.showLineNumbers | Whether to add line numbers to each line in the output | false |
| output.truncateBase64 | Whether to truncate long base64 data strings (e.g., images) to reduce token count | false |
| output.copyToClipboard | Whether to copy the output to system clipboard in addition to saving the file | false |
| output.splitOutput | Split output into multiple numbered files by maximum size per part (e.g., 1000000 for ~1MB). Keeps each file under the limit and avoids splitting files across parts | Not set |
| output.topFilesLength | Number of top files to display in the summary. If set to 0, no summary will be displayed | 5 |
| output.tokenCountTree | Whether to display file tree with token count summaries. Can be boolean or number (minimum token count threshold) | false |
| output.includeEmptyDirectories | Whether to include empty directories in the repository structure | false |
| output.includeFullDirectoryStructure | When using include patterns, whether to display the complete directory tree (respecting ignore patterns) while still processing only the included files. Provides full repository context for AI analysis | false |
| output.git.sortByChanges | Whether to sort files by git change count (files with more changes appear at the bottom) | true |
| output.git.sortByChangesMaxCommits | Maximum number of commits to analyze for git changes | 100 |
| output.git.includeDiffs | Whether to include git diffs in the output (includes both work tree and staged changes separately) | false |
| output.git.includeLogs | Whether to include git logs in the output (includes commit history with dates, messages, and file paths) | false |
| output.git.includeLogsCount | Number of git log commits to include | 50 |
| include | Patterns of files to include (using glob patterns) | [] |
| ignore.useGitignore | Whether to use patterns from the project's .gitignore file | true |
| ignore.useDotIgnore | Whether to use patterns from the project's .ignore file | true |
| ignore.useDefaultPatterns | Whether to use default ignore patterns | true |
| ignore.customPatterns | Additional patterns to ignore (using glob patterns) | [] |
| security.enableSecurityCheck | Whether to perform security checks on files | true |
| tokenCount.encoding | Token count encoding used by OpenAI's tiktoken tokenizer (e.g., o200k_base for GPT-4o, cl100k_base for GPT-4/3.5). See tiktoken model.py for encoding details. | "o200k_base" |

The configuration file supports JSON5 syntax, which allows:

- Comments (both single-line and multi-line)

- Trailing commas in objects and arrays

- Unquoted property names

- More relaxed string syntax

You can enable schema validation for your configuration file by adding the $schema property:

{
  "$schema": "https://repomix.com/schemas/latest/schema.json",
  "output": {
    "filePath": "repomix-output.xml",
    "style": "xml"
  }
}

This provides auto-completion and validation in editors that support JSON schema.

Example configuration:

{
  "$schema": "https://repomix.com/schemas/latest/schema.json",
  "input": {
    "maxFileSize": 50000000
  },
  "output": {
    "filePath": "repomix-output.xml",
    "style": "xml",
    "parsableStyle": false,
    "compress": false,
    "headerText": "Custom header information for the packed file.",
    "fileSummary": true,
    "directoryStructure": true,
    "files": true,
    "removeComments": false,
    "removeEmptyLines": false,
    "topFilesLength": 5,
    "tokenCountTree": false, // or true, or a number like 10 for minimum token threshold
    "showLineNumbers": false,
    "truncateBase64": false,
    "copyToClipboard": false,
    "splitOutput": null, // or a number like 1000000 for ~1MB per file
    "includeEmptyDirectories": false,
    "git": {
      "sortByChanges": true,
      "sortByChangesMaxCommits": 100,
      "includeDiffs": false,
      "includeLogs": false,
      "includeLogsCount": 50
    }
  },
  "include": ["**/*"],
  "ignore": {
    "useGitignore": true,
    "useDefaultPatterns": true,
    // Patterns can also be specified in .repomixignore
    "customPatterns": [
      "additional-folder",
      "**/*.log"
    ],
  },
  "security": {
    "enableSecurityCheck": true
  },
  "tokenCount": {
    "encoding": "o200k_base"
  }
}

To create a global configuration file:

repomix --init --global

The global configuration file will be created in:

- Windows: %LOCALAPPDATA%\Repomix\repomix.config.json

- macOS/Linux: $XDG_CONFIG_HOME/repomix/repomix.config.json or ~/.config/repomix/repomix.config.json

Note: Local configuration (if present) takes precedence over global configuration.
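The platform-dependent locations listed above can be expressed as a small path-resolution function. This is a sketch under assumptions: the function name globalConfigPath is hypothetical, and the fallback used on Windows when LOCALAPPDATA is unset is a guess that the text does not document.

```typescript
// Hypothetical resolver for the global config locations listed in the text.
function globalConfigPath(
  platform: string,
  env: Record<string, string | undefined>,
  homeDir: string,
): string {
  if (platform === "win32") {
    // Assumption: fall back to the conventional AppData\Local folder if LOCALAPPDATA is missing.
    const base = env.LOCALAPPDATA || `${homeDir}\\AppData\\Local`;
    return `${base}\\Repomix\\repomix.config.json`;
  }
  // macOS/Linux: prefer $XDG_CONFIG_HOME, otherwise ~/.config.
  const base = env.XDG_CONFIG_HOME || `${homeDir}/.config`;
  return `${base}/repomix/repomix.config.json`;
}
```

In a Node.js script the arguments would typically be process.platform, process.env, and the result of os.homedir().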

Repomix now supports specifying files to include using glob patterns. This allows for more flexible and powerful file selection:

- Use **/*.js to include all JavaScript files in any directory

- Use src/**/* to include all files within the src directory and its subdirectories

- Combine multiple patterns like ["src/**/*.js", "**/*.md"] to include JavaScript files in src and all Markdown files

Repomix offers multiple methods to set ignore patterns for excluding specific files or directories during the packing process:

- .gitignore: By default, patterns listed in your project's .gitignore files and .git/info/exclude are used. This behavior can be controlled with the ignore.useGitignore setting or the --no-gitignore CLI option.

- .ignore: You can use a .ignore file in your project root, following the same format as .gitignore. This file is respected by tools like ripgrep and The Silver Searcher, reducing the need to maintain multiple ignore files. This behavior can be controlled with the ignore.useDotIgnore setting or the --no-dot-ignore CLI option.

- Default patterns: Repomix includes a default list of commonly excluded files and directories (e.g., node_modules, .git, binary files). This feature can be controlled with the ignore.useDefaultPatterns setting or the --no-default-patterns CLI option. Please see defaultIgnore.ts for more details.

- .repomixignore: You can create a .repomixignore file in your project root to define Repomix-specific ignore patterns. This file follows the same format as .gitignore.

- Custom patterns: Additional ignore patterns can be specified using the ignore.customPatterns option in the configuration file. You can override this setting with the -i, --ignore command line option.

Priority Order (from highest to lowest):

1. Custom patterns (ignore.customPatterns)

2. Ignore files (.repomixignore, .ignore, .gitignore, and .git/info/exclude):
   - When in nested directories, files in deeper directories have higher priority
   - When in the same directory, these files are merged in no particular order

3. Default patterns (if ignore.useDefaultPatterns is true and --no-default-patterns is not used)

This approach allows for flexible file exclusion configuration based on your project's needs. It helps optimize the size of the generated pack file by ensuring the exclusion of security-sensitive files and large binary files, while preventing the leakage of confidential information.

Note: Binary files are not included in the packed output by default, but their paths are listed in the "Repository Structure" section of the output file. This provides a complete overview of the repository structure while keeping the packed file efficient and text-based.

The output.instructionFilePath option allows you to specify a separate file containing detailed instructions or

context about your project. This allows AI systems to understand the specific context and requirements of your project,

potentially leading to more relevant and tailored analysis or suggestions.

Here's an example of how you might use this feature:

1. Create a file named repomix-instruction.md in your project root:

# Coding Guidelines

- Follow the Airbnb JavaScript Style Guide
- Suggest splitting files into smaller, focused units when appropriate
- Add comments for non-obvious logic. Keep all text in English
- All new features should have corresponding unit tests

# Generate Comprehensive Output

- Include all content without abbreviation, unless specified otherwise
- Optimize for handling large codebases while maintaining output quality

2. In your repomix.config.json, add the instructionFilePath option:

{
  "output": {
    "instructionFilePath": "repomix-instruction.md",
    // other options...
  }
}

When Repomix generates the output, it will include the contents of repomix-instruction.md in a dedicated section.

Note: The instruction content is appended at the end of the output file. This placement can be particularly effective

for AI systems. For those interested in understanding why this might be beneficial, Anthropic provides some insights in

their documentation:

https://docs.anthropic.com/en/docs/build-with-claude/prompt-engineering/long-context-tips

Put long-form data at the top: Place your long documents and inputs (~20K+ tokens) near the top of your prompt, above your query, instructions, and examples. This can significantly improve Claude's performance across all models. Queries at the end can improve response quality by up to 30% in tests, especially with complex, multi-document inputs.

When output.removeComments is set to true, Repomix will attempt to remove comments from supported file types. This

feature can help reduce the size of the output file and focus on the essential code content.

Supported languages include:

HTML, CSS, JavaScript, TypeScript, Vue, Svelte, Python, PHP, Ruby, C, C#, Java, Go, Rust, Swift, Kotlin, Dart, Shell,

and YAML.

Note: The comment removal process is conservative to avoid accidentally removing code. In complex cases, some comments might be retained.

Repomix includes a security check feature that uses Secretlint to detect potentially sensitive information in your files. This feature helps you identify possible security risks before sharing your packed repository.

The security check results will be displayed in the CLI output after the packing process is complete. If any suspicious files are detected, you'll see a list of these files along with a warning message.

Example output:

🔍 Security Check:

──────────────────

2 suspicious file(s) detected:

1. src/utils/test.txt

2. tests/utils/secretLintUtils.test.ts

Please review these files for potentially sensitive information.

By default, Repomix's security check feature is enabled. You can disable it by setting security.enableSecurityCheck to

false in your configuration file:

{
  "security": {
    "enableSecurityCheck": false
  }
}

Or using the --no-security-check command line option:

repomix --no-security-check

Note: Disabling security checks may expose sensitive information. Use this option with caution and only when necessary, such as when working with test files or documentation that contains example credentials.

You can also use Repomix in your GitHub Actions workflows. This is useful for automating the process of packing your codebase for AI analysis.

Basic usage:

- name: Pack repository with Repomix
  uses: yamadashy/repomix/.github/actions/repomix@main
  with:
    output: repomix-output.xml
    style: xml

Use --style to generate output in different formats:

- name: Pack repository with Repomix
  uses: yamadashy/repomix/.github/actions/repomix@main
  with:
    output: repomix-output.md
    style: markdown

- name: Pack repository with Repomix (JSON format)
  uses: yamadashy/repomix/.github/actions/repomix@main
  with:
    output: repomix-output.json
    style: json

Pack specific directories with compression:

- name: Pack repository with Repomix
  uses: yamadashy/repomix/.github/actions/repomix@main
  with:
    directories: src tests
    include: "**/*.ts,**/*.md"
    ignore: "**/*.test.ts"
    output: repomix-output.txt
    compress: true

Upload the output file as an artifact:

- name: Pack repository with Repomix
  uses: yamadashy/repomix/.github/actions/repomix@main
  with:
    directories: src
    output: repomix-output.txt
    compress: true

- name: Upload Repomix output
  uses: actions/upload-artifact@v4
  with:
    name: repomix-output
    path: repomix-output.txt

Complete workflow example:

name: Pack repository with Repomix

on:
  workflow_dispatch:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

jobs:
  pack-repo:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Pack repository with Repomix
        uses: yamadashy/repomix/.github/actions/repomix@main
        with:
          output: repomix-output.xml

      - name: Upload Repomix output
        uses: actions/upload-artifact@v4
        with:
          name: repomix-output.xml
          path: repomix-output.xml
          retention-days: 30

See the complete workflow example here.

| Name | Description | Default |
|---|---|---|
| directories | Space-separated list of directories to process (e.g., src tests docs) | . |
| include | Comma-separated glob patterns to include files (e.g., **/*.ts,**/*.md) | "" |
| ignore | Comma-separated glob patterns to ignore files (e.g., **/*.test.ts,**/node_modules/**) | "" |
| output | Relative path for the packed file (extension determines format: .txt, .md, .xml) | repomix-output.xml |
| compress | Enable smart compression to reduce output size by pruning implementation details | true |
| style | Output style (xml, markdown, json, plain) | xml |
| additional-args | Extra raw arguments for the repomix CLI (e.g., --no-file-summary --no-security-check) | "" |
| repomix-version | Version of the npm package to install (supports semver ranges, tags, or specific versions like 0.2.25) | latest |

| Name | Description |
|---|---|
| output_file | Path to the generated output file. Can be used in subsequent steps for artifact upload, LLM processing, or other operations. The file contains a formatted representation of your codebase based on the specified options. |

In addition to using Repomix as a CLI tool, you can also use it as a library in your Node.js applications.

npm install repomix

import { runCli, type CliOptions } from 'repomix';

// Process current directory with custom options
async function packProject() {
  const options = {
    output: 'output.xml',
    style: 'xml',
    compress: true,
    quiet: true
  } as CliOptions;

  const result = await runCli(['.'], process.cwd(), options);
  return result.packResult;
}

import { runCli, type CliOptions } from 'repomix';

// Clone and process a GitHub repo
async function processRemoteRepo(repoUrl) {
  const options = {
    remote: repoUrl,
    output: 'output.xml',
    compress: true
  } as CliOptions;

  return await runCli(['.'], process.cwd(), options);
}

If you need more control, you can use the low-level APIs:

import { searchFiles, collectFiles, processFiles, TokenCounter } from 'repomix';

async function analyzeFiles(directory) {
  // Find and collect files
  const { filePaths } = await searchFiles(directory, { /* config */ });
  const rawFiles = await collectFiles(filePaths, directory);
  const processedFiles = await processFiles(rawFiles, { /* config */ });

  // Count tokens
  const tokenCounter = new TokenCounter('o200k_base');

  // Return analysis results
  return processedFiles.map(file => ({
    path: file.path,
    tokens: tokenCounter.countTokens(file.content)
  }));
}

For more examples, check the source code at website/server/src/remoteRepo.ts which demonstrates how repomix.com uses the library.

When bundling repomix with tools like Rolldown or esbuild, some dependencies must remain external and WASM files need to be copied:

External dependencies (cannot be bundled):

- tinypool - Spawns worker threads using file paths

- tiktoken - Loads WASM files dynamically at runtime

WASM files to copy:

- web-tree-sitter.wasm → Same directory as bundled JS (required for code compression feature)

- Tree-sitter language files → Directory specified by REPOMIX_WASM_DIR environment variable

For a working example, see website/server/scripts/bundle.mjs.

We welcome contributions from the community! To get started, please refer to our Contributing Guide.

See our Privacy Policy.

This project is licensed under the MIT License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for repomix

Similar Open Source Tools

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

docutranslate

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it ideal for businesses and individuals needing quick and accurate translations. The tool uses advanced algorithms to ensure high-quality translations while maintaining the original document's formatting. With its user-friendly interface, Docutranslate simplifies the translation process and saves time for users. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate is the go-to solution for all your document translation needs.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

models.dev

Models.dev is an open-source database providing detailed specifications, pricing, and capabilities of various AI models. It serves as a centralized platform for accessing information on AI models, allowing users to contribute and utilize the data through an API. The repository contains data stored in TOML files, organized by provider and model, along with SVG logos. Users can contribute by adding new models following specific guidelines and submitting pull requests for validation. The project aims to maintain an up-to-date and comprehensive database of AI model information.

genaiscript

GenAIScript is a scripting environment designed to facilitate file ingestion, prompt development, and structured data extraction. Users can define metadata and model configurations, specify data sources, and define tasks to extract specific information. The tool provides a convenient way to analyze files and extract desired content in a structured format. It offers a user-friendly interface for working with data and automating data extraction processes, making it suitable for various data processing tasks.

forge

Forge is a powerful open-source tool for building modern web applications. It provides a simple and intuitive interface for developers to quickly scaffold and deploy projects. With Forge, you can easily create custom components, manage dependencies, and streamline your development workflow. Whether you are a beginner or an experienced developer, Forge offers a flexible and efficient solution for your web development needs.

clarifai-python

The Clarifai Python SDK offers a comprehensive set of tools to integrate Clarifai's AI platform to leverage computer vision capabilities like classification , detection ,segementation and natural language capabilities like classification , summarisation , generation , Q&A ,etc into your applications. With just a few lines of code, you can leverage cutting-edge artificial intelligence to unlock valuable insights from visual and textual content.

sonarqube-mcp-server

The SonarQube MCP Server is a Model Context Protocol (MCP) server that enables seamless integration with SonarQube Server or Cloud for code quality and security. It supports the analysis of code snippets directly within the agent context. The server provides various tools for analyzing code, managing issues, accessing metrics, and interacting with SonarQube projects. It also supports advanced features like dependency risk analysis, enterprise portfolio management, and system health checks. The server can be configured for different transport modes, proxy settings, and custom certificates. Telemetry data collection can be disabled if needed.

python-tgpt

Python-tgpt is a Python package that enables interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which is written in Go. Through this Python adaptation, users can work with a number of free LLMs from Python.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

ai

A TypeScript toolkit for building AI-driven video workflows on the server, powered by Mux! @mux/ai provides purpose-driven workflow functions and primitive functions that integrate with popular AI/LLM providers like OpenAI, Anthropic, and Google. It offers pre-built workflows for tasks like generating summaries and tags, content moderation, chapter generation, and more. The toolkit is cost-effective, supports multi-modal analysis, tone control, and configurable thresholds, and provides full TypeScript support. Users can easily configure credentials for Mux and AI providers, as well as cloud infrastructure like AWS S3 for certain workflows. @mux/ai is production-ready, offers composable building blocks, and supports universal language detection.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

Lumos

Lumos is a Chrome extension powered by a local LLM co-pilot for browsing the web. It allows users to summarize long threads, news articles, and technical documentation. Users can ask questions about reviews and product pages. The tool requires a local Ollama server for LLM inference and embedding database. Lumos supports multimodal models and file attachments for processing text and image content. It also provides options to customize models, hosts, and content parsers. The extension can be easily accessed through keyboard shortcuts and offers tools for automatic invocation based on prompts.

For similar tasks

tokencost

Tokencost is a client-side tool for calculating the USD cost of using major Large Language Model (LLM) APIs by estimating the cost of prompts and completions. It helps track the latest price changes of major LLM providers, accurately count prompt tokens before sending OpenAI requests, and get the cost of a prompt or completion with a single function. Users can calculate prompt and completion costs for OpenAI requests, count tokens in prompts formatted as message lists or plain strings, and refer to a cost table with updated prices for various LLMs. The tool also supports callback handlers for LLM wrapper/framework libraries like LlamaIndex and Langchain.
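The cost arithmetic described above can be illustrated with a minimal sketch. This is not Tokencost's API: the `PRICES` table, the model name `example-model`, the per-token rates, and the `estimate_cost` helper are all hypothetical and exist only to show how a cost is derived from token counts and a price table.

```python
from decimal import Decimal

# Hypothetical per-token USD prices for illustration only;
# these are NOT real provider prices and NOT Tokencost's data.
PRICES = {
    "example-model": {
        "prompt": Decimal("0.000001"),
        "completion": Decimal("0.000002"),
    },
}


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Return the estimated USD cost for one request (hypothetical helper)."""
    price = PRICES[model]
    # Prompt and completion tokens are usually billed at different rates,
    # so each count is multiplied by its own per-token price.
    return price["prompt"] * prompt_tokens + price["completion"] * completion_tokens


if __name__ == "__main__":
    cost = estimate_cost("example-model", prompt_tokens=1200, completion_tokens=300)
    print(f"Estimated cost: ${cost}")
```

`Decimal` is used instead of `float` so that very small per-token prices do not accumulate rounding error when summed over many requests.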

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

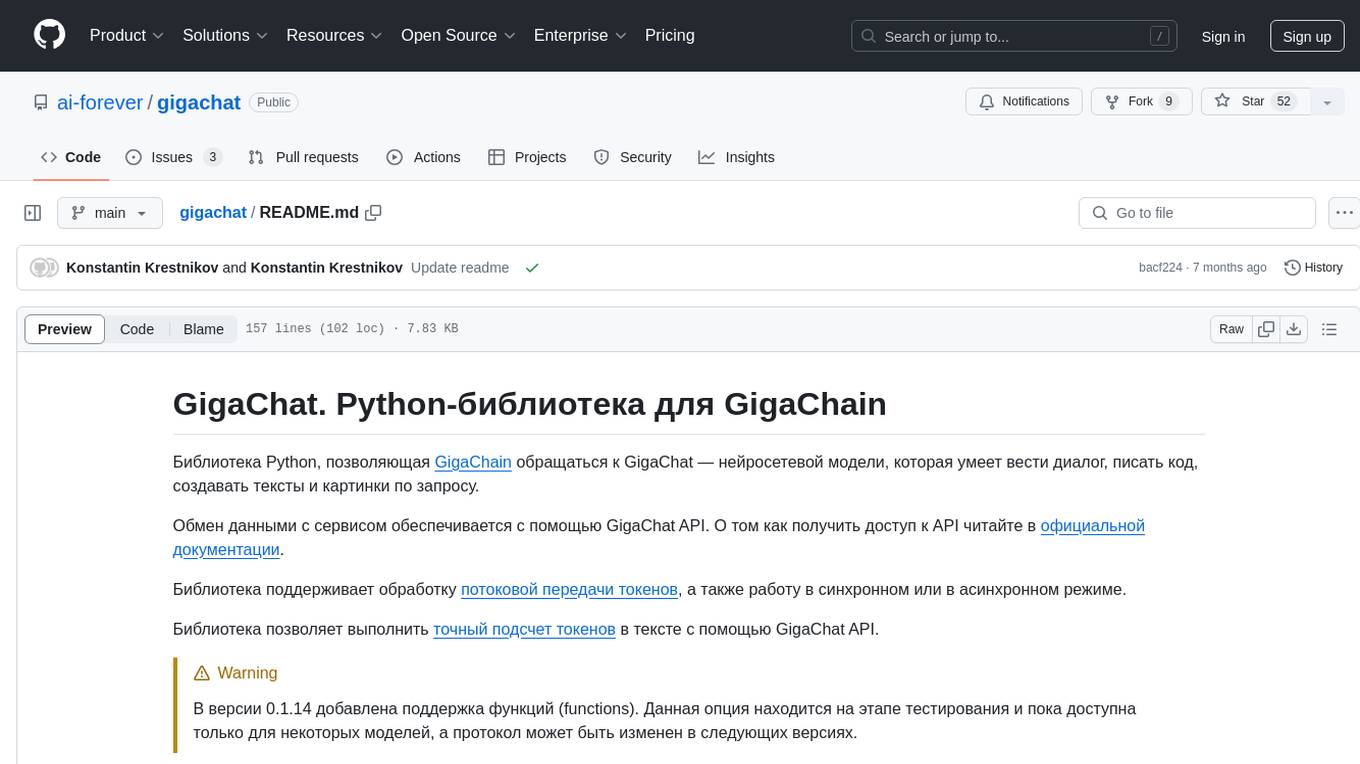

gigachat

GigaChat is a Python library that allows GigaChain to interact with GigaChat, a neural network model capable of engaging in dialogue, writing code, and creating texts and images on demand. Data exchange with the service is handled through the GigaChat API. The library supports token streaming and works in both synchronous and asynchronous modes. It also enables precise token counting in text using the GigaChat API.

client

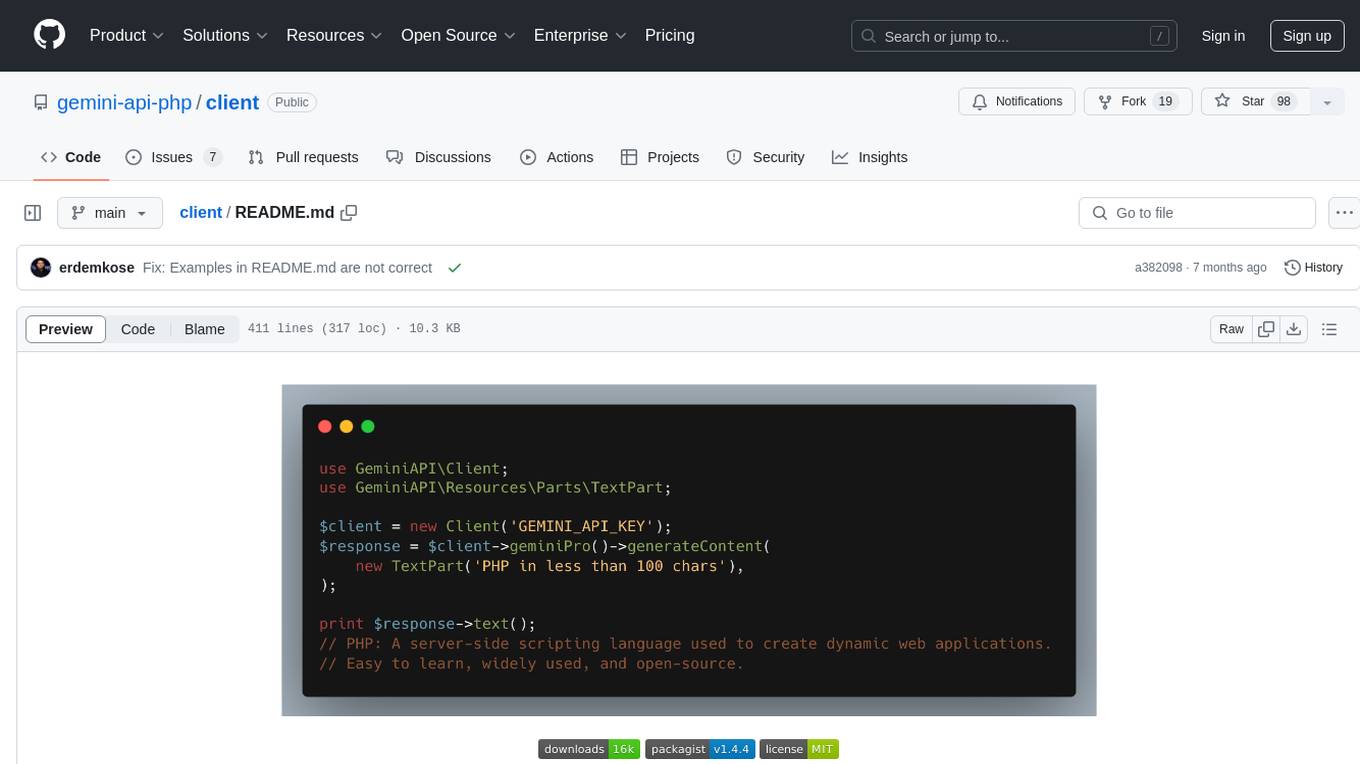

Gemini API PHP Client is a library that allows you to interact with Google's generative AI models, such as Gemini Pro and Gemini Pro Vision. It provides basic text generation, multimodal input, chat sessions, streaming responses, token counting, content embedding, and model listing, along with advanced usage such as safety settings and a custom HTTP client. The library requires an API key to access Google's Gemini API and can be installed using Composer.

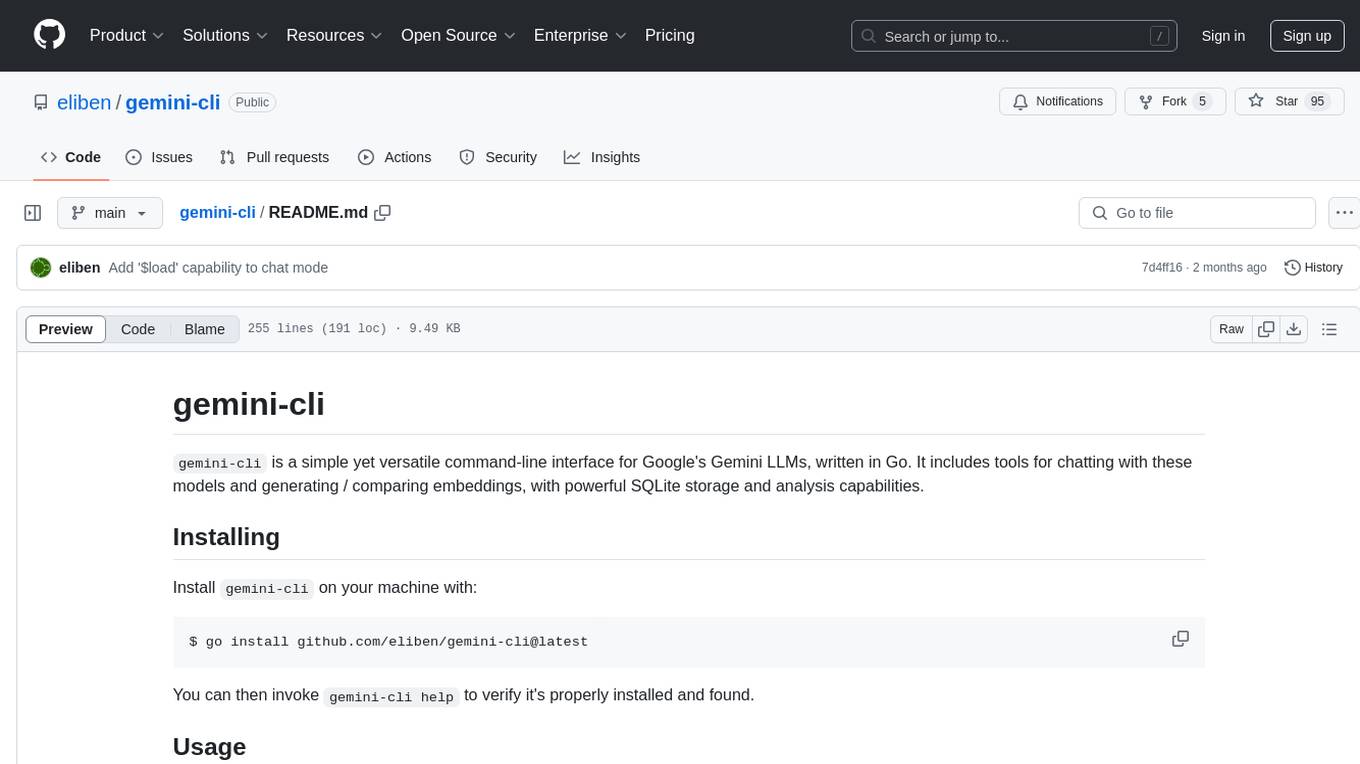

gemini-cli

gemini-cli is a versatile command-line interface for Google's Gemini LLMs, written in Go. It includes tools for chatting with models, generating/comparing embeddings, and storing data in SQLite for analysis. Users can interact with Gemini models through various subcommands like prompt, chat, counttok, embed content, embed db, and embed similar.

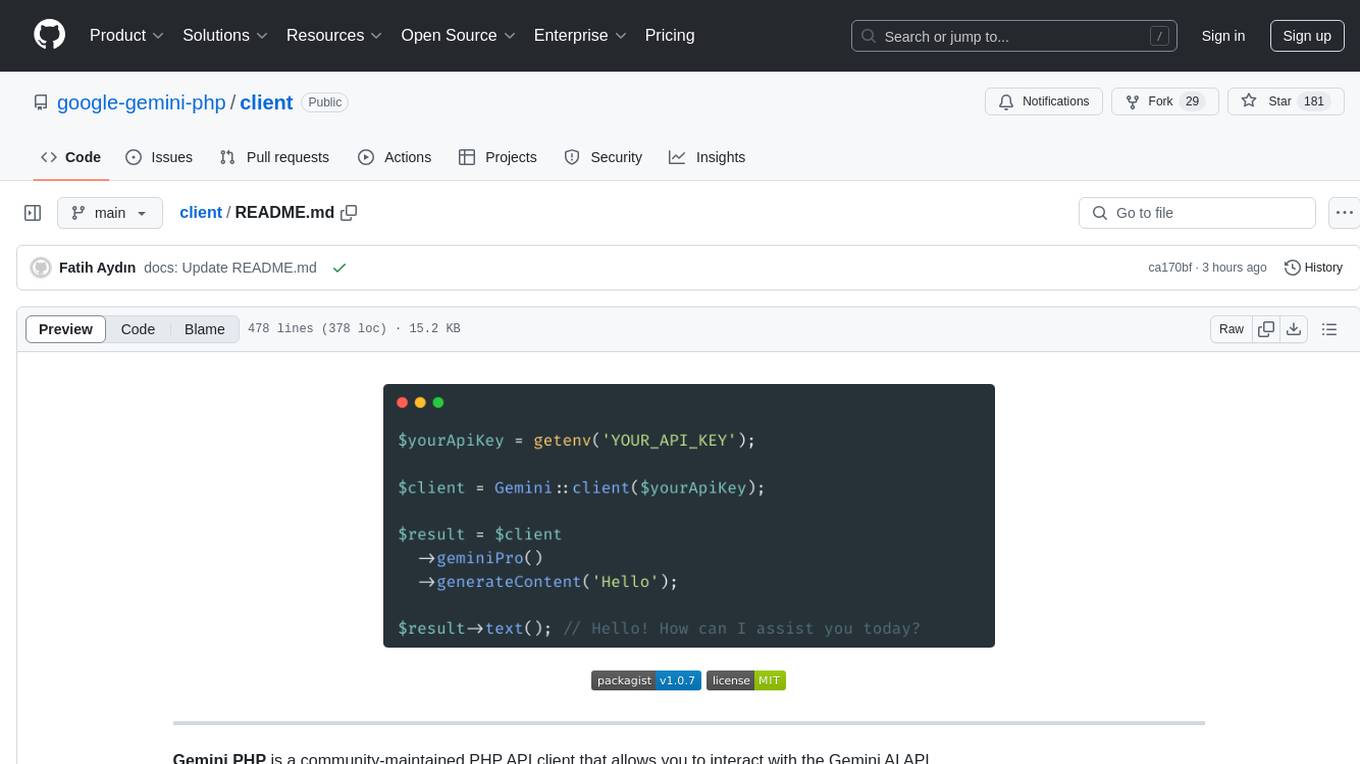

client

Gemini PHP is a PHP API client for interacting with the Gemini AI API. It allows users to generate content, chat, count tokens, configure models, embed resources, list models, get model information, troubleshoot timeouts, and test API responses. The client supports various features such as text-only input, text-and-image input, multi-turn conversations, streaming content generation, token counting, model configuration, and embedding techniques. Users can interact with Gemini's API to perform tasks related to natural language generation and text analysis.

ai21-python

The AI21 Labs Python SDK is a comprehensive tool for interacting with the AI21 API. It provides functionalities for chat completions, conversational RAG, token counting, error handling, and support for various cloud providers like AWS, Azure, and Vertex. The SDK offers both synchronous and asynchronous usage, along with detailed examples and documentation. Users can quickly get started with the SDK to leverage AI21's powerful models for various natural language processing tasks.

Tiktoken

Tiktoken is a high-performance implementation focused on token count operations. It provides various encodings like o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit. Users can easily encode and decode text using the provided API. The repository also includes a benchmark console app for performance tracking. Contributions in the form of PRs are welcome.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby