Tiktoken

This project implements token calculation for OpenAI's gpt-4 and gpt-3.5-turbo models, specifically using the `cl100k_base` encoding.

Stars: 78

Tiktoken is a high-performance implementation focused on token count operations. It provides various encodings like o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit. Users can easily encode and decode text using the provided API. The repository also includes a benchmark console app for performance tracking. Contributions in the form of PRs are welcome.

README:

This implementation aims for maximum performance, especially in the token count operation.

The repository also includes a benchmark console app for tracking this performance.

We will be happy to accept any PR.

Supported encodings: o200k_base, cl100k_base, r50k_base, p50k_base, p50k_edit

```csharp
using Tiktoken;

var encoder = ModelToEncoder.For("gpt-4o"); // or explicitly using new Encoder(new O200KBase())
var tokens = encoder.Encode("hello world"); // e.g. [15339, 1917] with cl100k_base; IDs depend on the encoding
var text = encoder.Decode(tokens); // hello world
var numberOfTokens = encoder.CountTokens(text); // 2
var stringTokens = encoder.Explore(text); // ["hello", " world"]
```

Benchmark reports are available for each version.

BenchmarkDotNet v0.14.0, macOS Sequoia 15.1 (24B83) [Darwin 24.1.0]

Apple M1 Pro, 1 CPU, 10 logical and 10 physical cores

.NET SDK 9.0.100

[Host] : .NET 9.0.0 (9.0.24.52809), Arm64 RyuJIT AdvSIMD

DefaultJob : .NET 9.0.0 (9.0.24.52809), Arm64 RyuJIT AdvSIMD

| Method | Categories | Data | Mean | Ratio | Gen0 | Gen1 | Allocated | Alloc Ratio |
|---|---|---|---|---|---|---|---|---|
| SharpTokenV2_0_3_ | CountTokens | 1. (...)57. [19866] | 567,130.0 ns | 1.00 | 2.9297 | - | 20115 B | 1.00 |
| TiktokenSharpV1_1_5_ | CountTokens | 1. (...)57. [19866] | 483,976.7 ns | 0.85 | 64.4531 | 5.8594 | 404648 B | 20.12 |
| MicrosoftMLTokenizerV1_0_0_ | CountTokens | 1. (...)57. [19866] | 427,733.2 ns | 0.75 | - | - | 297 B | 0.01 |
| TokenizerLibV1_3_3_ | CountTokens | 1. (...)57. [19866] | 773,467.5 ns | 1.36 | 246.0938 | 83.9844 | 1547675 B | 76.94 |
| Tiktoken_ | CountTokens | 1. (...)57. [19866] | 271,564.3 ns | 0.48 | 23.4375 | - | 148313 B | 7.37 |
| SharpTokenV2_0_3_ | CountTokens | Hello, World! | 380.0 ns | 1.00 | 0.0405 | - | 256 B | 1.00 |
| TiktokenSharpV1_1_5_ | CountTokens | Hello, World! | 263.8 ns | 0.69 | 0.0505 | - | 320 B | 1.25 |
| MicrosoftMLTokenizerV1_0_0_ | CountTokens | Hello, World! | 305.7 ns | 0.80 | 0.0153 | - | 96 B | 0.38 |
| TokenizerLibV1_3_3_ | CountTokens | Hello, World! | 509.6 ns | 1.34 | 0.2356 | 0.0010 | 1480 B | 5.78 |
| Tiktoken_ | CountTokens | Hello, World! | 175.7 ns | 0.46 | 0.0191 | - | 120 B | 0.47 |
| SharpTokenV2_0_3_ | CountTokens | King(...)edy. [275] | 5,990.7 ns | 1.00 | 0.0763 | - | 520 B | 1.00 |
| TiktokenSharpV1_1_5_ | CountTokens | King(...)edy. [275] | 4,516.5 ns | 0.75 | 0.8011 | - | 5064 B | 9.74 |
| MicrosoftMLTokenizerV1_0_0_ | CountTokens | King(...)edy. [275] | 3,871.2 ns | 0.65 | 0.0153 | - | 96 B | 0.18 |
| TokenizerLibV1_3_3_ | CountTokens | King(...)edy. [275] | 7,465.8 ns | 1.25 | 3.0823 | 0.1373 | 19344 B | 37.20 |
| Tiktoken_ | CountTokens | King(...)edy. [275] | 2,744.5 ns | 0.46 | 0.3128 | - | 1976 B | 3.80 |
| SharpTokenV2_0_3_Encode | Encode | 1. (...)57. [19866] | 568,150.3 ns | 1.00 | 2.9297 | - | 20115 B | 1.00 |
| TiktokenSharpV1_1_5_Encode | Encode | 1. (...)57. [19866] | 444,972.1 ns | 0.78 | 64.4531 | 5.8594 | 404649 B | 20.12 |
| MicrosoftMLTokenizerV1_0_0_Encode | Encode | 1. (...)57. [19866] | 410,970.9 ns | 0.72 | 10.2539 | 0.4883 | 66137 B | 3.29 |
| TokenizerLibV1_3_3_Encode | Encode | 1. (...)57. [19866] | 770,068.9 ns | 1.36 | 246.0938 | 90.8203 | 1547675 B | 76.94 |
| Tiktoken_Encode | Encode | 1. (...)57. [19866] | 290,030.9 ns | 0.51 | 33.6914 | 1.4648 | 214465 B | 10.66 |
| SharpTokenV2_0_3_Encode | Encode | Hello, World! | 381.2 ns | 1.00 | 0.0405 | - | 256 B | 1.00 |
| TiktokenSharpV1_1_5_Encode | Encode | Hello, World! | 260.2 ns | 0.68 | 0.0505 | - | 320 B | 1.25 |
| MicrosoftMLTokenizerV1_0_0_Encode | Encode | Hello, World! | 325.1 ns | 0.85 | 0.0267 | - | 168 B | 0.66 |
| TokenizerLibV1_3_3_Encode | Encode | Hello, World! | 511.6 ns | 1.34 | 0.2356 | - | 1480 B | 5.78 |
| Tiktoken_Encode | Encode | Hello, World! | 241.4 ns | 0.63 | 0.0801 | - | 504 B | 1.97 |
| SharpTokenV2_0_3_Encode | Encode | King(...)edy. [275] | 5,957.3 ns | 1.00 | 0.0763 | - | 520 B | 1.00 |
| TiktokenSharpV1_1_5_Encode | Encode | King(...)edy. [275] | 4,523.8 ns | 0.76 | 0.8011 | - | 5064 B | 9.74 |
| MicrosoftMLTokenizerV1_0_0_Encode | Encode | King(...)edy. [275] | 4,069.8 ns | 0.68 | 0.1144 | - | 744 B | 1.43 |
| TokenizerLibV1_3_3_Encode | Encode | King(...)edy. [275] | 7,207.8 ns | 1.21 | 3.0823 | 0.1373 | 19344 B | 37.20 |
| Tiktoken_Encode | Encode | King(...)edy. [275] | 2,945.7 ns | 0.49 | 0.4654 | - | 2936 B | 5.65 |
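In the table above, the Tiktoken CountTokens rows run faster and allocate less than the matching Encode rows (for example, 175.7 ns and 120 B versus 241.4 ns and 504 B on "Hello, World!"), so counting is the cheaper call when only a token total is needed. The sketch below shows one possible use: trimming a chat history to a token budget. `TokenBudget` and `TrimToBudget` are hypothetical names introduced for illustration and are not part of the library. The counter is passed in as a delegate, so the helper does not assume any particular encoder.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of Tiktoken.
public static class TokenBudget
{
    // Keeps the most recent messages whose combined token count fits maxTokens.
    // countTokens is any function that maps text to a token count.
    public static List<string> TrimToBudget(
        IReadOnlyList<string> messages,
        int maxTokens,
        Func<string, int> countTokens)
    {
        var kept = new List<string>();
        var used = 0;

        // Walk from newest to oldest and stop at the first message that does not fit.
        for (var i = messages.Count - 1; i >= 0; i--)
        {
            var cost = countTokens(messages[i]);
            if (used + cost > maxTokens)
            {
                break;
            }

            used += cost;
            kept.Add(messages[i]);
        }

        // Restore chronological order.
        kept.Reverse();
        return kept;
    }
}
```

Assuming `CountTokens` takes a string and returns an int, as the usage snippet in this README suggests, the helper could be wired up as `TokenBudget.TrimToBudget(history, 4000, text => encoder.CountTokens(text))`, where `history` and the budget of 4000 are illustrative values.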

Priority place for bugs: https://github.com/tryAGI/LangChain/issues

Priority place for ideas and general questions: https://github.com/tryAGI/LangChain/discussions

Discord: https://discord.gg/Ca2xhfBf3v

Alternative AI tools for Tiktoken

Similar Open Source Tools

Chinese-LLaMA-Alpaca

This project open sources the **Chinese LLaMA model and the Alpaca large model fine-tuned with instructions**, to further promote the open research of large models in the Chinese NLP community. These models **extend the Chinese vocabulary based on the original LLaMA** and use Chinese data for secondary pre-training, further enhancing the basic Chinese semantic understanding ability. At the same time, the Chinese Alpaca model further uses Chinese instruction data for fine-tuning, significantly improving the model's understanding and execution of instructions.

ai-app

The 'ai-app' repository is a comprehensive collection of tools and resources related to artificial intelligence, focusing on topics such as server environment setup, PyCharm and Anaconda installation, large model deployment and training, Transformer principles, RAG technology, vector databases, AI image, voice, and music generation, and AI Agent frameworks. It also includes practical guides and tutorials on implementing various AI applications. The repository serves as a valuable resource for individuals interested in exploring different aspects of AI technology.

llms-from-scratch-cn

This repository provides a detailed tutorial on how to build your own large language model (LLM) from scratch. It includes all the code necessary to create a GPT-like LLM, covering the encoding, pre-training, and fine-tuning processes. The tutorial is written in a clear and concise style, with plenty of examples and illustrations to help you understand the concepts involved. It is suitable for developers and researchers with some programming experience who are interested in learning more about LLMs and how to build them.

teaching-boyfriend-llm

The 'teaching-boyfriend-llm' repository contains study notes on LLM (Large Language Models) for the purpose of advancing towards AGI (Artificial General Intelligence). The notes are a collaborative effort towards understanding and implementing LLM technology.

MindChat

MindChat is a psychological large language model designed to help individuals relieve psychological stress and resolve mental confusion, ultimately improving mental health. It aims to provide a relaxed and open conversation environment in which users can build trust and understanding. MindChat offers private, warm, safe, timely, and convenient conversations to help users overcome difficulties and challenges and achieve self-growth and development. The tool is suitable for both work and personal life scenarios, providing comprehensive psychological support and therapeutic assistance while strictly protecting user privacy. It combines psychological knowledge with artificial intelligence technology to contribute to a healthier, more inclusive, and more equal society.

DISC-LawLLM

DISC-LawLLM is a legal domain large model that aims to provide professional, intelligent, and comprehensive **legal services** to users. It is developed and open-sourced by the Data Intelligence and Social Computing Lab (Fudan-DISC) at Fudan University.

VoiceBench

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

gpt_server

The GPT Server project builds on the basic capabilities of FastChat to provide the capabilities of an OpenAI server. It adapts additional models, improves support for models that have poor compatibility in FastChat, and supports loading vllm, LMDeploy, and hf in various ways. It also supports all sentence_transformers compatible semantic vector models, Chat templates with function roles, Function Calling (Tools) capability, and multi-modal large models. The project aims to reduce the difficulty of model adaptation and project usage, making it easier to deploy the latest models with minimal code changes.

Chinese-LLaMA-Alpaca-2

Chinese-LLaMA-Alpaca-2 is a Chinese large language model project built on Meta's Llama-2 and further trained on a large dataset of Chinese text. Key features:

* It includes a 13-billion-parameter model.
* It is trained on a massive dataset of Chinese text, including books, news articles, and social media posts.
* It can be used for a variety of natural language processing tasks, including text generation, question answering, and machine translation.
* It is open-source and available for anyone to use.

It is a resource for researchers and developers working in the field of artificial intelligence.

JiwuChat

JiwuChat is a lightweight multi-platform chat application built on Tauri2 and Nuxt3, with various real-time messaging features, AI group chat bots (such as 'iFlytek Spark', 'KimiAI' etc.), WebRTC audio-video calling, screen sharing, and AI shopping functions. It supports seamless cross-device communication, covering text, images, files, and voice messages, also supporting group chats and customizable settings. It provides light/dark mode for efficient social networking.

easy-learn-ai

Easy AI is a modern web application platform focused on AI education, aiming to help users understand complex artificial intelligence concepts through a concise and intuitive approach. The platform integrates multiple learning modules, providing a comprehensive AI knowledge system from basic concepts to practical applications.

Awesome-LLM-Eval

Awesome-LLM-Eval: a curated list of tools, benchmarks, demos, papers for Large Language Models (like ChatGPT, LLaMA, GLM, Baichuan, etc) Evaluation on Language capabilities, Knowledge, Reasoning, Fairness and Safety.

KeepChatGPT

KeepChatGPT is a plugin designed to enhance the data security capabilities and efficiency of ChatGPT. It aims to make your chat experience incredibly smooth, eliminating dozens or even hundreds of unnecessary steps, and permanently getting rid of various errors and warnings. It offers innovative features such as automatic refresh, activity maintenance, data security, audit cancellation, conversation cloning, endless conversations, page purification, large screen display, full screen display, tracking interception, rapid changes, and detailed insights. The plugin ensures that your AI experience is secure, smooth, efficient, concise, and seamless.

sanic-web

Sanic-Web is a lightweight, end-to-end, and easily customizable large model application project built on technologies such as Dify, Ollama & Vllm, Sanic, and Text2SQL. It provides a one-stop solution for developing large model applications, supporting graphical data-driven Q&A using ECharts, handling table-based Q&A with CSV files, and integrating with third-party RAG systems for general knowledge Q&A. As a lightweight framework, Sanic-Web enables rapid iteration and extension to facilitate the quick implementation of large model projects.

XiaoFeiShu

XiaoFeiShu is a specialized automation software developed closely following the quality user rules of Xiaohongshu. It provides a set of automation workflows for Xiaohongshu operations, avoiding the issues of traditional RPA being mechanical, rule-based, and easily detected. The software is easy to use, with simple operation and powerful functionality.

For similar tasks

arena-hard-auto

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. It has the highest correlation and separability to Chatbot Arena among popular open-ended LLM benchmarks. Users can evaluate their models' performance on Chatbot Arena by using Arena-Hard-Auto.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

ai-hub

AI Hub Project aims to continuously test and evaluate mainstream large language models, while accumulating and managing various effective model invocation prompts. It has integrated all mainstream large language models in China, including OpenAI GPT-4 Turbo, Baidu ERNIE-Bot-4, Tencent ChatPro, MiniMax abab5.5-chat, and more. The project plans to continuously track, integrate, and evaluate new models. Users can access the models through REST services or Java code integration. The project also provides a testing suite for translation, coding, and benchmark testing.

long-context-attention

Long-Context-Attention (YunChang) is a unified sequence parallel approach that combines the strengths of DeepSpeed-Ulysses-Attention and Ring-Attention to provide a versatile and high-performance solution for long context LLM model training and inference. It addresses the limitations of both methods by offering no limitation on the number of heads, compatibility with advanced parallel strategies, and enhanced performance benchmarks. The tool is verified in Megatron-LM and offers best practices for 4D parallelism, making it suitable for various attention mechanisms and parallel computing advancements.

marlin

Marlin is a highly optimized FP16xINT4 matmul kernel designed for large language model (LLM) inference, offering close to ideal speedups up to batch sizes of 16-32 tokens. It is suitable for larger-scale serving, speculative decoding, and advanced multi-inference schemes like CoT-Majority. Marlin achieves optimal performance by utilizing various techniques and optimizations to fully leverage GPU resources, ensuring efficient computation and memory management.

MMC

This repository, MMC, focuses on advancing multimodal chart understanding through large-scale instruction tuning. It introduces a dataset supporting various tasks and chart types, a benchmark for evaluating reasoning capabilities over charts, and an assistant achieving state-of-the-art performance on chart QA benchmarks. The repository provides data for chart-text alignment, benchmarking, and instruction tuning, along with existing datasets used in experiments. Additionally, it offers a Gradio demo for the MMCA model.

Tiktoken

Tiktoken is a high-performance implementation focused on token count operations. It provides various encodings like o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit. Users can easily encode and decode text using the provided API. The repository also includes a benchmark console app for performance tracking. Contributions in the form of PRs are welcome.

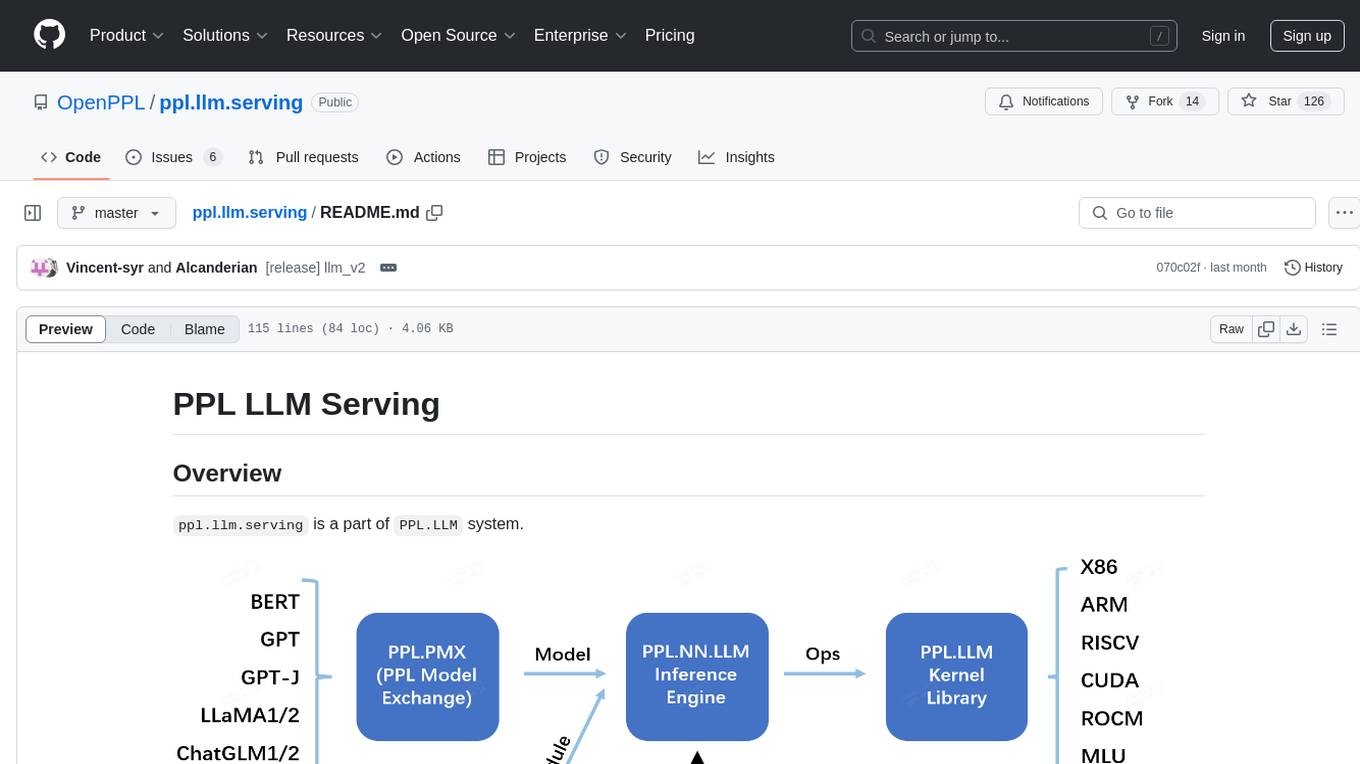

ppl.llm.serving

ppl.llm.serving is a serving component for Large Language Models (LLMs) within the PPL.LLM system. It provides a server based on gRPC and supports inference for LLaMA. The repository includes instructions for prerequisites, quick start guide, model exporting, server setup, client usage, benchmarking, and offline inference. Users can refer to the LLaMA Guide for more details on using this serving component.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.