Awesome-Jailbreak-on-LLMs

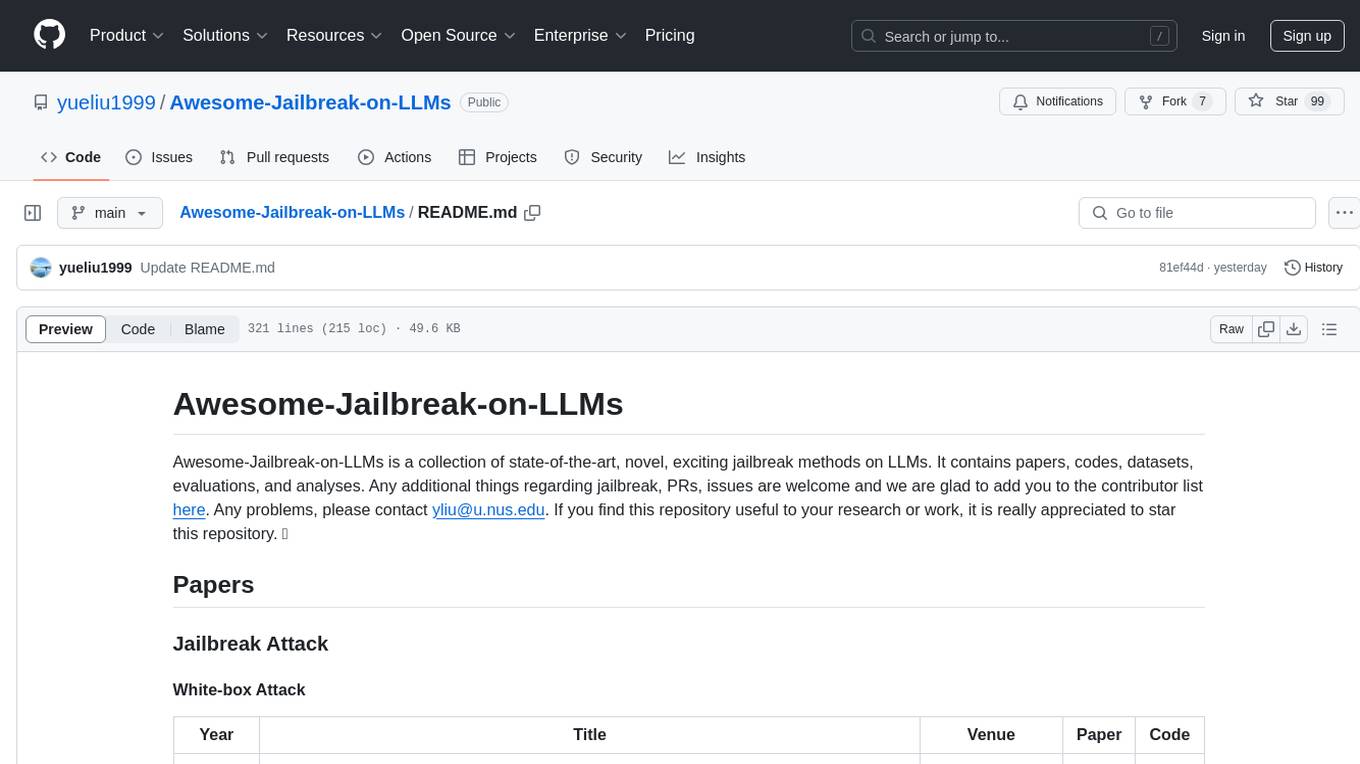


Stars: 507

Awesome-Jailbreak-on-LLMs is a collection of state-of-the-art, novel, and exciting jailbreak methods on Large Language Models (LLMs). The repository contains papers, code, datasets, evaluations, and analyses related to jailbreak attacks on LLMs. It serves as a comprehensive resource for researchers and practitioners interested in exploring various jailbreak techniques and defenses in the context of LLMs. Contributions such as additional jailbreak-related content, pull requests, and issue reports are welcome, and contributors are acknowledged. For any inquiries or issues, contact [email protected]. If you find this repository useful for your research or work, consider starring it to show appreciation.

README:

Awesome-Jailbreak-on-LLMs is a collection of state-of-the-art, novel, and exciting jailbreak methods on LLMs. It contains papers, code, datasets, evaluations, and analyses. Additional jailbreak-related content, PRs, and issues are welcome, and we are glad to add you to the contributor list. For any problems, please contact [email protected]. If you find this repository useful for your research or work, please consider starring it and citing our papers. ✨

| Time | Title | Venue | Paper | Code |
|---|---|---|---|---|
| 2025.03 | FlipAttack: Jailbreak LLMs via Flipping (FlipAttack) | ICLR Workshop'25 | link | link |
| 2024.11 | The Dark Side of Trust: Authority Citation-Driven Jailbreak Attacks on Large Language Models | arXiv | link | link |
| 2024.11 | Playing Language Game with LLMs Leads to Jailbreaking | arXiv | link | link |
| 2024.11 | GASP: Efficient Black-Box Generation of Adversarial Suffixes for Jailbreaking LLMs (GASP) | arXiv | link | link |
| 2024.11 | LLM STINGER: Jailbreaking LLMs using RL fine-tuned LLMs | arXiv | link | - |
| 2024.11 | SequentialBreak: Large Language Models Can be Fooled by Embedding Jailbreak Prompts into Sequential Prompt | arXiv | link | link |
| 2024.11 | Diversity Helps Jailbreak Large Language Models | arXiv | link | - |
| 2024.11 | Plentiful Jailbreaks with String Compositions | arXiv | link | - |
| 2024.11 | Transferable Ensemble Black-box Jailbreak Attacks on Large Language Models | arXiv | link | link |
| 2024.11 | Stealthy Jailbreak Attacks on Large Language Models via Benign Data Mirroring | arXiv | link | - |
| 2024.10 | Endless Jailbreaks with Bijection | arXiv | link | - |
| 2024.10 | Harnessing Task Overload for Scalable Jailbreak Attacks on Large Language Models | arXiv | link | - |
| 2024.10 | You Know What I'm Saying: Jailbreak Attack via Implicit Reference | arXiv | link | link |
| 2024.10 | Deciphering the Chaos: Enhancing Jailbreak Attacks via Adversarial Prompt Translation | arXiv | link | link |
| 2024.10 | AutoDAN-Turbo: A Lifelong Agent for Strategy Self-Exploration to Jailbreak LLMs (AutoDAN-Turbo) | arXiv | link | link |
| 2024.10 | PathSeeker: Exploring LLM Security Vulnerabilities with a Reinforcement Learning-Based Jailbreak Approach (PathSeeker) | arXiv | link | - |
| 2024.10 | Read Over the Lines: Attacking LLMs and Toxicity Detection Systems with ASCII Art to Mask Profanity | arXiv | link | link |
| 2024.09 | AdaPPA: Adaptive Position Pre-Fill Jailbreak Attack Approach Targeting LLMs | arXiv | link | link |
| 2024.09 | Effective and Evasive Fuzz Testing-Driven Jailbreaking Attacks against LLMs | arXiv | link | - |
| 2024.09 | Jailbreaking Large Language Models with Symbolic Mathematics | arXiv | link | - |
| 2024.08 | Advancing Adversarial Suffix Transfer Learning on Aligned Large Language Models | arXiv | link | - |
| 2024.08 | Hide Your Malicious Goal Into Benign Narratives: Jailbreak Large Language Models through Neural Carrier Articles | arXiv | link | - |
| 2024.08 | h4rm3l: A Dynamic Benchmark of Composable Jailbreak Attacks for LLM Safety Assessment (h4rm3l) | arXiv | link | link |
| 2024.08 | EnJa: Ensemble Jailbreak on Large Language Models (EnJa) | arXiv | link | - |
| 2024.07 | Knowledge-to-Jailbreak: One Knowledge Point Worth One Attack | arXiv | link | link |
| 2024.07 | Single Character Perturbations Break LLM Alignment | arXiv | link | link |
| 2024.07 | A False Sense of Safety: Unsafe Information Leakage in 'Safe' AI Responses | arXiv | link | - |
| 2024.07 | Virtual Context: Enhancing Jailbreak Attacks with Special Token Injection (Virtual Context) | arXiv | link | - |
| 2024.07 | SoP: Unlock the Power of Social Facilitation for Automatic Jailbreak Attack (SoP) | arXiv | link | link |
| 2024.06 | Improved Few-Shot Jailbreaking Can Circumvent Aligned Language Models and Their Defenses (I-FSJ) | NeurIPS'24 | link | link |
| 2024.06 | When LLM Meets DRL: Advancing Jailbreaking Efficiency via DRL-guided Search (RLbreaker) | NeurIPS'24 | link | - |
| 2024.06 | Agent Smith: A Single Image Can Jailbreak One Million Multimodal LLM Agents Exponentially Fast (Agent Smith) | ICML'24 | link | link |
| 2024.06 | Covert Malicious Finetuning: Challenges in Safeguarding LLM Adaptation | ICML'24 | link | - |
| 2024.06 | ArtPrompt: ASCII Art-based Jailbreak Attacks against Aligned LLMs (ArtPrompt) | ACL'24 | link | link |
| 2024.06 | From Noise to Clarity: Unraveling the Adversarial Suffix of Large Language Model Attacks via Translation of Text Embeddings (ASETF) | arXiv | link | - |
| 2024.06 | CodeAttack: Revealing Safety Generalization Challenges of Large Language Models via Code Completion (CodeAttack) | ACL'24 | link | - |
| 2024.06 | Making Them Ask and Answer: Jailbreaking Large Language Models in Few Queries via Disguise and Reconstruction (DRA) | USENIX Security'24 | link | link |
| 2024.06 | AutoJailbreak: Exploring Jailbreak Attacks and Defenses through a Dependency Lens (AutoJailbreak) | arXiv | link | - |
| 2024.06 | Jailbreaking Leading Safety-Aligned LLMs with Simple Adaptive Attacks | arXiv | link | link |
| 2024.06 | GPTFUZZER: Red Teaming Large Language Models with Auto-Generated Jailbreak Prompts (GPTFUZZER) | arXiv | link | link |
| 2024.06 | A Wolf in Sheep’s Clothing: Generalized Nested Jailbreak Prompts can Fool Large Language Models Easily (ReNeLLM) | NAACL'24 | link | link |
| 2024.06 | QROA: A Black-Box Query-Response Optimization Attack on LLMs (QROA) | arXiv | link | link |
| 2024.06 | Poisoned LangChain: Jailbreak LLMs by LangChain (PLC) | arXiv | link | link |
| 2024.05 | Multilingual Jailbreak Challenges in Large Language Models | ICLR'24 | link | link |
| 2024.05 | DeepInception: Hypnotize Large Language Model to Be Jailbreaker (DeepInception) | EMNLP'24 | link | link |
| 2024.05 | GPT-4 Jailbreaks Itself with Near-Perfect Success Using Self-Explanation (IRIS) | ACL'24 | link | - |
| 2024.05 | GUARD: Role-playing to Generate Natural-language Jailbreakings to Test Guideline Adherence of LLMs (GUARD) | arXiv | link | - |
| 2024.05 | "Do Anything Now": Characterizing and Evaluating In-The-Wild Jailbreak Prompts on Large Language Models (DAN) | CCS'24 | link | link |
| 2024.05 | GPT-4 Is Too Smart to Be Safe: Stealthy Chat with LLMs via Cipher (SelfCipher) | ICLR'24 | link | link |
| 2024.05 | Jailbreaking Large Language Models Against Moderation Guardrails via Cipher Characters (JAM) | NeurIPS'24 | link | - |
| 2024.05 | Jailbreak and Guard Aligned Language Models with Only Few In-Context Demonstrations (ICA) | arXiv | link | - |
| 2024.04 | Many-shot jailbreaking (MSJ) | NeurIPS'24 Anthropic | link | - |
| 2024.04 | PANDORA: Detailed LLM jailbreaking via collaborated phishing agents with decomposed reasoning (PANDORA) | ICLR Workshop'24 | link | - |
| 2024.04 | FuzzLLM: A novel and universal fuzzing framework for proactively discovering jailbreak vulnerabilities in large language models (FuzzLLM) | ICASSP'24 | link | link |
| 2024.04 | Sandwich attack: Multi-language mixture adaptive attack on LLMs (Sandwich attack) | TrustNLP'24 | link | - |
| 2024.03 | Tastle: Distract large language models for automatic jailbreak attack (TASTLE) | arXiv | link | - |
| 2024.03 | DrAttack: Prompt Decomposition and Reconstruction Makes Powerful LLM Jailbreakers (DrAttack) | EMNLP'24 | link | link |
| 2024.02 | PRP: Propagating Universal Perturbations to Attack Large Language Model Guard-Rails (PRP) | arXiv | link | - |
| 2024.02 | CodeChameleon: Personalized Encryption Framework for Jailbreaking Large Language Models (CodeChameleon) | arXiv | link | link |
| 2024.02 | PAL: Proxy-Guided Black-Box Attack on Large Language Models (PAL) | arXiv | link | link |
| 2024.02 | Jailbreaking Proprietary Large Language Models using Word Substitution Cipher | arXiv | link | - |
| 2024.02 | Query-Based Adversarial Prompt Generation | arXiv | link | - |
| 2024.02 | Leveraging the Context through Multi-Round Interactions for Jailbreaking Attacks (Contextual Interaction Attack) | arXiv | link | - |
| 2024.02 | Semantic Mirror Jailbreak: Genetic Algorithm Based Jailbreak Prompts Against Open-source LLMs (SMJ) | arXiv | link | - |
| 2024.02 | Cognitive Overload: Jailbreaking Large Language Models with Overloaded Logical Thinking | NAACL'24 | link | link |
| 2024.01 | Low-Resource Languages Jailbreak GPT-4 | NeurIPS Workshop'24 | link | - |
| 2024.01 | How Johnny Can Persuade LLMs to Jailbreak Them: Rethinking Persuasion to Challenge AI Safety by Humanizing LLMs (PAP) | ACL'24 | link | link |
| 2023.12 | Tree of Attacks: Jailbreaking Black-Box LLMs Automatically (TAP) | NeurIPS'24 | link | link |
| 2023.12 | Make Them Spill the Beans! Coercive Knowledge Extraction from (Production) LLMs | arXiv | link | - |
| 2023.12 | Ignore This Title and HackAPrompt: Exposing Systemic Vulnerabilities of LLMs through a Global Scale Prompt Hacking Competition | ACL'24 | link | - |
| 2023.11 | Scalable and Transferable Black-Box Jailbreaks for Language Models via Persona Modulation (Persona) | NeurIPS Workshop'23 | link | - |
| 2023.10 | Jailbreaking Black Box Large Language Models in Twenty Queries (PAIR) | NeurIPS'24 | link | link |
| 2023.10 | Adversarial Demonstration Attacks on Large Language Models (advICL) | EMNLP'24 | link | - |
| 2023.10 | MASTERKEY: Automated Jailbreaking of Large Language Model Chatbots (MASTERKEY) | NDSS'24 | link | link |
| 2023.10 | Attack Prompt Generation for Red Teaming and Defending Large Language Models (SAP) | EMNLP'23 | link | link |
| 2023.10 | An LLM can Fool Itself: A Prompt-Based Adversarial Attack (PromptAttack) | ICLR'24 | link | link |
| 2023.09 | Multi-step Jailbreaking Privacy Attacks on ChatGPT (MJP) | EMNLP Findings'23 | link | link |
| 2023.09 | Open Sesame! Universal Black Box Jailbreaking of Large Language Models (GA) | Applied Sciences'24 | link | - |
| 2023.05 | Not what you’ve signed up for: Compromising Real-World LLM-Integrated Applications with Indirect Prompt Injection | CCS'23 | link | link |
| 2022.11 | Ignore Previous Prompt: Attack Techniques For Language Models (PromptInject) | NeurIPS Workshop'22 | link | link |

| Time | Title | Venue | Paper | Code |
|---|---|---|---|---|
| 2024.11 | AmpleGCG-Plus: A Strong Generative Model of Adversarial Suffixes to Jailbreak LLMs with Higher Success Rates in Fewer Attempts | arXiv | link | - |
| 2024.11 | DROJ: A Prompt-Driven Attack against Large Language Models | arXiv | link | link |
| 2024.11 | SQL Injection Jailbreak: a structural disaster of large language models | arXiv | link | link |
| 2024.10 | Functional Homotopy: Smoothing Discrete Optimization via Continuous Parameters for LLM Jailbreak Attacks | arXiv | link | - |
| 2024.10 | AttnGCG: Enhancing Jailbreaking Attacks on LLMs with Attention Manipulation | arXiv | link | link |
| 2024.10 | Jailbreak Instruction-Tuned LLMs via end-of-sentence MLP Re-weighting | arXiv | link | - |
| 2024.10 | Boosting Jailbreak Transferability for Large Language Models (SI-GCG) | arXiv | link | - |
| 2024.10 | Iterative Self-Tuning LLMs for Enhanced Jailbreaking Capabilities (ADV-LLM) | arXiv | link | link |
| 2024.08 | Probing the Safety Response Boundary of Large Language Models via Unsafe Decoding Path Generation (JVD) | arXiv | link | - |
| 2024.08 | Jailbreak Open-Sourced Large Language Models via Enforced Decoding (EnDec) | ACL'24 | link | - |
| 2024.07 | Refusal in Language Models Is Mediated by a Single Direction | arXiv | link | link |
| 2024.07 | Revisiting Character-level Adversarial Attacks for Language Models | ICML'24 | link | link |
| 2024.07 | Badllama 3: removing safety finetuning from Llama 3 in minutes (Badllama 3) | arXiv | link | - |
| 2024.07 | SOS! Soft Prompt Attack Against Open-Source Large Language Models | arXiv | link | - |
| 2024.06 | COLD-Attack: Jailbreaking LLMs with Stealthiness and Controllability (COLD-Attack) | ICML'24 | link | link |
| 2024.06 | Improved Techniques for Optimization-Based Jailbreaking on Large Language Models (I-GCG) | arXiv | link | link |
| 2024.05 | Semantic-guided Prompt Organization for Universal Goal Hijacking against LLMs | arXiv | link | - |
| 2024.05 | Efficient LLM Jailbreak via Adaptive Dense-to-sparse Constrained Optimization | NeurIPS'24 | link | - |
| 2024.05 | AutoDAN: Generating Stealthy Jailbreak Prompts on Aligned Large Language Models (AutoDAN) | ICLR'24 | link | link |
| 2024.05 | AmpleGCG: Learning a Universal and Transferable Generative Model of Adversarial Suffixes for Jailbreaking Both Open and Closed LLMs (AmpleGCG) | arXiv | link | link |
| 2024.05 | Boosting jailbreak attack with momentum (MAC) | ICLR Workshop'24 | link | link |
| 2024.04 | AdvPrompter: Fast Adaptive Adversarial Prompting for LLMs (AdvPrompter) | arXiv | link | link |
| 2024.03 | Universal Jailbreak Backdoors from Poisoned Human Feedback | ICLR'24 | link | - |
| 2024.02 | Attacking large language models with projected gradient descent (PGD) | arXiv | link | - |
| 2024.02 | Open the Pandora's Box of LLMs: Jailbreaking LLMs through Representation Engineering (JRE) | arXiv | link | - |
| 2024.02 | Curiosity-driven red-teaming for large language models (CRT) | arXiv | link | link |
| 2023.12 | AutoDAN: Interpretable Gradient-Based Adversarial Attacks on Large Language Models (AutoDAN) | arXiv | link | link |
| 2023.10 | Catastrophic jailbreak of open-source LLMs via exploiting generation | ICLR'24 | link | link |
| 2023.07 | Universal and Transferable Adversarial Attacks on Aligned Language Models (GCG) | arXiv | link | link |
| 2023.06 | Automatically Auditing Large Language Models via Discrete Optimization (ARCA) | ICML'23 | link | link |

| Time | Title | Venue | Paper | Code |
|---|---|---|---|---|
| 2024.11 | MRJ-Agent: An Effective Jailbreak Agent for Multi-Round Dialogue | arXiv | link | - |
| 2024.10 | Jigsaw Puzzles: Splitting Harmful Questions to Jailbreak Large Language Models (JSP) | arXiv | link | link |
| 2024.10 | Multi-round jailbreak attack on large language | arXiv | link | - |
| 2024.10 | Derail Yourself: Multi-turn LLM Jailbreak Attack through Self-discovered Clues | arXiv | link | link |
| 2024.09 | LLM Defenses Are Not Robust to Multi-Turn Human Jailbreaks Yet | arXiv | link | link |
| 2024.09 | RED QUEEN: Safeguarding Large Language Models against Concealed Multi-Turn Jailbreaking | arXiv | link | link |
| 2024.08 | FRACTURED-SORRY-Bench: Framework for Revealing Attacks in Conversational Turns Undermining Refusal Efficacy and Defenses over SORRY-Bench (Automated Multi-shot Jailbreaks) | arXiv | link | - |
| 2024.08 | Emerging Vulnerabilities in Frontier Models: Multi-Turn Jailbreak Attacks | arXiv | link | link |
| 2024.05 | CoA: Context-Aware based Chain of Attack for Multi-Turn Dialogue LLM (CoA) | arXiv | link | link |
| 2024.04 | Great, Now Write an Article About That: The Crescendo Multi-Turn LLM Jailbreak Attack (Crescendo) | Microsoft Azure | link | - |

| Time | Title | Venue | Paper | Code |
|---|---|---|---|---|
| 2024.09 | Unleashing Worms and Extracting Data: Escalating the Outcome of Attacks against RAG-based Inference in Scale and Severity Using Jailbreaking | arXiv | link | link |
| 2024.02 | Pandora: Jailbreak GPTs by Retrieval Augmented Generation Poisoning (Pandora) | arXiv | link | - |

| Time | Title | Venue | Paper | Code |
|---|---|---|---|---|
| 2024.11 | Jailbreak Attacks and Defenses against Multimodal Generative Models: A Survey | arXiv | link | link |
| 2024.10 | Chain-of-Jailbreak Attack for Image Generation Models via Editing Step by Step | arXiv | link | - |
| 2024.10 | ColJailBreak: Collaborative Generation and Editing for Jailbreaking Text-to-Image Deep Generation | NeurIPS'24 | link | - |
| 2024.08 | Jailbreaking Text-to-Image Models with LLM-Based Agents (Atlas) | arXiv | link | - |
| 2024.07 | Image-to-Text Logic Jailbreak: Your Imagination can Help You Do Anything | arXiv | link | - |
| 2024.06 | Jailbreak Vision Language Models via Bi-Modal Adversarial Prompt | arXiv | link | link |
| 2024.05 | Voice Jailbreak Attacks Against GPT-4o | arXiv | link | link |
| 2024.05 | Automatic Jailbreaking of the Text-to-Image Generative AI Systems | ICML Workshop'24 | link | link |
| 2024.04 | Image hijacks: Adversarial images can control generative models at runtime | arXiv | link | link |
| 2024.03 | An image is worth 1000 lies: Adversarial transferability across prompts on vision-language models (CroPA) | ICLR'24 | link | link |
| 2024.03 | Jailbreak in pieces: Compositional adversarial attacks on multi-modal language model | ICLR'24 | link | - |
| 2024.03 | Rethinking model ensemble in transfer-based adversarial attacks | ICLR'24 | link | link |
| 2024.03 | Visual Adversarial Examples Jailbreak Aligned Large Language Models | AAAI'24 | link | - |
| 2024.02 | VLATTACK: Multimodal Adversarial Attacks on Vision-Language Tasks via Pre-trained Models | NeurIPS'23 | link | link |
| 2024.02 | Jailbreaking Attack against Multimodal Large Language Model | arXiv | link | - |
| 2024.01 | Jailbreaking GPT-4V via Self-Adversarial Attacks with System Prompts | arXiv | link | - |
| 2023.12 | OT-Attack: Enhancing Adversarial Transferability of Vision-Language Models via Optimal Transport Optimization (OT-Attack) | arXiv | link | - |
| 2023.12 | FigStep: Jailbreaking Large Vision-language Models via Typographic Visual Prompts (FigStep) | arXiv | link | link |
| 2023.11 | SneakyPrompt: Jailbreaking Text-to-image Generative Models | S&P'24 | link | link |
| 2023.11 | On Evaluating Adversarial Robustness of Large Vision-Language Models | NeurIPS'23 | link | link |
| 2023.10 | How Robust is Google's Bard to Adversarial Image Attacks? | arXiv | link | link |
| 2023.08 | AdvCLIP: Downstream-agnostic Adversarial Examples in Multimodal Contrastive Learning (AdvCLIP) | ACM MM'23 | link | link |
| 2023.07 | Set-level Guidance Attack: Boosting Adversarial Transferability of Vision-Language Pre-training Models (SGA) | ICCV'23 | link | link |
| 2023.07 | On the Adversarial Robustness of Multi-Modal Foundation Models | ICCV Workshop'23 | link | - |
| 2022.10 | Towards Adversarial Attack on Vision-Language Pre-training Models | arXiv | link | link |

| Time | Title | Venue | Paper | Code |
|---|---|---|---|---|
| 2024.12 | Shaping the Safety Boundaries: Understanding and Defending Against Jailbreaks in Large Language Models | arXiv | link | - |
| 2024.10 | Safety-Aware Fine-Tuning of Large Language Models | arXiv | link | - |
| 2024.10 | MoJE: Mixture of Jailbreak Experts, Naive Tabular Classifiers as Guard for Prompt Attacks | AAAI'24 | link | - |
| 2024.08 | BaThe: Defense against the Jailbreak Attack in Multimodal Large Language Models by Treating Harmful Instruction as Backdoor Trigger (BaThe) | arXiv | link | - |
| 2024.07 | DART: Deep Adversarial Automated Red Teaming for LLM Safety | arXiv | link | - |
| 2024.07 | Eraser: Jailbreaking Defense in Large Language Models via Unlearning Harmful Knowledge (Eraser) | arXiv | link | link |
| 2024.07 | Safe Unlearning: A Surprisingly Effective and Generalizable Solution to Defend Against Jailbreak Attacks | arXiv | link | link |
| 2024.06 | Adversarial Tuning: Defending Against Jailbreak Attacks for LLMs | arXiv | link | - |
| 2024.06 | Jatmo: Prompt Injection Defense by Task-Specific Finetuning (Jatmo) | arXiv | link | link |
| 2024.06 | Defending Large Language Models Against Jailbreaking Attacks Through Goal Prioritization | ACL'24 | link | link |
| 2024.06 | Mitigating Fine-tuning based Jailbreak Attack with Backdoor Enhanced Safety Alignment | NeurIPS'24 | link | link |
| 2024.06 | On Prompt-Driven Safeguarding for Large Language Models (DRO) | ICML'24 | link | link |
| 2024.06 | Robust Prompt Optimization for Defending Language Models Against Jailbreaking Attacks (RPO) | NeurIPS'24 | link | - |
| 2024.06 | Fight Back Against Jailbreaking via Prompt Adversarial Tuning (PAT) | NeurIPS'24 | link | link |
| 2024.05 | Towards Comprehensive and Efficient Post Safety Alignment of Large Language Models via Safety Patching (SAFEPATCHING) | arXiv | link | - |
| 2024.05 | Detoxifying Large Language Models via Knowledge Editing (DINM) | ACL'24 | link | link |
| 2024.05 | Defending Large Language Models Against Jailbreak Attacks via Layer-specific Editing | arXiv | link | link |
| 2023.11 | MART: Improving LLM Safety with Multi-round Automatic Red-Teaming (MART) | ACL'24 | link | - |
| 2023.11 | Baseline defenses for adversarial attacks against aligned language models | arXiv | link | - |
| 2023.10 | Safe RLHF: Safe reinforcement learning from human feedback | arXiv | link | link |
| 2023.08 | Red-Teaming Large Language Models using Chain of Utterances for Safety-Alignment (RED-INSTRUCT) | arXiv | link | link |
| 2022.04 | Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback | Anthropic | link | - |

| Time | Title | Venue | Paper | Code |
|---|---|---|---|---|
| 2024.11 | Rapid Response: Mitigating LLM Jailbreaks with a Few Examples | arXiv | link | link |
| 2024.10 | RePD: Defending Jailbreak Attack through a Retrieval-based Prompt Decomposition Process (RePD) | arXiv | link | - |
| 2024.10 | Guide for Defense (G4D): Dynamic Guidance for Robust and Balanced Defense in Large Language Models (G4D) | arXiv | link | link |
| 2024.10 | Jailbreak Antidote: Runtime Safety-Utility Balance via Sparse Representation Adjustment in Large Language Models | arXiv | link | - |
| 2024.09 | HSF: Defending against Jailbreak Attacks with Hidden State Filtering | arXiv | link | link |
| 2024.08 | EEG-Defender: Defending against Jailbreak through Early Exit Generation of Large Language Models (EEG-Defender) | arXiv | link | - |
| 2024.08 | Prefix Guidance: A Steering Wheel for Large Language Models to Defend Against Jailbreak Attacks (PG) | arXiv | link | link |
| 2024.08 | Self-Evaluation as a Defense Against Adversarial Attacks on LLMs (Self-Evaluation) | arXiv | link | link |
| 2024.06 | Defending LLMs against Jailbreaking Attacks via Backtranslation (Backtranslation) | ACL Findings'24 | link | link |
| 2024.06 | SafeDecoding: Defending against Jailbreak Attacks via Safety-Aware Decoding (SafeDecoding) | ACL'24 | link | link |
| 2024.06 | Defending Against Alignment-Breaking Attacks via Robustly Aligned LLM | ACL'24 | link | - |
| 2024.06 | A Wolf in Sheep’s Clothing: Generalized Nested Jailbreak Prompts can Fool Large Language Models Easily (ReNeLLM) | NAACL'24 | link | link |
| 2024.06 | SMOOTHLLM: Defending Large Language Models Against Jailbreaking Attacks | arXiv | link | link |
| 2024.05 | Enhancing Large Language Models Against Inductive Instructions with Dual-critique Prompting (Dual-critique) | ACL'24 | link | link |
| 2024.05 | PARDEN, Can You Repeat That? Defending against Jailbreaks via Repetition (PARDEN) | ICML'24 | link | link |
| 2024.05 | LLM Self Defense: By Self Examination, LLMs Know They Are Being Tricked | ICLR Tiny Paper'24 | link | link |
| 2024.05 | GradSafe: Detecting Unsafe Prompts for LLMs via Safety-Critical Gradient Analysis (GradSafe) | ACL'24 | link | link |
| 2024.05 | Multilingual Jailbreak Challenges in Large Language Models | ICLR'24 | link | link |
| 2024.05 | Gradient Cuff: Detecting Jailbreak Attacks on Large Language Models by Exploring Refusal Loss Landscapes | NeurIPS'24 | link | - |
| 2024.05 | AutoDefense: Multi-Agent LLM Defense against Jailbreak Attacks | arXiv | link | link |
| 2024.05 | Bergeron: Combating adversarial attacks through a conscience-based alignment framework (Bergeron) | arXiv | link | link |
| 2024.05 | Jailbreak and Guard Aligned Language Models with Only Few In-Context Demonstrations (ICD) | arXiv | link | - |
| 2024.04 | Protecting your LLMs with information bottleneck | NeurIPS'24 | link | link |
| 2024.04 | Pruning for Protection: Increasing Jailbreak Resistance in Aligned LLMs Without Fine-Tuning | arXiv | link | link |
| 2024.02 | Certifying LLM Safety against Adversarial Prompting | arXiv | link | link |
| 2024.02 | Break the Breakout: Reinventing LM Defense Against Jailbreak Attacks with Self-Refinement | arXiv | link | - |
| 2024.02 | Defending large language models against jailbreak attacks via semantic smoothing (SEMANTICSMOOTH) | arXiv | link | link |
| 2024.01 | Intention Analysis Makes LLMs A Good Jailbreak Defender (IA) | arXiv | link | link |
| 2024.01 | How Johnny Can Persuade LLMs to Jailbreak Them: Rethinking Persuasion to Challenge AI Safety by Humanizing LLMs (PAP) | ACL'24 | link | link |
| 2023.12 | Defending ChatGPT against jailbreak attack via self-reminders (Self-Reminder) | Nature Machine Intelligence | link | link |
| 2023.11 | Detecting language model attacks with perplexity | arXiv | link | - |
| 2023.10 | RAIN: Your Language Models Can Align Themselves without Finetuning (RAIN) | ICLR'24 | link | link |

| Time | Title | Venue | Paper | Code |
|---|---|---|---|---|
| 2025.03 | GuardReasoner: Towards Reasoning-based LLM Safeguards (GuardReasoner) | ICLR Workshop'25 | link | link |
| 2025.02 | Constitutional Classifiers: Defending against Universal Jailbreaks across Thousands of Hours of Red Teaming | arXiv | link | - |
| 2024.12 | Lightweight Safety Classification Using Pruned Language Models (Sentence-BERT) | arXiv | link | - |
| 2024.11 | GuardFormer: Guardrail Instruction Pretraining for Efficient SafeGuarding (GuardFormer) | Meta | link | - |
| 2024.11 | Llama Guard 3 Vision: Safeguarding Human-AI Image Understanding Conversations (LLaMA Guard 3 Vision) | Meta | link | link |
| 2024.11 | AEGIS2.0: A Diverse AI Safety Dataset and Risks Taxonomy for Alignment of LLM Guardrails (Aegis2.0) | Nvidia, NeurIPS'24 Workshop | link | - |
| 2024.11 | Lightweight Safety Guardrails Using Fine-tuned BERT Embeddings (Sentence-BERT) | arXiv | link | - |
| 2024.11 | STAND-Guard: A Small Task-Adaptive Content Moderation Model (STAND-Guard) | Microsoft | link | - |
| 2024.10 | VLMGuard: Defending VLMs against Malicious Prompts via Unlabeled Data | arXiv | link | - |
| 2024.09 | AEGIS: Online Adaptive AI Content Safety Moderation with Ensemble of LLM Experts (Aegis) | Nvidia | link | link |
| 2024.09 | Llama 3.2: Revolutionizing edge AI and vision with open, customizable models (LLaMA Guard 3) | Meta | link | link |
| 2024.08 | ShieldGemma: Generative AI Content Moderation Based on Gemma (ShieldGemma) | arXiv | link | link |
| 2024.07 | WildGuard: Open One-Stop Moderation Tools for Safety Risks, Jailbreaks, and Refusals of LLMs (WildGuard) | NeurIPS'24 | link | link |
| 2024.06 | GuardAgent: Safeguard LLM Agents by a Guard Agent via Knowledge-Enabled Reasoning (GuardAgent) | arXiv | link | - |
| 2024.06 | R2-Guard: Robust Reasoning Enabled LLM Guardrail via Knowledge-Enhanced Logical Reasoning (R2-Guard) | arXiv | link | link |
| 2024.04 | Llama Guard 2 | Meta | link | link |
| 2024.03 | AdaShield: Safeguarding Multimodal Large Language Models from Structure-based Attack via Adaptive Shield Prompting (AdaShield) | ECCV'24 | link | link |
| 2023.12 | Llama Guard: LLM-based Input-Output Safeguard for Human-AI Conversations (LLaMA Guard) | Meta | link | link |

| Time | Title | Venue | Paper | Code |
|---|---|---|---|---|
| 2023.08 | Using GPT-4 for content moderation (GPT-4) | OpenAI | link | - |
| 2023.02 | A Holistic Approach to Undesired Content Detection in the Real World (OpenAI Moderation Endpoint) | OpenAI, AAAI'23 | link | link |
| 2022.02 | A New Generation of Perspective API: Efficient Multilingual Character-level Transformers (Perspective API) | Google, KDD'22 | link | link |
| - | Azure AI Content Safety | Microsoft Azure | - | link |
| - | Detoxify | unitary.ai | - | link |

| Time | Title | Venue | Paper | Code |

|---|---|---|---|---|

| 2025.02 | GuidedBench: Equipping Jailbreak Evaluation with Guidelines | arXiv | link | link |

| 2024.12 | Agent-SafetyBench: Evaluating the Safety of LLM Agents | arXiv | link | link |

| 2024.11 | Global Challenge for Safe and Secure LLMs Track 1 | arXiv | link | - |

| 2024.11 | JailbreakLens: Interpreting Jailbreak Mechanism in the Lens of Representation and Circuit | arXiv | link | - |

| 2024.11 | The VLLM Safety Paradox: Dual Ease in Jailbreak Attack and Defense | arXiv | link | - |

| 2024.11 | HarmLevelBench: Evaluating Harm-Level Compliance and the Impact of Quantization on Model Alignment | arXiv | link | - |

| 2024.11 | ChemSafetyBench: Benchmarking LLM Safety on Chemistry Domain | arXiv | link | link |

| 2024.11 | GuardBench: A Large-Scale Benchmark for Guardrail Models | EMNLP'24 | link | link |

| 2024.11 | What Features in Prompts Jailbreak LLMs? Investigating the Mechanisms Behind Attacks | arXiv | Link | link |

| 2024.11 | Benchmarking LLM Guardrails in Handling Multilingual Toxicity | arXiv | link | link |

| 2024.10 | JAILJUDGE: A Comprehensive Jailbreak Judge Benchmark with Multi-Agent Enhanced Explanation Evaluation Framework | arXiv | link | link |

| 2024.10 | Do LLMs Have Political Correctness? Analyzing Ethical Biases and Jailbreak Vulnerabilities in AI Systems | arXiv | link | link |

| 2024.10 | A Realistic Threat Model for Large Language Model Jailbreaks | arXiv | link | link |

| 2024.10 | ADVERSARIAL SUFFIXES MAY BE FEATURES TOO! | arXiv | link | link |

| 2024.09 | JAILJUDGE: A COMPREHENSIVE JAILBREAK | arXiv | Link | Link |

| 2024.09 | Multimodal Pragmatic Jailbreak on Text-to-image Models | arXiv | link | link |

| 2024.08 | ShieldGemma: Generative AI Content Moderation Based on Gemma (ShieldGemma) | arXiv | link | link |

| 2024.08 | MMJ-Bench: A Comprehensive Study on Jailbreak Attacks and Defenses for Vision Language Models (MMJ-Bench) | arXiv | link | link |

| 2024.08 | Mission Impossible: A Statistical Perspective on Jailbreaking LLMs | NeurIPS'24 | Link | - |

| 2024.07 | Operationalizing a Threat Model for Red-Teaming Large Language Models (LLMs) | arXiv | link | link |

| 2024.07 | JailBreakV-28K: A Benchmark for Assessing the Robustness of MultiModal Large Language Models against Jailbreak Attacks | arXiv | link | link |

| 2024.07 | Jailbreak Attacks and Defenses Against Large Language Models: A Survey | arXiv | link | - |

| 2024.06 | "Not Aligned" is Not "Malicious": Being Careful about Hallucinations of Large Language Models' Jailbreak | arXiv | link | link |

| 2024.06 | WildTeaming at Scale: From In-the-Wild Jailbreaks to (Adversarially) Safer Language Models (WildTeaming) | NeurIPS'24 | link | link |

| 2024.06 | From LLMs to MLLMs: Exploring the Landscape of Multimodal Jailbreaking | arXiv | link | - |

| 2024.06 | AI Agents Under Threat: A Survey of Key Security Challenges and Future Pathways | arXiv | link | - |

| 2024.06 | MM-SafetyBench: A Benchmark for Safety Evaluation of Multimodal Large Language Models (MM-SafetyBench) | arXiv | link | - |

| 2024.06 | ArtPrompt: ASCII Art-based Jailbreak Attacks against Aligned LLMs (VITC) | ACL'24 | link | link |

| 2024.06 | Bag of Tricks: Benchmarking of Jailbreak Attacks on LLMs | NeurIPS'24 | link | link |

| 2024.06 | JailbreakZoo: Survey, Landscapes, and Horizons in Jailbreaking Large Language and Vision-Language Models (JailbreakZoo) | arXiv | link | link |

| 2024.06 | Fundamental limitations of alignment in large language models | arXiv | link | - |

| 2024.06 | JailbreakBench: An Open Robustness Benchmark for Jailbreaking Large Language Models (JailbreakBench) | NeurIPS'24 | link | link |

| 2024.06 | Towards Understanding Jailbreak Attacks in LLMs: A Representation Space Analysis | arXiv | link | link |

| 2024.06 | JailbreakEval: An Integrated Toolkit for Evaluating Jailbreak Attempts Against Large Language Models (JailbreakEval) | arXiv | link | link |

| 2024.05 | Rethinking How to Evaluate Language Model Jailbreak | arXiv | link | link |

| 2024.05 | Enhancing Large Language Models Against Inductive Instructions with Dual-critique Prompting (INDust) | arXiv | link | link |

| 2024.05 | Prompt Injection attack against LLM-integrated Applications | arXiv | link | - |

| 2024.05 | Tricking LLMs into Disobedience: Formalizing, Analyzing, and Detecting Jailbreaks | LREC-COLING'24 | link | link |

| 2024.05 | LLM Jailbreak Attack versus Defense Techniques--A Comprehensive Study | NDSS'24 | link | - |

| 2024.05 | Jailbreaking ChatGPT via Prompt Engineering: An Empirical Study | arXiv | link | - |

| 2024.05 | Detoxifying Large Language Models via Knowledge Editing (SafeEdit) | ACL'24 | link | link |

| 2024.04 | JailbreakLens: Visual Analysis of Jailbreak Attacks Against Large Language Models (JailbreakLens) | arXiv | link | - |

| 2024.03 | How (un)ethical are instruction-centric responses of LLMs? Unveiling the vulnerabilities of safety guardrails to harmful queries (TECHHAZARDQA) | arXiv | link | link |

| 2024.03 | Don’t Listen To Me: Understanding and Exploring Jailbreak Prompts of Large Language Models | USENIX Security | link | - |

| 2024.03 | EasyJailbreak: A Unified Framework for Jailbreaking Large Language Models (EasyJailbreak) | arXiv | link | link |

| 2024.02 | Comprehensive Assessment of Jailbreak Attacks Against LLMs | arXiv | link | - |

| 2024.02 | SPML: A DSL for Defending Language Models Against Prompt Attacks | arXiv | link | - |

| 2024.02 | Coercing LLMs to do and reveal (almost) anything | arXiv | link | - |

| 2024.02 | A StrongREJECT for Empty Jailbreaks (StrongREJECT) | NeurIPS'24 | link | link |

| 2024.02 | ToolSword: Unveiling Safety Issues of Large Language Models in Tool Learning Across Three Stages | ACL'24 | link | link |

| 2024.02 | HarmBench: A Standardized Evaluation Framework for Automated Red Teaming and Robust Refusal (HarmBench) | arXiv | link | link |

| 2023.12 | Goal-Oriented Prompt Attack and Safety Evaluation for LLMs | arXiv | link | link |

| 2023.12 | The Art of Defending: A Systematic Evaluation and Analysis of LLM Defense Strategies on Safety and Over-Defensiveness | arXiv | link | - |

| 2023.12 | A Comprehensive Survey of Attack Techniques, Implementation, and Mitigation Strategies in Large Language Models | UbiSec'23 | link | - |

| 2023.11 | Summon a Demon and Bind it: A Grounded Theory of LLM Red Teaming in the Wild | arXiv | link | - |

| 2023.11 | How Many Unicorns Are in This Image? A Safety Evaluation Benchmark for Vision LLMs | arXiv | link | link |

| 2023.11 | Exploiting Large Language Models (LLMs) through Deception Techniques and Persuasion Principles | arXiv | link | - |

| 2023.10 | Explore, establish, exploit: Red teaming language models from scratch | arXiv | link | - |

| 2023.10 | Survey of Vulnerabilities in Large Language Models Revealed by Adversarial Attacks | arXiv | link | - |

| 2023.10 | Fine-tuning aligned language models compromises safety, even when users do not intend to! (HEx-PHI) | ICLR'24 (oral) | link | link |

| 2023.08 | Red-Teaming Large Language Models using Chain of Utterances for Safety-Alignment (RED-EVAL) | arXiv | link | link |

| 2023.08 | Use of LLMs for Illicit Purposes: Threats, Prevention Measures, and Vulnerabilities | arXiv | link | - |

| 2023.07 | Jailbroken: How Does LLM Safety Training Fail? (Jailbroken) | NeurIPS'23 | link | - |

| 2023.08 | From ChatGPT to ThreatGPT: Impact of Generative AI in Cybersecurity and Privacy | IEEE Access | link | - |

| 2023.07 | LLM Censorship: A Machine Learning Challenge or a Computer Security Problem? | arXiv | link | - |

| 2023.07 | Universal and Transferable Adversarial Attacks on Aligned Language Models (AdvBench) | arXiv | link | link |

| 2023.06 | DecodingTrust: A Comprehensive Assessment of Trustworthiness in GPT Models | NeurIPS'23 | link | link |

| 2023.04 | Safety Assessment of Chinese Large Language Models | arXiv | link | link |

| 2023.02 | Exploiting Programmatic Behavior of LLMs: Dual-Use Through Standard Security Attacks | arXiv | link | - |

| 2022.11 | Red Teaming Language Models to Reduce Harms: Methods, Scaling Behaviors, and Lessons Learned | arXiv | link | - |

| 2022.02 | Red Teaming Language Models with Language Models | arXiv | link | - |

| Time | Title | Venue | Paper | Code |

|---|---|---|---|---|

| 2024.11 | Attacking Vision-Language Computer Agents via Pop-ups | arXiv | link | link |

| 2024.10 | Jailbreaking LLM-Controlled Robots (ROBOPAIR) | arXiv | link | link |

| 2024.10 | SMILES-Prompting: A Novel Approach to LLM Jailbreak Attacks in Chemical Synthesis | arXiv | link | link |

| 2024.10 | Cheating Automatic LLM Benchmarks: Null Models Achieve High Win Rates | arXiv | link | link |

| 2024.09 | RoleBreak: Character Hallucination as a Jailbreak Attack in Role-Playing Systems | arXiv | link | - |

| 2024.08 | A Jailbroken GenAI Model Can Cause Substantial Harm: GenAI-powered Applications are Vulnerable to PromptWares (APwT) | arXiv | link | - |

If you find this repository helpful for your research, please consider citing our papers. ✨

@article{liuyue_GuardReasoner,

title={GuardReasoner: Towards Reasoning-based LLM Safeguards},

author={Liu, Yue and Gao, Hongcheng and Zhai, Shengfang and Xia, Jun and Wu, Tianyi and Xue, Zhiwei and Chen, Yulin and Kawaguchi, Kenji and Zhang, Jiaheng and Hooi, Bryan},

journal={arXiv preprint arXiv:2501.18492},

year={2025}

}

@article{liuyue_FlipAttack,

title={FlipAttack: Jailbreak LLMs via Flipping},

author={Liu, Yue and He, Xiaoxin and Xiong, Miao and Fu, Jinlan and Deng, Shumin and Hooi, Bryan},

journal={arXiv preprint arXiv:2410.02832},

year={2024}

}

Alternative AI tools for Awesome-Jailbreak-on-LLMs

Similar Open Source Tools

Awesome-LLMs-for-Video-Understanding

Awesome-LLMs-for-Video-Understanding is a repository dedicated to exploring Video Understanding with Large Language Models. It provides a comprehensive survey of the field, covering models, pretraining, instruction tuning, and hybrid methods. The repository also includes information on tasks, datasets, and benchmarks related to video understanding. Contributors are encouraged to add new papers, projects, and materials to enhance the repository.

Awesome-Knowledge-Distillation-of-LLMs

A collection of papers related to knowledge distillation of large language models (LLMs). The repository focuses on techniques to transfer advanced capabilities from proprietary LLMs to smaller models, compress open-source LLMs, and refine their performance. It covers various aspects of knowledge distillation, including algorithms, skill distillation, verticalization distillation in fields like law, medical & healthcare, finance, science, and miscellaneous domains. The repository provides a comprehensive overview of the research in the area of knowledge distillation of LLMs.

cool-ai-stuff

This repository contains an uncensored list of free-to-use APIs and sites for several AI models. The list is mainly managed by @zukixa, the queen of zukijourney, so any decisions may have bias! APIs are listed first, followed by the sites.

Warning: We are not endorsing any of the listed services! Some of them might be considered controversial. We are not responsible for any legal, technical or any other damage caused by using the listed services. Data is provided without warranty of any kind. Use these at your own risk!

API list: this list covers only the providers I (@zukixa) was able to collect metrics on. Any mistakes are not my responsibility, as I am either banned or not aware of a given API.

Overview of APIs (user counts last updated 4/14/24):

| Service | # of Users | Link | Stability | NSFW OK? | Open Source? | Owner(s) | Other Notes |
|---|---|---|---|---|---|---|---|
| zukijourney | 4441 | D | High | On /unf/, not /v1/ | ✅, Here | @zukixa | Largest & Oldest GPT-4 API still continuously around. Offers other popular AI-related Bots too. |
| Hyzenberg | 1234 | D | High | Forbidden | ❌ | @thatlukinhasguy & @voidiii | Experimental sister API to Zukijourney. Successor to HentAI |
| NagaAI | 2883 | D | High | Forbidden | ❌ | @zentixua | Honorary successor to ChimeraGPT, the largest API in history (15k users). |
| WebRaftAI | 993 | D | High | Forbidden | ❌ | @ds_gamer | Largest API by model count. Provides a lot of service/hosting related stuff too. |
| KrakenAI | 388 | D | High | Discouraged | ❌ | @paninico | It is an API of all time. |
| ShuttleAI | 3585 | D | Medium | Generally Permitted | ❌ | @xtristan | Faked GPT-4 Before 1, 2 |
| Mandrill | 931 | D | Medium | Enterprise-Tier-Only | ❌ | @fredipy | DALL-E-3 access pioneering API. Has some issues with speed & stability nowadays. |
| oxygen | 742 | D | Medium | Donator-Only | ❌ | @thesketchubuser | Bri'ish 🤮 & Fren'sh 🤮 |
| Skailar | 399 | D | Medium | Forbidden | ❌ | @aquadraws | Service is the personification of the word 'feature creep'. Lots of things announced, not much operational. |

VoiceBench

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

CogVLM2

CogVLM2 is a new generation of open source models that offer significant improvements in benchmarks such as TextVQA and DocVQA. It supports 8K content length, image resolution up to 1344 * 1344, and both Chinese and English languages. The project provides basic calling methods, fine-tuning examples, and OpenAI API format calling examples to help developers quickly get started with the model.

step_into_llm

The 'step_into_llm' repository hosts the MindSpore (昇思) open technology course, which focuses on exploring cutting-edge technologies, combining theory with practice, expert interpretation, open sharing, and support for competitions. The repository contains course materials, including slides and code, for the ongoing second phase of the course. It covers various topics related to large language models (LLMs) such as Transformer, BERT, GPT, GPT2, and more. The course aims to guide developers interested in LLMs from theory to practical implementation, with a special emphasis on the development and application of large models.

MOSS-TTS

MOSS-TTS Family is an open-source speech and sound generation model family designed for high-fidelity, high-expressiveness, and complex real-world scenarios. It includes five production-ready models: MOSS-TTS, MOSS-TTSD, MOSS-VoiceGenerator, MOSS-TTS-Realtime, and MOSS-SoundEffect, each serving specific purposes in speech generation, dialogue, voice design, real-time interactions, and sound effect generation. The models offer features like long-speech generation, fine-grained control over phonemes and duration, multilingual synthesis, voice cloning, and real-time voice agents.

LLamaTuner

LLamaTuner is a repository for the Efficient Finetuning of Quantized LLMs project, focusing on building and sharing instruction-following Chinese baichuan-7b/LLaMA/Pythia/GLM model tuning methods. The project enables multi-round chatbot training on a single NVIDIA RTX 2080 Ti or RTX 3090. It uses bitsandbytes for quantization and is integrated with Hugging Face's PEFT and Transformers libraries. The repository supports various models, training approaches, and datasets for supervised fine-tuning, LoRA, QLoRA, and more. It also provides tools for data preprocessing and offers models on the Hugging Face Hub for inference and finetuning. The project is licensed under Apache 2.0 and acknowledges contributions from various open-source contributors.

LLM4EC

LLM4EC is an interdisciplinary research repository focusing on the intersection of Large Language Models (LLM) and Evolutionary Computation (EC). It provides a comprehensive collection of papers and resources exploring various applications, enhancements, and synergies between LLM and EC. The repository covers topics such as LLM-assisted optimization, EA-based LLM architecture search, and applications in code generation, software engineering, neural architecture search, and other generative tasks. The goal is to facilitate research and development in leveraging LLM and EC for innovative solutions in diverse domains.

llms-from-scratch-cn

This repository provides a detailed tutorial on how to build your own large language model (LLM) from scratch. It includes all the code necessary to create a GPT-like LLM, covering the encoding, pre-training, and fine-tuning processes. The tutorial is written in a clear and concise style, with plenty of examples and illustrations to help you understand the concepts involved. It is suitable for developers and researchers with some programming experience who are interested in learning more about LLMs and how to build them.

llama-stack

Llama Stack defines and standardizes core building blocks for AI application development, providing a unified API layer, plugin architecture, prepackaged distributions, developer interfaces, and standalone applications. It offers flexibility in infrastructure choice, consistent experience with unified APIs, and a robust ecosystem with integrated distribution partners. The tool simplifies building, testing, and deploying AI applications with various APIs and environments, supporting local development, on-premises, cloud, and mobile deployments.

ClawRouter

ClawRouter is a tool designed to route every request to the cheapest model that can handle it, offering a wallet-based system with 30+ models available without the need for API keys. It provides 100% local routing with 14-dimension weighted scoring, zero external calls for routing decisions, and supports various models from providers like OpenAI, Anthropic, Google, DeepSeek, xAI, and Moonshot. Users can pay per request with USDC on Base, benefiting from an open-source, MIT-licensed, fully inspectable routing logic. The tool is optimized for agent swarm and multi-step workflows, offering cost-efficient solutions for parallel web research, multi-agent orchestration, and long-running automation tasks.
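The routing rule described above (send each request to the cheapest model that can handle it, judged by weighted scoring) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not ClawRouter's actual logic: the `Model` class, the two capability dimensions, the prices, and the threshold are all hypothetical, and ClawRouter's real 14 dimensions and weights are not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    price: float                    # hypothetical cost per request (arbitrary units)
    capabilities: dict[str, float]  # hypothetical per-dimension scores in [0, 1]


def weighted_score(model: Model, weights: dict[str, float]) -> float:
    """Weighted average of a model's capability scores for one request profile.

    Dimensions the model has no score for count as 0.
    """
    total = sum(weights.values())
    return sum(w * model.capabilities.get(dim, 0.0) for dim, w in weights.items()) / total


def route(models: list[Model], weights: dict[str, float], threshold: float) -> Model:
    """Pick the cheapest model whose weighted score meets the threshold.

    If no model qualifies, fall back to the highest-scoring one.
    """
    eligible = [m for m in models if weighted_score(m, weights) >= threshold]
    if eligible:
        return min(eligible, key=lambda m: m.price)
    return max(models, key=lambda m: weighted_score(m, weights))


if __name__ == "__main__":
    catalog = [
        Model("small", 0.1, {"reasoning": 0.4, "code": 0.5}),
        Model("medium", 0.5, {"reasoning": 0.7, "code": 0.8}),
        Model("large", 2.0, {"reasoning": 0.95, "code": 0.9}),
    ]
    request = {"reasoning": 1.0, "code": 1.0}
    print(route(catalog, request, threshold=0.7).name)  # medium
```

Because scoring and selection are pure local functions over a static table, a router built this way needs no external calls to decide; the trade-off is that the capability scores must be maintained by hand or from offline benchmarks.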

Awesome-LLM-Eval

Awesome-LLM-Eval is a curated list of tools, benchmarks, demos, and papers for evaluating Large Language Models (such as ChatGPT, LLaMA, GLM, and Baichuan) on language capabilities, knowledge, reasoning, fairness, and safety.

AI-Competition-Collections

AI-Competition-Collections is a repository that collects and curates various experiences and tips from AI competitions. It includes posts on competition experiences in computer vision, NLP, speech, and other AI-related fields. The repository aims to provide valuable insights and techniques for individuals participating in AI competitions, covering topics such as image classification, object detection, OCR, adversarial attacks, and more.

For similar jobs

LLM-and-Law

This repository is dedicated to summarizing papers on large language models in the field of law. It includes applications of large language models in legal tasks, legal agents, legal problems of large language models, data resources for large language models in law, law LLMs, and evaluation of large language models in the legal domain.

start-llms

This repository is a comprehensive guide for individuals looking to start and improve their skills in Large Language Models (LLMs) without an advanced background in the field. It provides free resources, online courses, books, articles, and practical tips to become an expert in machine learning. The guide covers topics such as terminology, transformers, prompting, retrieval augmented generation (RAG), and more. It also includes recommendations for podcasts, YouTube videos, and communities to stay updated with the latest news in AI and LLMs.

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against internationally recognised principles through standardised tests. It offers a new API Connector feature to bypass size limitations, test various AI frameworks, and configure connection settings for batch requests. The toolkit operates within an enterprise environment, conducting technical tests on common supervised learning models for tabular and image datasets. It does not define AI ethical standards or guarantee complete safety from risks or biases.

Awesome-LLM-Watermark

This repository contains a collection of research papers related to watermarking techniques for text and images, specifically focusing on large language models (LLMs). The papers cover various aspects of watermarking LLM-generated content, including robustness, statistical understanding, topic-based watermarks, quality-detection trade-offs, dual watermarks, watermark collision, and more. Researchers have explored different methods and frameworks for watermarking LLMs to protect intellectual property, detect machine-generated text, improve generation quality, and evaluate watermarking techniques. The repository serves as a valuable resource for those interested in the field of watermarking for LLMs.
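A widely studied scheme in this area is the "green list" watermark of Kirchenbauer et al. (2023): the previous token seeds a pseudo-random split of the vocabulary, generation is biased toward the green fraction γ, and detection counts green tokens and applies a one-proportion z-test, z = (|s|_G − γT) / √(Tγ(1 − γ)). The sketch below covers detection only and assumes integer token IDs; the SHA-256 seeding, the `key` string, and the 4.0 threshold are illustrative choices, not any listed paper's exact implementation.

```python
import hashlib
import math


def is_green(prev_token: int, token: int, gamma: float = 0.5, key: str = "secret-key") -> bool:
    """Return True if `token` falls on the green list seeded by `prev_token`.

    Hypothetical seeding: hash (key, prev_token, token) with SHA-256 and map the
    first 8 bytes to [0, 1). Each token is then green with probability gamma for
    a given context, approximating a fixed-size vocabulary partition.
    """
    digest = hashlib.sha256(f"{key}:{prev_token}:{token}".encode()).digest()
    u = int.from_bytes(digest[:8], "big") / 2**64
    return u < gamma


def z_score(green_count: int, num_tokens: int, gamma: float) -> float:
    """One-proportion z-test: (|s|_G - gamma*T) / sqrt(T * gamma * (1 - gamma))."""
    expected = gamma * num_tokens
    std = math.sqrt(num_tokens * gamma * (1 - gamma))
    return (green_count - expected) / std


def detect(tokens: list[int], gamma: float = 0.5, threshold: float = 4.0,
           key: str = "secret-key") -> tuple[float, bool]:
    """Score a token sequence and flag it as watermarked if z exceeds the threshold."""
    if len(tokens) < 2:
        raise ValueError("need at least two tokens")
    # Every token after the first has a preceding token to seed its green list.
    pairs = zip(tokens, tokens[1:])
    green = sum(is_green(prev, tok, gamma, key) for prev, tok in pairs)
    z = z_score(green, len(tokens) - 1, gamma)
    return z, z > threshold
```

A sequence in which every scored token is green gives z = √T, so 100 tokens (99 scored) reach about 9.95, while unwatermarked text should stay near 0 on average.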

LLM-LieDetector

This repository contains code for reproducing experiments on lie detection in black-box LLMs by asking unrelated questions. It includes Q/A datasets, prompts, and fine-tuning datasets for generating lies with language models. The lie detectors rely on asking binary 'elicitation questions' to diagnose whether the model has lied. The code covers generating lies from language models, training and testing lie detectors, and generalization experiments. It requires access to GPUs and OpenAI API calls for running experiments with open-source models. Results are stored in the repository for reproducibility.
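The detection step described here reduces to binary classification: after a suspected lie, the model is asked a fixed set of yes/no elicitation questions, its answers become a 0/1 feature vector, and a simple classifier such as logistic regression maps that vector to a lie probability. A minimal pure-Python sketch, assuming the answers have already been collected; the function names and the three-question toy data below are hypothetical and not taken from the repository.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(features: list[list[int]], labels: list[int],
                              lr: float = 0.5, epochs: int = 1000) -> tuple[list[float], float]:
    """Fit weights and a bias by full-batch gradient descent on the log loss."""
    n, d = len(features), len(features[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(features, labels):
            # Prediction error for this example: sigmoid(w.x + b) - y.
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def lie_probability(weights: list[float], bias: float, answers: list[int]) -> float:
    """Probability that the preceding answer was a lie, given yes(1)/no(0) answers."""
    return sigmoid(sum(w * a for w, a in zip(weights, answers)) + bias)


if __name__ == "__main__":
    # Hypothetical toy data: answers to three elicitation questions,
    # labeled 1 if the preceding statement was a lie and 0 otherwise.
    X = [[1, 1, 0], [1, 0, 1], [1, 1, 1], [0, 0, 1], [0, 1, 0], [0, 0, 0]]
    y = [1, 1, 1, 0, 0, 0]
    w, b = train_logistic_regression(X, y)
    print(round(lie_probability(w, b, [1, 1, 0]), 3))
```

A linear model keeps the detector cheap and lets the learned weights show which elicitation questions carry the most signal.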

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

langtest

LangTest is a comprehensive evaluation library for custom LLM and NLP models. It aims to deliver safe and effective language models by providing tools to test model quality, augment training data, and support popular NLP frameworks. LangTest comes with benchmark datasets to challenge and enhance language models, ensuring peak performance in various linguistic tasks. The tool offers more than 60 distinct types of tests with just one line of code, covering aspects like robustness, bias, representation, fairness, and accuracy. It supports testing LLMs for question answering, toxicity, clinical tests, legal support, factuality, sycophancy, and summarization.
