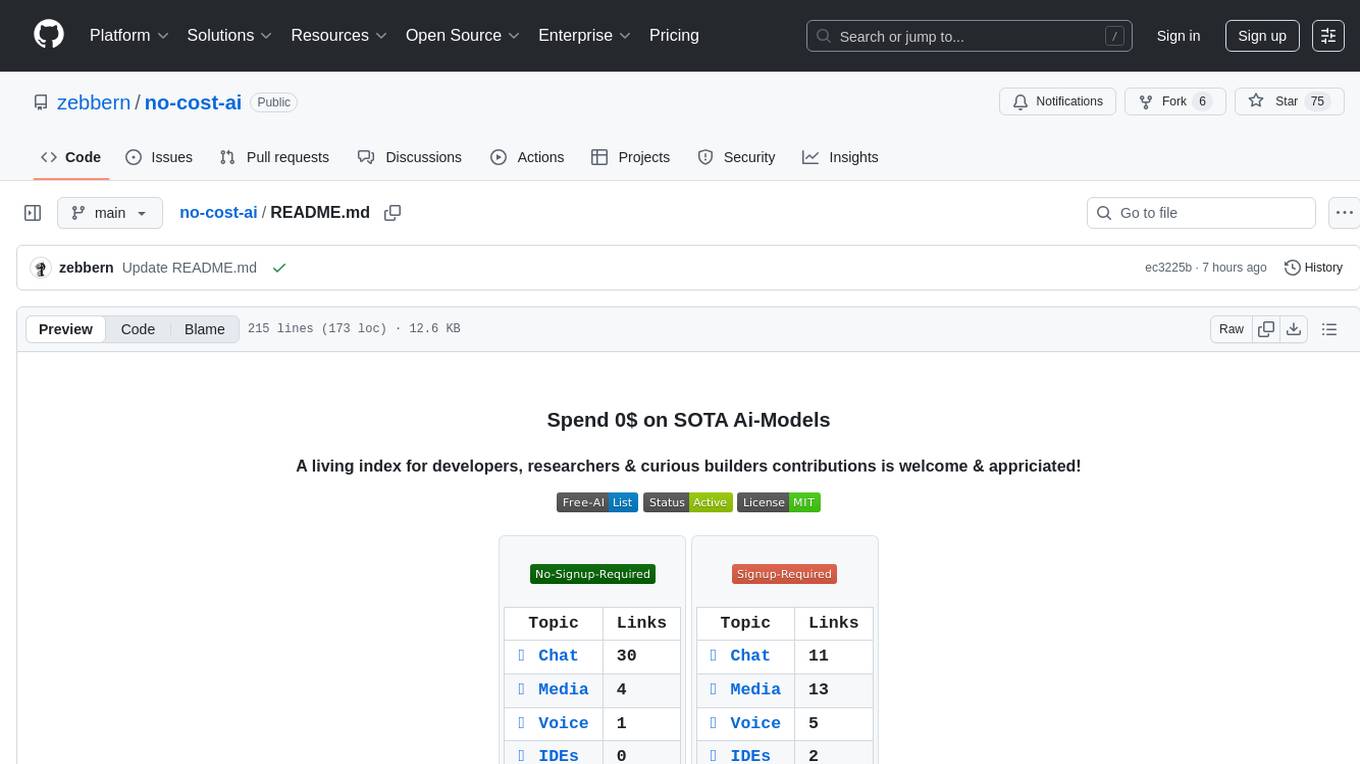

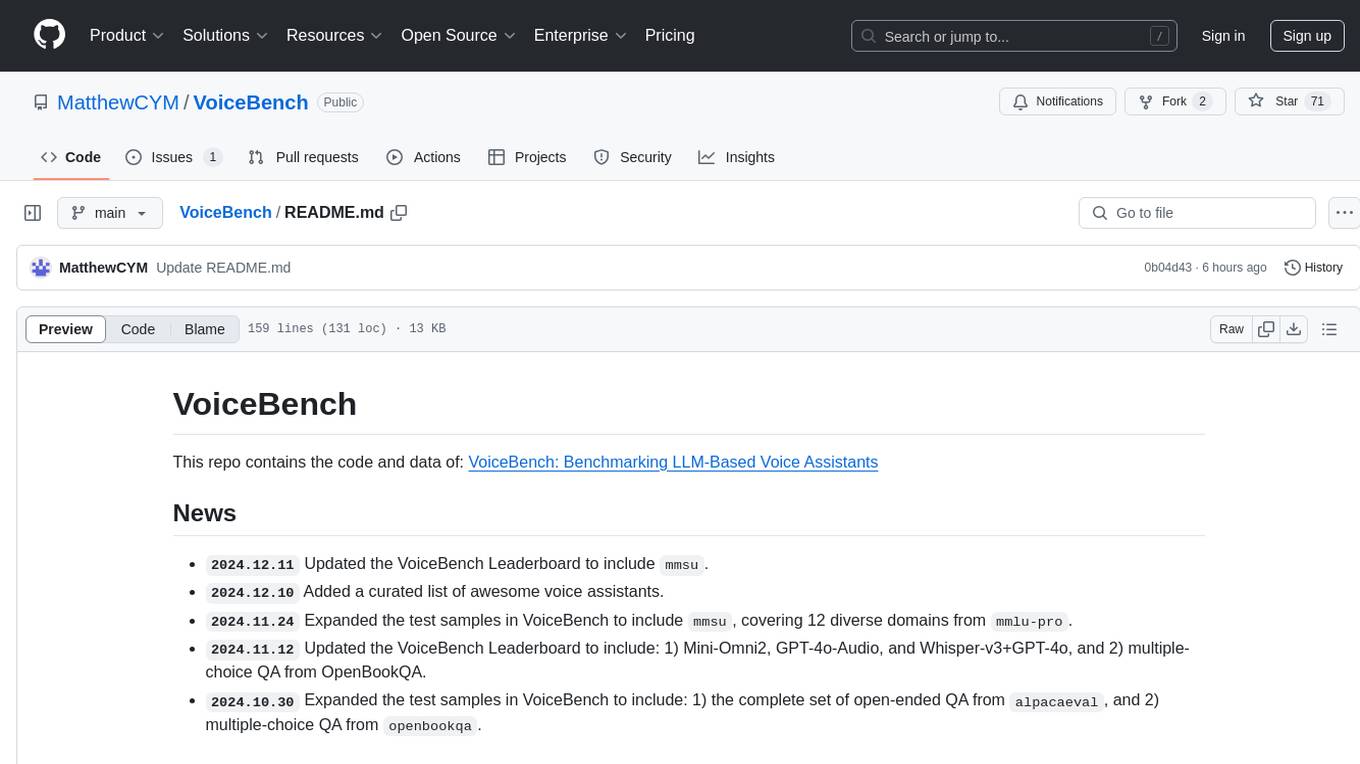

VoiceBench

VoiceBench: Benchmarking LLM-Based Voice Assistants

Stars: 119

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

README:

This repo contains the code and data of: VoiceBench: Benchmarking LLM-Based Voice Assistants

- 2024.12.11 Updated the VoiceBench Leaderboard to include `mmsu`.
- 2024.12.10 Added a curated list of awesome voice assistants.
- 2024.11.24 Expanded the test samples in VoiceBench to include `mmsu`, covering 12 diverse domains from `mmlu-pro`.
- 2024.11.12 Updated the VoiceBench Leaderboard to include: 1) Mini-Omni2, GPT-4o-Audio, and Whisper-v3+GPT-4o, and 2) multiple-choice QA from OpenBookQA.
- 2024.10.30 Expanded the test samples in VoiceBench to include: 1) the complete set of open-ended QA from `alpacaeval`, and 2) multiple-choice QA from `openbookqa`.

| Rank | Model | AlpacaEval | CommonEval | SD-QA | MMSU | OpenBookQA | IFEval | AdvBench | Overall |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Whisper-v3-large+GPT-4o | 4.80 | 4.47 | 75.77 | 81.69 | 92.97 | 76.51 | 98.27 | 87.23 |
| 2 | GPT-4o-Audio | 4.78 | 4.49 | 75.50 | 80.25 | 89.23 | 76.02 | 98.65 | 86.42 |
| 3 | GPT-4o-mini-Audio | 4.75 | 4.24 | 67.36 | 72.90 | 84.84 | 72.90 | 98.27 | 82.30 |
| 4 | Whisper-v3-large+LLaMA-3.1-8B | 4.53 | 4.04 | 70.43 | 62.43 | 81.54 | 69.53 | 98.08 | 79.06 |
| 5 | Whisper-v3-turbo+LLaMA-3.1-8B | 4.55 | 4.02 | 58.23 | 62.04 | 72.09 | 71.12 | 98.46 | 76.16 |
| 6 | Ultravox-v0.5-LLaMA-3.1-8B | 4.59 | 4.11 | 58.68 | 54.16 | 68.35 | 66.51 | 98.65 | 74.34 |
| 7 | MiniCPM-o | 4.42 | 4.15 | 50.72 | 54.78 | 78.02 | 49.25 | 97.69 | 71.69 |
| 8 | Ultravox-v0.4.1-LLaMA-3.1-8B | 4.55 | 3.90 | 53.35 | 47.17 | 65.27 | 66.88 | 98.46 | 71.45 |
| 9 | Baichuan-Omni-1.5 | 4.50 | 4.05 | 43.40 | 57.25 | 74.51 | 54.54 | 97.31 | 71.14 |
| 10 | Whisper-v3-turbo+LLaMA-3.2-3B | 4.45 | 3.82 | 49.28 | 51.37 | 60.66 | 69.71 | 98.08 | 70.66 |
| 11 | VITA-1.5 | 4.21 | 3.66 | 38.88 | 52.15 | 71.65 | 38.14 | 97.69 | 65.13 |
| 12 | MERaLiON | 4.50 | 3.77 | 55.06 | 34.95 | 27.23 | 62.93 | 94.81 | 62.91 |
| 13 | Lyra-Base | 3.85 | 3.50 | 38.25 | 49.74 | 72.75 | 36.28 | 59.62 | 57.66 |
| 14 | GLM-4-Voice | 3.97 | 3.42 | 36.98 | 39.75 | 53.41 | 25.92 | 88.08 | 55.99 |
| 15 | DiVA | 3.67 | 3.54 | 57.05 | 25.76 | 25.49 | 39.15 | 98.27 | 55.70 |
| 16 | Qwen2-Audio | 3.74 | 3.43 | 35.71 | 35.72 | 49.45 | 26.33 | 96.73 | 55.35 |
| 17 | Freeze-Omni | 4.03 | 3.46 | 53.45 | 28.14 | 30.98 | 23.40 | 97.30 | 54.72 |
| 18 | KE-Omni-v1.5 | 3.82 | 3.20 | 31.20 | 32.27 | 58.46 | 15.00 | 100.00 | 53.90 |
| 19 | Step-Audio | 4.13 | 3.09 | 44.21 | 28.33 | 33.85 | 27.96 | 69.62 | 49.77 |
| 20 | Megrez-3B-Omni | 3.50 | 2.95 | 25.95 | 27.03 | 28.35 | 25.71 | 87.69 | 46.25 |
| 21 | Lyra-Mini | 2.99 | 2.69 | 19.89 | 31.42 | 41.54 | 20.91 | 80.00 | 43.91 |
| 22 | Ichigo | 3.79 | 3.17 | 36.53 | 25.63 | 26.59 | 21.59 | 57.50 | 43.86 |
| 23 | LLaMA-Omni | 3.70 | 3.46 | 39.69 | 25.93 | 27.47 | 14.87 | 11.35 | 37.51 |
| 24 | VITA-1.0 | 3.38 | 2.15 | 27.94 | 25.70 | 29.01 | 22.82 | 26.73 | 34.68 |
| 25 | SLAM-Omni | 1.90 | 1.79 | 4.16 | 26.06 | 25.27 | 13.38 | 94.23 | 33.84 |
| 26 | Mini-Omni2 | 2.32 | 2.18 | 9.31 | 24.27 | 26.59 | 11.56 | 57.50 | 31.32 |
| 27 | Mini-Omni | 1.95 | 2.02 | 13.92 | 24.69 | 26.59 | 13.58 | 37.12 | 27.90 |
| 28 | Moshi | 2.01 | 1.60 | 15.64 | 24.04 | 25.93 | 10.12 | 44.23 | 27.47 |
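The leaderboard does not state how the Overall column is derived. One reading that is consistent with the top row is that the two 1-5 judge scores (AlpacaEval and CommonEval) are rescaled to 0-100 by multiplying by 20 and then averaged with the five percentage metrics. The sketch below checks that assumption; `overall_score` is a hypothetical helper and not part of the repository.

```python
# Hypothetical helper: assumes Overall is the mean of the seven metrics,
# with the two 1-5 judge scores rescaled to 0-100 (x20).
# This formula is inferred from the table, not confirmed by the source.

def overall_score(alpacaeval, commoneval, sd_qa, mmsu, openbookqa, ifeval, advbench):
    scaled = [alpacaeval * 20, commoneval * 20, sd_qa, mmsu, openbookqa, ifeval, advbench]
    return sum(scaled) / len(scaled)

# Rank 1 row from the leaderboard (Whisper-v3-large+GPT-4o).
top = overall_score(4.80, 4.47, 75.77, 81.69, 92.97, 76.51, 98.27)
print(round(top, 2))  # 87.23, the Overall value listed for rank 1
```

Other rows can differ from the listed Overall by a few hundredths under this formula, which may simply reflect rounding in the displayed per-task scores.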

We encourage you to submit new voice assistant results directly through the issue tracker. The ranking list will be updated accordingly.

    conda create -n voicebench python=3.10
    conda activate voicebench
    pip install torch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 --index-url https://download.pytorch.org/whl/cu121
    pip install xformers==0.0.23 --no-deps
    pip install -r requirements.txt

The data used in this project is available at VoiceBench Dataset hosted on Hugging Face.

You can access it directly via the link and integrate it into your project by using the Hugging Face datasets library.

To load the dataset in your Python environment:

    from datasets import load_dataset

    # Load the VoiceBench dataset
    # Available subset: alpacaeval, commoneval, sd-qa, ifeval, advbench, ...
    dataset = load_dataset("hlt-lab/voicebench", 'alpacaeval')

| Subset | # Samples | Audio Source | Task Type |
|---|---|---|---|
| alpacaeval | 199 | Google TTS | Open-Ended QA |
| alpacaeval_full | 636 | Google TTS | Open-Ended QA |
| commoneval | 200 | Human | Open-Ended QA |
| openbookqa | 455 | Google TTS | Multiple-Choice QA |
| mmsu | 3,074 | Google TTS | Multiple-Choice QA |
| sd-qa | 553 | Human | Reference-Based QA |
| mtbench | 46 | Google TTS | Multi-Turn QA |
| ifeval | 345 | Google TTS | Instruction Following |
| advbench | 520 | Google TTS | Safety |

PS: alpacaeval contains helpful_base and vicuna data, while alpacaeval_full is constructed with the complete data. alpacaeval_full is used in the leaderboard.
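When scripting over several subsets, it can help to keep the subset table in code. The following is a minimal sketch, assuming every name in the Subset column is a valid configuration name for `load_dataset`; `SUBSETS`, `subsets_for_task`, and `load_subset` are hypothetical names introduced here for illustration.

```python
# Subset metadata copied from the table above:
# name -> (number of samples, audio source, task type).
SUBSETS = {
    "alpacaeval": (199, "Google TTS", "Open-Ended QA"),
    "alpacaeval_full": (636, "Google TTS", "Open-Ended QA"),
    "commoneval": (200, "Human", "Open-Ended QA"),
    "openbookqa": (455, "Google TTS", "Multiple-Choice QA"),
    "mmsu": (3074, "Google TTS", "Multiple-Choice QA"),
    "sd-qa": (553, "Human", "Reference-Based QA"),
    "mtbench": (46, "Google TTS", "Multi-Turn QA"),
    "ifeval": (345, "Google TTS", "Instruction Following"),
    "advbench": (520, "Google TTS", "Safety"),
}


def subsets_for_task(task_type):
    """Return the subset names with the given task type, in table order."""
    return [name for name, (_, _, task) in SUBSETS.items() if task == task_type]


def load_subset(name):
    """Load one subset; needs the `datasets` package and network access."""
    if name not in SUBSETS:
        raise ValueError(f"unknown subset: {name!r}")
    # Imported lazily so the helpers above also work without `datasets` installed.
    from datasets import load_dataset
    return load_dataset("hlt-lab/voicebench", name)


print(subsets_for_task("Multiple-Choice QA"))  # ['openbookqa', 'mmsu']
```

Calling `load_subset("alpacaeval_full")` would then fetch the subset used in the leaderboard.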

To obtain the responses from the voice assistant model, run the following command:

    python main.py --model naive --data alpacaeval --split test --modality audio

Supported Arguments:

- `--model`: Specifies the model to use for generating responses. Replace `naive` with the model you want to test (e.g., `qwen2`, `diva`).
- `--data`: Selects the subset of the dataset. Replace `alpacaeval` with other subsets like `commoneval`, `sd-qa`, etc., depending on your evaluation needs.
- `--split`: Chooses the data split to evaluate.
  - For most datasets (`alpacaeval`, `commoneval`, `ifeval`, `advbench`), use `test` as the value.
  - For the `sd-qa` subset, you should provide a region code instead of `test`, such as `aus` for Australia, `usa` for the United States, etc.
- `--modality`: Use `audio` for spoken instructions, `text` for text-based instructions.

This will generate the output and save it to a file named naive-alpacaeval-test-audio.jsonl.
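To run several configurations in a row, the command and its output file name can be generated programmatically. This is a sketch under one assumption: that the output name always follows the `<model>-<data>-<split>-<modality>.jsonl` pattern seen in the single documented example. `build_command` and `expected_output` are hypothetical helpers, not part of the repository.

```python
import shlex


def build_command(model, data, split="test", modality="audio"):
    """Argument list for main.py using the four flags documented above."""
    return ["python", "main.py", "--model", model, "--data", data,
            "--split", split, "--modality", modality]


def expected_output(model, data, split="test", modality="audio"):
    # Assumed naming pattern, generalized from the one documented example.
    return f"{model}-{data}-{split}-{modality}.jsonl"


# sd-qa takes a region code as its split; only `aus` and `usa` are named above.
runs = [("naive", "alpacaeval", "test"), ("naive", "sd-qa", "aus"), ("naive", "sd-qa", "usa")]
for model, data, split in runs:
    print(shlex.join(build_command(model, data, split)), "->",
          expected_output(model, data, split))
```

Each argument list can be passed to `subprocess.run` from the repository root to execute the run.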

For datasets alpacaeval, commoneval, and sd-qa, we use gpt-4o-mini to evaluate the responses. Run the following command to get the GPT score:

    python api_judge.py --src_file naive-alpacaeval-test-audio.jsonl

The GPT evaluation scores will be saved to result-naive-alpacaeval-test-audio.jsonl.

Note: Skip this step for the other datasets, as they are not evaluated with a GPT judge.

To generate the final evaluation results, run:

    python evaluate.py --src_file result-naive-alpacaeval-test-audio.jsonl --evaluator open

Supported Arguments:

- `--evaluator`: Specifies the evaluator type:
  - Use `open` for `alpacaeval` and `commoneval`.
  - Use `qa` for `sd-qa`.
  - Use `ifeval` for `ifeval`.
  - Use `harm` for `advbench`.
  - Use `mcq` for `openbookqa` and `mmsu`.
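The dataset-to-evaluator mapping and the optional GPT judging step can be captured in a small lookup. The sketch below is illustrative only: `EVALUATORS`, `GPT_JUDGED`, and `evaluation_commands` are hypothetical names, and it assumes that datasets which skip the judge step pass the raw response file straight to `evaluate.py`.

```python
# Evaluator per dataset, as listed above.
EVALUATORS = {
    "alpacaeval": "open",
    "commoneval": "open",
    "sd-qa": "qa",
    "ifeval": "ifeval",
    "advbench": "harm",
    "openbookqa": "mcq",
    "mmsu": "mcq",
}

# Datasets scored with gpt-4o-mini before the final evaluation.
GPT_JUDGED = {"alpacaeval", "commoneval", "sd-qa"}


def evaluation_commands(response_file, data):
    """Commands to run after main.py has written `response_file`."""
    evaluator = EVALUATORS[data]
    commands = []
    src = response_file
    if data in GPT_JUDGED:
        commands.append(f"python api_judge.py --src_file {response_file}")
        # The judge writes its scores to a file with a `result-` prefix.
        src = f"result-{response_file}"
    # Assumption: without a judge step, evaluate.py reads the raw responses.
    commands.append(f"python evaluate.py --src_file {src} --evaluator {evaluator}")
    return commands


for cmd in evaluation_commands("naive-alpacaeval-test-audio.jsonl", "alpacaeval"):
    print(cmd)
```

For `alpacaeval` this prints the same two commands shown in the steps above; for `ifeval` or `advbench` it yields only the `evaluate.py` call.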

If you use VoiceBench in your research, please cite the following paper:

    @article{chen2024voicebench,
      title={VoiceBench: Benchmarking LLM-Based Voice Assistants},
      author={Chen, Yiming and Yue, Xianghu and Zhang, Chen and Gao, Xiaoxue and Tan, Robby T. and Li, Haizhou},
      journal={arXiv preprint arXiv:2410.17196},
      year={2024}
    }

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for VoiceBench

Similar Open Source Tools

VoiceBench

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

ClawRouter

ClawRouter is a tool designed to route every request to the cheapest model that can handle it, offering a wallet-based system with 30+ models available without the need for API keys. It provides 100% local routing with 14-dimension weighted scoring, zero external calls for routing decisions, and supports various models from providers like OpenAI, Anthropic, Google, DeepSeek, xAI, and Moonshot. Users can pay per request with USDC on Base, benefiting from an open-source, MIT-licensed, fully inspectable routing logic. The tool is optimized for agent swarm and multi-step workflows, offering cost-efficient solutions for parallel web research, multi-agent orchestration, and long-running automation tasks.

Awesome-LLMs-for-Video-Understanding

Awesome-LLMs-for-Video-Understanding is a repository dedicated to exploring Video Understanding with Large Language Models. It provides a comprehensive survey of the field, covering models, pretraining, instruction tuning, and hybrid methods. The repository also includes information on tasks, datasets, and benchmarks related to video understanding. Contributors are encouraged to add new papers, projects, and materials to enhance the repository.

LLamaTuner

LLamaTuner is a repository for the Efficient Finetuning of Quantized LLMs project, focusing on building and sharing instruction-following Chinese baichuan-7b/LLaMA/Pythia/GLM model tuning methods. The project enables training on a single Nvidia RTX-2080TI and RTX-3090 for multi-round chatbot training. It utilizes bitsandbytes for quantization and is integrated with Huggingface's PEFT and transformers libraries. The repository supports various models, training approaches, and datasets for supervised fine-tuning, LoRA, QLoRA, and more. It also provides tools for data preprocessing and offers models in the Hugging Face model hub for inference and finetuning. The project is licensed under Apache 2.0 and acknowledges contributions from various open-source contributors.

agentkit-samples

AgentKit Samples is a repository containing a series of examples and tutorials to help users understand, implement, and integrate various functionalities of AgentKit into their applications. The platform offers a complete solution for building, deploying, and maintaining AI agents, significantly reducing the complexity of developing intelligent applications. The repository provides different levels of examples and tutorials, including basic tutorials for understanding AgentKit's concepts and use cases, as well as more complex examples for experienced developers.

Awesome-LLM-Eval

Awesome-LLM-Eval: a curated list of tools, benchmarks, demos, papers for Large Language Models (like ChatGPT, LLaMA, GLM, Baichuan, etc) Evaluation on Language capabilities, Knowledge, Reasoning, Fairness and Safety.

LightMem

LightMem is a lightweight and efficient memory management framework designed for Large Language Models and AI Agents. It provides a simple yet powerful memory storage, retrieval, and update mechanism to help you quickly build intelligent applications with long-term memory capabilities. The framework is minimalist in design, ensuring minimal resource consumption and fast response times. It offers a simple API for easy integration into applications with just a few lines of code. LightMem's modular architecture supports custom storage engines and retrieval strategies, making it flexible and extensible. It is compatible with various cloud APIs like OpenAI and DeepSeek, as well as local models such as Ollama and vLLM.

go-cyber

Cyber is a superintelligence protocol that aims to create a decentralized and censorship-resistant internet. It uses a novel consensus mechanism called CometBFT and a knowledge graph to store and process information. Cyber is designed to be scalable, secure, and efficient, and it has the potential to revolutionize the way we interact with the internet.

MOSS-TTS

MOSS-TTS Family is an open-source speech and sound generation model family designed for high-fidelity, high-expressiveness, and complex real-world scenarios. It includes five production-ready models: MOSS-TTS, MOSS-TTSD, MOSS-VoiceGenerator, MOSS-TTS-Realtime, and MOSS-SoundEffect, each serving specific purposes in speech generation, dialogue, voice design, real-time interactions, and sound effect generation. The models offer features like long-speech generation, fine-grained control over phonemes and duration, multilingual synthesis, voice cloning, and real-time voice agents.

llms-from-scratch-cn

This repository provides a detailed tutorial on how to build your own large language model (LLM) from scratch. It includes all the code necessary to create a GPT-like LLM, covering the encoding, pre-training, and fine-tuning processes. The tutorial is written in a clear and concise style, with plenty of examples and illustrations to help you understand the concepts involved. It is suitable for developers and researchers with some programming experience who are interested in learning more about LLMs and how to build them.

llm-export

llm-export is a tool for exporting llm models to onnx and mnn formats. It has features such as passing onnxruntime correctness tests, optimizing the original code to support dynamic shapes, reducing constant parts, optimizing onnx models using OnnxSlim for performance improvement, and exporting lora weights to onnx and mnn formats. Users can clone the project locally, clone the desired LLM project locally, and use LLMExporter to export the model. The tool supports various export options like exporting the entire model as one onnx model, exporting model segments as multiple models, exporting model vocabulary to a text file, exporting specific model layers like Embedding and lm_head, testing the model with queries, validating onnx model consistency with onnxruntime, converting onnx models to mnn models, and more. Users can specify export paths, skip optimization steps, and merge lora weights before exporting.

Awesome-Interpretability-in-Large-Language-Models

This repository is a collection of resources focused on interpretability in large language models (LLMs). It aims to help beginners get started in the area and keep researchers updated on the latest progress. It includes libraries, blogs, tutorials, forums, tools, programs, papers, and more related to interpretability in LLMs.

aidea-server

AIdea Server is an open-source Golang-based server that integrates mainstream large language models and drawing models. It supports various functionalities including OpenAI's GPT-3.5 and GPT-4, Anthropic's Claude instant and Claude 2.1, Google's Gemini Pro, as well as Chinese models like Tongyi Qianwen, Wenxin Yiyuan, and more. It also supports open-source large models like Yi 34B, Llama2, and AquilaChat 7B. Additionally, it provides features for text-to-image, super-resolution, coloring black and white images, generating art fonts and QR codes, among others.

denodo-ai-sdk

Denodo AI SDK is a tool that enables users to create AI chatbots and agents that provide accurate and context-aware answers using enterprise data. It connects to the Denodo Platform, supports popular LLMs and vector stores, and includes a sample chatbot and simple APIs for quick setup. The tool also offers benchmarks for evaluating LLM performance and provides guidance on configuring DeepQuery for different LLM providers.

no-cost-ai

No-cost-ai is a repository dedicated to providing a comprehensive list of free AI models and tools for developers, researchers, and curious builders. It serves as a living index for accessing state-of-the-art AI models without any cost. The repository includes information on various AI applications such as chat interfaces, media generation, voice and music tools, AI IDEs, and developer APIs and platforms. Users can find links to free models, their limits, and usage instructions. Contributions to the repository are welcome, and users are advised to use the listed services at their own risk due to potential changes in models, limitations, and reliability of free services.

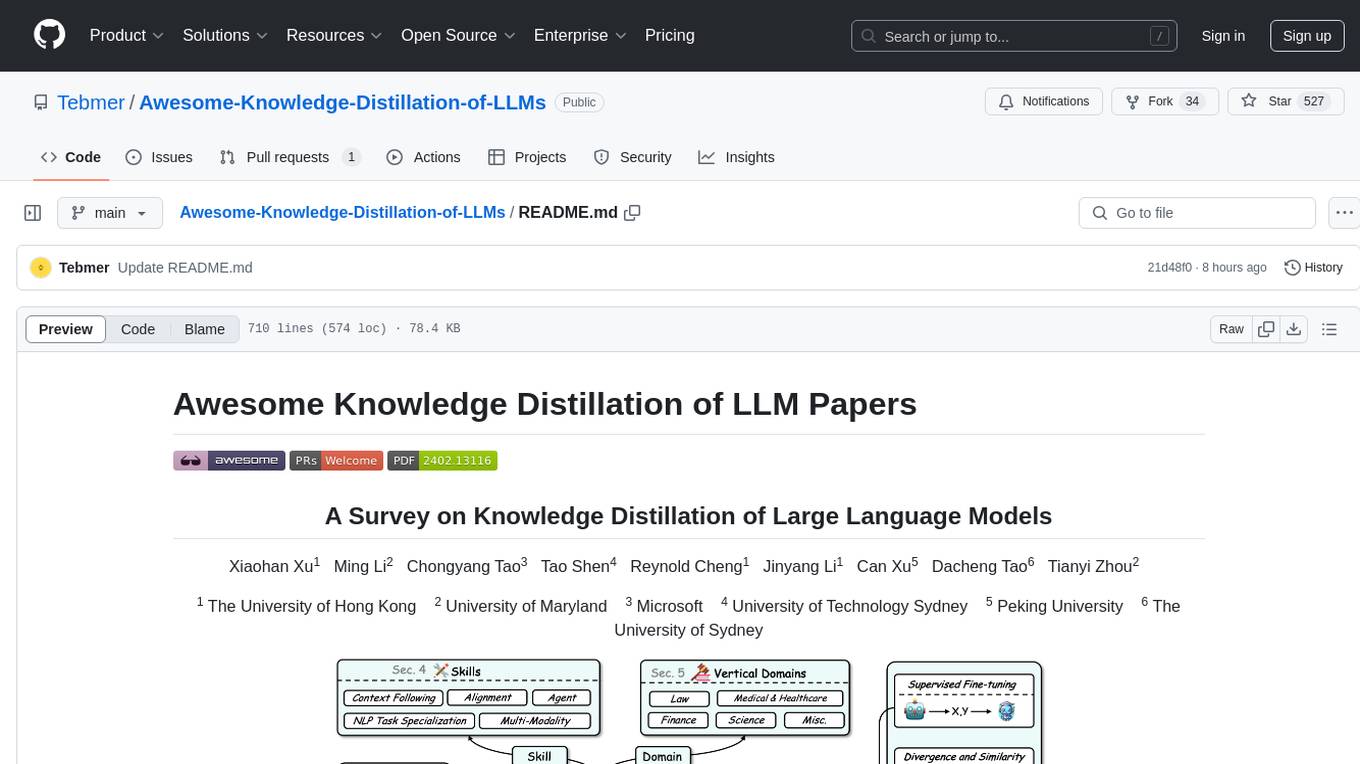

Awesome-Knowledge-Distillation-of-LLMs

A collection of papers related to knowledge distillation of large language models (LLMs). The repository focuses on techniques to transfer advanced capabilities from proprietary LLMs to smaller models, compress open-source LLMs, and refine their performance. It covers various aspects of knowledge distillation, including algorithms, skill distillation, verticalization distillation in fields like law, medical & healthcare, finance, science, and miscellaneous domains. The repository provides a comprehensive overview of the research in the area of knowledge distillation of LLMs.

For similar tasks

VoiceBench

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

orate

Orate is an AI toolkit designed for speech processing tasks. It allows users to generate realistic, human-like speech and transcribe audio using a unified API that integrates with popular AI providers such as OpenAI, ElevenLabs, and AssemblyAI. The toolkit can be easily installed using npm or other package managers. For more details, visit the website.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.