Awesome-Model-Merging-Methods-Theories-Applications

Model Merging in LLMs, MLLMs, and Beyond: Methods, Theories, Applications and Opportunities. arXiv:2408.07666.

Stars: 519

A comprehensive repository focusing on 'Model Merging in LLMs, MLLMs, and Beyond', providing an exhaustive overview of model merging methods, theories, applications, and future research directions. The repository covers various advanced methods, applications in foundation models, different machine learning subfields, and tasks like pre-merging methods, architecture transformation, weight alignment, basic merging methods, and more.

README:

A comprehensive list of papers for the survey 'Model Merging in LLMs, MLLMs, and Beyond: Methods, Theories, Applications and Opportunities' (arXiv, 2024).

[!IMPORTANT] Contributions welcome:

If you have a relevant paper that is not included in the library, or would like to clarify the content of a listed paper, please contact us! You may also submit a pull request directly. Thank you!

If you think your paper fits better in another category, please contact us or submit a pull request.

If your paper is accepted, you may consider updating the relevant information.

Thank you!

- 🔥🔥🔥 We mark papers whose experiments use models with $\geq$ 7B parameters.

Model merging is an efficient empowerment technique in the machine learning community: it requires neither the collection of raw training data nor expensive computation. As model merging becomes increasingly prevalent across various fields, it is crucial to develop a comprehensive understanding of the available model merging techniques. However, the literature still lacks a systematic and thorough review of these techniques. To address this gap, this survey provides a comprehensive overview of model merging methods and theories, their applications in various domains and settings, and future research directions. Specifically, we first propose a new taxonomic approach and use it to exhaustively discuss existing model merging methods. Second, we discuss the application of model merging techniques in large language models, multimodal large language models, and 10+ machine learning subfields, including continual learning, multi-task learning, few-shot learning, etc. Finally, we highlight the remaining challenges of model merging and discuss future research directions.
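To make the basic idea concrete, here is a minimal sketch of the simplest merging operation: parameter-wise (weighted) averaging of models that share an architecture. It needs no training data and only one pass over the weights. It assumes each checkpoint is a plain dict mapping parameter names to NumPy arrays; `average_weights` and the toy checkpoints are hypothetical, not code from the survey.

```python
import numpy as np


def average_weights(state_dicts, weights=None):
    """Merge same-architecture models by (weighted) averaging each parameter tensor."""
    n = len(state_dicts)
    if weights is None:
        weights = [1.0 / n] * n  # uniform average by default
    if len(weights) != n or not np.isclose(sum(weights), 1.0):
        raise ValueError("need one weight per model, summing to 1")
    merged = {}
    for name in state_dicts[0]:
        # Parameter-wise convex combination; no data or gradients are needed.
        merged[name] = sum(w * sd[name] for w, sd in zip(weights, state_dicts))
    return merged


# Two hypothetical fine-tuned checkpoints of the same tiny linear layer.
model_a = {"linear.weight": np.array([[1.0, 2.0], [3.0, 4.0]]), "linear.bias": np.array([0.0, 1.0])}
model_b = {"linear.weight": np.array([[3.0, 2.0], [1.0, 0.0]]), "linear.bias": np.array([2.0, 1.0])}

merged = average_weights([model_a, model_b])
print(merged["linear.weight"])  # every entry is 2.0
print(merged["linear.bias"])    # both entries are 1.0
```

Passing non-uniform `weights` (e.g. `[0.75, 0.25]`) gives a weighted average, which is the starting point for many of the more elaborate methods listed below.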

If you find our paper or this resource helpful, please consider citing:

@article{Survery_ModelMerging_2024,
  title={Model Merging in LLMs, MLLMs, and Beyond: Methods, Theories, Applications and Opportunities},
  author={Yang, Enneng and Shen, Li and Guo, Guibing and Wang, Xingwei and Cao, Xiaochun and Zhang, Jie and Tao, Dacheng},
  journal={arXiv preprint arXiv:2408.07666},
  year={2024}
}

Thanks!

- Survey

- Benchmark/Evaluation

- Advanced Methods

- Application of Model Merging in Foundation Models

- Application of Model Merging in Different Machine Learning Subfields

- Other Applications

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Fine-Tuning Attention Modules Only: Enhancing Weight Disentanglement in Task Arithmetic | 2025 | ICLR | |
| Fine-Tuning Linear Layers Only Is a Simple yet Effective Way for Task Arithmetic | 2024 | Arxiv | |
| Tangent Transformers for Composition, Privacy and Removal | 2024 | ICLR | |
| Parameter Efficient Multi-task Model Fusion with Partial Linearization | 2024 | ICLR | |
| Task Arithmetic in the Tangent Space: Improved Editing of Pre-Trained Models | 2023 | NeurIPS | |
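The linearization papers above fine-tune a first-order Taylor expansion of the network around the pre-trained weights. A minimal sketch, assuming a toy model f(x; θ) = tanh(x·θ) with a hand-derived Jacobian (real methods linearize full networks, typically via Jacobian-vector products): in the linearized model, the output changes caused by two task vectors add exactly, which is roughly the weight disentanglement these methods aim for. All names here are hypothetical.

```python
import numpy as np


def model(x, theta):
    """Toy nonlinear model f(x; theta) = tanh(x @ theta); a stand-in for a real network."""
    return np.tanh(x @ theta)


def linearized_model(x, theta, theta0):
    """First-order Taylor expansion around the pre-trained weights theta0:
    f_lin(x; theta) = f(x; theta0) + J(x; theta0) @ (theta - theta0)."""
    # Jacobian of tanh(x @ theta) w.r.t. theta, evaluated at theta0: (1 - tanh^2) * x
    jac = (1.0 - np.tanh(x @ theta0) ** 2)[:, None] * x
    return model(x, theta0) + jac @ (theta - theta0)


theta0 = np.array([0.5, -0.2])   # "pre-trained" weights
tau_1 = np.array([0.1, 0.0])     # hypothetical task vectors from two fine-tuning runs
tau_2 = np.array([0.0, 0.3])
x = np.array([[1.0, 2.0], [-1.0, 0.5]])


def f_lin(inputs, theta):
    return linearized_model(inputs, theta, theta0)


def output_change(f, tau):
    """How much a task vector moves the model's outputs relative to theta0."""
    return f(x, theta0 + tau) - f(x, theta0)


# For the linearized model, the effects of the two task vectors add exactly.
print(np.allclose(output_change(f_lin, tau_1 + tau_2),
                  output_change(f_lin, tau_1) + output_change(f_lin, tau_2)))  # True
```

Running the same check with `model` instead of `f_lin` prints `False`, because tanh is nonlinear and the two task vectors interact.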

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Unraveling LoRA Interference: Orthogonal Subspaces for Robust Model Merging | 2025 | | Llama3-8B |
| Efficient Model Editing with Task-Localized Sparse Fine-tuning | 2024 | | |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Mitigating Parameter Interference in Model Merging via Sharpness-Aware Fine-Tuning | 2025 | | |
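Sharpness-aware fine-tuning replaces plain gradient steps with sharpness-aware minimization (SAM). The sketch below shows the standard two-step SAM update on a hypothetical quadratic loss; whether the listed paper uses exactly this variant, and these values of `lr` and `rho`, is an assumption.

```python
import numpy as np


def loss(theta):
    """Hypothetical quadratic loss that is sharp along axis 0 and flat along axis 1."""
    return 0.5 * (10.0 * theta[0] ** 2 + 0.1 * theta[1] ** 2)


def grad(theta):
    return np.array([10.0 * theta[0], 0.1 * theta[1]])


def sam_step(theta, lr=0.05, rho=0.05):
    """One sharpness-aware minimization (SAM) update."""
    g = grad(theta)
    # 1) Move to the (first-order) worst-case point within an L2 ball of radius rho.
    eps = rho * g / (np.linalg.norm(g) + 1e-12)
    # 2) Take the gradient there, but apply the step to the original weights.
    return theta - lr * grad(theta + eps)


theta = np.array([1.0, 1.0])
start_loss = loss(theta)
for _ in range(100):
    theta = sam_step(theta)
print(loss(theta) < start_loss)  # True
```

Each step costs two gradient evaluations instead of one; the extra one is what biases fine-tuning toward flatter regions of the loss.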

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Model Assembly Learning with Heterogeneous Layer Weight Merging | 2025 | ICLR Workshop | |
| Training-free Heterogeneous Model Merging | 2025 | Arxiv | |
| Knowledge fusion of large language models | 2024 | ICLR | Llama-2 7B, OpenLLaMA 7B, MPT 7B |
| Knowledge Fusion of Chat LLMs: A Preliminary Technical Report | 2024 | Arxiv | NH2-Mixtral-8x7B, NH2-Solar-10.7B, and OpenChat-3.5-7B |
| On Cross-Layer Alignment for Model Fusion of Heterogeneous Neural Networks | 2023 | ICASSP | |
| GAN Cocktail: mixing GANs without dataset access | 2022 | ECCV | |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Composing parameter-efficient modules with arithmetic operation | 2023 | NeurIPS | |
| Editing models with task arithmetic | 2023 | ICLR | |
| Model fusion via optimal transport | 2020 | NeurIPS | |
| Weight averaging for neural networks and local resampling schemes | 1996 | AAAI Workshop | |
| Acceleration of stochastic approximation by averaging | 1992 | SIAM Journal on Control and Optimization | |
| Animating rotation with quaternion curves (Spherical Linear Interpolation (SLERP) Model Merging) | 1985 | SIGGRAPH Computer Graphics | |
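Two of the basic operators listed above can be sketched in a few lines: task arithmetic, which adds scaled task vectors τ_i = θ_i − θ_pre to the pre-trained weights, and SLERP between two flattened weight vectors. This is a minimal NumPy sketch. The helper names and the default `lam=0.3` are illustrative assumptions. Applying SLERP to unnormalized weights, interpolating along the angle between them, follows common practice rather than any single paper.

```python
import numpy as np


def task_arithmetic(pretrained, finetuned_list, lam=0.3):
    """theta_merged = theta_pre + lam * sum_i (theta_i - theta_pre)."""
    task_vectors = [ft - pretrained for ft in finetuned_list]
    return pretrained + lam * np.sum(task_vectors, axis=0)


def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two flattened weight vectors, t in [0, 1]."""
    cos_omega = np.dot(v0, v1) / (np.linalg.norm(v0) * np.linalg.norm(v1))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))  # angle between v0 and v1
    if np.sin(omega) < eps:
        # Nearly (anti)parallel vectors: fall back to plain linear interpolation.
        return (1.0 - t) * v0 + t * v1
    return (np.sin((1.0 - t) * omega) * v0 + np.sin(t * omega) * v1) / np.sin(omega)


pre = np.zeros(3)
ft_1 = np.array([1.0, 0.0, 0.0])  # hypothetical fine-tuned weights
ft_2 = np.array([0.0, 1.0, 0.0])
print(task_arithmetic(pre, [ft_1, ft_2], lam=0.5))  # -> 0.5, 0.5, 0.0

a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])
mid = slerp(0.5, a, b)
print(mid, np.linalg.norm(mid))  # about 0.707, 0.707 with norm 1.0
```

At t = 0.5 plain linear interpolation of `a` and `b` would give a vector of norm about 0.707; SLERP keeps the norm at 1, which is the usual motivation for using it on weights.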

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Multi-Task Model Fusion via Adaptive Merging | 2025 | ICASSP | |
| Tint Your Models Task-wise for Improved Multi-task Model Merging | 2024 | Arxiv | |
| Parameter-Efficient Interventions for Enhanced Model Merging | 2024 | Arxiv | |
| Rethink the Evaluation Protocol of Model Merging on Classification Task | 2024 | Arxiv | |
| SurgeryV2: Bridging the Gap Between Model Merging and Multi-Task Learning with Deep Representation Surgery | 2024 | Arxiv | |
| Representation Surgery for Multi-Task Model Merging | 2024 | ICML | |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Model Merging for Knowledge Editing | 2025 | ACL | Qwen2.5-7B-Instruct |
| Exact Unlearning of Finetuning Data via Model Merging at Scale | 2025 | Arxiv | |
| ZJUKLAB at SemEval-2025 Task 4: Unlearning via Model Merging | 2025 | Arxiv | OLMo-7B-0724-Instruct |
| Exact Unlearning of Finetuning Data via Model Merging at Scale | 2025 | ICLR 2025 Workshop MCDC | |
| NegMerge: Consensual Weight Negation for Strong Machine Unlearning | 2024 | Arxiv | |
| Split, Unlearn, Merge: Leveraging Data Attributes for More Effective Unlearning in LLMs | 2024 | Arxiv | ZEPHYR-7B-BETA, LLAMA2-7B |
| Towards Safer Large Language Models through Machine Unlearning | 2024 | ACL | LLAMA2-7B, LLAMA2-13B |
| Editing models with task arithmetic | 2023 | ICLR | |
| Forgetting before Learning: Utilizing Parametric Arithmetic for Knowledge Updating in Large Language Model | 2023 | Arxiv | LLAMA2-7B, LLAMA-7B, BLOOM-7B |
| Fuse to Forget: Bias Reduction and Selective Memorization through Model Fusion | 2023 | Arxiv | |
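Several unlearning entries above build on task negation from "Editing models with task arithmetic": fine-tune on the data to be forgotten, compute the resulting task vector, and subtract a scaled copy of it. A minimal sketch with hypothetical dict-of-arrays checkpoints; `negate_task` and the scaling `lam` are illustrative, and the listed papers add their own refinements on top of this basic step.

```python
import numpy as np


def negate_task(weights, pretrained, finetuned_on_forget, lam=1.0):
    """Task negation: theta_new = theta - lam * (theta_forget - theta_pre), per parameter."""
    return {
        name: weights[name] - lam * (finetuned_on_forget[name] - pretrained[name])
        for name in weights
    }


# Hypothetical checkpoints: the pre-trained model and a copy fine-tuned on the forget set.
pretrained = {"w": np.array([1.0, 1.0])}
forget_ft = {"w": np.array([1.5, 0.5])}  # task vector = [0.5, -0.5]

edited = negate_task(pretrained, pretrained, forget_ft, lam=1.0)
print(edited["w"])  # -> 0.5, 1.5
```

In practice `lam` is tuned on held-out data to trade forgetting quality against damage to unrelated capabilities.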

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Sub-MoE: Efficient Mixture-of-Expert LLMs Compression via Subspace Expert Merging | 2025 | Arxiv | Mixtral 8x7B, Qwen3-235B-A22B, Qwen1.5-MoE-A2.7B, and DeepSeekMoE-16B-Base |
| Merging Experts into One: Improving Computational Efficiency of Mixture of Experts | 2023 | EMNLP | |

Note: The following papers are from: LLM Merging Competition at NeurIPS 2024

| Paper Title | Year | Conference/Journal | Models |
|---|---|---|---|
| LLM Merging: Building LLMs Efficiently through Merging | 2024 | LLM Merging Competition at NeurIPS | - |
| Towards an approach combining Knowledge Graphs and Prompt Engineering for Merging Large Language Models | 2024 | LLM Merging Competition at NeurIPS | meta-llama/Llama-2-7b; microsoft_phi1/2/3 |
| Model Merging using Geometric Median of Task Vectors | 2024 | LLM Merging Competition at NeurIPS | flan_t5_xl |
| Interpolated Layer-Wise Merging for NeurIPS 2024 LLM Merging Competition | 2024 | LLM Merging Competition at NeurIPS | suzume-llama-3-8B-multilingual-orpo-borda-top75, Barcenas-Llama3-8bORPO, Llama-3-8B-Ultra-Instruct-SaltSprinkle, MAmmoTH2-8B-Plus, Daredevil-8B |
| A Model Merging Method | 2024 | LLM Merging Competition at NeurIPS | - |
| Differentiable DARE-TIES for NeurIPS 2024 LLM Merging Competition | 2024 | LLM Merging Competition at NeurIPS | suzume-llama-3-8B-multilingual-orpo-borda-top75, MAmmoTH2-8B-Plus, and Llama-3-Refueled |
| LLM Merging Competition Technical Report: Efficient Model Merging with Strategic Model Selection, Merging, and Hyperparameter Optimization | 2024 | LLM Merging Competition at NeurIPS | MaziyarPanahi/Llama3-8B-Instruct-v0.8, MaziyarPanahi/Llama-3-8B-Instruct-v0.9, shenzhiwang/Llama3-8B-Chinese-Chat, lightblue/suzume-llama-3-8B-multilingual |
| Simple Llama Merge: What Kind of LLM Do We Need? | 2024 | LLM Merging Competition at NeurIPS | Hermes-2-Pro-Llama-3-8B and Daredevil-8B |
| LLM Merging Competition Technical Report for NeurIPS 2024: Efficiently Building Large Language Models through Merging | 2024 | LLM Merging Competition at NeurIPS | Mistral-7B-Instruct v2, Llama3-8B-Instruct, Flan-T5-large, Gemma-7B-Instruct, and WizardLM-2-7B |
| MoD: A Distribution-Based Approach for Merging Large Language Models | 2024 | LLM Merging Competition at NeurIPS | Qwen2.5-1.5B and Qwen2.5-7B |
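Several competition entries, including Differentiable DARE-TIES, sparsify task vectors before merging. The core DARE step randomly drops a fraction p of the delta parameters and rescales the survivors by 1/(1 − p), which keeps the task vector unchanged in expectation. A minimal sketch: the function names and the default `drop_rate=0.9` are assumptions for illustration, and the TIES sign-election step is omitted.

```python
import numpy as np


def dare(task_vector, drop_rate=0.9, rng=None):
    """Drop-And-REscale: zero each delta with probability p, rescale survivors by 1/(1-p)."""
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(task_vector.shape) >= drop_rate  # True with probability 1 - p
    return np.where(keep, task_vector / (1.0 - drop_rate), 0.0)


def merge_with_dare(pretrained, finetuned_list, lam=1.0, drop_rate=0.9, seed=0):
    """Sparsify each task vector with DARE, then add them back with task arithmetic."""
    rng = np.random.default_rng(seed)
    sparse = [dare(ft - pretrained, drop_rate, rng) for ft in finetuned_list]
    return pretrained + lam * np.sum(sparse, axis=0)


tau = np.ones(100_000)  # hypothetical dense task vector
sparse_tau = dare(tau, drop_rate=0.9, rng=np.random.default_rng(0))
print((sparse_tau != 0).mean(), sparse_tau.mean())  # roughly 0.1 and 1.0
```

About 90% of the entries become zero, yet the mean stays close to 1, which is why heavily sparsified task vectors can still be merged without a large loss.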

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Jointly training large autoregressive multimodal models | 2024 | ICLR | |
| Model Composition for Multimodal Large Language Models | 2024 | ACL | Vicuna-7B-v1.5 |
| π-Tuning: Transferring Multimodal Foundation Models with Optimal Multi-task Interpolation | 2023 | ICML | |
| An Empirical Study of Multimodal Model Merging | 2023 | EMNLP | |
| UnIVAL: Unified Model for Image, Video, Audio and Language Tasks | 2023 | TMLR | |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Multimodal Attention Merging for Improved Speech Recognition and Audio Event Classification | 2024 | ICASSP Workshop | |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Linear Combination of Saved Checkpoints Makes Consistency and Diffusion Models Better | 2024 | Arxiv | |
| A Unified Module for Accelerating STABLE-DIFFUSION: LCM-LORA | 2024 | Arxiv | |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Decouple-Then-Merge: Towards Better Training for Diffusion Models | 2024 | Arxiv | |
| SELMA: Learning and Merging Skill-Specific Text-to-Image Experts with Auto-Generated Data | 2024 | Arxiv | |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Extrapolating and Decoupling Image-to-Video Generation Models: Motion Modeling is Easier Than You Think | 2025 | CVPR | DynamiCrafter, SVD |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Pareto Merging: Multi-Objective Optimization for Preference-Aware Model Merging | 2025 | ICML | |
| Bone Soups: A Seek-and-Soup Model Merging Approach for Controllable Multi-Objective Generation | 2025 | Arxiv | LLaMA-2 7B |
| You Only Merge Once: Learning the Pareto Set of Preference-Aware Model Merging | 2024 | Arxiv | |
| Towards Efficient Pareto Set Approximation via Mixture of Experts Based Model Fusion | 2024 | Arxiv | |
| MAP: Low-compute Model Merging with Amortized Pareto Fronts via Quadratic Approximation | 2024 | Arxiv | Llama3-8B |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| DEM: Distribution Edited Model for Training with Mixed Data Distributions | 2024 | Arxiv | OpenLLaMA-7B, OpenLLaMA-13B |
| Merging Vision Transformers from Different Tasks and Domains | 2023 | Arxiv | |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| ForkMerge: Mitigating Negative Transfer in Auxiliary-Task Learning | 2023 | NeurIPS | |

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Selecting and Merging: Towards Adaptable and Scalable Named Entity Recognition with Large Language Models | 2025 | Arxiv | Qwen2.5-7B, Llama3.1-8B |
| Harmonizing and Merging Source Models for CLIP-based Domain Generalization | 2025 | Arxiv | |
| Realistic Evaluation of Model Merging for Compositional Generalization | 2024 | Arxiv | |
| Layer-wise Model Merging for Unsupervised Domain Adaptation in Segmentation Tasks | 2024 | Arxiv | |
| Training-Free Model Merging for Multi-target Domain Adaptation | 2024 | Arxiv | |
| Domain Adaptation of Llama3-70B-Instruct through Continual Pre-Training and Model Merging: A Comprehensive Evaluation | 2024 | Arxiv | Llama3-70B |
| Ensemble of averages: Improving model selection and boosting performance in domain generalization | 2022 | NeurIPS | |
| SWAD: Domain generalization by seeking flat minima | 2021 | NeurIPS | |
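SWAD and Ensemble of Averages both average weights collected along a single training run. The sketch below keeps a uniform running average of weight snapshots with the incremental-mean update avg ← avg + (w − avg)/n. It is a simplification under stated assumptions: the class name is hypothetical, and SWAD's validation-based selection of the averaging window is omitted.

```python
import numpy as np


class RunningWeightAverage:
    """Uniform running average of weight snapshots from one training trajectory."""

    def __init__(self):
        self.count = 0
        self.average = None

    def update(self, weights):
        """Fold in one snapshot (dict of name -> array) via avg += (w - avg) / n."""
        self.count += 1
        if self.average is None:
            self.average = {k: np.asarray(v, dtype=float).copy() for k, v in weights.items()}
            return
        for k, v in weights.items():
            self.average[k] += (v - self.average[k]) / self.count


avg = RunningWeightAverage()
for step_weights in ([1.0, 4.0], [2.0, 5.0], [3.0, 6.0]):  # hypothetical snapshots
    avg.update({"w": np.array(step_weights)})
print(avg.average["w"])  # -> 2.0, 5.0
```

The incremental form avoids storing every snapshot, so memory stays at one extra copy of the weights no matter how many checkpoints are averaged.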

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Unlocking Tuning-Free Few-Shot Adaptability in Visual Foundation Models by Recycling Pre-Tuned LoRAs | 2025 | CVPR | |
| LoRA-Flow: Dynamic LoRA Fusion for Large Language Models in Generative Tasks | 2024 | ACL | Llama-2-7B |
| LoraHub: Efficient Cross-Task Generalization via Dynamic LoRA Composition | 2024 | COLM | Llama-2-7B, Llama-2-13B |
| LoraRetriever: Input-Aware LoRA Retrieval and Composition for Mixed Tasks in the Wild | 2024 | ACL | |
| Does Combining Parameter-efficient Modules Improve Few-shot Transfer Accuracy? | 2024 | Arxiv | |
| MerA: Merging pretrained adapters for few-shot learning | 2023 | Arxiv | |
| Multi-Head Adapter Routing for Cross-Task Generalization | 2023 | NeurIPS | |
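LoraHub composes LoRA modules by taking weighted sums of their A and B matrices separately and then multiplying the results. A minimal sketch, assuming the convention ΔW = B·A with A of shape (r, d_in) and B of shape (d_out, r). The weights are given directly here, whereas LoraHub searches for them with a gradient-free optimizer on a few examples. The function name is hypothetical.

```python
import numpy as np


def compose_lora(As, Bs, weights):
    """Weighted LoRA composition: A_hat = sum w_i A_i, B_hat = sum w_i B_i, dW = B_hat @ A_hat."""
    A_hat = sum(w * A for w, A in zip(weights, As))  # (r, d_in)
    B_hat = sum(w * B for w, B in zip(weights, Bs))  # (d_out, r)
    return B_hat @ A_hat                              # (d_out, d_in) weight update


rng = np.random.default_rng(0)
d_in, d_out, r = 4, 3, 2
As = [rng.normal(size=(r, d_in)) for _ in range(2)]   # hypothetical upstream LoRA modules
Bs = [rng.normal(size=(d_out, r)) for _ in range(2)]
delta_w = compose_lora(As, Bs, weights=[0.7, 0.3])
print(delta_w.shape)  # (3, 4)
```

Because A and B are summed before multiplication, the result is not the same as Σ w_i B_i A_i: the cross terms B_i A_j also contribute.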

| Paper Title | Year | Conference/Journal | Remark |
|---|---|---|---|
| Be Cautious When Merging Unfamiliar LLMs: A Phishing Model Capable of Stealing Privacy | 2025 | ACL | Llama-3.2-3b-it, Gemma-2-2b-it, Qwen-2.5-3b-it, and Phi-3.5-mini-it |
| Merge Hijacking: Backdoor Attacks to Model Merging of Large Language Models | 2025 | Arxiv | LLaMA3.1-8B |
| From Purity to Peril: Backdooring Merged Models From “Harmless” Benign Components | 2025 | Arxiv | LLaMA2-7B-chat, Mistral-7B-v0.1 |
| Merger-as-a-Stealer: Stealing Targeted PII from Aligned LLMs with Model Merging | 2025 | Arxiv | |
| Be Cautious When Merging Unfamiliar LLMs: A Phishing Model Capable of Stealing Privacy | 2025 | Arxiv | |
| LoBAM: LoRA-Based Backdoor Attack on Model Merging | 2024 | Arxiv | |
| BadMerging: Backdoor Attacks Against Model Merging | 2024 | CCS | |
| LoRA-as-an-Attack! Piercing LLM Safety Under The Share-and-Play Scenario | 2024 | ACL | Llama-2-7B |


We welcome all researchers to contribute to this repository on model merging in foundation models and machine learning.

If you have a related paper that was not added to the library, please contact us.

Email: [email protected] / [email protected]


Alternative AI tools for Awesome-Model-Merging-Methods-Theories-Applications

Similar Open Source Tools


Awesome-LLM-Constrained-Decoding

Awesome-LLM-Constrained-Decoding is a curated list of papers, code, and resources related to constrained decoding of Large Language Models (LLMs). The repository aims to facilitate reliable, controllable, and efficient generation with LLMs by providing a comprehensive collection of materials in this domain.

Awesome-Resource-Efficient-LLM-Papers

A curated list of high-quality papers on resource-efficient Large Language Models (LLMs) with a focus on various aspects such as architecture design, pre-training, fine-tuning, inference, system design, and evaluation metrics. The repository covers topics like efficient transformer architectures, non-transformer architectures, memory efficiency, data efficiency, model compression, dynamic acceleration, deployment optimization, support infrastructure, and other related systems. It also provides detailed information on computation metrics, memory metrics, energy metrics, financial cost metrics, network communication metrics, and other metrics relevant to resource-efficient LLMs. The repository includes benchmarks for evaluating the efficiency of NLP models and references for further reading.

Awesome-Tabular-LLMs

This repository is a collection of papers on Tabular Large Language Models (LLMs) specialized for processing tabular data. It includes surveys, models, and applications related to table understanding tasks such as Table Question Answering, Table-to-Text, Text-to-SQL, and more. The repository categorizes the papers based on key ideas and provides insights into the advancements in using LLMs for processing diverse tables and fulfilling various tabular tasks based on natural language instructions.

LLM4EC

LLM4EC is an interdisciplinary research repository focusing on the intersection of Large Language Models (LLM) and Evolutionary Computation (EC). It provides a comprehensive collection of papers and resources exploring various applications, enhancements, and synergies between LLM and EC. The repository covers topics such as LLM-assisted optimization, EA-based LLM architecture search, and applications in code generation, software engineering, neural architecture search, and other generative tasks. The goal is to facilitate research and development in leveraging LLM and EC for innovative solutions in diverse domains.

Awesome-Knowledge-Distillation-of-LLMs

A collection of papers related to knowledge distillation of large language models (LLMs). The repository focuses on techniques to transfer advanced capabilities from proprietary LLMs to smaller models, compress open-source LLMs, and refine their performance. It covers various aspects of knowledge distillation, including algorithms, skill distillation, verticalization distillation in fields like law, medical & healthcare, finance, science, and miscellaneous domains. The repository provides a comprehensive overview of the research in the area of knowledge distillation of LLMs.

LLM-KG4QA

LLM-KG4QA is a repository focused on the integration of Large Language Models (LLMs) and Knowledge Graphs (KGs) for Question Answering (QA). It covers various aspects such as using KGs as background knowledge, reasoning guidelines, and refiners/filters. The repository provides detailed information on pre-training, fine-tuning, and Retrieval Augmented Generation (RAG) techniques for enhancing QA performance. It also explores complex QA tasks like Explainable QA, Multi-Modal QA, Multi-Document QA, Multi-Hop QA, Multi-run and Conversational QA, Temporal QA, Multi-domain and Multilingual QA, along with advanced topics like Optimization and Data Management. Additionally, it includes benchmark datasets, industrial and scientific applications, demos, and related surveys in the field.

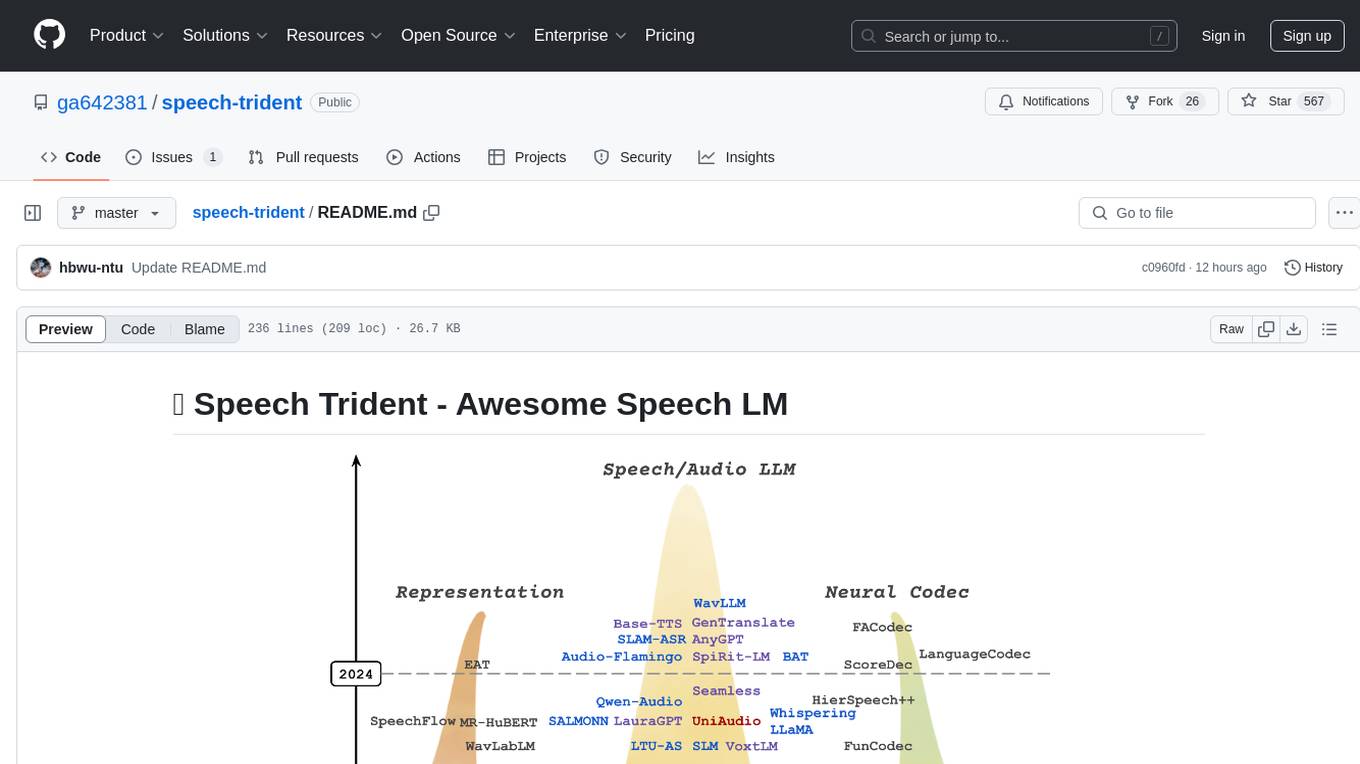

speech-trident

Speech Trident is a repository focusing on speech/audio large language models, covering representation learning, neural codec, and language models. It explores speech representation models, speech neural codec models, and speech large language models. The repository includes contributions from various researchers and provides a comprehensive list of speech/audio language models, representation models, and codec models.

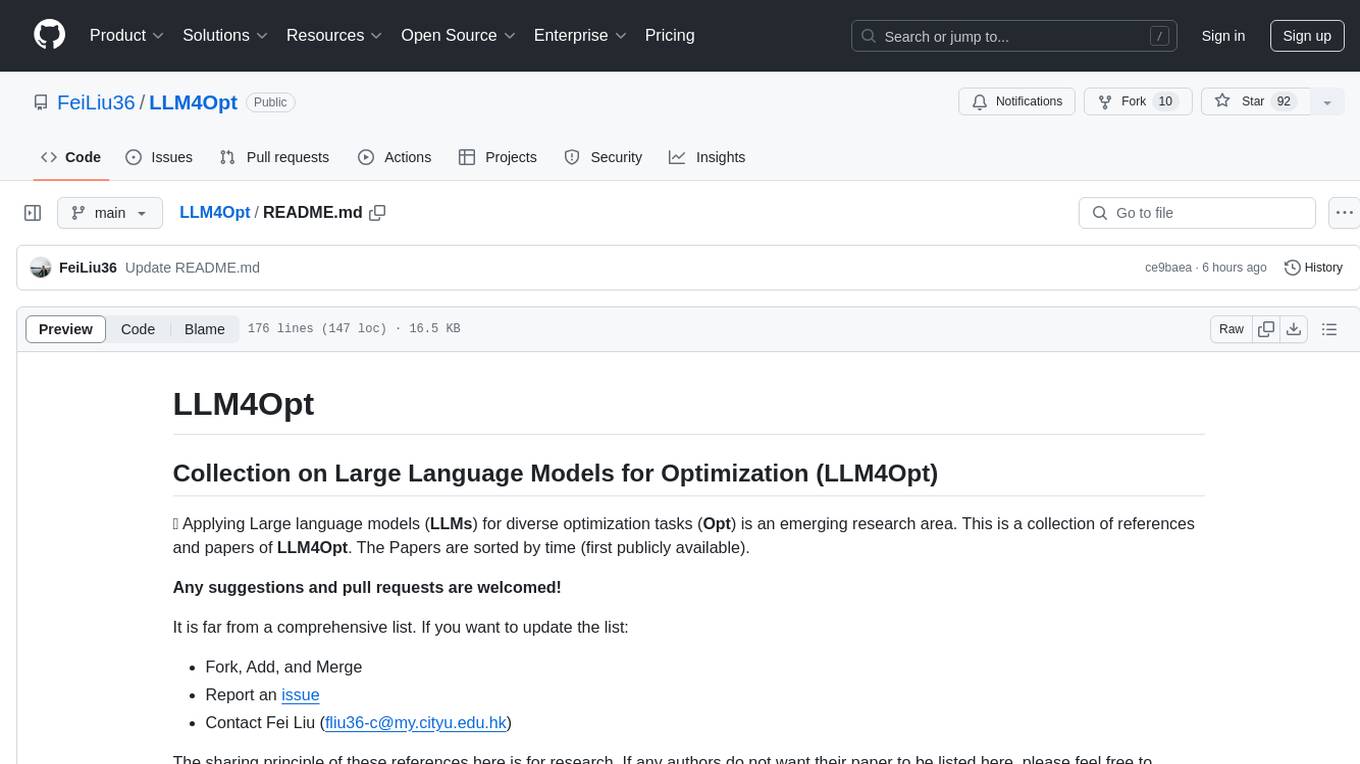

LLM4Opt

LLM4Opt is a collection of references and papers focusing on applying Large Language Models (LLMs) for diverse optimization tasks. The repository includes research papers, tutorials, workshops, competitions, and related collections related to LLMs in optimization. It covers a wide range of topics such as algorithm search, code generation, machine learning, science, industry, and more. The goal is to provide a comprehensive resource for researchers and practitioners interested in leveraging LLMs for optimization tasks.

are-copilots-local-yet

Current trends and state of the art for using open & local LLM models as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more. This document is a curated list of local Copilots, shell assistants, and related projects, intended to be a resource for those interested in a survey of the existing tools and to help developers discover the state of the art for projects like these.

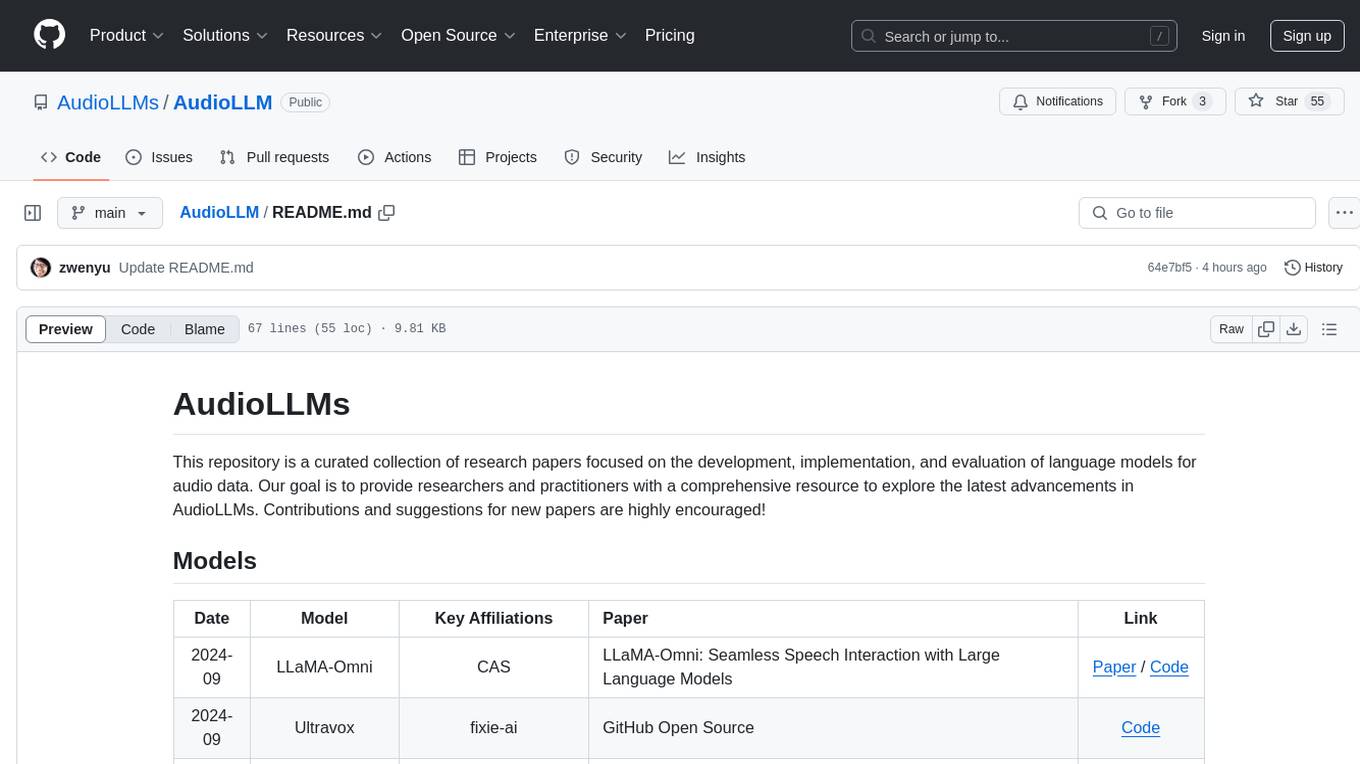

AudioLLM

AudioLLMs is a curated collection of research papers focusing on developing, implementing, and evaluating language models for audio data. The repository aims to provide researchers and practitioners with a comprehensive resource to explore the latest advancements in AudioLLMs. It includes models for speech interaction, speech recognition, speech translation, audio generation, and more. Additionally, it covers methodologies like multitask audioLLMs and segment-level Q-Former, as well as evaluation benchmarks like AudioBench and AIR-Bench. Adversarial attacks such as VoiceJailbreak are also discussed.

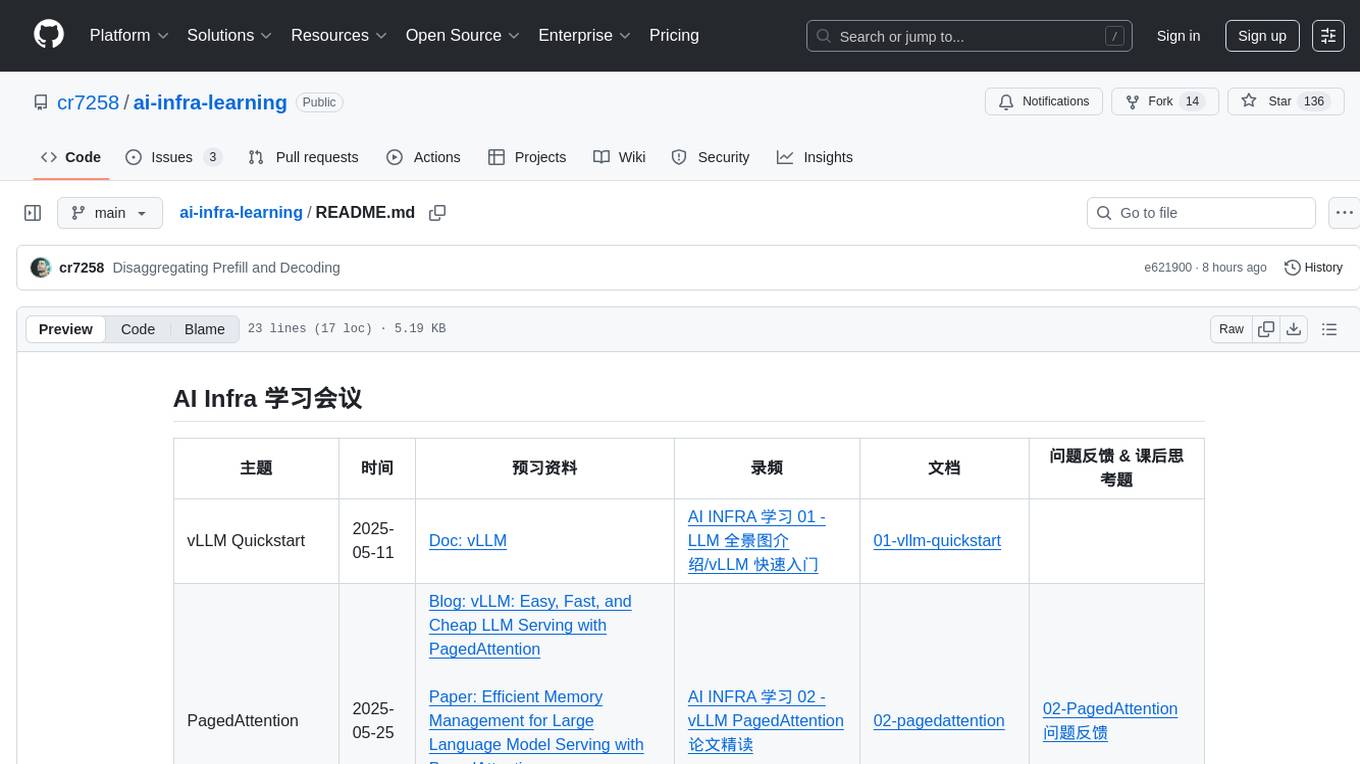

ai-infra-learning

AI Infra Learning is a repository focused on providing resources and materials for learning about various topics related to artificial intelligence infrastructure. The repository includes documentation, papers, videos, and blog posts covering different aspects of AI infrastructure, such as large language models, memory management, decoding techniques, and text generation. Users can access a wide range of materials to deepen their understanding of AI infrastructure and improve their skills in this field.

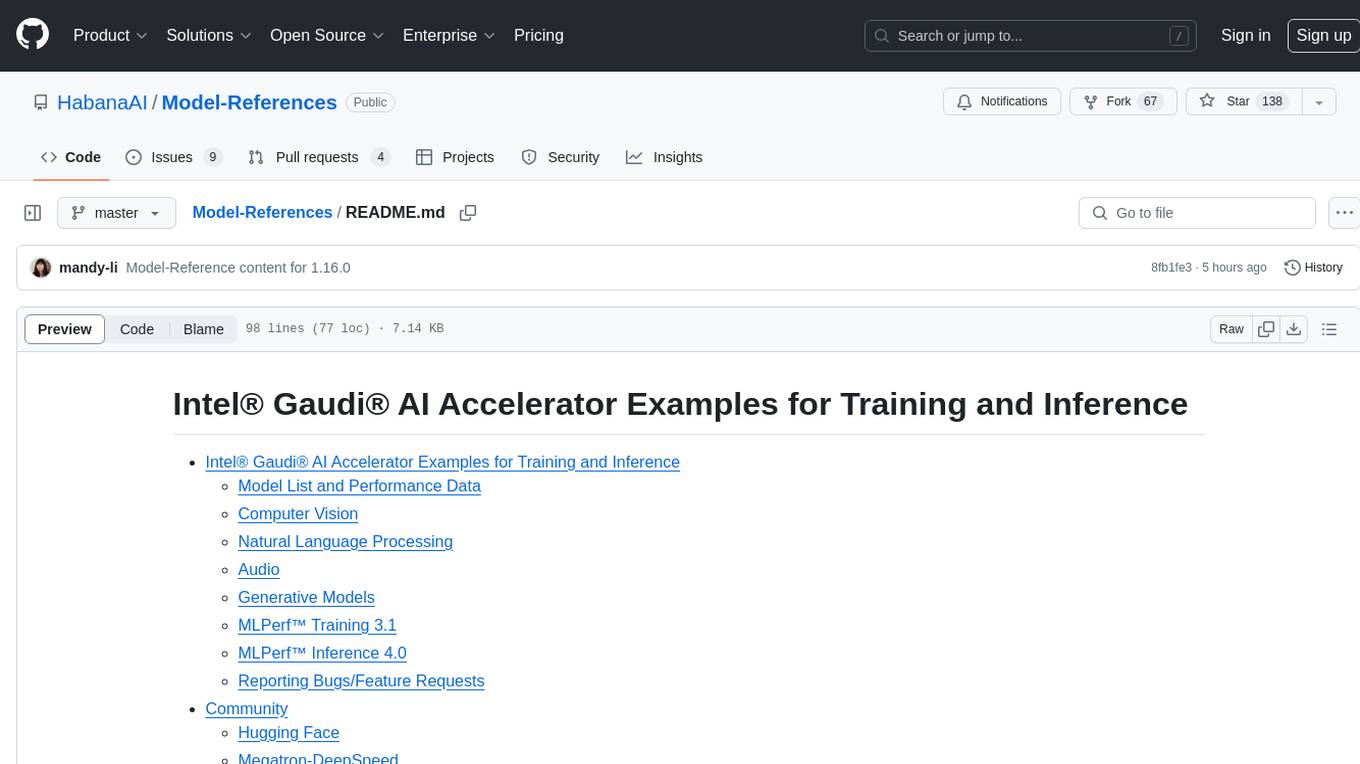

Model-References

The 'Model-References' repository contains examples for training and inference using Intel Gaudi AI Accelerator. It includes models for computer vision, natural language processing, audio, generative models, MLPerf™ training, and MLPerf™ inference. The repository provides performance data and model validation information for various frameworks like PyTorch. Users can find examples of popular models like ResNet, BERT, and Stable Diffusion optimized for Intel Gaudi AI accelerator.

CogVLM2

CogVLM2 is a new generation of open-source models that offers significant improvements on benchmarks such as TextVQA and DocVQA. It supports an 8K context length, image resolutions up to 1344 × 1344, and both Chinese and English. The project provides basic calling methods, fine-tuning examples, and OpenAI API format calling examples to help developers quickly get started with the model.

nntrainer

NNtrainer is a software framework for training neural network models on devices with limited resources. It enables on-device fine-tuning of neural networks using user data for personalization. NNtrainer supports various machine learning algorithms and provides examples for tasks such as few-shot learning, ResNet, VGG, and product rating. It is optimized for embedded devices and utilizes CBLAS and CUBLAS for accelerated calculations. NNtrainer is open source and released under the Apache License version 2.0.

For similar tasks


optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

llm-structured-output

This repository contains a library for constraining LLM generation to structured output, enforcing a JSON schema for precise data types and property names. It includes an acceptor/state machine framework, JSON acceptor, and JSON schema acceptor for guiding decoding in LLMs. The library provides reference implementations using Apple's MLX library and examples for function calling tasks. The tool aims to improve LLM output quality by ensuring adherence to a schema, reducing unnecessary output, and enhancing performance through pre-emptive decoding. Evaluations show performance benchmarks and comparisons with and without schema constraints.

HookPHP

HookPHP is an open-source project that provides a PHP extension for hooking into various aspects of PHP applications. It allows developers to easily extend and customize the behavior of their PHP applications by providing hooks at key points in the execution flow. With HookPHP, developers can efficiently add custom functionality, modify existing behavior, and enhance the overall performance of their PHP applications. The project is licensed under the MIT license, making it accessible for developers to use and contribute to.

ai-gateway

Envoy AI Gateway is an open source project that utilizes Envoy Gateway to manage request traffic from application clients to Generative AI services. The project aims to provide a seamless and efficient solution for handling communication between clients and AI services. It is designed to enhance the performance and scalability of AI applications by leveraging the capabilities of Envoy Gateway. The project welcomes contributions from the community and encourages collaboration to further develop and improve the functionality of the AI Gateway.

aligner

Aligner is a model-agnostic alignment tool designed to efficiently correct responses from large language models. It redistributes initial answers to align with human intentions, improving performance across various LLMs. The tool can be applied with minimal training, enhancing upstream models and reducing hallucination. Aligner's 'copy and correct' method preserves the base structure while enhancing responses. It achieves significant performance improvements in helpfulness, harmlessness, and honesty dimensions, with notable success in boosting Win Rates on evaluation leaderboards.

AirLine

AirLine is a learnable edge-based line detection algorithm designed for various robotic tasks such as scene recognition, 3D reconstruction, and SLAM. It offers a novel approach to extracting line segments directly from edges, enhancing generalization ability for unseen environments. The algorithm balances efficiency and accuracy through a region-grow algorithm and local edge voting scheme for line parameterization. AirLine demonstrates state-of-the-art precision with significant runtime acceleration compared to other learning-based methods, making it ideal for low-power robots.

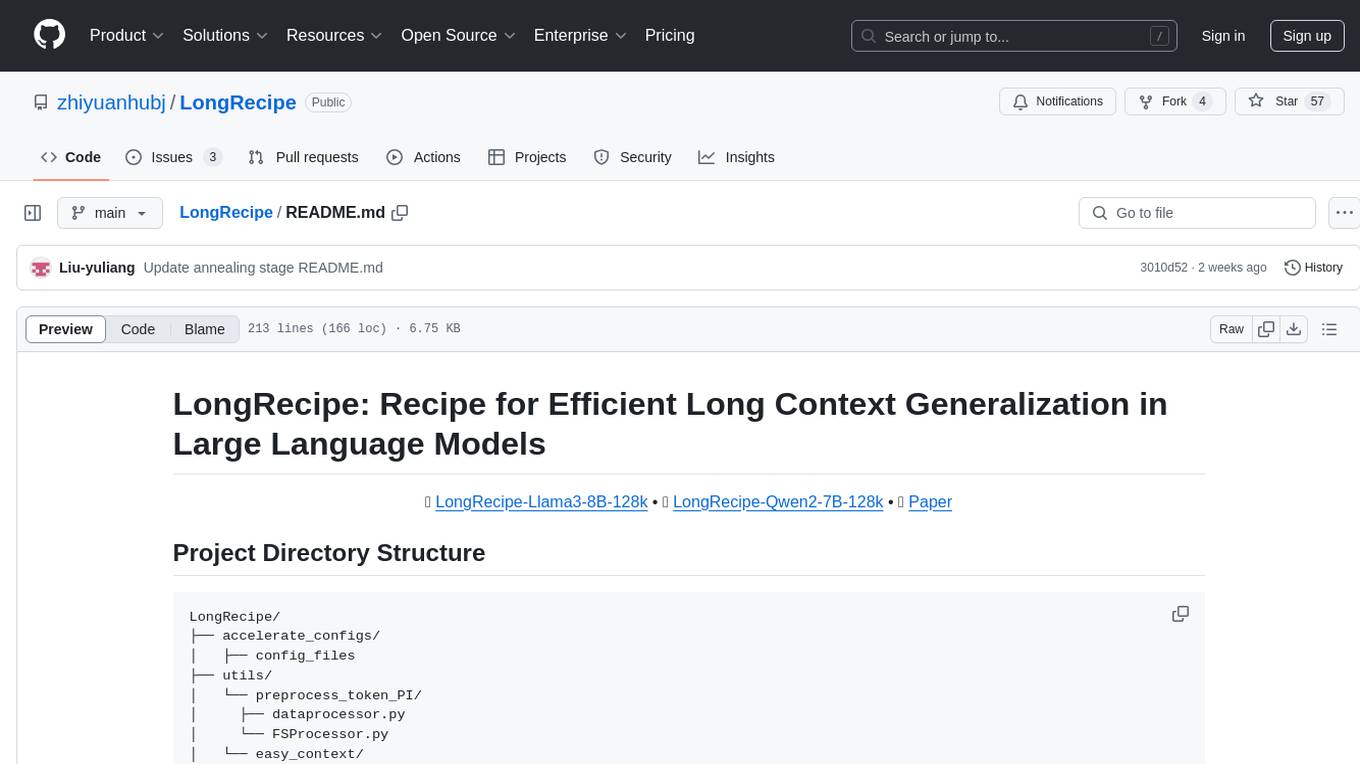

LongRecipe

LongRecipe is a tool designed for efficient long context generalization in large language models. It provides a recipe for extending the context window of language models while maintaining their original capabilities. The tool includes data preprocessing steps, model training stages, and a process for merging fine-tuned models to enhance foundational capabilities. Users can follow the provided commands and scripts to preprocess data, train models in multiple stages, and merge models effectively.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any production LLM use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.