HookPHP

HookPHP基于C扩展搭建内置AI编程的架构系统-支持微服务部署|热插拔业务组件-集成业务模型|权限模型|UI组件库|多模板|多平台|多域名|多终端|多语言-含常驻内存|前后分离|API平台|LUA QQ群:679116380

Stars: 617

HookPHP is an open-source project that provides a PHP extension for hooking into various aspects of PHP applications. It allows developers to easily extend and customize the behavior of their PHP applications by providing hooks at key points in the execution flow. With HookPHP, developers can efficiently add custom functionality, modify existing behavior, and enhance the overall performance of their PHP applications. The project is licensed under the MIT license, making it accessible for developers to use and contribute to.

README:

[yaf]

extension=yaf

yaf.cache_config=1

yaf.use_namespace=1

The HookPHP project is open-sourced software licensed under the MIT license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for HookPHP

Similar Open Source Tools

HookPHP

HookPHP is an open-source project that provides a PHP extension for hooking into various aspects of PHP applications. It allows developers to easily extend and customize the behavior of their PHP applications by providing hooks at key points in the execution flow. With HookPHP, developers can efficiently add custom functionality, modify existing behavior, and enhance the overall performance of their PHP applications. The project is licensed under the MIT license, making it accessible for developers to use and contribute to.

sdk

The SDK repository contains a software development kit that provides tools, libraries, and documentation for developers to build applications for a specific platform or framework. It includes code samples, APIs, and other resources to streamline the development process and enhance the functionality of the applications. Developers can use the SDK to access platform-specific features, integrate with external services, and optimize performance. The repository is regularly updated to ensure compatibility with the latest platform updates and industry standards, making it a valuable resource for developers looking to create high-quality applications efficiently.

airconsole-api

The AirConsole Javascript API provides documentation and guides for developers to create projects that can be run on the AirConsole platform. The API allows developers to integrate features and functionalities specific to AirConsole, enabling them to build interactive and engaging games and applications for the platform. Developers can refer to the provided documentation and example projects to understand how to utilize the API effectively and create their own projects for AirConsole.

bytechef

ByteChef is an open-source, low-code, extendable API integration and workflow automation platform. It provides an intuitive UI Workflow Editor, event-driven & scheduled workflows, multiple flow controls, built-in code editor supporting Java, JavaScript, Python, and Ruby, rich component ecosystem, extendable with custom connectors, AI-ready with built-in AI components, developer-ready to expose workflows as APIs, version control friendly, self-hosted, scalable, and resilient. It allows users to build and visualize workflows, automate tasks across SaaS apps, internal APIs, and databases, and handle millions of workflows with high availability and fault tolerance.

bedrock-engineer

Bedrock Engineer is an AI assistant for software development tasks powered by Amazon Bedrock. It combines large language models with file system operations and web search functionality to support development processes. The autonomous AI agent provides interactive chat, file system operations, web search, project structure management, code analysis, code generation, data analysis, agent and tool customization, chat history management, and multi-language support. Users can select agents, customize them, select tools, and customize tools. The tool also includes a website generator for React.js, Vue.js, Svelte.js, and Vanilla.js, with support for inline styling, Tailwind.css, and Material UI. Users can connect to design system data sources and generate AWS Step Functions ASL definitions.

taipy

Taipy is an open-source Python library for easy, end-to-end application development, featuring what-if analyses, smart pipeline execution, built-in scheduling, and deployment tools.

airavata

Apache Airavata is a software framework for executing and managing computational jobs on distributed computing resources. It supports local clusters, supercomputers, national grids, academic and commercial clouds. Airavata utilizes service-oriented computing, distributed messaging, and workflow composition. It includes a server package with an API, client SDKs, and a general-purpose UI implementation called Apache Airavata Django Portal.

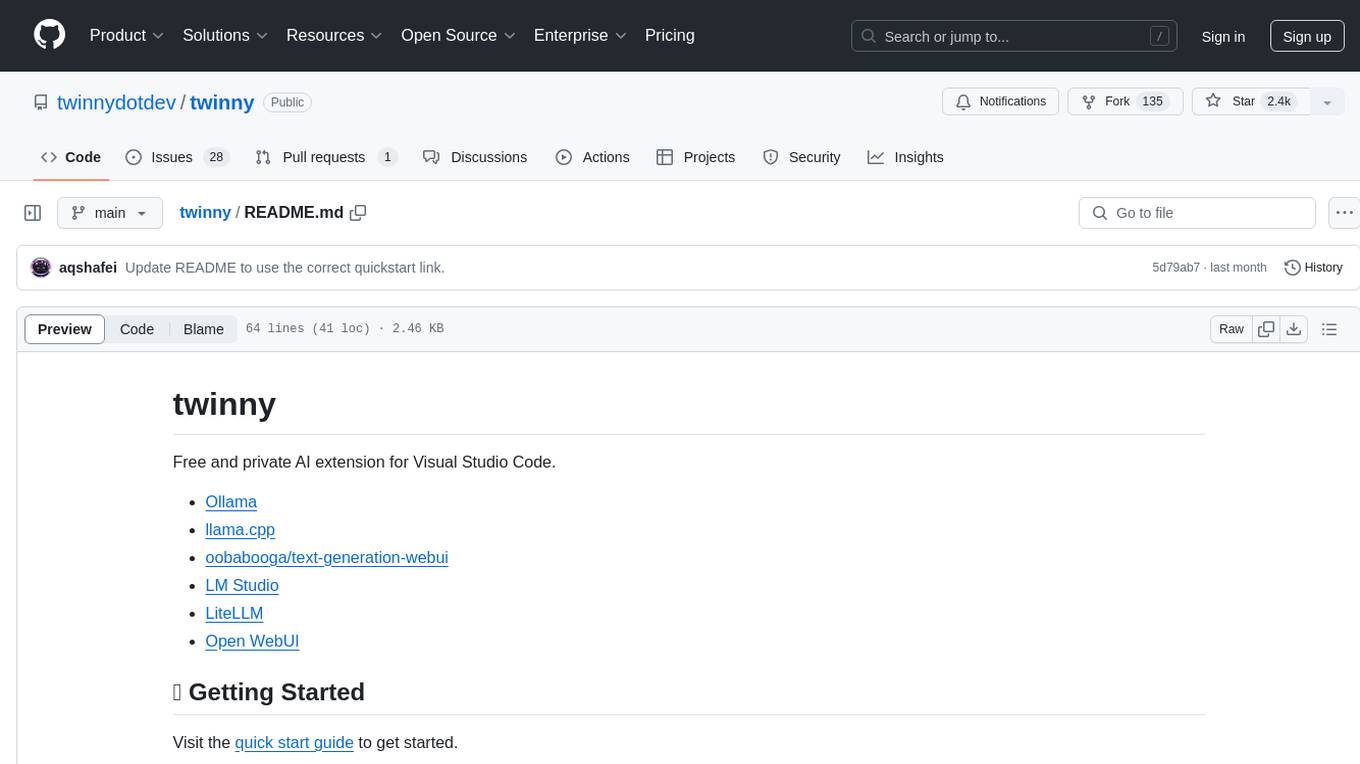

twinny

Twinny is a free and private AI extension for Visual Studio Code that offers AI-based code completion and code discussion features. It provides real-time code suggestions, function explanations, test generation, refactoring requests, and more. Twinny operates both online and offline, supports customizable API endpoints, conforms to OpenAI API standards, and offers various customization options for prompt templates, API providers, model names, and more. It is compatible with multiple APIs and allows users to accept code solutions directly in the editor, create new documents from code blocks, and copy generated code solution blocks. Twinny is open-source under the MIT license and welcomes contributions from the community.

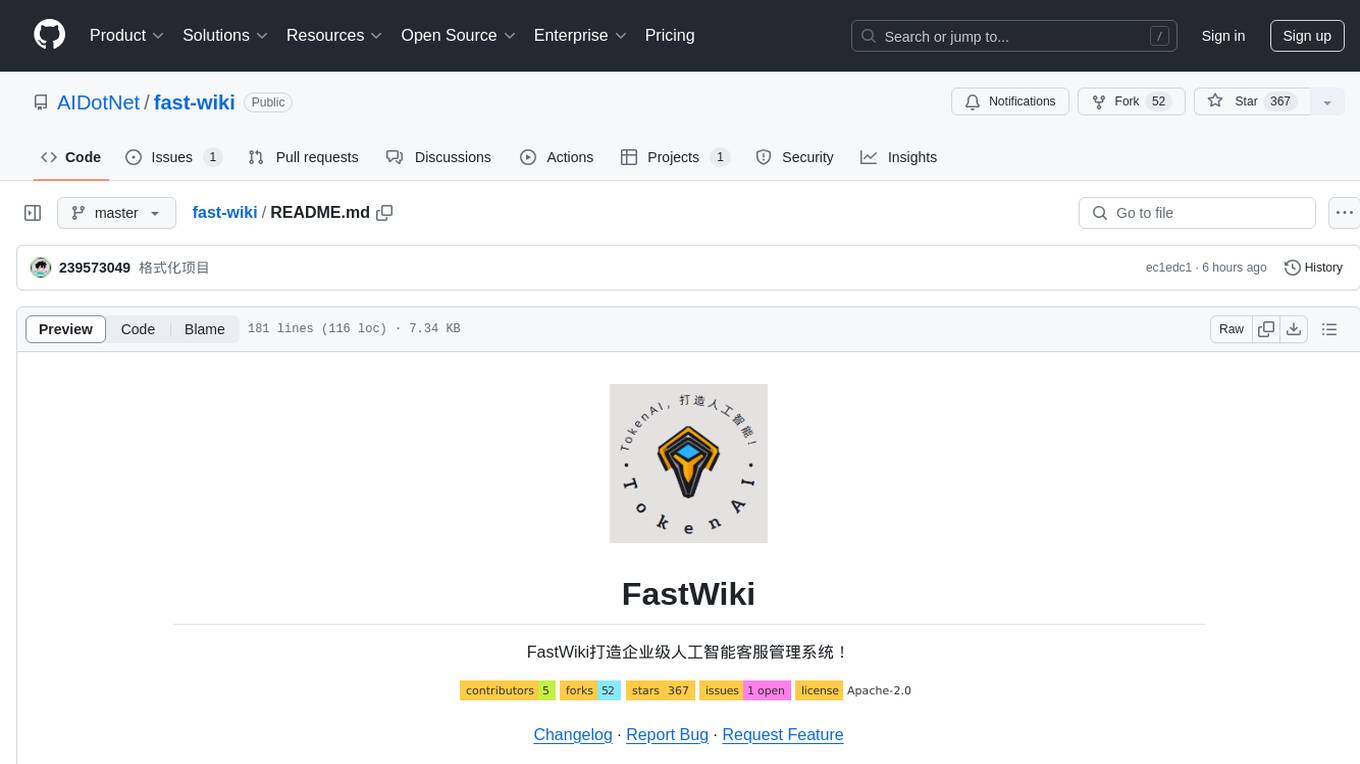

fast-wiki

FastWiki is an enterprise-level artificial intelligence customer service management system. It is a high-performance knowledge base system designed for large-scale information retrieval and intelligent search. Leveraging Microsoft's Semantic Kernel for deep learning and natural language processing, combined with .NET 8 and React framework, it provides an efficient, user-friendly, and scalable intelligent vector search platform. The system aims to offer an intelligent search solution that can understand and process complex queries, assisting users in quickly and accurately obtaining the needed information.

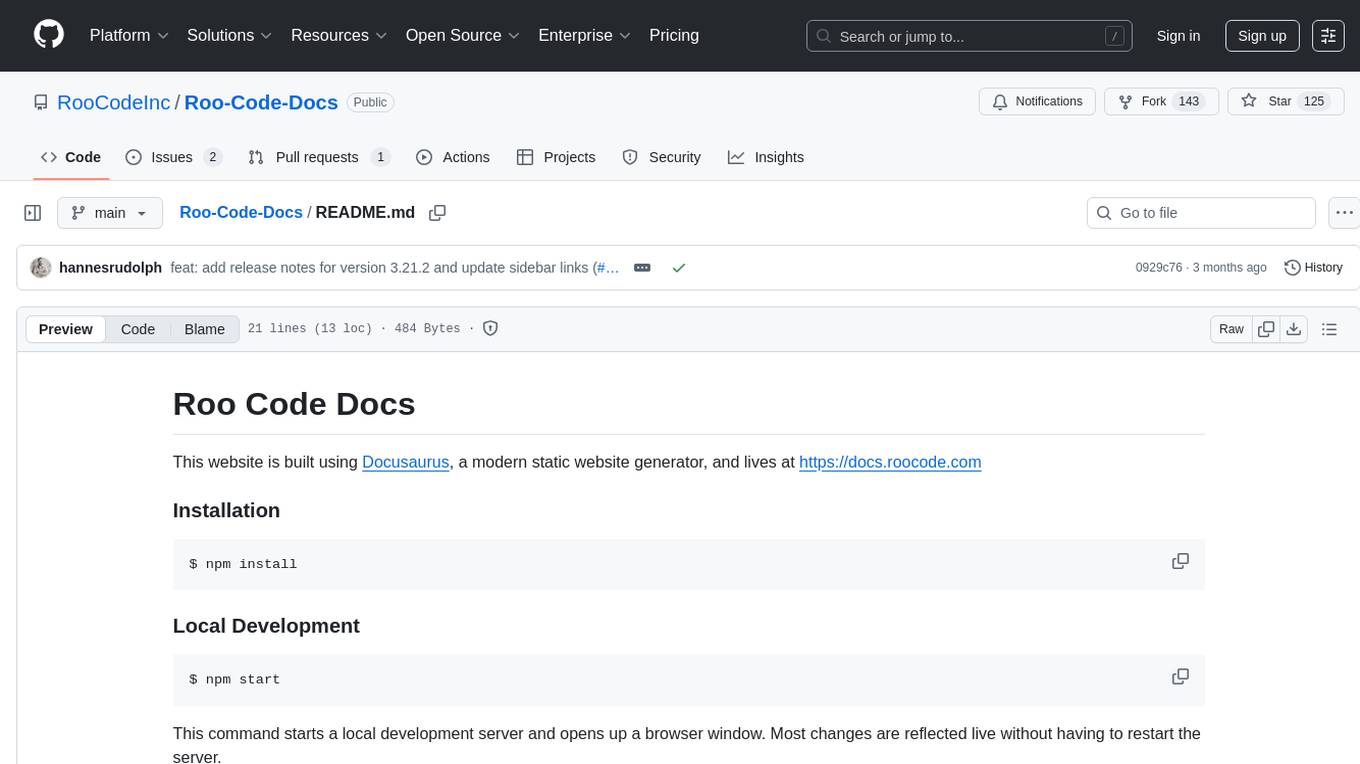

Roo-Code-Docs

Roo Code Docs is a website built using Docusaurus, a modern static website generator. It serves as a documentation platform for Roo Code, accessible at https://docs.roocode.com. The website provides detailed information and guides for users to navigate and utilize Roo Code effectively. With a clean and user-friendly interface, it offers a seamless experience for developers and users seeking information about Roo Code.

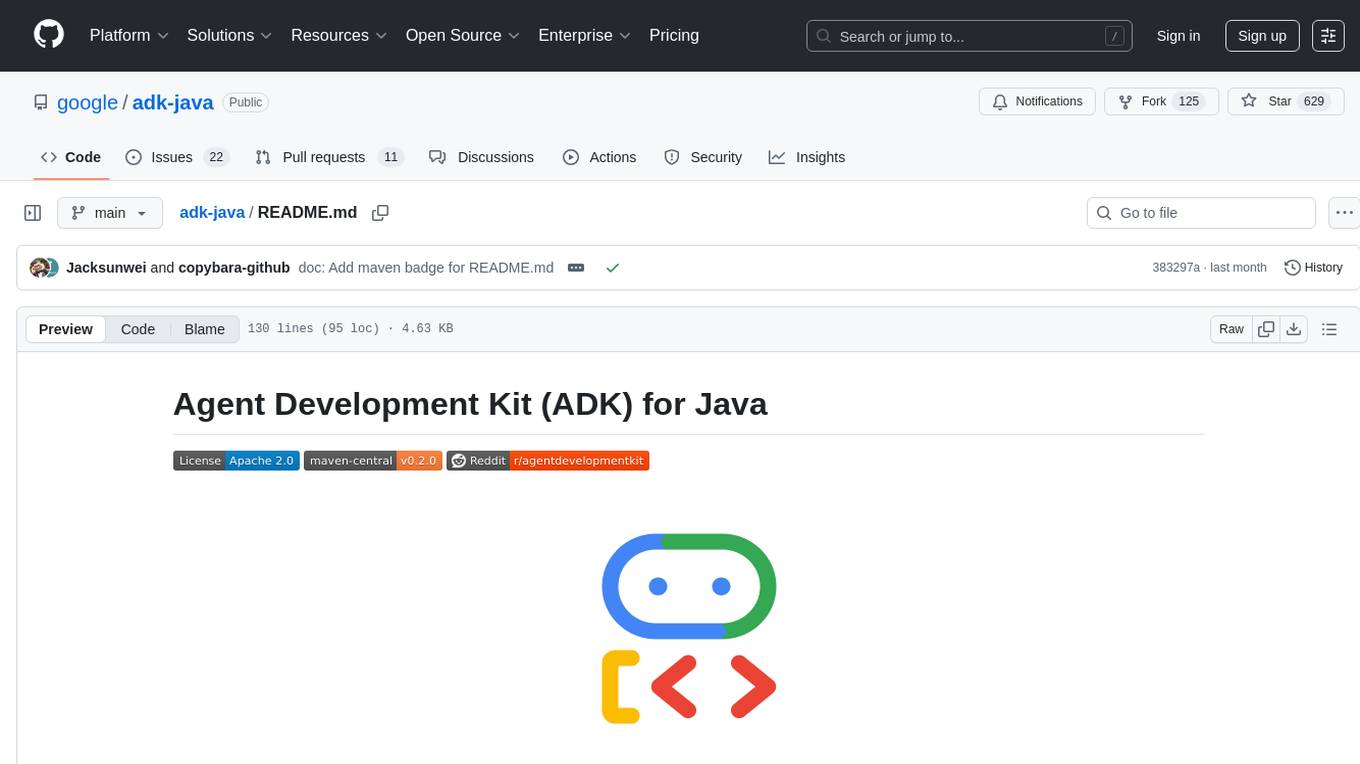

adk-java

Agent Development Kit (ADK) for Java is an open-source toolkit designed for developers to build, evaluate, and deploy sophisticated AI agents with flexibility and control. It allows defining agent behavior, orchestration, and tool use directly in code, enabling robust debugging, versioning, and deployment anywhere. The toolkit offers a rich tool ecosystem, code-first development approach, and support for modular multi-agent systems, making it ideal for creating advanced AI agents integrated with Google Cloud services.

super-agent-party

A 3D AI desktop companion with endless possibilities! This repository provides a platform for enhancing the LLM API without code modification, supporting seamless integration of various functionalities such as knowledge bases, real-time networking, multimodal capabilities, automation, and deep thinking control. It offers one-click deployment to multiple terminals, ecological tool interconnection, standardized interface opening, and compatibility across all platforms. Users can deploy the tool on Windows, macOS, Linux, or Docker, and access features like intelligent agent deployment, VRM desktop pets, Tavern character cards, QQ bot deployment, and developer-friendly interfaces. The tool supports multi-service providers, extensive tool integration, and ComfyUI workflows. Hardware requirements are minimal, making it suitable for various deployment scenarios.

OllamaKit

OllamaKit is a Swift library designed to simplify interactions with the Ollama API. It handles network communication and data processing, offering an efficient interface for Swift applications to communicate with the Ollama API. The library is optimized for use within Ollamac, a macOS app for interacting with Ollama models.

adk-python

Agent Development Kit (ADK) is an open-source, code-first Python toolkit for building, evaluating, and deploying sophisticated AI agents with flexibility and control. It is a flexible and modular framework optimized for Gemini and the Google ecosystem, but also compatible with other frameworks. ADK aims to make agent development feel more like software development, enabling developers to create, deploy, and orchestrate agentic architectures ranging from simple tasks to complex workflows.

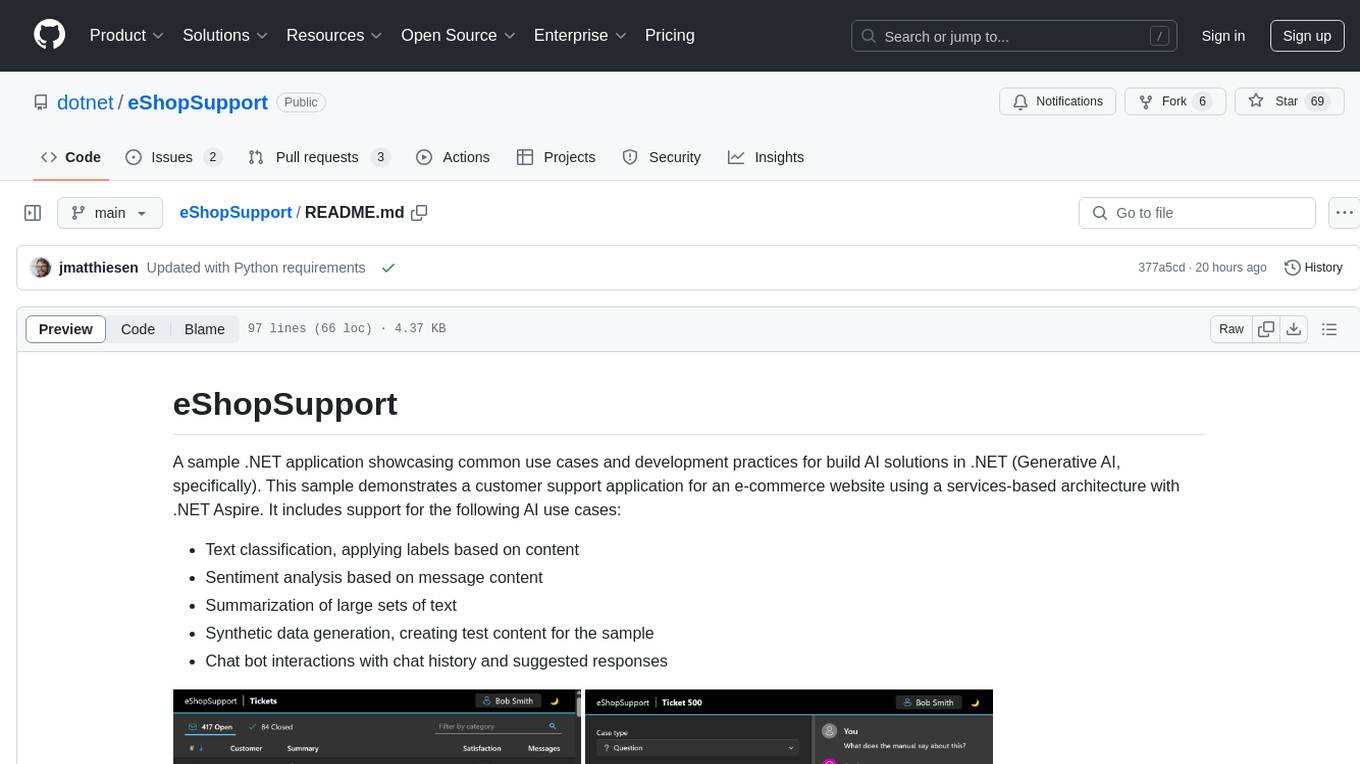

eShopSupport

eShopSupport is a sample .NET application showcasing common use cases and development practices for building AI solutions in .NET, specifically Generative AI. It demonstrates a customer support application for an e-commerce website using a services-based architecture with .NET Aspire. The application includes support for text classification, sentiment analysis, text summarization, synthetic data generation, and chat bot interactions. It also showcases development practices such as developing solutions locally, evaluating AI responses, leveraging Python projects, and deploying applications to the Cloud.

LayaAir

LayaAir engine, under the Layabox brand, is a 3D engine that supports full-platform publishing. It can be applied in various fields such as games, education, advertising, marketing, digital twins, metaverse, AR guides, VR scenes, architectural design, industrial design, etc.

For similar tasks

HookPHP

HookPHP is an open-source project that provides a PHP extension for hooking into various aspects of PHP applications. It allows developers to easily extend and customize the behavior of their PHP applications by providing hooks at key points in the execution flow. With HookPHP, developers can efficiently add custom functionality, modify existing behavior, and enhance the overall performance of their PHP applications. The project is licensed under the MIT license, making it accessible for developers to use and contribute to.

llm-d-inference-scheduler

This repository contains the Inference Scheduler, which makes optimized routing decisions for inference requests to the llm-d inference framework. It provides an 'Endpoint Picker (EPP)' component to the framework, scheduling incoming inference requests via a Kubernetes Gateway according to scheduler plugins. The EPP extends the Gateway API Inference Extension (GIE) project, adding custom features specific to llm-d, such as P/D Disaggregation. The scheduler architecture, routing logic, and plugin configuration are detailed in the Architecture Documentation. Contributions are welcome, and large changes should be discussed with maintainers first.

LeanCopilot

Lean Copilot is a tool that enables the use of large language models (LLMs) in Lean for proof automation. It provides features such as suggesting tactics/premises, searching for proofs, and running inference of LLMs. Users can utilize built-in models from LeanDojo or bring their own models to run locally or on the cloud. The tool supports platforms like Linux, macOS, and Windows WSL, with optional CUDA and cuDNN for GPU acceleration. Advanced users can customize behavior using Tactic APIs and Model APIs. Lean Copilot also allows users to bring their own models through ExternalGenerator or ExternalEncoder. The tool comes with caveats such as occasional crashes and issues with premise selection and proof search. Users can get in touch through GitHub Discussions for questions, bug reports, feature requests, and suggestions. The tool is designed to enhance theorem proving in Lean using LLMs.

langflow

Langflow is an open-source Python-powered visual framework designed for building multi-agent and RAG applications. It is fully customizable, language model agnostic, and vector store agnostic. Users can easily create flows by dragging components onto the canvas, connect them, and export the flow as a JSON file. Langflow also provides a command-line interface (CLI) for easy management and configuration, allowing users to customize the behavior of Langflow for development or specialized deployment scenarios. The tool can be deployed on various platforms such as Google Cloud Platform, Railway, and Render. Contributors are welcome to enhance the project on GitHub by following the contributing guidelines.

TypeGPT

TypeGPT is a Python application that enables users to interact with ChatGPT or Google Gemini from any text field in their operating system using keyboard shortcuts. It provides global accessibility, keyboard shortcuts for communication, and clipboard integration for larger text inputs. Users need to have Python 3.x installed along with specific packages and API keys from OpenAI for ChatGPT access. The tool allows users to run the program normally or in the background, manage processes, and stop the program. Users can use keyboard shortcuts like `/ask`, `/see`, `/stop`, `/chatgpt`, `/gemini`, `/check`, and `Shift + Cmd + Enter` to interact with the application in any text field. Customization options are available by modifying files like `keys.txt` and `system_prompt.txt`. Contributions are welcome, and future plans include adding support for other APIs and a user-friendly GUI.

flutter_gemma

Flutter Gemma is a family of lightweight, state-of-the art open models that bring the power of Google's Gemma language models directly to Flutter applications. It allows for local execution on user devices, supports both iOS and Android platforms, and offers LoRA support for tailored AI behavior. The tool provides a simple interface for integrating Gemma models into Flutter projects, enabling advanced AI capabilities without relying on external servers. Users can easily download pre-trained Gemma models, fine-tune them for specific use cases, and customize behavior using LoRA weights. The tool supports model and LoRA weight management, model initialization, response generation, and chat scenarios, with considerations for model size, LoRA weights, and production app deployment.

tiledesk-chatbot

Tiledesk Chatbot Engine is a Node.js-based framework for creating and managing interactive chatbots. It is designed to work seamlessly with the Tiledesk Design Studio, allowing easy design and customization of chatbot behavior. The engine is scalable, performant, and encourages collaboration and innovation through its open-source nature under the MIT license.

claude-code-settings

A repository collecting best practices for Claude Code settings and customization. It provides configuration files for customizing Claude Code's behavior and building an efficient development environment. The repository includes custom agents and skills for specific domains, interactive development workflow features, efficient development rules, and team workflow with Codex MCP. Users can leverage the provided configuration files and tools to enhance their development process and improve code quality.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently** , resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript** , **directly defining and utilizing the cloud resources necessary for the application within their code base** , such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.