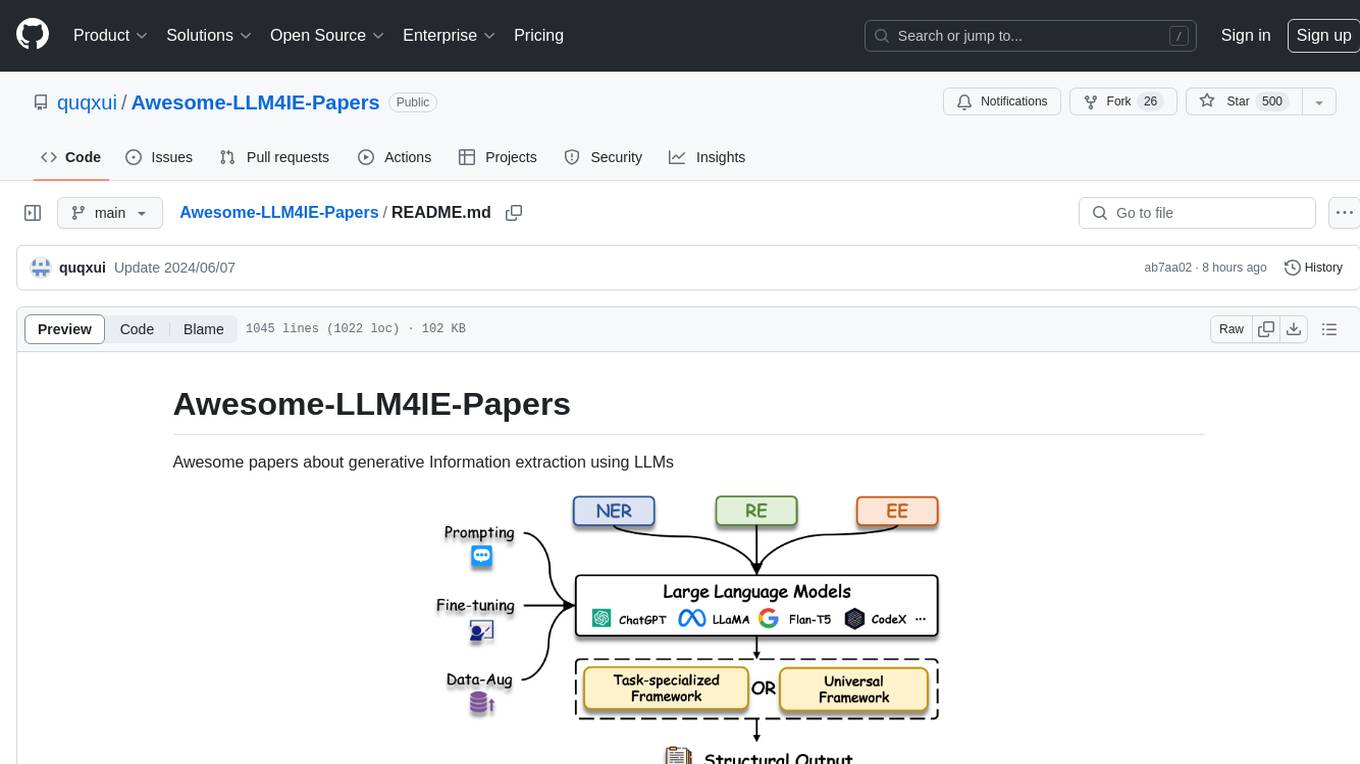

Awesome-LLM-Papers-Comprehensive-Topics

Awesome LLM Papers and repos on very comprehensive topics.

Stars: 172

README:

We provide awesome papers and repos on very comprehensive topics as follows.

CoT / VLM / Quantization / Grounding / Text2IMG&VID / Prompt Engineering / Prompt Tuning / Reasoning / Robot / Agent / Planning / Reinforcement-Learning / Feedback / In-Context-Learning / Few-Shot / Zero-Shot / Instruction Tuning / PEFT / RLHF / RAG / Embodied / VQA / Hallucination / Diffusion / Scaling / Context-Window / WorldModel / Memory / RoPE / Speech / Perception / Survey / Segmentation / Large Action Model / Foundation / LoRA / PPO / DPO

We strongly recommend checking our Notion table for an interactive experience.

| Category | Title | Links | Date |
|---|---|---|---|
| Zero-shot | Can Foundation Models Perform Zero-Shot Task Specification For Robot Manipulation? | | |
| World-model | Leveraging Pre-trained Large Language Models to Construct and Utilize World Models for Model-based Task Planning | ArXiv | 2023/05/07 |
| World-model | Learning and Leveraging World Models in Visual Representation Learning | | |
| World-model | Language Models Meet World Models | ArXiv | |
| World-model | Learning to Model the World with Language | ArXiv | |
| World-model | Diffusion World Model | ArXiv | |
| World-model | Learning to Model the World with Language | ArXiv | |
| VisualPrompt | Ferret-v2: An Improved Baseline for Referring and Grounding with Large Language Models | ArXiv | |
| VisualPrompt | Making Large Multimodal Models Understand Arbitrary Visual Prompts | ArXiv | |
| VisualPrompt | What does CLIP know about a red circle? Visual prompt engineering for VLMs | ArXiv | |
| VisualPrompt | MOKA: Open-Vocabulary Robotic Manipulation through Mark-Based Visual Prompting | ArXiv | |
| VisualPrompt | SoM: Set-of-Mark Prompting Unleashes Extraordinary Visual Grounding in GPT-4V | ArXiv | |
| VisualPrompt | Set-of-Mark Prompting Unleashes Extraordinary Visual Grounding in GPT-4V | ArXiv, GitHub | |
| Video | MA-LMM: Memory-Augmented Large Multimodal Model for Long-Term Video Understanding | ArXiv | |
| ViFM, Video | InternVideo2: Scaling Video Foundation Models for Multimodal Video Understanding | ArXiv, GitHub | |
| VLM, World-model | Large World Model | ArXiv | |
| VLM, VQA | CogVLM: Visual Expert for Pretrained Language Models | ArXiv | 2023/11/06 |
| VLM, VQA | Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models | ArXiv | 2023/04/19 |
| VLM, VQA | DeepSeek-VL: Towards Real-World Vision-Language Understanding | | |
| VLM | PaLM: Scaling Language Modeling with Pathways | ArXiv | 2022/04/05 |
| VLM | ScreenAI: A Vision-Language Model for UI and Infographics Understanding | | |
| VLM | MoE-LLaVA: Mixture of Experts for Large Vision-Language Models | ArXiv, GitHub | |
| VLM | LLaVA-NeXT: Improved reasoning, OCR, and world knowledge | GitHub | |
| VLM | Mini-Gemini: Mining the Potential of Multi-modality Vision Language Models | ArXiv | |
| Text-to-Image, World-model | World Model on Million-Length Video And Language With RingAttention | ArXiv | |
| Text2Img | Be Yourself: Bounded Attention for Multi-Subject Text-to-Image Generation | ArXiv | |
| Temporal | Explorative Inbetweening of Time and Space | ArXiv | |
| Survey, Video | Video Understanding with Large Language Models: A Survey | ArXiv | |
| Survey, VLM | MM-LLMs: Recent Advances in MultiModal Large Language Models | | |
| Survey, Training | Understanding LLMs: A Comprehensive Overview from Training to Inference | ArXiv | |
| Survey, TimeSeries | Large Models for Time Series and Spatio-Temporal Data: A Survey and Outlook | | |
| Survey | Efficient Large Language Models: A Survey | ArXiv, GitHub | |
| Sora, Text-to-Video | Sora: A Review on Background, Technology, Limitations, and Opportunities of Large Vision Models | | |
| Sora, Text-to-Video | Mora: Enabling Generalist Video Generation via A Multi-Agent Framework | ArXiv | |
| Segmentation | LISA: Reasoning Segmentation via Large Language Model | ArXiv | |
| Segmentation | GRES: Generalized Referring Expression Segmentation | | |
| Segmentation | Generalized Decoding for Pixel, Image, and Language | ArXiv | |
| Segmentation | SEEM: Segment Everything Everywhere All at Once | ArXiv, GitHub | |
| Segmentation | SegGPT: Segmenting Everything In Context | ArXiv | |
| Segmentation | Grounded SAM: Assembling Open-World Models for Diverse Visual Tasks | ArXiv | |
| Scaling | Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention | ArXiv | |
| SLM, Scaling | Textbooks Are All You Need | ArXiv | |
| Robot, Zero-shot | BC-Z: Zero-Shot Task Generalization with Robotic Imitation Learning | ArXiv | |
| Robot, Zero-shot | Universal Manipulation Interface: In-The-Wild Robot Teaching Without In-The-Wild Robots | ArXiv | |
| Robot, Zero-shot | Mirage: Cross-Embodiment Zero-Shot Policy Transfer with Cross-Painting | ArXiv | |
| Robot, Task-Decompose, Zero-shot | Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents | ArXiv | 2022/01/18 |
| Robot, Task-Decompose | SayPlan: Grounding Large Language Models using 3D Scene Graphs for Scalable Robot Task Planning | ArXiv | 2023/07/12 |
| Robot, TAMP | LLM3: Large Language Model-based Task and Motion Planning with Motion Failure Reasoning | ArXiv | |
| Robot, TAMP | Task and Motion Planning with Large Language Models for Object Rearrangement | ArXiv | |
| Robot, Survey | Toward General-Purpose Robots via Foundation Models: A Survey and Meta-Analysis | ArXiv | 2023/12/14 |
| Robot, Survey | Language-conditioned Learning for Robotic Manipulation: A Survey | ArXiv | 2023/12/17 |
| Robot, Survey | Robot Learning in the Era of Foundation Models: A Survey | ArXiv | 2023/11/24 |
| Robot, Survey | Real-World Robot Applications of Foundation Models: A Review | ArXiv | |
| Robot | OK-Robot: What Really Matters in Integrating Open-Knowledge Models for Robotics | ArXiv | |
| Robot | RoCo: Dialectic Multi-Robot Collaboration with Large Language Models | ArXiv | |
| Robot | Interactive Language: Talking to Robots in Real Time | ArXiv | |
| Robot | Reflexion: Language Agents with Verbal Reinforcement Learning | ArXiv | 2023/03/20 |
| Robot | Generative Expressive Robot Behaviors using Large Language Models | ArXiv | |
| Robot | RoboCat: A Self-Improving Generalist Agent for Robotic Manipulation | | |
| Robot | Introspective Tips: Large Language Model for In-Context Decision Making | ArXiv | |
| Robot | PIVOT: Iterative Visual Prompting Elicits Actionable Knowledge for VLMs | ArXiv | |
| Robot | OCI-Robotics: Object-Centric Instruction Augmentation for Robotic Manipulation | ArXiv | |
| Robot | DeliGrasp: Inferring Object Mass, Friction, and Compliance with LLMs for Adaptive and Minimally Deforming Grasp Policies | ArXiv | |
| Robot | VoxPoser: Composable 3D Value Maps for Robotic Manipulation with Language Models | ArXiv | |
| Robot | Creative Robot Tool Use with Large Language Models | ArXiv | |
| Robot | AutoTAMP: Autoregressive Task and Motion Planning with LLMs as Translators and Checkers | ArXiv | |
| RoPE | RoFormer: Enhanced Transformer with Rotary Position Embedding | ArXiv | |
| Resource | [Resource] Paperswithcode | ArXiv | |
| Resource | [Resource] huggingface | ArXiv | |
| Resource | [Resource] dailyarxiv | ArXiv | |
| Resource | [Resource] Connectedpapers | ArXiv | |
| Resource | [Resource] Semanticscholar | ArXiv | |
| Resource | [Resource] AlphaSignal | ArXiv | |
| Resource | [Resource] arxiv-sanity | ArXiv | |
| Reinforcement-Learning, VIMA | FoMo Rewards: Can we cast foundation models as reward functions? | ArXiv | |
| Reinforcement-Learning, Robot | Towards A Unified Agent with Foundation Models | ArXiv | |
| Reinforcement-Learning | Large Language Models Are Semi-Parametric Reinforcement Learning Agents | ArXiv | |
| Reinforcement-Learning | RLang: A Declarative Language for Describing Partial World Knowledge to Reinforcement Learning Agents | ArXiv | |
| Reasoning, Zero-shot | Large Language Models are Zero-Shot Reasoners | ArXiv | |
| Reasoning, VLM, VQA | MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action | ArXiv | 2023/03/20 |
| Reasoning, Table | Large Language Models are few(1)-shot Table Reasoners | ArXiv | |
| Reasoning, Symbolic | Symbol-LLM: Leverage Language Models for Symbolic System in Visual Human Activity Reasoning | ArXiv | |
| Reasoning, Survey | Reasoning with Language Model Prompting: A Survey | ArXiv | |
| Reasoning, Robot | AlphaBlock: Embodied Finetuning for Vision-Language Reasoning in Robot Manipulation | ArXiv | |
| Reasoning, Reward | LET’S REWARD STEP BY STEP: STEP-LEVEL REWARD MODEL AS THE NAVIGATORS FOR REASONING | ArXiv | |
| Reasoning, Reinforcement-Learning | ReFT: Reasoning with Reinforced Fine-Tuning | | |
| Reasoning | Selection-Inference: Exploiting Large Language Models for Interpretable Logical Reasoning | ArXiv | |
| Reasoning | ReConcile: Round-Table Conference Improves Reasoning via Consensus among Diverse LLMs. | ArXiv | |
| Reasoning | Self-Discover: Large Language Models Self-Compose Reasoning Structures | ArXiv | |
| Reasoning | Chain-of-Thought Reasoning Without Prompting | ArXiv | |
| Reasoning | Contrastive Chain-of-Thought Prompting | ArXiv | |
| Reasoning | Rephrase and Respond(RaR) | | |
| Reasoning | Take a Step Back: Evoking Reasoning via Abstraction in Large Language Models | ArXiv | |
| Reasoning | STaR: Bootstrapping Reasoning With Reasoning | ArXiv | 2022/05/28 |
| Reasoning | The Impact of Reasoning Step Length on Large Language Models | ArXiv | |
| Reasoning | Beyond Natural Language: LLMs Leveraging Alternative Formats for Enhanced Reasoning and Communication | ArXiv | |
| Reasoning | Large Language Models as General Pattern Machines | ArXiv | |
| RLHF, Reinforcement-Learning, Survey | A Survey of Reinforcement Learning from Human Feedback | | |
| RLHF | Secrets of RLHF in Large Language Models Part II: Reward Modeling | ArXiv | |
| RAG, Temporal Logics | FreshLLMs: Refreshing Large Language Models with Search Engine Augmentation | ArXiv | |
| RAG, Survey | Large Language Models for Information Retrieval: A Survey | | |
| RAG, Survey | Retrieval-Augmented Generation for Large Language | | |
| RAG, Survey | Retrieval-Augmented Generation for Large Language Models: A Survey | ArXiv | |
| RAG | Training Language Models with Memory Augmentation | | |
| RAG | Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection | | |
| RAG | RAG-Fusion: a New Take on Retrieval-Augmented Generation | ArXiv | |
| RAG | RAFT: Adapting Language Model to Domain Specific RAG | ArXiv | |
| RAG | Adaptive-RAG: Learning to Adapt Retrieval-Augmented Large Language Models through Question Complexity | ArXiv | |
| RAG | RAG vs Fine-tuning: Pipelines, Tradeoffs, and a Case Study on Agriculture | ArXiv | |
| RAG | Fine-Tuning or Retrieval? Comparing Knowledge Injection in LLMs | ArXiv | |
| Quantization, Scaling | SliceGPT: Compress Large Language Models by Deleting Rows and Columns | ArXiv | |
| Prompting, Survey | A Systematic Survey of Prompt Engineering in Large Language Models: Techniques and Applications | ArXiv | |
| Prompting, Robot, Zero-shot | Zero-Shot Task Generalization with Multi-Task Deep Reinforcement Learning | ArXiv | |
| Prompting | Contrastive Chain-of-Thought Prompting | ArXiv | |
| PersonalCitation, Robot | Text2Motion: From Natural Language Instructions to Feasible Plans | ArXiv | |
| Perception, Video, Vision | CLIP4Clip: An Empirical Study of CLIP for End to End Video Clip Retrieval | ArXiv | |
| Perception, Task-Decompose | DoReMi: Grounding Language Model by Detecting and Recovering from Plan-Execution Misalignment | ArXiv | 2023/07/01 |
| Perception, Robot, Segmentation | Language Segment-Anything | | |
| Perception, Robot | LiDAR-LLM: Exploring the Potential of Large Language Models for 3D LiDAR Understanding | ArXiv | 2023/12/21 |
| Perception, Reasoning, Robot | Reasoning Grasping via Multimodal Large Language Model | ArXiv | |
| Perception, Reasoning | DetGPT: Detect What You Need via Reasoning | ArXiv | |
| Perception, Reasoning | Lenna: Language Enhanced Reasoning Detection Assistant | ArXiv | |
| Perception | Simple Open-Vocabulary Object Detection with Vision Transformers | ArXiv | 2022/05/12 |
| Perception | Grounded Language-Image Pre-training | ArXiv | 2021/12/07 |
| Perception | Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection | ArXiv | 2023/03/09 |
| Perception | PointCLIP: Point Cloud Understanding by CLIP | ArXiv | 2021/12/04 |
| Perception | DINO: DETR with Improved DeNoising Anchor Boxes for End-to-End Object Detection | ArXiv | |
| Perception | Recognize Anything: A Strong Image Tagging Model | ArXiv | |
| Perception | Simple Open-Vocabulary Object Detection with Vision Transformers | ArXiv | |
| Perception | Sigmoid Loss for Language Image Pre-Training | ArXiv | |
| Package | LlamaIndex | GitHub | |
| Package | LangChain | GitHub | |
| Package | h2oGPT | GitHub | |
| Package | Dify | GitHub | |
| Package | Alpaca-LoRA | GitHub | |
| Package | Promptlayer | GitHub | |
| Package | unsloth | GitHub | |
| Package | Instructor: Structured LLM Outputs | GitHub | |
| PRM | Let's Verify Step by Step | ArXiv | |
| PRM | Let's reward step by step: Step-Level reward model as the Navigators for Reasoning | ArXiv | |
| PPO, RLHF, Reinforcement-Learning | Secrets of RLHF in Large Language Models Part I: PPO | ArXiv | 2024/02/01 |
| Open-source, VLM | OpenFlamingo: An Open-Source Framework for Training Large Autoregressive Vision-Language Models | ArXiv | 2023/08/02 |
| Open-source, SLM | RecurrentGemma: Moving Past Transformers for Efficient Open Language Models | ArXiv | |
| Open-source, Perception | Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection | ArXiv | |
| Open-source | Gemma: Introducing new state-of-the-art open models | ArXiv | |
| Open-source | Mistral 7B | ArXiv | |
| Open-source | Qwen Technical Report | ArXiv | |
| Navigation, Reasoning, Vision | NavGPT: Explicit Reasoning in Vision-and-Language Navigation with Large Language Models | ArXiv | |
| Natural-Language-as-Policies, Robot | RT-H: Action Hierarchies Using Language | ArXiv | |
| Multimodal, Robot, VLM | Open-World Object Manipulation using Pre-trained Vision-Language Models | ArXiv | 2023/03/02 |
| Multimodal, Robot | MOMA-Force: Visual-Force Imitation for Real-World Mobile Manipulation | ArXiv | 2023/08/07 |
| Multimodal, Robot | Flamingo: a Visual Language Model for Few-Shot Learning | ArXiv | 2022/04/29 |
| Multi-Images, VLM | Mantis: Multi-Image Instruction Tuning | ArXiv, GitHub | |
| MoE | Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity | ArXiv | |
| MoE | Sparse MoE as the New Dropout: Scaling Dense and Self-Slimmable Transformers | ArXiv | |
| Mixtral, MoE | Mixtral of Experts | ArXiv | |
| Memory, Robot | LLM as A Robotic Brain: Unifying Egocentric Memory and Control | ArXiv | 2023/04/19 |
| Memory, Reinforcement-Learning | Semantic HELM: A Human-Readable Memory for Reinforcement Learning | | |
| Math, Reasoning | DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models | ArXiv, GitHub | |
| Math, PRM | Math-Shepherd: Verify and Reinforce LLMs Step-by-step without Human Annotations | ArXiv | |
| Math | WizardMath: Empowering Mathematical Reasoning for Large Language Models via Reinforced Evol-Instruct | ArXiv | |
| Math | Llemma: An Open Language Model For Mathematics | ArXiv | |
| Low-level-action, Robot | SayTap: Language to Quadrupedal Locomotion | ArXiv | 2023/06/13 |
| Low-level-action, Robot | Prompt a Robot to Walk with Large Language Models | ArXiv | 2023/09/18 |
| LoRA, Scaling | LoRA: Low-Rank Adaptation of Large Language Models | ArXiv | |
| LoRA, Scaling | Vera: A General-Purpose Plausibility Estimation Model for Commonsense Statements | ArXiv | |
| LoRA | LoRA Land: 310 Fine-tuned LLMs that Rival GPT-4, A Technical Report | ArXiv | |
| Lab | Tencent AI Lab - AppAgent, WebVoyager | | |
| Lab | DeepWisdom - MetaGPT | | |
| Lab | Reworkd AI - AgentGPT | | |
| Lab | OpenBMB - ChatDev, XAgent, AgentVerse | | |
| Lab | XLANG NLP Lab - OpenAgents | | |
| Lab | Rutgers University, AGI Research - OpenAGI | | |
| Lab | Knowledge Engineering Group (KEG) & Data Mining at Tsinghua University - CogVLM | | |
| Lab | OpenGVLab | GitHub | |
| Lab | Imperial College London - Zeroshot trajectory | | |
| Lab | sensetime | | |
| Lab | tsinghua | | |
| Lab | Fudan NLP Group | | |
| Lab | Penn State University | | |
| LLaVA, VLM | TinyLLaVA: A Framework of Small-scale Large Multimodal Models | ArXiv | |
| LLaVA, MoE, VLM | MoE-LLaVA: Mixture of Experts for Large Vision-Language Models | | |
| LLaMA, Lightweight, Open-source | MobiLlama: Towards Accurate and Lightweight Fully Transparent GPT | | |
| LLM, Zero-shot | GPT4Vis: What Can GPT-4 Do for Zero-shot Visual Recognition? | ArXiv | 2023/11/27 |
| LLM, Temporal Logics | NL2TL: Transforming Natural Languages to Temporal Logics using Large Language Models | ArXiv | 2023/05/12 |
| LLM, Survey | A Survey of Large Language Models | ArXiv | 2023/03/31 |
| LLM, Spatial | Can Large Language Models be Good Path Planners? A Benchmark and Investigation on Spatial-temporal Reasoning | ArXiv | 2023/10/05 |
| LLM, Scaling | BitNet: Scaling 1-bit Transformers for Large Language Models | ArXiv | |
| LLM, Robot, Task-Decompose | Do As I Can, Not As I Say: Grounding Language in Robotic Affordances | ArXiv | 2022/04/04 |
| LLM, Robot, Survey | Large Language Models for Robotics: A Survey | ArXiv | |
| LLM, Reasoning, Survey | Towards Reasoning in Large Language Models: A Survey | ArXiv | 2022/12/20 |
| LLM, Quantization | The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits | ArXiv | |
| LLM, PersonalCitation, Robot, Zero-shot | Language Models as Zero-Shot Trajectory Generators | ArXiv | |
| LLM, PersonalCitation, Robot | Tree-Planner: Efficient Close-loop Task Planning with Large Language Models | | |
| LLM, Open-source | A self-hosted, offline, ChatGPT-like chatbot, powered by Llama 2. 100% private, with no data leaving your device. | GitHub | |
| LLM, Open-source | OpenFlamingo: An Open-Source Framework for Training Large Autoregressive Vision-Language Models | ArXiv | 2023/08/02 |
| LLM, Open-source | InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning | ArXiv | 2023/05/11 |
| LLM, Open-source | ChatBridge: Bridging Modalities with Large Language Model as a Language Catalyst | ArXiv | 2023/05/25 |
| LLM, Memory | MemoryBank: Enhancing Large Language Models with Long-Term Memory | ArXiv | 2023/05/17 |
| LLM, Leaderboard | LMSYS Chatbot Arena Leaderboard | | |
| LLM | Language Models are Few-Shot Learners | ArXiv | 2020/05/28 |
| Interactive, OpenGVLab, VLM | InternGPT: Solving Vision-Centric Tasks by Interacting with ChatGPT Beyond Language | ArXiv | 2023/05/09 |
| Instruction-Tuning, Survey | Is Prompt All You Need? No. A Comprehensive and Broader View of Instruction Learning | | |
| Instruction-Tuning, Survey | Vision-Language Instruction Tuning: A Review and Analysis | ArXiv | |
| Instruction-Tuning, Survey | A Closer Look at the Limitations of Instruction Tuning | ArXiv | |
| Instruction-Tuning, Survey | A Survey on Data Selection for LLM Instruction Tuning | ArXiv | |
| Instruction-Tuning, Self | Self-Instruct: Aligning Language Models with Self-Generated Instructions | ArXiv | |
| Instruction-Tuning, LLM, Zero-shot | Finetuned Language Models Are Zero-Shot Learners | ArXiv | 2021/09/03 |
| Instruction-Tuning, LLM, Survey | Instruction Tuning for Large Language Models: A Survey | | |
| Instruction-Tuning, LLM, PEFT | Visual Instruction Tuning | ArXiv | 2023/04/17 |
| Instruction-Tuning, LLM, PEFT | LLaMA-Adapter: Efficient Fine-tuning of Language Models with Zero-init Attention | ArXiv | 2023/03/28 |
| Instruction-Tuning, LLM | Training language models to follow instructions with human feedback | ArXiv | 2022/03/04 |
| Instruction-Tuning, LLM | MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models | ArXiv | 2023/04/20 |
| Instruction-Tuning, LLM | Self-Instruct: Aligning Language Models with Self-Generated Instructions | ArXiv | 2022/12/20 |
| Instruction-Tuning | A Closer Look at the Limitations of Instruction Tuning | | |
| Instruction-Tuning | Exploring Format Consistency for Instruction Tuning | | |
| Instruction-Tuning | Exploring the Benefits of Training Expert Language Models over Instruction Tuning | ArXiv | 2023/02/06 |
| Instruction-Tuning | Tuna: Instruction Tuning using Feedback from Large Language Models | ArXiv | 2023/03/06 |
| In-Context-Learning, Vision | What Makes Good Examples for Visual In-Context Learning? | | |
| In-Context-Learning, Vision | Visual Prompting via Image Inpainting | ArXiv | |
| In-Context-Learning, Video | Prompting Visual-Language Models for Efficient Video Understanding | | |
| In-Context-Learning, VQA | VisualCOMET: Reasoning about the Dynamic Context of a Still Image | ArXiv | 2020/04/22 |
| In-Context-Learning, VQA | SINC: Self-Supervised In-Context Learning for Vision-Language Tasks | ArXiv | 2023/07/15 |
| In-Context-Learning, Survey | A Survey on In-context Learning | ArXiv | |
| In-Context-Learning, Scaling | Structured Prompting: Scaling In-Context Learning to 1,000 Examples | ArXiv | 2020/03/06 |
| In-Context-Learning, Scaling | Rethinking the Role of Scale for In-Context Learning: An Interpretability-based Case Study at 66 Billion Scale | ArXiv | 2022/03/06 |
| In-Context-Learning, Reinforcement-Learning | AMAGO: Scalable In-Context Reinforcement Learning for Adaptive Agents | ArXiv | |
| In-Context-Learning, Prompt-Tuning | Visual Prompt Tuning | ArXiv | |
| In-Context-Learning, Perception, Vision | Visual In-Context Prompting | ArXiv | |
| In-Context-Learning, Many-Shot, Reasoning | Many-Shot In-Context Learning | ArXiv | |
| In-Context-Learning, Instruction-Tuning | In-Context Instruction Learning | | |
| In-Context-Learning | ReAct: Synergizing Reasoning and Acting in Language Models | ArXiv | 2023/03/20 |
| In-Context-Learning | Small Models are Valuable Plug-ins for Large Language Models | ArXiv | 2023/05/15 |
| In-Context-Learning | Generative Agents: Interactive Simulacra of Human Behavior | ArXiv | 2023/04/07 |
| In-Context-Learning | Beyond the Imitation Game: Quantifying and extrapolating the capabilities of language models | ArXiv | 2022/06/09 |
| In-Context-Learning | What does CLIP know about a red circle? Visual prompt engineering for VLMs | ArXiv | |
| In-Context-Learning | Can large language models explore in-context? | ArXiv | |
| Image, LLaMA, Perception | LLaMA-VID: An Image is Worth 2 Tokens in Large Language Models | ArXiv | |
| Hallucination, Survey | Combating Misinformation in the Age of LLMs: Opportunities and Challenges | ArXiv | |
| Gym, PPO, Reinforcement-Learning, Survey | Can Language Agents Approach the Performance of RL? An Empirical Study On OpenAI Gym | ArXiv | |
| Grounding, Reinforcement-Learning | Grounding Large Language Models in Interactive Environments with Online Reinforcement Learning | ArXiv | |
| Grounding, Reasoning | Visually Grounded Reasoning across Languages and Cultures | ArXiv | |
| Grounding | V-IRL: Grounding Virtual Intelligence in Real Life | | |
| Google, Grounding | GLaMM: Pixel Grounding Large Multimodal Model | ArXiv | |
| Generation, Survey | Advances in 3D Generation: A Survey | | |
| Generation, Robot, Zero-shot | Zero-Shot Robotic Manipulation with Pretrained Image-Editing Diffusion Models | ArXiv | |
| Generation, Robot, Zero-shot | Towards Generalizable Zero-Shot Manipulation via Translating Human Interaction Plans | | |
| GPT4V, Robot, VLM | Closed-Loop Open-Vocabulary Mobile Manipulation with GPT-4V | ArXiv | |
| GPT4, LLM | GPT-4 Technical Report | ArXiv | 2023/03/15 |
| GPT4, Instruction-Tuning | INSTRUCTION TUNING WITH GPT-4 | ArXiv | |
| GPT4, Gemini, LLM | Gemini vs GPT-4V: A Preliminary Comparison and Combination of Vision-Language Models Through Qualitative Cases | ArXiv | 2023/12/22 |
| Foundation, Robot, Survey | Foundation Models in Robotics: Applications, Challenges, and the Future | ArXiv | 2023/12/13 |
| Foundation, LLaMA, Vision | VisionLLaMA: A Unified LLaMA Interface for Vision Tasks | ArXiv | |
| Foundation, LLM, Open-source | Code Llama: Open Foundation Models for Code | ArXiv | |
| Foundation, LLM, Open-source | LLaMA: Open and Efficient Foundation Language Models | ArXiv | 2023/02/27 |
| Feedback, Robot | Correcting Robot Plans with Natural Language Feedback | ArXiv | |
| Feedback, Robot | Learning to Learn Faster from Human Feedback with Language Model Predictive Control | ArXiv | |
| Feedback, Robot | REFLECT: Summarizing Robot Experiences for Failure Explanation and Correction | ArXiv | 2023/06/27 |
| Feedback, In-Context-Learning, Robot | InCoRo: In-Context Learning for Robotics Control with Feedback Loops | ArXiv | |
| Evaluation, LLM, Survey | A Survey on Evaluation of Large Language Models | ArXiv | |
| Evaluation | simple-evals | GitHub | |
| End2End, Multimodal, Robot | VIMA: General Robot Manipulation with Multimodal Prompts | ArXiv | 2022/10/06 |
| End2End, Multimodal, Robot | PaLM-E: An Embodied Multimodal Language Model | ArXiv | 2023/03/06 |
| End2End, Multimodal, Robot | Physically Grounded Vision-Language Models for Robotic Manipulation | ArXiv | 2023/09/05 |
| Embodied | Embodied Question Answering | ArXiv | |
| Embodied, World-model | Language Models Meet World Models: Embodied Experiences Enhance Language Models | | |
| Embodied, Robot, Task-Decompose | Embodied Task Planning with Large Language Models | ArXiv | 2023/07/04 |
| Embodied, Robot | Large Language Models as Generalizable Policies for Embodied Tasks | ArXiv | |
| Embodied, Reasoning, Robot | Natural Language as Policies: Reasoning for Coordinate-Level Embodied Control with LLMs | ArXiv, GitHub | 2024/03/20 |
| Embodied, LLM, Robot, Survey | The Development of LLMs for Embodied Navigation | ArXiv | 2023/11/01 |
| Driving, Spatial | GPT-Driver: Learning to Drive with GPT | ArXiv | 2023/10/02 |
| Drive, Survey | A Survey on Multimodal Large Language Models for Autonomous Driving | ArXiv | |
| Distilling, Survey | A Survey on Knowledge Distillation of Large Language Models | | |
| Distilling | Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes | ArXiv | |
| Diffusion, Text-to-Image | Mastering Text-to-Image Diffusion: Recaptioning, Planning, and Generating with Multimodal LLMs | ArXiv | |
| Diffusion, Survey | On the Design Fundamentals of Diffusion Models: A Survey | ArXiv | |
| Diffusion, Robot | 3D Diffusion Policy | ArXiv | |
| Diffusion | A latent text-to-image diffusion model | | |
| Demonstration, GPT4, PersonalCitation, Robot, VLM | GPT-4V(ision) for Robotics: Multimodal Task Planning from Human Demonstration | | |
| Dataset, LLM, Survey | A Survey on Data Selection for Language Models | ArXiv | |
| Dataset, Instruction-Tuning | REVO-LION: EVALUATING AND REFINING VISION LANGUAGE INSTRUCTION TUNING DATASETS | | |
| Dataset, Instruction-Tuning | Synthetic Data (Almost) from Scratch: Generalized Instruction Tuning for Language Models | | |
| Dataset | PRM800K: A Process Supervision Dataset | GitHub | |
| Data-generation, Robot | GenSim: Generating Robotic Simulation Tasks via Large Language Models | ArXiv | 2023/10/02 |
| Data-generation, Robot | RoboGen: Towards Unleashing Infinite Data for Automated Robot Learning via Generative Simulation | ArXiv | 2023/11/02 |
| DPO, PPO, RLHF | A Comprehensive Survey of LLM Alignment Techniques: RLHF, RLAIF, PPO, DPO and More | ArXiv | |
| DPO | Is DPO Superior to PPO for LLM Alignment? A Comprehensive Study | ArXiv | |
| Context-Window, Scaling | Infini-gram: Scaling Unbounded n-gram Language Models to a Trillion Tokens | | |
| Context-Window, Scaling | LONGNET: Scaling Transformers to 1,000,000,000 Tokens | ArXiv | 2023/07/01 |
| Context-Window, Reasoning, RoPE, Scaling | Resonance RoPE: Improving Context Length Generalization of Large Language Models | ArXiv | |
| Context-Window, LLM, RoPE, Scaling | LongRoPE: Extending LLM Context Window Beyond 2 Million Tokens | ArXiv | |
| Context-Window, Foundation, Gemini, LLM, Scaling | Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context | | |
| Context-Window, Foundation | Mamba: Linear-Time Sequence Modeling with Selective State Spaces | ArXiv | |
| Context-Window | RoFormer: Enhanced Transformer with Rotary Position Embedding | ArXiv | |
| Context-Aware, Context-Window | DynaCon: Dynamic Robot Planner with Contextual Awareness via LLMs | ArXiv | |
| Computer-Resource, Scaling | FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness | ArXiv | |
| Compress, Scaling | (Long)LLMLingua: Enhancing Large Language Model Inference via Prompt Compression | ArXiv | |
| Compress, Quantization, Survey | A Survey on Model Compression for Large Language Models | ArXiv | |
| Compress, Prompting | Learning to Compress Prompts with Gist Tokens | ArXiv | |
| Code-as-Policies, VLM, VQA | Visual Programming: Compositional visual reasoning without training | ArXiv | 2022/11/18 |
| Code-as-Policies, Robot | SMART-LLM: Smart Multi-Agent Robot Task Planning using Large Language Models | ArXiv | 2023/09/18 |
| Code-as-Policies, Robot | RoboScript: Code Generation for Free-Form Manipulation Tasks across Real and Simulation | ArXiv | |
| Code-as-Policies, Robot | Creative Robot Tool Use with Large Language Models | ArXiv | |
| Code-as-Policies, Reinforcement-Learning, Reward | Code as Reward: Empowering Reinforcement Learning with VLMs | ArXiv | |
| Code-as-Policies, Reasoning, VLM, VQA | ViperGPT: Visual Inference via Python Execution for Reasoning | ArXiv | 2023/03/14 |
| Code-as-Policies, Reasoning | Chain of Code: Reasoning with a Language Model-Augmented Code Emulator | ArXiv | |
| Code-as-Policies, PersonalCitation, Robot, Zero-shot | Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language | ArXiv | 2022/04/01 |
| Code-as-Policies, PersonalCitation, Robot, State-Manage | Statler: State-Maintaining Language Models for Embodied Reasoning | ArXiv | 2023/06/30 |
| Code-as-Policies, PersonalCitation, Robot | ProgPrompt: Generating Situated Robot Task Plans using Large Language Models | ArXiv | 2022/09/22 |
| Code-as-Policies, PersonalCitation, Robot | RoboCodeX: Multi-modal Code Generation for Robotic Behavior Synthesis | ArXiv | |
| Code-as-Policies, PersonalCitation, Robot | RoboGPT: an intelligent agent of making embodied long-term decisions for daily instruction tasks | | |
| Code-as-Policies, PersonalCitation, Robot | ChatGPT for Robotics: Design Principles and Model Abilities | | |
| Code-as-Policies, Multimodal, OpenGVLab, PersonalCitation, Robot | Instruct2Act: Mapping Multi-modality Instructions to Robotic Actions with Large Language Model | ArXiv | 2023/05/18 |
| Code-as-Policies, Embodied, PersonalCitation, Robot | Code as Policies: Language Model Programs for Embodied Control | ArXiv | 2022/09/16 |
| Code-as-Policies, Embodied, PersonalCitation, Reasoning, Robot, Task-Decompose | Inner Monologue: Embodied Reasoning through Planning with Language Models | ArXiv | |
| Code-LLM, Front-End | Design2Code: How Far Are We From Automating Front-End Engineering? | ArXiv | |
| Code-LLM | StarCoder 2 and The Stack v2: The Next Generation | ||

| Chain-of-Thought, Reasoning, Table | Chain-of-table: Evolving tables in the reasoning chain for table understanding | ArXiv | |

| Chain-of-Thought, Reasoning, Survey | Towards Understanding Chain-of-Thought Prompting: An Empirical Study of What Matters | ArXiv | 2023/12/20 |

| Chain-of-Thought, Reasoning, Survey | A Survey of Chain of Thought Reasoning: Advances, Frontiers and Future | ArXiv | 2023/09/27 |

| Chain-of-Thought, Reasoning | Chain-of-Thought Prompting Elicits Reasoning in Large Language Models | ArXiv | 2022/01/28 |

| Chain-of-Thought, Reasoning | Tree of Thoughts: Deliberate Problem Solving with Large Language Models | ArXiv | 2023/05/17 |

| Chain-of-Thought, Reasoning | Multimodal Chain-of-Thought Reasoning in Language Models | ArXiv | 2023/02/02 |

| Chain-of-Thought, Reasoning | Verify-and-Edit: A Knowledge-Enhanced Chain-of-Thought Framework | ArXiv | 2023/05/05 |

| Chain-of-Thought, Reasoning | Skeleton-of-Thought: Large Language Models Can Do Parallel Decoding | ArXiv | 2023/07/28 |

| Chain-of-Thought, Reasoning | Rethinking with Retrieval: Faithful Large Language Model Inference | ArXiv | 2022/12/31 |

| Chain-of-Thought, Reasoning | Self-Consistency Improves Chain of Thought Reasoning in Language Models | ArXiv | 2022/03/21 |

| Chain-of-Thought, Reasoning | Chain-of-Thought Hub: A Continuous Effort to Measure Large Language Models' Reasoning Performance | ArXiv | 2023/05/26 |

| Chain-of-Thought, Reasoning | Skeleton-of-Thought: Prompting LLMs for Efficient Parallel Generation | ArXiv | |

| Chain-of-Thought, Prompting | Chain-of-Thought Reasoning Without Prompting | ArXiv | |

| Chain-of-Thought, Planning, Reasoning | SelfCheck: Using LLMs to Zero-Shot Check Their Own Step-by-Step Reasoning | ArXiv | 2023/08/01 |

| Chain-of-Thought, In-Context-Learning, Self | Measuring and Narrowing the Compositionality Gap in Language Models | ArXiv | 2022/10/07 |

| Chain-of-Thought, In-Context-Learning, Self | Self-Polish: Enhance Reasoning in Large Language Models via Problem Refinement | ArXiv | 2023/05/23 |

| Chain-of-Thought, In-Context-Learning | Chain-of-Table: Evolving Tables in the Reasoning Chain for Table Understanding | ArXiv | |

| Chain-of-Thought, In-Context-Learning | Self-Refine: Iterative Refinement with Self-Feedback | ArXiv | 2023/03/30 |

| Chain-of-Thought, In-Context-Learning | Plan-and-Solve Prompting: Improving Zero-Shot Chain-of-Thought Reasoning by Large Language Models | ArXiv | 2023/05/06 |

| Chain-of-Thought, In-Context-Learning | PAL: Program-aided Language Models | ArXiv | 2022/11/18 |

| Chain-of-Thought, In-Context-Learning | Reasoning with Language Model is Planning with World Model | ArXiv | 2023/05/24 |

| Chain-of-Thought, In-Context-Learning | Least-to-Most Prompting Enables Complex Reasoning in Large Language Models | ArXiv | 2022/05/21 |

| Chain-of-Thought, In-Context-Learning | Complexity-Based Prompting for Multi-Step Reasoning | ArXiv | 2022/10/03 |

| Chain-of-Thought, In-Context-Learning | Maieutic Prompting: Logically Consistent Reasoning with Recursive Explanations | ArXiv | 2022/05/24 |

| Chain-of-Thought, In-Context-Learning | Algorithm of Thoughts: Enhancing Exploration of Ideas in Large Language Models | ArXiv | 2023/08/20 |

| Chain-of-Thought, GPT4, Reasoning, Robot | Look Before You Leap: Unveiling the Power of GPT-4V in Robotic Vision-Language Planning | ArXiv | 2023/11/29 |

| Chain-of-Thought, Embodied, Robot | EgoCOT: Embodied Chain-of-Thought Dataset for Vision Language Pre-training | ArXiv | |

| Chain-of-Thought, Embodied, PersonalCitation, Robot, Task-Decompose | EmbodiedGPT: Vision-Language Pre-Training via Embodied Chain of Thought | ArXiv | 2023/05/24 |

| Chain-of-Thought, Code-as-Policies, PersonalCitation, Robot | Demo2Code: From Summarizing Demonstrations to Synthesizing Code via Extended Chain-of-Thought | ArXiv | |

| Chain-of-Thought, Code-as-Policies | Chain of Code: Reasoning with a Language Model-Augmented Code Emulator | ArXiv | |

| Caption, Video | PLLaVA : Parameter-free LLaVA Extension from Images to Videos for Video Dense Captioning | ArXiv | |

| Caption, VLM, VQA | Caption Anything: Interactive Image Description with Diverse Multimodal Controls | ArXiv | 2023/05/04 |

| CRAG, RAG | Corrective Retrieval Augmented Generation | ArXiv | |

| Brain, Instruction-Turning | Instruction-tuning Aligns LLMs to the Human Brain | ArXiv | |

| Brain, Conscious | Could a Large Language Model be Conscious? | ||

| Brain | LLM-BRAIn: AI-driven Fast Generation of Robot Behaviour Tree based on Large Language Model | ArXiv | |

| Brain | A Neuro-Mimetic Realization of the Common Model of Cognition via Hebbian Learning and Free Energy Minimization | | |

| Benchmark, Sora, Text-to-Video | LIDA: A Tool for Automatic Generation of Grammar-Agnostic Visualizations and Infographics using Large Language Models | | |

| Benchmark, In-Context-Learning | ARB: Advanced Reasoning Benchmark for Large Language Models | ArXiv | 2023/07/25 |

| Benchmark, In-Context-Learning | PlanBench: An Extensible Benchmark for Evaluating Large Language Models on Planning and Reasoning about Change | ArXiv | 2022/06/21 |

| Benchmark, GPT4 | Sparks of Artificial General Intelligence: Early experiments with GPT-4 | | |

| Awesome Repo, VLM | awesome-vlm-architectures | GitHub | |

| Awesome Repo, Survey | LLMSurvey | GitHub | |

| Awesome Repo, Robot | Awesome-LLM-Robotics | GitHub | |

| Awesome Repo, Reasoning | Awesome LLM Reasoning | GitHub | |

| Awesome Repo, Reasoning | Awesome-Reasoning-Foundation-Models | GitHub | |

| Awesome Repo, RLHF, Reinforcement-Learning | Awesome RLHF (RL with Human Feedback) | GitHub | |

| Awesome Repo, Perception, VLM | Awesome Vision-Language Navigation | GitHub | |

| Awesome Repo, Package | Awesome LLMOps | GitHub | |

| Awesome Repo, Multimodal | Awesome-Multimodal-LLM | GitHub | |

| Awesome Repo, Multimodal | Awesome-Multimodal-Large-Language-Models | GitHub | |

| Awesome Repo, Math, Science | Awesome Scientific Language Models | GitHub | |

| Awesome Repo, LLM, Vision | LLM-in-Vision | GitHub | |

| Awesome Repo, LLM, VLM | Multimodal & Large Language Models | GitHub | |

| Awesome Repo, LLM, Survey | Awesome-LLM-Survey | GitHub | |

| Awesome Repo, LLM, Robot | Everything-LLMs-And-Robotics | GitHub | |

| Awesome Repo, LLM, Leaderboard | LLM-Leaderboard | GitHub | |

| Awesome Repo, LLM | Awesome-LLM | GitHub | |

| Awesome Repo, Korean | awesome-korean-llm | GitHub | |

| Awesome Repo, Japanese, LLM | 日本語LLMまとめ | GitHub | |

| Awesome Repo, In-Context-Learning | Paper List for In-context Learning | GitHub | |

| Awesome Repo, IROS, Robot | IROS2023PaperList | GitHub | |

| Awesome Repo, Hallucination, Survey | A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions | ArXiv, GitHub | |

| Awesome Repo, Embodied | Awesome Embodied Vision | GitHub | |

| Awesome Repo, Diffusion | Awesome-Diffusion-Models | GitHub | |

| Awesome Repo, Compress | Awesome LLM Compression | GitHub | |

| Awesome Repo, Chinese | Awesome-Chinese-LLM | GitHub | |

| Awesome Repo, Chain-of-Thought | Chain-of-ThoughtsPapers | GitHub | |

| Automate, Prompting | Large Language Models Are Human-Level Prompt Engineers | ArXiv | 2022/11/03 |

| Automate, Chain-of-Thought, Reasoning | Automatic Chain of Thought Prompting in Large Language Models | ArXiv | 2022/10/07 |

| Audio2Video, Diffusion, Generation, Video | EMO: Emote Portrait Alive - Generating Expressive Portrait Videos with Audio2Video Diffusion Model under Weak Conditions | ArXiv | |

| Audio | Robust Speech Recognition via Large-Scale Weak Supervision | | |

| Apple, VLM | MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training | ArXiv | |

| Apple, VLM | Guiding Instruction-based Image Editing via Multimodal Large Language Models | ArXiv | |

| Apple, Robot | Large Language Models as Generalizable Policies for Embodied Tasks | ArXiv | |

| Apple, LLM, Open-source | OpenELM: An Efficient Language Model Family with Open Training and Inference Framework | ArXiv | |

| Apple, LLM | ReALM: Reference Resolution As Language Modeling | ArXiv | |

| Apple, LLM | LLM in a flash: Efficient Large Language Model Inference with Limited Memory | ArXiv | |

| Apple, In-Context-Learning, Perception | SAM-CLIP: Merging Vision Foundation Models towards Semantic and Spatial Understanding | ArXiv | |

| Apple, Code-as-Policies, Robot | Executable Code Actions Elicit Better LLM Agents | ArXiv | |

| Apple | Ferret-v2: An Improved Baseline for Referring and Grounding with Large Language Models | ArXiv | |

| Apple | Ferret-UI: Grounded Mobile UI Understanding with Multimodal LLMs | ArXiv | |

| Apple | Ferret: Refer and Ground Anything Anywhere at Any Granularity | ArXiv | |

| Anything, LLM, Open-source, Perception, Segmentation | Segment Anything | ArXiv | 2023/04/05 |

| Anything, Depth | Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data | ArXiv | |

| Anything, Caption, Perception, Segmentation | Segment and Caption Anything | ArXiv | |

| Anything, CLIP, Perception | SAM-CLIP: Merging Vision Foundation Models towards Semantic and Spatial Understanding | ArXiv | |

| Agent-Project, Code-LLM | open-interpreter | GitHub | |

| Agent, Web | WebLINX: Real-World Website Navigation with Multi-Turn Dialogue | ArXiv | |

| Agent, Web | WebVoyager: Building an End-to-End Web Agent with Large Multimodal Models | ArXiv | |

| Agent, Web | OmniACT: A Dataset and Benchmark for Enabling Multimodal Generalist Autonomous Agents for Desktop and Web | ArXiv | |

| Agent, Web | OS-Copilot: Towards Generalist Computer Agents with Self-Improvement | ArXiv | |

| Agent, Video-for-Agent | Video as the New Language for Real-World Decision Making | | |

| Agent, VLM | AssistGPT: A General Multi-modal Assistant that can Plan, Execute, Inspect, and Learn | ArXiv | |

| Agent, Tool | Gorilla: Large Language Model Connected with Massive APIs | ArXiv | |

| Agent, Tool | ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs | ArXiv | |

| Agent, Survey | A Survey on Large Language Model based Autonomous Agents | ArXiv | 2023/08/22 |

| Agent, Survey | The Rise and Potential of Large Language Model Based Agents: A Survey | ArXiv | 2023/09/14 |

| Agent, Survey | Agent AI: Surveying the Horizons of Multimodal Interaction | ArXiv | |

| Agent, Survey | Large Multimodal Agents: A Survey | ArXiv | |

| Agent, Soft-Dev | Communicative Agents for Software Development | GitHub | |

| Agent, Soft-Dev | MetaGPT: Meta Programming for A Multi-Agent Collaborative Framework | ArXiv | |

| Agent, Robot, Survey | A Survey on LLM-based Autonomous Agents | GitHub | |

| Agent, Reinforcement-Learning, Reward | Reward Design with Language Models | ArXiv | 2023/02/27 |

| Agent, Reinforcement-Learning, Reward | EAGER: Asking and Answering Questions for Automatic Reward Shaping in Language-guided RL | ArXiv | 2022/06/20 |

| Agent, Reinforcement-Learning, Reward | Text2Reward: Automated Dense Reward Function Generation for Reinforcement Learning | ArXiv | 2023/09/20 |

| Agent, Reinforcement-Learning | Eureka: Human-Level Reward Design via Coding Large Language Models | ArXiv | 2023/10/19 |

| Agent, Reinforcement-Learning | Language to Rewards for Robotic Skill Synthesis | ArXiv | 2023/06/14 |

| Agent, Reinforcement-Learning | Language Instructed Reinforcement Learning for Human-AI Coordination | ArXiv | 2023/04/13 |

| Agent, Reinforcement-Learning | Guiding Pretraining in Reinforcement Learning with Large Language Models | ArXiv | 2023/02/13 |

| Agent, Reinforcement-Learning | STARLING: SELF-SUPERVISED TRAINING OF TEXT-BASED REINFORCEMENT LEARNING AGENT WITH LARGE LANGUAGE MODELS | | |

| Agent, Reasoning, Zero-shot | Agent Instructs Large Language Models to be General Zero-Shot Reasoners | ArXiv | 2023/10/05 |

| Agent, Reasoning | Pangu-Agent: A Fine-Tunable Generalist Agent with Structured Reasoning | ArXiv | |

| Agent, Reasoning | AGENT INSTRUCTS LARGE LANGUAGE MODELS TO BE GENERAL ZERO-SHOT REASONERS | ArXiv | |

| Agent, Multimodal, Robot | A Generalist Agent | ArXiv | 2022/05/12 |

| Agent, Multi | War and Peace (WarAgent): Large Language Model-based Multi-Agent Simulation of World Wars | ArXiv | |

| Agent, MobileApp | You Only Look at Screens: Multimodal Chain-of-Action Agents | ArXiv, GitHub | |

| Agent, Minecraft, Reinforcement-Learning | RLAdapter: Bridging Large Language Models to Reinforcement Learning in Open Worlds | | |

| Agent, Minecraft | Voyager: An Open-Ended Embodied Agent with Large Language Models | ArXiv | 2023/05/25 |

| Agent, Minecraft | Describe, Explain, Plan and Select: Interactive Planning with Large Language Models Enables Open-World Multi-Task Agents | ArXiv | 2023/02/03 |

| Agent, Minecraft | LARP: Language-Agent Role Play for Open-World Games | ArXiv | |

| Agent, Minecraft | Steve-Eye: Equipping LLM-based Embodied Agents with Visual Perception in Open Worlds | ArXiv | |

| Agent, Minecraft | S-Agents: Self-organizing Agents in Open-ended Environment | ArXiv | |

| Agent, Minecraft | Ghost in the Minecraft: Generally Capable Agents for Open-World Environments via Large Language Models with Text-based Knowledge and Memory | ArXiv | |

| Agent, Memory, RAG, Robot | RAP: Retrieval-Augmented Planning with Contextual Memory for Multimodal LLM Agents | ArXiv | 2024/02/06 |

| Agent, Memory, Minecraft | JARVIS-1: Open-World Multi-task Agents with Memory-Augmented Multimodal Language Models | ArXiv | 2023/11/10 |

| Agent, LLM, Planning | LLM-Planner: Few-Shot Grounded Planning for Embodied Agents with Large Language Models | ArXiv | |

| Agent, Instruction-Turning | AgentTuning: Enabling Generalized Agent Abilities For LLMs | ArXiv | |

| Agent, Game | LEARNING EMBODIED VISION-LANGUAGE PROGRAMMING FROM INSTRUCTION, EXPLORATION, AND ENVIRONMENTAL FEEDBACK | ArXiv | |

| Agent, GUI, Web | "What’s important here?": Opportunities and Challenges of Using LLMs in Retrieving Information from Web Interfaces | ArXiv | |

| Agent, GUI, MobileApp | Mobile-Agent: Autonomous Multi-Modal Mobile Device Agent with Visual Perception | | |

| Agent, GUI, MobileApp | AppAgent: Multimodal Agents as Smartphone Users | ArXiv | |

| Agent, GUI, MobileApp | You Only Look at Screens: Multimodal Chain-of-Action Agents | | |

| Agent, GUI | CogAgent: A Visual Language Model for GUI Agents | ArXiv | |

| Agent, GUI | ScreenAgent: A Computer Control Agent Driven by Visual Language Large Model | GitHub | |

| Agent, GUI | SeeClick: Harnessing GUI Grounding for Advanced Visual GUI Agents | ArXiv | |

| Agent, GPT4, Web | GPT-4V(ision) is a Generalist Web Agent, if Grounded | ArXiv | |

| Agent, Feedback, Reinforcement-Learning, Robot | Accelerating Reinforcement Learning of Robotic Manipulations via Feedback from Large Language Models | ArXiv | 2023/11/04 |

| Agent, Feedback, Reinforcement-Learning | AdaRefiner: Refining Decisions of Language Models with Adaptive Feedback | ArXiv | 2023/09/29 |

| Agent, End2End, Game, Robot | An Interactive Agent Foundation Model | ArXiv | |

| Agent, Embodied, Survey | Application of Pretrained Large Language Models in Embodied Artificial Intelligence | ArXiv | |

| Agent, Embodied, Robot | AutoRT: Embodied Foundation Models for Large Scale Orchestration of Robotic Agents | ArXiv | |

| Agent, Embodied, Robot | OPEx: A Component-Wise Analysis of LLM-Centric Agents in Embodied Instruction Following | ArXiv | |

| Agent, Embodied | OpenAgents: An Open Platform for Language Agents in the Wild | ArXiv, GitHub | |

| Agent, Embodied | LLM-Planner: Few-Shot Grounded Planning for Embodied Agents with Large Language Models | ArXiv | |

| Agent, Embodied | Embodied Multi-Modal Agent trained by an LLM from a Parallel TextWorld | ArXiv | |

| Agent, Embodied | Octopus: Embodied Vision-Language Programmer from Environmental Feedback | | |

| Agent, Embodied | Embodied Task Planning with Large Language Models | ArXiv | |

| Agent, Diffusion, Speech | NaturalSpeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models | ArXiv | |

| Agent, Code-as-Policies | Executable Code Actions Elicit Better LLM Agents | ArXiv | 2024/01/24 |

| Agent, Code-LLM, Code-as-Policies, Survey | If LLM Is the Wizard, Then Code Is the Wand: A Survey on How Code Empowers Large Language Models to Serve as Intelligent Agents | ArXiv | |

| Agent, Code-LLM | TaskWeaver: A Code-First Agent Framework | ||

| Agent, Blog | LLM Powered Autonomous Agents | ArXiv | |

| Agent, Awesome Repo, LLM | Awesome-Embodied-Agent-with-LLMs | GitHub | |

| Agent, Awesome Repo, LLM | CoALA: Awesome Language Agents | ArXiv, GitHub | |

| Agent, Awesome Repo, Embodied, Grounding | XLang Paper Reading | GitHub | |

| Agent, Awesome Repo | Awesome AI Agents | GitHub | |

| Agent, Awesome Repo | Autonomous Agents | GitHub | |

| Agent, Awesome Repo | Awesome-Papers-Autonomous-Agent | GitHub | |

| Agent, Awesome Repo | Awesome Large Multimodal Agents | GitHub | |

| Agent, Awesome Repo | LLM Agents Papers | GitHub | |

| Agent, Awesome Repo | Awesome LLM-Powered Agent | GitHub | |

| Agent | XAgent: An Autonomous Agent for Complex Task Solving | | |

| Agent | LLM-Powered Hierarchical Language Agent for Real-time Human-AI Coordination | ArXiv | |

| Agent | AgentVerse: Facilitating Multi-Agent Collaboration and Exploring Emergent Behaviors | ArXiv | |

| Agent | Agents: An Open-source Framework for Autonomous Language Agents | ArXiv, GitHub | |

| Agent | AutoAgents: A Framework for Automatic Agent Generation | GitHub | |

| Agent | DSPy: Compiling Declarative Language Model Calls into Self-Improving Pipelines | ArXiv | |

| Agent | AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation | ArXiv | |

| Agent | CAMEL: Communicative Agents for “Mind” Exploration of Large Language Model Society | ArXiv | |

| Agent | XAgent: An Autonomous Agent for Complex Task Solving | ArXiv | |

| Agent | Generative Agents: Interactive Simulacra of Human Behavior | ArXiv | |

| Agent | LLM+P: Empowering Large Language Models with Optimal Planning Proficiency | ArXiv | 2023/04/22 |

| Agent | AgentSims: An Open-Source Sandbox for Large Language Model Evaluation | ArXiv | 2023/08/08 |

| Agent | Agents: An Open-source Framework for Autonomous Language Agents | ArXiv | |

| Agent | MindAgent: Emergent Gaming Interaction | ArXiv | |

| Agent | InfiAgent: A Multi-Tool Agent for AI Operating Systems | | |

| Agent | Predictive Minds: LLMs As Atypical Active Inference Agents | | |

| Agent | swarms | GitHub | |

| Agent | ScreenAgent: A Vision Language Model-driven Computer Control Agent | ArXiv | |

| Agent | AssistGPT: A General Multi-modal Assistant that can Plan, Execute, Inspect, and Learn | ArXiv | |

| Agent | PromptAgent: Strategic Planning with Language Models Enables Expert-level Prompt Optimization | ArXiv | |

| Agent | Cognitive Architectures for Language Agents | ArXiv | |

| Agent | AIOS: LLM Agent Operating System | ArXiv | |

| Agent | LLM as OS, Agents as Apps: Envisioning AIOS, Agents and the AIOS-Agent Ecosystem | ArXiv | |

| Agent | Towards General Computer Control: A Multimodal Agent for Red Dead Redemption II as a Case Study | | |

| Affordance, Segmentation | ManipVQA: Injecting Robotic Affordance and Physically Grounded Information into Multi-Modal Large Language Models | ArXiv | |

| Action-Model, Agent, LAM | LaVague | GitHub | |

| Action-Generation, Generation, Prompting | Prompt a Robot to Walk with Large Language Models | ArXiv | |

| APIs, Agent, Tool | Gorilla: Large Language Model Connected with Massive APIs | ArXiv | |

| AGI, Survey | Levels of AGI: Operationalizing Progress on the Path to AGI | ArXiv | |

| AGI, Brain | When Brain-inspired AI Meets AGI | ArXiv | |

| AGI, Brain | Divergences between Language Models and Human Brains | ArXiv | |

| AGI, Awesome Repo, Survey | Awesome-LLM-Papers-Toward-AGI | GitHub | |

| AGI, Agent | OpenAGI: When LLM Meets Domain Experts | ||

| 3D, Open-source, Perception, Robot | 3D-LLM: Injecting the 3D World into Large Language Models | ArXiv | 2023/07/24 |

| 3D, GPT4, VLM | GPT-4V(ision) is a Human-Aligned Evaluator for Text-to-3D Generation | ArXiv | |

| | ChatEval: Towards Better LLM-based Evaluators through Multi-Agent Debate | ArXiv | 2023/08/14 |


Similar Open Source Tools

ai-game-development-tools

Here we will keep track of the AI Game Development Tools, including LLM, Agent, Code, Writer, Image, Texture, Shader, 3D Model, Animation, Video, Audio, Music, Singing Voice and Analytics. 🔥 * Tool (AI LLM) * Game (Agent) * Code * Framework * Writer * Image * Texture * Shader * 3D Model * Avatar * Animation * Video * Audio * Music * Singing Voice * Speech * Analytics * Video Tool

GenAI-Learning

GenAI-Learning is a repository dedicated to providing resources and courses for individuals interested in Generative AI. It covers a wide range of topics from prompt engineering to user-centered design, offering courses on LLM Bootcamp, DeepLearning AI, Microsoft Copilot Learning, Amazon Generative AI, Google Cloud Skills, NVIDIA Learn, Oracle Cloud, and IBM AI Learn. The repository includes detailed course descriptions, partners, and topics for each course, making it a valuable resource for AI enthusiasts and professionals.

LLM4EC

LLM4EC is an interdisciplinary research repository focusing on the intersection of Large Language Models (LLM) and Evolutionary Computation (EC). It provides a comprehensive collection of papers and resources exploring various applications, enhancements, and synergies between LLM and EC. The repository covers topics such as LLM-assisted optimization, EA-based LLM architecture search, and applications in code generation, software engineering, neural architecture search, and other generative tasks. The goal is to facilitate research and development in leveraging LLM and EC for innovative solutions in diverse domains.

Awesome_LLM_System-PaperList

Since the emergence of ChatGPT in 2022, accelerating Large Language Models has become increasingly important. Here is a list of papers on LLM inference and serving.

LLM-KG4QA

LLM-KG4QA is a repository focused on the integration of Large Language Models (LLMs) and Knowledge Graphs (KGs) for Question Answering (QA). It covers various aspects such as using KGs as background knowledge, reasoning guideline, and refiner/filter. The repository provides detailed information on pre-training, fine-tuning, and Retrieval Augmented Generation (RAG) techniques for enhancing QA performance. It also explores complex QA tasks like Explainable QA, Multi-Modal QA, Multi-Document QA, Multi-Hop QA, Multi-run and Conversational QA, Temporal QA, Multi-domain and Multilingual QA, along with advanced topics like Optimization and Data Management. Additionally, it includes benchmark datasets, industrial and scientific applications, demos, and related surveys in the field.

LLM-Agent-Survey

Autonomous agents are designed to achieve specific objectives through self-guided instructions. With the emergence and growth of large language models (LLMs), there is a growing trend in utilizing LLMs as fundamental controllers for these autonomous agents. This repository conducts a comprehensive survey study on the construction, application, and evaluation of LLM-based autonomous agents. It explores essential components of AI agents, application domains in natural sciences, social sciences, and engineering, and evaluation strategies. The survey aims to be a resource for researchers and practitioners in this rapidly evolving field.

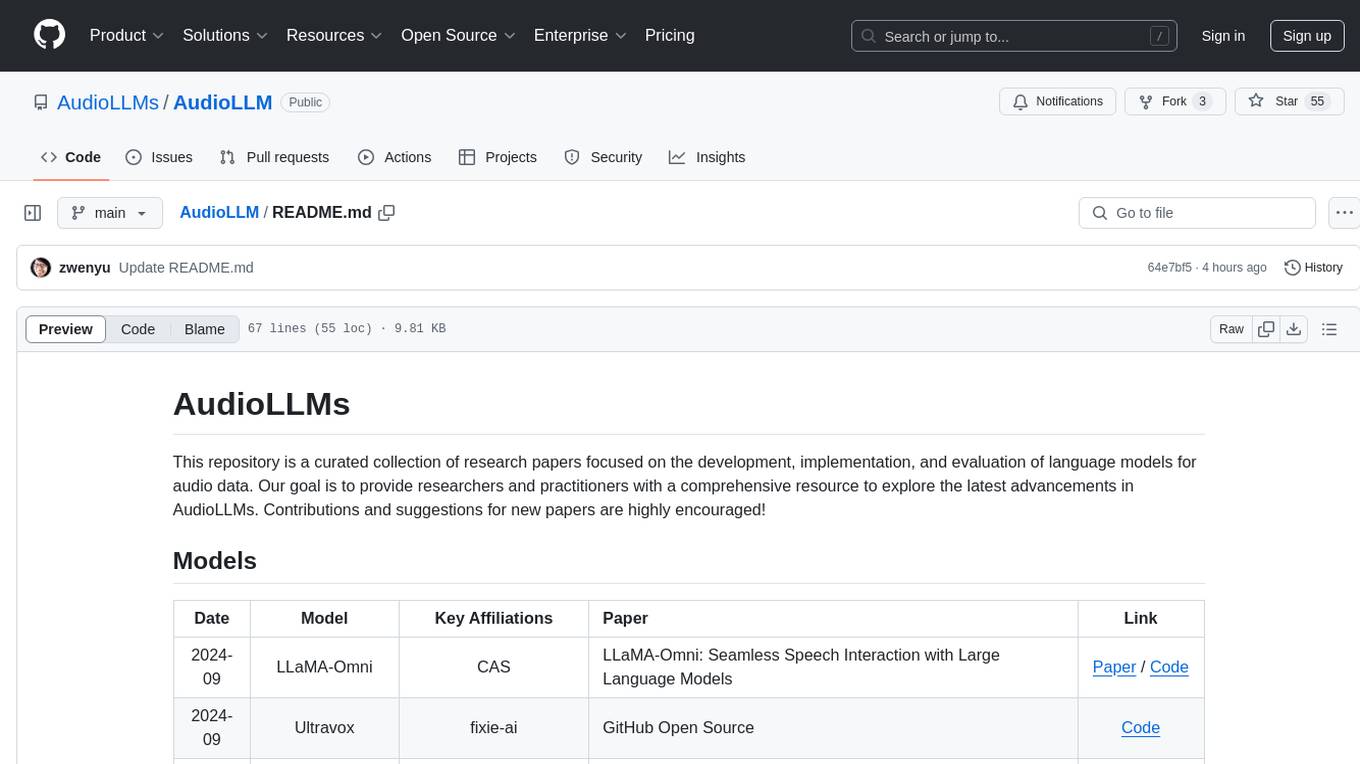

AudioLLM

AudioLLMs is a curated collection of research papers focusing on developing, implementing, and evaluating language models for audio data. The repository aims to provide researchers and practitioners with a comprehensive resource to explore the latest advancements in AudioLLMs. It includes models for speech interaction, speech recognition, speech translation, audio generation, and more. Additionally, it covers methodologies like multitask audioLLMs and segment-level Q-Former, as well as evaluation benchmarks like AudioBench and AIR-Bench. Adversarial attacks such as VoiceJailbreak are also discussed.

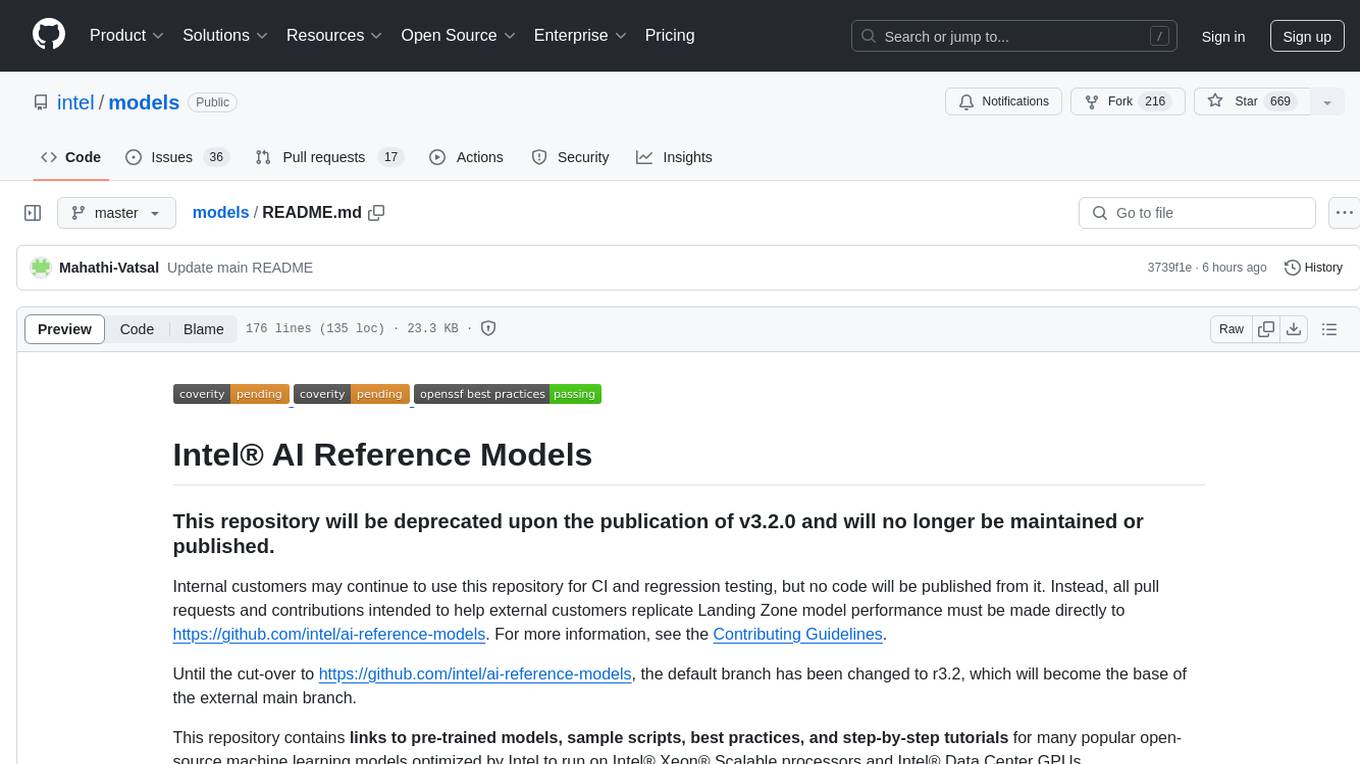

models

The Intel® AI Reference Models repository contains links to pre-trained models, sample scripts, best practices, and tutorials for popular open-source machine learning models optimized by Intel to run on Intel® Xeon® Scalable processors and Intel® Data Center GPUs. It aims to replicate the best-known performance of target model/dataset combinations in optimally-configured hardware environments. The repository will be deprecated upon the publication of v3.2.0 and will no longer be maintained or published.

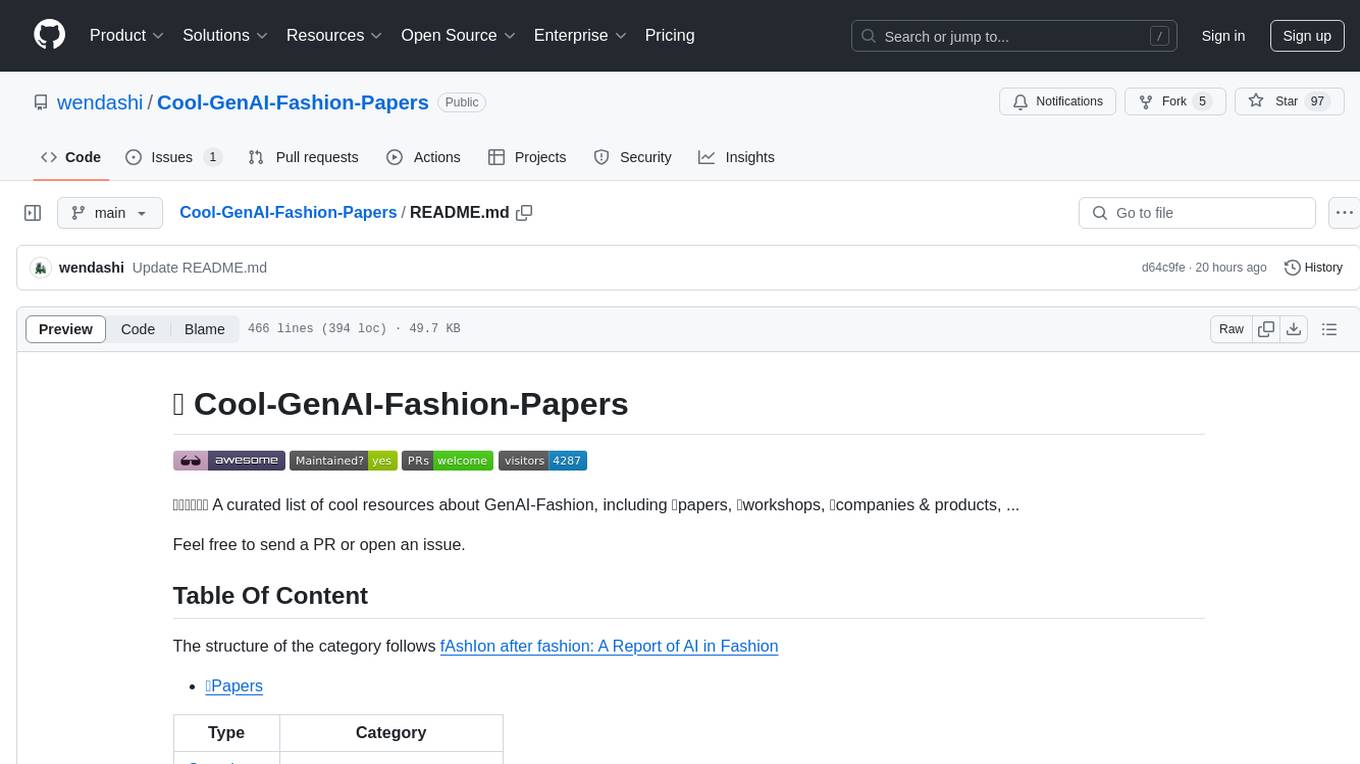

Cool-GenAI-Fashion-Papers

Cool-GenAI-Fashion-Papers is a curated list of resources related to GenAI-Fashion, including papers, workshops, companies, and products. It covers a wide range of topics such as fashion design synthesis, outfit recommendation, fashion knowledge extraction, trend analysis, and more. The repository provides valuable insights and resources for researchers, industry professionals, and enthusiasts interested in the intersection of AI and fashion.

ai-reference-models

The Intel® AI Reference Models repository contains links to pre-trained models, sample scripts, best practices, and tutorials for popular open-source machine learning models optimized by Intel to run on Intel® Xeon® Scalable processors and Intel® Data Center GPUs. The purpose is to quickly replicate complete software environments showcasing the AI capabilities of Intel platforms. It includes optimizations for popular deep learning frameworks like TensorFlow and PyTorch, with additional plugins/extensions for improved performance. The repository is licensed under Apache License Version 2.0.

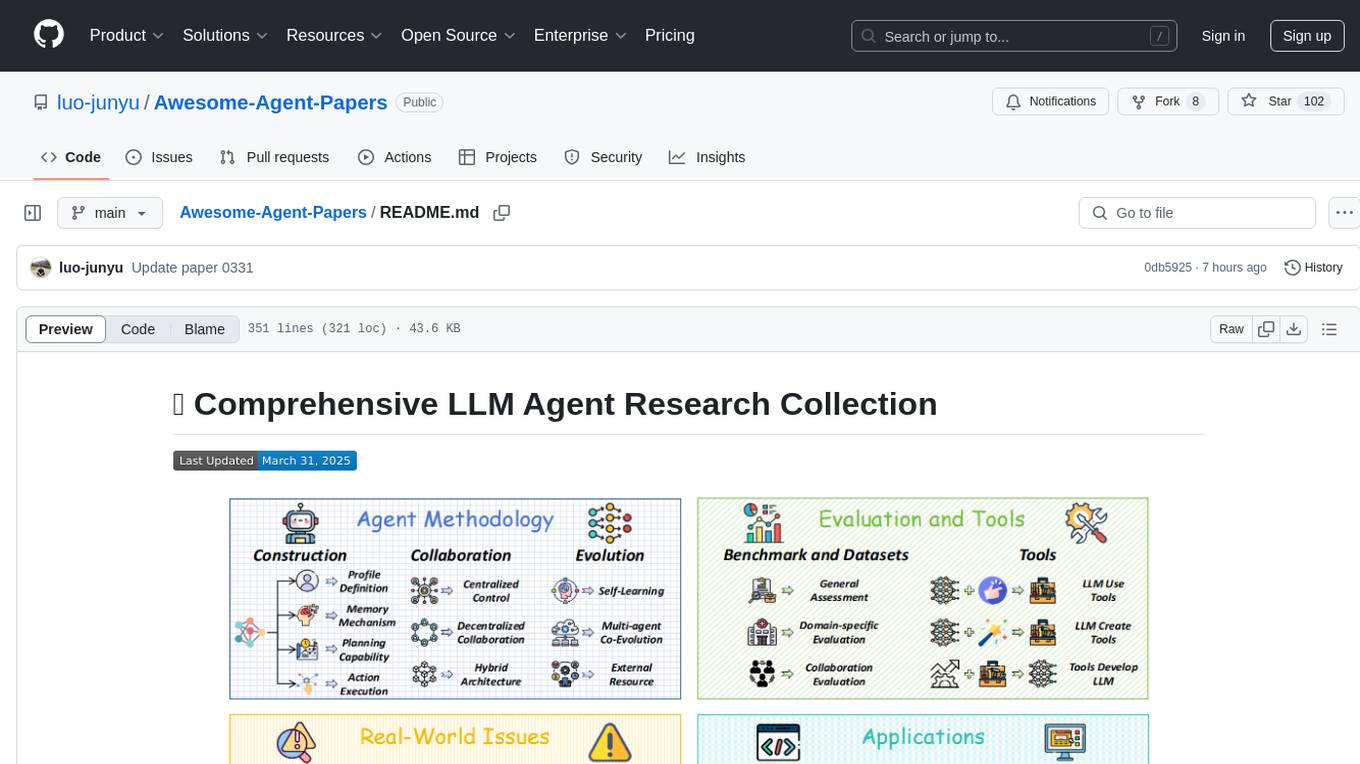

Awesome-Agent-Papers

This repository is a comprehensive collection of research papers on Large Language Model (LLM) agents, organized across key categories including agent construction, collaboration mechanisms, evolution, tools, security, benchmarks, and applications. The taxonomy provides a structured framework for understanding the field of LLM agents, bridging fragmented research threads by highlighting connections between agent design principles and emergent behaviors.

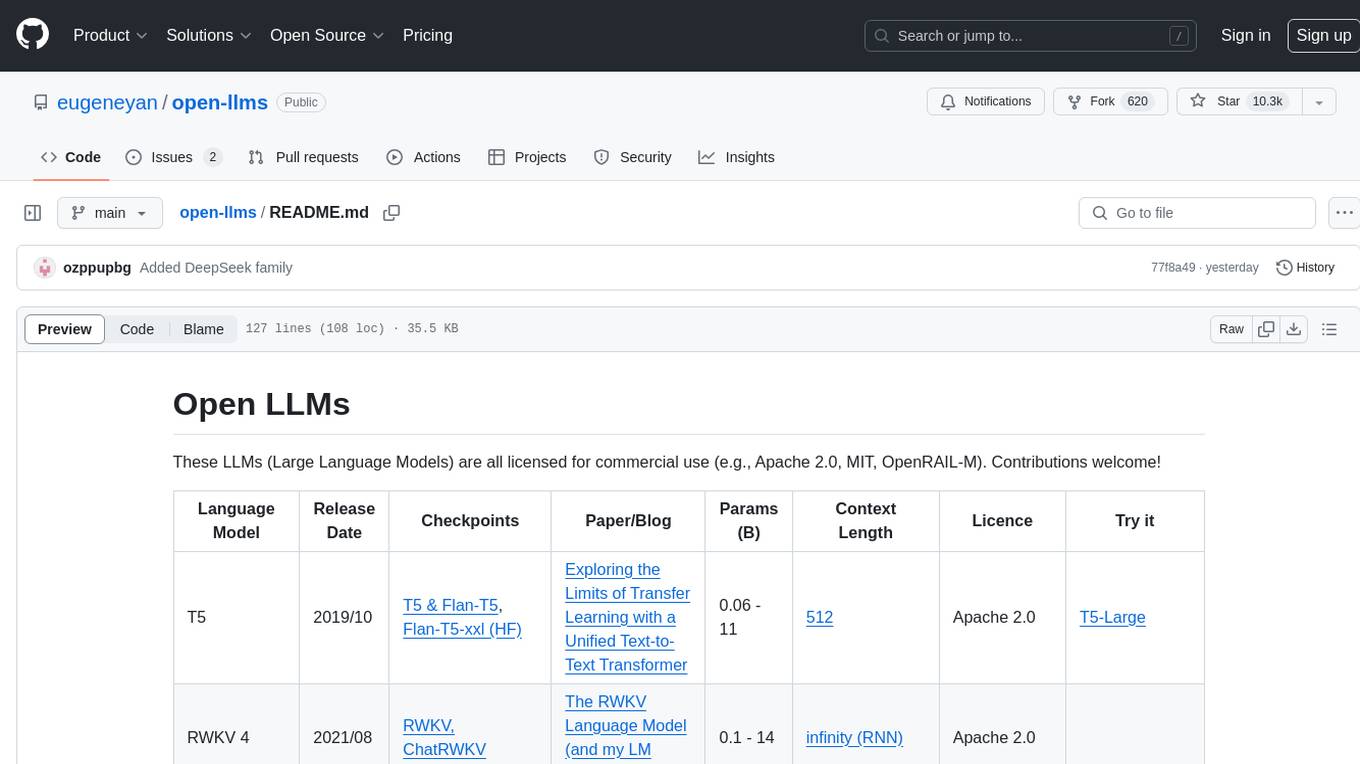

open-llms

Open LLMs is a repository containing various Large Language Models licensed for commercial use. It includes models like T5, GPT-NeoX, UL2, Bloom, Cerebras-GPT, Pythia, Dolly, and more. These models are designed for tasks such as transfer learning, language understanding, chatbot development, code generation, and more. The repository provides information on release dates, checkpoints, papers/blogs, parameters, context length, and licenses for each model. Contributions to the repository are welcome, and it serves as a resource for exploring the capabilities of different language models.

Awesome-Resource-Efficient-LLM-Papers

A curated list of high-quality papers on resource-efficient Large Language Models (LLMs) with a focus on various aspects such as architecture design, pre-training, fine-tuning, inference, system design, and evaluation metrics. The repository covers topics like efficient transformer architectures, non-transformer architectures, memory efficiency, data efficiency, model compression, dynamic acceleration, deployment optimization, support infrastructure, and other related systems. It also provides detailed information on computation metrics, memory metrics, energy metrics, financial cost metrics, network communication metrics, and other metrics relevant to resource-efficient LLMs. The repository includes benchmarks for evaluating the efficiency of NLP models and references for further reading.