CogVLM2

第二代 CogVLM多模态预训练对话模型

Stars: 83

CogVLM2 is a new generation of open source models that offer significant improvements in benchmarks such as TextVQA and DocVQA. It supports 8K content length, image resolution up to 1344 * 1344, and both Chinese and English languages. The project provides basic calling methods, fine-tuning examples, and OpenAI API format calling examples to help developers quickly get started with the model.

README:

👋 Join our Wechat · 💡Try it Online

📍Experience the larger-scale CogVLM model (GLM-4V) on the ZhipuAI Open Platform.

We launch a new generation of CogVLM2 series of models and open source two models based on Meta-Llama-3-8B-Instruct. Compared with the previous generation of CogVLM open source models, the CogVLM2 series of open source models have the following improvements:

- Significant improvements in many benchmarks such as

TextVQA,DocVQA. - Support 8K content length.

- Support image resolution up to 1344 * 1344.

- Provide an open source model version that supports both Chinese and English.

You can see the details of the CogVLM2 family of open source models in the table below:

| Model name | cogvlm2-llama3-chat-19B | cogvlm2-llama3-chinese-chat-19B |

|---|---|---|

| Base Model | Meta-Llama-3-8B-Instruct | Meta-Llama-3-8B-Instruct |

| Language | English | Chinese, English |

| Model size | 19B | 19B |

| Task | Image understanding, dialogue model | Image understanding, dialogue model |

| Model link | 🤗 Huggingface 🤖 ModelScope 💫 Wise Model | 🤗 Huggingface 🤖 ModelScope 💫 Wise Model |

| Demo Page | 📙 Official Page | 📙 Official Page 🤖 ModelScope |

| Int4 model | Not yet launched | Not yet launched |

| Text length | 8K | 8K |

| Image resolution | 1344 * 1344 | 1344 * 1344 |

Our open source models have achieved good results in many lists compared to the previous generation of CogVLM open source models. Its excellent performance can compete with some non-open source models, as shown in the table below:

| Model | Open Source | LLM Size | TextVQA | DocVQA | ChartQA | OCRbench | MMMU | MMVet | MMBench |

|---|---|---|---|---|---|---|---|---|---|

| CogVLM1.1 | ✅ | 7B | 69.7 | - | 68.3 | 590 | 37.3 | 52.0 | 65.8 |

| LLaVA-1.5 | ✅ | 13B | 61.3 | - | - | 337 | 37.0 | 35.4 | 67.7 |

| Mini-Gemini | ✅ | 34B | 74.1 | - | - | - | 48.0 | 59.3 | 80.6 |

| LLaVA-NeXT-LLaMA3 | ✅ | 8B | - | 78.2 | 69.5 | - | 41.7 | - | 72.1 |

| LLaVA-NeXT-110B | ✅ | 110B | - | 85.7 | 79.7 | - | 49.1 | - | 80.5 |

| InternVL-1.5 | ✅ | 20B | 80.6 | 90.9 | 83.8 | 720 | 46.8 | 55.4 | 82.3 |

| QwenVL-Plus | ❌ | - | 78.9 | 91.4 | 78.1 | 726 | 51.4 | 55.7 | 67.0 |

| Claude3-Opus | ❌ | - | - | 89.3 | 80.8 | 694 | 59.4 | 51.7 | 63.3 |

| Gemini Pro 1.5 | ❌ | - | 73.5 | 86.5 | 81.3 | - | 58.5 | - | - |

| GPT-4V | ❌ | - | 78.0 | 88.4 | 78.5 | 656 | 56.8 | 67.7 | 75.0 |

| CogVLM2-LLaMA3 | ✅ | 8B | 84.2 | 92.3 | 81.0 | 756 | 44.3 | 60.4 | 80.5 |

| CogVLM2-LLaMA3-Chinese | ✅ | 8B | 85.0 | 88.4 | 74.7 | 780 | 42.8 | 60.5 | 78.9 |

All reviews were obtained without using any external OCR tools ("pixel only").

This open source repos will help developers to quickly get started with the basic calling methods of the CogVLM2 open source model, fine-tuning examples, OpenAI API format calling examples, etc. The specific project structure is as follows, you can click to enter the corresponding tutorial link:

- basic_demo - Basic calling methods include model inference calling methods such as CLI, WebUI and OpenAI API. If you are using the CogVLM2 open source model for the first time, we recommend you start here.

- finetune_demo - Fine-tuning examples, including examples of fine-tuning language models.

This model is released under the CogVLM2 CogVLM2 LICENSE. For models built with Meta Llama 3, please also adhere to the LLAMA3_LICENSE.

If you find our work helpful, please consider citing the following papers

@misc{wang2023cogvlm,

title={CogVLM: Visual Expert for Pretrained Language Models},

author={Weihan Wang and Qingsong Lv and Wenmeng Yu and Wenyi Hong and Ji Qi and Yan Wang and Junhui Ji and Zhuoyi Yang and Lei Zhao and Xixuan Song and Jiazheng Xu and Bin Xu and Juanzi Li and Yuxiao Dong and Ming Ding and Jie Tang},

year={2023},

eprint={2311.03079},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for CogVLM2

Similar Open Source Tools

CogVLM2

CogVLM2 is a new generation of open source models that offer significant improvements in benchmarks such as TextVQA and DocVQA. It supports 8K content length, image resolution up to 1344 * 1344, and both Chinese and English languages. The project provides basic calling methods, fine-tuning examples, and OpenAI API format calling examples to help developers quickly get started with the model.

BizFinBench

BizFinBench is a benchmark tool designed for evaluating large language models (LLMs) in logic-heavy and precision-critical domains such as finance. It comprises over 100,000 bilingual financial questions rooted in real-world business scenarios. The tool covers five dimensions: numerical calculation, reasoning, information extraction, prediction recognition, and knowledge-based question answering, mapped to nine fine-grained categories. BizFinBench aims to assess the capacity of LLMs in real-world financial scenarios and provides insights into their strengths and limitations.

Model-References

The 'Model-References' repository contains examples for training and inference using Intel Gaudi AI Accelerator. It includes models for computer vision, natural language processing, audio, generative models, MLPerf™ training, and MLPerf™ inference. The repository provides performance data and model validation information for various frameworks like PyTorch. Users can find examples of popular models like ResNet, BERT, and Stable Diffusion optimized for Intel Gaudi AI accelerator.

llm-deploy

LLM-Deploy focuses on the theory and practice of model/LLM reasoning and deployment, aiming to be your partner in mastering the art of LLM reasoning and deployment. Whether you are a newcomer to this field or a senior professional seeking to deepen your skills, you can find the key path to successfully deploy large language models here. The project covers reasoning and deployment theories, model and service optimization practices, and outputs from experienced engineers. It serves as a valuable resource for algorithm engineers and individuals interested in reasoning deployment.

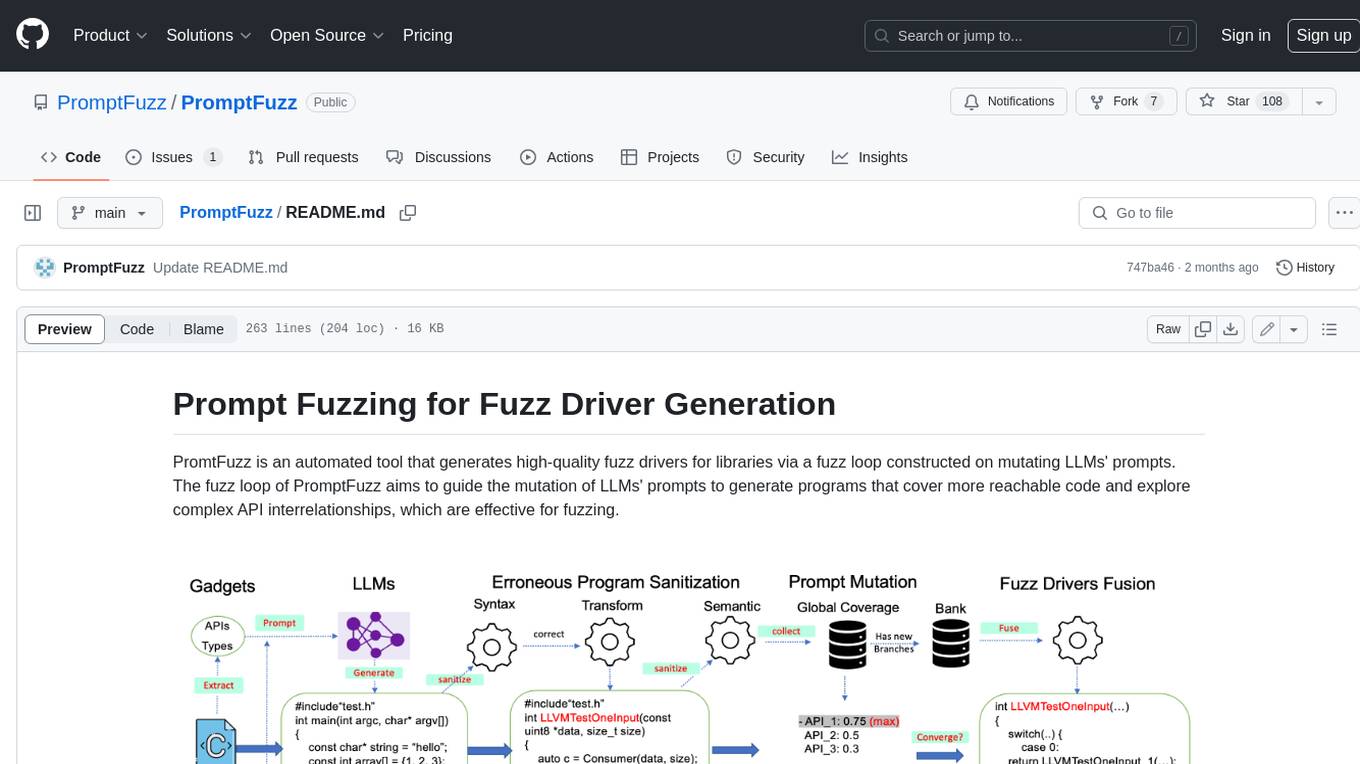

PromptFuzz

**Description:** PromptFuzz is an automated tool that generates high-quality fuzz drivers for libraries via a fuzz loop constructed on mutating LLMs' prompts. The fuzz loop of PromptFuzz aims to guide the mutation of LLMs' prompts to generate programs that cover more reachable code and explore complex API interrelationships, which are effective for fuzzing. **Features:** * **Multiply LLM support** : Supports the general LLMs: Codex, Inocder, ChatGPT, and GPT4 (Currently tested on ChatGPT). * **Context-based Prompt** : Construct LLM prompts with the automatically extracted library context. * **Powerful Sanitization** : The program's syntax, semantics, behavior, and coverage are thoroughly analyzed to sanitize the problematic programs. * **Prioritized Mutation** : Prioritizes mutating the library API combinations within LLM's prompts to explore complex interrelationships, guided by code coverage. * **Fuzz Driver Exploitation** : Infers API constraints using statistics and extends fixed API arguments to receive random bytes from fuzzers. * **Fuzz engine integration** : Integrates with grey-box fuzz engine: LibFuzzer. **Benefits:** * **High branch coverage:** The fuzz drivers generated by PromptFuzz achieved a branch coverage of 40.12% on the tested libraries, which is 1.61x greater than _OSS-Fuzz_ and 1.67x greater than _Hopper_. * **Bug detection:** PromptFuzz detected 33 valid security bugs from 49 unique crashes. * **Wide range of bugs:** The fuzz drivers generated by PromptFuzz can detect a wide range of bugs, most of which are security bugs. * **Unique bugs:** PromptFuzz detects uniquely interesting bugs that other fuzzers may miss. **Usage:** 1. Build the library using the provided build scripts. 2. Export the LLM API KEY if using ChatGPT or GPT4. 3. Generate fuzz drivers using the `fuzzer` command. 4. Run the fuzz drivers using the `harness` command. 5. Deduplicate and analyze the reported crashes. **Future Works:** * **Custom LLMs suport:** Support custom LLMs. * **Close-source libraries:** Apply PromptFuzz to close-source libraries by fine tuning LLMs on private code corpus. * **Performance** : Reduce the huge time cost required in erroneous program elimination.

sane-airscan

sane-airscan is a SANE backend that supports driverless scanning using Apple AirScan (eSCL) and Microsoft WSD protocols. It automatically chooses between the two protocols and has been tested with various devices from Brother, Canon, Dell, Kyocera, Lexmark, Epson, HP, OKI, Panasonic, Pantum, Ricoh, Samsung, and Xerox. The backend allows for automatic and manual device discovery and configuration, supports scanning from platen and ADF in color and grayscale modes, and works with both IPv4 and IPv6. It does not require installation and does not conflict with vendor-provided proprietary software.

Github-Ranking-AI

This repository provides a list of the most starred and forked repositories on GitHub. It is updated automatically and includes information such as the project name, number of stars, number of forks, language, number of open issues, description, and last commit date. The repository is divided into two sections: LLM and chatGPT. The LLM section includes repositories related to large language models, while the chatGPT section includes repositories related to the chatGPT chatbot.

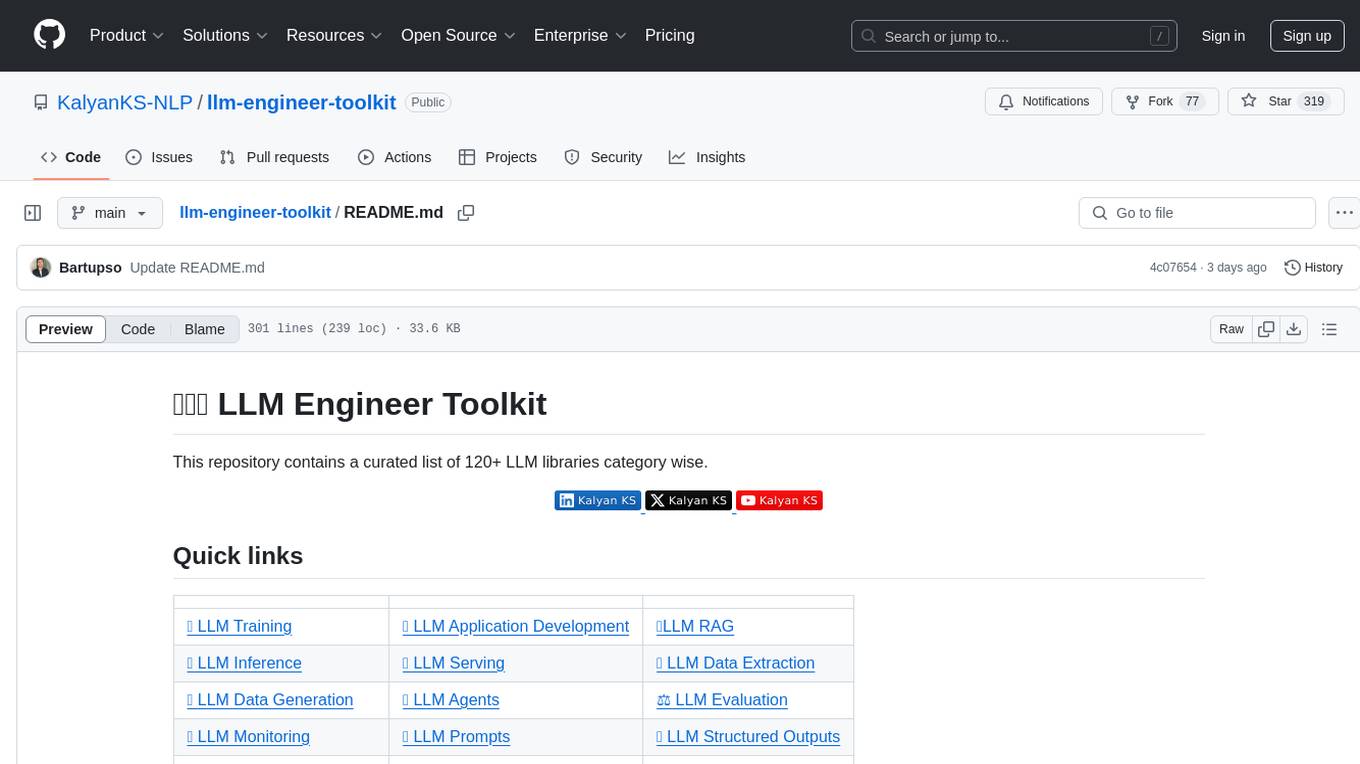

llm-engineer-toolkit

The LLM Engineer Toolkit is a curated repository containing over 120 LLM libraries categorized for various tasks such as training, application development, inference, serving, data extraction, data generation, agents, evaluation, monitoring, prompts, structured outputs, safety, security, embedding models, and other miscellaneous tools. It includes libraries for fine-tuning LLMs, building applications powered by LLMs, serving LLM models, extracting data, generating synthetic data, creating AI agents, evaluating LLM applications, monitoring LLM performance, optimizing prompts, handling structured outputs, ensuring safety and security, embedding models, and more. The toolkit covers a wide range of tools and frameworks to streamline the development, deployment, and optimization of large language models.

tiny-llm

tiny-llm is a course on LLM serving using MLX for system engineers. The codebase is focused on MLX array/matrix APIs to build model serving infrastructure from scratch and explore optimizations. The goal is to efficiently serve large language models like Qwen2 models. The course covers implementing components in Python, building an inference system similar to vLLM, and advanced topics on model interaction. The tool aims to provide hands-on experience in serving language models without high-level neural network APIs.

cool-ai-stuff

This repository contains an uncensored list of free to use APIs and sites for several AI models. > _This list is mainly managed by @zukixa, the queen of zukijourney, so any decisions may have bias!~_ > > **Scroll down for the sites, APIs come first!** * * * > [!WARNING] > We are not endorsing _any_ of the listed services! Some of them might be considered controversial. We are not responsible for any legal, technical or any other damage caused by using the listed services. Data is provided without warranty of any kind. **Use these at your own risk!** * * * # APIs Table of Contents #### Overview of Existing APIs #### Overview of Existing APIs -- Top LLM Models Available #### Overview of Existing APIs -- Top Image Models Available #### Overview of Existing APIs -- Top Other Features & Models Available #### Overview of Existing APIs -- Available Donator Perks * * * ## API List:* *: This list solely covers all providers I (@zukixa) was able to collect metrics in. Any mistakes are not my responsibility, as I am either banned, or not aware of x API. \ 1: Last Updated 4/14/24 ### Overview of APIs: | Service | # of Users1 | Link | Stablity | NSFW Ok? | Open Source? | Owner(s) | Other Notes | | ----------- | ---------- | ------------------------------------------ | ------------------------------------------ | --------------------------- | ------------------------------------------------------ | -------------------------- | ----------------------------------------------------------------------------------------------------------- | | zukijourney| 4441 | D | High | On /unf/, not /v1/ | ✅, Here | @zukixa | Largest & Oldest GPT-4 API still continuously around. Offers other popular AI-related Bots too. | | Hyzenberg| 1234 | D | High | Forbidden | ❌ | @thatlukinhasguy & @voidiii | Experimental sister API to Zukijourney. Successor to HentAI | | NagaAI | 2883 | D | High | Forbidden | ❌ | @zentixua | Honorary successor to ChimeraGPT, the largest API in history (15k users). | | WebRaftAI | 993 | D | High | Forbidden | ❌ | @ds_gamer | Largest API by model count. Provides a lot of service/hosting related stuff too. | | KrakenAI | 388 | D | High | Discouraged | ❌ | @paninico | It is an API of all time. | | ShuttleAI | 3585 | D | Medium | Generally Permitted | ❌ | @xtristan | Faked GPT-4 Before 1, 2 | | Mandrill | 931 | D | Medium | Enterprise-Tier-Only | ❌ | @fredipy | DALL-E-3 access pioneering API. Has some issues with speed & stability nowadays. | oxygen | 742 | D | Medium | Donator-Only | ❌ | @thesketchubuser | Bri'ish 🤮 & Fren'sh 🤮 | | Skailar | 399 | D | Medium | Forbidden | ❌ | @aquadraws | Service is the personification of the word 'feature creep'. Lots of things announced, not much operational. |

are-copilots-local-yet

Current trends and state of the art for using open & local LLM models as copilots to complete code, generate projects, act as shell assistants, automatically fix bugs, and more. This document is a curated list of local Copilots, shell assistants, and related projects, intended to be a resource for those interested in a survey of the existing tools and to help developers discover the state of the art for projects like these.

Awesome-LLM-Constrained-Decoding

Awesome-LLM-Constrained-Decoding is a curated list of papers, code, and resources related to constrained decoding of Large Language Models (LLMs). The repository aims to facilitate reliable, controllable, and efficient generation with LLMs by providing a comprehensive collection of materials in this domain.

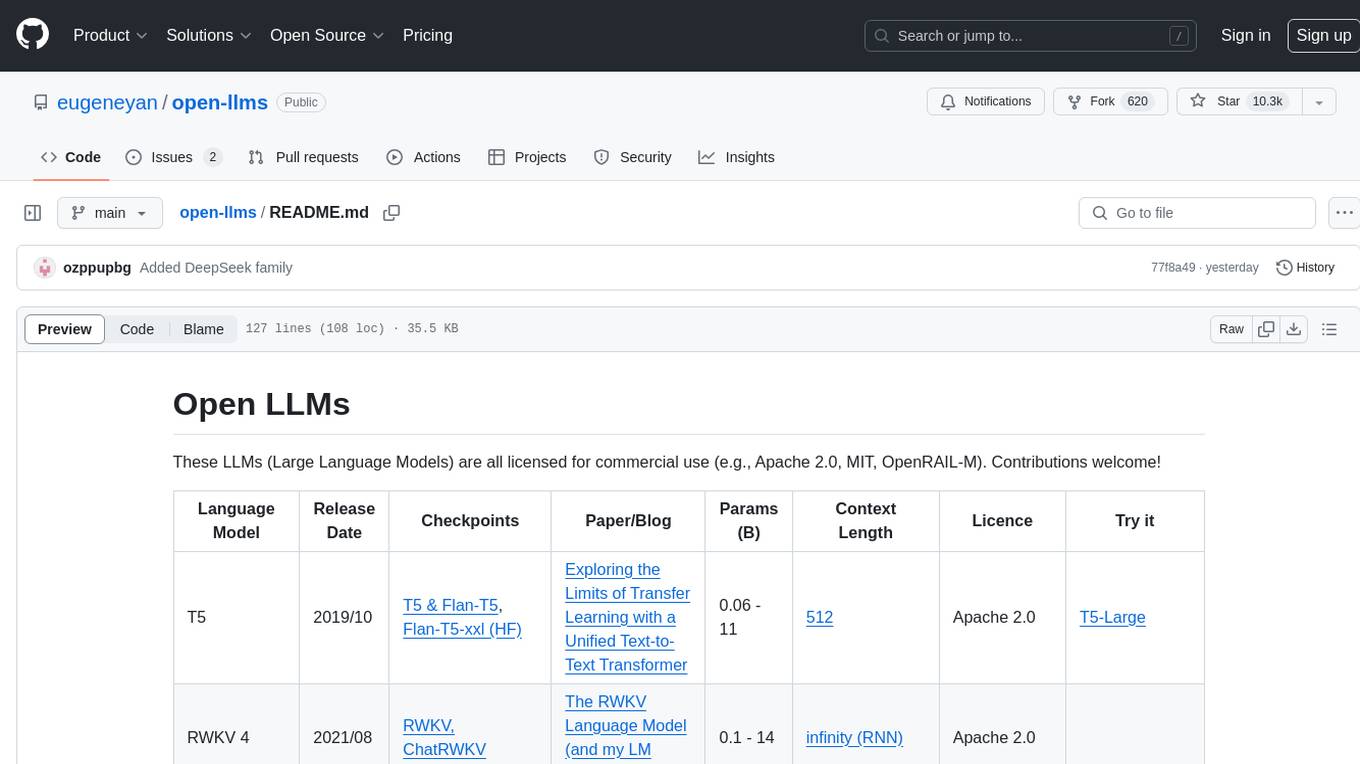

open-llms

Open LLMs is a repository containing various Large Language Models licensed for commercial use. It includes models like T5, GPT-NeoX, UL2, Bloom, Cerebras-GPT, Pythia, Dolly, and more. These models are designed for tasks such as transfer learning, language understanding, chatbot development, code generation, and more. The repository provides information on release dates, checkpoints, papers/blogs, parameters, context length, and licenses for each model. Contributions to the repository are welcome, and it serves as a resource for exploring the capabilities of different language models.

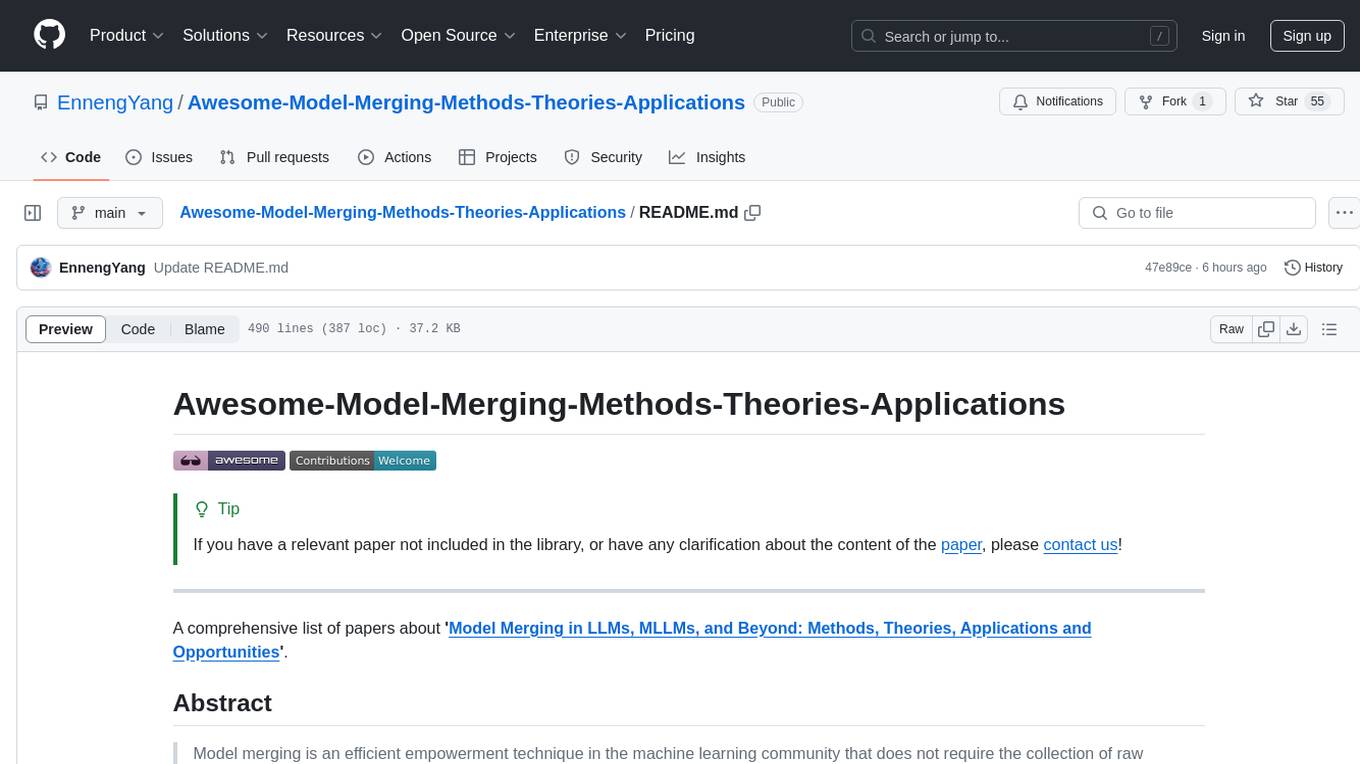

Awesome-Model-Merging-Methods-Theories-Applications

A comprehensive repository focusing on 'Model Merging in LLMs, MLLMs, and Beyond', providing an exhaustive overview of model merging methods, theories, applications, and future research directions. The repository covers various advanced methods, applications in foundation models, different machine learning subfields, and tasks like pre-merging methods, architecture transformation, weight alignment, basic merging methods, and more.

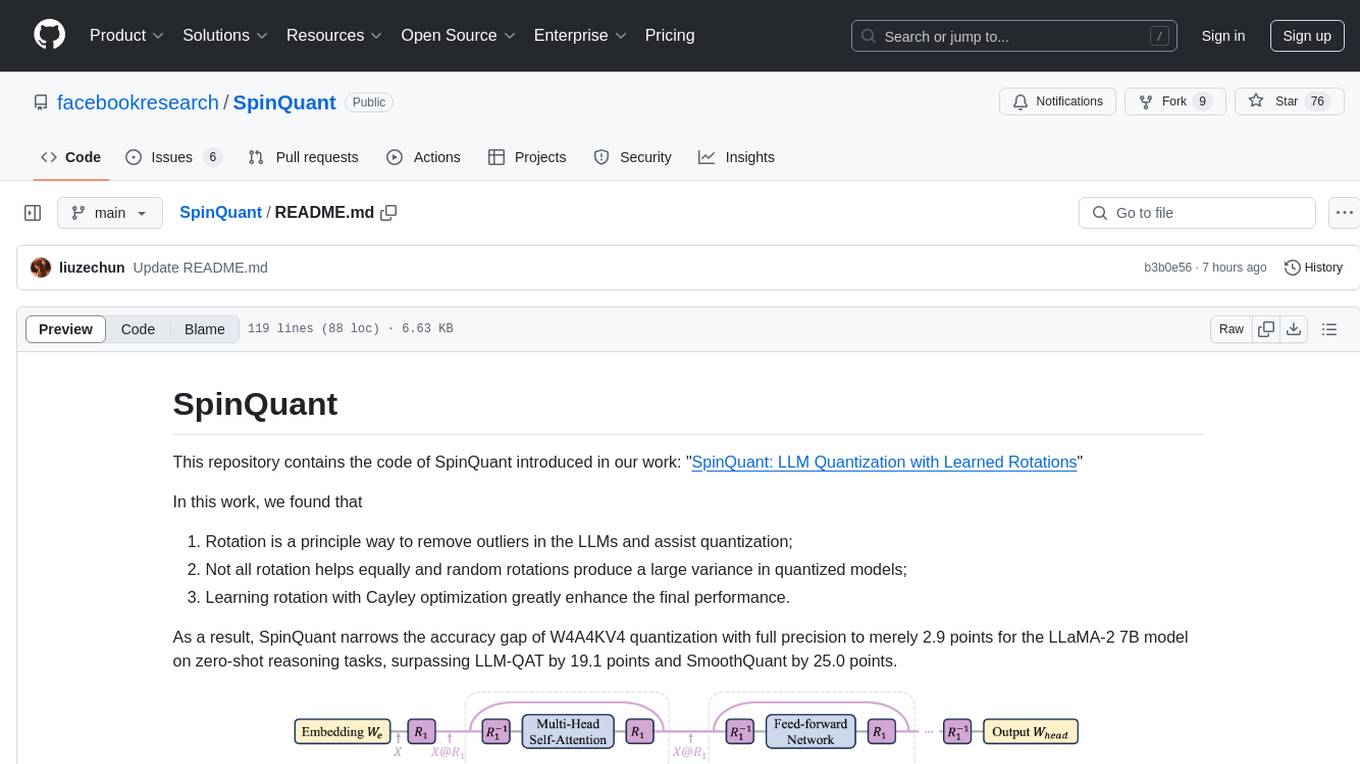

SpinQuant

SpinQuant is a tool designed for LLM quantization with learned rotations. It focuses on optimizing rotation matrices to enhance the performance of quantized models, narrowing the accuracy gap to full precision models. The tool implements rotation optimization and PTQ evaluation with optimized rotation, providing arguments for model name, batch sizes, quantization bits, and rotation options. SpinQuant is based on the findings that rotation helps in removing outliers and improving quantization, with specific enhancements achieved through learning rotation with Cayley optimization.

nntrainer

NNtrainer is a software framework for training neural network models on devices with limited resources. It enables on-device fine-tuning of neural networks using user data for personalization. NNtrainer supports various machine learning algorithms and provides examples for tasks such as few-shot learning, ResNet, VGG, and product rating. It is optimized for embedded devices and utilizes CBLAS and CUBLAS for accelerated calculations. NNtrainer is open source and released under the Apache License version 2.0.

For similar tasks

CogVLM2

CogVLM2 is a new generation of open source models that offer significant improvements in benchmarks such as TextVQA and DocVQA. It supports 8K content length, image resolution up to 1344 * 1344, and both Chinese and English languages. The project provides basic calling methods, fine-tuning examples, and OpenAI API format calling examples to help developers quickly get started with the model.

dstack

Dstack is an open-source orchestration engine for running AI workloads in any cloud. It supports a wide range of cloud providers (such as AWS, GCP, Azure, Lambda, TensorDock, Vast.ai, CUDO, RunPod, etc.) as well as on-premises infrastructure. With Dstack, you can easily set up and manage dev environments, tasks, services, and pools for your AI workloads.

one-click-llms

The one-click-llms repository provides templates for quickly setting up an API for language models. It includes advanced inferencing scripts for function calling and offers various models for text generation and fine-tuning tasks. Users can choose between Runpod and Vast.AI for different GPU configurations, with recommendations for optimal performance. The repository also supports Trelis Research and offers templates for different model sizes and types, including multi-modal APIs and chat models.

starcoder2-self-align

StarCoder2-Instruct is an open-source pipeline that introduces StarCoder2-15B-Instruct-v0.1, a self-aligned code Large Language Model (LLM) trained with a fully permissive and transparent pipeline. It generates instruction-response pairs to fine-tune StarCoder-15B without human annotations or data from proprietary LLMs. The tool is primarily finetuned for Python code generation tasks that can be verified through execution, with potential biases and limitations. Users can provide response prefixes or one-shot examples to guide the model's output. The model may have limitations with other programming languages and out-of-domain coding tasks.

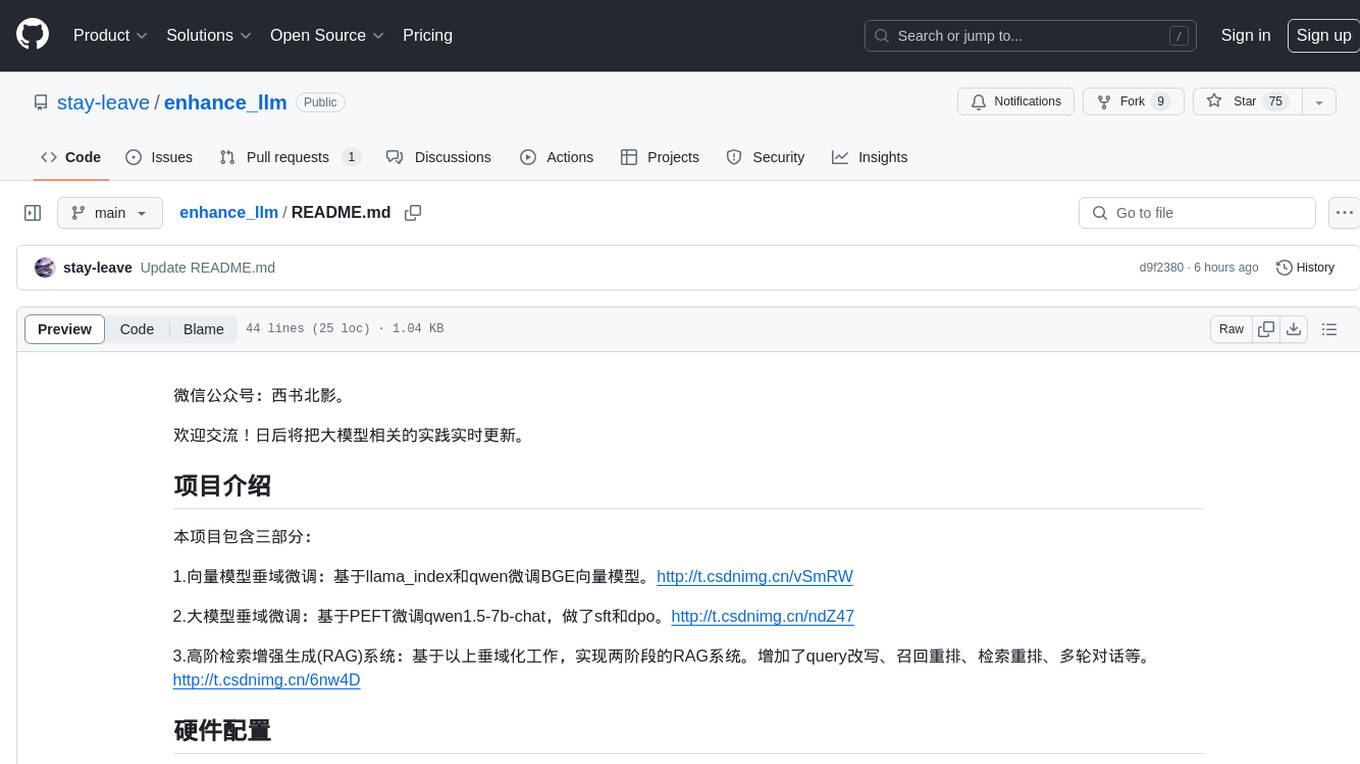

enhance_llm

The enhance_llm repository contains three main parts: 1. Vector model domain fine-tuning based on llama_index and qwen fine-tuning BGE vector model. 2. Large model domain fine-tuning based on PEFT fine-tuning qwen1.5-7b-chat, with sft and dpo. 3. High-order retrieval enhanced generation (RAG) system based on the above domain work, implementing a two-stage RAG system. It includes query rewriting, recall reordering, retrieval reordering, multi-turn dialogue, and more. The repository also provides hardware and environment configurations along with star history and licensing information.

fms-fsdp

The 'fms-fsdp' repository is a companion to the Foundation Model Stack, providing a (pre)training example to efficiently train FMS models, specifically Llama2, using native PyTorch features like FSDP for training and SDPA implementation of Flash attention v2. It focuses on leveraging FSDP for training efficiently, not as an end-to-end framework. The repo benchmarks training throughput on different GPUs, shares strategies, and provides installation and training instructions. It trained a model on IBM curated data achieving high efficiency and performance metrics.

liboai

liboai is a simple C++17 library for the OpenAI API, providing developers with access to OpenAI endpoints through a collection of methods and classes. It serves as a spiritual port of OpenAI's Python library, 'openai', with similar structure and features. The library supports various functionalities such as ChatGPT, Audio, Azure, Functions, Image DALL·E, Models, Completions, Edit, Embeddings, Files, Fine-tunes, Moderation, and Asynchronous Support. Users can easily integrate the library into their C++ projects to interact with OpenAI services.

extension-gen-ai

The Looker GenAI Extension provides code examples and resources for building a Looker Extension that integrates with Vertex AI Large Language Models (LLMs). Users can leverage the power of LLMs to enhance data exploration and analysis within Looker. The extension offers generative explore functionality to ask natural language questions about data and generative insights on dashboards to analyze data by asking questions. It leverages components like BQML Remote Models, BQML Remote UDF with Vertex AI, and Custom Fine Tune Model for different integration options. Deployment involves setting up infrastructure with Terraform and deploying the Looker Extension by creating a Looker project, copying extension files, configuring BigQuery connection, connecting to Git, and testing the extension. Users can save example prompts and configure user settings for the extension. Development of the Looker Extension environment includes installing dependencies, starting the development server, and building for production.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.