Awesome-LLM-Safety

A curated list of safety-related papers, articles, and resources focused on Large Language Models (LLMs). This repository aims to provide researchers, practitioners, and enthusiasts with insights into the safety implications, challenges, and advancements surrounding these powerful models.

Stars: 1564

Welcome to our Awesome-llm-safety repository! We've curated a collection of the latest, most comprehensive, and most valuable resources on large language model safety (llm-safety). But we don't stop there; included are also relevant talks, tutorials, conferences, news, and articles. Our repository is constantly updated to ensure you have the most current information at your fingertips.

README:

English | 中文

Welcome to our Awesome-llm-safety repository! 🥰🥰🥰

🔥 News

🧑💻 Our Work

We've curated a collection of the latest 😋, most comprehensive 😎, and most valuable 🤩 resources on large language model safety (llm-safety). But we don't stop there; included are also relevant talks, tutorials, conferences, news, and articles. Our repository is constantly updated to ensure you have the most current information at your fingertips.

If a resource is relevant to multiple subcategories, we place it under each applicable section. For instance, the "Awesome-LLM-Safety" repository will be listed under each subcategory to which it pertains🤩!.

✔️ Perfect for Majority

- For beginners curious about llm-safety, our repository serves as a compass for grasping the big picture and diving into the details. Classic or influential papers retained in the README provide a beginner-friendly navigation through interesting directions in the field;

- For seasoned researchers, this repository is a tool to keep you informed and fill any gaps in your knowledge. Within each subtopic, we are diligently updating all the latest content and continuously backfilling with previous work. Our thorough compilation and careful selection are time-savers for you.

🧭 How to Use this Guide

- Quick Start: In the README, users can find a curated list of select information sorted by date, along with links to various consultations.

- In-Depth Exploration: If you have a special interest in a particular subtopic, delve into the "subtopic" folder for more. Each item, be it an article or piece of news, comes with a brief introduction, allowing researchers to swiftly zero in on relevant content.

💼 How to Contribution

If you have completed an insightful work or carefully compiled conference papers, we would love to add your work to the repository.

- For individual papers, you can raise an issue, and we will quickly add your paper under the corresponding subtopic.

- If you have compiled a collection of papers for a conference, you are welcome to submit a pull request directly. We would greatly appreciate your contribution. Please note that these pull requests need to be consistent with our existing format.

📜Advertisement

🌱 If you would like more people to read your recent insightful work, please contact me via email. I can offer you a promotional spot here for up to one month.

Let’s start LLM Safety tutorial!

-

🛡️Awesome LLM-Safety🛡️

- 🤗Introduction

- 🚀Table of Contents

- [🔐Security & Discussion](#security & discussion)

- 🔏Privacy

- 📰Truthfulness & Misinformation

- 😈JailBreak & Attacks

- [🛡️Defenses & Mitigation](#️defenses & mitigation)

- 💯Datasets & Benchmark

- 🧑🏫 Scholars 👩🏫

- 🧑🎓Author

| Date | Link | Publication | Authors |

|---|---|---|---|

| 2024/5/20 | Managing extreme AI risks amid rapid progress | Yoshua Bengio, Geoffrey Hinton, Andrew Yao, Dawn Song, Pieter Abbeel, Trevor Darrell, Yuval Noah Harari, Ya-Qin Zhang, Lan Xue, Shai Shalev-Shwartz, Gillian Hadfield, Jeff Clune, Tegan Maharaj, Frank Hutter, Atılım Güneş Baydin, Sheila McIlraith, Qiqi Gao, Ashwin Acharya, David Krueger, Anca Dragan, Philip Torr, Stuart Russell, Daniel Kahneman, Jan Brauner, Sören Mindermann | Science |

| Date | Institute | Publication | Paper |

|---|---|---|---|

| 20.10 | Facebook AI Research | arxiv | Recipes for Safety in Open-domain Chatbots |

| 22.03 | OpenAI | NIPS2022 | Training language models to follow instructions with human feedback |

| 23.07 | UC Berkeley | NIPS2023 | Jailbroken: How Does LLM Safety Training Fail? |

| 23.12 | OpenAI | Open AI | Practices for Governing Agentic AI Systems |

| Date | Type | Title | URL |

|---|---|---|---|

| 22.02 | Toxicity Detection API | Perspective API |

link paper |

| 23.07 | Repository | Awesome LLM Security | link |

| 23.10 | Tutorials | Awesome-LLM-Safety | link |

| 24.01 | Tutorials | Awesome-LM-SSP | link |

👉Latest&Comprehensive Security Paper

| Date | Institute | Publication | Paper |

|---|---|---|---|

| 19.12 | Microsoft | CCS2020 | Analyzing Information Leakage of Updates to Natural Language Models |

| 21.07 | Google Research | ACL2022 | Deduplicating Training Data Makes Language Models Better |

| 21.10 | Stanford | ICLR2022 | Large language models can be strong differentially private learners |

| 22.02 | Google Research | ICLR2023 | Quantifying Memorization Across Neural Language Models |

| 22.02 | UNC Chapel Hill | ICML2022 | Deduplicating Training Data Mitigates Privacy Risks in Language Models |

| Date | Type | Title | URL |

|---|---|---|---|

| 23.10 | Tutorials | Awesome-LLM-Safety | link |

| 24.01 | Tutorials | Awesome-LM-SSP | link |

👉Latest&Comprehensive Privacy Paper

| Date | Institute | Publication | Paper |

|---|---|---|---|

| 21.09 | University of Oxford | ACL2022 | TruthfulQA: Measuring How Models Mimic Human Falsehoods |

| 23.11 | Harbin Institute of Technology | arxiv | A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions |

| 23.11 | Arizona State University | arxiv | Can Knowledge Graphs Reduce Hallucinations in LLMs? : A Survey |

| Date | Type | Title | URL |

|---|---|---|---|

| 23.07 | Repository | llm-hallucination-survey | link |

| 23.10 | Repository | LLM-Factuality-Survey | link |

| 23.10 | Tutorials | Awesome-LLM-Safety | link |

👉Latest&Comprehensive Truthfulness&Misinformation Paper

| Date | Institute | Publication | Paper |

|---|---|---|---|

| 20.12 | USENIX Security 2021 | Extracting Training Data from Large Language Models | |

| 22.11 | AE Studio | NIPS2022(ML Safety Workshop) | Ignore Previous Prompt: Attack Techniques For Language Models |

| 23.06 | arxiv | Are aligned neural networks adversarially aligned? | |

| 23.07 | CMU | arxiv | Universal and Transferable Adversarial Attacks on Aligned Language Models |

| 23.10 | University of Pennsylvania | arxiv | Jailbreaking Black Box Large Language Models in Twenty Queries |

| Date | Type | Title | URL |

|---|---|---|---|

| 23.01 | Community | Reddit/ChatGPTJailbrek | link |

| 23.02 | Resource&Tutorials | Latest Jailbreak Prompts | link |

| 23.10 | Tutorials | Awesome-LLM-Safety | link |

| 23.10 | Article | Adversarial Attacks on LLMs(Author: Lilian Weng) | link |

| 23.11 | Video | [1hr Talk] Intro to Large Language Models From 45:45(Author: Andrej Karpathy) |

link |

| 24.09 | Repo | awesome_LLM-harmful-fine-tuning-papers | link |

| 12.10 | Resource | Jailbreak Commuinities | link |

| 12.10 | Article | Jailbreak Techniques and Safeguards | link |

👉Latest&Comprehensive JailBreak & Attacks Paper

| Date | Institute | Publication | Paper |

|---|---|---|---|

| 21.07 | Google Research | ACL2022 | Deduplicating Training Data Makes Language Models Better |

| 22.04 | Anthropic | arxiv | Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback |

| Date | Type | Title | URL |

|---|---|---|---|

| 23.10 | Tutorials | Awesome-LLM-Safety | link |

👉Latest&Comprehensive Defenses Paper

| Date | Institute | Publication | Paper |

|---|---|---|---|

| 20.09 | University of Washington | EMNLP2020(findings) | RealToxicityPrompts: Evaluating Neural Toxic Degeneration in Language Models |

| 21.09 | University of Oxford | ACL2022 | TruthfulQA: Measuring How Models Mimic Human Falsehoods |

| 22.03 | MIT | ACL2022 | ToxiGen: A Large-Scale Machine-Generated datasets for Adversarial and Implicit Hate Speech Detection |

| Date | Type | Title | URL |

|---|---|---|---|

| 23.10 | Tutorials | Awesome-LLM-Safety | link |

- Toxicity - RealToxicityPrompts datasets

- Truthfulness - TruthfulQA datasets

👉Latest&Comprehensive datasets & Benchmark Paper

🤗If you have any questions, please contact our authors!🤗

✉️: ydyjya ➡️ [email protected]

💬: LLM Safety Discussion

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-LLM-Safety

Similar Open Source Tools

Awesome-LLM-Safety

Welcome to our Awesome-llm-safety repository! We've curated a collection of the latest, most comprehensive, and most valuable resources on large language model safety (llm-safety). But we don't stop there; included are also relevant talks, tutorials, conferences, news, and articles. Our repository is constantly updated to ensure you have the most current information at your fingertips.

llm_aigc

The llm_aigc repository is a comprehensive resource for everything related to llm (Large Language Models) and aigc (AI Governance and Control). It provides detailed information, resources, and tools for individuals interested in understanding and working with large language models and AI governance and control. The repository covers a wide range of topics including model training, evaluation, deployment, ethics, and regulations in the AI field.

awesome-LLM-resources

This repository is a curated list of resources for learning and working with Large Language Models (LLMs). It includes a collection of articles, tutorials, tools, datasets, and research papers related to LLMs such as GPT-3, BERT, and Transformer models. Whether you are a researcher, developer, or enthusiast interested in natural language processing and artificial intelligence, this repository provides valuable resources to help you understand, implement, and experiment with LLMs.

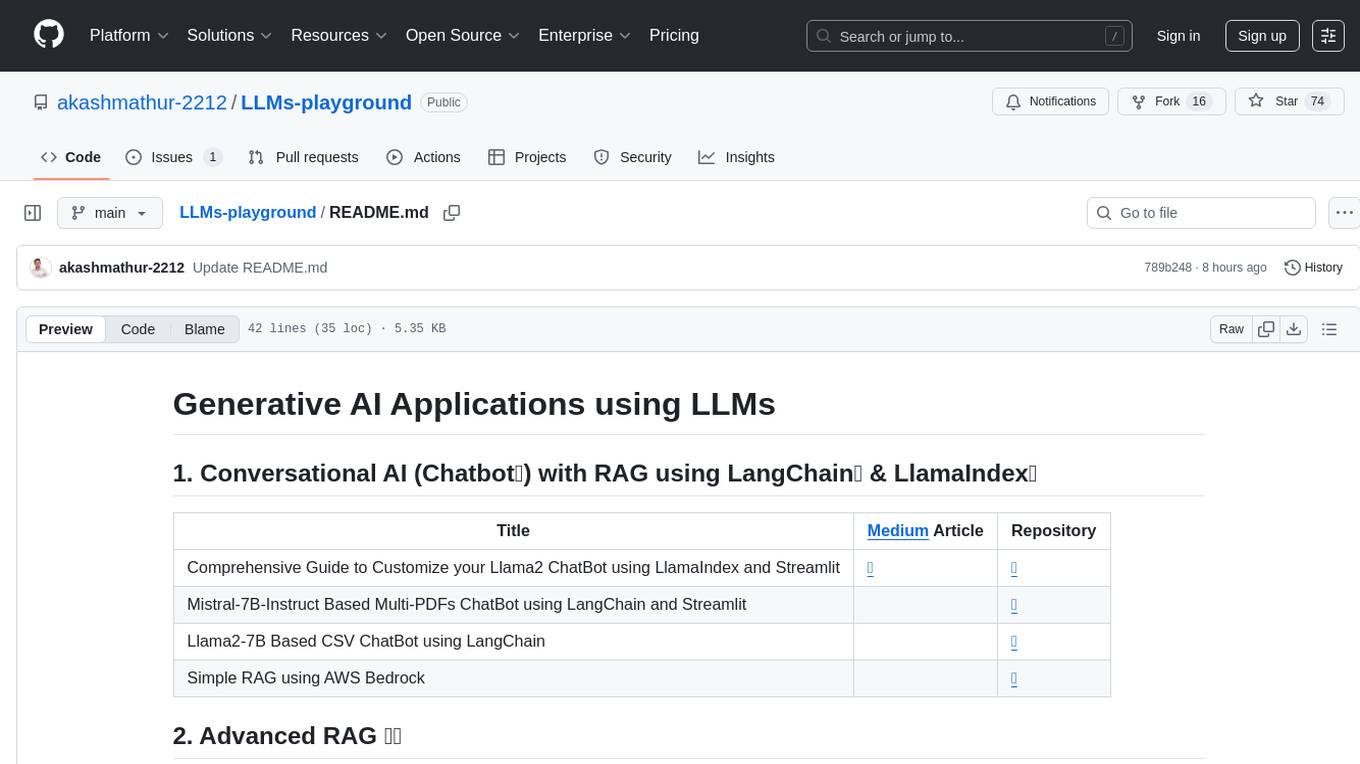

LLMs-playground

LLMs-playground is a repository containing code examples and tutorials for learning and experimenting with Large Language Models (LLMs). It provides a hands-on approach to understanding how LLMs work and how to fine-tune them for specific tasks. The repository covers various LLM architectures, pre-training techniques, and fine-tuning strategies, making it a valuable resource for researchers, students, and practitioners interested in natural language processing and machine learning. By exploring the code and following the tutorials, users can gain practical insights into working with LLMs and apply their knowledge to real-world projects.

GenAiGuidebook

GenAiGuidebook is a comprehensive resource for individuals looking to begin their journey in GenAI. It serves as a detailed guide providing insights, tips, and information on various aspects of GenAI technology. The guidebook covers a wide range of topics, including introductory concepts, practical applications, and best practices in the field of GenAI. Whether you are a beginner or an experienced professional, this resource aims to enhance your understanding and proficiency in GenAI.

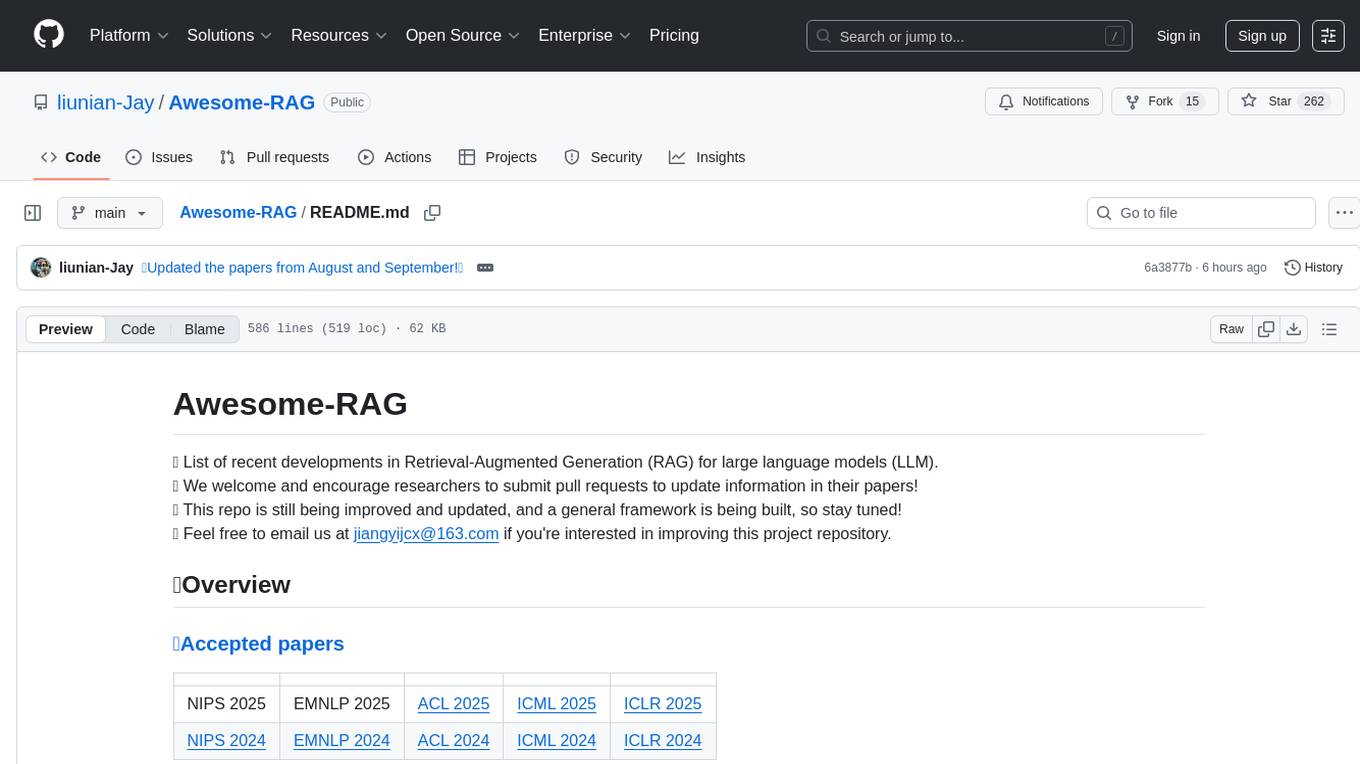

Awesome-RAG

Awesome-RAG is a repository that lists recent developments in Retrieval-Augmented Generation (RAG) for large language models (LLM). It includes accepted papers, evaluation datasets, latest news, and papers from various conferences like NIPS, EMNLP, ACL, ICML, and ICLR. The repository is continuously updated and aims to build a general framework for RAG. Researchers are encouraged to submit pull requests to update information in their papers. The repository covers a wide range of topics related to RAG, including knowledge-enhanced generation, contrastive reasoning, self-alignment, mobile agents, and more.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

rag-in-action

rag-in-action is a GitHub repository that provides a practical course structure for developing a RAG system based on DeepSeek. The repository likely contains resources, code samples, and tutorials to guide users through the process of building and implementing a RAG system using DeepSeek technology. Users interested in learning about RAG systems and their development may find this repository helpful in gaining hands-on experience and practical knowledge in this area.

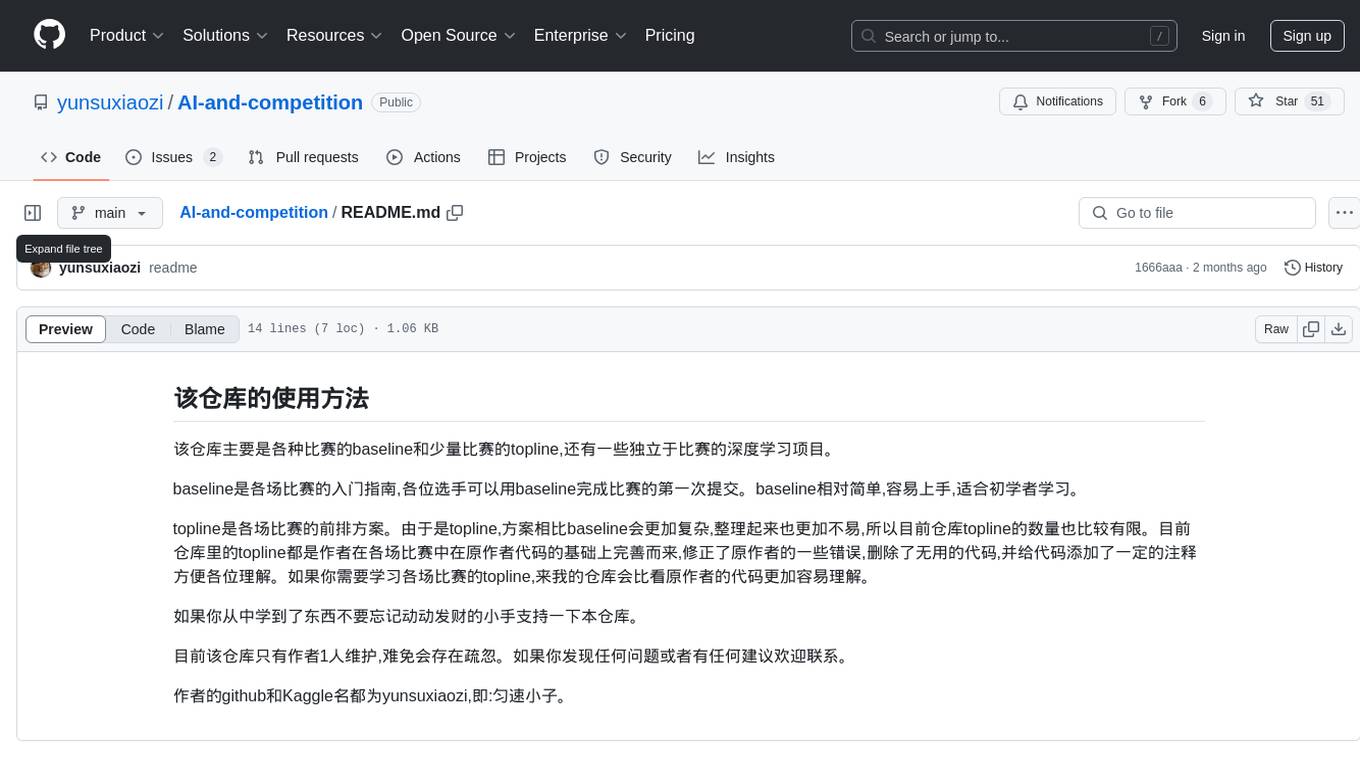

AI-and-competition

This repository provides baselines for various competitions, a few top solutions for some competitions, and independent deep learning projects. Baselines serve as entry guides for competitions, suitable for beginners to make their first submission. Top solutions are more complex and refined versions of baselines, with limited quantity but enhanced quality. The repository is maintained by a single author, yunsuxiaozi, offering code improvements and annotations for better understanding. Users can support the repository by learning from it and providing feedback.

h4cker

This repository is a comprehensive collection of cybersecurity-related references, scripts, tools, code, and other resources. It is carefully curated and maintained by Omar Santos. The repository serves as a supplemental material provider to several books, video courses, and live training created by Omar Santos. It encompasses over 10,000 references that are instrumental for both offensive and defensive security professionals in honing their skills.

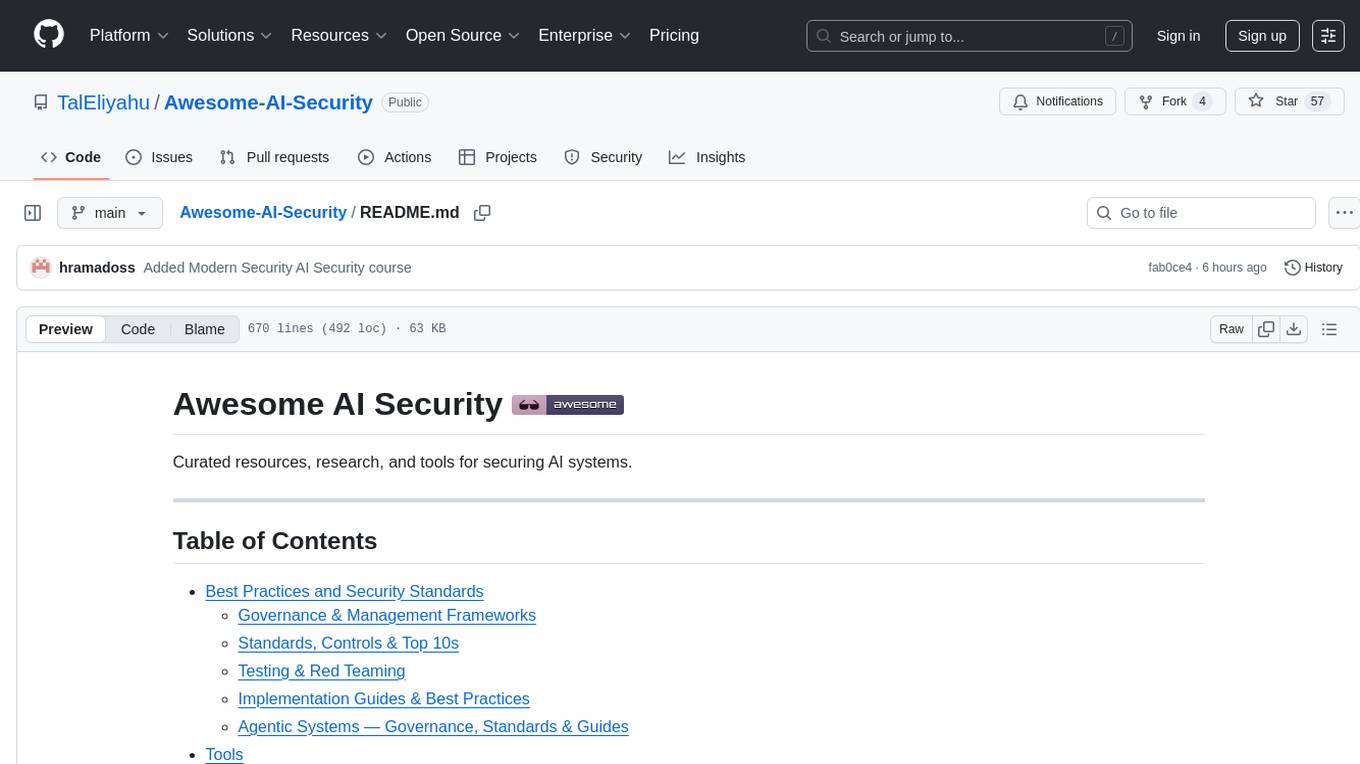

Awesome-AI-Security

Awesome-AI-Security is a curated list of resources for AI security, including tools, research papers, articles, and tutorials. It aims to provide a comprehensive overview of the latest developments in securing AI systems and preventing vulnerabilities. The repository covers topics such as adversarial attacks, privacy protection, model robustness, and secure deployment of AI applications. Whether you are a researcher, developer, or security professional, this collection of resources will help you stay informed and up-to-date in the rapidly evolving field of AI security.

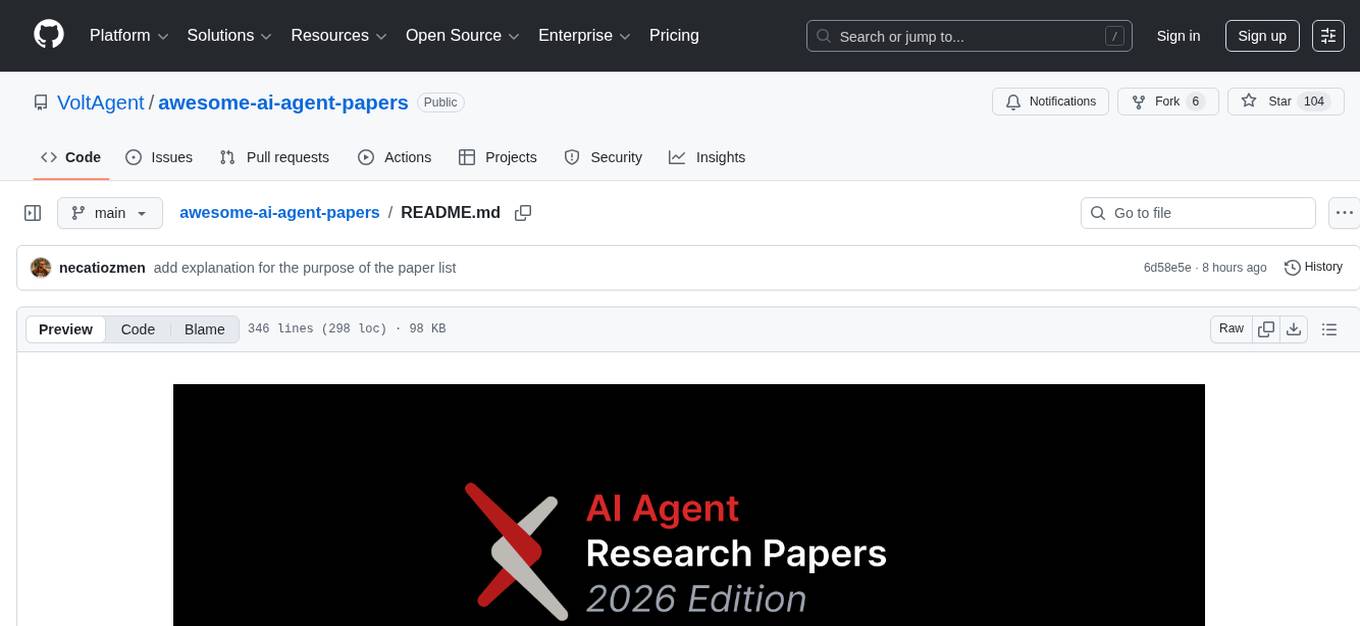

awesome-ai-agent-papers

This repository contains a curated list of papers related to artificial intelligence agents. It includes research papers, articles, and resources covering various aspects of AI agents, such as reinforcement learning, multi-agent systems, natural language processing, and more. Whether you are a researcher, student, or practitioner in the field of AI, this collection of papers can serve as a valuable reference to stay updated with the latest advancements and trends in AI agent technologies.

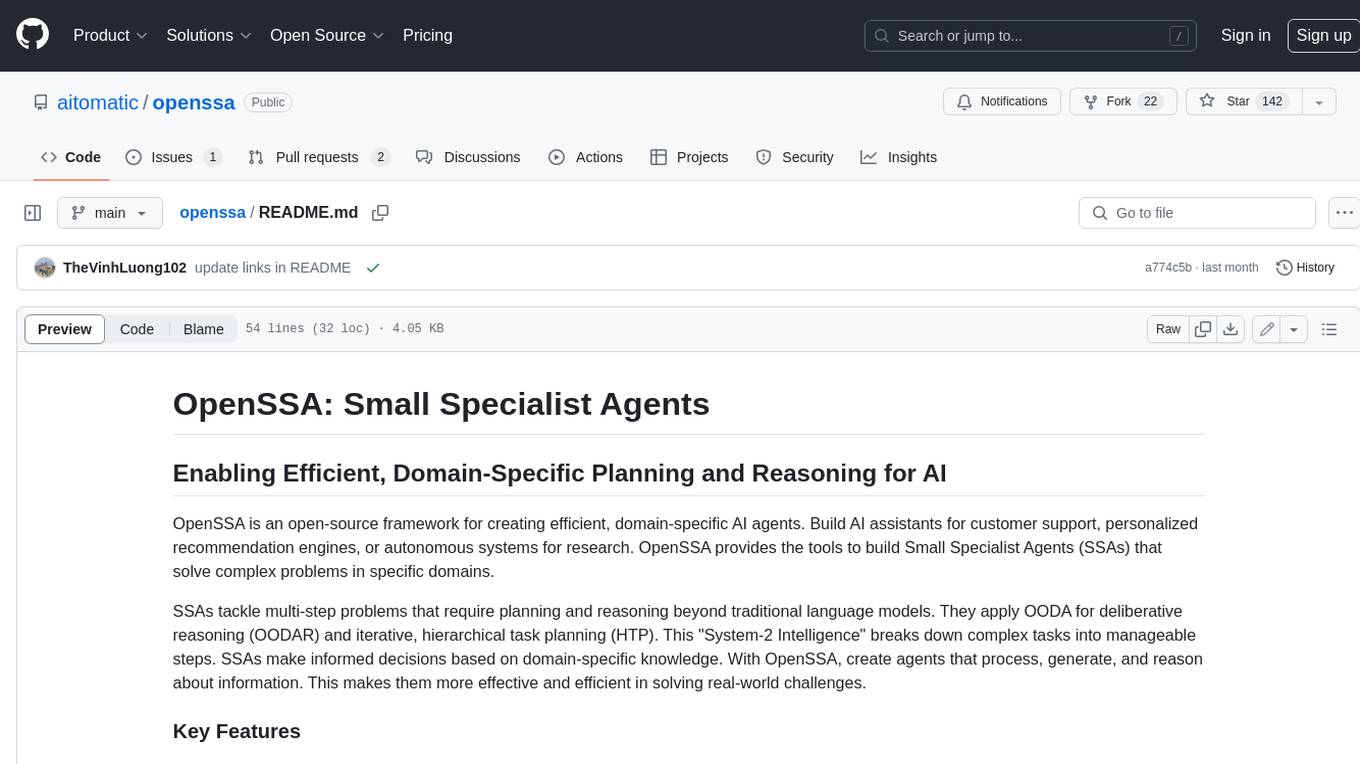

openssa

OpenSSA is an open-source framework for creating efficient, domain-specific AI agents. It enables the development of Small Specialist Agents (SSAs) that solve complex problems in specific domains. SSAs tackle multi-step problems that require planning and reasoning beyond traditional language models. They apply OODA for deliberative reasoning (OODAR) and iterative, hierarchical task planning (HTP). This "System-2 Intelligence" breaks down complex tasks into manageable steps. SSAs make informed decisions based on domain-specific knowledge. With OpenSSA, users can create agents that process, generate, and reason about information, making them more effective and efficient in solving real-world challenges.

awesome-artificial-intelligence-guidelines

The 'Awesome AI Guidelines' repository aims to simplify the ecosystem of guidelines, principles, codes of ethics, standards, and regulations around artificial intelligence. It provides a comprehensive collection of resources addressing ethical and societal challenges in AI systems, including high-level frameworks, principles, processes, checklists, interactive tools, industry standards initiatives, online courses, research, and industry newsletters, as well as regulations and policies from various countries. The repository serves as a valuable reference for individuals and teams designing, building, and operating AI systems to navigate the complex landscape of AI ethics and governance.

ai-workshop-code

The ai-workshop-code repository contains code examples and tutorials for various artificial intelligence concepts and algorithms. It serves as a practical resource for individuals looking to learn and implement AI techniques in their projects. The repository covers a wide range of topics, including machine learning, deep learning, natural language processing, computer vision, and reinforcement learning. By exploring the code and following the tutorials, users can gain hands-on experience with AI technologies and enhance their understanding of how these algorithms work in practice.

HuggingArxivLLM

HuggingArxiv is a tool designed to push research papers related to large language models from Arxiv. It helps users stay updated with the latest developments in the field of large language models by providing notifications and access to relevant papers.

For similar tasks

Awesome-LLM-Safety

Welcome to our Awesome-llm-safety repository! We've curated a collection of the latest, most comprehensive, and most valuable resources on large language model safety (llm-safety). But we don't stop there; included are also relevant talks, tutorials, conferences, news, and articles. Our repository is constantly updated to ensure you have the most current information at your fingertips.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.