Fast-LLM

Accelerating your LLM training to full speed! Made with ❤️ by ServiceNow Research

Stars: 224

Fast-LLM is an open-source library designed for training large language models with exceptional speed, scalability, and flexibility. Built on PyTorch and Triton, it offers optimized kernel efficiency, reduced overheads, and memory usage, making it suitable for training models of all sizes. The library supports distributed training across multiple GPUs and nodes, offers flexibility in model architectures, and is easy to use with pre-built Docker images and simple configuration. Fast-LLM is licensed under Apache 2.0, developed transparently on GitHub, and encourages contributions and collaboration from the community.

README:

Fast-LLM is a cutting-edge open-source library for training large language models with exceptional speed, scalability, and flexibility. Built on PyTorch and Triton, Fast-LLM empowers AI teams to push the limits of generative AI, from research to production.

Optimized for training models of all sizes—from small 1B-parameter models to massive clusters with 70B+ parameters—Fast-LLM delivers faster training, lower costs, and seamless scalability. Its fine-tuned kernels, advanced parallelism techniques, and efficient memory management make it the go-to choice for diverse training needs.

As a truly open-source project, Fast-LLM allows full customization and extension without proprietary restrictions. Developed transparently by a community of professionals on GitHub, the library benefits from collaborative innovation, with every change discussed and reviewed in the open to ensure trust and quality. Fast-LLM combines professional-grade tools with unified support for GPT-like architectures, offering the cost efficiency and flexibility that serious AI practitioners demand.

[!NOTE] Fast-LLM is not affiliated with Fast.AI, FastHTML, FastAPI, FastText, or other similarly named projects. Our library's name refers to its speed and efficiency in language model training.

-

🚀 Fast-LLM is Blazingly Fast:

- ⚡️ Optimized kernel efficiency and reduced overheads.

- 🔋 Optimized memory usage for best performance.

- ⏳ Minimizes training time and cost.

-

📈 Fast-LLM is Highly Scalable:

- 📡 Distributed training across multiple GPUs and nodes using 3D parallelism (Data, Tensor, and Pipeline).

- 🔗 Supports sequence length parallelism to handle longer sequences effectively.

- 🧠 ZeRO-1, ZeRO-2, and ZeRO-3 implementations for improved memory efficiency.

- 🎛️ Mixed precision training support for better performance.

- 🏋️♂️ Large batch training and gradient accumulation support.

- 🔄 Reproducible training with deterministic behavior.

-

🎨 Fast-LLM is Incredibly Flexible:

- 🤖 Compatible with all common language model architectures in a unified class.

- ⚡ Efficient dropless Mixture-of-Experts (MoE) implementation with SoTA performance.

- 🧩 Customizable language model architectures, data loaders, loss functions, and optimizers (in progress).

- 🤗 Seamless integration with Hugging Face Transformers.

-

🎯 Fast-LLM is Super Easy to Use:

- 📦 Pre-built Docker images for quick deployment.

- 📝 Simple YAML configuration for hassle-free setup.

- 💻 Command-line interface for easy launches.

- 📊 Detailed logging and real-time monitoring features.

- 📚 Extensive documentation and practical tutorials (in progress).

-

🌐 Fast-LLM is Truly Open Source:

- ⚖️ Licensed under Apache 2.0 for maximum freedom to use Fast-LLM at work, in your projects, or for research.

- 💻 Transparently developed on GitHub with public roadmap and issue tracking.

- 🤝 Contributions and collaboration are always welcome!

We'll walk you through how to use Fast-LLM to train a large language model on a cluster with multiple nodes and GPUs. We'll show an example setup using a Slurm cluster and a Kubernetes cluster.

For this demo, we will train a Mistral-7B model from scratch for 100 steps on random data. The config file examples/mistral-4-node-benchmark.yaml is pre-configured for a multi-node setup with 4 DGX nodes, each with 8 A100-80GB or H100-80GB GPUs.

[!NOTE] Fast-LLM scales from a single GPU to large clusters. You can start small and expand based on your resources.

Expect to see a significant speedup in training time compared to other libraries! For training Mistral-7B, Fast-LLM is expected to achieve a throughput of 9,800 tokens/s/H100 (batch size 32, sequence length 8k) on a 4-node cluster with 32 H100s.

- A Slurm cluster with at least 4 DGX nodes with 8 A100-80GB or H100-80GB GPUs each.

- CUDA 12.1 or higher.

- Dependencies: PyTorch, Triton, and Apex installed on all nodes.

-

Deploy the nvcr.io/nvidia/pytorch:24.07-py3 Docker image to all nodes (recommended), because it contains all the necessary dependencies.

-

Install Fast-LLM on all nodes:

sbatch <<EOF #!/bin/bash #SBATCH --nodes=$(scontrol show node | grep -c NodeName) #SBATCH --ntasks-per-node=1 #SBATCH --ntasks=$(scontrol show node | grep -c NodeName) #SBATCH --exclusive srun bash -c 'pip install --no-cache-dir -e "git+https://github.com/ServiceNow/Fast-LLM.git#egg=llm[CORE,OPTIONAL,DEV]"' EOF

-

Use the example Slurm job script examples/fast-llm.sbat to submit the job to the cluster:

sbatch examples/fast-llm.sbat

-

Monitor the job's progress:

- Logs: Follow

job_output.logandjob_error.login your working directory for logs. - Status: Use

squeue -u $USERto see the job status.

- Logs: Follow

Now, you can sit back and relax while Fast-LLM trains your model at full speed! ☕

- A Kubernetes cluster with at least 4 DGX nodes with 8 A100-80GB or H100-80GB GPUs each.

- KubeFlow installed.

- Locked memory limit set to unlimited at the host level on all nodes. Ask your cluster admin to do this if needed.

-

Create a Kubernetes PersistentVolumeClaim (PVC) named

fast-llm-homethat will be mounted to/home/fast-llmin the container using examples/fast-llm-pvc.yaml:kubectl apply -f examples/fast-llm-pvc.yaml

-

Create a PyTorchJob resource using the example configuration file examples/fast-llm.pytorchjob.yaml:

kubectl apply -f examples/fast-llm.pytorchjob.yaml

-

Monitor the job status:

- Use

kubectl get pytorchjobsto see the job status. - Use

kubectl logs -f fast-llm-master-0 -c pytorchto follow the logs.

- Use

That's it! You're now up and running with Fast-LLM on Kubernetes. 🚀

📖 Want to learn more? Check out our documentation for more information on how to use Fast-LLM.

🔨 We welcome contributions to Fast-LLM! Have a look at our contribution guidelines.

🐞 Something doesn't work? Open an issue!

Fast-LLM is licensed by ServiceNow, Inc. under the Apache 2.0 License. See LICENSE for more information.

For security issues, email [email protected]. See our security policy.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Fast-LLM

Similar Open Source Tools

Fast-LLM

Fast-LLM is an open-source library designed for training large language models with exceptional speed, scalability, and flexibility. Built on PyTorch and Triton, it offers optimized kernel efficiency, reduced overheads, and memory usage, making it suitable for training models of all sizes. The library supports distributed training across multiple GPUs and nodes, offers flexibility in model architectures, and is easy to use with pre-built Docker images and simple configuration. Fast-LLM is licensed under Apache 2.0, developed transparently on GitHub, and encourages contributions and collaboration from the community.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

verl

veRL is a flexible and efficient reinforcement learning training framework designed for large language models (LLMs). It allows easy extension of diverse RL algorithms, seamless integration with existing LLM infrastructures, and flexible device mapping. The framework achieves state-of-the-art throughput and efficient actor model resharding with 3D-HybridEngine. It supports popular HuggingFace models and is suitable for users working with PyTorch FSDP, Megatron-LM, and vLLM backends.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

AReaL

AReaL (Ant Reasoning RL) is an open-source reinforcement learning system developed at the RL Lab, Ant Research. It is designed for training Large Reasoning Models (LRMs) in a fully open and inclusive manner. AReaL provides reproducible experiments for 1.5B and 7B LRMs, showcasing its scalability and performance across diverse computational budgets. The system follows an iterative training process to enhance model performance, with a focus on mathematical reasoning tasks. AReaL is equipped to adapt to different computational resource settings, enabling users to easily configure and launch training trials. Future plans include support for advanced models, optimizations for distributed training, and exploring research topics to enhance LRMs' reasoning capabilities.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

graphbit

GraphBit is an industry-grade agentic AI framework built for developers and AI teams that demand stability, scalability, and low resource usage. It is written in Rust for maximum performance and safety, delivering significantly lower CPU usage and memory footprint compared to leading alternatives. The framework is designed to run multi-agent workflows in parallel, persist memory across steps, recover from failures, and ensure 100% task success under load. With lightweight architecture, observability, and concurrency support, GraphBit is suitable for deployment in high-scale enterprise environments and low-resource edge scenarios.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

RustGPT

A complete Large Language Model implementation in pure Rust with no external ML frameworks. Demonstrates building a transformer-based language model from scratch, including pre-training, instruction tuning, interactive chat mode, full backpropagation, and modular architecture. Model learns basic world knowledge and conversational patterns. Features custom tokenization, greedy decoding, gradient clipping, modular layer system, and comprehensive test coverage. Ideal for understanding modern LLMs and key ML concepts. Dependencies include ndarray for matrix operations and rand for random number generation. Contributions welcome for model persistence, performance optimizations, better sampling, evaluation metrics, advanced architectures, training improvements, data handling, and model analysis. Follows standard Rust conventions and encourages contributions at beginner, intermediate, and advanced levels.

FedML

FedML is a unified and scalable machine learning library for running training and deployment anywhere at any scale. It is highly integrated with FEDML Nexus AI, a next-gen cloud service for LLMs & Generative AI. FEDML Nexus AI provides holistic support of three interconnected AI infrastructure layers: user-friendly MLOps, a well-managed scheduler, and high-performance ML libraries for running any AI jobs across GPU Clouds.

bisheng

Bisheng is a leading open-source **large model application development platform** that empowers and accelerates the development and deployment of large model applications, helping users enter the next generation of application development with the best possible experience.

slime

Slime is an LLM post-training framework for RL scaling that provides high-performance training and flexible data generation capabilities. It connects Megatron with SGLang for efficient training and enables custom data generation workflows through server-based engines. The framework includes modules for training, rollout, and data buffer management, offering a comprehensive solution for RL scaling.

For similar tasks

Fast-LLM

Fast-LLM is an open-source library designed for training large language models with exceptional speed, scalability, and flexibility. Built on PyTorch and Triton, it offers optimized kernel efficiency, reduced overheads, and memory usage, making it suitable for training models of all sizes. The library supports distributed training across multiple GPUs and nodes, offers flexibility in model architectures, and is easy to use with pre-built Docker images and simple configuration. Fast-LLM is licensed under Apache 2.0, developed transparently on GitHub, and encourages contributions and collaboration from the community.

bumblecore

BumbleCore is a hands-on large language model training framework that allows complete control over every training detail. It provides manual training loop, customizable model architecture, and support for mainstream open-source models. The framework follows core principles of transparency, flexibility, and efficiency. BumbleCore is suitable for deep learning researchers, algorithm engineers, learners, and enterprise teams looking for customization and control over model training processes.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

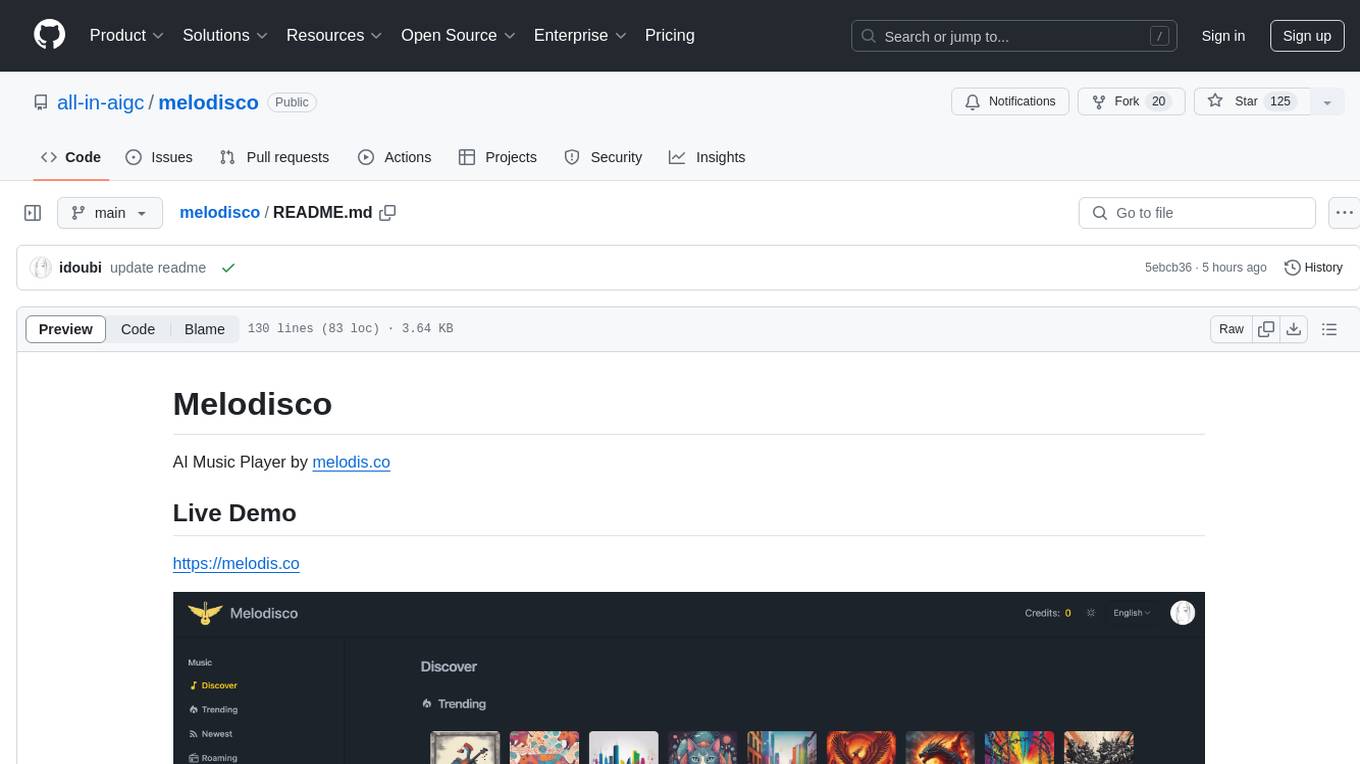

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.