Best AI tools for Scale Training

20 - AI tool Sites

Quantified

Quantified is an AI-driven platform designed to enhance sales training and performance for enterprises in industries such as Pharmaceuticals, Financial Services, Insurance, and Global Enterprises. The platform offers AI-powered simulations, roleplay scenarios, and personalized practice sessions to improve sales outcomes, coaching efficiency, and training effectiveness. Quantified enables organizations to scale training programs, certify field teams, handle objections, and conduct sales meetings with clarity and confidence. Through practical applications and detailed examples, the platform transforms traditional training methods by providing a realistic sales presentation experience and personalized learning opportunities.

Hyperbound

Hyperbound is an AI Sales Role-Play & Upskilling Platform designed to help sales teams improve their skills through realistic AI roleplays. It allows users to practice cold, warm, discovery, and post-sales calls with AI buyers customized for their target persona. The platform has received high ratings and positive feedback from sales professionals globally. It offers interactive demos, and booking a demo requires no credit card.

NVIDIA Run:ai

NVIDIA Run:ai is an enterprise platform for AI workloads and GPU orchestration. It accelerates AI and machine learning operations by addressing key infrastructure challenges through dynamic resource allocation, comprehensive AI life-cycle support, and strategic resource management. The platform significantly enhances GPU efficiency and workload capacity by pooling resources across environments and utilizing advanced orchestration. NVIDIA Run:ai provides unparalleled flexibility and adaptability, supporting public clouds, private clouds, hybrid environments, or on-premises data centers.

FluidStack

FluidStack is a leading GPU cloud platform designed for AI and LLM (Large Language Model) training. It offers unlimited scale for AI training and inference, allowing users to access thousands of fully-interconnected GPUs on demand. Trusted by top AI startups, FluidStack aggregates GPU capacity from data centers worldwide, providing access to over 50,000 GPUs for accelerating training and inference. With 1000+ data centers across 50+ countries, FluidStack ensures reliable and efficient GPU cloud services at competitive prices.

Articulate

Articulate is the world's best creator platform for online workplace learning, offering a comprehensive suite of tools for creating engaging and effective online training content. With features like AI Assistant, Rise, Storyline, and Reach, Articulate provides millions of course assets, prebuilt templates, and easy-to-use creator tools to simplify course creation from start to finish. The platform is loved by customers and industry experts for its ability to increase compliance rates, reduce incidents, and deliver blended learning experiences. Articulate is a one-stop solution for organizations looking to create and deliver online training at scale.

Scale AI

Scale AI is an AI tool that accelerates the development of AI applications for enterprise, government, and automotive sectors. It offers Scale Data Engine for generative AI, Scale GenAI Platform, and evaluation services for model developers. The platform leverages enterprise data to build sustainable AI programs and partners with leading AI models. Scale's focus on generative AI applications, data labeling, and model evaluation sets it apart in the AI industry.

Scale AI

Scale AI is an AI tool that accelerates the development of AI applications for various sectors including enterprise, government, and automotive industries. It offers solutions for training models, fine-tuning, generative AI, and model evaluations. Scale Data Engine and GenAI Platform enable users to leverage enterprise data effectively. The platform collaborates with leading AI models and provides high-quality data for public and private sector applications.

Macgence AI Training Data Services

Macgence is an AI training data services platform that offers high-quality off-the-shelf structured training data for organizations to build effective AI systems at scale. They provide services such as custom data sourcing, data annotation, data validation, content moderation, and localization. Macgence combines global linguistic, cultural, and technological expertise to create high-quality datasets for AI models, enabling faster time-to-market across the entire model value chain. With more than 5 years of experience, they support and scale AI initiatives of leading global innovators by designing custom data collection programs. Macgence specializes in handling AI training data for text, speech, image, and video data, offering cognitive annotation services to unlock the potential of unstructured textual data.

VESSL AI

VESSL AI is a platform offering Liquid AI Infra & Persistent GPU Cloud services, allowing users to easily access and utilize GPUs for running AI workloads. It provides a seamless experience from zero to running AI workloads, catering to AI startups, enterprise AI teams, and research & academia. VESSL AI offers GPU products for every stage, with options like spot, on-demand, and reserved capacity, along with features like multi-cloud failover, pay-as-you-go pricing, and production-ready reliability. The platform is designed to help users scale their AI projects efficiently and effectively.

Lambda

Lambda is a superintelligence cloud platform that offers on-demand GPU clusters for multi-node training and fine-tuning, private large-scale GPU clusters, seamless management and scaling of AI workloads, inference endpoints and API, and a privacy-first chat app with open source models. It also provides NVIDIA's latest generation infrastructure for enterprise AI. With Lambda, AI teams can access gigawatt-scale AI factories for training and inference, deploy GPU instances, and leverage the latest NVIDIA GPUs for high-performance computing.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

ColdIQ

ColdIQ is an AI-powered sales prospecting tool that helps B2B companies with revenue above $100k/month build outbound systems that sell for them. The tool offers end-to-end cold outreach campaign setup and management, email infrastructure setup and warmup, audience research and targeting, data scraping and enrichment, campaign optimization, sending automation, sales systems implementation, training on tool best practices, sales tool recommendations, free gap analysis, sales consulting, and copywriting frameworks. ColdIQ leverages AI to tailor messaging to each prospect, automate outreach, and flood calendars with opportunities.

Whale

Whale is an AI-powered software designed to help businesses document their standard operating procedures, policies, and internal company knowledge. It streamlines the process of onboarding, training, and growing teams by leveraging AI technology to assist in creating and organizing documentation. Whale offers features such as AI-assisted SOP and process documentation, automated training flows, a single source of truth for knowledge management, and an AI assistant named Alice to help with various tasks. The platform aims to systemize and scale businesses by providing a user-friendly interface and dedicated support services.

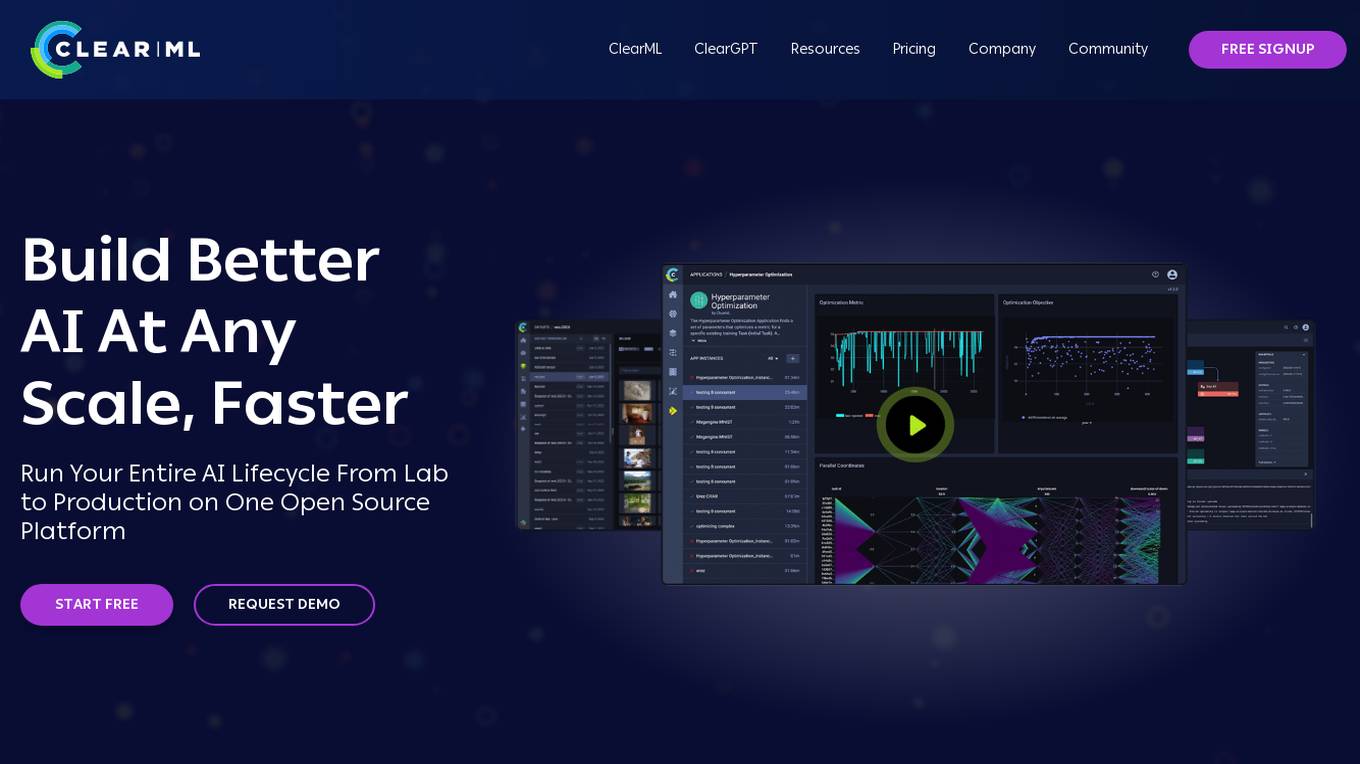

ClearML

ClearML is an open-source, end-to-end platform for continuous machine learning (ML). It provides a unified platform for data management, experiment tracking, model training, deployment, and monitoring. ClearML is designed to make it easy for teams to collaborate on ML projects and to ensure that models are deployed and maintained in a reliable and scalable way.

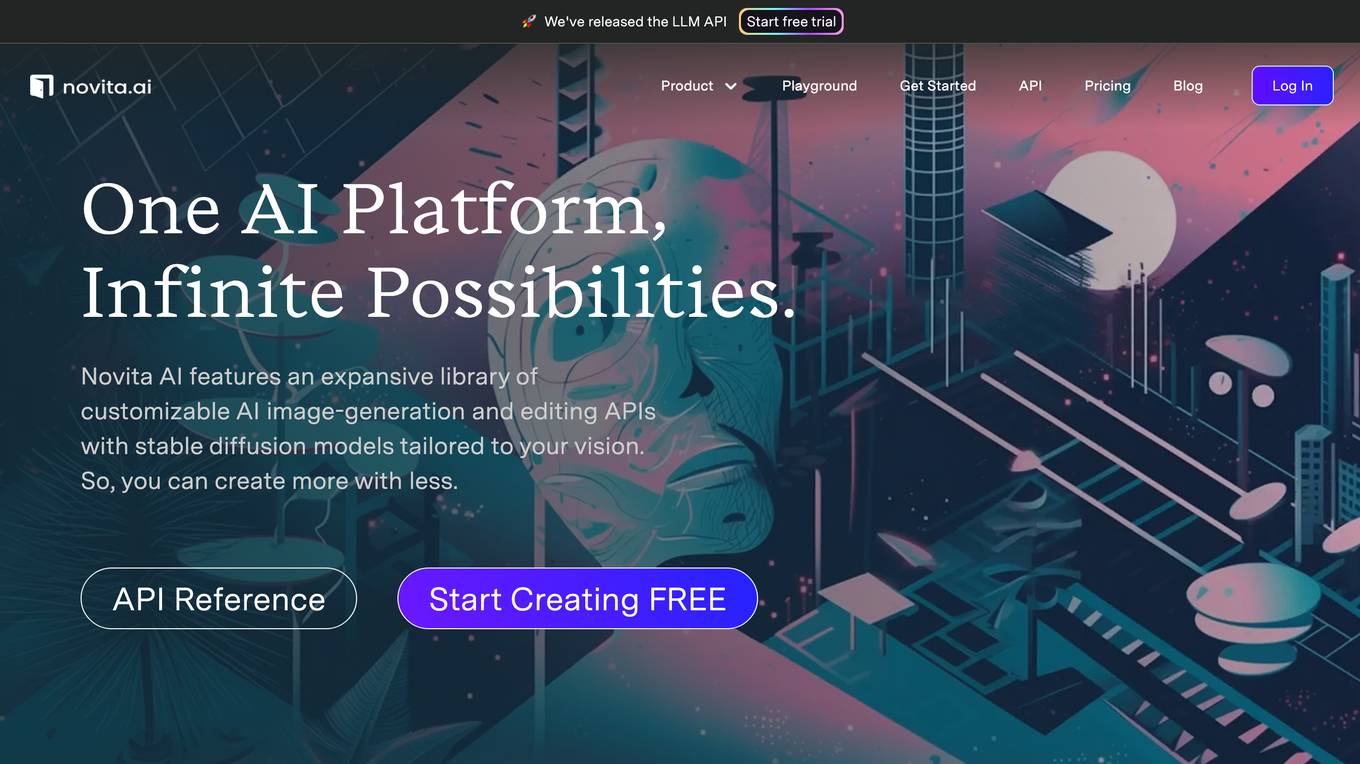

Novita AI

Novita AI is an AI cloud platform that offers Model APIs, Serverless, and GPU Instance solutions integrated into one cost-effective platform. It provides tools for building AI products, scaling with serverless architecture, and deploying with GPU instances. Novita AI caters to startups and businesses looking to leverage AI technologies without the need for extensive machine learning expertise. The platform also offers a Startup Program, 24/7 service support, and has received positive feedback for its reasonable pricing and stable API services.

IllumiDesk

IllumiDesk is a generative AI platform for instructors and content developers that helps teams create and monetize tailored content 10X faster. With IllumiDesk, you can automate grading tasks, collaborate with your learners, create content at the speed of AI, and integrate with the services you already use. IllumiDesk's AI helps you create, maintain, and structure your content into interactive lessons. You can also use IllumiDesk's flexible integration options, the RESTful API and/or LTI v1.3, to reuse existing content and flows. IllumiDesk is trusted by training agencies and universities around the world.

Luma AI

Luma AI is a 3D capture platform that allows users to create interactive 3D scenes from videos. With Luma AI, users can capture 3D models of people, objects, and environments, and then use those models to create interactive experiences such as virtual tours, product demonstrations, and training simulations.

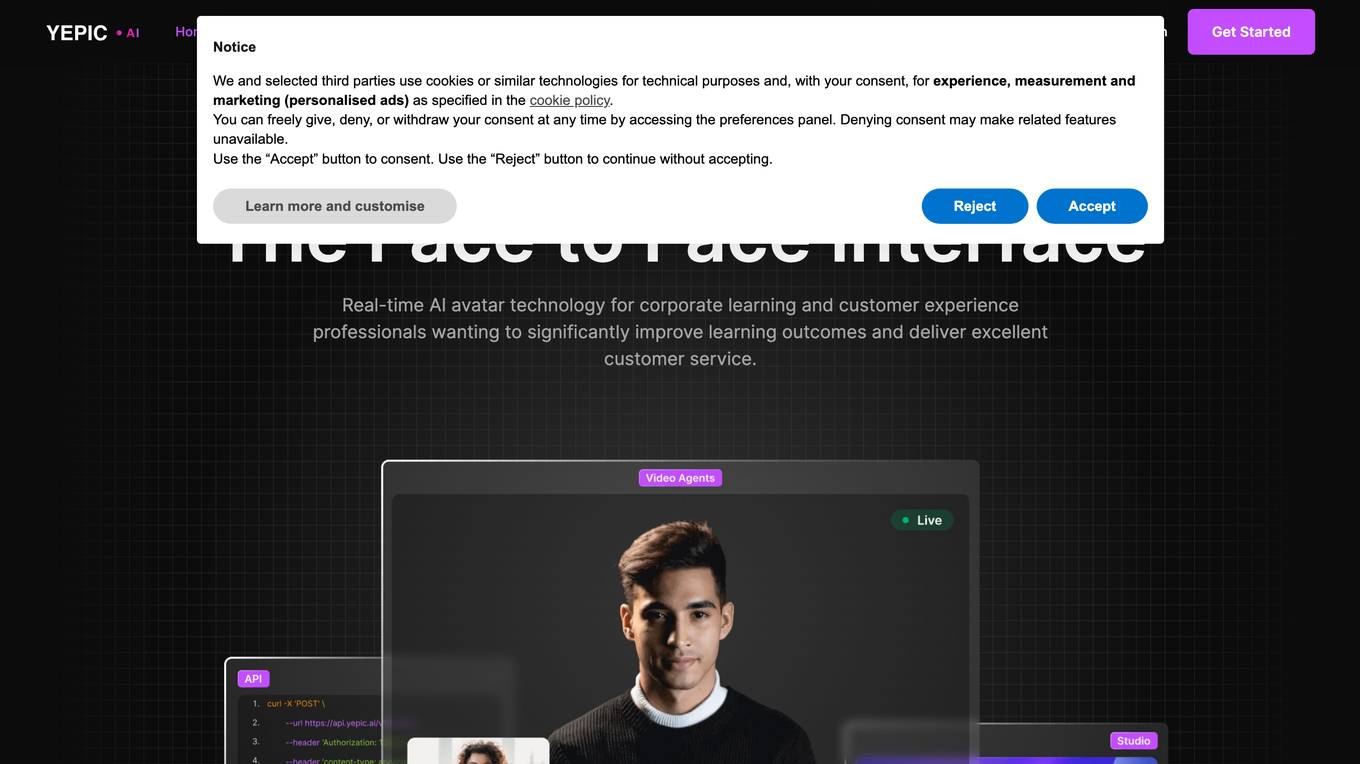

Yepic AI

Yepic AI is a real-time AI avatar technology for corporate learning and customer experience professionals wanting to significantly improve learning outcomes and deliver excellent customer service. It offers a range of products including asynchronous studio express, asynchronous studio pro, real-time video agents, and real-time asynchronous API. Yepic AI's avatars are knowledgeable, lifelike, and multilingual, and can be used for a variety of purposes such as education and training, health and fitness, and customer support.

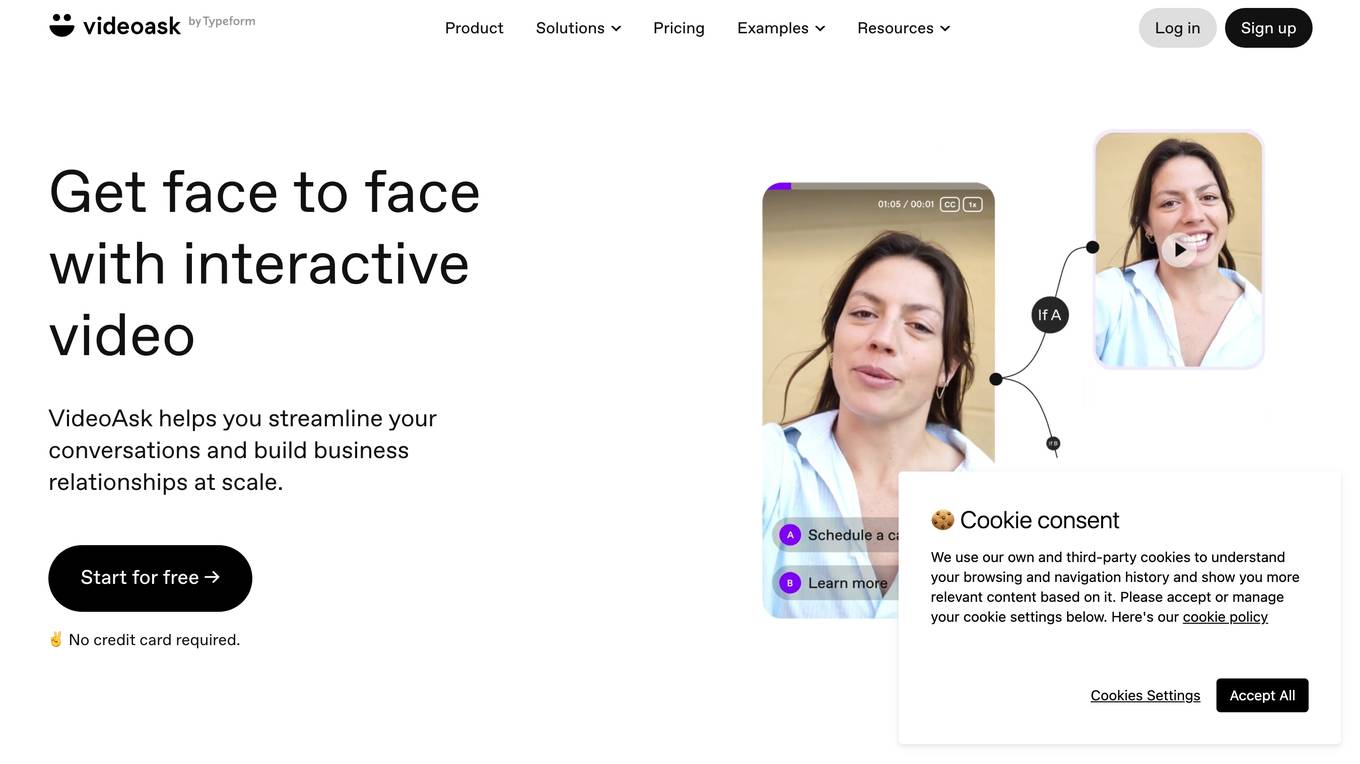

VideoAsk by Typeform

VideoAsk by Typeform is an interactive video platform that helps streamline conversations and build business relationships at scale. It offers features such as asynchronous interviews, easy scheduling, tagging, gathering contact info, capturing leads, research and feedback, training, customer support, and more. Users can create interactive video forms, conduct async interviews, and engage with their audience through AI-powered video chatbots. The platform is user-friendly, code-free, and integrates with over 1,500 applications through Zapier.

Spiral

Spiral is an AI-powered tool designed to automate 80% of repeat writing, thinking, and creative tasks. It allows users to create Spirals to accelerate any writing task by training it on examples to generate outputs in their desired voice and style. The tool includes a powerful Prompt Builder to help users work faster and smarter, transforming content into tweets, PRDs, proposals, summaries, and more. Spiral extracts patterns from text to deduce voice and style, enabling users to iterate on outputs until satisfied. Users can share Spirals with their team to maximize quality and streamline processes.

6 - Open Source AI Tools

training-operator

Kubeflow Training Operator is a Kubernetes-native project for fine-tuning and scalable distributed training of machine learning (ML) models created with various ML frameworks such as PyTorch, TensorFlow, XGBoost, MPI, Paddle, and others. Training Operator lets you use Kubernetes workloads to train large models through the Kubernetes Custom Resources APIs or the Training Operator Python SDK.

> Note: Before the v1.2 release, Kubeflow Training Operator only supported TFJob on Kubernetes.

* For a complete reference of the custom resource definitions, please refer to the API Definition.
  * TensorFlow API Definition
  * PyTorch API Definition
  * Apache MXNet API Definition
  * XGBoost API Definition
  * MPI API Definition
  * PaddlePaddle API Definition
* For details of the all-in-one operator design, please refer to the All-in-one Kubeflow Training Operator.
* For details on its observability, please refer to the monitoring design doc.
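To make the "Kubernetes Custom Resources" route a little more concrete, here is a minimal sketch that builds a PyTorchJob manifest as a plain Python dictionary and prints it as JSON. It uses only the standard library and does not call the Training Operator Python SDK. The helper `make_pytorch_job`, the job name `demo-train`, and the image `example.com/trainer:latest` are hypothetical placeholders, and the resource layout follows the `kubeflow.org/v1` PyTorchJob shape as best understood here, so check it against the API Definition before relying on it.

```python
import json


def make_pytorch_job(name, image, workers, gpus_per_replica=1):
    """Build a PyTorchJob manifest as a plain dict (hypothetical helper)."""

    def replica(count):
        # One replica spec: how many pods to run and what each pod contains.
        return {
            "replicas": count,
            "restartPolicy": "OnFailure",
            "template": {
                "spec": {
                    "containers": [
                        {
                            # Assumption: the training container is conventionally named "pytorch".
                            "name": "pytorch",
                            "image": image,
                            "resources": {"limits": {"nvidia.com/gpu": gpus_per_replica}},
                        }
                    ]
                }
            },
        }

    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "PyTorchJob",
        "metadata": {"name": name},
        "spec": {
            "pytorchReplicaSpecs": {
                "Master": replica(1),        # a single coordinating replica
                "Worker": replica(workers),  # the remaining training replicas
            }
        },
    }


# Placeholder values for illustration only.
job = make_pytorch_job("demo-train", "example.com/trainer:latest", workers=3)
print(json.dumps(job, indent=2))
```

The printed JSON could be saved to a file and submitted to a cluster that has the operator installed. Building the manifest in code rather than by hand keeps the Master and Worker specs in sync.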

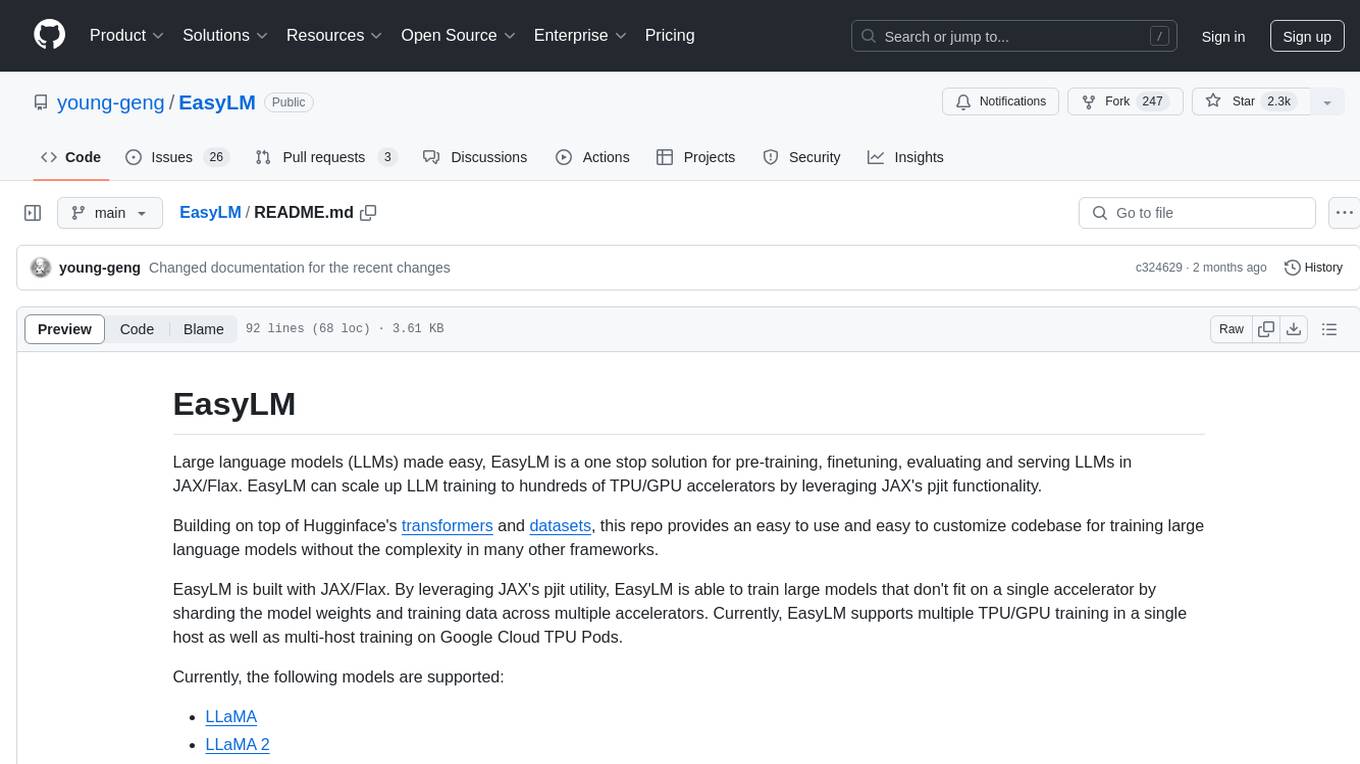

EasyLM

EasyLM is a one-stop solution for pre-training, fine-tuning, evaluating, and serving large language models in JAX/Flax. It simplifies the process by leveraging JAX's pjit functionality to scale up training to multiple TPU/GPU accelerators. Built on top of Hugging Face's transformers and datasets, EasyLM offers an easy-to-use and customizable codebase for training large language models without the complexity found in other frameworks. It supports sharding model weights and training data across multiple accelerators, enabling multi-TPU/GPU training on a single host or across multiple hosts on Google Cloud TPU Pods. EasyLM currently supports models like LLaMA, LLaMA 2, and LLaMA 3.
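As a rough illustration of why sharding weights and data across accelerators matters, the sketch below works through the arithmetic in plain Python. It is not EasyLM code and does not use JAX. The function names are hypothetical, the model size (7 billion parameters at 2 bytes each) and device count are example numbers, and the estimate ignores gradients, optimizer state, and activations.

```python
def per_device_weight_bytes(num_params, bytes_per_param, num_devices):
    """Bytes of model weights each device holds under even sharding."""
    total = num_params * bytes_per_param
    # Ceiling division: an uneven split would be padded up on each shard.
    return -(-total // num_devices)


def per_device_batch(global_batch, num_devices):
    """Examples each device processes when the batch is split evenly."""
    if global_batch % num_devices:
        raise ValueError("global batch must divide evenly across devices")
    return global_batch // num_devices


# Example numbers only: 7e9 parameters, 2 bytes per parameter, 8 devices.
weights_unsharded = per_device_weight_bytes(7_000_000_000, 2, 1)
weights_sharded = per_device_weight_bytes(7_000_000_000, 2, 8)
batch_per_device = per_device_batch(256, 8)

print(f"unsharded weights: {weights_unsharded / 1e9:.2f} GB per device")
print(f"sharded weights:   {weights_sharded / 1e9:.2f} GB per device")
print(f"batch per device:  {batch_per_device}")
```

With these example numbers, the weights alone drop from 14 GB on one device to 1.75 GB per device across eight, which is the basic reason sharding lets larger models fit.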

felafax

Felafax is a framework designed to tune LLaMa 3.1 on Google Cloud TPUs for cost efficiency and seamless scaling. It provides a Jupyter notebook for continued training and fine-tuning of open source LLMs using the XLA runtime. The goal of Felafax is to simplify running AI workloads on non-NVIDIA hardware such as TPUs, AWS Trainium, AMD GPUs, and Intel GPUs. It supports various models, including a LLaMa-3.1 JAX implementation, LLaMa-3/3.1 on PyTorch XLA, and Gemma2 models optimized for Cloud TPUs with full-precision training support.

Fast-LLM

Fast-LLM is an open-source library designed for training large language models with an emphasis on speed, scalability, and flexibility. Built on PyTorch and Triton, it offers optimized kernels and reduced overhead and memory usage, making it suitable for training models of all sizes. The library supports distributed training across multiple GPUs and nodes, offers flexibility in model architectures, and is easy to use with pre-built Docker images and simple configuration. Fast-LLM is licensed under Apache 2.0, developed transparently on GitHub, and encourages contributions and collaboration from the community.

cosmos-rl

Cosmos-RL is a flexible and scalable Reinforcement Learning framework specialized for Physical AI applications. It provides a toolchain for large-scale RL training workloads, with features like parallelism, asynchronous processing, low-precision training support, and a single-controller architecture. The system architecture supports Tensor Parallelism, Sequence Parallelism, Context Parallelism, FSDP Parallelism, and Pipeline Parallelism. It also uses a messaging system to coordinate policy and rollout replicas, along with dynamic NCCL Process Groups for fault-tolerant and elastic large-scale RL training.

torchtitan

Torchtitan is a PyTorch-native platform designed for rapid experimentation and large-scale training of generative AI models. It provides a flexible foundation for developers to build upon, with extension points for custom additions. The tool showcases PyTorch's latest distributed training features and supports pretraining Llama 3.1 LLMs of various sizes. Key features include multi-dimensional parallelism, FSDP2 with per-parameter sharding, Tensor Parallel, Pipeline Parallel, and more. Users can contribute through the experiments folder or by following the core contribution guidelines. Installation can be done from source, nightly builds, or stable releases, and the project gives guidance on starting a training run and on multi-node training. Citation information is available for referencing the tool in academic work, and the source code is under a BSD 3-Clause license.
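"Multi-dimensional parallelism" means the available GPUs are arranged in a logical grid, with one axis per parallelism type. The sketch below shows that idea in plain Python: it assigns each global rank a coordinate along pipeline, data, and tensor axes, then lists which ranks share a communication group along one axis. This is not torchtitan's API. The names `build_mesh` and `groups_along`, the axis ordering, and the 2 x 2 x 2 layout are assumptions made for illustration.

```python
from itertools import product


def build_mesh(pp, dp, tp):
    """Map each global rank to (pp, dp, tp) coordinates, tp varying fastest."""
    coords = product(range(pp), range(dp), range(tp))
    return {rank: coord for rank, coord in enumerate(coords)}


def groups_along(mesh, axis):
    """Group ranks that differ only along `axis` (0=pp, 1=dp, 2=tp)."""
    groups = {}
    for rank, coord in mesh.items():
        # Ranks with identical coordinates on the other axes form one group.
        key = coord[:axis] + coord[axis + 1:]
        groups.setdefault(key, []).append(rank)
    return list(groups.values())


# Example layout: 8 ranks split as 2 pipeline stages x 2 data replicas x 2 tensor shards.
mesh = build_mesh(pp=2, dp=2, tp=2)
tp_groups = groups_along(mesh, axis=2)
dp_groups = groups_along(mesh, axis=1)

print("tensor-parallel groups:", tp_groups)
print("data-parallel groups:  ", dp_groups)
```

Placing the tensor-parallel axis innermost keeps each tensor-parallel group on consecutive ranks, a common choice because those ranks communicate most often and are usually on the same node.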

20 - OpenAI GPTs

R&D Process Scale-up Advisor

Optimizes production processes for efficient large-scale operations.

CIM Analyst

In-depth CIM analysis with a structured rating scale, offering detailed business evaluations.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements, ready to help you build and scale your ML projects.

Business Angel - Startup and Insights PRO

Business Angel provides expert startup guidance: funding, growth hacks, and pitch advice. Navigate the startup ecosystem, from seed to scale. Essential for entrepreneurs aiming for success. Master your strategy and launch with confidence. Your startup journey begins here!

Sysadmin

I help you with all your sysadmin tasks, from setting up your server to scaling your existing one. I can help you understand the long list of log files and give you solutions to the problems.

Seabiscuit Launch Lander

Startup Strong Within 180 Days: Tailored advice for launching, promoting, and scaling businesses of all types. It covers all stages from pre-launch to post-launch and develops strategies including market research, branding, promotional tactics, and operational planning unique to your business. (v1.8)

Startup Advisor

Startup advisor guiding founders through detailed idea evaluation, product-market-fit, business model, GTM, and scaling.