PDFMathTranslate

PDF scientific paper translation with preserved formats - 基于 AI 完整保留排版的 PDF 文档全文双语翻译,支持 Google/DeepL/Ollama/OpenAI 等服务,提供 CLI/GUI/Docker/Zotero

Stars: 19243

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

README:

PDF scientific paper translation and bilingual comparison.

- 📊 Preserve formulas, charts, table of contents, and annotations (preview).

- 🌐 Support multiple languages, and diverse translation services.

- 🤖 Provides commandline tool, interactive user interface, and Docker

Feel free to provide feedback in GitHub Issues or Telegram Group.

For details on how to contribute, please consult the Contribution Guide.

- [Mar. 3, 2025] Experimental support for the new backend BabelDOC WebUI added as an experimental option (by @awwaawwa)

- [Feb. 22 2025] Better release CI and well-packaged windows-amd64 exe (by @awwaawwa)

- [Dec. 24 2024] The translator now supports local models on Xinference (by @imClumsyPanda)

- [Dec. 19 2024] Non-PDF/A documents are now supported using

-cp(by @reycn) - [Dec. 13 2024] Additional support for backend by (by @YadominJinta)

- [Dec. 10 2024] The translator now supports OpenAI models on Azure (by @yidasanqian)

You can try our application out using either of the following demos:

- Public free service online without installation (recommended).

- Immersive Translate - BabelDOC 1000 free pages per month. (recommended)

- Demo hosted on HuggingFace

- Demo hosted on ModelScope without installation.

Note that the computing resources of the demo are limited, so please avoid abusing them.

For different use cases, we provide distinct methods to use our program:

1. UV install

-

Python installed (3.10 <= version <= 3.12)

-

Install our package:

pip install uv uv tool install --python 3.12 pdf2zh

-

Execute translation, files generated in current working directory:

pdf2zh document.pdf

2. Windows exe

-

Download pdf2zh-version-win64.zip from release page

-

Unzip and double-click

pdf2zh.exeto run.

3. Graphic user interface

1. Python installed (3.10 <= version <= 3.12) 2. Install our package:pip install pdf2zh-

Start using in browser:

pdf2zh -i

-

If your browswer has not been started automatically, goto

http://localhost:7860/

See documentation for GUI for more details.

4. Docker

-

Pull and run:

docker pull byaidu/pdf2zh docker run -d -p 7860:7860 byaidu/pdf2zh

-

Open in browser:

http://localhost:7860/

For docker deployment on cloud service:

5. Zotero Plugin

See Zotero PDF2zh for more details.

6. Commandline

-

Python installed (3.10 <= version <= 3.12)

-

Install our package:

pip install pdf2zh

-

Execute translation, files generated in current working directory:

pdf2zh document.pdf

[!TIP]

If you're using Windows and cannot open the file after downloading, please install vc_redist.x64.exe and try again.

If you cannot access Docker Hub, please try the image on GitHub Container Registry.

docker pull ghcr.io/byaidu/pdfmathtranslate docker run -d -p 7860:7860 ghcr.io/byaidu/pdfmathtranslate

The present program needs an AI model(wybxc/DocLayout-YOLO-DocStructBench-onnx) before working and some users are not able to download due to network issues. If you have a problem with downloading this model, we provide a workaround using the following environment variable:

set HF_ENDPOINT=https://hf-mirror.comFor PowerShell user:

$env:HF_ENDPOINT = https://hf-mirror.comIf the solution does not work to you / you encountered other issues, please refer to frequently asked questions.

Execute the translation command in the command line to generate the translated document example-mono.pdf and the bilingual document example-dual.pdf in the current working directory. Use Google as the default translation service. More support translation services can find HERE.

In the following table, we list all advanced options for reference:

| Option | Function | Example |

|---|---|---|

| files | Local files | pdf2zh ~/local.pdf |

| links | Online files | pdf2zh http://arxiv.org/paper.pdf |

-i |

Enter GUI | pdf2zh -i |

-p |

Partial document translation | pdf2zh example.pdf -p 1 |

-li |

Source language | pdf2zh example.pdf -li en |

-lo |

Target language | pdf2zh example.pdf -lo zh |

-s |

Translation service | pdf2zh example.pdf -s deepl |

-t |

Multi-threads | pdf2zh example.pdf -t 1 |

-o |

Output dir | pdf2zh example.pdf -o output |

-f, -c

|

Exceptions | pdf2zh example.pdf -f "(MS.*)" |

-cp |

Compatibility Mode | pdf2zh example.pdf --compatible |

--skip-subset-fonts |

Skip font subset | pdf2zh example.pdf --skip-subset-fonts |

--ignore-cache |

Ignore translate cache | pdf2zh example.pdf --ignore-cache |

--share |

Public link | pdf2zh -i --share |

--authorized |

Authorization | pdf2zh -i --authorized users.txt [auth.html] |

--prompt |

Custom Prompt | pdf2zh --prompt [prompt.txt] |

--onnx |

[Use Custom DocLayout-YOLO ONNX model] | pdf2zh --onnx [onnx/model/path] |

--serverport |

[Use Custom WebUI port] | pdf2zh --serverport 7860 |

--dir |

[batch translate] | pdf2zh --dir /path/to/translate/ |

--config |

configuration file | pdf2zh --config /path/to/config/config.json |

--serverport |

[custom gradio server port] | pdf2zh --serverport 7860 |

--babeldoc |

Use Experimental backend BabelDOC to translate |

pdf2zh --babeldoc -s openai example.pdf |

For detailed explanations, please refer to our document about Advanced Usage for a full list of each option.

The current pdf2zh API is temporarily deprecated. The API will be provided again after pdf2zh 2.0 is released. For users who need programmatic access, please use the babeldoc.high_level.async_translate function of BabelDOC.

This API being temporarily deprecated means: the relevant code will not be removed for now, but no technical support will be provided, and no bug fixes will be made.

-

[ ] Parse layout with DocLayNet based models, PaddleX, PaperMage, SAM2

-

[ ] Fix page rotation, table of contents, format of lists

-

[ ] Fix pixel formula in old papers

-

[ ] Async retry except KeyboardInterrupt

-

[ ] Knuth–Plass algorithm for western languages

-

[ ] Support non-PDF/A files

-

Immersive Translation sponsors monthly Pro membership redemption codes for active contributors to this project, see details at: CONTRIBUTOR_REWARD.md

-

New backend: BabelDOC

-

Document merging: PyMuPDF

-

Document parsing: Pdfminer.six

-

Document extraction: MinerU

-

Document Preview: Gradio PDF

-

Multi-threaded translation: MathTranslate

-

Layout parsing: DocLayout-YOLO

-

Document standard: PDF Explained, PDF Cheat Sheets

-

Multilingual Font: Go Noto Universal

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for PDFMathTranslate

Similar Open Source Tools

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

OSA

OSA (Open-Source-Advisor) is a tool designed to improve the quality of scientific open source projects by automating the generation of README files, documentation, CI/CD scripts, and providing advice and recommendations for repositories. It supports various LLMs accessible via API, local servers, or osa_bot hosted on ITMO servers. OSA is currently under development with features like README file generation, documentation generation, automatic implementation of changes, LLM integration, and GitHub Action Workflow generation. It requires Python 3.10 or higher and tokens for GitHub/GitLab/Gitverse and LLM API key. Users can install OSA using PyPi or build from source, and run it using CLI commands or Docker containers.

openlrc

Open-Lyrics is a Python library that transcribes voice files using faster-whisper and translates/polishes the resulting text into `.lrc` files in the desired language using LLM, e.g. OpenAI-GPT, Anthropic-Claude. It offers well preprocessed audio to reduce hallucination and context-aware translation to improve translation quality. Users can install the library from PyPI or GitHub and follow the installation steps to set up the environment. The tool supports GUI usage and provides Python code examples for transcription and translation tasks. It also includes features like utilizing context and glossary for translation enhancement, pricing information for different models, and a list of todo tasks for future improvements.

rpaframework

RPA Framework is an open-source collection of libraries and tools for Robotic Process Automation (RPA), designed to be used with Robot Framework and Python. It offers well-documented core libraries for Software Robot Developers, optimized for Robocorp Control Room and Developer Tools, and accepts external contributions. The project includes various libraries for tasks like archiving, browser automation, date/time manipulations, cloud services integration, encryption operations, database interactions, desktop automation, document processing, email operations, Excel manipulation, file system operations, FTP interactions, web API interactions, image manipulation, AI services, and more. The development of the repository is Python-based and requires Python version 3.8+, with tooling based on poetry and invoke for compiling, building, and running the package. The project is licensed under the Apache License 2.0.

docutranslate

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it ideal for businesses and individuals needing quick and accurate translations. The tool uses advanced algorithms to ensure high-quality translations while maintaining the original document's formatting. With its user-friendly interface, Docutranslate simplifies the translation process and saves time for users. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate is the go-to solution for all your document translation needs.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

skylos

Skylos is a privacy-first SAST tool for Python, TypeScript, and Go that bridges the gap between traditional static analysis and AI agents. It detects dead code, security vulnerabilities (SQLi, SSRF, Secrets), and code quality issues with high precision. Skylos uses a hybrid engine (AST + optional Local/Cloud LLM) to eliminate false positives, verify via runtime, find logic bugs, and provide context-aware audits. It offers automated fixes, end-to-end remediation, and 100% local privacy. The tool supports taint analysis, secrets detection, vulnerability checks, dead code detection and cleanup, agentic AI and hybrid analysis, codebase optimization, operational governance, and runtime verification.

paperbanana

PaperBanana is an automated academic illustration tool designed for AI scientists. It implements an agentic framework for generating publication-quality academic diagrams and statistical plots from text descriptions. The tool utilizes a two-phase multi-agent pipeline with iterative refinement, Gemini-based VLM planning, and image generation. It offers a CLI, Python API, and MCP server for IDE integration, along with Claude Code skills for generating diagrams, plots, and evaluating diagrams. PaperBanana is not affiliated with or endorsed by the original authors or Google Research, and it may differ from the original system described in the paper.

opencode-telegram-bot

OpenCode Telegram Bot is a secure Telegram client for OpenCode CLI that allows users to run AI coding tasks, monitor progress, switch models, and manage sessions from their phone. The bot communicates with the local OpenCode server and the Telegram Bot API only, ensuring security. It supports multiple languages and provides features like remote coding, session management, live status updates, model switching, agent modes, voice prompts, context control, input flow control, configurable reply formatting, strict user ID whitelist for security, and UI localization. Users can configure environment variables, voice and audio transcription, model settings, and more. The bot enforces security measures and supports various platforms like macOS, Windows, and Linux.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

MHA2MLA

This repository contains the code for the paper 'Towards Economical Inference: Enabling DeepSeek's Multi-Head Latent Attention in Any Transformer-based LLMs'. It provides tools for fine-tuning and evaluating Llama models, converting models between different frameworks, processing datasets, and performing specific model training tasks like Partial-RoPE Fine-Tuning and Multiple-Head Latent Attention Fine-Tuning. The repository also includes commands for model evaluation using Lighteval and LongBench, along with necessary environment setup instructions.

agentops

AgentOps is a toolkit for evaluating and developing robust and reliable AI agents. It provides benchmarks, observability, and replay analytics to help developers build better agents. AgentOps is open beta and can be signed up for here. Key features of AgentOps include: - Session replays in 3 lines of code: Initialize the AgentOps client and automatically get analytics on every LLM call. - Time travel debugging: (coming soon!) - Agent Arena: (coming soon!) - Callback handlers: AgentOps works seamlessly with applications built using Langchain and LlamaIndex.

atlas-mcp-server

ATLAS (Adaptive Task & Logic Automation System) is a high-performance Model Context Protocol server designed for LLMs to manage complex task hierarchies. Built with TypeScript, it features ACID-compliant storage, efficient task tracking, and intelligent template management. ATLAS provides LLM Agents task management through a clean, flexible tool interface. The server implements the Model Context Protocol (MCP) for standardized communication between LLMs and external systems, offering hierarchical task organization, task state management, smart templates, enterprise features, and performance optimization.

libllm

libLLM is an open-source project designed for efficient inference of large language models (LLM) on personal computers and mobile devices. It is optimized to run smoothly on common devices, written in C++14 without external dependencies, and supports CUDA for accelerated inference. Users can build the tool for CPU only or with CUDA support, and run libLLM from the command line. Additionally, there are API examples available for Python and the tool can export Huggingface models.

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It extends its support to a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently undergoing active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our **_Roadmap_** for more details. ## Key Features * High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, Continuous batching, and more. * Tensor Parallelism: Utilizes tensor parallelism for efficient model execution. * OpenAI-compatible API: An efficient golang rest api server that compatible with OpenAI. * Huggingface models: Seamless integration with most popular HF models, supporting safetensors. * Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models. * Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.

ai-coders-context

The @ai-coders/context repository provides the Ultimate MCP for AI Agent Orchestration, Context Engineering, and Spec-Driven Development. It simplifies context engineering for AI by offering a universal process called PREVC, which consists of Planning, Review, Execution, Validation, and Confirmation steps. The tool aims to address the problem of context fragmentation by introducing a single `.context/` directory that works universally across different tools. It enables users to create structured documentation, generate agent playbooks, manage workflows, provide on-demand expertise, and sync across various AI tools. The tool follows a structured, spec-driven development approach to improve AI output quality and ensure reproducible results across projects.

For similar tasks

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

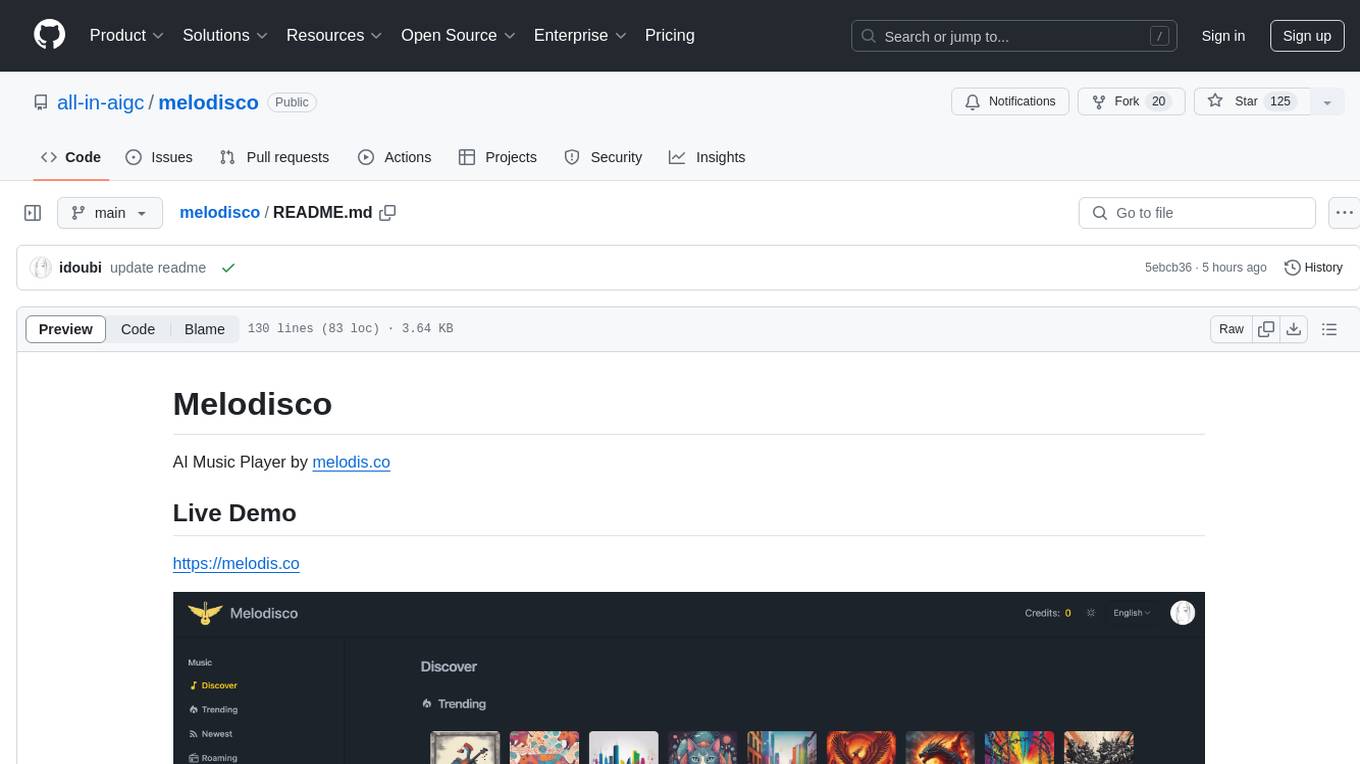

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.

grps_trtllm

The grps-trtllm repository is a C++ implementation of a high-performance OpenAI LLM service, combining GRPS and TensorRT-LLM. It supports functionalities like Chat, Ai-agent, and Multi-modal. The repository offers advantages over triton-trtllm, including a complete LLM service implemented in pure C++, integrated tokenizer supporting huggingface and sentencepiece, custom HTTP functionality for OpenAI interface, support for different LLM prompt styles and result parsing styles, integration with tensorrt backend and opencv library for multi-modal LLM, and stable performance improvement compared to triton-trtllm.

discord-ai-bot

Discord AI Bot is a chatbot tool designed to interact with Ollama and AUTOMATIC1111 Stable Diffusion on Discord. The bot allows users to set up and configure a Discord bot to communicate with the mentioned AI models. Users can follow step-by-step instructions to install Node.js, Ollama, and the required dependencies, create a Discord bot, and interact with the bot by mentioning it in messages. Additionally, the tool provides set-up instructions for Docker users to easily deploy the bot using Docker containers. Overall, Discord AI Bot simplifies the process of integrating AI chatbots into Discord servers for interactive communication.

For similar jobs

SLR-FC

This repository provides a comprehensive collection of AI tools and resources to enhance literature reviews. It includes a curated list of AI tools for various tasks, such as identifying research gaps, discovering relevant papers, visualizing paper content, and summarizing text. Additionally, the repository offers materials on generative AI, effective prompts, copywriting, image creation, and showcases of AI capabilities. By leveraging these tools and resources, researchers can streamline their literature review process, gain deeper insights from scholarly literature, and improve the quality of their research outputs.

paper-ai

Paper-ai is a tool that helps you write papers using artificial intelligence. It provides features such as AI writing assistance, reference searching, and editing and formatting tools. With Paper-ai, you can quickly and easily create high-quality papers.

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and follows a process of embedding docs and queries, searching for top passages, creating summaries, scoring and selecting relevant summaries, putting summaries into prompt, and generating answers. Users can customize prompts and use various models for embeddings and LLMs. The tool can be used asynchronously and supports adding documents from paths, files, or URLs.

ChatData

ChatData is a robust chat-with-documents application designed to extract information and provide answers by querying the MyScale free knowledge base or uploaded documents. It leverages the Retrieval Augmented Generation (RAG) framework, millions of Wikipedia pages, and arXiv papers. Features include self-querying retriever, VectorSQL, session management, and building a personalized knowledge base. Users can effortlessly navigate vast data, explore academic papers, and research documents. ChatData empowers researchers, students, and knowledge enthusiasts to unlock the true potential of information retrieval.

noScribe

noScribe is an AI-based software designed for automated audio transcription, specifically tailored for transcribing interviews for qualitative social research or journalistic purposes. It is a free and open-source tool that runs locally on the user's computer, ensuring data privacy. The software can differentiate between speakers and supports transcription in 99 languages. It includes a user-friendly editor for reviewing and correcting transcripts. Developed by Kai Dröge, a PhD in sociology with a background in computer science, noScribe aims to streamline the transcription process and enhance the efficiency of qualitative analysis.

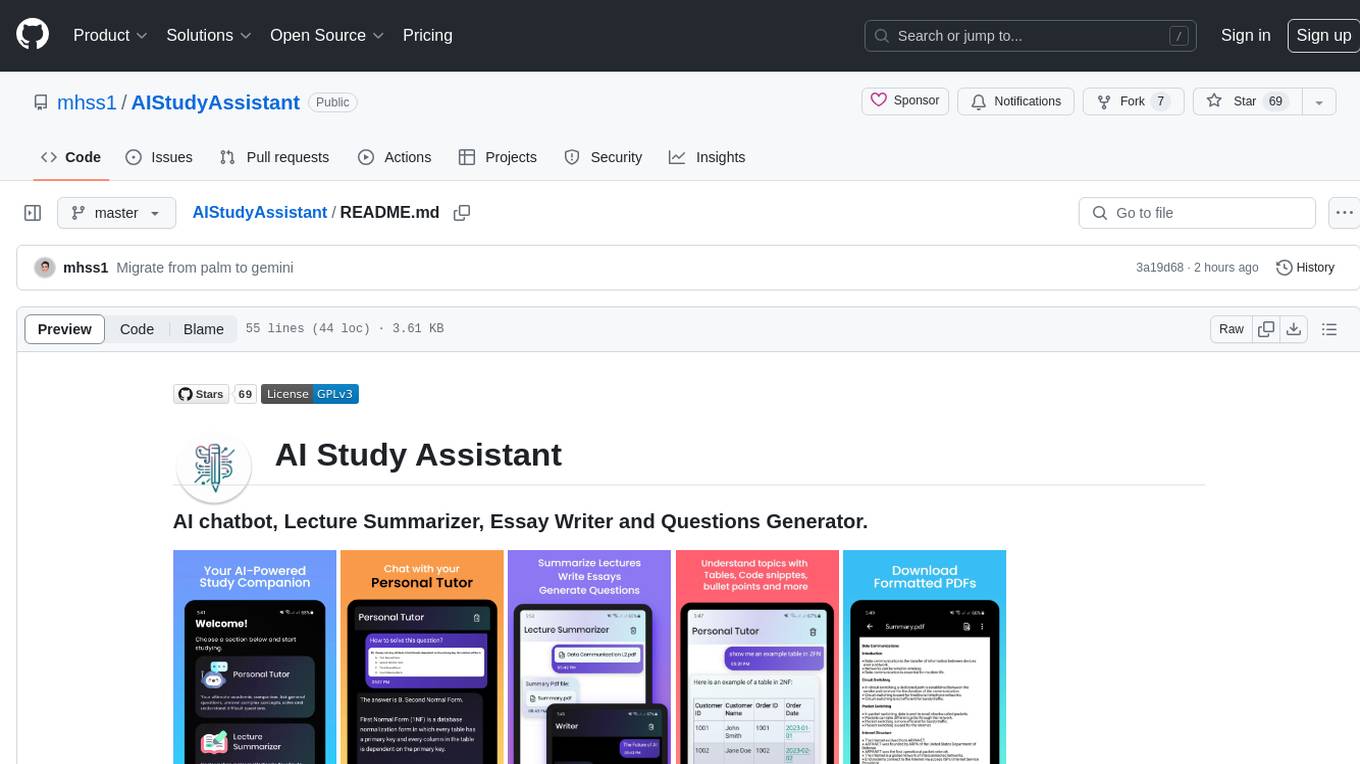

AIStudyAssistant

AI Study Assistant is an app designed to enhance learning experience and boost academic performance. It serves as a personal tutor, lecture summarizer, writer, and question generator powered by Google PaLM 2. Features include interacting with an AI chatbot, summarizing lectures, generating essays, and creating practice questions. The app is built using 100% Kotlin, Jetpack Compose, Clean Architecture, and MVVM design pattern, with technologies like Ktor, Room DB, Hilt, and Kotlin coroutines. AI Study Assistant aims to provide comprehensive AI-powered assistance for students in various academic tasks.

data-to-paper

Data-to-paper is an AI-driven framework designed to guide users through the process of conducting end-to-end scientific research, starting from raw data to the creation of comprehensive and human-verifiable research papers. The framework leverages a combination of LLM and rule-based agents to assist in tasks such as hypothesis generation, literature search, data analysis, result interpretation, and paper writing. It aims to accelerate research while maintaining key scientific values like transparency, traceability, and verifiability. The framework is field-agnostic, supports both open-goal and fixed-goal research, creates data-chained manuscripts, involves human-in-the-loop interaction, and allows for transparent replay of the research process.

k2

K2 (GeoLLaMA) is a large language model for geoscience, trained on geoscience literature and fine-tuned with knowledge-intensive instruction data. It outperforms baseline models on objective and subjective tasks. The repository provides K2 weights, core data of GeoSignal, GeoBench benchmark, and code for further pretraining and instruction tuning. The model is available on Hugging Face for use. The project aims to create larger and more powerful geoscience language models in the future.