paper-qa

LLM Chain for answering questions from documents with citations

Stars: 3616

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and follows a process of embedding docs and queries, searching for top passages, creating summaries, scoring and selecting relevant summaries, putting summaries into prompt, and generating answers. Users can customize prompts and use various models for embeddings and LLMs. The tool can be used asynchronously and supports adding documents from paths, files, or URLs.

README:

This is a minimal package for doing question and answering from PDFs or text files (which can be raw HTML). It strives to give very good answers, with no hallucinations, by grounding responses with in-text citations.

By default, it uses OpenAI Embeddings with a simple numpy vector DB to embed and search documents. However, via langchain you can use open-source models or embeddings (see details below).

paper-qa uses the process shown below:

- embed docs into vectors

- embed query into vector

- search for top k passages in docs

- create summary of each passage relevant to query

- score and select only relevant summaries

- put summaries into prompt

- generate answer with prompt
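The first three steps of the list above (embed docs, embed query, search for top k passages) can be sketched in a few lines. This is only an illustration under loud assumptions: `embed` is a toy bag-of-words stand-in for a real embedding model, and `embed`, `cosine`, and `top_k_passages` are hypothetical names, not part of paper-qa. The summarizing, scoring, and answering steps need an LLM and are left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_passages(query: str, passages: list[str], k: int) -> list[str]:
    """Embed the query and each passage, then return the k most similar passages."""
    q = embed(query)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:k]


# Made-up passages, used only to exercise the search step.
passages = [
    "Carbon nanotubes can be produced by the electric-arc technique.",
    "Bispecific antibodies pose purification challenges.",
    "Density gradient ultracentrifugation separates semiconducting nanotubes.",
]
best = top_k_passages("How are carbon nanotubes produced?", passages, k=2)
```

A real embedding model would capture meaning rather than word overlap, but the ranking logic stays the same: score every passage against the query vector and keep the best k.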

See our paper for more details:

    @article{lala2023paperqa,
      title={PaperQA: Retrieval-Augmented Generative Agent for Scientific Research},
      author={L{\'a}la, Jakub and O'Donoghue, Odhran and Shtedritski, Aleksandar and Cox, Sam and Rodriques, Samuel G and White, Andrew D},
      journal={arXiv preprint arXiv:2312.07559},
      year={2023}
    }

Question: How can carbon nanotubes be manufactured at a large scale?

Carbon nanotubes can be manufactured at a large scale using the electric-arc technique (Journet6644). This technique involves creating an arc between two electrodes in a reactor under a helium atmosphere and using a mixture of a metallic catalyst and graphite powder in the anode. Yields of 80% of entangled carbon filaments can be achieved, which consist of smaller aligned SWNTs self-organized into bundle-like crystallites (Journet6644). Additionally, carbon nanotubes can be synthesized and self-assembled using various methods such as DNA-mediated self-assembly, nanoparticle-assisted alignment, chemical self-assembly, and electro-addressed functionalization (Tulevski2007). These methods have been used to fabricate large-area nanostructured arrays, high-density integration, and freestanding networks (Tulevski2007). 98% semiconducting CNT network solution can also be used and is separated from metallic nanotubes using a density gradient ultracentrifugation approach (Chen2014). The substrate is incubated in the solution and then rinsed with deionized water and dried with N2 air gun, leaving a uniform carbon network (Chen2014).

Journet6644: Journet, Catherine, et al. "Large-scale production of single-walled carbon nanotubes by the electric-arc technique." nature 388.6644 (1997): 756-758.

Tulevski2007: Tulevski, George S., et al. "Chemically assisted directed assembly of carbon nanotubes for the fabrication of large-scale device arrays." Journal of the American Chemical Society 129.39 (2007): 11964-11968.

Chen2014: Chen, Haitian, et al. "Large-scale complementary macroelectronics using hybrid integration of carbon nanotubes and IGZO thin-film transistors." Nature communications 5.1 (2014): 4097.

Version 4 removed langchain from the package because it no longer supports pickling. This also simplifies the package a bit - especially prompts. Langchain can still be used, but it's not required. You can use any LLMs from langchain, but you will need to use the LangchainLLMModel class to wrap the model.

Install with pip:

    pip install paper-qa

You need to have an LLM to use paper-qa. You can use OpenAI, llama.cpp (via Server), or any LLMs from langchain. OpenAI just works, as long as you have set your OpenAI API key (export OPENAI_API_KEY=sk-...). See instructions below for other LLMs.

To use paper-qa, you need to have a list of paths/files/urls (valid extensions include: .pdf, .txt). You can then use the Docs class to add the documents and then query them. Docs will try to guess citation formats from the content of the files, but you can also provide them yourself.

    from paperqa import Docs

    my_docs = ...  # get a list of paths

    docs = Docs()
    for d in my_docs:
        docs.add(d)

    answer = docs.query("What manufacturing challenges are unique to bispecific antibodies?")
    print(answer.formatted_answer)

The answer object has the following attributes: formatted_answer, answer (answer alone), question, and context (the summaries of passages found for answer).

paper-qa is written to be used asynchronously. The synchronous API is just a wrapper around the async. Here are the methods and their async equivalents:

| Sync | Async |
|---|---|
| Docs.add | Docs.aadd |
| Docs.add_file | Docs.aadd_file |
| Docs.add_url | Docs.aadd_url |
| Docs.get_evidence | Docs.aget_evidence |
| Docs.query | Docs.aquery |

The synchronous versions just call the async versions in an event loop. Most modern Python environments support async natively (including Jupyter notebooks!). So you can do this in a Jupyter Notebook:

    from paperqa import Docs

    my_docs = ...  # get a list of paths

    docs = Docs()
    for d in my_docs:
        await docs.aadd(d)

    answer = await docs.aquery("What manufacturing challenges are unique to bispecific antibodies?")

add will add from paths. You can also use add_file (expects a file object) or add_url to work with other sources.
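The "sync API as a wrapper around async" idea described above can be sketched with the standard library alone. `TinyDocs` is a hypothetical class invented for this illustration, and using `asyncio.run` is an assumption about one way to write such a wrapper; paper-qa's actual implementation may differ.

```python
import asyncio


class TinyDocs:
    """Hypothetical stand-in for a class with paired sync/async methods."""

    def __init__(self) -> None:
        self.names: list[str] = []

    async def aadd(self, name: str) -> str:
        # Real work (parsing, embedding) would be awaited here.
        await asyncio.sleep(0)
        self.names.append(name)
        return name

    def add(self, name: str) -> str:
        # The sync method only drives the async one to completion.
        return asyncio.run(self.aadd(name))


docs = TinyDocs()
added = docs.add("paper.pdf")
```

Note that `asyncio.run` raises an error when an event loop is already running, which is one reason to call the `a`-prefixed methods with `await` inside a notebook.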

By default, it uses a hybrid of gpt-3.5-turbo and gpt-4-turbo. You can adjust this:

    docs = Docs(llm='gpt-3.5-turbo')

or you can use any other model available in langchain:

    from paperqa import Docs
    from langchain_community.chat_models import ChatAnthropic

    docs = Docs(llm="langchain", client=ChatAnthropic())

Note we split the model into the wrapper and client, which is ChatAnthropic here. This is because client stores the non-pickleable part and langchain LLMs are only sometimes serializable/pickleable. The paper-qa Docs must always be serializable. Thus, we split the model into two parts.
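The wrapper/client split can be illustrated with a small, self-contained sketch. `Wrapper` is a hypothetical class written for this example, and dropping the client in `__getstate__` is an assumption about how such a split could be done, not a description of paper-qa's internals. A `threading.Lock` stands in for a network client because locks cannot be pickled.

```python
import pickle
import threading


class Wrapper:
    """Hypothetical model wrapper: picklable settings plus a non-picklable client."""

    def __init__(self, model_name: str, client=None) -> None:
        self.model_name = model_name
        self.client = client

    def __getstate__(self) -> dict:
        # Copy the instance dict and drop the client before pickling.
        state = self.__dict__.copy()
        state["client"] = None
        return state

    def set_client(self, client) -> None:
        # The client must be re-attached after unpickling.
        self.client = client


wrapper = Wrapper("my-model", client=threading.Lock())
restored = pickle.loads(pickle.dumps(wrapper))
client_after_load = restored.client  # None until set_client is called
restored.set_client(threading.Lock())
```

The settings survive the round trip while the client does not, which mirrors why the client has to be set again after loading.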

    import pickle

    docs = Docs(llm="langchain", client=ChatAnthropic())
    model_str = pickle.dumps(docs)
    docs = pickle.loads(model_str)
    # but you have to set the client after loading
    docs.set_client(ChatAnthropic())

You can use llama.cpp as the LLM. Note that you should be using relatively large models, because paper-qa requires following a lot of instructions. You won't get good performance with 7B models.

The easiest way to get set-up is to download a llama file and execute it with -cb -np 4 -a my-llm-model --embedding which will enable continuous batching and embeddings.

    from paperqa import Docs, LlamaEmbeddingModel, OpenAILLMModel
    from openai import AsyncOpenAI

    # client for the llama.cpp server started above
    local_client = AsyncOpenAI(
        base_url="http://localhost:8080/v1",
        api_key="sk-no-key-required",
    )

    docs = Docs(
        client=local_client,
        embedding_model=LlamaEmbeddingModel(),
        llm_model=OpenAILLMModel(
            config=dict(model="my-llm-model", temperature=0.1, frequency_penalty=1.5, max_tokens=512)
        ),
    )

You can use langchain embedding models, or the SentenceTransformer models. For example:

    from paperqa import Docs, SentenceTransformerEmbeddingModel, OpenAILLMModel
    from openai import AsyncOpenAI

    # client for the llama.cpp server started above
    local_client = AsyncOpenAI(
        base_url="http://localhost:8080/v1",
        api_key="sk-no-key-required",
    )

    docs = Docs(
        client=local_client,
        embedding_model=SentenceTransformerEmbeddingModel(),
        llm_model=OpenAILLMModel(
            config=dict(model="my-llm-model", temperature=0.1, frequency_penalty=1.5, max_tokens=512)
        ),
    )

Just like in the above examples, we have to split the Langchain model into a client and model to keep Docs serializable.

    from paperqa import Docs, LangchainEmbeddingModel
    from langchain_openai import OpenAIEmbeddings

    docs = Docs(embedding_model=LangchainEmbeddingModel(), embedding_client=OpenAIEmbeddings())

You can adjust the number of sources (passages of text) to reduce token usage or add more context. k refers to the top k most relevant and diverse (possibly from different sources) passages. Each passage is sent to the LLM to summarize, or determine if it is irrelevant. After this step, a limit of max_sources is applied so that the final answer can fit into the LLM context window. Thus, k > max_sources and max_sources is the number of sources used in the final answer.
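The relationship between k and max_sources can be sketched as a two-stage filter. Everything here is hypothetical: the `Summary` record, the integer score scale, the rule that a score of 0 means "irrelevant", and the placeholder data are assumptions made for illustration, not paper-qa's data model.

```python
from dataclasses import dataclass


@dataclass
class Summary:
    """Hypothetical record: an LLM summary of one passage plus a relevance score."""
    source: str
    text: str
    score: int  # assumed scale; 0 means the LLM judged the passage irrelevant


def select_sources(summaries: list[Summary], max_sources: int) -> list[Summary]:
    """Drop irrelevant summaries, then keep the max_sources best-scored ones."""
    relevant = [s for s in summaries if s.score > 0]
    relevant.sort(key=lambda s: s.score, reverse=True)
    return relevant[:max_sources]


# k = 5 passages were retrieved and summarized; only 2 may reach the final prompt.
candidates = [
    Summary("SourceA", "summary a", 9),
    Summary("SourceB", "summary b", 0),
    Summary("SourceC", "summary c", 7),
    Summary("SourceD", "summary d", 3),
    Summary("SourceE", "summary e", 6),
]
chosen = select_sources(candidates, max_sources=2)
```

Raising k gives the scoring step more candidates to choose from at the cost of more LLM calls, while max_sources bounds how much text ends up in the final prompt.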

    docs.query("What manufacturing challenges are unique to bispecific antibodies?", k=5, max_sources=2)

You do not need to use papers -- you can use code or raw HTML. Note that this tool is focused on answering questions, so it won't do well at writing code. One note is that the tool cannot infer citations from code, so you will need to provide them yourself.

    import glob
    import os

    from paperqa import Docs

    # recursive=True lets ** match nested directories
    source_files = glob.glob('**/*.js', recursive=True)

    docs = Docs()
    for f in source_files:
        # this assumes the file names are unique in code
        name = os.path.basename(f)
        docs.add(f, citation='File ' + name, docname=name)

    answer = docs.query("Where is the search bar in the header defined?")
    print(answer)

You may want to cache parsed texts and embeddings in an external database or file. You can then build a Docs object from those directly:

    from paperqa import Docs, Doc, Text

    docs = Docs()
    for ... in my_docs:
        doc = Doc(docname=..., citation=..., dockey=...)
        texts = [Text(text=..., name=..., doc=doc) for ... in my_texts]
        docs.add_texts(texts, doc)

If you want to use an external vector store, you can also do that directly via langchain. For example, to use the FAISS vector store from langchain:

    from paperqa import LangchainVectorStore, Docs
    from langchain_community.vectorstores import FAISS
    from langchain_openai import OpenAIEmbeddings

    my_index = LangchainVectorStore(cls=FAISS, embedding_model=OpenAIEmbeddings())
    docs = Docs(texts_index=my_index)

Where to get papers is a really good question! It's probably best to just download PDFs of papers you think will help answer your question and start from there.

If you use Zotero to organize your personal bibliography,

you can use the paperqa.contrib.ZoteroDB to query papers from your library,

which relies on pyzotero.

Install pyzotero to use this feature:

    pip install pyzotero

First, note that paperqa parses the PDFs of papers to store in the database,

so all relevant papers should have PDFs stored inside your database.

You can get Zotero to automatically do this by highlighting the references

you wish to retrieve, right clicking, and selecting "Find Available PDFs".

You can also manually drag-and-drop PDFs onto each reference.

To download papers, you need to get an API key for your account.

- Get your library ID, and set it as the environment variable ZOTERO_USER_ID.

  - For personal libraries, this ID is given here at the part "Your userID for use in API calls is XXXXXX".

  - For group libraries, go to your group page https://www.zotero.org/groups/groupname, and hover over the settings link. The ID is the integer after /groups/. (h/t pyzotero!)

- Create a new API key here and set it as the environment variable ZOTERO_API_KEY.

  - The key will need read access to the library.
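Before connecting, it can help to confirm that both environment variables from the steps above are actually set. The helper below is a minimal sketch; `missing_zotero_vars` is a hypothetical name, not part of paperqa or pyzotero.

```python
import os


def missing_zotero_vars(env=None) -> list[str]:
    """Return the names of the required Zotero variables that are unset or empty."""
    env = os.environ if env is None else env
    required = ("ZOTERO_USER_ID", "ZOTERO_API_KEY")
    return [name for name in required if not env.get(name)]


missing = missing_zotero_vars()
if missing:
    print("Set these environment variables first:", ", ".join(missing))
```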

With this, we can download papers from our library and add them to paperqa:

    import paperqa
    from paperqa.contrib import ZoteroDB

    docs = paperqa.Docs()
    zotero = ZoteroDB(library_type="user")  # "group" if group library

    for item in zotero.iterate(limit=20):
        if item.num_pages > 30:
            continue  # skip long papers
        docs.add(item.pdf, docname=item.key)

which will download the first 20 papers in your Zotero database and add them to the Docs object.

We can also do specific queries of our Zotero library and iterate over the results:

    for item in zotero.iterate(
        q="large language models",
        qmode="everything",
        sort="date",
        direction="desc",
        limit=100,
    ):
        print("Adding", item.title)
        docs.add(item.pdf, docname=item.key)

You can read more about the search syntax by typing zotero.iterate? in IPython.

If you want to search for papers outside of your own collection, I've found an unrelated project called paper-scraper that looks like it might help. But beware, this project looks like it uses some scraping tools that may violate publisher's rights or be in a gray area of legality.

    import paperqa
    import paperscraper

    keyword_search = 'bispecific antibody manufacture'
    papers = paperscraper.search_papers(keyword_search)

    docs = paperqa.Docs()
    for path, data in papers.items():
        try:
            docs.add(path)
        except ValueError as e:
            # sometimes this happens if PDFs aren't downloaded or readable
            print('Could not read', path, e)

    answer = docs.query("What manufacturing challenges are unique to bispecific antibodies?")
    print(answer)

By default PyPDF is used since it's pure Python and easy to install. For faster PDF reading, paper-qa will detect and use PyMuPDF (fitz):

    pip install pymupdf

To execute a function on each chunk of LLM completions, you need to provide a function that when called with the name of the step produces a list of functions to execute on each chunk. For example, to get a typewriter view of the completions, you can do:

    def make_typewriter(step_name):
        def typewriter(chunk):
            print(chunk, end="")
        return [typewriter]  # <- note that this is a list of functions

    ...

    docs.query("What manufacturing challenges are unique to bispecific antibodies?", get_callbacks=make_typewriter)

In general, embeddings are cached when you pickle a Docs regardless of what vector store you use. See above for details on more explicit management of them.

You can customize any of the prompts, using the PromptCollection class. For example, if you want to change the prompt for the question, you can do:

    from paperqa import Docs, Answer, PromptCollection

    # parentheses join the adjacent string literals into one prompt
    my_qaprompt = (
        "Answer the question '{question}' "
        "Use the context below if helpful. "
        "You can cite the context using the key "
        "like (Example2012). "
        "If there is insufficient context, write a poem "
        "about how you cannot answer.\n\n"
        "Context: {context}\n\n"
    )
    prompts = PromptCollection(qa=my_qaprompt)
    docs = Docs(prompts=prompts)

Following the syntax above, you can also include prompts that are executed after the query and before the query. For example, you can use this to critique the answer.

How does this differ from LlamaIndex? It's not that different! This is similar to the tree response method in LlamaIndex. I have just included some prompts I find useful and readers that give page numbers/line numbers, and I am focused on one task: answering technical questions with cited sources.

There has been some great work on retrievers in langchain and you could say this is an example of a retriever.

The Docs class can be pickled and unpickled. This is useful if you want to save the embeddings of the documents and then load them later.

    import pickle

    # save
    with open("my_docs.pkl", "wb") as f:
        pickle.dump(docs, f)

    # load
    with open("my_docs.pkl", "rb") as f:
        docs = pickle.load(f)

    docs.set_client()  # defaults to OpenAI

Alternative AI tools for paper-qa

Similar Open Source Tools

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and includes a process of embedding docs, queries, searching for top passages, creating summaries, using an LLM to re-score and select relevant summaries, putting summaries into prompt, and generating answers. The tool can be used to answer specific questions related to scientific research by leveraging citations and relevant passages from documents.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data using large language models to generate Python code. It can utilize OpenAI's chatGPT, Google's Gemini, Helmholtz' blablador, and Ollama. Users need respective accounts to access these services. Bob can assist in code generation, bug fixing, code documentation, GPU-acceleration, and offers a no-code custom Jupyter Kernel. It provides example notebooks for various tasks like bio-image analysis, model selection, and bug fixing. Installation is recommended via conda/mamba environment. Custom endpoints like blablador and ollama can be used. Google Cloud AI API integration is also supported. The tool is extensible for Python libraries to enhance Bob's functionality.

LeanCopilot

Lean Copilot is a tool that enables the use of large language models (LLMs) in Lean for proof automation. It provides features such as suggesting tactics/premises, searching for proofs, and running inference of LLMs. Users can utilize built-in models from LeanDojo or bring their own models to run locally or on the cloud. The tool supports platforms like Linux, macOS, and Windows WSL, with optional CUDA and cuDNN for GPU acceleration. Advanced users can customize behavior using Tactic APIs and Model APIs. Lean Copilot also allows users to bring their own models through ExternalGenerator or ExternalEncoder. The tool comes with caveats such as occasional crashes and issues with premise selection and proof search. Users can get in touch through GitHub Discussions for questions, bug reports, feature requests, and suggestions. The tool is designed to enhance theorem proving in Lean using LLMs.

py-vectara-agentic

The `vectara-agentic` Python library is designed for developing powerful AI assistants using Vectara and Agentic-RAG. It supports various agent types, includes pre-built tools for domains like finance and legal, and enables easy creation of custom AI assistants and agents. The library provides tools for summarizing text, rephrasing text, legal tasks like summarizing legal text and critiquing as a judge, financial tasks like analyzing balance sheets and income statements, and database tools for inspecting and querying databases. It also supports observability via LlamaIndex and Arize Phoenix integration.

LongRAG

This repository contains the code for LongRAG, a framework that enhances retrieval-augmented generation with long-context LLMs. LongRAG introduces a 'long retriever' and a 'long reader' to improve performance by using a 4K-token retrieval unit, offering insights into combining RAG with long-context LLMs. The repo provides instructions for installation, quick start, corpus preparation, long retriever, and long reader.

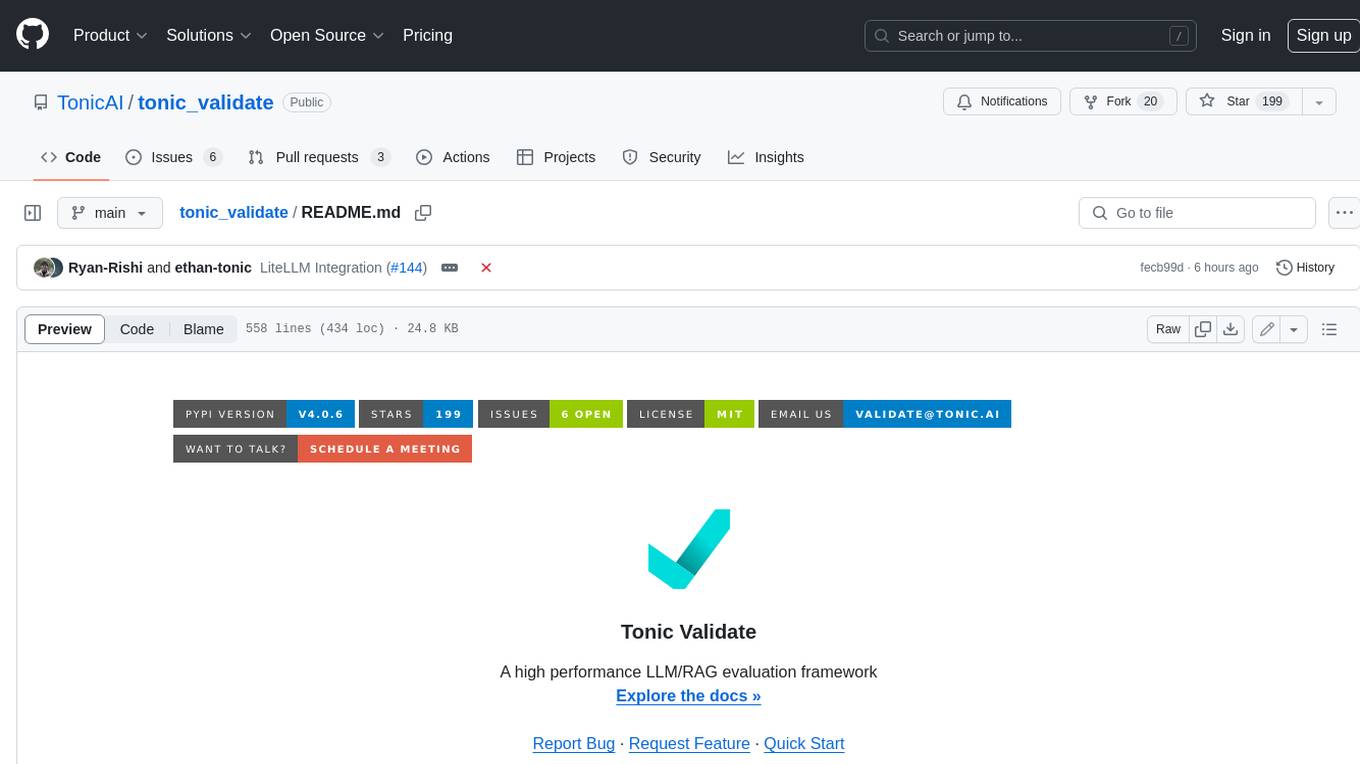

tonic_validate

Tonic Validate is a framework for the evaluation of LLM outputs, such as Retrieval Augmented Generation (RAG) pipelines. Validate makes it easy to evaluate, track, and monitor your LLM and RAG applications. Validate allows you to evaluate your LLM outputs through the use of our provided metrics which measure everything from answer correctness to LLM hallucination. Additionally, Validate has an optional UI to visualize your evaluation results for easy tracking and monitoring.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

langevals

LangEvals is an all-in-one Python library for testing and evaluating LLM models. It can be used in notebooks for exploration, in pytest for writing unit tests, or as a server API for live evaluations and guardrails. The library is modular, with 20+ evaluators including Ragas for RAG quality, OpenAI Moderation, and Azure Jailbreak detection. LangEvals powers LangWatch evaluations and provides tools for batch evaluations on notebooks and unit test evaluations with PyTest. It also offers LangEvals evaluators for LLM-as-a-Judge scenarios and out-of-the-box evaluators for language detection and answer relevancy checks.

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

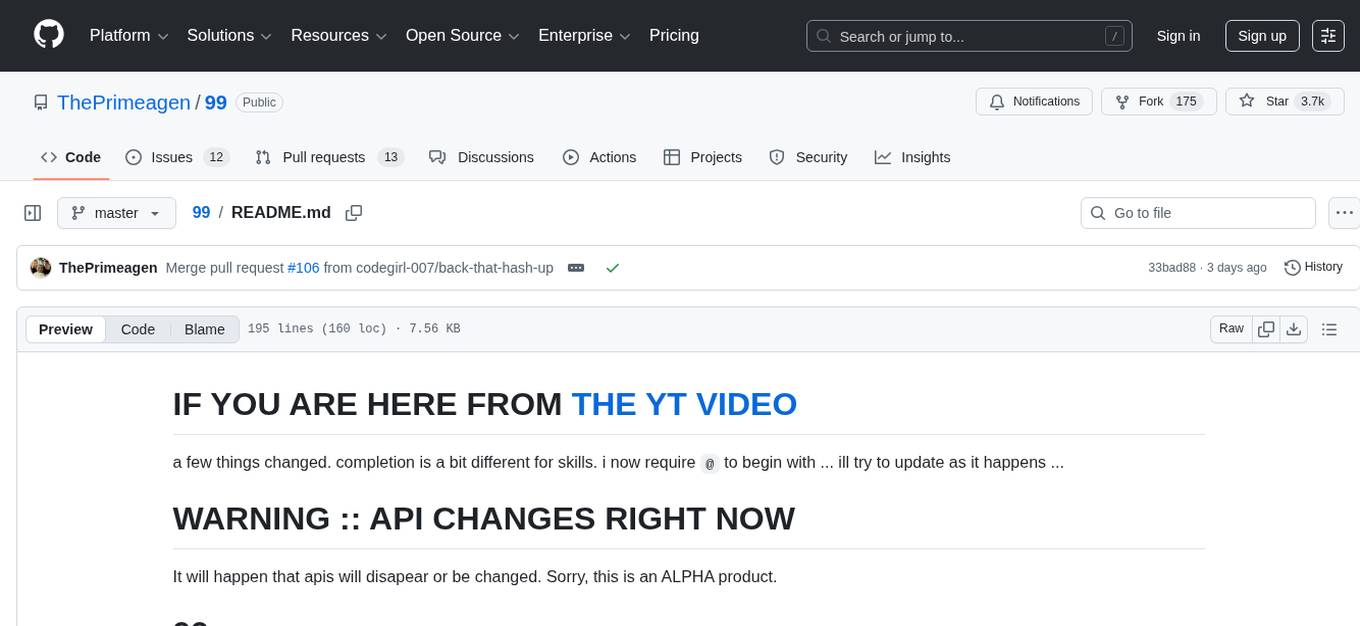

99

The AI client 99 is designed for Neovim users to streamline requests to AI and limit them to restricted areas. It supports visual, search, and debug functionalities. Users must have a supported AI CLI installed such as opencode, claude, or cursor-agent. The tool allows for configuration of completions, referencing rules and files to add context to requests. 99 supports multiple AI CLI backends and providers. Users can report bugs by providing full running debug logs and are advised not to request features directly. Known usability issues include long function definition problems, duplication of comment definitions in lua and jsdoc, visual selection sending the whole file, occasional issues with auto-complete, and potential errors with 'export function' prompts.

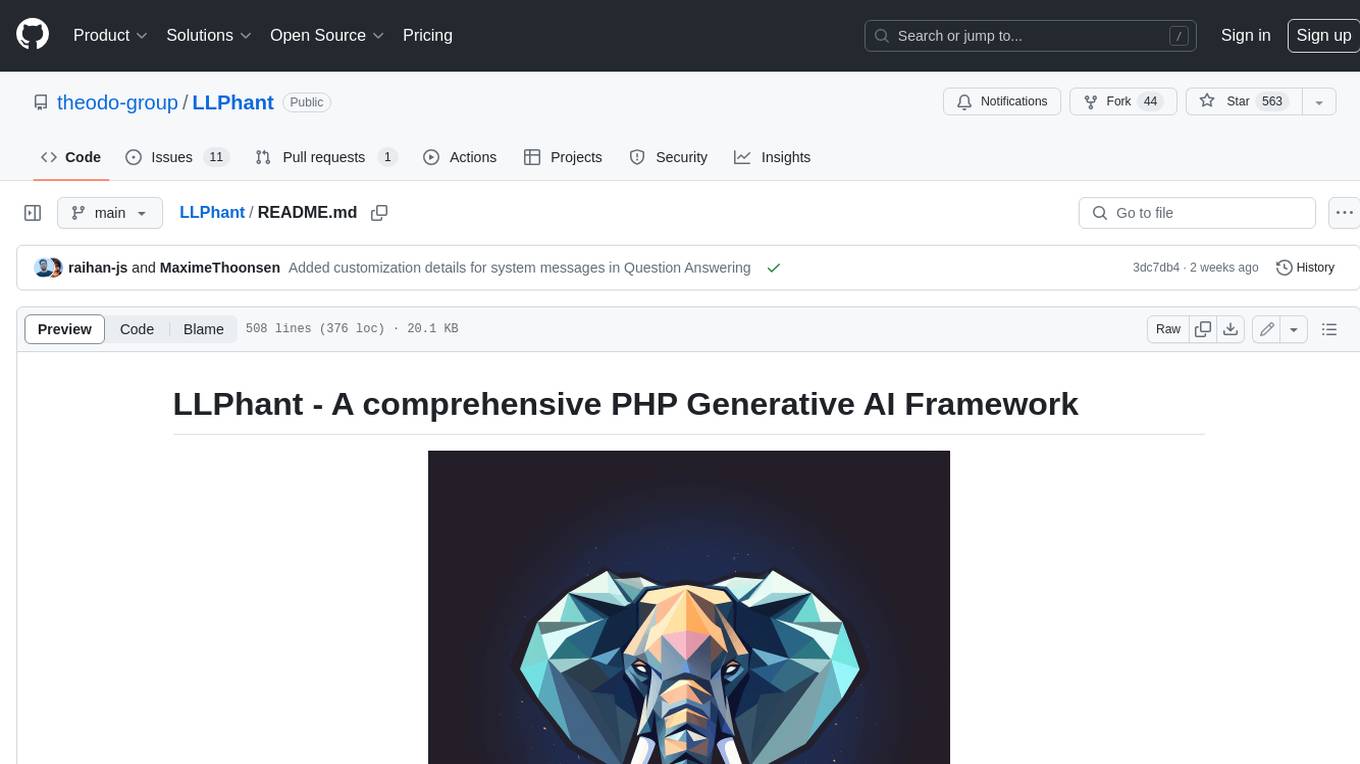

LLPhant

LLPhant is a comprehensive PHP Generative AI Framework that provides a simple and powerful way to build apps. It supports Symfony and Laravel and offers a wide range of features, including text generation, chatbots, text summarization, and more. LLPhant is compatible with OpenAI and Ollama and can be used to perform a variety of tasks, including creating semantic search, chatbots, personalized content, and text summarization.

vectara-answer

Vectara Answer is a sample app for Vectara-powered Summarized Semantic Search (or question-answering) with advanced configuration options. For examples of what you can build with Vectara Answer, check out Ask News, LegalAid, or any of the other demo applications.

ash_ai

Ash AI is a tool that provides a Model Context Protocol (MCP) server for exposing tool definitions to an MCP client. It allows for the installation of dev and production MCP servers, and supports features like OAuth2 flow with AshAuthentication, tool data access, tool execution callbacks, prompt-backed actions, and vectorization strategies. Users can also generate a chat feature for their Ash & Phoenix application using `ash_oban` and `ash_postgres`, and specify LLM API keys for OpenAI. The tool is designed to help developers experiment with tools and actions, monitor tool execution, and expose actions as tool calls.

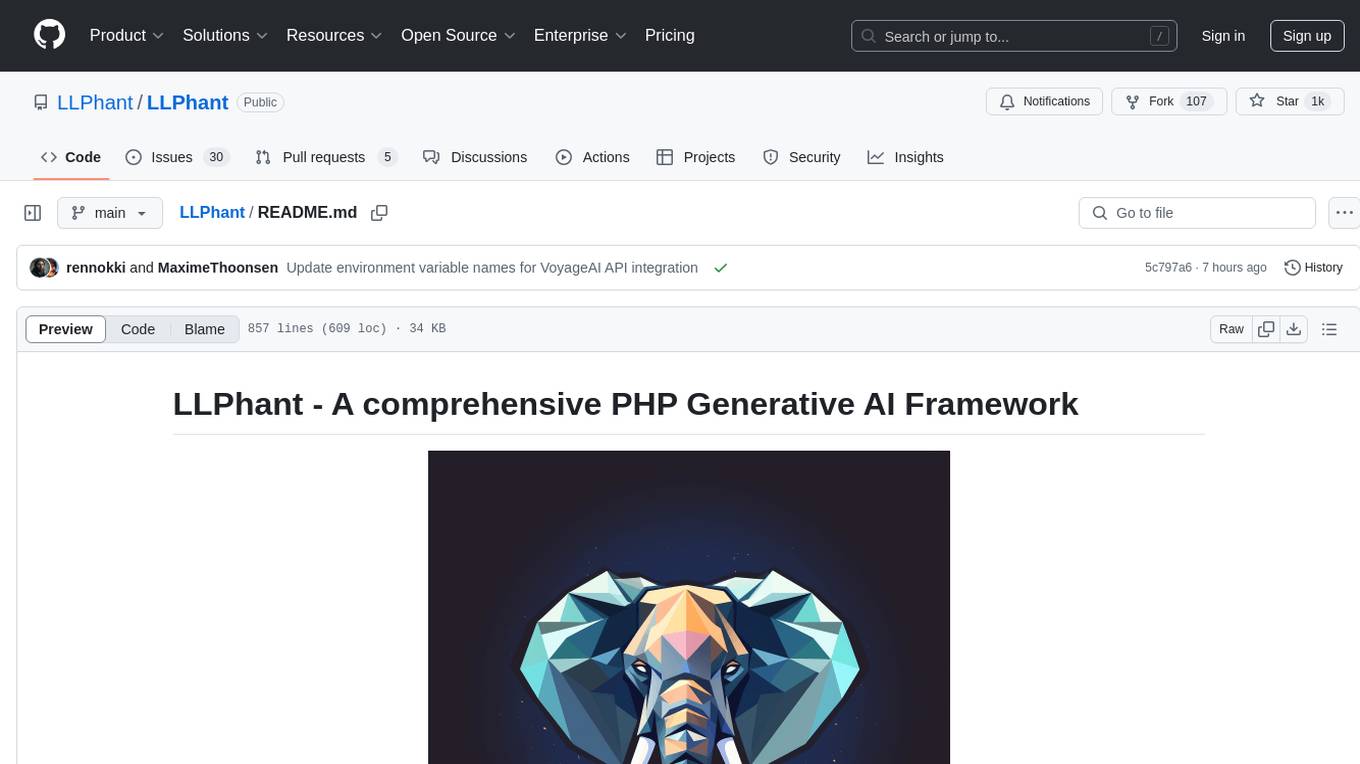

LLPhant

LLPhant is a comprehensive PHP Generative AI Framework designed to be simple yet powerful, compatible with Symfony and Laravel. It supports various LLMs like OpenAI, Anthropic, Mistral, Ollama, and services compatible with OpenAI API. The framework enables tasks such as semantic search, chatbots, personalized content creation, text summarization, personal shopper creation, autonomous AI agents, and coding tool assistance. It provides tools for generating text, images, speech-to-text transcription, and customizing system messages for question answering. LLPhant also offers features for embeddings, vector stores, document stores, and question answering with various query transformations and reranking techniques.

airflow-ai-sdk

This repository contains an SDK for working with LLMs from Apache Airflow, based on Pydantic AI. It allows users to call LLMs and orchestrate agent calls directly within their Airflow pipelines using decorator-based tasks. The SDK leverages the familiar Airflow `@task` syntax with extensions like `@task.llm`, `@task.llm_branch`, and `@task.agent`. Users can define tasks that call language models, orchestrate multi-step AI reasoning, change the control flow of a DAG based on LLM output, and support various models in the Pydantic AI library. The SDK is designed to integrate LLM workflows into Airflow pipelines, from simple LLM calls to complex agentic workflows.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

SLR-FC

This repository provides a comprehensive collection of AI tools and resources to enhance literature reviews. It includes a curated list of AI tools for various tasks, such as identifying research gaps, discovering relevant papers, visualizing paper content, and summarizing text. Additionally, the repository offers materials on generative AI, effective prompts, copywriting, image creation, and showcases of AI capabilities. By leveraging these tools and resources, researchers can streamline their literature review process, gain deeper insights from scholarly literature, and improve the quality of their research outputs.

paper-ai

Paper-ai is a tool that helps you write papers using artificial intelligence. It provides features such as AI writing assistance, reference searching, and editing and formatting tools. With Paper-ai, you can quickly and easily create high-quality papers.

ChatData

ChatData is a robust chat-with-documents application designed to extract information and provide answers by querying the MyScale free knowledge base or uploaded documents. It leverages the Retrieval Augmented Generation (RAG) framework, millions of Wikipedia pages, and arXiv papers. Features include self-querying retriever, VectorSQL, session management, and building a personalized knowledge base. Users can effortlessly navigate vast data, explore academic papers, and research documents. ChatData empowers researchers, students, and knowledge enthusiasts to unlock the true potential of information retrieval.

noScribe

noScribe is an AI-based software designed for automated audio transcription, specifically tailored for transcribing interviews for qualitative social research or journalistic purposes. It is a free and open-source tool that runs locally on the user's computer, ensuring data privacy. The software can differentiate between speakers and supports transcription in 99 languages. It includes a user-friendly editor for reviewing and correcting transcripts. Developed by Kai Dröge, a PhD in sociology with a background in computer science, noScribe aims to streamline the transcription process and enhance the efficiency of qualitative analysis.

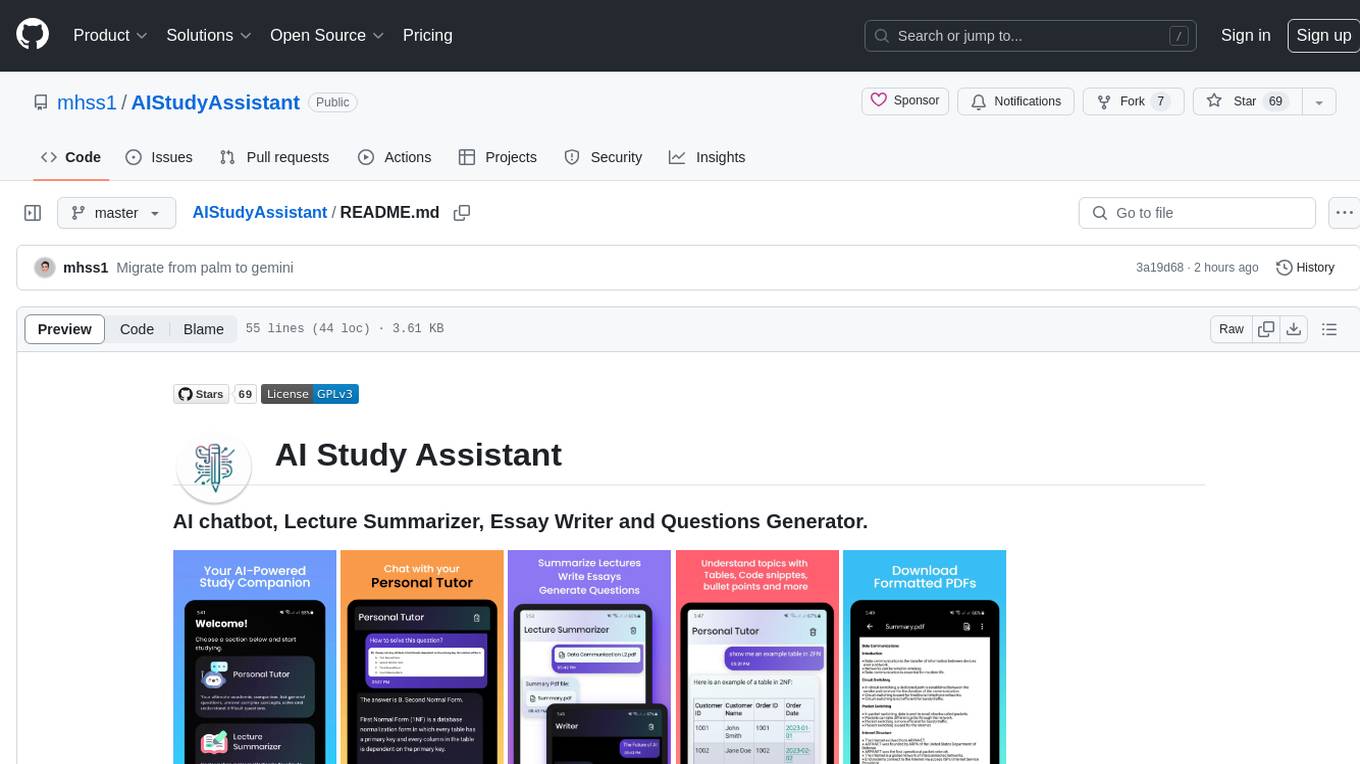

AIStudyAssistant

AI Study Assistant is an app designed to enhance learning experience and boost academic performance. It serves as a personal tutor, lecture summarizer, writer, and question generator powered by Google PaLM 2. Features include interacting with an AI chatbot, summarizing lectures, generating essays, and creating practice questions. The app is built using 100% Kotlin, Jetpack Compose, Clean Architecture, and MVVM design pattern, with technologies like Ktor, Room DB, Hilt, and Kotlin coroutines. AI Study Assistant aims to provide comprehensive AI-powered assistance for students in various academic tasks.

data-to-paper

Data-to-paper is an AI-driven framework designed to guide users through the process of conducting end-to-end scientific research, starting from raw data to the creation of comprehensive and human-verifiable research papers. The framework leverages a combination of LLM and rule-based agents to assist in tasks such as hypothesis generation, literature search, data analysis, result interpretation, and paper writing. It aims to accelerate research while maintaining key scientific values like transparency, traceability, and verifiability. The framework is field-agnostic, supports both open-goal and fixed-goal research, creates data-chained manuscripts, involves human-in-the-loop interaction, and allows for transparent replay of the research process.

k2

K2 (GeoLLaMA) is a large language model for geoscience, trained on geoscience literature and fine-tuned with knowledge-intensive instruction data. It outperforms baseline models on objective and subjective tasks. The repository provides K2 weights, core data of GeoSignal, GeoBench benchmark, and code for further pretraining and instruction tuning. The model is available on Hugging Face for use. The project aims to create larger and more powerful geoscience language models in the future.