k2

Code and datasets for paper "K2: A Foundation Language Model for Geoscience Knowledge Understanding and Utilization" in WSDM-2024

Stars: 153

K2 (GeoLLaMA) is a large language model for geoscience, trained on geoscience literature and fine-tuned with knowledge-intensive instruction data. It outperforms baseline models on objective and subjective tasks. The repository provides K2 weights, core data of GeoSignal, GeoBench benchmark, and code for further pretraining and instruction tuning. The model is available on Hugging Face for use. The project aims to create larger and more powerful geoscience language models in the future.

README:

- The paper "K2: A Foundation Language Model for Geoscience Knowledge Understanding and Utilization" has been accepted by WSDM2024, in Mexico!

- Code and data for paper "K2: A Foundation Language Model for Geoscience Knowledge Understanding and Utilization"

- Demo: https://k2.acemap.info, hosted by ourselves on a single GeForce RTX 3090 with intranet penetration (only three threads, maximum length 512)

- A larger geoscience language model, intended as a foundation model for an academic copilot, is available as geogalactica!

- The data pre-processing toolkits are open-sourced on sciparser!

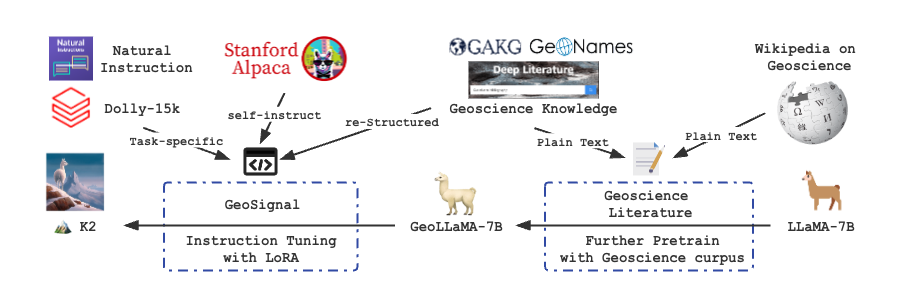

We introduce K2 (7B), an open-source language model trained by first further pretraining LLaMA on collected and cleaned geoscience literature, including open-access geoscience papers and Wikipedia pages, and then fine-tuning with knowledge-intensive instruction tuning data (GeoSignal). For preliminary evaluation, we use GeoBench (consisting of NPEE and AP Test questions on Geology, Geography, and Environmental Science) as the benchmark. K2 outperforms several baseline models of similar parameter count on both objective and subjective tasks. In this repository, we share the following code and data.

- We release K2 weights in two parts (one can add our delta to the original LLaMA weights and use peft_model with transformers to obtain the entire K2 model):

  - Delta weights after further pretraining with the geoscience text corpus, released as a delta to comply with the LLaMA model license.

  - Adapter model weights trained by PEFT (LoRA).

- We release the core data of GeoSignal that K2 used for training.

- We release GeoBench, the first-ever benchmark for evaluating the capabilities of LLMs in geoscience.

- We release the code for further pretraining and instruction tuning of K2.

The following is the overview of training K2:

1. Prepare the code and the environment

Clone our repository, create a Python environment, and activate it via the following commands:

    git clone https://github.com/davendw49/k2.git
    cd k2
    conda env create -f k2.yaml
    conda activate k2

2. Prepare the pretrained K2 (GeoLLaMA)

The current version of K2 is available on Hugging Face Models. The previous version of K2 consists of two parts: a delta model (like Vicuna), which is an add-on weight to LLaMA-7B, and an adapter model (trained via PEFT).

| Delta model | Adapter model | Full model |
|---|---|---|
| k2_fp_delta | k2_it_adapter | k2_v1 |

- Referring to the Vicuna repository, we share the command for building the pretrained weights of K2:

    python -m apply_delta --base /path/to/weights/of/llama --target /path/to/weights/of/geollama/ --delta daven3/k2_fp_delta

Coming soon...
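As a rough illustration of what a delta-weight release means, the sketch below recovers each target parameter by adding the released delta to the matching base parameter. It shows the general idea only and is not the repository's apply_delta implementation; the helper name `apply_delta_sketch` and the toy parameter names are hypothetical, and plain Python lists stand in for tensors.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # toy stand-in for a model state dict


def apply_delta_sketch(base: Weights, delta: Weights) -> Weights:
    """Return base + delta, parameter by parameter (hypothetical helper)."""
    if base.keys() != delta.keys():
        raise ValueError("base and delta must contain the same parameter names")
    target: Weights = {}
    for name, base_values in base.items():
        delta_values = delta[name]
        if len(base_values) != len(delta_values):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Element-wise addition restores the further-pretrained weights.
        target[name] = [b + d for b, d in zip(base_values, delta_values)]
    return target


# Toy example with made-up numbers.
base_weights = {"layer.weight": [1.0, 2.0], "layer.bias": [0.5]}
delta_weights = {"layer.weight": [0.25, -0.5], "layer.bias": [0.0]}
merged = apply_delta_sketch(base_weights, delta_weights)
print(merged)
```

Releasing only the difference means the original LLaMA weights are still needed to rebuild the model, which is how a delta release can respect the LLaMA license.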

3. Use K2

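    import torch
    from transformers import LlamaForCausalLM, LlamaTokenizer

    base_model = "/path/to/k2"
    load_8bit = False    # assumed value; set to True to load the weights in 8-bit
    device_map = "auto"  # assumed value; lets the library place layers on available devices

    tokenizer = LlamaTokenizer.from_pretrained(base_model)
    model = LlamaForCausalLM.from_pretrained(
        base_model,
        load_in_8bit=load_8bit,
        device_map=device_map,
        torch_dtype=torch.float16,
    )

    # Token ids used by the LLaMA tokenizer: 0 = pad, 1 = BOS, 2 = EOS
    model.config.pad_token_id = tokenizer.pad_token_id = 0
    model.config.bos_token_id = 1
    model.config.eos_token_id = 2

Or, alternatively,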

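    import torch
    from peft import PeftModel
    from transformers import LlamaForCausalLM, LlamaTokenizer

    base_model = "/path/to/geollama"
    lora_weights = "/path/to/adapter/model"
    load_8bit = False    # assumed value; set to True to load the weights in 8-bit
    device_map = "auto"  # assumed value; lets the library place layers on available devices

    tokenizer = LlamaTokenizer.from_pretrained(base_model)
    model = LlamaForCausalLM.from_pretrained(
        base_model,
        load_in_8bit=load_8bit,
        device_map=device_map,
        torch_dtype=torch.float16,
    )

    # Attach the LoRA adapter on top of the further-pretrained base model
    model = PeftModel.from_pretrained(
        model,
        lora_weights,
        torch_dtype=torch.float16,
        device_map=device_map,
    )

    # Token ids used by the LLaMA tokenizer: 0 = pad, 1 = BOS, 2 = EOS
    model.config.pad_token_id = tokenizer.pad_token_id = 0
    model.config.bos_token_id = 1
    model.config.eos_token_id = 2

- More details are in ./generation/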
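Once the model is loaded, a question still has to be wrapped in a prompt before generation. The sketch below assumes the Stanford Alpaca no-input template, which is a guess based on the projects this README acknowledges and is not confirmed for K2; the helper names `build_prompt` and `extract_response` are hypothetical.

```python
# Assumption: K2 expects the Stanford Alpaca no-input prompt format.
ALPACA_NO_INPUT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def build_prompt(instruction: str) -> str:
    """Wrap a question in the Alpaca-style template (hypothetical helper)."""
    return ALPACA_NO_INPUT_TEMPLATE.format(instruction=instruction.strip())


def extract_response(generated_text: str) -> str:
    """Keep only the text after the last '### Response:' marker."""
    return generated_text.split("### Response:")[-1].strip()


prompt = build_prompt("What is an IOCG?")
print(prompt)
```

The resulting string would typically be tokenized and passed to `model.generate`; the scripts in ./generation/ remain the authoritative reference for the prompt format actually used.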

In this repo, we share the instruction data and benchmark data:

- GeoSignal: ./data/geosignal/

- GeoBench: ./data/geobench/

Our text corpus for further pretraining on LLaMA-7B consists of 3.9 billion tokens from geoscience papers published in selected high-quality journals in earth science and mainly collected by GAKG.

Delta Model on Hugging Face: daven3/k2_fp_delta

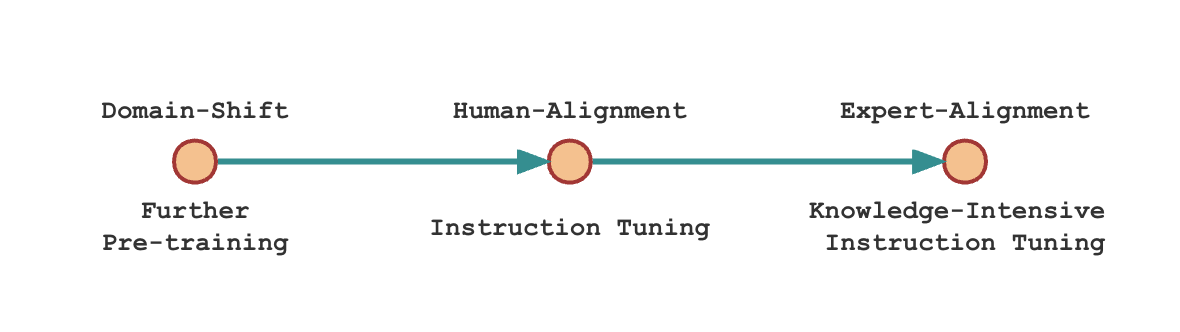

Scientific domain adaptation has two main steps during instruction tuning.

- Instruction tuning with general instruction-tuning data. Here we use Alpaca-GPT4.

- Instruction tuning with restructured domain knowledge, which we call expertise instruction tuning. For K2, we use knowledge-intensive instruction data, GeoSignal.

The following illustrates the recipe for training a domain-specific language model:

- Adapter Model on Hugging Face: daven3/k2_it_adapter

- Dataset on Hugging Face: geosignal

In GeoBench, we collect 183 multiple-choice questions from NPEE and 1,395 from AP Test for the objective tasks. We also gather all 939 subjective questions from NPEE as the subjective task set, and use 50 of them to measure the baselines with human evaluation.

- Dataset on Hugging Face: geobench
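For the objective (multiple-choice) tasks, a natural score is plain accuracy against the answer key. The sketch below is a minimal illustration under that assumption; it is not the repository's evaluation code, the function name is hypothetical, and the predictions are made up rather than real GeoBench results.

```python
from typing import Sequence


def multiple_choice_accuracy(predictions: Sequence[str], answers: Sequence[str]) -> float:
    """Fraction of questions whose predicted option letter matches the answer key."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("at least one question is required")
    # Compare option letters case-insensitively, ignoring surrounding whitespace.
    correct = sum(
        p.strip().upper() == a.strip().upper() for p, a in zip(predictions, answers)
    )
    return correct / len(answers)


# Made-up predictions for four questions; not real GeoBench results.
predicted = ["A", "c", "B", "D"]
answer_key = ["A", "C", "D", "D"]
accuracy = multiple_choice_accuracy(predicted, answer_key)
print(accuracy)
```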

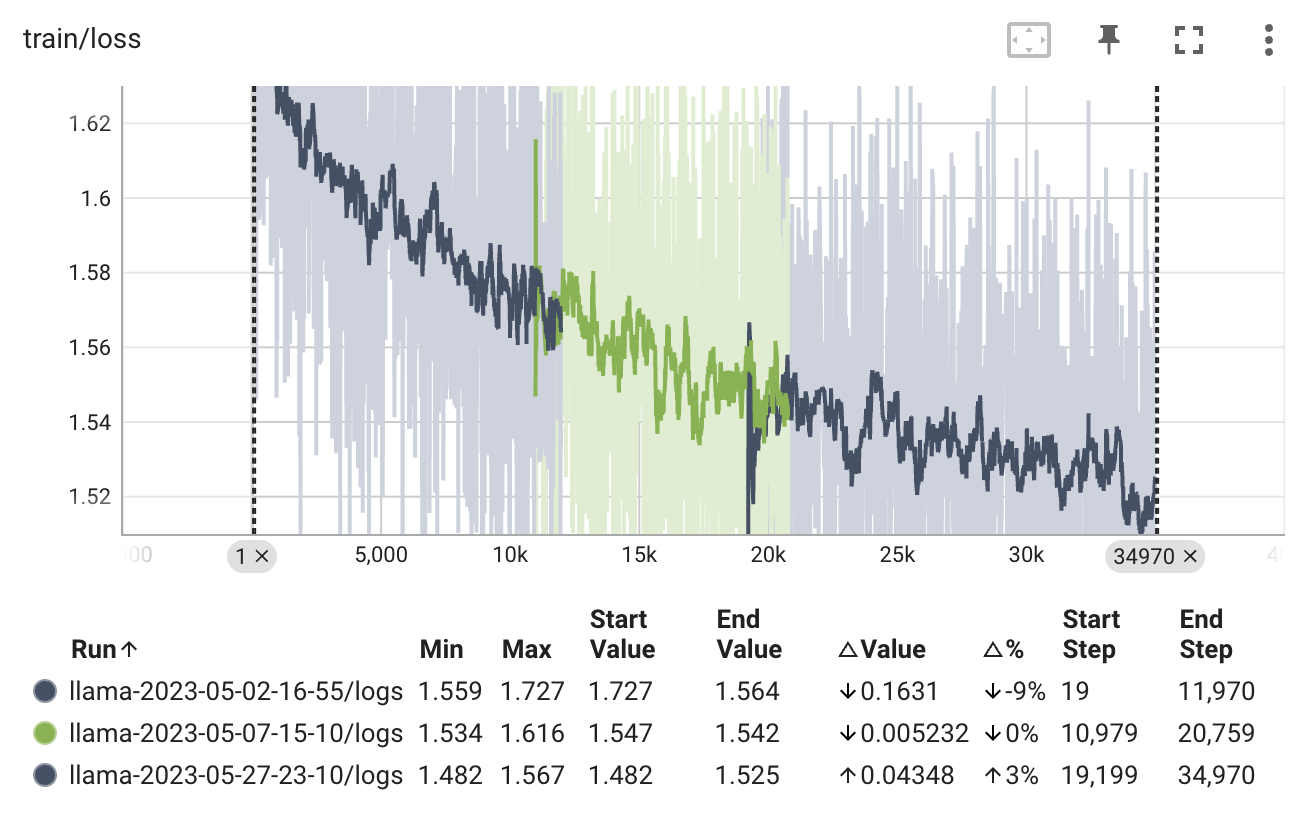

The training script is run_clm.py

    deepspeed --num_gpus=4 run_clm.py --deepspeed ds_config_zero.json >log 2>&1 &

The parameters we use:

- Batch size per device: 2

- Global batch size: 128 (2 per device × 4 GPUs × 16 gradient accumulation steps)

- Number of trainable parameters: 6,738,415,616 (7B)

- lr: 1e-5

- bf16: true

- tf32: true

- Warmup: 0.03/3 epoch (nearly 1000 steps)

- Zero_optimization_stage: 3
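The global batch size in the list above follows directly from the per-device batch size, the GPU count, and the gradient accumulation steps. A small sketch of that arithmetic (the function name is hypothetical):

```python
def global_batch_size(per_device: int, num_gpus: int, grad_accum_steps: int) -> int:
    """Sequences consumed per optimizer step across all devices."""
    return per_device * num_gpus * grad_accum_steps


# Values from the list above: 2 per device, 4 GPUs, 16 accumulation steps.
pretrain_global_batch = global_batch_size(per_device=2, num_gpus=4, grad_accum_steps=16)
print(pretrain_global_batch)  # 128
```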

Tips: Because of limited computing power, we could not resume smoothly from checkpoints, so we did not load the optimizer state dict when resuming training. Although there are two noticeable spikes in the diagram, performance appears to remain normal.

The training script is finetune.py

- For the first step: alignment with humans

    python finetune.py --base_model /path/to/checkpoint-30140 --data_path /path/to/alpaca.json --output_dir /path/to/stage/one/model/ --cuda_id 2 --lora_target_modules "q_proj" "k_proj" "v_proj"

- For the second step: alignment with experts

    python finetune.py --base_model /path/to/checkpoint-30140 --data_path /path/to/geosignal.json --output_dir /path/to/stage/two/model/ --cuda_id 2 --lora_target_modules "q_proj" "k_proj" "v_proj" --resume_from_checkpoint /path/to/stage/one/model/

- batch_size: 128

- micro batch size: 4

- num epochs: 1

- learning rate: 3e-4

- cutoff len: 512

- val set size: 2000

- lora r: 8

- lora alpha: 16

- lora dropout: 0.05

- lora target modules: ["q_proj", "k_proj", "v_proj"]
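To give a sense of scale for these LoRA settings, the sketch below estimates the number of trainable adapter parameters and two derived quantities. It assumes the standard LLaMA-7B dimensions (hidden size 4096, 32 layers), which this README does not state, and a single-GPU run in which gradient accumulation steps equal batch size divided by micro batch size; the function name is hypothetical.

```python
def lora_params_per_linear(in_features: int, out_features: int, r: int) -> int:
    """LoRA adds A (r x in_features) and B (out_features x r) to one linear layer."""
    return r * in_features + out_features * r


# Assumed LLaMA-7B dimensions (not stated in this README).
HIDDEN_SIZE = 4096
NUM_LAYERS = 32

# Hyperparameters from the list above.
LORA_R = 8
LORA_ALPHA = 16
TARGET_MODULES = ["q_proj", "k_proj", "v_proj"]
BATCH_SIZE = 128
MICRO_BATCH_SIZE = 4

per_module = lora_params_per_linear(HIDDEN_SIZE, HIDDEN_SIZE, LORA_R)
trainable = per_module * len(TARGET_MODULES) * NUM_LAYERS
scaling = LORA_ALPHA / LORA_R  # factor applied to the low-rank update
grad_accum_steps = BATCH_SIZE // MICRO_BATCH_SIZE  # single-GPU assumption

print(trainable, scaling, grad_accum_steps)
```

Under these assumptions the adapter trains roughly 6.3 million parameters, a small fraction of the 6.7 billion parameters updated during further pretraining.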

| Case 1 | Case 2 | Case 3 |

|---|---|---|

|

|

|

-

Answer:

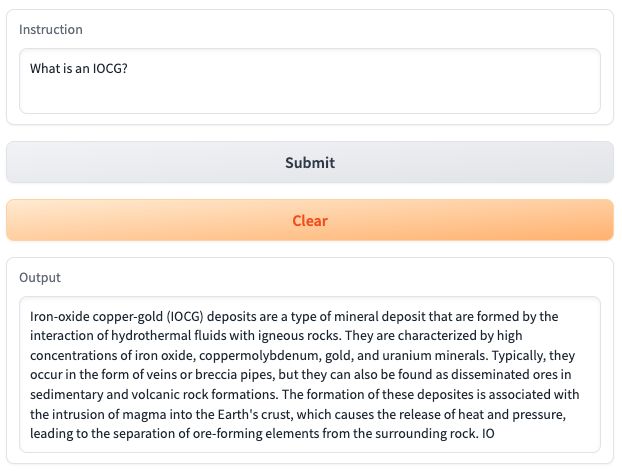

- Question: What is an IOCG?

- Answer: Iron-oxide copper-gold (IOCG) deposits are a type of mineral deposit that are formed by the interaction of hydrothermal fluids with igneous rocks. They are characterized by high concentrations of iron oxide, coppermolybdenum, gold, and uranium minerals. Typically, they occur in the form of veins or breccia pipes, but they can also be found as disseminated ores in sedimentary and volcanic rock formations. The formation of these deposites is associated with the intrusion of magma into the Earth's crust, which causes the release of heat and pressure, leading to the separation of ore-forming elements from the surrounding rock. IO

-

Answer and Explain:

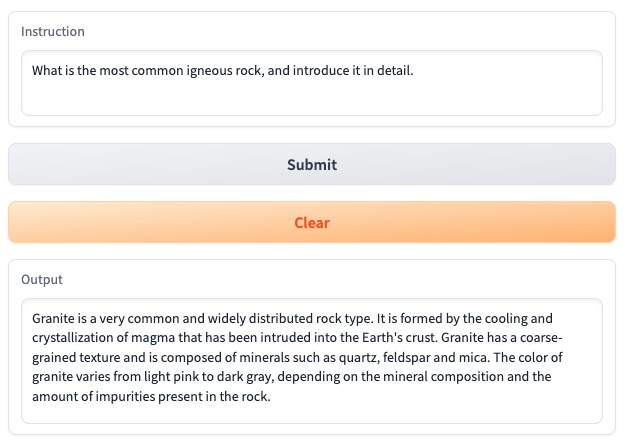

- Question: What is the most common igneous rock, and introduce it in detail.

- Answer: Granite is a very common and widely distributed rock type. It is formed by the cooling and crystallization of magma that has been intruded into the Earth's crust. Granite has a coarse-grained texture and is composed of minerals such as quartz, feldspar and mica. The color of granite varies from light pink to dark gray, depending on the mineral composition and the amount of impurities present in the rock.

-

Answer with comparison:

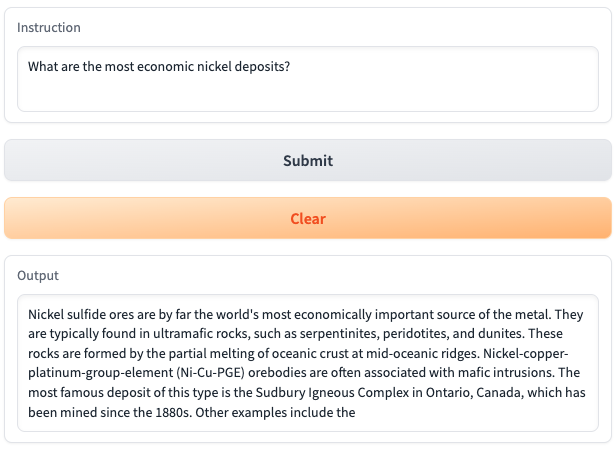

- Question: What are the most economic nickel deposits?

- Answer: Nickel sulfide ores are by far the world's most economically important source of the metal. They are typically found in ultramafic rocks, such as serpentinites, peridotites, and dunites. These rocks are formed by the partial melting of oceanic crust at mid-oceanic ridges. Nickel-copper-platinum-group-element (Ni-Cu-PGE) orebodies are often associated with mafic intrusions. The most famous deposit of this type is the Sudbury Igneous Complex in Ontario, Canada, which has been mined since the 1880s. Other examples include the

We share the original evaluation code in the evaluation folder, and we will release Geo-Eval in the near future with more evaluation methods.

K2 is named after the second-highest mountain in the world, and we believe that larger and more powerful geoscience language models will be created in the future. Moreover, in training a model to shift to a discipline with a significant domain barrier, we encountered many difficulties (collecting the corpus, cleaning academic data, computing power, ...), which mirrors the fact that climbing K2 is no less challenging than climbing Mount Everest 🏔️.

This project was founded by Acemap at Shanghai Jiao Tong University, including Cheng Deng, Tianhang Zhang, Zhongmou He, Qiyuan Chen, Yuanyuan Shi, Le Zhou, supervised by Weinan Zhang, Luoyi Fu, Zhouhan Lin, Junxian He, and Xinbing Wang. The whole project is supported by Chenghu Zhou and the Institute of Geographic Sciences and Natural Resources Research, Chinese Academy of Sciences.

K2 builds on the following open-source projects. We want to express our gratitude and respect to the researchers behind them.

- Facebook LLaMA: https://github.com/facebookresearch/llama

- Stanford Alpaca: https://github.com/tatsu-lab/stanford_alpaca

- alpaca-lora by @tloen: https://github.com/tloen/alpaca-lora

- alpaca-gpt4 by Chansung Park: https://github.com/tloen/alpaca-lora/issues/340

K2 is supported by Chenghu Zhou and the Institute of Geographic Sciences and Natural Resources Research, Chinese Academy of Sciences.

We would also like to express our appreciation for the effort of data processing from Yutong Xu and Beiya Dai.

K2 is a research preview intended for non-commercial use only, subject to the model License of LLaMA and the Terms of Use of the data generated by OpenAI. Please contact us if you find any potential violations. The code is released under the Apache License 2.0. The GeoSignal and GeoBench data are updated occasionally; if you want to subscribe to the data, you can email us at [email protected].

Citation

If you use the code or data of K2, please cite the following:

    @misc{deng2023learning,
      title={K2: A Foundation Language Model for Geoscience Knowledge Understanding and Utilization},
      author={Cheng Deng and Tianhang Zhang and Zhongmou He and Yi Xu and Qiyuan Chen and Yuanyuan Shi and Luoyi Fu and Weinan Zhang and Xinbing Wang and Chenghu Zhou and Zhouhan Lin and Junxian He},
      year={2023},
      eprint={2306.05064},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
    }

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for k2

Similar Open Source Tools

k2

K2 (GeoLLaMA) is a large language model for geoscience, trained on geoscience literature and fine-tuned with knowledge-intensive instruction data. It outperforms baseline models on objective and subjective tasks. The repository provides K2 weights, core data of GeoSignal, GeoBench benchmark, and code for further pretraining and instruction tuning. The model is available on Hugging Face for use. The project aims to create larger and more powerful geoscience language models in the future.

RLHF-Reward-Modeling

This repository contains code for training reward models for Deep Reinforcement Learning-based Reward-modulated Hierarchical Fine-tuning (DRL-based RLHF), Iterative Selection Fine-tuning (Rejection sampling fine-tuning), and iterative Decision Policy Optimization (DPO). The reward models are trained using a Bradley-Terry model based on the Gemma and Mistral language models. The resulting reward models achieve state-of-the-art performance on the RewardBench leaderboard for reward models with base models of up to 13B parameters.

zshot

Zshot is a highly customizable framework for performing Zero and Few shot named entity and relationships recognition. It can be used for mentions extraction, wikification, zero and few shot named entity recognition, zero and few shot named relationship recognition, and visualization of zero-shot NER and RE extraction. The framework consists of two main components: the mentions extractor and the linker. There are multiple mentions extractors and linkers available, each serving a specific purpose. Zshot also includes a relations extractor and a knowledge extractor for extracting relations among entities and performing entity classification. The tool requires Python 3.6+ and dependencies like spacy, torch, transformers, evaluate, and datasets for evaluation over datasets like OntoNotes. Optional dependencies include flair and blink for additional functionalities. Zshot provides examples, tutorials, and evaluation methods to assess the performance of the components.

fuse-med-ml

FuseMedML is a Python framework designed to accelerate machine learning-based discovery in the medical field by promoting code reuse. It provides a flexible design concept where data is stored in a nested dictionary, allowing easy handling of multi-modality information. The framework includes components for creating custom models, loss functions, metrics, and data processing operators. Additionally, FuseMedML offers 'batteries included' key components such as fuse.data for data processing, fuse.eval for model evaluation, and fuse.dl for reusable deep learning components. It supports PyTorch and PyTorch Lightning libraries and encourages the creation of domain extensions for specific medical domains.

Reflection_Tuning

Reflection-Tuning is a project focused on improving the quality of instruction-tuning data through a reflection-based method. It introduces Selective Reflection-Tuning, where the student model can decide whether to accept the improvements made by the teacher model. The project aims to generate high-quality instruction-response pairs by defining specific criteria for the oracle model to follow and respond to. It also evaluates the efficacy and relevance of instruction-response pairs using the r-IFD metric. The project provides code for reflection and selection processes, along with data and model weights for both V1 and V2 methods.

MME-RealWorld

MME-RealWorld is a benchmark designed to address real-world applications with practical relevance, featuring 13,366 high-resolution images and 29,429 annotations across 43 tasks. It aims to provide substantial recognition challenges and overcome common barriers in existing Multimodal Large Language Model benchmarks, such as small data scale, restricted data quality, and insufficient task difficulty. The dataset offers advantages in data scale, data quality, task difficulty, and real-world utility compared to existing benchmarks. It also includes a Chinese version with additional images and QA pairs focused on Chinese scenarios.

MMStar

MMStar is an elite vision-indispensable multi-modal benchmark comprising 1,500 challenge samples meticulously selected by humans. It addresses two key issues in current LLM evaluation: the unnecessary use of visual content in many samples and the existence of unintentional data leakage in LLM and LVLM training. MMStar evaluates 6 core capabilities across 18 detailed axes, ensuring a balanced distribution of samples across all dimensions.

Quantus

Quantus is a toolkit designed for the evaluation of neural network explanations. It offers more than 30 metrics in 6 categories for eXplainable Artificial Intelligence (XAI) evaluation. The toolkit supports different data types (image, time-series, tabular, NLP) and models (PyTorch, TensorFlow). It provides built-in support for explanation methods like captum, tf-explain, and zennit. Quantus is under active development and aims to provide a comprehensive set of quantitative evaluation metrics for XAI methods.

gepa

GEPA (Genetic-Pareto) is a framework for optimizing arbitrary systems composed of text components like AI prompts, code snippets, or textual specs against any evaluation metric. It employs LLMs to reflect on system behavior, using feedback from execution and evaluation traces to drive targeted improvements. Through iterative mutation, reflection, and Pareto-aware candidate selection, GEPA evolves robust, high-performing variants with minimal evaluations, co-evolving multiple components in modular systems for domain-specific gains. The repository provides the official implementation of the GEPA algorithm as proposed in the paper titled 'GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning'.

LLMLingua

LLMLingua is a tool that utilizes a compact, well-trained language model to identify and remove non-essential tokens in prompts. This approach enables efficient inference with large language models, achieving up to 20x compression with minimal performance loss. The tool includes LLMLingua, LongLLMLingua, and LLMLingua-2, each offering different levels of prompt compression and performance improvements for tasks involving large language models.

LongBench

LongBench v2 is a benchmark designed to assess the ability of large language models (LLMs) to handle long-context problems requiring deep understanding and reasoning across various real-world multitasks. It consists of 503 challenging multiple-choice questions with contexts ranging from 8k to 2M words, covering six major task categories. The dataset is collected from nearly 100 highly educated individuals with diverse professional backgrounds and is designed to be challenging even for human experts. The evaluation results highlight the importance of enhanced reasoning ability and scaling inference-time compute to tackle the long-context challenges in LongBench v2.

magpie

This is the official repository for 'Alignment Data Synthesis from Scratch by Prompting Aligned LLMs with Nothing'. Magpie is a tool designed to synthesize high-quality instruction data at scale by extracting it directly from an aligned Large Language Models (LLMs). It aims to democratize AI by generating large-scale alignment data and enhancing the transparency of model alignment processes. Magpie has been tested on various model families and can be used to fine-tune models for improved performance on alignment benchmarks such as AlpacaEval, ArenaHard, and WildBench.

baal

Baal is an active learning library that supports both industrial applications and research use cases. It provides a framework for Bayesian active learning methods such as Monte-Carlo Dropout, MCDropConnect, Deep ensembles, and Semi-supervised learning. Baal helps in labeling the most uncertain items in the dataset pool to improve model performance and reduce annotation effort. The library is actively maintained by a dedicated team and has been used in various research papers for production and experimentation.

AgentCPM

AgentCPM is a series of open-source LLM agents jointly developed by THUNLP, Renmin University of China, ModelBest, and the OpenBMB community. It addresses challenges faced by agents in real-world applications such as limited long-horizon capability, autonomy, and generalization. The team focuses on building deep research capabilities for agents, releasing AgentCPM-Explore, a deep-search LLM agent, and AgentCPM-Report, a deep-research LLM agent. AgentCPM-Explore is the first open-source agent model with 4B parameters to appear on widely used long-horizon agent benchmarks. AgentCPM-Report is built on the 8B-parameter base model MiniCPM4.1, autonomously generating long-form reports with extreme performance and minimal footprint, designed for high-privacy scenarios with offline and agile local deployment.

SheetCopilot

SheetCopilot is an assistant agent that manipulates spreadsheets by following user commands. It leverages Large Language Models (LLMs) to interact with spreadsheets like a human expert, enabling non-expert users to complete tasks on complex software such as Google Sheets and Excel via a language interface. The tool observes spreadsheet states, polishes generated solutions based on external action documents and error feedback, and aims to improve success rate and efficiency. SheetCopilot offers a dataset with diverse task categories and operations, supporting operations like entry & manipulation, management, formatting, charts, and pivot tables. Users can interact with SheetCopilot in Excel or Google Sheets, executing tasks like calculating revenue, creating pivot tables, and plotting charts. The tool's evaluation includes performance comparisons with leading LLMs and VBA-based methods on specific datasets, showcasing its capabilities in controlling various aspects of a spreadsheet.

AgentGym-RL

AgentGym-RL is a framework designed to train Long-Long Memory (LLM) agents for multi-turn interactive decision-making through Reinforcement Learning. It addresses challenges in training agents for real-world scenarios by supporting mainstream RL algorithms and introducing the ScalingInter-RL method for stable optimization. The framework includes modular components for environment, agent reasoning, and training pipelines. It offers diverse environments like Web Navigation, Deep Search, Digital Games, Embodied Tasks, and Scientific Tasks. AgentGym-RL also supports various online RL algorithms and post-training strategies. The tool aims to enhance agent performance and exploration capabilities through long-horizon planning and interaction with the environment.

For similar tasks

k2

K2 (GeoLLaMA) is a large language model for geoscience, trained on geoscience literature and fine-tuned with knowledge-intensive instruction data. It outperforms baseline models on objective and subjective tasks. The repository provides K2 weights, core data of GeoSignal, GeoBench benchmark, and code for further pretraining and instruction tuning. The model is available on Hugging Face for use. The project aims to create larger and more powerful geoscience language models in the future.

RLHF-Reward-Modeling

This repository contains code for training reward models for Deep Reinforcement Learning-based Reward-modulated Hierarchical Fine-tuning (DRL-based RLHF), Iterative Selection Fine-tuning (Rejection sampling fine-tuning), and iterative Decision Policy Optimization (DPO). The reward models are trained using a Bradley-Terry model based on the Gemma and Mistral language models. The resulting reward models achieve state-of-the-art performance on the RewardBench leaderboard for reward models with base models of up to 13B parameters.

h2o-llmstudio

H2O LLM Studio is a framework and no-code GUI designed for fine-tuning state-of-the-art large language models (LLMs). With H2O LLM Studio, you can easily and effectively fine-tune LLMs without the need for any coding experience. The GUI is specially designed for large language models, and you can finetune any LLM using a large variety of hyperparameters. You can also use recent finetuning techniques such as Low-Rank Adaptation (LoRA) and 8-bit model training with a low memory footprint. Additionally, you can use Reinforcement Learning (RL) to finetune your model (experimental), use advanced evaluation metrics to judge generated answers by the model, track and compare your model performance visually, and easily export your model to the Hugging Face Hub and share it with the community.

MathCoder

MathCoder is a repository focused on enhancing mathematical reasoning by fine-tuning open-source language models to use code for modeling and deriving math equations. It introduces MathCodeInstruct dataset with solutions interleaving natural language, code, and execution results. The repository provides MathCoder models capable of generating code-based solutions for challenging math problems, achieving state-of-the-art scores on MATH and GSM8K datasets. It offers tools for model deployment, inference, and evaluation, along with a citation for referencing the work.

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

langserve_ollama

LangServe Ollama is a tool that allows users to fine-tune Korean language models for local hosting, including RAG. Users can load HuggingFace gguf files, create model chains, and monitor GPU usage. The tool provides a seamless workflow for customizing and deploying language models in a local environment.

LLM-Fine-Tuning

This GitHub repository contains examples of fine-tuning open source large language models. It showcases the process of fine-tuning and quantizing large language models using efficient techniques like Lora and QLora. The repository serves as a practical guide for individuals looking to optimize the performance of language models through fine-tuning.

For similar jobs

SLR-FC

This repository provides a comprehensive collection of AI tools and resources to enhance literature reviews. It includes a curated list of AI tools for various tasks, such as identifying research gaps, discovering relevant papers, visualizing paper content, and summarizing text. Additionally, the repository offers materials on generative AI, effective prompts, copywriting, image creation, and showcases of AI capabilities. By leveraging these tools and resources, researchers can streamline their literature review process, gain deeper insights from scholarly literature, and improve the quality of their research outputs.

paper-ai

Paper-ai is a tool that helps you write papers using artificial intelligence. It provides features such as AI writing assistance, reference searching, and editing and formatting tools. With Paper-ai, you can quickly and easily create high-quality papers.

paper-qa

PaperQA is a minimal package for question and answering from PDFs or text files, providing very good answers with in-text citations. It uses OpenAI Embeddings to embed and search documents, and follows a process of embedding docs and queries, searching for top passages, creating summaries, scoring and selecting relevant summaries, putting summaries into prompt, and generating answers. Users can customize prompts and use various models for embeddings and LLMs. The tool can be used asynchronously and supports adding documents from paths, files, or URLs.

ChatData

ChatData is a robust chat-with-documents application designed to extract information and provide answers by querying the MyScale free knowledge base or uploaded documents. It leverages the Retrieval Augmented Generation (RAG) framework, millions of Wikipedia pages, and arXiv papers. Features include self-querying retriever, VectorSQL, session management, and building a personalized knowledge base. Users can effortlessly navigate vast data, explore academic papers, and research documents. ChatData empowers researchers, students, and knowledge enthusiasts to unlock the true potential of information retrieval.

noScribe

noScribe is an AI-based software designed for automated audio transcription, specifically tailored for transcribing interviews for qualitative social research or journalistic purposes. It is a free and open-source tool that runs locally on the user's computer, ensuring data privacy. The software can differentiate between speakers and supports transcription in 99 languages. It includes a user-friendly editor for reviewing and correcting transcripts. Developed by Kai Dröge, a PhD in sociology with a background in computer science, noScribe aims to streamline the transcription process and enhance the efficiency of qualitative analysis.

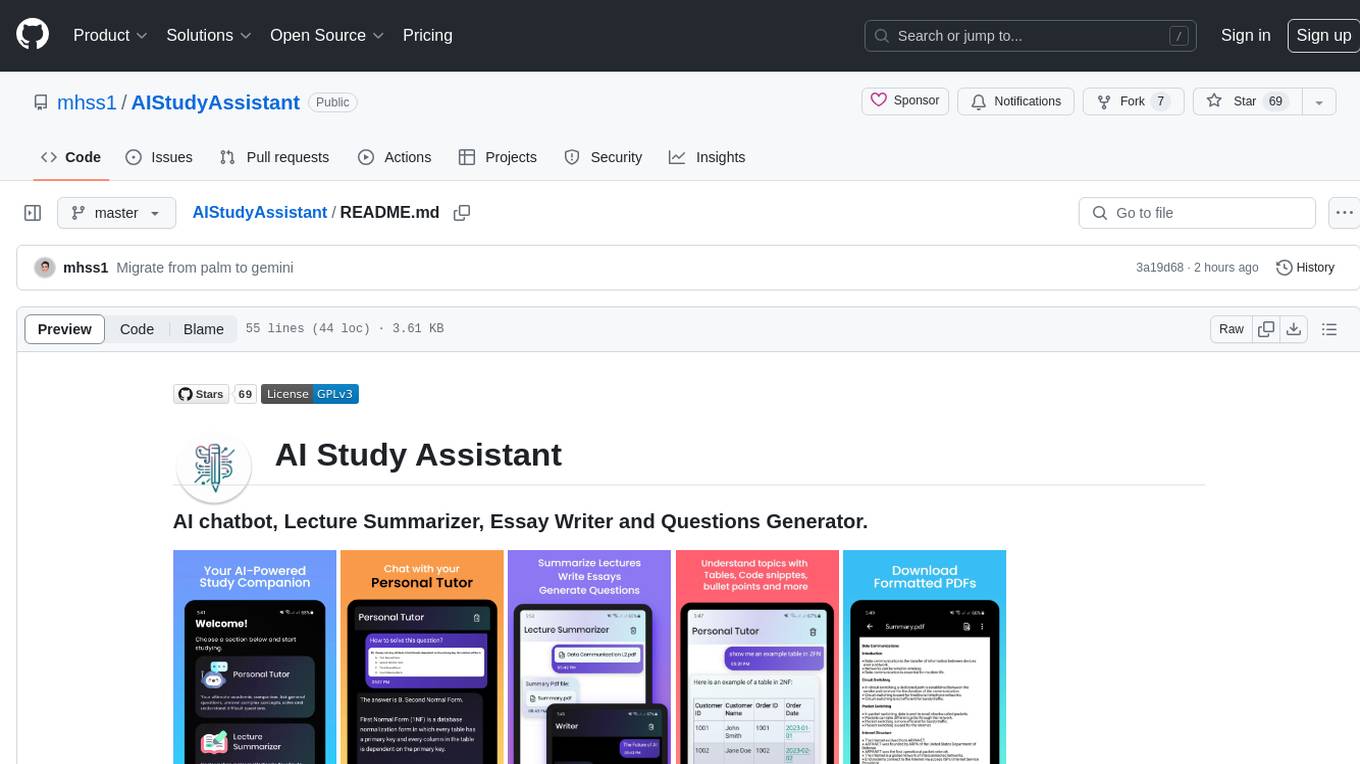

AIStudyAssistant

AI Study Assistant is an app designed to enhance learning experience and boost academic performance. It serves as a personal tutor, lecture summarizer, writer, and question generator powered by Google PaLM 2. Features include interacting with an AI chatbot, summarizing lectures, generating essays, and creating practice questions. The app is built using 100% Kotlin, Jetpack Compose, Clean Architecture, and MVVM design pattern, with technologies like Ktor, Room DB, Hilt, and Kotlin coroutines. AI Study Assistant aims to provide comprehensive AI-powered assistance for students in various academic tasks.

data-to-paper

Data-to-paper is an AI-driven framework designed to guide users through the process of conducting end-to-end scientific research, starting from raw data to the creation of comprehensive and human-verifiable research papers. The framework leverages a combination of LLM and rule-based agents to assist in tasks such as hypothesis generation, literature search, data analysis, result interpretation, and paper writing. It aims to accelerate research while maintaining key scientific values like transparency, traceability, and verifiability. The framework is field-agnostic, supports both open-goal and fixed-goal research, creates data-chained manuscripts, involves human-in-the-loop interaction, and allows for transparent replay of the research process.

k2

K2 (GeoLLaMA) is a large language model for geoscience, trained on geoscience literature and fine-tuned with knowledge-intensive instruction data. It outperforms baseline models on objective and subjective tasks. The repository provides K2 weights, core data of GeoSignal, GeoBench benchmark, and code for further pretraining and instruction tuning. The model is available on Hugging Face for use. The project aims to create larger and more powerful geoscience language models in the future.