Mooncake

Mooncake is the serving platform for Kimi, a leading LLM service provided by Moonshot AI.

Stars: 4802

Mooncake is a serving platform for Kimi, a leading LLM service provided by Moonshot AI. It features a KVCache-centric disaggregated architecture that separates prefill and decoding clusters, leveraging underutilized CPU, DRAM, and SSD resources of the GPU cluster. Mooncake's scheduler balances throughput and latency-related SLOs, with a prediction-based early rejection policy for highly overloaded scenarios. It excels in long-context scenarios, achieving up to a 525% increase in throughput in certain simulated scenarios and handling 75% more requests under real workloads.

README:

Mooncake is the serving platform for Kimi, a leading LLM service provided by Moonshot AI.

Now both the Transfer Engine and Mooncake Store are open-sourced!

This repository also hosts its technical report and the open-sourced traces.

- Feb 12, 2026: Mooncake Joins PyTorch Ecosystem. We are thrilled to announce that Mooncake has officially joined the PyTorch Ecosystem!

- Jan 28, 2026: FlexKV, a distributed KV store and cache system from Tencent and NVIDIA in collaboration with the community, now supports distributed KVCache reuse with the Mooncake Transfer Engine.

- Dec 27, 2025: Collaboration with ROLL! Check out the paper here.

- Dec 23, 2025: SGLang introduces Encode-Prefill-Decode (EPD) Disaggregation with Mooncake as a transfer backend. This integration allows decoupling compute-intensive multimodal encoders (e.g., Vision Transformers) from language model nodes, utilizing Mooncake's RDMA engine for zero-copy transfer of large multimodal embeddings.

- Dec 19, 2025: Mooncake Transfer Engine has been integrated into TensorRT LLM for KVCache transfer in PD-disaggregated inference.

- Dec 19, 2025: Mooncake Transfer Engine has been directly integrated into vLLM v1 as a KV Connector in PD-disaggregated setups.

- Nov 07, 2025: RBG + SGLang HiCache + Mooncake, a role-based out-of-the-box solution for cloud native deployment, which is elastic, scalable, and high-performance.

- Sept 18, 2025: Mooncake Store empowers vLLM Ascend by serving as the distributed KV cache pool backend.

- Sept 10, 2025: SGLang officially supports Mooncake Store as a hierarchical KV caching storage backend. The integration extends RadixAttention with multi-tier KV cache storage across device, host, and remote storage layers.

- Sept 10, 2025: The official & high-performance version of Mooncake P2P Store is open-sourced as checkpoint-engine. It has been successfully applied in K1.5 and K2 production training, updating Kimi-K2 model (1T parameters) across thousands of GPUs in ~20s.

- Aug 23, 2025: xLLM high-performance inference engine builds hybrid KV cache management based on Mooncake, supporting global KV cache management with intelligent offloading and prefetching.

- Aug 18, 2025: vLLM-Ascend integrates Mooncake Transfer Engine for KV cache registration and disaggregated prefill, enabling efficient distributed inference on Ascend NPUs.

- Jul 20, 2025: Mooncake powers the deployment of Kimi K2 on 128 H200 GPUs with PD disaggregation and large-scale expert parallelism, achieving 224k tokens/sec prefill throughput and 288k tokens/sec decode throughput.

- Jun 20, 2025: Mooncake becomes a PD disaggregation backend for LMDeploy.

- May 9, 2025: NIXL officially supports Mooncake Transfer Engine as a backend plugin.

- May 8, 2025: Mooncake and LMCache unite to pioneer a KVCache-centric LLM serving system.

- May 5, 2025: Supported by the Mooncake team, SGLang released guidance on deploying DeepSeek with PD disaggregation on 96 H100 GPUs.

- Apr 22, 2025: LMCache officially supports Mooncake Store as a remote connector.

- Apr 10, 2025: SGLang officially supports Mooncake Transfer Engine for disaggregated prefilling and KV cache transfer.

- Mar 7, 2025: We open-sourced the Mooncake Store, a distributed KVCache based on Transfer Engine. vLLM's xPyD disaggregated prefilling & decoding based on Mooncake Store will be released soon.

- Feb 25, 2025: Mooncake receives the Best Paper Award at FAST 2025!

- Feb 21, 2025: The updated traces used in our FAST'25 paper have been released.

- Dec 16, 2024: vLLM officially supports Mooncake Transfer Engine for disaggregated prefilling and KV cache transfer.

- Nov 28, 2024: We open-sourced the Transfer Engine, the central component of Mooncake. We also provide two demonstrations of Transfer Engine: a P2P Store and vLLM integration.

- July 9, 2024: We open-sourced the trace as a JSONL file.

- June 27, 2024: We published a series of Chinese blog posts with more discussion on Zhihu: 1, 2, 3, 4, 5, 6, 7.

- June 26, 2024: Initial technical report release.

Mooncake features a KVCache-centric disaggregated architecture that separates the prefill and decoding clusters. It also leverages the underutilized CPU, DRAM, and SSD resources of the GPU cluster to implement a disaggregated KVCache pool.

The core of Mooncake is its KVCache-centric scheduler, which balances maximizing overall effective throughput against meeting latency-related Service Level Objectives (SLOs). Traditional studies assume that all requests will be processed, but Mooncake has to operate in highly overloaded scenarios where that assumption does not hold. To mitigate this, we developed a prediction-based early rejection policy. Experiments show that Mooncake excels in long-context scenarios. Compared to the baseline method, Mooncake can achieve up to a 525% increase in throughput in certain simulated scenarios while adhering to SLOs. Under real workloads, Mooncake's architecture enables Kimi to handle 75% more requests.
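To make the early-rejection idea more concrete, here is a toy sketch in Python. It is not Mooncake's scheduler: the `Request` and `ClusterState` types, the linear prefill-time model, and every threshold are hypothetical, and the sketch only illustrates rejecting a request at admission time when the predicted load would break an SLO.

```python
from dataclasses import dataclass


@dataclass
class Request:
    input_tokens: int


@dataclass
class ClusterState:
    # All fields are illustrative assumptions, not Mooncake internals.
    queued_prefill_tokens: int    # tokens already waiting for prefill
    prefill_tokens_per_s: float   # assumed aggregate prefill speed
    predicted_decode_batch: int   # predicted decode batch size when prefill ends
    max_decode_batch: int         # largest batch assumed to still meet the decode SLO


def should_admit(req: Request, state: ClusterState, ttft_slo_s: float) -> bool:
    """Admit only if predicted TTFT and predicted decode load both fit the SLOs."""
    predicted_ttft = (state.queued_prefill_tokens + req.input_tokens) / state.prefill_tokens_per_s
    if predicted_ttft > ttft_slo_s:
        return False  # prefill alone would already miss the TTFT target
    if state.predicted_decode_batch + 1 > state.max_decode_batch:
        return False  # reject early rather than spend prefill on a request decode cannot serve
    return True


state = ClusterState(queued_prefill_tokens=40_000, prefill_tokens_per_s=10_000.0,
                     predicted_decode_batch=63, max_decode_batch=64)
print(should_admit(Request(input_tokens=8_000), state, ttft_slo_s=5.0))   # True
print(should_admit(Request(input_tokens=20_000), state, ttft_slo_s=5.0))  # False
```

The point of the sketch is the ordering: the decision is made before any prefill work is done, using a prediction of the state the request would meet later.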

Mooncake Core Component: Transfer Engine (TE)

The core of Mooncake is the Transfer Engine (TE), which provides a unified interface for batched data transfer across various storage devices and network links. Supporting multiple protocols including TCP, RDMA, CXL/shared-memory, and NVMe over Fabric (NVMe-oF), TE is designed to enable fast and reliable data transfer for AI workloads. Compared to Gloo (used by Distributed PyTorch) and traditional TCP, TE achieves significantly lower I/O latency, making it a superior solution for efficient data transmission.

P2P Store and Mooncake Store

Both P2P Store and Mooncake Store are built on the Transfer Engine and provide key/value caching for different scenarios. P2P Store focuses on sharing temporary objects (e.g., checkpoint files) across nodes in a cluster, preventing bandwidth saturation on a single machine. Mooncake Store, on the other hand, supports distributed pooled KVCache, specifically designed for xPyD disaggregation to enhance resource utilization and system performance.

Mooncake Integration with Leading LLM Inference Systems

Mooncake has been seamlessly integrated with several popular large language model (LLM) inference systems. Through collaboration with the vLLM and SGLang teams, Mooncake now officially supports prefill-decode disaggregation. By leveraging the high-efficiency communication capabilities of RDMA devices, Mooncake significantly improves inference efficiency in prefill-decode disaggregation scenarios, providing robust technical support for large-scale distributed inference tasks. In addition, Mooncake has been successfully integrated with SGLang's Hierarchical KV Caching, vLLM's prefill serving, and LMCache, augmenting KV cache management capabilities across large-scale inference scenarios.

Elastic Expert Parallelism Support

Mooncake adds elasticity and fault tolerance support for MoE model inference, enabling inference systems to remain responsive and recoverable in the event of GPU failures or changes in resource configuration. This functionality includes automatic faulty rank detection and can work with the EPLB module to dynamically route tokens to healthy ranks during inference.

Tensor-Centric Ecosystem

Mooncake establishes a full-stack, Tensor-oriented AI infrastructure where Tensors serve as the fundamental data carrier. The ecosystem spans from the Transfer Engine, which accelerates Tensor data movement across heterogeneous storage (DRAM/VRAM/NVMe), to the P2P Store and Mooncake Store for distributed management of Tensor objects (e.g., Checkpoints and KVCache), up to the Mooncake Backend enabling Tensor-based elastic distributed computing. This architecture is designed to maximize Tensor processing efficiency for large-scale model inference and training.

Use Transfer Engine Standalone (Guide)

Transfer Engine is a high-performance data transfer framework. It provides a unified interface for transferring data from DRAM, VRAM, or NVMe while hiding the hardware-related technical details. Transfer Engine supports multiple communication protocols including TCP, RDMA (InfiniBand/RoCEv2/eRDMA/NVIDIA GPUDirect), NVMe over Fabric (NVMe-oF), NVLink, HIP, CXL, and Ascend. For a complete list of supported protocols and a configuration guide, see the Supported Protocols Documentation.

- Efficient use of multiple RDMA NIC devices. Transfer Engine supports the use of multiple RDMA NIC devices to achieve the aggregation of transfer bandwidth.

- Topology aware path selection. Transfer Engine can select optimal devices based on the location (NUMA affinity, etc.) of both source and destination.

- More robust against temporary network errors. Once transmission fails, Transfer Engine will try to use alternative paths for data delivery automatically.
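The topology-aware selection and failover behaviour listed above can be pictured with a small Python sketch. It does not use the Transfer Engine API: the `Nic` type, the `candidate_paths` helper, and the device names are hypothetical, and the only idea shown is "prefer NICs on the same NUMA node as the buffer, and skip devices that have failed".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nic:
    name: str
    numa_node: int


def candidate_paths(nics: list[Nic], buffer_numa_node: int, failed: set[str]) -> list[Nic]:
    """Healthy NICs, with those on the buffer's NUMA node listed first."""
    healthy = [n for n in nics if n.name not in failed]
    # sorted() is stable, so NICs keep their original order within each group.
    return sorted(healthy, key=lambda n: n.numa_node != buffer_numa_node)


# Hypothetical device names, for illustration only.
nics = [Nic("mlx5_0", 0), Nic("mlx5_1", 0), Nic("mlx5_2", 1), Nic("mlx5_3", 1)]

print([n.name for n in candidate_paths(nics, buffer_numa_node=1, failed=set())])
# ['mlx5_2', 'mlx5_3', 'mlx5_0', 'mlx5_1']

# After a transmission failure on mlx5_2, the next candidates are tried instead.
print([n.name for n in candidate_paths(nics, buffer_numa_node=1, failed={"mlx5_2"})])
# ['mlx5_3', 'mlx5_0', 'mlx5_1']
```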

With 40 GB of data (equivalent to the size of the KVCache generated by 128k tokens in the LLaMA3-70B model), Mooncake Transfer Engine delivers up to 87 GB/s and 190 GB/s of bandwidth in 4×200 Gbps and 8×400 Gbps RoCE networks respectively, which are about 2.4x and 4.6x faster than the TCP protocol.
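The figures in the paragraph above can be sanity-checked with a few lines of arithmetic. The Llama 3 70B shape values below (80 layers, 8 KV heads, head dimension 128, 2-byte values) are assumptions brought in for this check and are not stated in this document; with them, 128k tokens come out at exactly 40 GiB, which the text rounds to 40 GB. The second part assumes the quoted bandwidths are aggregate figures and simply derives the line rate and the TCP bandwidth implied by the stated speedups.

```python
# Llama 3 70B shape values (assumed for this check, not stated in this document).
layers = 80
kv_heads = 8          # grouped-query attention
head_dim = 128
bytes_per_value = 2   # FP16/BF16
tokens = 128 * 1024

# The factor 2 covers the separate key and value tensors.
bytes_per_token = 2 * layers * kv_heads * head_dim * bytes_per_value
kvcache_gib = bytes_per_token * tokens / 2**30
print(bytes_per_token, kvcache_gib)  # 327680 40.0

# (links, Gbit/s per link, measured GB/s, speedup over TCP), all taken from the text.
for links, gbps, measured_gb_s, speedup in [(4, 200, 87, 2.4), (8, 400, 190, 4.6)]:
    line_rate_gb_s = links * gbps / 8              # Gbit/s -> GB/s
    share_of_line_rate = measured_gb_s / line_rate_gb_s
    implied_tcp_gb_s = measured_gb_s / speedup
    print(f"{links}x{gbps} Gbps: line rate {line_rate_gb_s:.0f} GB/s, "
          f"share used {share_of_line_rate:.3f}, implied TCP {implied_tcp_gb_s:.2f} GB/s")
```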

P2P Store (Guide)

P2P Store is built on the Transfer Engine and supports sharing temporary objects between peer nodes in a cluster. P2P Store is ideal for scenarios like checkpoint transfer, where data needs to be rapidly and efficiently shared across a cluster. P2P Store has been used in the checkpoint transfer service of Moonshot AI.

- Decentralized architecture. P2P Store leverages a pure client-side architecture with global metadata managed by the etcd service.

- Efficient data distribution. Designed to enhance the efficiency of large-scale data distribution, P2P Store avoids bandwidth saturation issues by allowing replicated nodes to share data directly. This reduces the CPU/RDMA NIC pressures of data providers (e.g., trainers).
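Why letting replicated nodes share data relieves the provider can be seen in an idealized round model, sketched below in Python. This is not P2P Store's actual distribution algorithm: it assumes equal link speeds, that each node sends one full copy per round, and that every node that already holds the object can serve a new receiver.

```python
import math


def rounds_single_source(receivers: int) -> int:
    """Only the provider sends: one full copy per round, one receiver at a time."""
    return receivers


def rounds_peer_sharing(receivers: int) -> int:
    """Every holder serves one new receiver per round, so holders double each round.

    After r rounds there are 2**r holders (provider included), i.e. 2**r - 1 receivers.
    """
    return math.ceil(math.log2(receivers + 1))


for n in (1, 7, 63, 1000):
    print(n, rounds_single_source(n), rounds_peer_sharing(n))
# 1 1 1
# 7 7 3
# 63 63 6
# 1000 1000 10
```

In this model the provider sends at most one copy per round in both cases, but with peer sharing it is busy for about log2(N) rounds instead of N.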

Mooncake Store (Guide)

Mooncake Store is a distributed KVCache storage engine specialized for LLM inference based on Transfer Engine. It is the central component of the KVCache-centric disaggregated architecture. The goal of Mooncake Store is to store the reusable KV caches across various locations in an inference cluster. Mooncake Store is supported by SGLang's Hierarchical KV Caching and vLLM's prefill serving, and is now integrated with LMCache to provide enhanced KVCache management capabilities.

- Multi-replica support: Mooncake Store supports storing multiple data replicas for the same object, effectively alleviating hotspots in access pressure.

- High bandwidth utilization: Mooncake Store supports striping and parallel I/O transfer of large objects, fully utilizing multi-NIC aggregated bandwidth for high-speed data reads and writes.
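As a rough picture of striping, the Python sketch below splits an object into fixed-size stripes and assigns them round-robin to NICs so the pieces can move in parallel. The `stripe_plan` helper, the stripe size, and the round-robin policy are illustrative assumptions; Mooncake Store's real placement logic is not described in this document.

```python
def stripe_plan(object_size: int, stripe_size: int, nic_count: int) -> list[tuple[int, int, int]]:
    """Split [0, object_size) into stripes and assign them round-robin to NICs.

    Returns a list of (offset, length, nic_index) tuples.
    """
    if stripe_size <= 0 or nic_count <= 0:
        raise ValueError("stripe_size and nic_count must be positive")
    plan = []
    for i, offset in enumerate(range(0, object_size, stripe_size)):
        length = min(stripe_size, object_size - offset)  # the last stripe may be short
        plan.append((offset, length, i % nic_count))
    return plan


print(stripe_plan(object_size=10, stripe_size=4, nic_count=2))
# [(0, 4, 0), (4, 4, 1), (8, 2, 0)]
```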

SGLang Integration (Guide)

SGLang officially supports Mooncake Store as a HiCache storage backend. This integration enables scalable KV cache retention and high-performance access for large-scale LLM serving scenarios.

- Hierarchical KV Caching: Mooncake Store serves as an external storage backend in SGLang's HiCache system, extending RadixAttention with multi-level KV cache storage across device, host, and remote storage layers.

- Flexible Cache Management: Supports multiple cache policies including write-through, write-through-selective, and write-back modes, with intelligent prefetching strategies for optimal performance.

- Comprehensive Optimizations: Features advanced data plane optimizations including page-first memory layout for improved I/O efficiency, zero-copy mechanisms for reduced memory overhead, GPU-assisted I/O kernels delivering fast CPU-GPU transfers, and layer-wise overlapping for concurrent KV cache loading while computation executes.

- Elastic Expert Parallel: Mooncake's collective communication backend and expert parallel kernels are integrated into SGLang to enable fault-tolerant expert parallel inference (sglang#11657).

- Significant Performance Gains: The multi-turn benchmark demonstrates substantial performance improvements over the non-HiCache setting. See our benchmark report for more details.

- Community Feedback: Effective KV caching significantly reduces TTFT by eliminating redundant and costly re-computation. Integrating SGLang HiCache with the Mooncake service enables scalable KV cache retention and high-performance access. In our evaluation, we tested the DeepSeek-R1-671B model under PD-disaggregated deployment using in-house online requests sampled from a general QA scenario. On average, cache hits achieved an 84% reduction in TTFT compared to full re-computation. – Ant Group
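The multi-level lookup and the write-through policy mentioned in the list above can be illustrated with a toy cache in Python. This is not SGLang's HiCache or the Mooncake Store API: the `TieredKVCache` class and its methods are hypothetical, and all three tiers are plain dictionaries.

```python
from typing import Optional


class TieredKVCache:
    """Toy three-tier cache: device -> host -> remote (all plain dicts here)."""

    def __init__(self) -> None:
        self.tiers: dict[str, dict[str, bytes]] = {"device": {}, "host": {}, "remote": {}}

    def put_write_through(self, key: str, value: bytes) -> None:
        # Write-through: every tier is updated on each write.
        for store in self.tiers.values():
            store[key] = value

    def get(self, key: str) -> Optional[tuple[str, bytes]]:
        """Return (tier_that_hit, value) and copy the entry into the faster tiers."""
        names = list(self.tiers)
        for i, name in enumerate(names):
            if key in self.tiers[name]:
                value = self.tiers[name][key]
                for faster in names[:i]:
                    self.tiers[faster][key] = value
                return name, value
        return None  # miss: the caller has to recompute the KV cache


cache = TieredKVCache()
cache.tiers["remote"]["prefix-abc"] = b"kv-bytes"
print(cache.get("prefix-abc"))  # ('remote', b'kv-bytes')
print(cache.get("prefix-abc"))  # ('device', b'kv-bytes')
print(cache.get("missing"))     # None
```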

vLLM Integration (Guide v0.2)

To optimize LLM inference, the vLLM community is working on supporting disaggregated prefilling (PR 10502). This feature allows the prefill phase and the decode phase to run in separate processes. vLLM uses NCCL and Gloo as the transport layer by default, but it currently cannot efficiently decouple the two phases across different machines.

We have implemented vLLM integration, which uses Transfer Engine as the network layer instead of nccl and gloo, to support inter-node KVCache transfer (PR 10884). Transfer Engine provides simpler interfaces and more efficient use of RDMA devices.

We will soon release the new vLLM integration based on Mooncake Store, which supports xPyD prefill/decode disaggregation.

Update[Dec 16, 2024]: Here is the latest vLLM Integration (Guide v0.2) that is based on vLLM's main branch.

By supporting Topology Aware Path Selection and multi-card bandwidth aggregation, the mean TTFT of vLLM with Transfer Engine is up to 25% lower than with traditional TCP-based transports. In the future, we will further improve TTFT through GPUDirect RDMA and zero-copy.

| Backend/Setting | Output Token Throughput (tok/s) | Total Token Throughput (tok/s) | Mean TTFT (ms) | Median TTFT (ms) | P99 TTFT (ms) |
|---|---|---|---|---|---|
| Transfer Engine (RDMA) | 12.06 | 2042.74 | 1056.76 | 635.00 | 4006.59 |
| TCP | 12.05 | 2041.13 | 1414.05 | 766.23 | 6035.36 |

- Click here to access detailed benchmark results.
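The relative TTFT improvements can be recomputed directly from the table. The short Python snippet below uses only the six TTFT values shown there; the `ttft_reduction` helper is just a name chosen for this example.

```python
# TTFT values in milliseconds, copied from the table above.
rdma = {"mean": 1056.76, "median": 635.00, "p99": 4006.59}
tcp = {"mean": 1414.05, "median": 766.23, "p99": 6035.36}


def ttft_reduction(metric: str) -> float:
    """Fraction by which Transfer Engine (RDMA) lowers TTFT relative to TCP."""
    return (tcp[metric] - rdma[metric]) / tcp[metric]


for metric in rdma:
    print(f"{metric} TTFT: {ttft_reduction(metric):.1%} lower with Transfer Engine (RDMA)")
# mean TTFT: 25.3% lower with Transfer Engine (RDMA)
# median TTFT: 17.1% lower with Transfer Engine (RDMA)
# p99 TTFT: 33.6% lower with Transfer Engine (RDMA)
```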

More advanced features are coming soon, so stay tuned!

Mooncake is designed and optimized for high-speed RDMA networks. Though Mooncake supports TCP-only data transfer, we strongly recommend that users evaluate the functionality and performance of Mooncake with RDMA network support.

The following need to be installed before running any component of Mooncake:

- RDMA Driver & SDK, such as Mellanox OFED.

- Python 3.10; a virtual environment is recommended.

- CUDA 12.1 and above, including NVIDIA GPUDirect Storage Support, if the package is built with -DUSE_CUDA (disabled by default). You may install them from here.

The simplest way to use Mooncake Transfer Engine is using pip:

For CUDA-enabled systems:

    pip install mooncake-transfer-engine

For non-CUDA systems:

    pip install mooncake-transfer-engine-non-cuda

Important:

- The CUDA version (mooncake-transfer-engine) includes Mooncake-EP and GPU topology detection, requiring CUDA 12.1+.

- The non-CUDA version (mooncake-transfer-engine-non-cuda) is for environments without CUDA dependencies.

- If users encounter problems such as missing lib*.so, they should uninstall the package they installed and build the binaries manually.
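Before switching between the two packages or building manually, it can help to check which one is currently installed. The Python sketch below uses only the standard library's `importlib.metadata` and the two distribution names from the pip commands above; the `installed_version` helper is a name chosen for this example, not part of Mooncake.

```python
from importlib import metadata
from typing import Optional

# Distribution names taken from the pip commands above.
CANDIDATES = ("mooncake-transfer-engine", "mooncake-transfer-engine-non-cuda")


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


for name in CANDIDATES:
    print(f"{name}: {installed_version(name) or 'not installed'}")
```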

Mooncake supports Docker-based deployment; see the Build Guide for details.

The following are additional dependencies for building Mooncake:

- Build essentials, including gcc, g++ (9.4+) and cmake (3.16+).

- Go 1.20+, if you want to build with -DWITH_P2P_STORE, -DUSE_ETCD (enabled by default to use etcd as metadata servers), or -DSTORE_USE_ETCD (use etcd for the failover of the store master).

- CUDA 12.1 and above, including NVIDIA GPUDirect Storage Support, if the package is built with -DUSE_CUDA. This is NOT included in the dependencies.sh script. You may install them from here.

- [Optional] Rust Toolchain, if you want to build with -DWITH_RUST_EXAMPLE. This is NOT included in the dependencies.sh script.

- [Optional] hiredis, if you want to build with -DUSE_REDIS to use Redis instead of etcd as metadata servers.

- [Optional] curl, if you want to build with -DUSE_HTTP to use HTTP instead of etcd as metadata servers.

The build and installation steps are as follows:

- Retrieve source code from GitHub repo

      git clone https://github.com/kvcache-ai/Mooncake.git
      cd Mooncake

- Install dependencies

      bash dependencies.sh

- Compile Mooncake and examples

      mkdir build
      cd build
      cmake ..
      make -j
      sudo make install # optional, make it ready to be used by vLLM/SGLang

- [x] First release of Mooncake and integrate with latest vLLM

- [ ] Share KV caches across multiple serving engines

- [ ] User and developer documentation

    {
        "timestamp": 27482,
        "input_length": 6955,
        "output_length": 52,
        "hash_ids": [46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 2353, 2354]
    }
    {
        "timestamp": 30535,
        "input_length": 6472,
        "output_length": 26,
        "hash_ids": [46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 2366]
    }

The above presents two samples from our trace dataset. The trace includes the timing of request arrivals, the number of input tokens, the number of output tokens, and the remapped block hash. To protect our customers' privacy, we applied several mechanisms to remove user-related information while preserving the dataset's utility for simulated evaluation. More descriptions of the trace (e.g., up to 50% cache hit ratio) can be found in Section 4 of the technical report.
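To show how the remapped block hashes can be used in a simulated evaluation, the Python sketch below parses the two sample records and counts how many leading blocks they share. The block size of 512 tokens is an assumption that is not stated in this section; it is consistent with both samples (the number of hash IDs equals the input length divided by 512, rounded up), and the script checks that. The `shared_prefix_blocks` helper is a name chosen for this example.

```python
import json
import math

# The two sample records from above, written as JSONL (one object per line).
TRACE_JSONL = """\
{"timestamp": 27482, "input_length": 6955, "output_length": 52, "hash_ids": [46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 2353, 2354]}
{"timestamp": 30535, "input_length": 6472, "output_length": 26, "hash_ids": [46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 2366]}
"""

BLOCK_TOKENS = 512  # assumption: tokens per hashed block, not stated in this section


def shared_prefix_blocks(a: list[int], b: list[int]) -> int:
    """Number of leading block hashes two requests have in common."""
    count = 0
    for x, y in zip(a, b):
        if x != y:
            break
        count += 1
    return count


first, second = (json.loads(line) for line in TRACE_JSONL.splitlines())

# Sanity check of the block-size assumption against both samples.
for rec in (first, second):
    assert math.ceil(rec["input_length"] / BLOCK_TOKENS) == len(rec["hash_ids"])

shared = shared_prefix_blocks(first["hash_ids"], second["hash_ids"])
reusable_tokens = shared * BLOCK_TOKENS
print(shared, reusable_tokens)  # 12 6144
print(f"{reusable_tokens / second['input_length']:.1%} of the second prompt could reuse cached blocks")
```

Under these assumptions, a prefix cache that still holds the first request's blocks would let the second request skip prefill for its first 12 blocks.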

Update[Feb 21, 2025]: The updated traces used in our FAST'25 paper have been released! Please refer to the paper's appendix (found here) for more details.

Please kindly cite our paper if you find the paper or the traces useful:

    @article{qin2025mooncake_tos,
      author = {Qin Ruoyu and Li Zheming and He Weiran and Cui Jialei and Tang Heyi and Ren Feng and Ma Teng and Cai Shangming and Zhang Yineng and Zhang Mingxing and Wu Yongwei and Zheng Weimin and Xu Xinran},
      title = {Mooncake: A KVCache-centric Disaggregated Architecture for LLM Serving},
      year = {2025},
      publisher = {Association for Computing Machinery},
      address = {New York, NY, USA},
      issn = {1553-3077},
      url = {https://doi.org/10.1145/3773772},
      doi = {10.1145/3773772},
      journal = {ACM Trans. Storage},
      month = {nov},
      keywords = {Machine learning system, LLM serving, KVCache},
    }

    @inproceedings{qin2025mooncake,
      author = {Ruoyu Qin and Zheming Li and Weiran He and Jialei Cui and Feng Ren and Mingxing Zhang and Yongwei Wu and Weimin Zheng and Xinran Xu},
      title = {Mooncake: Trading More Storage for Less Computation {\textemdash} A {KVCache-centric} Architecture for Serving {LLM} Chatbot},
      booktitle = {23rd USENIX Conference on File and Storage Technologies (FAST 25)},
      year = {2025},
      isbn = {978-1-939133-45-8},
      address = {Santa Clara, CA},
      pages = {155--170},
      url = {https://www.usenix.org/conference/fast25/presentation/qin},
      publisher = {USENIX Association},
      month = {feb},
    }

    @article{qin2024mooncake_arxiv,
      title = {Mooncake: A KVCache-centric Disaggregated Architecture for LLM Serving},
      author = {Ruoyu Qin and Zheming Li and Weiran He and Mingxing Zhang and Yongwei Wu and Weimin Zheng and Xinran Xu},
      year = {2024},
      url = {https://arxiv.org/abs/2407.00079},
    }

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Mooncake

Similar Open Source Tools

Mooncake

Mooncake is a serving platform for Kimi, a leading LLM service provided by Moonshot AI. It features a KVCache-centric disaggregated architecture that separates prefill and decoding clusters, leveraging underutilized CPU, DRAM, and SSD resources of the GPU cluster. Mooncake's scheduler balances throughput and latency-related SLOs, with a prediction-based early rejection policy for highly overloaded scenarios. It excels in long-context scenarios, achieving up to a 525% increase in throughput while handling 75% more requests under real workloads.

dash-infer

DashInfer is a C++ runtime tool designed to deliver production-level implementations highly optimized for various hardware architectures, including x86 and ARMv9. It supports Continuous Batching and NUMA-Aware capabilities for CPU, and can fully utilize modern server-grade CPUs to host large language models (LLMs) up to 14B in size. With lightweight architecture, high precision, support for mainstream open-source LLMs, post-training quantization, optimized computation kernels, NUMA-aware design, and multi-language API interfaces, DashInfer provides a versatile solution for efficient inference tasks. It supports x86 CPUs with AVX2 instruction set and ARMv9 CPUs with SVE instruction set, along with various data types like FP32, BF16, and InstantQuant. DashInfer also offers single-NUMA and multi-NUMA architectures for model inference, with detailed performance tests and inference accuracy evaluations available. The tool is supported on mainstream Linux server operating systems and provides documentation and examples for easy integration and usage.

uccl

UCCL is a command-line utility tool designed to simplify the process of converting Unix-style file paths to Windows-style file paths and vice versa. It provides a convenient way for developers and system administrators to handle file path conversions without the need for manual adjustments. With UCCL, users can easily convert file paths between different operating systems, making it a valuable tool for cross-platform development and file management tasks.

veScale

veScale is a PyTorch Native LLM Training Framework. It provides a set of tools and components to facilitate the training of large language models (LLMs) using PyTorch. veScale includes features such as 4D parallelism, fast checkpointing, and a CUDA event monitor. It is designed to be scalable and efficient, and it can be used to train LLMs on a variety of hardware platforms.

UltraRAG

The UltraRAG framework is a researcher and developer-friendly RAG system solution that simplifies the process from data construction to model fine-tuning in domain adaptation. It introduces an automated knowledge adaptation technology system, supporting no-code programming, one-click synthesis and fine-tuning, multidimensional evaluation, and research-friendly exploration work integration. The architecture consists of Frontend, Service, and Backend components, offering flexibility in customization and optimization. Performance evaluation in the legal field shows improved results compared to VanillaRAG, with specific metrics provided. The repository is licensed under Apache-2.0 and encourages citation for support.

clearml

ClearML is a suite of tools designed to streamline the machine learning workflow. It includes an experiment manager, MLOps/LLMOps, data management, and model serving capabilities. ClearML is open-source and offers a free tier hosting option. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm. ClearML provides extensive logging capabilities, including source control info, execution environment, hyper-parameters, and experiment outputs. It also offers automation features, such as remote job execution and pipeline creation. ClearML is designed to be easy to integrate, requiring only two lines of code to add to existing scripts. It aims to improve collaboration, visibility, and data transparency within ML teams.

clearml

ClearML is an auto-magical suite of tools designed to streamline AI workflows. It includes modules for experiment management, MLOps/LLMOps, data management, model serving, and more. ClearML offers features like experiment tracking, model serving, orchestration, and automation. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm for remote debugging. ClearML aims to simplify collaboration, automate processes, and enhance visibility in AI projects.

tinyllm

tinyllm is a lightweight framework designed for developing, debugging, and monitoring LLM and Agent powered applications at scale. It aims to simplify code while enabling users to create complex agents or LLM workflows in production. The core classes, Function and FunctionStream, standardize and control LLM, ToolStore, and relevant calls for scalable production use. It offers structured handling of function execution, including input/output validation, error handling, evaluation, and more, all while maintaining code readability. Users can create chains with prompts, LLM models, and evaluators in a single file without the need for extensive class definitions or spaghetti code. Additionally, tinyllm integrates with various libraries like Langfuse and provides tools for prompt engineering, observability, logging, and finite state machine design.

kvcached

kvcached is a new KV cache management system that supports on-demand KV cache allocation. It implements the concept of GPU virtual memory, allowing applications to reserve virtual address space without immediately committing physical memory. Physical memory is then automatically allocated and mapped as needed at runtime. This capability allows multiple LLMs to run concurrently on a single GPU or a group of GPUs (TP) and flexibly share the GPU memory, significantly improving GPU utilization and reducing memory fragmentation. kvcached is compatible with popular LLM serving engines, including SGLang and vLLM.

eole

EOLE is an open language modeling toolkit based on PyTorch. It aims to provide a research-friendly approach with a comprehensive yet compact and modular codebase for experimenting with various types of language models. The toolkit includes features such as versatile training and inference, dynamic data transforms, comprehensive large language model support, advanced quantization, efficient finetuning, flexible inference, and tensor parallelism. EOLE is a work in progress with ongoing enhancements in configuration management, command line entry points, reproducible recipes, core API simplification, and plans for further simplification, refactoring, inference server development, additional recipes, documentation enhancement, test coverage improvement, logging enhancements, and broader model support.

fuse-med-ml

FuseMedML is a Python framework designed to accelerate machine learning-based discovery in the medical field by promoting code reuse. It provides a flexible design concept where data is stored in a nested dictionary, allowing easy handling of multi-modality information. The framework includes components for creating custom models, loss functions, metrics, and data processing operators. Additionally, FuseMedML offers 'batteries included' key components such as fuse.data for data processing, fuse.eval for model evaluation, and fuse.dl for reusable deep learning components. It supports PyTorch and PyTorch Lightning libraries and encourages the creation of domain extensions for specific medical domains.

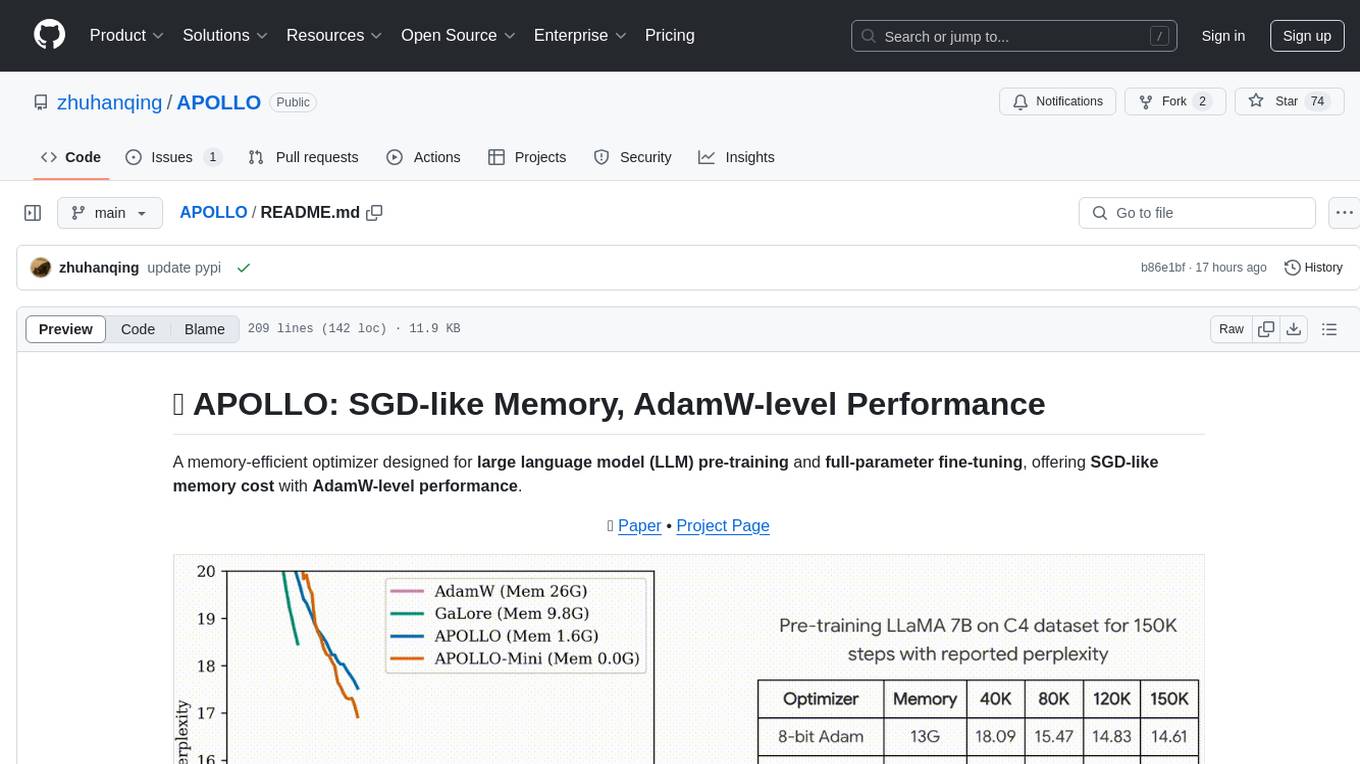

APOLLO

APOLLO is a memory-efficient optimizer designed for large language model (LLM) pre-training and full-parameter fine-tuning. It offers SGD-like memory cost with AdamW-level performance. The optimizer integrates low-rank approximation and optimizer state redundancy reduction to achieve significant memory savings while maintaining or surpassing the performance of Adam(W). Key contributions include structured learning rate updates for LLM training, approximated channel-wise gradient scaling in a low-rank auxiliary space, and minimal-rank tensor-wise gradient scaling. APOLLO aims to optimize memory efficiency during training large language models.

cleanlab

Cleanlab helps you **clean** data and **lab** els by automatically detecting issues in a ML dataset. To facilitate **machine learning with messy, real-world data** , this data-centric AI package uses your _existing_ models to estimate dataset problems that can be fixed to train even _better_ models.

swiftide

Swiftide is a fast, streaming indexing and query library tailored for Retrieval Augmented Generation (RAG) in AI applications. It is built in Rust, utilizing parallel, asynchronous streams for blazingly fast performance. With Swiftide, users can easily build AI applications from idea to production in just a few lines of code. The tool addresses frustrations around performance, stability, and ease of use encountered while working with Python-based tooling. It offers features like fast streaming indexing pipeline, experimental query pipeline, integrations with various platforms, loaders, transformers, chunkers, embedders, and more. Swiftide aims to provide a platform for data indexing and querying to advance the development of automated Large Language Model (LLM) applications.

petals

Petals is a tool that allows users to run large language models at home in a BitTorrent-style manner. It enables fine-tuning and inference up to 10x faster than offloading. Users can generate text with distributed models like Llama 2, Falcon, and BLOOM, and fine-tune them for specific tasks directly from their desktop computer or Google Colab. Petals is a community-run system that relies on people sharing their GPUs to increase its capacity and offer a distributed network for hosting model layers.

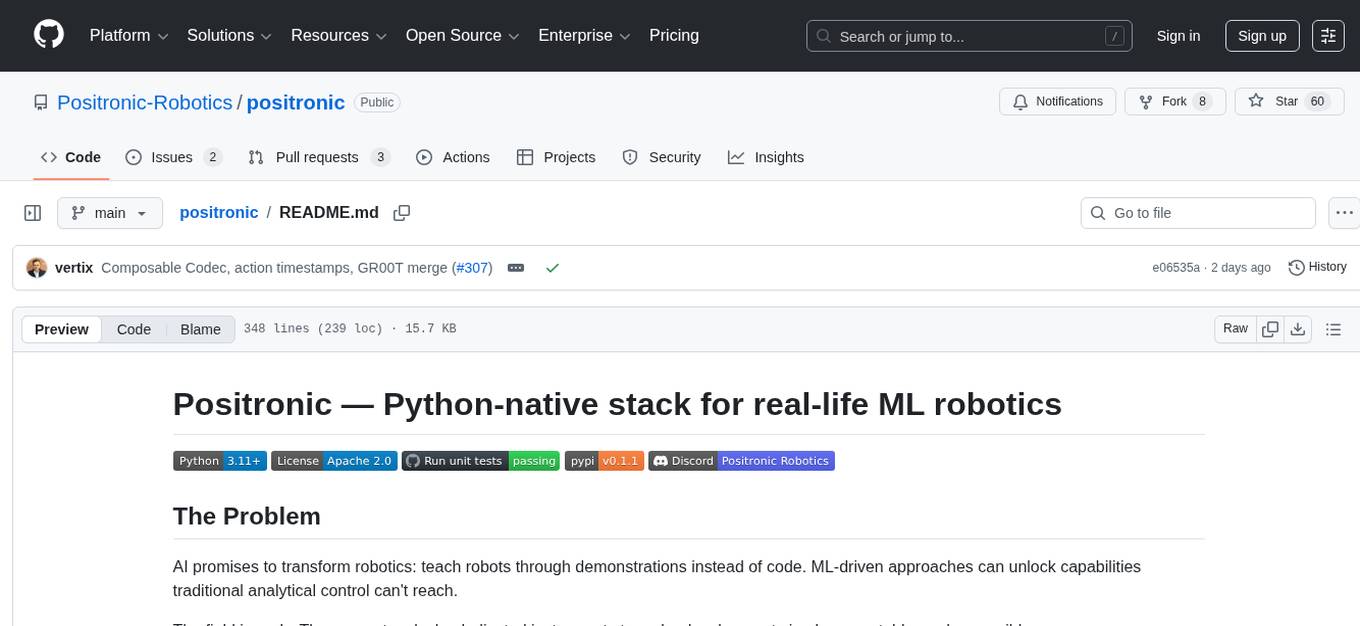

positronic

Positronic is an end-to-end toolkit for building ML-driven robotics systems, aiming to simplify data collection, messy data handling, and complex deployment in the field of robotics. It provides a Python-native stack for real-life ML robotics, covering hardware integration, dataset curation, policy training, deployment, and monitoring. The toolkit is designed to make professional-grade ML robotics approachable, without the need for ROS. Positronic offers solutions for data ops, hardware drivers, unified inference API, and iteration workflows, enabling teams to focus on developing manipulation systems for robots.

For similar tasks

Mooncake

Mooncake is a serving platform for Kimi, a leading LLM service provided by Moonshot AI. It features a KVCache-centric disaggregated architecture that separates prefill and decoding clusters, leveraging underutilized CPU, DRAM, and SSD resources of the GPU cluster. Mooncake's scheduler balances throughput and latency-related SLOs, with a prediction-based early rejection policy for highly overloaded scenarios. It excels in long-context scenarios, achieving up to a 525% increase in throughput while handling 75% more requests under real workloads.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated ChatGPT plugin by E2B that provides a code interpreter on steroids. It gives ChatGPT access to a sandboxed cloud environment where it can run any code, access a Linux OS, install programs, use the filesystem, run processes, and access the internet. The plugin exposes commands to run shell commands, read files, and write files, which makes it possible to run different languages, install programs, start servers, deploy websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, reduce costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose; prerequisites include Node.js 18+, Docker, and the GraphQL Language Feature Support VSCode extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains the shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services such as Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source, Kubernetes-native distributed dataset orchestrator and accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, a scalable cache runtime, automated data operations, and elasticity and scheduling, and it is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. Features include remote file access acceleration, machine learning, PVC acceleration, dataset preloading, and on-the-fly dataset cache scaling. Contributions are welcome, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool that lets AI developers quickly deploy AI algorithm models and engines. Models and engines integrated with AIGES can be hosted on the Athena Serving Framework and use its supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the delivery of AI algorithm models and engines as cloud services, and its cloud-native architecture provides multiple guarantees for service stability. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.