learn-agentic-ai

Learn Agentic AI using Dapr Agentic Cloud Ascent (DACA) Design Pattern and Agent-Native Cloud Technologies: OpenAI Agents SDK, Memory, MCP, A2A, Knowledge Graphs, Dapr, Rancher Desktop, and Kubernetes.

Stars: 3661

Learn Agentic AI is a repository that is part of the Panaversity Certified Agentic and Robotic AI Engineer program. It covers AI-201 and AI-202 courses, providing fundamentals and advanced knowledge in Agentic AI. The repository includes video playlists, projects, and project submission guidelines for students to enhance their understanding and skills in the field of AI engineering.

README:

This repo is part of the Panaversity Certified Agentic & Robotic AI Engineer program. You can also review the certification and course details in the program guide. This repo provides learning material for Agentic AI and Cloud courses.

Pakistan must place smart, early bets on the technologies and talent that will define the agentic AI era—because we intend to train millions of agentic-AI developers across the country and abroad, and launch startups at scale (ambitious, yes—but coffee is cheaper than regret).

We believe the future of AI is agentic: systems that plan, coordinate tools, and take actions to deliver outcomes, not just answers (aka “from chat to getting things done”—and ideally without breaking anything valuable). This hypothesis guides our curriculum design, tooling choices, and venture focus.

Our bet for large-scale agentic systems is a cloud-native stack: Kubernetes for orchestration, Dapr (Actors, Workflows, and Agents) for reliable micro-primitives, and Ray for elastic distributed compute. Together, these provide the building blocks for durable, observable, horizontally scalable agent swarms.

Most AI pilots fail not because the models are incapable, but because teams don’t know how to integrate AI into workflows, controls, and economics. Recent coverage of an MIT study reports that ~95% of enterprise gen-AI implementations show no measurable P&L impact, largely due to poor problem selection and integration practices rather than model quality. Our program is designed to close this gap with workflow design, safety guardrails, and ROI-first delivery.

Related coverage: “An MIT report that 95% of AI pilots fail spooked investors. But it’s the reason why those pilots failed that should make the C-suite anxious.”

The next web is a fabric of interoperable agents coordinating via open protocols—MCP for standardized tool/context access, A2A for authenticated agent-to-agent collaboration, and NANDA for identity, authorization, and verifiable audit. These emerging standards enable composable automation across apps, devices, and clouds—shifting the browser from a tab list to an outcome orchestrator with trust and consent built in (finally, fewer tabs, more results).

- Talent engine: hands-on training in agentic patterns (planning, tools, memory, evaluation), workflow design, and safety—tied to real industry use-cases (because “Hello, World” doesn’t move P&L).

- Reference stack: Kubernetes + Dapr + Ray blueprints with observability, guardrails, and cost controls—shippable by small teams (and auditable by large ones).

- Protocol readiness: MCP/A2A/NANDA-aware agent designs to ensure our solutions interoperate as the standards mature (future-proof beats future-guess).

If any hypothesis is wrong, we’ll measure, publish, and pivot fast—because the only unforgivable error is not learning.

“How do we design agentic AI systems that can handle 10 million concurrent AI agents without failing?”

Note: The challenge is intensified as we must guide our students to solve this issue with minimal financial resources available during training.

Kubernetes with Dapr can theoretically handle 10 million concurrent agents in an agentic AI system without failing, but achieving this requires extensive optimization, significant infrastructure, and careful engineering. While direct evidence at this scale is limited, logical extrapolation from existing benchmarks, Kubernetes’ scalability, and Dapr’s actor model supports feasibility, especially with rigorous tuning and resource allocation.

Condensed Argument with Proof and Logic:

Kubernetes Scalability:

- Evidence: Kubernetes supports up to 5,000 nodes and 150,000 pods per cluster (Kubernetes docs), with real-world examples like PayPal scaling to 4,000 nodes and 200,000 pods (InfoQ, 2023) and KubeEdge managing 100,000 edge nodes and 1 million pods (KubeEdge case studies). OpenAI’s 2,500-node cluster for AI workloads (OpenAI blog, 2022) shows Kubernetes can handle compute-intensive tasks.

- Logic: For 10 million users, a cluster of 5,000–10,000 nodes (e.g., AWS g5 instances with GPUs) can distribute workloads. Each node can run hundreds of pods, and Kubernetes’ horizontal pod autoscaling (HPA) dynamically adjusts to demand. Bottlenecks (e.g., API server, networking) can be mitigated by tuning etcd, using high-performance CNIs like Cilium, and optimizing DNS.

Dapr’s Efficiency for Agentic AI:

- Evidence: Dapr’s actor model supports thousands of virtual actors per CPU core with double-digit millisecond latency (Dapr docs, 2024). Case studies show Dapr handling millions of events, e.g., Tempestive’s IoT platform processing billions of messages (Dapr blog, 2023) and DeFacto’s system managing 3,700 events/second (320 million daily) on Kubernetes with Kafka (Microsoft case study, 2022).

- Logic: Agentic AI relies on stateful, low-latency agents. Dapr Agents, built on the actor model, can represent 10 million users as actors, distributed across a Kubernetes cluster. Dapr’s state management (e.g., Redis) and pub/sub messaging (e.g., Kafka) ensure efficient coordination and resilience, with automatic retries preventing failures. Sharding state stores and message brokers scales to millions of operations/second.

Handling AI Workloads:

- Evidence: LLM inference frameworks like vLLM and TGI serve thousands of requests/second per GPU (vLLM benchmarks, 2024). Kubernetes orchestrates GPU workloads effectively, as seen in NVIDIA’s AI platform scaling to thousands of GPUs (NVIDIA case study, 2023).

- Logic: Assuming each user generates 1 request/second requiring 0.01 GPU, 10 million users need ~100,000 GPUs. Batching, caching, and model parallelism reduce this to a feasible ~10,000–20,000 GPUs, achievable in hyperscale clouds (e.g., AWS). Kubernetes’ resource scheduling ensures optimal GPU utilization.

Networking and Storage:

- Evidence: EMQX on Kubernetes handled 1 million concurrent connections with tuning (EMQX blog, 2024). C10M benchmarks (2013) achieved 10 million connections using optimized stacks. Dapr’s state stores (e.g., Redis) support millions of operations/second (Redis benchmarks, 2024).

- Logic: 10 million connections require ~100–1,000 Gbps bandwidth, supported by modern clouds. High-throughput databases (e.g., CockroachDB) and caching (e.g., Redis Cluster) handle ~10 GB of state data for 10 million users (1 KB/user). Kernel bypass (e.g., DPDK) and eBPF-based CNIs (e.g., Cilium) minimize networking latency.

Resilience and Monitoring:

- Evidence: Dapr’s resiliency policies (retries, circuit breakers) and Kubernetes’ self-healing (pod restarts) ensure reliability (Dapr docs, 2024). Dapr’s OpenTelemetry integration scales monitoring for millions of agents (Prometheus case studies, 2023).

- Logic: Real-time metrics (e.g., latency, error rates) and distributed tracing help detect and contain cascading failures. Kubernetes’ liveness probes and Dapr’s workflow engine recover from crashes, supporting high-availability targets such as 99.999% uptime.
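The virtual-actor idea referenced in the Dapr argument above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Dapr's API: `AgentActor` and `ActorRuntime` are hypothetical names, state lives in process memory rather than a state store, and an `asyncio.Lock` stands in for turn-based (one message at a time) access.

```python
from __future__ import annotations

import asyncio


class AgentActor:
    """Hypothetical stateful agent; one instance per agent ID."""

    def __init__(self, agent_id: str) -> None:
        self.agent_id = agent_id
        self.message_count = 0  # per-actor state (in memory for this sketch)
        self._lock = asyncio.Lock()  # one message at a time per actor

    async def handle(self, message: str) -> str:
        async with self._lock:
            self.message_count += 1
            return f"{self.agent_id} handled '{message}' (#{self.message_count})"


class ActorRuntime:
    """Hypothetical runtime that activates actors lazily on first use."""

    def __init__(self) -> None:
        self._active: dict[str, AgentActor] = {}

    def get(self, agent_id: str) -> AgentActor:
        # Idle agents cost nothing until something addresses them by ID.
        if agent_id not in self._active:
            self._active[agent_id] = AgentActor(agent_id)
        return self._active[agent_id]

    @property
    def active_count(self) -> int:
        return len(self._active)


async def demo() -> tuple[list[str], int]:
    runtime = ActorRuntime()
    replies = await asyncio.gather(
        runtime.get("agent-1").handle("plan trip"),
        runtime.get("agent-2").handle("book hotel"),
        runtime.get("agent-1").handle("confirm"),
    )
    return list(replies), runtime.active_count


if __name__ == "__main__":
    replies, active = asyncio.run(demo())
    print(replies, active)
```

Addressing agents by ID and activating them on demand is what lets a large population of mostly idle agents share a much smaller pool of workers. A distributed runtime adds placement, persistence, and deactivation on top of this idea.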

Feasibility with Constraints:

- Challenge: No direct benchmark exists for 10 million concurrent users with Dapr/Kubernetes in an agentic AI context. Infrastructure costs (e.g., $10M–$100M for 10,000 nodes) are prohibitive for low-budget scenarios.

- Solution: Use open-source tools (e.g., Minikube, kind) for local testing and cloud credits (e.g., AWS Educate) for students. Simulate 10 million users with tools like Locust on smaller clusters (e.g., 100 nodes), extrapolating results. Optimize Dapr’s actor placement and Kubernetes’ resource quotas to maximize efficiency on limited hardware. Leverage free-tier databases (e.g., MongoDB Atlas) and message brokers (e.g., RabbitMQ).
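The "simulate small, then extrapolate" idea above can be tried on a laptop before touching a cluster. The sketch below swaps in Python's standard-library `asyncio` for Locust so it needs no installation. `simulated_agent`, `run_simulation`, and `extrapolate_seconds` are hypothetical helpers, and the linear extrapolation is a deliberately naive assumption.

```python
from __future__ import annotations

import asyncio
import time


async def simulated_agent(agent_id: int, results: list[int]) -> None:
    """Stand-in for one agent turn: yield to the event loop, then record completion."""
    await asyncio.sleep(0)  # placeholder for real I/O (LLM call, state store, broker)
    results.append(agent_id)


async def run_simulation(num_agents: int) -> tuple[int, float]:
    """Run num_agents concurrent tasks; return (completed, elapsed_seconds)."""
    results: list[int] = []
    start = time.perf_counter()
    await asyncio.gather(*(simulated_agent(i, results) for i in range(num_agents)))
    elapsed = time.perf_counter() - start
    return len(results), elapsed


def extrapolate_seconds(elapsed: float, measured: int, target: int) -> float:
    """Naive linear extrapolation; real systems rarely scale this cleanly."""
    return elapsed * (target / measured)


if __name__ == "__main__":
    completed, elapsed = asyncio.run(run_simulation(10_000))
    estimate = extrapolate_seconds(elapsed, completed, 10_000_000)
    print(f"{completed} simulated agent turns in {elapsed:.3f}s")
    print(f"Naive linear estimate for 10M turns in one process: {estimate:.1f}s")
```

Treat the extrapolated number as a lower bound on effort, not a prediction. Contention in state stores, brokers, and the network usually grows faster than linearly, which is why the larger-cluster load tests mentioned above are still needed.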

Conclusion: Kubernetes with Dapr can handle 10 million concurrent users in an agentic AI system, supported by their proven scalability, real-world case studies, and logical extrapolation. For students with minimal budgets, small-scale simulations, open-source tools, and cloud credits make the problem tractable, though production-scale deployment requires hyperscale resources and expertise.
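The back-of-envelope figures used in the argument above are easy to reproduce and adjust. The sketch below only restates the inputs quoted in the text (1 request/second per user, 0.01 GPU per request, 1 KB of state per user, 100 to 1,000 Gbps, 5,000 to 10,000 nodes). `estimate_capacity` is a hypothetical helper, and the 5x to 10x reduction factor is inferred from the quoted GPU range.

```python
USERS = 10_000_000  # target concurrency from the text


def estimate_capacity(users: int = USERS) -> dict:
    """Reproduce the rough figures from the argument; every input is an assumption."""
    # 1 request/second per user at 0.01 GPU per request == 100 requests/second per GPU.
    requests_per_gpu_per_second = 100
    naive_gpus = users // requests_per_gpu_per_second

    # Batching, caching, and model parallelism are assumed to cut this 5x to 10x.
    optimized_gpus = (naive_gpus // 10, naive_gpus // 5)

    # State: 1 KB per user in decimal units (1 KB = 1,000 bytes, 1 GB = 10**9 bytes).
    state_gb = users * 1_000 // 10**9

    # Bandwidth: 100 to 1,000 Gbps shared across all connections, in kbps each.
    kbps_per_connection = (100 * 10**9 // users // 1_000, 1_000 * 10**9 // users // 1_000)

    # Density: agents per node for clusters of 10,000 and 5,000 nodes.
    agents_per_node = (users // 10_000, users // 5_000)

    return {
        "naive_gpus": naive_gpus,
        "optimized_gpus": optimized_gpus,
        "state_gb": state_gb,
        "kbps_per_connection": kbps_per_connection,
        "agents_per_node": agents_per_node,
    }


if __name__ == "__main__":
    for name, value in estimate_capacity().items():
        print(f"{name}: {value}")
```

Two things stand out when the numbers are written down: 1 KB per user is roughly 10 GB in total, and each node must host 1,000 to 2,000 agents. That density is why a lightweight actor per agent is a better fit than a pod per agent.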

Agentic AI Top Trend of 2025

Let's learn about "Dapr Agentic Cloud Ascent (DACA)", our winning design pattern for developing and deploying planet-scale multi-agent systems.

The Dapr Agentic Cloud Ascent (DACA) guide introduces a strategic design pattern for building and deploying sophisticated, scalable, and resilient agentic AI systems. Addressing the complexities of modern AI development, DACA integrates the OpenAI Agents SDK for core agent logic with the Model Context Protocol (MCP) for standardized tool use and the Agent2Agent (A2A) protocol for seamless inter-agent communication, all underpinned by the distributed capabilities of Dapr. Grounded in AI-first and cloud-first principles, DACA promotes the use of stateless, containerized applications deployed on platforms like Azure Container Apps (Serverless Containers) or Kubernetes, enabling efficient scaling from local development to planetary-scale production, potentially leveraging free-tier cloud services and self-hosted LLMs for cost optimization. The pattern emphasizes modularity, context-awareness, and standardized communication, envisioning an Agentia World where diverse AI agents collaborate intelligently. Ultimately, DACA offers a robust, flexible, and cost-effective framework for developers and architects aiming to create complex, cloud-native agentic AI applications that are built for scalability and resilience from the ground up.

Comprehensive Guide to Dapr Agentic Cloud Ascent (DACA) Design Pattern

- Agentic AI Developer and AgentOps Professionals

Table 1: Comparison of Abstraction Levels in AI Agent Frameworks

| Framework | Abstraction Level | Key Characteristics | Learning Curve | Control Level | Simplicity |
|---|---|---|---|---|---|
| OpenAI Agents SDK | Minimal | Python-first, core primitives (Agents, Handoffs, Guardrails), direct control | Low | High | High |
| CrewAI | Moderate | Role-based agents, crews, tasks, focus on collaboration | Low-Medium | Medium | Medium |
| AutoGen | High | Conversational agents, flexible conversation patterns, human-in-the-loop support | Medium | Medium | Medium |
| Google ADK | Moderate | Multi-agent hierarchies, Google Cloud integration (Gemini, Vertex AI), rich tool ecosystem, bidirectional streaming | Medium | Medium-High | Medium |
| LangGraph | Low-Moderate | Graph-based workflows, nodes, edges, explicit state management | Very High | Very High | Low |
| Dapr Agents | Moderate | Stateful virtual actors, event-driven multi-agent workflows, Kubernetes integration, 50+ data connectors, built-in resiliency | Medium | Medium-High | Medium |

The table clearly identifies why OpenAI Agents SDK should be the main framework for agentic development for most use cases:

- It excels in simplicity and ease of use, making it the best choice for rapid development and broad accessibility.

- It offers high control with minimal abstraction, providing the flexibility needed for agentic development without the complexity of frameworks like LangGraph.

- It outperforms most alternatives (CrewAI, AutoGen, Google ADK, Dapr Agents) in balancing usability and power, and while LangGraph offers more control, its complexity makes it less practical for general use.

If your priority is ease of use, flexibility, and quick iteration in agentic development, OpenAI Agents SDK is the clear winner based on the table. However, if your project requires enterprise-scale features (e.g., Dapr Agents) or maximum control for complex workflows (e.g., LangGraph), you might consider those alternatives despite their added complexity.

- Agentic & DACA Theory - 1 week

- UV & OpenAI Agents SDK - 5 weeks

- Agentic Design Patterns - 2 weeks

- Memory [LangMem & mem0] - 1 week

- Postgres/Redis (Managed Cloud) - 1 week

- FastAPI (Basic) - 2 weeks

- Containerization (Rancher Desktop) - 1 week

- Hugging Face Docker Spaces - 1 week

Note: These videos are for additional learning, and do not cover all the material taught in the onsite classes.

Prerequisite: Successful completion of AI-101: Modern AI Python Programming - Your Launchpad into Intelligent Systems

- Rancher Desktop with Local Kubernetes - 4 weeks

- Advanced FastAPI with Kubernetes - 2 weeks

- Dapr [workflows, state, pubsub, secrets] - 3 weeks

- CockroachDB & RabbitMQ Managed Services - 2 weeks

- Model Context Protocol - 2 weeks

- Serverless Containers Deployment (ACA) - 2 weeks

Prerequisite: Successful completion of AI-201

- Certified Kubernetes Application Developer (CKAD) - 4 weeks

- A2A Protocol - 2 weeks

- Voice Agents - 2 weeks

- Dapr Agents/Google ADK - 2 weeks

- Self-Hosting LLMs - 1 week

- Finetuning LLMs - 3 weeks

Prerequisite: Successful completion of AI-201 & AI-202

Quizzes + Hackathons (Everything is Onsite)

- Advanced Modern Python (including asyncio) [Q1]

- OpenAI Agents SDK (48 MCQs in 2 hours) [01_ai_agents_first]

- Protocols & Design Patterns (A2A and MCP) [05_ai_protocols]

- Hackathon1 - 8 Hours (Using Above Quiz Stack)

- Containerization + FastAPI [05_daca_agent_native_dev = 01 + 02 ]

- Kubernetes (Rancher Desktop) [Simulations] [05_daca_agent_native_dev = 02 ]

- Dapr-1 - State, PubSub, Bindings, Invocation [05_daca_agent_native_dev = 03 ]

- Dapr-2 - Workflows, Virtual Actors [04_agent_native = 04, 05, 06]

- Hackathon2 - 8 Hours (Agent Native Startup)

- CKAD + DAPR + ArgoCD (Simulations) [06_daca_deployment_guide + 07_ckad]

Total Questions: 48 MCQs

Duration: 120 Minutes

Difficulty Level: Intermediate or Advanced (NOT beginner-level)

This is a well-constructed, comprehensive quiz that accurately tests deep knowledge of the OpenAI Agents SDK. However, it's significantly more challenging than typical beginner-level assessments.

Difficulty Level for Beginners

The quiz is challenging for beginners due to the following factors:

- Technical Depth: Questions require understanding the OpenAI Agents SDK’s architecture (e.g., Agents, Tools, Handoffs, Runner), Pydantic models, async programming, and prompt engineering. These are advanced topics for someone new to AI or Python.

- Conceptual Complexity: Topics like dynamic instructions, context management, error handling, and Chain-of-Thought prompting require familiarity with both theoretical and practical aspects of agentic AI.

- Code Analysis: Many questions involve analyzing code snippets, understanding execution paths, and predicting outcomes, which demand strong Python and debugging skills.

- Domain Knowledge: Questions on Markdown are simpler, but the majority focus on niche SDK features, making the quiz specialized.

- Beginner Challenges: Beginners (e.g., those with basic Python knowledge and minimal AI experience) would struggle with SDK-specific concepts like Runner.run_sync, tool_choice, and Pydantic validation, as well as async programming and multi-agent workflows.

- Difficulty Rating: Advanced (not beginner-friendly). Beginners would need foundational knowledge in Python, async programming, and LLMs, plus specific training on the OpenAI Agents SDK to perform well.

To excel in this quiz, focus on understanding the core components and philosophy of the OpenAI Agents SDK, such as its "Python-first" design for orchestration, the roles of Agents and Tools, and how primitives like "Handoffs" facilitate multi-agent collaboration. Pay close attention to how the SDK manages the agent loop, handles tool calls and Pydantic models for typed inputs/outputs, and uses context objects. Review concepts like dynamic instructions, agent cloning, error handling during tool execution, and the nuances of Runner.run_sync() versus streaming. Additionally, refresh your knowledge of prompt engineering techniques, including crafting clear instructions, guiding the agent's reasoning (e.g., Chain-of-Thought), and managing sensitive data through persona and careful prompting. Finally, ensure you're comfortable with basic Markdown syntax for links and images.
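To make the "agent loop" idea above concrete, here is a simplified, standard-library-only sketch of the pattern: call the model, run any tool it requests, feed the result back, and stop at a final answer. It is not the OpenAI Agents SDK's implementation. `ModelReply`, `fake_model`, `get_weather`, and `run_agent_loop` are hypothetical names, and the "model" is a scripted stand-in so the example runs offline.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ModelReply:
    """What the fake model returns each turn: a tool request or a final answer."""

    tool_name: Optional[str] = None
    tool_arg: Optional[str] = None
    final_output: Optional[str] = None


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call an external API."""
    return f"Sunny in {city}"


TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}


def fake_model(history: list[str]) -> ModelReply:
    """Scripted stand-in for an LLM: request a tool first, then answer."""
    tool_results = [h for h in history if h.startswith("tool:")]
    if not tool_results:
        return ModelReply(tool_name="get_weather", tool_arg="Karachi")
    return ModelReply(final_output=f"Forecast: {tool_results[-1][len('tool:'):]}")


def run_agent_loop(user_input: str, max_turns: int = 5) -> str:
    """Call the model, execute requested tools, and stop at a final answer."""
    history = [f"user:{user_input}"]
    for _ in range(max_turns):
        reply = fake_model(history)
        if reply.final_output is not None:
            return reply.final_output
        tool = TOOLS[reply.tool_name]
        history.append(f"tool:{tool(reply.tool_arg)}")  # feed the result back
    raise RuntimeError("max_turns exceeded without a final answer")


if __name__ == "__main__":
    print(run_agent_loop("What is the weather?"))
```

When reading quiz code, trace the same three questions on each turn: did the model return a final output, did it request a tool, and what goes back into the history for the next turn?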

Preparation Guide for Beginner Students

This OpenAI Agents SDK quiz is designed for intermediate to advanced learners and requires substantial preparation to succeed. Before attempting this assessment, ensure you have a solid foundation in Python programming, including object-oriented concepts, async/await patterns, decorators, and error handling. You'll need to thoroughly study Pydantic models for data validation, understanding field definitions, default values, and validation behavior. Dedicate significant time to the OpenAI Agents SDK documentation (https://openai.github.io/openai-agents-python/), focusing on core concepts like Agents, Tools, Handoffs, context management, and the agent execution loop. Practice writing and analyzing code that uses the @function_tool decorator, Runner.run_sync(), agent cloning, and multi-agent orchestration patterns. Review prompt engineering techniques from the OpenAI cookbook, particularly Chain-of-Thought prompting, system message design, and handling sensitive data. Finally, familiarize yourself with basic Markdown syntax for links and images. Plan to spend at least 2-3 weeks studying these materials and completing hands-on coding exercises with the SDK. Consider this quiz a capstone assessment that requires comprehensive understanding rather than a beginner-level introduction to the concepts.

Quiz Covers:

https://openai.github.io/openai-agents-python/

https://cookbook.openai.com/examples/gpt4-1_prompting_guide

https://www.markdownguide.org/basic-syntax/

https://www.markdownguide.org/cheat-sheet/

https://github.com/panaversity/learn-agentic-ai/tree/main/01_ai_agents_first

You Can Generate Mock Quizzes for Practice using LLMs from this Prompt:

Create a comprehensive quiz covering the OpenAI Agents SDK. It should include as many MCQ questions as required to test the material. The questions should be difficult, at the graduate level, and should test concepts and include code where required. Use the following documentation:

Alternative AI tools for learn-agentic-ai

Similar Open Source Tools

dash-infer

DashInfer is a C++ runtime tool designed to deliver production-level implementations highly optimized for various hardware architectures, including x86 and ARMv9. It supports Continuous Batching and NUMA-Aware capabilities for CPU, and can fully utilize modern server-grade CPUs to host large language models (LLMs) up to 14B in size. With lightweight architecture, high precision, support for mainstream open-source LLMs, post-training quantization, optimized computation kernels, NUMA-aware design, and multi-language API interfaces, DashInfer provides a versatile solution for efficient inference tasks. It supports x86 CPUs with AVX2 instruction set and ARMv9 CPUs with SVE instruction set, along with various data types like FP32, BF16, and InstantQuant. DashInfer also offers single-NUMA and multi-NUMA architectures for model inference, with detailed performance tests and inference accuracy evaluations available. The tool is supported on mainstream Linux server operating systems and provides documentation and examples for easy integration and usage.

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. Its features include:

- A private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.)

- A persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.)

- Efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach)

- Parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model

- HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses

- A variety of supported models (LLaMa2, Mistral, Falcon, Vicuna, WizardLM, with AutoGPTQ, 4-bit/8-bit, LORA, etc.)

- GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models

- Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.)

- A UI or CLI with streaming of all models

- The ability to upload and view documents through the UI (control multiple collaborative or personal collections)

- Vision models: LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision

- Image generation with Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2)

- Voice STT using Whisper with streaming audio conversion

- Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and streaming audio conversion

- Voice TTS using MPL2-Licensed TTS including voice cloning and streaming audio conversion

- AI Assistant Voice Control Mode for hands-free control of h2oGPT chat

- Bake-off UI mode against many models at the same time

- Easy download of model artifacts and control over models like LLaMa.cpp through the UI

- Authentication in the UI by user/password via Native or Google OAuth

- State preservation in the UI by user/password

- Linux, Docker, macOS, and Windows support

- Easy Windows Installer for Windows 10 64-bit (CPU/CUDA) and easy macOS Installer for macOS (CPU/M1/M2)

- Inference server support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic)

- OpenAI-compliant Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server)

- Python client API (to talk to Gradio server)

- JSON Mode with any model via code block extraction, plus MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema

- Web-Search integration with Chat and Document Q/A

- Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently)

- Performance evaluation using reward models

- Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours

Mooncake

Mooncake is a serving platform for Kimi, a leading LLM service provided by Moonshot AI. It features a KVCache-centric disaggregated architecture that separates prefill and decoding clusters, leveraging underutilized CPU, DRAM, and SSD resources of the GPU cluster. Mooncake's scheduler balances throughput and latency-related SLOs, with a prediction-based early rejection policy for highly overloaded scenarios. It excels in long-context scenarios, achieving up to a 525% increase in throughput while handling 75% more requests under real workloads.

llm-course

The LLM course is divided into three parts:

1. 🧩 **LLM Fundamentals** covers essential knowledge about mathematics, Python, and neural networks.
2. 🧑‍🔬 **The LLM Scientist** focuses on building the best possible LLMs using the latest techniques.
3. 👷 **The LLM Engineer** focuses on creating LLM-based applications and deploying them.

For an interactive version of this course, I created two **LLM assistants** that will answer questions and test your knowledge in a personalized way:

* 🤗 **HuggingChat Assistant**: Free version using Mixtral-8x7B.
* 🤖 **ChatGPT Assistant**: Requires a premium account.

## 📝 Notebooks

A list of notebooks and articles related to large language models.

### Tools

| Notebook | Description |
|----------|-------------|
| 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod |
| 🥱 LazyMergekit | Easily merge models using MergeKit in one click. |
| 🦎 LazyAxolotl | Fine-tune models in the cloud using Axolotl in one click. |
| ⚡ AutoQuant | Quantize LLMs in GGUF, GPTQ, EXL2, AWQ, and HQQ formats in one click. |
| 🌳 Model Family Tree | Visualize the family tree of merged models. |
| 🚀 ZeroSpace | Automatically create a Gradio chat interface using a free ZeroGPU. |

FloTorch

FloTorch is an innovative product designed to simplify and optimize the decision-making process for leveraging Large Language Models (LLMs) in Retrieval Augmented Generation (RAG) systems. It focuses on providing a well-architected framework, maximizing efficiency, eliminating complexity, accelerating selection, and fostering innovation. The tool offers a streamlined, user-friendly approach to help users achieve efficiency, accuracy, and cost-effectiveness in the fast-paced digital landscape of AI.

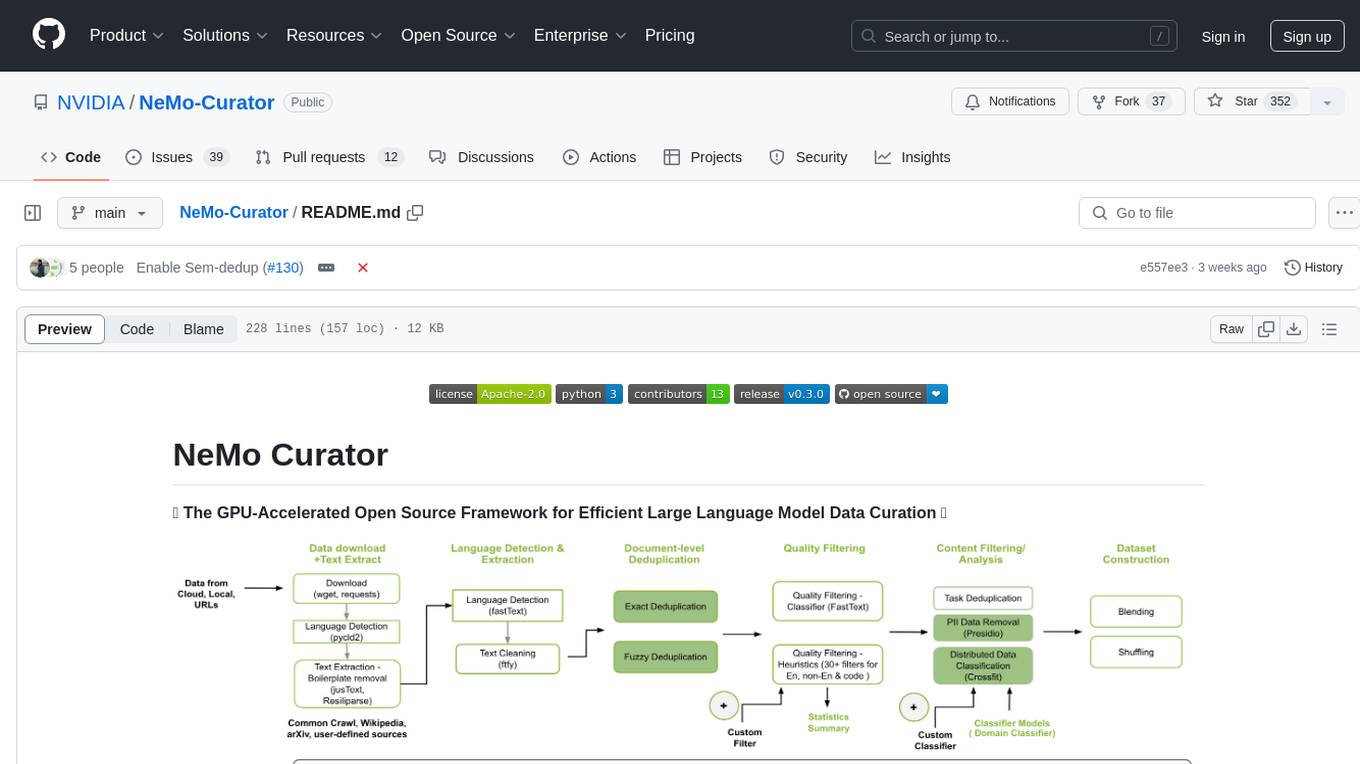

Curator

NeMo Curator is a Python library designed for fast and scalable data processing and curation for generative AI use cases. It accelerates data processing by leveraging GPUs with Dask and RAPIDS, providing customizable pipelines for text and image curation. The library offers pre-built pipelines for synthetic data generation, enabling users to train and customize generative AI models such as LLMs, VLMs, and WFMs.

FinRobot

FinRobot is an open-source AI agent platform designed for financial applications using large language models. It transcends the scope of FinGPT, offering a comprehensive solution that integrates a diverse array of AI technologies. The platform's versatility and adaptability cater to the multifaceted needs of the financial industry. FinRobot's ecosystem is organized into four layers, including Financial AI Agents Layer, Financial LLMs Algorithms Layer, LLMOps and DataOps Layers, and Multi-source LLM Foundation Models Layer. The platform's agent workflow involves Perception, Brain, and Action modules to capture, process, and execute financial data and insights. The Smart Scheduler optimizes model diversity and selection for tasks, managed by components like Director Agent, Agent Registration, Agent Adaptor, and Task Manager. The tool provides a structured file organization with subfolders for agents, data sources, and functional modules, along with installation instructions and hands-on tutorials.

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

dapr-agents

Dapr Agents is a developer framework for building production-grade resilient AI agent systems that operate at scale. It enables software developers to create AI agents that reason, act, and collaborate using Large Language Models (LLMs), while providing built-in observability and stateful workflow execution to ensure agentic workflows complete successfully. The framework is scalable, efficient, Kubernetes-native, data-driven, secure, observable, vendor-neutral, and open source. It offers features like scalable workflows, cost-effective AI adoption, data-centric AI agents, accelerated development, integrated security and reliability, built-in messaging and state infrastructure, and vendor-neutral and open source support. Dapr Agents is designed to simplify the development of AI applications and workflows by providing a comprehensive API surface and seamless integration with various data sources and services.

core

CORE is an open-source unified, persistent memory layer for all AI tools, allowing developers to maintain context across different tools like Cursor, ChatGPT, and Claude. It aims to solve the issue of context switching and information loss between sessions by creating a knowledge graph that remembers conversations, decisions, and insights. With features like unified memory, temporal knowledge graph, browser extension, chat with memory, auto-sync from apps, and MCP integration hub, CORE provides a seamless experience for managing and recalling context. The tool's ingestion pipeline captures evolving context through normalization, extraction, resolution, and graph integration, resulting in a dynamic memory that grows and changes with the user. When recalling from memory, CORE utilizes search, re-ranking, filtering, and output to provide relevant and contextual answers. Security measures include data encryption, authentication, access control, and vulnerability reporting.

ChatLaw

ChatLaw is an open-source legal large language model tailored for Chinese legal scenarios. It aims to combine LLM and knowledge bases to provide solutions for legal scenarios. The models include ChatLaw-13B and ChatLaw-33B, trained on various legal texts to construct dialogue data. The project focuses on improving logical reasoning abilities and plans to train models with parameters exceeding 30B for better performance. The dataset consists of forum posts, news, legal texts, judicial interpretations, legal consultations, exam questions, and court judgments, cleaned and enhanced to create dialogue data. The tool is designed to assist in legal tasks requiring complex logical reasoning, with a focus on accuracy and reliability.

cleanlab

Cleanlab helps you **clean** data and **lab**els by automatically detecting issues in a ML dataset. To facilitate **machine learning with messy, real-world data**, this data-centric AI package uses your _existing_ models to estimate dataset problems that can be fixed to train even _better_ models.

AI6127

AI6127 is a course focusing on deep neural networks for natural language processing (NLP). It covers core NLP tasks and machine learning models, emphasizing deep learning methods using libraries like Pytorch. The course aims to teach students state-of-the-art techniques for practical NLP problems, including writing, debugging, and training deep neural models. It also explores advancements in NLP such as Transformers and ChatGPT.

tunix

Tunix is a JAX-based library designed for post-training Large Language Models. It provides efficient support for supervised fine-tuning, reinforcement learning, and knowledge distillation. Tunix leverages JAX for accelerated computation and integrates seamlessly with the Flax NNX modeling framework. The library is modular, efficient, and designed for distributed training on accelerators like TPUs. Currently in early development, Tunix aims to expand its capabilities, usability, and performance.

For similar tasks

GenAiGuidebook

GenAiGuidebook is a comprehensive resource for individuals looking to begin their journey in GenAI. It serves as a detailed guide providing insights, tips, and information on various aspects of GenAI technology. The guidebook covers a wide range of topics, including introductory concepts, practical applications, and best practices in the field of GenAI. Whether you are a beginner or an experienced professional, this resource aims to enhance your understanding and proficiency in GenAI.

Awesome-Books-Notes

Awesome CS Books is a repository that archives excellent books related to computer science and technology, named in the format of {year}-{author}-{title}-{version}. It includes reading notes for each book, with PDF links provided at the beginning of the notes. The repository focuses on IT and CS books and valuable open courses, and aims to provide a systematic way of learning that counters fragmented, one-sided skills. It respects the original authors by linking to official/copyright websites and emphasizes non-commercial use of the documents.

rustcrab

Rustcrab is a repository for Rust developers, offering resources, tools, and guides to enhance Rust programming skills. It is a Next.js application with Tailwind CSS and TypeScript, featuring real-time display of GitHub stars, light/dark mode toggling, integration with daily.dev, and social media links. Users can clone the repository, install dependencies, run the development server, build for production, and deploy to various platforms. Contributions are encouraged through opening issues or submitting pull requests.

awesome-ai-newsletters

Awesome AI Newsletters is a curated list of AI-related newsletters that provide the latest news, trends, tools, and insights in the field of Artificial Intelligence. It includes a variety of newsletters covering general AI news, prompts for marketing and productivity, AI job opportunities, and newsletters tailored for professionals in the AI industry. Whether you are a beginner looking to stay updated on AI advancements or a professional seeking to enhance your knowledge and skills, this repository offers a collection of valuable resources to help you navigate the world of AI.

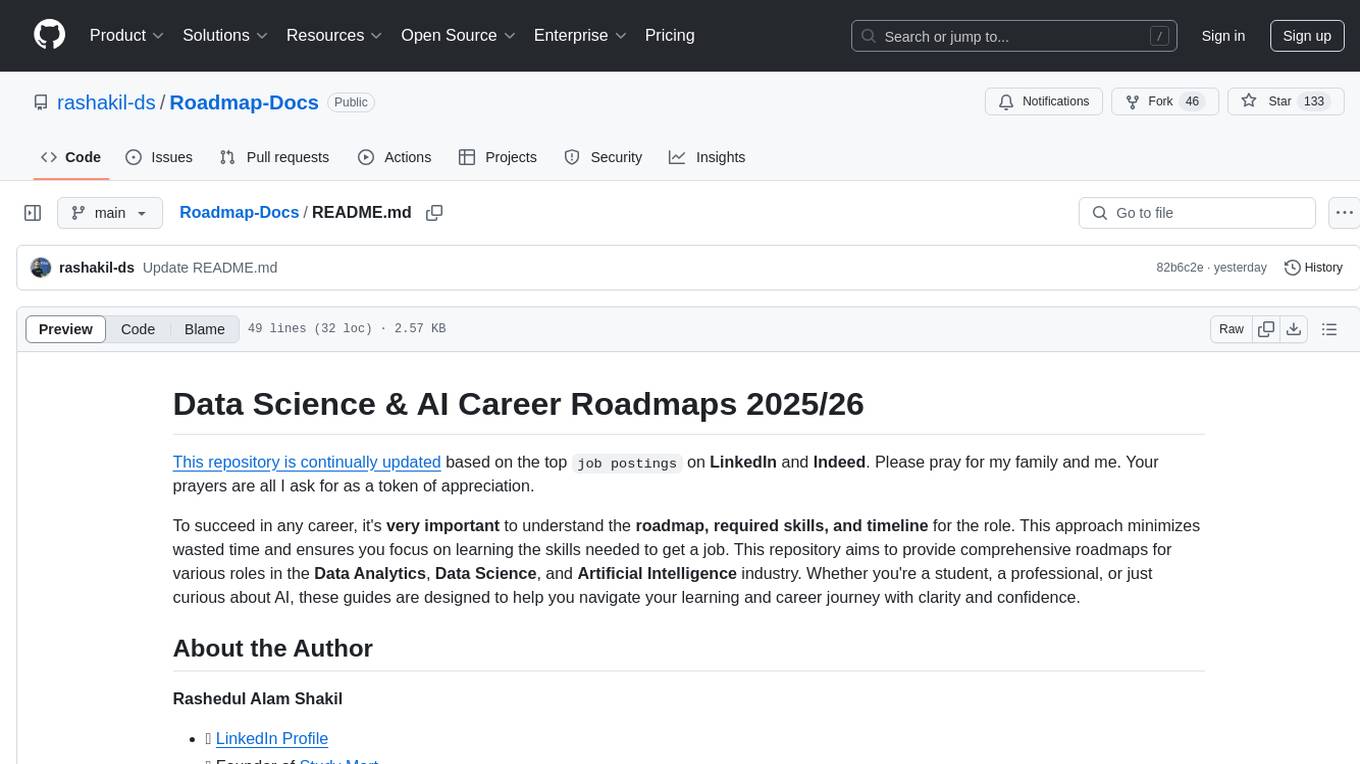

Roadmap-Docs

This repository provides comprehensive roadmaps for various roles in the Data Analytics, Data Science, and Artificial Intelligence industry. It aims to guide individuals, whether students or professionals, in understanding the required skills and timeline for different roles, helping them focus on learning the necessary skills to secure a job. The repository includes detailed guides for roles such as Data Analyst, Data Engineer, Data Scientist, AI Engineer, Computer Vision Engineer, Generative AI Engineer, Machine Learning Engineer, NLP Engineer, and Domain-Specific ML Topics for Researchers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.