higress

🤖 AI Gateway | AI Native API Gateway

Stars: 7537

Higress is an open-source cloud-native API gateway built on the core of Istio and Envoy, based on Alibaba's internal practice of Envoy Gateway. It is designed for AI-native API gateway, serving AI businesses such as Tongyi Qianwen APP, Bailian Big Model API, and Machine Learning PAI platform. Higress provides capabilities to interface with LLM model vendors, AI observability, multi-model load balancing/fallback, AI token flow control, and AI caching. It offers features for AI gateway, Kubernetes Ingress gateway, microservices gateway, and security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.

README:

Official Site | Docs | Blog | MCP Server QuickStart | Developer Guide | Wasm Plugin Hub |

Higress is a cloud-native API gateway based on Istio and Envoy, which can be extended with Wasm plugins written in Go/Rust/JS. It provides dozens of ready-to-use general-purpose plugins and an out-of-the-box console (try the demo here).

Higress's AI gateway capabilities support all mainstream model providers both domestic and international. It also supports hosting MCP (Model Context Protocol) Servers through its plugin mechanism, enabling AI Agents to easily call various tools and services. With the openapi-to-mcp tool, you can quickly convert OpenAPI specifications into remote MCP servers for hosting. Higress provides unified management for both LLM API and MCP API.

🌟 Try it now at https://mcp.higress.ai/ to experience Higress-hosted Remote MCP Servers firsthand:

Higress was born within Alibaba to solve the issues of Tengine reload affecting long-connection services and insufficient load balancing capabilities for gRPC/Dubbo. Within Alibaba Cloud, Higress's AI gateway capabilities support core AI applications such as Tongyi Bailian model studio, machine learning PAI platform, and other critical AI services. Alibaba Cloud has built its cloud-native API gateway product based on Higress, providing 99.99% gateway high availability guarantee service capabilities for a large number of enterprise customers.

You can click the button below to install the enterprise version of Higress:

If you use open-source Higress and wish to obtain enterprise-level support, you can contact the project maintainer johnlanni's email: [email protected] or social media accounts (WeChat ID: nomadao, DingTalk ID: chengtanzty). Please note Higress when adding as a friend :)

Higress can be started with just Docker, making it convenient for individual developers to set up locally for learning or for building simple sites:

# Create a working directory

mkdir higress; cd higress

# Start higress, configuration files will be written to the working directory

docker run -d --rm --name higress-ai -v ${PWD}:/data \

-p 8001:8001 -p 8080:8080 -p 8443:8443 \

higress-registry.cn-hangzhou.cr.aliyuncs.com/higress/all-in-one:latestPort descriptions:

- Port 8001: Higress UI console entry

- Port 8080: Gateway HTTP protocol entry

- Port 8443: Gateway HTTPS protocol entry

All Higress Docker images use Higress's own image repository and are not affected by Docker Hub rate limits. In addition, the submission and updates of the images are protected by a security scanning mechanism (powered by Alibaba Cloud ACR), making them very secure for use in production environments.

If you experience a timeout when pulling image from

higress-registry.cn-hangzhou.cr.aliyuncs.com, you can try replacing it with the following docker registry mirror source:North America:

higress-registry.us-west-1.cr.aliyuncs.comSoutheast Asia:

higress-registry.ap-southeast-7.cr.aliyuncs.com

For other installation methods such as Helm deployment under K8s, please refer to the official Quick Start documentation.

If you are deploying on the cloud, it is recommended to use the Enterprise Edition

-

MCP Server Hosting:

Higress hosts MCP Servers through its plugin mechanism, enabling AI Agents to easily call various tools and services. With the openapi-to-mcp tool, you can quickly convert OpenAPI specifications into remote MCP servers.

Key benefits of hosting MCP Servers with Higress:

-

Unified authentication and authorization mechanisms

-

Fine-grained rate limiting to prevent abuse

-

Comprehensive audit logs for all tool calls

-

Rich observability for monitoring performance

-

Simplified deployment through Higress's plugin mechanism

-

Dynamic updates without disruption or connection drops

-

-

AI Gateway:

Higress connects to all LLM model providers using a unified protocol, with AI observability, multi-model load balancing, token rate limiting, and caching capabilities:

-

Kubernetes ingress controller:

Higress can function as a feature-rich ingress controller, which is compatible with many annotations of K8s' nginx ingress controller.

Gateway API is already supported, and it supports a smooth migration from Ingress API to Gateway API.

Compared to ingress-nginx, the resource overhead has significantly decreased, and the speed at which route changes take effect has improved by ten times.

The following resource overhead comparison comes from sealos.

For details, you can read this article to understand how sealos migrates the monitoring of tens of thousands of ingress resources from nginx ingress to higress.

-

Microservice gateway:

Higress can function as a microservice gateway, which can discovery microservices from various service registries, such as Nacos, ZooKeeper, Consul, Eureka, etc.

It deeply integrates with Dubbo, Nacos, Sentinel and other microservice technology stacks.

-

Security gateway:

Higress can be used as a security gateway, supporting WAF and various authentication strategies, such as key-auth, hmac-auth, jwt-auth, basic-auth, oidc, etc.

-

Production Grade

Born from Alibaba's internal product with over 2 years of production validation, supporting large-scale scenarios with hundreds of thousands of requests per second.

Completely eliminates traffic jitter caused by Nginx reload, configuration changes take effect in milliseconds and are transparent to business. Especially friendly to long-connection scenarios such as AI businesses.

-

Streaming Processing

Supports true complete streaming processing of request/response bodies, Wasm plugins can easily customize the handling of streaming protocols such as SSE (Server-Sent Events).

In high-bandwidth scenarios such as AI businesses, it can significantly reduce memory overhead.

-

Easy to Extend

Provides a rich official plugin library covering AI, traffic management, security protection and other common functions, meeting more than 90% of business scenario requirements.

Focuses on Wasm plugin extensions, ensuring memory safety through sandbox isolation, supporting multiple programming languages, allowing plugin versions to be upgraded independently, and achieving traffic-lossless hot updates of gateway logic.

-

Secure and Easy to Use

Based on Ingress API and Gateway API standards, provides out-of-the-box UI console, WAF protection plugin, IP/Cookie CC protection plugin ready to use.

Supports connecting to Let's Encrypt for automatic issuance and renewal of free certificates, and can be deployed outside of K8s, started with a single Docker command, convenient for individual developers to use.

Join our Discord community! This is where you can connect with developers and other enthusiastic users of Higress.

Higress would not be possible without the valuable open-source work of projects in the community. We would like to extend a special thank you to Envoy and Istio.

- Higress Console: https://github.com/higress-group/higress-console

- Higress Standalone: https://github.com/higress-group/higress-standalone

- Higress Plugin Server:https://github.com/higress-group/plugin-server

- Higress Wasm Plugin Golang SDK:https://github.com/higress-group/wasm-go

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for higress

Similar Open Source Tools

higress

Higress is an open-source cloud-native API gateway built on the core of Istio and Envoy, based on Alibaba's internal practice of Envoy Gateway. It is designed for AI-native API gateway, serving AI businesses such as Tongyi Qianwen APP, Bailian Big Model API, and Machine Learning PAI platform. Higress provides capabilities to interface with LLM model vendors, AI observability, multi-model load balancing/fallback, AI token flow control, and AI caching. It offers features for AI gateway, Kubernetes Ingress gateway, microservices gateway, and security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

agentgateway

Agentgateway is an open source data plane optimized for agentic AI connectivity within or across any agent framework or environment. It provides drop-in security, observability, and governance for agent-to-agent and agent-to-tool communication, supporting leading interoperable protocols like Agent2Agent (A2A) and Model Context Protocol (MCP). Highly performant, security-first, multi-tenant, dynamic, and supporting legacy API transformation, agentgateway is designed to handle any scale and run anywhere with any agent framework.

chatnio

Chat Nio is a next-generation AIGC one-stop business solution that combines the advantages of frontend-oriented lightweight deployment projects with powerful API distribution systems. It offers rich model support, beautiful UI design, complete Markdown support, multi-theme support, internationalization support, text-to-image support, powerful conversation sync, model market & preset system, rich file parsing, full model internet search, Progressive Web App (PWA) support, comprehensive backend management, multiple billing methods, innovative model caching, and additional features. The project aims to address limitations in conversation synchronization, billing, file parsing, conversation URL sharing, channel management, and API call support found in existing AIGC commercial sites, while also providing a user-friendly interface design and C-end features.

agent-zero

Agent Zero is a personal, organic agentic framework designed to be dynamic, transparent, customizable, and interactive. It uses the computer as a tool to accomplish tasks, with features like general-purpose assistant, computer as a tool, multi-agent cooperation, customizable and extensible framework, and communication skills. The tool is fully Dockerized, with Speech-to-Text and TTS capabilities, and offers real-world use cases like financial analysis, Excel automation, API integration, server monitoring, and project isolation. Agent Zero can be dangerous if not used properly and is prompt-based, guided by the prompts folder. The tool is extensively documented and has a changelog highlighting various updates and improvements.

AI-Gateway

The AI-Gateway repository explores the AI Gateway pattern through a series of experimental labs, focusing on Azure API Management for handling AI services APIs. The labs provide step-by-step instructions using Jupyter notebooks with Python scripts, Bicep files, and APIM policies. The goal is to accelerate experimentation of advanced use cases and pave the way for further innovation in the rapidly evolving field of AI. The repository also includes a Mock Server to mimic the behavior of the OpenAI API for testing and development purposes.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

UltraRAG

The UltraRAG framework is a researcher and developer-friendly RAG system solution that simplifies the process from data construction to model fine-tuning in domain adaptation. It introduces an automated knowledge adaptation technology system, supporting no-code programming, one-click synthesis and fine-tuning, multidimensional evaluation, and research-friendly exploration work integration. The architecture consists of Frontend, Service, and Backend components, offering flexibility in customization and optimization. Performance evaluation in the legal field shows improved results compared to VanillaRAG, with specific metrics provided. The repository is licensed under Apache-2.0 and encourages citation for support.

open-webui

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs. For more information, be sure to check out our Open WebUI Documentation.

nexent

Nexent is a powerful tool for analyzing and visualizing network traffic data. It provides comprehensive insights into network behavior, helping users to identify patterns, anomalies, and potential security threats. With its user-friendly interface and advanced features, Nexent is suitable for network administrators, cybersecurity professionals, and anyone looking to gain a deeper understanding of their network infrastructure.

core

CORE is an open-source unified, persistent memory layer for all AI tools, allowing developers to maintain context across different tools like Cursor, ChatGPT, and Claude. It aims to solve the issue of context switching and information loss between sessions by creating a knowledge graph that remembers conversations, decisions, and insights. With features like unified memory, temporal knowledge graph, browser extension, chat with memory, auto-sync from apps, and MCP integration hub, CORE provides a seamless experience for managing and recalling context. The tool's ingestion pipeline captures evolving context through normalization, extraction, resolution, and graph integration, resulting in a dynamic memory that grows and changes with the user. When recalling from memory, CORE utilizes search, re-ranking, filtering, and output to provide relevant and contextual answers. Security measures include data encryption, authentication, access control, and vulnerability reporting.

gptme

Personal AI assistant/agent in your terminal, with tools for using the terminal, running code, editing files, browsing the web, using vision, and more. A great coding agent that is general-purpose to assist in all kinds of knowledge work, from a simple but powerful CLI. An unconstrained local alternative to ChatGPT with 'Code Interpreter', Cursor Agent, etc. Not limited by lack of software, internet access, timeouts, or privacy concerns if using local models.

neuro-san-studio

Neuro SAN Studio is an open-source library for building agent networks across various industries. It simplifies the development of collaborative AI systems by enabling users to create sophisticated multi-agent applications using declarative configuration files. The tool offers features like data-driven configuration, adaptive communication protocols, safe data handling, dynamic agent network designer, flexible tool integration, robust traceability, and cloud-agnostic deployment. It has been used in various use-cases such as automated generation of multi-agent configurations, airline policy assistance, banking operations, market analysis in consumer packaged goods, insurance claims processing, intranet knowledge management, retail operations, telco network support, therapy vignette supervision, and more.

codegate

CodeGate is a local gateway that enhances the safety of AI coding assistants by ensuring AI-generated recommendations adhere to best practices, safeguarding code integrity, and protecting individual privacy. Developed by Stacklok, CodeGate allows users to confidently leverage AI in their development workflow without compromising security or productivity. It works seamlessly with coding assistants, providing real-time security analysis of AI suggestions. CodeGate is designed with privacy at its core, keeping all data on the user's machine and offering complete control over data.

saga-reader

Saga Reader is an AI-driven think tank-style reader that automatically retrieves information from the internet based on user-specified topics and preferences. It uses cloud or local large models to summarize and provide guidance, and it includes an AI-driven interactive companion reading function, allowing you to discuss and exchange ideas with AI about the content you've read. Saga Reader is completely free and open-source, meaning all data is securely stored on your own computer and is not controlled by third-party service providers. Additionally, you can manage your subscription keywords based on your interests and preferences without being disturbed by advertisements and commercialized content.

StratosphereLinuxIPS

Slips is a powerful endpoint behavioral intrusion prevention and detection system that uses machine learning to detect malicious behaviors in network traffic. It can work with network traffic in real-time, PCAP files, and network flows from tools like Suricata, Zeek/Bro, and Argus. Slips threat detection is based on machine learning models, threat intelligence feeds, and expert heuristics. It gathers evidence of malicious behavior and triggers alerts when enough evidence is accumulated. The tool is Python-based and supported on Linux and MacOS, with blocking features only on Linux. Slips relies on Zeek network analysis framework and Redis for interprocess communication. It offers a graphical user interface for easy monitoring and analysis.

For similar tasks

higress

Higress is an open-source cloud-native API gateway built on the core of Istio and Envoy, based on Alibaba's internal practice of Envoy Gateway. It is designed for AI-native API gateway, serving AI businesses such as Tongyi Qianwen APP, Bailian Big Model API, and Machine Learning PAI platform. Higress provides capabilities to interface with LLM model vendors, AI observability, multi-model load balancing/fallback, AI token flow control, and AI caching. It offers features for AI gateway, Kubernetes Ingress gateway, microservices gateway, and security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.

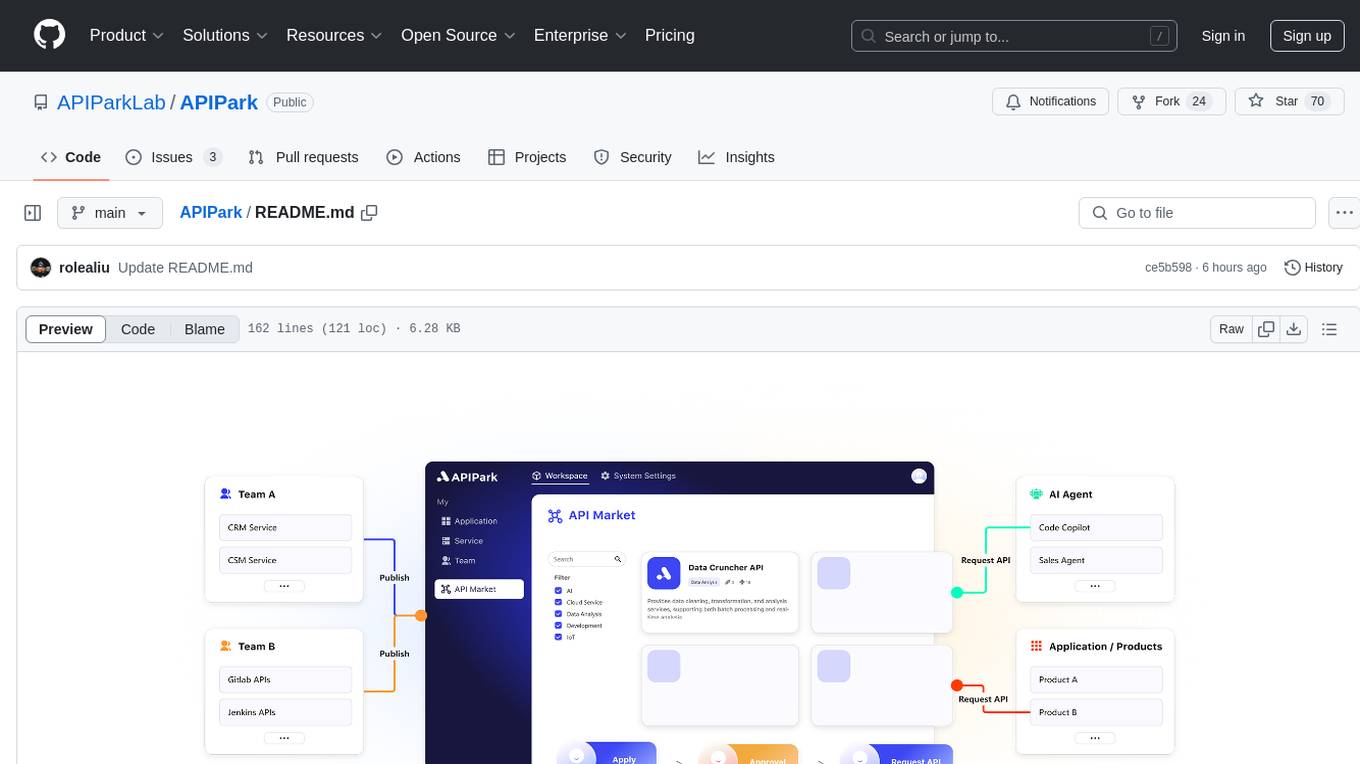

APIPark

APIPark is an open-source AI Gateway and Developer Portal that enables users to easily manage, integrate, and deploy AI and API services. It provides robust API management features, including creation, monitoring, and access control, to help developers efficiently and securely develop and manage their APIs. The platform aims to solve challenges such as connecting to powerful AI models, managing complex AI & API call relationships, overseeing API creation and security, simplifying fault detection and troubleshooting, and enhancing the visibility and valuation of data assets.

For similar jobs

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

booster

Booster is a powerful inference accelerator designed for scaling large language models within production environments or for experimental purposes. It is built with performance and scaling in mind, supporting various CPUs and GPUs, including Nvidia CUDA, Apple Metal, and OpenCL cards. The tool can split large models across multiple GPUs, offering fast inference on machines with beefy GPUs. It supports both regular FP16/FP32 models and quantised versions, along with popular LLM architectures. Additionally, Booster features proprietary Janus Sampling for code generation and non-English languages.

xFasterTransformer

xFasterTransformer is an optimized solution for Large Language Models (LLMs) on the X86 platform, providing high performance and scalability for inference on mainstream LLM models. It offers C++ and Python APIs for easy integration, along with example codes and benchmark scripts. Users can prepare models in a different format, convert them, and use the APIs for tasks like encoding input prompts, generating token ids, and serving inference requests. The tool supports various data types and models, and can run in single or multi-rank modes using MPI. A web demo based on Gradio is available for popular LLM models like ChatGLM and Llama2. Benchmark scripts help evaluate model inference performance quickly, and MLServer enables serving with REST and gRPC interfaces.

amazon-transcribe-live-call-analytics

The Amazon Transcribe Live Call Analytics (LCA) with Agent Assist Sample Solution is designed to help contact centers assess and optimize caller experiences in real time. It leverages Amazon machine learning services like Amazon Transcribe, Amazon Comprehend, and Amazon SageMaker to transcribe and extract insights from contact center audio. The solution provides real-time supervisor and agent assist features, integrates with existing contact centers, and offers a scalable, cost-effective approach to improve customer interactions. The end-to-end architecture includes features like live call transcription, call summarization, AI-powered agent assistance, and real-time analytics. The solution is event-driven, ensuring low latency and seamless processing flow from ingested speech to live webpage updates.

ai-lab-recipes

This repository contains recipes for building and running containerized AI and LLM applications with Podman. It provides model servers that serve machine-learning models via an API, allowing developers to quickly prototype new AI applications locally. The recipes include components like model servers and AI applications for tasks such as chat, summarization, object detection, etc. Images for sample applications and models are available in `quay.io`, and bootable containers for AI training on Linux OS are enabled.

XLearning

XLearning is a scheduling platform for big data and artificial intelligence, supporting various machine learning and deep learning frameworks. It runs on Hadoop Yarn and integrates frameworks like TensorFlow, MXNet, Caffe, Theano, PyTorch, Keras, XGBoost. XLearning offers scalability, compatibility, multiple deep learning framework support, unified data management based on HDFS, visualization display, and compatibility with code at native frameworks. It provides functions for data input/output strategies, container management, TensorBoard service, and resource usage metrics display. XLearning requires JDK >= 1.7 and Maven >= 3.3 for compilation, and deployment on CentOS 7.2 with Java >= 1.7 and Hadoop 2.6, 2.7, 2.8.