Best AI tools for: Configure Kubernetes Ingress

20 - AI tool Sites

Lambda Docs

Lambda Docs is an AI tool that provides cloud and hardware solutions for individuals, teams, and organizations. It offers services such as Managed Kubernetes, Preinstalled Kubernetes, Slurm, and access to GPU clusters. The platform also provides educational resources and tutorials for machine learning engineers and researchers to fine-tune models and deploy AI solutions.

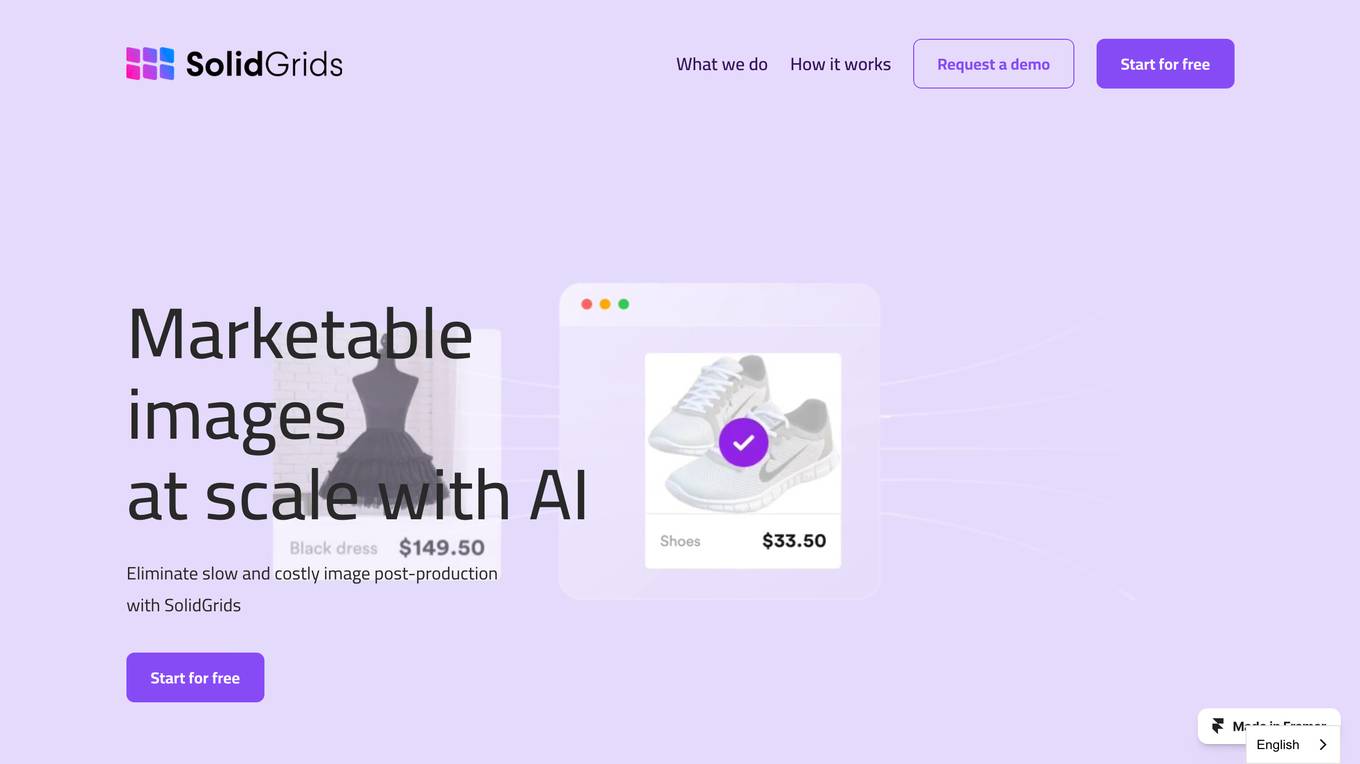

SolidGrids

SolidGrids is an AI-powered image enhancement tool designed specifically for e-commerce businesses. It automates the image post-production process, saving time and resources. With SolidGrids, you can easily remove backgrounds, enhance product images, and create consistent branding across your e-commerce site. The platform offers seamless cloud integrations and is cost-effective compared to traditional methods.

AgentGPT

AgentGPT is an AI tool designed to assist users in various tasks by generating text based on specific inputs. It leverages the power of AI to provide solutions for web scraping, report creation, trip planning, study plan development, and more. Users can easily create agents with specific goals and deploy them for efficient results. The tool offers a user-friendly interface and a range of pre-built examples to help users get started quickly and effectively.

SendingFlow

SendingFlow is a marketing automation tool specifically designed for Webflow websites. It offers a simple and powerful platform for email marketing, with features such as email series generation, templates, and data-driven decision-making. The tool aims to streamline workflows, protect sender reputation, and help users make data-driven decisions to enhance their email campaigns. SendingFlow is backed by Petit Hack, a Webflow agency dedicated to empowering marketers by providing intuitive tools for effective email marketing on the Webflow platform.

FineTuneAIs.com

FineTuneAIs.com is a platform that specializes in custom AI model fine-tuning. Users can fine-tune their AI models to achieve better performance and accuracy.

Atomic Bot

Atomic Bot is an AI application that serves as a user-friendly native app bringing OpenClaw (Clawdbot) capabilities to everyday users. It offers a simplified way to access the full OpenClaw experience through a human-friendly interface, enabling users to manage tasks such as email, calendars, documents, browser actions, and workflows efficiently. With features like Gmail management, calendar autopilot, document summarization, browser control, and task automation, Atomic Bot aims to enhance productivity and streamline daily workflows. The application prioritizes privacy by allowing users to choose between running it on their local device, in the cloud, or a hybrid setup, ensuring data control and security.

Free Text to Speech Online Converter Tools

This website provides a free text-to-speech converter tool that utilizes Microsoft's AI speech library to synthesize realistic-sounding speech from text. It offers customizable voice options, fine-tuned speech controls, and multilingual support with over 330 neural network voices across 129 languages. The tool is accessible on various browsers, including Chrome, Firefox, and Edge, and can be used for a range of applications, such as text readers and voice-enabled assistants.

MiClient.ai

MiClient.ai is an AI-powered sales automation SaaS that offers lead management software to streamline deal closure for businesses. It provides CRM software with CPQ capabilities, enabling users to manage their pipeline, create deals, send proposals, co-review deals with customers, and close contracts with e-signatures. MiClient.ai aims to boost sales efficiency by automating client interactions, speeding up proposal sending, eliminating quoting errors, and maximizing revenue through intelligent pricing strategies.

ITVA

ITVA is an AI automation tool for network infrastructure products that lets users configure, query, and document their network using natural language. It offers features such as rapid configuration deployment, network diagnostics acceleration, automated diagram generation, and modernized IP address management. ITVA securely connects to networks, combining real-time data with a proprietary dataset curated by veteran engineers. The tool aims to improve accuracy and insight through its real-time data pipeline and on-demand dynamic analysis capabilities.

OpenResty

The website is currently displaying a '403 Forbidden' error, which means that access to the requested resource is denied. This error is typically caused by insufficient permissions or server misconfiguration. The 'openresty' message indicates that the server is using the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, often used for building high-performance web applications. The website may be experiencing technical issues that prevent users from accessing its content.

Cloobot X

Cloobot X is a Gen-AI-powered implementation studio that accelerates the deployment of enterprise applications with fewer resources. It leverages natural language processing to model workflow automation, deliver sandbox previews, configure workflows, extend functionalities, and manage versioning & changes. The platform aims to streamline enterprise application deployments, making them simple, swift, and efficient for all stakeholders.

VeroCloud

VeroCloud is a platform offering tailored solutions for AI, HPC, and scalable growth. It provides cost-effective cloud solutions with guaranteed uptime, performance efficiency, and cost-saving models. Users can deploy HPC workloads seamlessly, configure environments as needed, and access optimized environments for GPU Cloud, HPC Compute, and Tally on Cloud. VeroCloud supports globally distributed endpoints, public and private image repos, and deployment of containers on secure cloud. The platform also allows users to create and customize templates for seamless deployment across computing resources.

OpenResty

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to authorize it. This error is typically caused by insufficient permissions or misconfiguration on the server side. The 'openresty' message suggests that the server is using the OpenResty web platform. OpenResty is a powerful web platform based on NGINX and LuaJIT, providing high performance and flexibility for web applications.

OpenResty

The website is currently displaying a '403 Forbidden' error, which means that access to the requested resource is forbidden. This error is typically caused by insufficient permissions or misconfiguration on the server side. The message 'openresty' suggests that the server is using the OpenResty web platform. OpenResty is a dynamic web platform based on NGINX and Lua that is commonly used for building high-performance web applications. It provides a powerful and flexible environment for developing and deploying web services.

403 Forbidden

The website seems to be experiencing a 403 Forbidden error, which indicates that the server understood the request but refuses to fulfill it. This error is often caused by incorrect permissions on the server or misconfigured security settings. The message 'openresty' suggests that the server may be running on the OpenResty web platform. OpenResty is a web platform based on NGINX and LuaJIT, known for its high performance and scalability. Users encountering a 403 Forbidden error on a website may need to contact the website administrator or webmaster for assistance in resolving the issue.

OpenResty

The website is currently displaying a '403 Forbidden' error, which means that access to the requested resource is denied. This error is typically caused by insufficient permissions or server misconfiguration. The 'openresty' message indicates that the server is using the OpenResty web platform. OpenResty is a scalable web platform that integrates the NGINX web server with various Lua-based modules, providing powerful features for web development and server-side scripting.

OpenResty

The website is currently displaying a '403 Forbidden' error, which indicates that the server understood the request but refuses to authorize it. This error is often encountered when trying to access a webpage without the necessary permissions. The 'openresty' mentioned in the text is likely the software running on the server. It is a web platform based on NGINX and LuaJIT, known for its high performance and scalability in handling web traffic. The website may be using OpenResty to manage its server configurations and handle incoming requests.

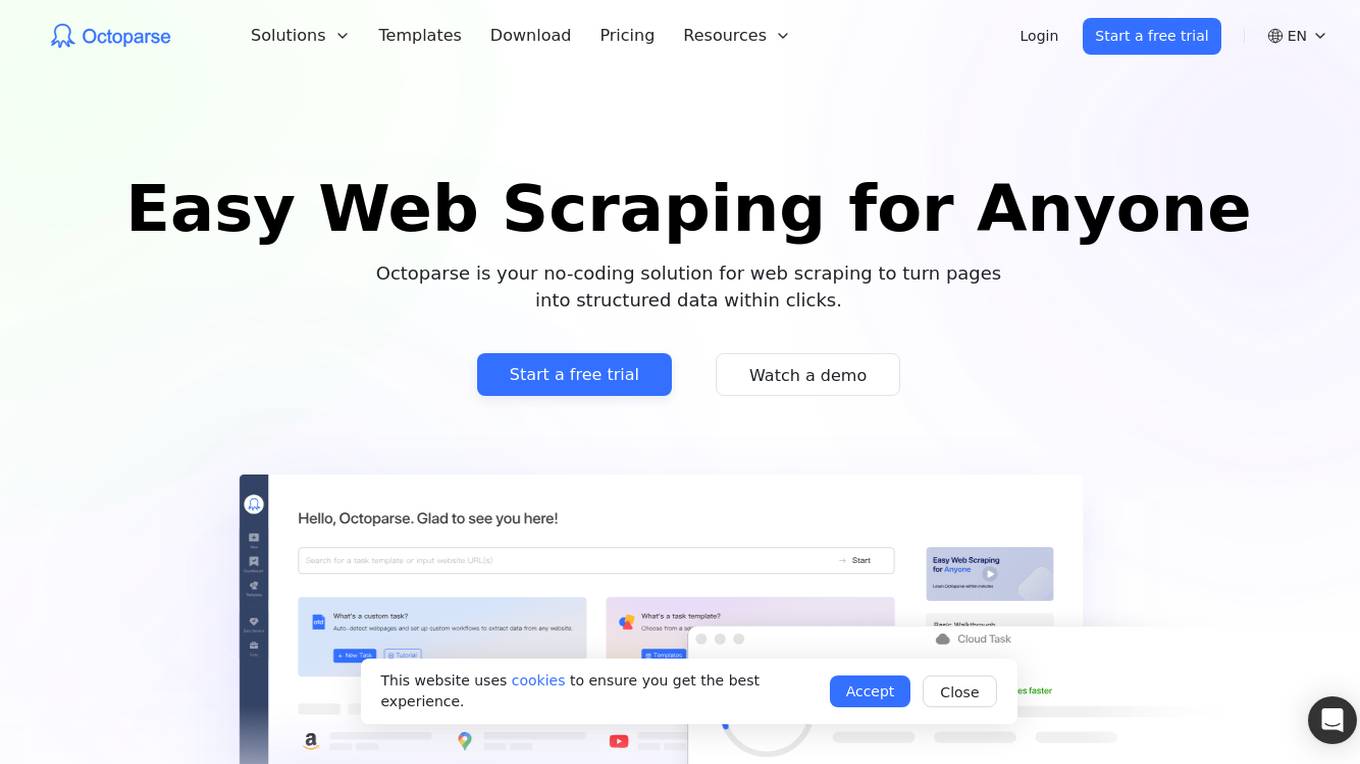

Octoparse

Octoparse is an AI web scraping tool that offers a no-coding solution for turning web pages into structured data with just a few clicks. It provides users with the ability to build reliable web scrapers without any coding knowledge, thanks to its intuitive workflow designer. With features like AI assistance, automation, and template libraries, Octoparse is a powerful tool for data extraction and analysis across various industries.

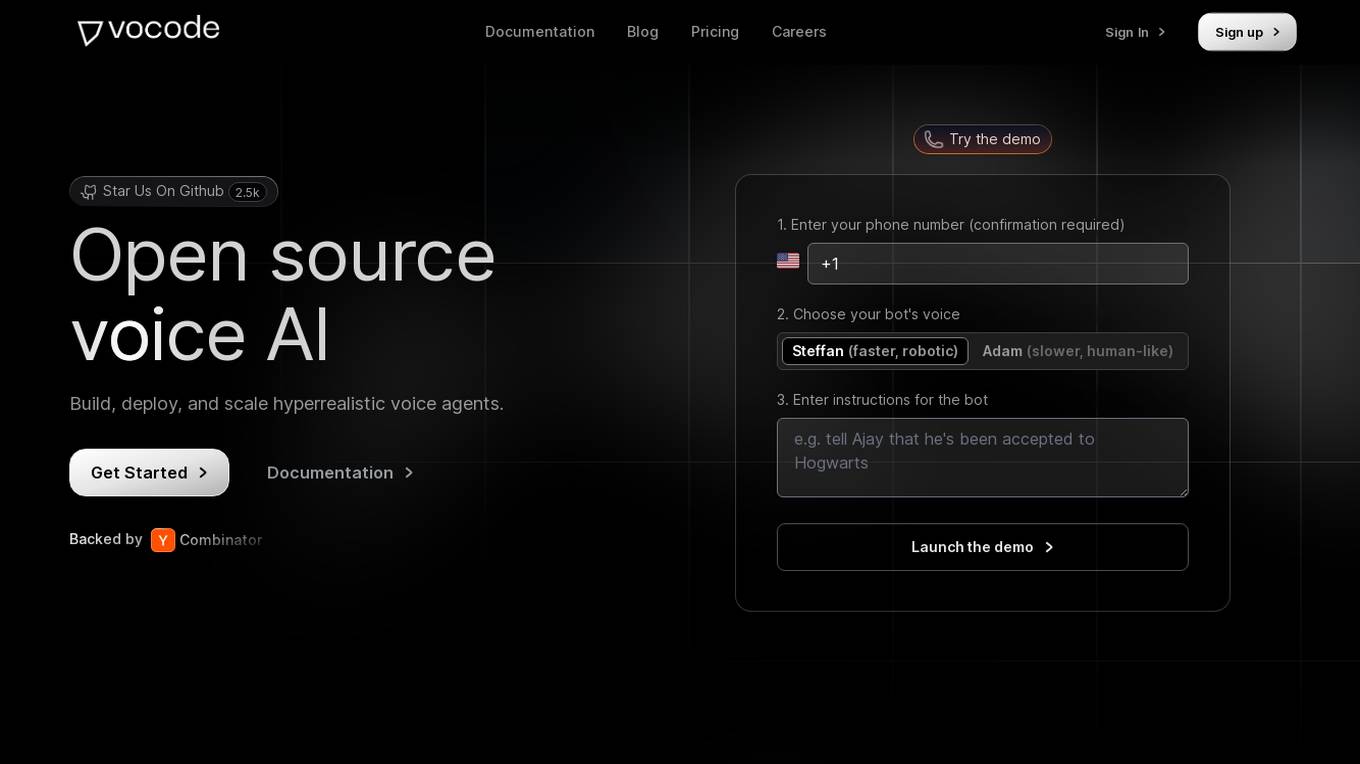

Vocode

Vocode is an open-source voice AI platform that enables users to build, deploy, and scale hyperrealistic voice agents. It offers fully programmable voice bots that can be integrated into workflows without the need for human intervention. With multilingual capability, custom language models, and the ability to connect to knowledge bases, Vocode provides a comprehensive solution for automating actions like scheduling, payments, and more. The platform also offers analytics and monitoring features to track bot performance and customer interactions, making it a valuable tool for businesses looking to enhance customer support and engagement.

OpenResty Server Manager

The website seems to be experiencing a 403 Forbidden error, which typically indicates that the server is denying access to the requested resource. This error is often caused by incorrect permissions or misconfigurations on the server side. The message 'openresty' suggests that the server may be using the OpenResty web platform. Users encountering this error may need to contact the website administrator for assistance in resolving the issue.

1 - Open Source AI Tools

higress

Higress is an open-source, cloud-native API gateway built on the core of Istio and Envoy, based on Alibaba's internal practice of Envoy Gateway. It is designed as an AI-native API gateway and serves AI businesses such as the Tongyi Qianwen APP, the Bailian Big Model API, and the Machine Learning PAI platform. Higress provides capabilities for interfacing with LLM vendors, AI observability, multi-model load balancing and fallback, AI token flow control, and AI caching. It can act as an AI gateway, a Kubernetes Ingress gateway, a microservices gateway, and a security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.
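As a rough illustration of what "Kubernetes Ingress gateway" means in practice, the sketch below builds a minimal `networking.k8s.io/v1` Ingress manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper name `build_ingress`, the host, the service name and port, and the ingress class name `higress` are all illustrative assumptions, not values taken from this listing; check the gateway's own documentation for the class name it actually registers.

```python
import json


def build_ingress(name, host, service_name, service_port, ingress_class):
    """Return a minimal networking.k8s.io/v1 Ingress manifest as a dict.

    All argument values are supplied by the caller; nothing here is
    specific to any particular gateway implementation.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Selects which ingress controller should handle this resource.
            "ingressClassName": ingress_class,
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                # Route every path under "/" to one backend Service.
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service_name,
                                        "port": {"number": service_port},
                                    }
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only; "higress" as the
    # ingress class name is an assumption.
    manifest = build_ingress(
        name="demo-ingress",
        host="example.com",
        service_name="demo-service",
        service_port=80,
        ingress_class="higress",
    )
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it with `kubectl apply -f` would create the resource, provided a controller for the chosen ingress class is installed in the cluster.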

20 - OpenAI GPTs

Istio Advisor Plus

Rich in Istio knowledge, with a focus on configurations, troubleshooting, and bug reporting.

Calendar and email Assistant

Your expert assistant for Google Calendar and Gmail tasks, integrated with Zapier (works with the free plan). Supports listing, adding, and updating calendar events and sending Gmail messages. You will be prompted to configure Zapier actions during initial setup. Conversation data is not used for OpenAI training.

Salesforce Sidekick

Personal assistant for Salesforce configuration, coding, troubleshooting, solutioning, proposal writing, and more. This is not an official Salesforce product or service.

FlashSystem Expert

Expert on IBM FlashSystem, offering 'How-To' guidance and technical insights.

CUDA GPT

Expert in CUDA for configuration, installation, troubleshooting, and programming.

SIP Expert

A senior VoIP engineer with expertise in SIP, RTP, IMS, and WebRTC. Kinda employed at sipfront.com, your telco test automation company.

Gradle Expert

Your expert in Gradle build configuration, offering clear, practical advice.